16.1 INTRODUCTION

An operating system is a library of computer programs that provides an interface between application programs and system hardware components. It performs process management, interprocess communication and process synchronization, memory management, and input/output (I/O) management. The process management module is responsible for process creation, process loading and execution control, the interaction of the process with signal events, process monitoring, CPU allocation and process termination. Interprocess communication covers issues such as synchronization and coordination, deadlock and livelock detection and handling, process protection, and data exchange mechanisms. Memory management includes services for memory allocation, release, relocation, and protection. I/O management handles request and release subroutines for a variety of peripheral and I/O device read, write, and reposition programs.

16.1.1 Real-time operating systems basics

A real-time operating system or RTOS (sometimes known as a real-time executive, real-time nucleus or kernel) is an operating system that implements rules and policies concerning time-critical allocation of system resources. An ordinary operating system is normally designed to provide logical correctness only, but a real-time operating system involves both logical and timewise correctness.

Real-time industrial control systems are classified as hard, firm or soft systems, as discussed in chapter 1, and real-time operating systems need to be capable of satisfying the needs of each. In hard real-time control systems, failure to meet response-time constraints leads to system failure. Firm real-time systems also have hard deadlines, but can tolerate a certain, low probability of missing one. Systems in which performance is degraded, but not destroyed, by failure to meet response-time constraints are called soft real-time systems.

A RTOS will typically use specialized scheduling algorithms to produce deterministic behavior. It is valued more for how quickly and/or predictably it can respond to a particular event than for how much work it can perform in a given time. The key factors in a RTOS are therefore minimal interrupt response time, fixed-time task (also called process) switching, minimal task-scheduling latency, and deterministic dynamic memory allocation, discussed in the following paragraphs.

(1) Fixed-time task switching

An ordinary (non-preemptive) operating system might do task switching only at timer tick times, which might, for example, be ten milliseconds apart. In more sophisticated task switching, the scheduler in an operating system may search through arrays of tasks to determine which should be run next. Because the length of the search depends on the number of tasks, the task-switching time is non-deterministic. Real-time operating systems, on the other hand, avoid such searches by using incrementally updated tables that allow the task scheduler to identify the task that should run next without searching. In this way the RTOS achieves fixed-time task switching.
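One common way to build such an incrementally updated table is a bitmap with one bit per priority level. The sketch below is only an illustration of the idea, not the mechanism of any particular RTOS: the class name `ReadyTable`, the limit of 32 levels and the convention that level 0 is the highest priority are all assumptions. The table is updated whenever a task becomes ready, so picking the next task costs the same however many tasks exist.

```python
from collections import deque


class ReadyTable:
    """Ready-task table that is updated incrementally as tasks become ready."""

    LEVELS = 32  # assumed number of priority levels; level 0 is the highest

    def __init__(self):
        self.bitmap = 0  # bit p is set while level p holds a ready task
        self.queues = [deque() for _ in range(self.LEVELS)]

    def make_ready(self, task, priority):
        """Record that a task is ready; the table is updated on the spot."""
        self.queues[priority].append(task)
        self.bitmap |= 1 << priority

    def pick_next(self):
        """Remove and return the highest-priority ready task, or None if idle."""
        if self.bitmap == 0:
            return None
        # Isolate the lowest set bit; its index is the best ready level.
        # No loop over tasks is needed, so the cost does not grow with load.
        priority = (self.bitmap & -self.bitmap).bit_length() - 1
        task = self.queues[priority].popleft()
        if not self.queues[priority]:
            self.bitmap &= ~(1 << priority)  # level is now empty
        return task
```

On real hardware the bit search is usually a single count-leading-zeros or count-trailing-zeros instruction, which is what makes the selection time fixed.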

Preemptive task switching or task preemption is the act of temporarily interrupting a task, without requiring its cooperation, and with the intention of resuming it at a later time. This is known as a context switch, normally carried out by a privileged task or part of the system known as a preemptive scheduler. A preemptive scheduler has the power to preempt or interrupt, and later resume, system tasks.

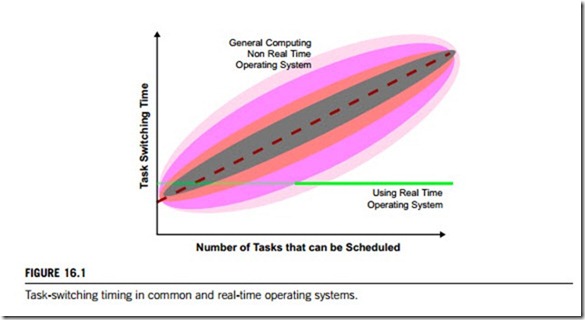

There are two types of timing behavior for task switching, as can be seen in Figure 16.1. In a standard operating system, the task-switching time generally rises as the number of tasks to schedule increases. However, the actual time for a task switch is not the time shown by the dashed line, but may be well above or well below it. The shaded regions in the figure show the range within which the actual task-switching time may fall around this predicted value. The horizontal solid line shows how the task-switching time of a real-time operating system compares with this. It is constant, independent of any load factor.

In some instances, such as the leftmost area of Figure 16.1, the task-switching time may be quicker for a standard operating system than for an RTOS, but for real-time embedded applications this is not important. In fact, the term real-time does not mean as fast as possible but rather indicates consistency and repeatability; that is, it is deterministic.

To have fixed-time task switching, a RTOS needs to have information about the timing performance of its final system. The behavior metrics to be specified are:

(a) Task-switching latency: task-switching or context-switching latency is the time needed to save the context of a currently executing task and switch into another task. It is important that this time is short and, above all, bounded.

(b) Interrupt latency: this is the time that elapses between the execution of the last instruction of the interrupted task and the first instruction in the interrupt handler, or simply the time from the interrupt to the start of its handler. This is a metric of the response of the system to an external event.

(c) Interrupt dispatch latency: this is the time to go from the last instruction in the interrupt handler to the task next scheduled to run, i.e. the time needed to go from interrupt level to task level.

(2) Task scheduling and synchronization

Most operating systems offer a variety of mechanisms for scheduling and synchronization. These are necessary in a preemptive environment containing many tasks, because without them the data and resources shared by the tasks might well be corrupted. They are also provided by a RTOS, by means of the following implementations.

(a) Multitasking and preemptibility

The scheduler in a RTOS should be able to preempt any task in the system, and give the resource to the task that needs it most. It should also be able to handle multiple levels of interrupts; the RTOS should be preemptible not only at task level, but at the interrupt level as well.

(b) Task priority and priority inheritance

In order to preempt tasks effectively, a RTOS needs to be able to determine which task has the earliest deadline to meet, so each task is assigned a priority level. Deadline information is converted to priority levels which are used by the operating system to allocate resources. When using priority scheduling, it is important that the RTOS has a sufficient number of possible levels, so that applications with stringent priority requirements can be implemented. Unbounded priority inversion occurs when a higher-priority task must wait for a low-priority task to release a resource while that low-priority task is itself kept from running by a medium-priority task. The RTOS can prevent this by temporarily giving the low-priority task the same priority as the higher-priority task that it is blocking (called priority inheritance). In this case, the blocking task can finish with the resource without being preempted by a medium-priority task.
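The effect of priority inheritance can be modelled in a few lines. The sketch below is a simplified illustration under stated assumptions: the names `Task`, `InheritanceMutex` and `next_to_run` are hypothetical, a lower number means a higher priority, and a task is assumed to hold at most one mutex at a time, so its priority can simply be reset on release.

```python
class Task:
    def __init__(self, name, priority):
        self.name = name
        self.base_priority = priority  # assigned at design time
        self.priority = priority       # effective priority seen by the scheduler


class InheritanceMutex:
    """Mutex that lends the priority of a blocked task to the current owner."""

    def __init__(self):
        self.owner = None
        self.waiters = []

    def acquire(self, task):
        """Return True if the task now owns the mutex, False if it must block."""
        if self.owner is None:
            self.owner = task
            return True
        self.waiters.append(task)
        # Lower number = higher priority (assumed convention).
        if task.priority < self.owner.priority:
            self.owner.priority = task.priority  # owner inherits
        return False

    def release(self):
        """Release the mutex and return the new owner, or None."""
        # Single-mutex assumption: dropping back to the base priority is enough.
        self.owner.priority = self.owner.base_priority
        if self.waiters:
            self.owner = min(self.waiters, key=lambda t: t.priority)
            self.waiters.remove(self.owner)
        else:
            self.owner = None
        return self.owner


def next_to_run(ready_tasks):
    """Pick the ready task with the highest effective priority."""
    return min(ready_tasks, key=lambda t: t.priority)
```

With a low-priority task holding the mutex and a high-priority task blocked on it, `next_to_run` selects the low-priority owner ahead of any medium-priority task until the mutex is released, so the inversion is bounded by the length of the critical section.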

(c) Predictable task synchronization

For multiple tasks to communicate with each other in a timely fashion, predictable intertask communication and synchronization mechanisms are required. The ability to lock and unlock resources should be supported, as should the ability to access critical sections whilst maintaining data integrity. For hard real-time industrial control systems, mechanisms and policies are required that ensure consistency and minimize worst-case blocking without incurring unbounded or excessive run-time overheads. Since most recent work in maintaining the integrity of shared data has been carried out in the context of database systems, these control techniques could be adapted to RTOS task scheduling. The techniques adapted must employ semantic information that is necessarily available at design time to guarantee optimum scheduling.

(3) Determinism and high-speed message passing

Multitasking systems must share data and hardware resources among multiple tasks, yet it is usually unsafe for two or more tasks to access the same data or hardware resource simultaneously. Such shared data and resources form a critical area of a computer or a control system, which must be protected from access collisions among the tasks that are accessing them. There are four approaches to resolve this problem: temporarily masking and disabling interrupts; binary semaphores; mutexes for mutual exclusion; and message passing.
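The mutex approach can be illustrated with ordinary threads standing in for RTOS tasks. This is a minimal sketch only: `SharedCounter` and `run_tasks` are hypothetical names, and Python's `threading.Lock` plays the role that a mutex service would play in a RTOS.

```python
import threading


class SharedCounter:
    """Shared data protected by a mutex."""

    def __init__(self):
        self.value = 0
        self._mutex = threading.Lock()

    def increment(self):
        with self._mutex:    # enter the critical section
            self.value += 1  # read-modify-write on the shared data


def run_tasks(n_tasks, increments):
    """Run n_tasks concurrent tasks that each increment the counter."""
    counter = SharedCounter()

    def task():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=task) for _ in range(n_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

Because only one task at a time can hold the mutex, no update is lost and the final count equals the number of tasks multiplied by the number of increments.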

Intertask (or interprocess) message communication is an area where different operating systems have different characteristics. Most actually copy messages twice during the transfer process, typically once from the sender task into a buffer owned by the operating system and once from that buffer to the receiver task. The time this takes grows with the length of the message, so it is non-deterministic. An approach that avoids this non-determinism, and also accelerates performance, is to copy only a pointer to the message (a pointer occupies a single machine word, whatever the length of the message) and deliver that pointer to the message-receiver task without moving the message contents at all. In order to avoid access collisions, the operating system then needs to go back to the message-sender task and delete its copy of the pointer. For large messages, this eliminates the need for lengthy copying and eliminates non-determinism.

(4) Dynamic memory allocation

Determinism of service times is also an issue in the area of dynamic memory allocation. Many standard operating systems offer memory allocation services from what is termed the heap. Each task can temporarily borrow some memory from the heap by asking the operating system for a memory buffer of a specified size. When this task (or another task) has finished with this memory buffer, it returns the buffer to the operating system, which returns it to the heap, where its memory can be used again.

Heaps suffer from a phenomenon called memory fragmentation, which may cause their services to degrade. This fragmentation is caused by the continued reuse of released buffers, sometimes in smaller pieces. When a buffer is no longer needed, it is released and its memory is freed. After a heap has undergone many cycles of “in use” and “free”, small slivers of memory may appear between memory buffers that are being used by tasks. Over time, a heap will have more and more of these slivers, which will eventually result in situations where tasks requesting memory buffers of a certain size will be refused by the operating system, even though the total amount of free memory in the heap is sufficient. This problem can be solved by so-called garbage collection (defragmentation) software, but the algorithms used are often wildly non-deterministic, injecting randomly appearing random-duration delays into heap services.

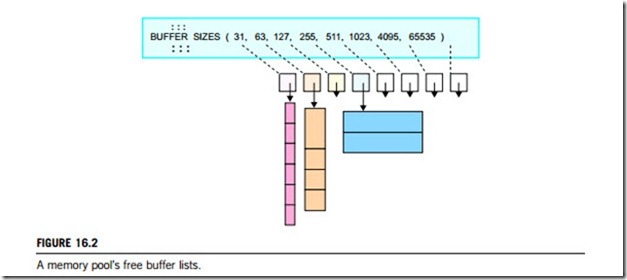

Real-time operating systems solve this problem by avoiding memory fragmentation, garbage collection, and their consequences altogether. A RTOS can offer non-fragmenting memory allocation techniques instead of heaps by limiting the variety of memory chunk sizes it makes available to application software. While this approach is less flexible than memory heaps, it does avoid memory fragmentation and the need for defragmentation that heaps generate. For example, the pools memory allocation mechanism allows application software to allocate chunks of memory of perhaps four or eight different buffer sizes per pool. Pools totally avoid external memory fragmentation, by not permitting a buffer that is returned to the pool to be broken into smaller buffers in the future. Instead, the buffer is put onto a free buffer list of buffers of its own size that are available for future re-use at this same size. This is shown in Figure 16.2.
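The pool mechanism described above can be sketched as follows. This is an illustrative model rather than the allocator of any particular RTOS: the class name `BufferPool`, its constructor argument and the policy of falling back to the next larger size class are assumptions.

```python
class BufferPool:
    """Fixed-size buffer pool with one free list per permitted buffer size."""

    def __init__(self, counts_by_size):
        # counts_by_size maps buffer size in bytes to the number of buffers,
        # for example {32: 8, 128: 4}. All memory is set aside at start-up.
        self.free = {
            size: [bytearray(size) for _ in range(count)]
            for size, count in sorted(counts_by_size.items())
        }

    def allocate(self, nbytes):
        """Return a buffer from the smallest size class that fits and still
        has a free buffer, or None if no such buffer exists."""
        # The loop is bounded by the number of size classes (a handful),
        # not by the number of buffers in use.
        for size, free_list in self.free.items():
            if size >= nbytes and free_list:
                return free_list.pop()
        return None

    def release(self, buf):
        """Put a buffer back, whole, on the free list of its own size."""
        self.free[len(buf)].append(buf)
```

A released buffer is never split or merged, so a later request for the same size class always succeeds as long as a buffer of that class is free. The price is internal waste: a 20-byte request served from the 32-byte class leaves 12 bytes unused.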

(5) Minimizing interrupt response time

The most important characteristic of a RTOS is its ability to service interrupts quickly. A failure to meet an interrupt response time requirement in a real-time control system can be catastrophic, thus a RTOS must ensure that interrupt-handling time is minimized. If possible, the interrupt handler of an operating system should save the context, create a task that will handle the interrupt service, and return control back to the operating system. When the system fires an interrupt, its microprocessor (or CPU) executes an interrupt service routine (ISR). The amount of time that elapses between a device interrupt request and the first instruction of the corresponding ISR is known as interrupt latency. The ISR can optionally execute an operating system instruction that will cause a task to be awakened. The amount of time that elapses between the interrupt request and the first instruction of the task that is awakened to handle it is known as task response time. In order to compute the system’s worst-case response time, it is necessary to examine all of the sources of interrupt response delays, to ascertain which causes the longest delay to the servicing of the highest-priority interrupt.
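As a rough worked example, the task response time can be treated as the sum of the interrupt latency, the ISR execution time and the interrupt dispatch latency. The function below is a simplified model under stated assumptions: its name, its parameters and the figures used afterwards are hypothetical, it considers a single interrupt source, and it takes the worst-case interrupt latency to be the hardware latency plus the longest period during which interrupts are disabled.

```python
def worst_case_task_response_us(hw_latency_us, longest_irq_off_us,
                                isr_us, dispatch_us):
    """Estimate the worst-case task response time in microseconds.

    Simplified single-source model (an assumption, not a general formula):
    interrupt latency = hardware latency + longest interrupts-disabled period
    task response     = interrupt latency + ISR time + dispatch latency
    """
    interrupt_latency_us = hw_latency_us + longest_irq_off_us
    return interrupt_latency_us + isr_us + dispatch_us
```

With assumed figures of 2 µs hardware latency, a 15 µs longest interrupts-disabled period, an 8 µs ISR and a 5 µs dispatch latency, the estimate is 30 µs, half of which comes from the period with interrupts disabled.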

Theoretical worst-case delays in interrupt latency and task response time may depend on the choice of CPU, choice of operating system, and how device drivers and other software programs are written. Simple rules and sound programming techniques coupled with proper RTOS interrupt architecture can ensure minimum response times. There are five methodologies that can be used to avoid worst-case delays in both interrupt latency and task response time:

(a) keep all of ISR code as simple and short as possible;

(b) do not disable interrupts;

(c) avoid instructions that increase latency;

(d) avoid improper use of operating system calls in ISR;

(e) properly prioritize interrupts relative to tasks.

A RTOS operates on a set of program structures commonly defined as classes, which is especially true in object-oriented software. Each class in an operating system supports a set of operators, commonly called kernel services, that are invoked by application processes or external events to achieve an expected behavior. For most industrial applications, a RTOS should include these program classes:

(a) Tasks that manage execution of program code; each task is independent of other tasks but can establish many forms of relationships with other tasks, including through data structures, input, output, or other constructs.

(b) Intertask communications, which are mechanisms to pass information from one task to another. Commonly used classes for intertask communications include semaphores, messages and mailboxes, queues, pipes and event flags.

(c) Semaphores, which provide a means of synchronizing tasks with events.

(d) Mutexes, which permit a task to gain exclusive access to an entity or resource.

(e) Timers and alarms, which count ticks and generate signals.

(f) Memory partitions, which manage RAM to prevent fragmentation.

(g) Queues (or pipes, or mailboxes), which permit the passing of fixed amounts of data from a producer to a consumer in FIFO order.

(h) Messages, which are useful in managing variable size data transmissions from a sender to a receiver.

(i) Kernel services, which are routines that are executed to achieve a certain behavior of the system. When an application code entity requires a function provided by the kernel, it initiates a kernel service request for that function.

(j) Event broker or handler, which supports a programming paradigm in which the flow of the program is determined by sensor outputs or user actions (mouse clicks, key presses) or messages from other programs or threads. In embedded systems the same may be achieved using interrupts instead of a constantly running main loop; in that case the event-detection portion of the architecture resides completely in hardware.

(k) ISR (interrupt service routine), which is a software routine that is activated to respond to an interrupt.

16.1.2 Real-time operating systems for different platforms

A real-time system is called an embedded control system when the software is encapsulated by the hardware it controls. The microprocessor chipset used to control the mixture of fuel with air in the carburettor of many automobiles is an example of a real-time embedded control system. A RTOS differs from a standard operating system in that a user of the former can access the microprocessor and peripherals directly. Such an ability helps to meet deadlines.

Any embedded control system has a set of hardware components on which processing can operate. In an embedded control system, they constitute the system with which the operating system is working. Any RTOS also requires a compatible hardware platform. There are two sets of basic assumptions made about the hardware platforms, as specified below.

(1) Real-time operating systems for single-microprocessor platforms

The assumptions below are the commitments made by the single-microprocessor platforms to meet the requirements of real-time operating systems:

(a) The microprocessor should provide sufficient processing throughput to meet the time requirements of each application.

(b) The microprocessor should have access to or provide the required I/O devices and memory resources.

(c) The microprocessor should have access to timer resources to enable sharing of CPU cycles based on system time.

(d) The microprocessor should provide a mechanism for the RTOS to take control if an application attempts to perform an operation that is not valid. Valid operations are internal to an application, or they may cross the boundary between an application and the modules or components with which they interact. If they cross that boundary, the interactions should be identified, agreed, and verified. They form a set of commitments that the application supplier must convey to the embedded control system integrator, platform supplier, and RTOS supplier.

(2) Real-time operating systems for multiprocessor platforms

Embedded multiprocessor systems typically have a microprocessor controlling each device. Most real-time operating systems that are multiprocessor-capable use a separate instance of the kernel on each microprocessor. The multiprocessor capability comes from the kernel’s ability to send and receive information between microprocessors. In many systems that support multiprocessing there is no difference between the single-microprocessor case and the multiprocessor case from the task’s point of view. The RTOS uses a table in each local kernel that contains the location of each task in the system. When one task sends a message to another, the local kernel looks up the location of the destination task and routes the message appropriately. From the task’s point of view, all tasks are executing on the same microprocessor.
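The table lookup that makes message routing transparent to tasks can be sketched as below. This is an illustration only: `LocalKernel`, its attributes and the dictionary used as an interconnect are hypothetical stand-ins for whatever kernel structures and inter-processor link a real system provides.

```python
class LocalKernel:
    """One kernel instance per microprocessor, each holding the task table."""

    def __init__(self, cpu_id, task_locations, interconnect):
        self.cpu_id = cpu_id
        self.task_locations = task_locations  # task name -> microprocessor id
        self.interconnect = interconnect      # microprocessor id -> kernel
        self.mailboxes = {}                   # local task name -> messages
        interconnect[cpu_id] = self

    def send(self, dest_task, message):
        """Called by a task; the task never needs to know where dest_task runs."""
        cpu = self.task_locations[dest_task]  # look up the destination
        if cpu == self.cpu_id:
            self._deliver(dest_task, message)  # same microprocessor
        else:
            # Stand-in for the inter-processor link (assumed).
            self.interconnect[cpu]._deliver(dest_task, message)

    def _deliver(self, dest_task, message):
        self.mailboxes.setdefault(dest_task, []).append(message)
```

A task calls `send` in exactly the same way whether the destination is local or remote, which is why, from the task's point of view, all tasks appear to run on the same microprocessor.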

Multiprocessors can be configured into a variety of forms, from loosely coupled computing grids that use the Internet for communication, to tightly coupled shared-resource architectures referred to as symmetric multiprocessors, or SMPs. With the latter all microprocessors (generally configured into pairs or groups of four) are identical and share a set of resources. This model lends itself to certain programming approaches, and RTOS facilities that can be exploited to benefit the developer. Since all microprocessors have access to the same physical memory, a process or a task can run on any microprocessor from the same memory location. This is the key to adapting an application to SMP architecture.

To utilize the resources of a SMP system, the RTOS must meet the following requirements:

(a) The RTOS that runs on SMP architecture must be ported to work with the SMP and the underlying microprocessor architecture.

(b) The RTOS enables utilization of all the microprocessors.

(c) The RTOS must get all the microprocessors to work on the data simultaneously.

(d) The RTOS must manage operation of multiple instruction streams on independent microprocessors.

(e) The RTOS must provide a mechanism to handle inter-processor (note: not “interprocess”) communication.

(f) The RTOS must enable synchronization among the microprocessors.

One method of achieving programming transparency in a multiprocessor system is to assign individual tasks to run on specific microprocessors based on their availability. In this way, the processing load can be shared among microprocessors, with work automatically assigned to one that is free. The RTOS must determine whether a microprocessor is free and, if it is, then a task can be run on it even though the others may already be running other tasks.

Priorities are important to consider, because the RTOS scheduler is designed to maintain priority execution of all tasks. Tasks can therefore safely assume that no lower-priority task can also be executing concurrently. The RTOS must preserve this rule even in the case of a SMP, or the underlying logic upon which an application might be based could falter, and hence may not perform as intended.

Priority-based, preemptive scheduling uses multiple microprocessor cores to run tasks that are ready. The scheduler automatically runs tasks on available cores. A task that is ready can run on microprocessor-n if and only if it is of the same priority as the task(s) running on microprocessor(s)-(n-1). After initialization, the RTOS scheduler determines the highest-priority task that is ready to run. It sets the context for that task, and runs it on microprocessor-1. The scheduler then determines whether another task of equal priority is also ready. If so, that task is run on microprocessor-2, and so on. If no additional tasks are ready to run, the scheduler goes to an idle state awaiting an external event, a service request, an interrupt causing preemption, a task resume, a task sleep or relinquish or, finally, a task exit.

Preemption occurs when a task is made ready to run while a lower-priority task is running. In this event, the lower-priority task is suspended (context saved), the higher-priority task is started (context restored or initialized), and any lower-priority tasks running on other microprocessors are suspended as well. This is critical to maintain the priority order of executing tasks.
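The scheduling rule described above, under which only tasks of the single highest ready priority run concurrently, can be expressed compactly. The function below is a sketch under assumptions: the name `assign_cores` and the representation of tasks as (name, priority) pairs are hypothetical, and a lower number means a higher priority.

```python
def assign_cores(ready_tasks, n_cores):
    """Return the names of the tasks to run, one per microprocessor.

    ready_tasks is a list of (name, priority) pairs; a lower number means a
    higher priority (assumed convention). Only tasks sharing the highest
    ready priority may run together, so the result can be shorter than
    n_cores, in which case the remaining microprocessors stay idle.
    """
    if not ready_tasks:
        return []  # nothing ready: every microprocessor idles
    top = min(priority for _, priority in ready_tasks)
    peers = [name for name, priority in ready_tasks if priority == top]
    return peers[:n_cores]
```

For example, with ready tasks A and B at priority 5 and C at priority 9 on two microprocessors, A and B run together. If a task H of priority 1 then becomes ready, re-evaluating the rule yields H alone: both A and B are suspended, which is the preemption behavior described above.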

Within this automatic load-balancing approach to managing the resources of SMP architecture, additional features are beneficial to overall performance:

(a) One microprocessor can be made responsible for all external interrupt handling (does not include inter-processor interrupts needed for synchronization or communication).

(b) This leaves the other microprocessor(s) with virtually zero overhead from interrupt handling, enabling it (them) to focus all of its (their) cycles on application processing, even during periods of intense interrupt activity that otherwise might degrade performance.

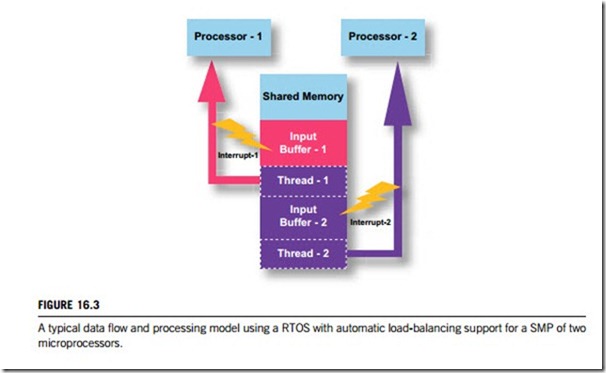

The figure above shows a typical data flow and processing model, operating in real time, using a RTOS with automatic load-balancing support for an SMP.

In this example, the input is set up to fill Buffer-1 in memory, with an interrupt generated upon a buffer-full condition (or based on input of a specified number of bytes). As Buffer-1 reaches a full condition:

(a) Buffer-1 FULL generates Interrupt-1;

(b) the ISR handling Interrupt-1 marks Task-1 READY-TO-RUN;

(c) the scheduler runs Task-1 on Microprocessor-1;

(d) data are directed to Buffer-2;

(e) Microprocessor-2 remains idle.

Then, more data arrive while Task-1 is still active, and Buffer-2 fills up:

(f) Buffer-2 FULL generates Interrupt-2;

(g) the ISR handling Interrupt-2 marks Task-2 READY-TO-RUN;

(h) the scheduler runs Task-2 on Microprocessor-2.
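The sequence (a) to (h) can be replayed with a small simulation. This is a sketch only: the function name `simulate` is hypothetical, all tasks are assumed to have equal priority, and each task is assumed to be still active when the next buffer fills, as in the example above.

```python
def simulate(buffer_events, n_cores=2):
    """Replay buffer-full interrupts and log the scheduling decisions.

    buffer_events lists buffer numbers in the order in which they fill.
    Assumptions: equal task priorities, and no task finishes during the run.
    """
    log = []
    free_cores = list(range(1, n_cores + 1))
    for n in buffer_events:
        log.append(f"Buffer-{n} FULL generates Interrupt-{n}")
        log.append(f"ISR marks Task-{n} READY-TO-RUN")
        if free_cores:
            core = free_cores.pop(0)  # lowest-numbered idle microprocessor
            log.append(f"Task-{n} runs on Microprocessor-{core}")
        else:
            log.append(f"Task-{n} waits for a free microprocessor")
    return log
```

Calling `simulate([1, 2])` reproduces the order of events in the list: Task-1 is placed on Microprocessor-1 and Task-2 on Microprocessor-2, with the ISR doing no more than marking the task ready.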