Chapter 12. Local Area Networking

Computers are conspirators. They owe most of their success to deviously working together, subverting the established order like Bolsheviks plotting the overthrow of the Czar. Each computer by itself can be safely contained and controlled in a home or office. Linked together, however, and there’s no telling what mischief they will get into. They might join together to pass subversive messages (which we call email). They might pool their resources so they can take advantage of powers beyond their individual capabilities and budgets (such as sharing a printer or a high-speed Internet connection). They can be each other’s eyes and ears, letting them pry into affairs halfway around the world. They can gather information about you, even the most delicate personal matters such as your credit card number, and share it with other conspirators (I mean “computers,” of course) elsewhere in the world. They can just make your life miserable. Oh wait, they did that when they weren’t connected, too.

This computer conspiracy is the key to the power of the local area network and the Internet. It gives your computer more reach, more power, and more capabilities. It simply makes your life more convenient and your computer more connected. In fact, at one time local area networking was called connectivity.

Fortunately, computers themselves don’t plot against you (although the people behind those computers may). Even when connected to a network, computers remain their obedient selves, ready to do your bidding.

The challenge you face in linking your computer to others is the same as faced by a child growing up with siblings—your computer has to learn to share. When kids share, you get more quiet, greater peace of mind, and less bloodshed. When computers share, you get the convenience of using the same files and other resources, centralized management (including the capability to back up all computers from one location or use one computer to back up others), and improved communication between workers in your business.

The drawback to connectivity is that computer networks are even more difficult to understand and manage than a platoon of teenagers. They have their own rules, their own value systems, their own hardware needs, and even their own language. Just listening in on a conversation between network pros is enough to make you suspect that an alien invasion from the planet Oxy-10 has succeeded. To get even a glimmer of understanding, you need to know your way around layers of standards, architectures, and protocols. Installing a network operating system can take system managers days; deciphering its idiosyncrasies can keep users and operators puzzled for weeks. Network host adapters often prove incompatible with other computer hardware, with their required interrupts and I/O addresses locking horns with SCSI boards, port controllers, and other peripherals. And weaving the wiring for a network is like threading a needle while wearing boxing gloves during a cyclone that has blown out the electricity, the candles, and your last rays of hope.

In fact, no one in his right mind would tangle with a network were not the benefits so great. File sharing across the network alone eliminates a major source of data loss, which is duplication of records and out-of-sync file updates. Better still, a network lets you get organized. You can put all your important files in one central location where they are easier to protect from both disaster and theft. Instead of worrying about backing up half a dozen computers individually, you can easily handle the chore with one command. Electronic mail can bring order to the chaos of tracking messages and appointments, even in a small office. With network-based email, you can communicate with your coworkers without scattering memo slips everywhere. Sharing a costly laser printer or large hard disk (with some networks, even modems) can cut your capital equipment costs by thousands or tens of thousands of dollars. Instead of buying a flotilla of personal laser printers, for example, you can serve everyone’s hard copy needs with just one machine.

Concepts

Network designers get excited when someone expresses interest in their rather esoteric field, which they invariably serve up as a layer cake. Far from a rich devil’s food with a heavy chocolate frosting (with jimmies), they roll out a network model, something that will definitely disappoint your taste buds because it’s entirely imaginary. They call their cake the Open Systems Interconnection Reference Model (or the OSI Model for short), and it’s a layer cake the way software is layered. It represents the structure of a typical network with the most important functions each given its own layer.

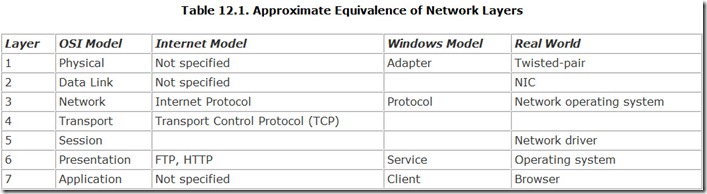

Although the discussion of networks usually begins with this serving of cake, the OSI Model does not represent any particular network. In fact, networks are by no means obliged to follow the model. Although most have layers of functions, they are often stacked differently. And while the OSI Model preaches a seven-layer model, even the foremost networkers in the world, the Institute of Electrical and Electronics Engineers, slip in one more layer for good measure. Table 12.1 highlights the equivalence of different layers in several common networking systems, including the OSI Model.

What the OSI Model shows is how many different functions you need to make a network and how those functions interrelate to one another. It’s a good place to begin, and it adds an academic aura to our network discussion, which just might make you believe you’re learning something useful.

OSI Model

In 1984, the International Organization for Standardization (ISO) laid out a blueprint to bring order to the nonsense of networking by publishing the Open Systems Interconnection Reference Model. You can imagine the bickering between hundreds of engineers trying to refine the right recipe for cooking up a network, then arguing over how many layers to divide their batter into. The final seven-layer model looks as if it might be the one and only way to build a network. These layers, ranging from the connecting wire to software applications, define functions and protocols that enable the wide variety of network hardware and software to work together. It just seems so logical, so compelling, that it is taught in most colleges and endorsed by major organizations such as IBM.

Regardless of where it fits in with your beliefs, philosophies, and diet, the OSI Model presents an excellent way to understand networks. The layering defined by the OSI Reference Model illustrates how the various elements of a network—from the wire running through your office ceiling to the Windows menu of your mail program—fit together and interact. Although few actual networks or network products exactly fit the model, the layers show how networks must be structured as well as the problems in building a network.

Physical

The first layer of the OSI Reference Model is the Physical layer. It defines the basic hardware of the network, which is the cable that conducts the flow of information between the devices linked by the network, or even the lack of a cable in wireless networking designs. This layer defines not only the type of wire (for example, coaxial cable, twisted-pair wire, and so on) but also the possible lengths and connections of the wire, the signals on the wire, and the interfaces of the cabling system—or the frequency and modulation of the radio devices that carry wireless network signals. This is the level at which the device that connects a computer to the network (the network host adapter) is defined.

Data Link

Layer 2 in a network is called the Data Link layer. It defines how information gains access to the wiring system. The Data Link layer defines the basic protocol used in the local network. This is the method used for deciding which computer can send messages over the cable at any given time, the form of the messages, and the transmission method of those messages.

This level defines the structure of the data that is transferred across the network. All data transmitted under a given protocol takes a common form called the packet or network data frame. Each packet is a block of data that is strictly formatted and may include destination and source identification as well as error-checking information. All network data transfers are divided into one or more packets, the lengths of which are carefully controlled.

Breaking network messages into multiple packets enables the network to be shared without interference and interminable waits for access. If you transfer a large file (say, a bitmap) across the network in one piece, you might monopolize the entire network for the duration of the transfer. Everyone would have to wait. By breaking all transfers into manageable pieces, everyone gets access in a relatively brief period, thus making the network more responsive.
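To make the idea concrete, here is a minimal sketch of packetizing a large transfer and letting two senders take turns. The 1,500-byte packet size, the (sequence number, payload) layout, and the strict round-robin turn-taking are assumptions chosen for illustration; real networks set their own limits and arbitrate access in their own ways.

```python
from itertools import zip_longest

MAX_PAYLOAD = 1500  # bytes per packet; an assumed limit for illustration


def packetize(message: bytes, max_payload: int = MAX_PAYLOAD) -> list[tuple[int, bytes]]:
    """Break a message into numbered packets of at most max_payload bytes."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [
        (seq, message[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(message), max_payload))
    ]


def take_turns(*senders):
    """Round-robin (a simplification): each sender sends at most one packet per turn."""
    schedule = []
    for turn in zip_longest(*senders):
        schedule.extend(pkt for pkt in turn if pkt is not None)
    return schedule


bitmap = bytes(4000)                 # stand-in for a large bitmap file
packets = packetize(bitmap)
print([len(payload) for _, payload in packets])   # [1500, 1500, 1000]

# Sender A ships the bitmap; sender B has only a short note.
a_queue = [("A", seq) for seq, _ in packets]
b_queue = [("B", seq) for seq, _ in packetize(b"hello")]
print(take_turns(a_queue, b_queue))  # [('A', 0), ('B', 0), ('A', 1), ('A', 2)]
```

Sender B gets its note onto the wire after A’s first packet instead of waiting for the whole bitmap to finish.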

Network

Layer 3 in the OSI Reference Model is the Network layer, which defines how the network moves information from one device to another. This layer corresponds to the hardware-interface function of the BIOS of an individual computer because it provides a common software interface that hides differences in underlying hardware. Software of higher layers can run on any lower-layer hardware because of the compatibility this layer affords. Protocols that enable the exchange of packets between different networks operate at this level.
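One common job at this layer is choosing where to send a packet bound for another network. The sketch below assumes IPv4 addressing and the longest-prefix-match rule that Internet routers commonly use; the routing table entries and router names are hypothetical.

```python
import ipaddress

# Hypothetical routing table: (destination network, next hop).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "default gateway"),
    (ipaddress.ip_network("10.0.0.0/8"), "router A"),
    (ipaddress.ip_network("10.1.0.0/16"), "router B"),
]


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route that contains the address."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    _net, hop = max(matches, key=lambda route: route[0].prefixlen)
    return hop


print(next_hop("10.1.2.3"))    # router B
print(next_hop("10.9.9.9"))    # router A
print(next_hop("192.0.2.7"))   # default gateway
```

The catch-all 0.0.0.0/0 entry guarantees that every address matches at least one route.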

Transport

Layer 4 controls data movement across the network. The Transport layer defines how messages are handled—particularly how the network reacts to packets that become lost as well as other errors that may occur.
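Noticing a lost packet is the first step in reacting to one. This sketch assumes the sender numbers its packets and the receiver knows how many to expect. It reorders whatever arrived and reports the gaps. Asking for the missing packets again is left as a comment because the retransmission mechanism varies from protocol to protocol.

```python
def reassemble(packets, expected_count):
    """Put packets back in order and report any sequence numbers that never arrived.

    packets: iterable of (sequence_number, payload) pairs, possibly out of order.
    Returns (data, missing); data is None whenever something is missing.
    """
    received = dict(packets)
    missing = [seq for seq in range(expected_count) if seq not in received]
    if missing:
        return None, missing   # a transport protocol would request these again
    return b"".join(received[seq] for seq in range(expected_count)), []


# Packets 0 through 3 of a message arrive out of order, and packet 2 is lost.
arrived = [(1, b"lo, "), (0, b"hel"), (3, b"ld")]
print(reassemble(arrived, 4))                    # (None, [2])
print(reassemble(arrived + [(2, b"wor")], 4))    # (b'hello, world', [])
```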

Session

Layer 5 of the OSI Reference Model defines the interaction between applications and hardware, much as a computer BIOS provides function calls for programs. By using functions defined at this Session layer, programmers can create software that will operate on any of a wide variety of hardware. In other words, the Session layer provides the interface for applications and the network. Among personal computers, the most common of these application interfaces is IBM’s Network Basic Input/Output System (NetBIOS).

Presentation

Layer 6, the Presentation layer, provides the file interface between network devices and the computer software. This layer defines the code and format conversions that must take place so that applications running under a computer operating system, such as DOS, OS/2, or Macintosh System 7, can understand files stored under the network’s native format.

In classic networking, this function would be served by the computer’s BIOS. The first personal computers lacked any hint of network connectivity, but in 1984 IBM introduced a trend-setting system called the IBM PC Network, which has been the foundation for small computer networking ever since. The critical addition was a set of new code for the system BIOS, developed by Sytek. This code, specifically the Interrupt 5C(hex) routines, has become known as the Network BIOS, or NetBIOS.

The NetBIOS serves as a low-level application program interface for the network, and its limitations get passed on to networks built around it. In particular, the NetBIOS requires that each computer on the network wear a unique name up to 15 characters long. This limits the NetBIOS to smaller networks. Today’s operating systems use driver software that takes the place of the NetBIOS.
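The naming rule is simple enough to check mechanically. This sketch flags names longer than 15 characters and names that duplicate one another. Treating names as case-insensitive is an assumption here, reflecting the usual practice of storing NetBIOS names in uppercase. The function and the sample names are hypothetical.

```python
MAX_NETBIOS_NAME = 15  # characters allowed in a NetBIOS computer name


def check_names(names):
    """Return the problems that would keep these names off a NetBIOS network."""
    problems = []
    seen = set()
    for name in names:
        if len(name) > MAX_NETBIOS_NAME:
            problems.append(f"{name!r} is longer than {MAX_NETBIOS_NAME} characters")
        key = name.upper()   # case-insensitive comparison (an assumption)
        if key in seen:
            problems.append(f"{name!r} duplicates another computer's name")
        seen.add(key)
    return problems


print(check_names(["ACCOUNTING", "accounting", "RECEPTION-DESK-PC2"]))
```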

Application

Layer 7 is the part of the network that you deal with personally. The Application layer includes the basic services you expect from any network, including the ability to deal with files, send messages to other network users through the mail system, and control print jobs.

Internet Model

The Internet does not neatly fit the seven-layer OSI Model for the simple reason that the Internet is not meant to be a single network; it is a network of networks. Consequently, the Internet standards do not care about, nor do they describe, a “physical” layer or an “application” layer. (The World Wide Web standards arguably define a “presentation” layer.) When you use the Internet, however, the computer network you use to tie into the Internet becomes a physical layer, and your browser acts as an application layer.

Similarly, Ethernet does not conform to a seven-layer model because its concerns are only the Physical and Data Link layers. Note that Ethernet breaks the Data Link layer into its own two-layer system.

Windows Model

When you work across a network using Windows, all the OSI layers come into play. Windows sees networks as layered somewhere between the two-tier practical approach and the seven layers of OSI. Microsoft assigns four levels of concern when configuring your network, using your network or operating system software. Under Windows, these levels include the adapter, protocol, service, and client software, as shown in the Windows Select Network Component Type dialog box (see Figure 12.1).

In this model, the network has four components—the client, the adapter, the protocol, and the service. Each has a distinct role to play in making the connection between computers.

Adapter

The adapter is the hardware that connects your computer to the network. The common term network interface card, often abbreviated as NIC, is one form of host adapter, a board you slide into an expansion slot. The host adapter function also can be integrated into the circuitry of the motherboard, as it often is in business-oriented computers.

No matter its form or name, the adapter is the foundation of the physical layer of the network, the actual hardware that makes the network work with your computer. It translates the parallel bus signals inside your computer into a serial form that can skitter through the network wiring. The design and standards that the adapter follows determine the form and speed of the physical side of the network.
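The parallel-to-serial translation can be modeled in a few lines. This sketch sends each byte least-significant bit first, the bit order Ethernet uses on the wire. It deliberately ignores line coding, framing, and timing, which real adapters handle in hardware.

```python
def serialize(data: bytes) -> list[int]:
    """Turn bytes (the parallel form) into a single stream of bits (the serial form).

    Each byte goes out least-significant bit first, as on an Ethernet wire.
    """
    return [(byte >> bit) & 1 for byte in data for bit in range(8)]


def deserialize(bits: list[int]) -> bytes:
    """Reassemble bytes from an LSB-first bit stream at the receiving adapter."""
    return bytes(
        sum(bits[i + bit] << bit for bit in range(8))
        for i in range(0, len(bits), 8)
    )


stream = serialize(b"\x01\x80")
print(stream)  # [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
```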

From a practical perspective, the network adapter is generally the part of the network that you must buy if you want to add network capabilities to a computer lacking them. You slide a network host adapter or NIC into an expansion slot in your computer to provide a port for plugging into the network wire.

Protocol

The protocol is the music of the packets, the lyrics that control the harmony of the data traffic through the network wiring. The protocol dictates not only the logical form of the packet—the arrangement of address, control information, and data among its bytes—but also the rules on how the network deals with the packets. The protocol determines how the packet gets where it is going, what happens when it doesn’t, and how to recover when an error appears in the data as it crosses the network.

Support for the most popular and useful protocols for small networks is included with today’s operating systems. It takes the form of drivers you install to implement a particular networking system. Windows, for example, includes several protocols in its basic package. If you need to, you can add others as easily as installing a new software driver.

Service

The service of the network is the work the packets perform. The services are often several and always useful. Network services include exchanging files between disk drives (or making a drive far away on the network appear to be local to any or every computer in the network), sharing a printer resource so that all computers have access to a centralized printer, and passing electronic mail from a centralized post office to individual machines.

Most networking software includes the more useful services as part of the basic package. Windows includes file and printer sharing as its primary services. The basic operating system also includes email support. Again, new services are as easy to add as new driver software.

Client

To the network, the client is not you but rather where the operating system of your computer and the network come together. It’s yet another piece of software, the one that brings you the network resources so that you can take advantage of the services. The client software allows the network to recognize your computer and to exchange data packets with it.

Architecture

Now that we’re done with the stuff that college professors want you to know to understand networks, let’s get back to the real world and look at how networks are put together. Networks link computers, but a link can take many forms, which a visit to any sausage shop will readily confirm.

With networks, computers link together in many ways. The physical configuration (something those professors call a topology) describes how computers are connected, like the sausages that squirt out of the grinder. Sausages link in a straight line, one after another. Networks are more versatile.

Moreover, by their nature, networks define hierarchies of computers. Some networks make some computers more important than others. The terms describing these arrangements are often bandied about as if they mean something. The descriptions that follow should help you keep up with the conversation.

Topologies

In mathematics, topology is an elusive word. Some mathematicians see it as the study of geometry without shapes or distance. Some definitions call it the study of deformation: the properties of an object that survive when you change its size or shape by stretching or twisting it. A beach ball has the same topology with or without air. Concepts such as inside and outside survive the deformation, although they change physical places. It’s a rich, rewarding, and fun part of mathematics.

Topology describes how the parts of an object relate to and connect with one another. The same word is applied to networks to describe how network nodes connect together. It describes the network without reference to distance or physical location. It shows the paths that signals must travel from one node to another.

Designers have developed several topologies for computer networks. Most can be reduced to one of four basic layouts: linear, ring, star, and tree. The names describe how the cables run throughout an installation.

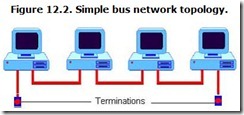
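Because a topology is just a pattern of connections, you can model it as a graph and ask which path a signal must take. This sketch builds ring, star, and tree layouts as adjacency lists and uses breadth-first search to find the shortest chain of hops. The node names are hypothetical. A bus could be modeled like a star by treating the shared backbone as a single node, though that is a modeling convenience, not a physical description.

```python
from collections import deque


def ring(n):
    """Each computer links to the one before it and the one after it."""
    return {f"pc{i}": [f"pc{(i - 1) % n}", f"pc{(i + 1) % n}"] for i in range(n)}


def star(n, center="hub"):
    """Every computer has its own cable to a central hub."""
    graph = {center: [f"pc{i}" for i in range(n)]}
    graph.update({f"pc{i}": [center] for i in range(n)})
    return graph


def tree(hubs, per_hub):
    """Several star hubs joined to a root hub."""
    graph = {"root": []}
    for h in range(hubs):
        hub = f"hub{h}"
        pcs = [f"pc{h}.{i}" for i in range(per_hub)]
        graph["root"].append(hub)
        graph[hub] = ["root", *pcs]
        graph.update({pc: [hub] for pc in pcs})
    return graph


def path(graph, start, goal):
    """Breadth-first search: the shortest chain of hops from start to goal."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbor in graph[node]:
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


print(path(ring(6), "pc0", "pc3"))           # ['pc0', 'pc5', 'pc4', 'pc3']
print(path(star(6), "pc0", "pc3"))           # ['pc0', 'hub', 'pc3']
print(path(tree(2, 3), "pc0.0", "pc1.2"))    # ['pc0.0', 'hub0', 'root', 'hub1', 'pc1.2']
```

In the ring, the signal passes through intermediate computers; in the star, it passes only through the hub.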

Linear

A network with linear cabling has a single backbone, one main cable that runs from one end of the system to the other. Along the way, computers tap into this backbone to send and receive signals. The computers link to the backbone with a single cable through which they both send and receive. In effect, the network backbone functions as a data bus, and this configuration is often called a bus topology. It’s as close as a network comes to the sausages streaming from the grinding machine. Figure 12.2 shows a network bus.

In the typical installation, a wire leads from the computer to the backbone, and a T-connector links the two. The network backbone has a definite beginning and end. In most cases, these ends are terminated with a resistor matching the characteristic impedance of the backbone cable. That is, a 50-ohm network cable will have a 50-ohm termination at either end. These terminations prevent signals from reflecting from the ends of the cable, thus helping ensure signal integrity.
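The benefit of a matched terminator falls out of the standard transmission-line reflection coefficient, Γ = (Z_load − Z_cable) / (Z_load + Z_cable), which gives the fraction of signal voltage bounced back at the end of the cable. This sketch uses 50 ohms, the characteristic impedance of Ethernet’s coaxial cables. It models an unterminated end as an infinite load.

```python
import math


def reflection_coefficient(z_load: float, z_cable: float) -> float:
    """Fraction of signal voltage reflected where a cable meets its load.

    Gamma = (Z_load - Z_cable) / (Z_load + Z_cable); 0 means no reflection.
    An open, unterminated cable end is treated as an infinite load (Gamma = 1).
    """
    if math.isinf(z_load):
        return 1.0
    return (z_load - z_cable) / (z_load + z_cable)


print(reflection_coefficient(50, 50))        # 0.0  matched terminator
print(reflection_coefficient(math.inf, 50))  # 1.0  cable end left open
print(reflection_coefficient(75, 50))        # 0.2  mismatched terminator
```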

Ring

The ring topology looks like a linear network that’s biting its own tail. The backbone is a continuous loop, a ring, with no end. But the ring is not a single, continuous wire. Instead, it is made of short segments daisy-chained from one computer to the next, the last connected, in turn, to the first. Each computer thus has two connections. One wire connects a computer to the computer before it in the ring, and a second wire leads to the next computer in the ring. Signals must pass through one computer to get to the next, and the signals typically are listened to and analyzed along the way. You can envision it as a snake eating its own tail (or the first sausage swallowing the last link). If neither image awakens your imagination, Figure 12.3 also shows a network ring.

Star

Just as rays blast out from the core of a star, in the star topology, connecting cables emanate from a centralized location called a hub, and each cable links a single computer to the network. A popular image for the star topology is an old-fashioned wagon wheel—the network hub is the hub of the wheel, the cables are the spokes, and the computers are ignored in the analogy. Try visualizing them as clumps of mud clinging to the rim (which, depending on your particular network situation, may be an apt metaphor). Figure 12.4 shows a small network using the star topology.

Star-style networks have become popular because their topology matches that of other office wiring. In the typical office building, the most common wiring is used by telephones, and telephone wiring converges at the wiring closet, which houses the Private Branch Exchange, or PBX (the telephone switching equipment for a business). Star-style topologies require only a single cable and connection for each device to link to the central location where all cables converge into the network hub.

Tree

When multiple hubs are connected together, the result is a tree. More like a family tree than some great oak, the tree spreads out and, at least potentially, connects many more nodes than might a single hub. Figure 12.5 shows a small network tree.

The typical home or small business network is a star, whereas large networks take the form of trees. (And network users eat the sausages.)

Hierarchies

Computers have classes—or at least a class system—in some networking schemes. Whereas some networks treat all computers the same, others elevate particular computers to a special, more important role as servers. Although the network performs many of the same functions in either case, these two hierarchical systems enforce a few differences in how the network is used.

Client/Server

In the client/server system, the shared resources of the network—files, high-speed connections with the Internet, printers, and the email system—are centralized on one or more powerful computers with very large disk storage capacity called servers. Individual workstation computers used by workers at the company are called clients.

Exactly what is on the server (or servers) and what is local to each client depends on the choices of the network manager. The server may host as little as a single shared database. The server may also host the data files used by each client. In extreme cases, programs used by each client load from a disk in the server.

As the network places more reliance on the server, the load on both the network and the server increases. Because nearly every computer operation becomes a network operation, normal day-to-day use swallows up network bandwidth, and a slowdown in the network or the server slows work on every computer.

The strong point of the centralized client/server network is ease of administration. The network and all its connected clients are easier to control. Centralizing all data files on the server makes data and programs easier to secure and back up. Also, it helps ensure that everyone on the network is using the same programs and the same data.

When you surf the Internet, your computer becomes the client, and the computer that provides the Web pages you read is the server.
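The client/server relationship shows up directly in how network programs are written. This minimal sketch uses Python’s standard socket module on the local loopback address. The server waits for a request and answers it, while the client connects, asks, and reads the reply. The request text and the “served:” reply format are hypothetical.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Server: accept one client, read its whole request, send back a reply."""
    conn, _addr = listener.accept()
    with conn:
        request = b""
        while chunk := conn.recv(1024):
            request += chunk
        conn.sendall(b"served: " + request)


def fetch(port: int, request: bytes) -> bytes:
    """Client: connect, send a request, signal that it is complete, read the reply."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(request)
        sock.shutdown(socket.SHUT_WR)   # tell the server the request is finished
        reply = b""
        while chunk := sock.recv(1024):
            reply += chunk
        return reply


listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))          # port 0: let the OS pick a free port
listener.listen(1)
port = listener.getsockname()[1]

server_thread = threading.Thread(target=serve_once, args=(listener,))
server_thread.start()
reply = fetch(port, b"index.html")
server_thread.join()
listener.close()
print(reply)  # b'served: index.html'
```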

Peer-to-Peer

The client/server system is a royalist system, particularly if you view a nation’s leader as a servant of the people rather than a profiteer. The opposite is the true democracy, in which every computer is equal. Computers share files and other resources (such as printers) among one another. They share equally, each as the peer of the others, so this scheme is called peer-to-peer networking.

Peer-to-peer means that there is no dedicated file server. All computers have their own, local storage, but each computer is (or can be) granted access to the disk drives and printers connected to the other computers. The peer-to-peer system is not centralized. In fact, it probably has no center, only a perimeter.

When you use the Internet to share files (say, downloading an MP3 file from someone else’s collection), you’re using peer-to-peer networking.

In a peer-to-peer network, no one computer needs to be particularly endowed with overwhelming mass storage or an incomprehensible network operating system. But the computers need not all be equal. In fact, one peer may provide a network resource, such as a connection to a printer or the Internet. It may even have a large disk that’s used to back up the files from other peers.

In other words, the line between client/server and peer-to-peer systems can be fuzzy, indeed. There is no electrical difference between the two systems. A peer-to-peer network and a client/server network may use exactly the same topology. In fact, the same network may take different characterizations, depending on how it is used.

Standards

A network is a collection of ideas, hardware, and software. The software comprises both the programs that make it work and the protocols that let everything work together. The hardware involves the network adapters as well as the wires, hubs, concentrators, routers, and even more exotic fauna. Getting it all to work together requires standardization.

Because of the layered design of most networks, these standards can appear at any level in the hierarchy, and they do. Some cover a single layer; others span them all to create a cohesive system.

Current technology makes the best small computer network a hub-based peer-to-peer design, cabled with twisted-pair wiring and running the software built in to your operating system. The big choice you face is the hardware standard. In the last few years, networks have converged on two basic hardware standards: 10Base-T and 100Base-T. Both are specific implementations of Ethernet.

Just as celebrities are people famous principally for being famous, 10Base-T and 100Base-T are popular because they are popular. They are well known and generally understood. Components for either are widely available and inexpensive. Setting them up is easy and support is widely available.

The distinguishing characteristic of network hardware is the medium used for connecting nodes. Small networks—the kind you might use in your home, office, or small business—most commonly use one of three interconnection types. They may be wired together in the classic style, typically using twisted-pair wires in a star-based topology. They may be linked wirelessly using low-powered radio systems, or their signals may be piggybacked on an already existing wiring system, such as telephone lines or utility power lines.

Ethernet

The elder statesman of networking is Ethernet. It still reigns as king of the wires, and wireless systems have appropriated much of its technology. It shows the extreme foresight of the engineers at Xerox Corporation’s Palo Alto Research Center (PARC) who developed it in the 1970s for linking the company’s early Alto workstations to laser printers. The invention of Ethernet is usually credited to Robert Metcalfe, who later went on to found 3Com Corporation, an early major supplier of computer networking hardware and software. During its first years, Ethernet was proprietary to Xerox, a technology without a purpose, in a world in which the personal computer had not yet been invented.

In September 1980, however, Xerox joined with minicomputer maker Digital Equipment Corporation and semiconductor manufacturer Intel Corporation to publish the first Ethernet specification, which later became known as E.SPEC VER.1. The original specification was followed in November 1982 by a revision that has become today’s widely used standard, E.SPEC VER.2.

This specification is not what most people call Ethernet, however. In January 1985, the Institute of Electrical and Electronics Engineers published a networking system derived from Ethernet but not identical to it. The result was the IEEE 802.3 specification. Ethernet and IEEE 802.3 share many characteristics (physically, they use the same wiring and connection schemes), but each uses its own packet structure. Consequently, although you can plug host adapters for true Ethernet and IEEE 802.3 together in the same cabling system, the two standards will not be able to talk to one another. No matter. No one uses real Ethernet anymore. They use 802.3 instead and call it Ethernet.

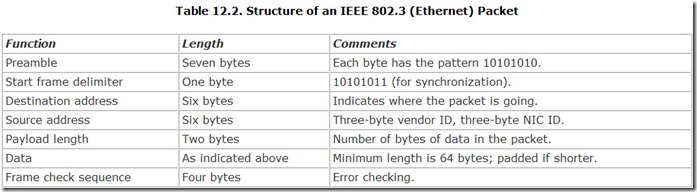

Under the OSI Model, Ethernet provides the Data Link and Physical layers, although Ethernet splits the Data Link layer into two layers of its own: Media Access Control and Logical Link Control (or the MAC-client layer). The Ethernet specifications define the cable, connections, and signals as well as the packet structure and control on the physical medium. Table 12.2 shows the structure of an IEEE 802.3 packet.
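The difference between the two packet structures is visible in the two-byte field that follows the destination and source addresses. In IEEE 802.3 framing, a value up to 1,500 gives the length of the data field. A value of 0x0600 or more names the protocol type, as in Ethernet version 2. This sketch decodes only the 14-byte header. It assumes the preamble and start-of-frame delimiter are already stripped, and it ignores the trailing frame check sequence. The sample addresses are hypothetical.

```python
import struct


def format_mac(raw: bytes) -> str:
    """Write a 6-byte hardware address in the usual colon-separated hex form."""
    return ":".join(f"{b:02x}" for b in raw)


def parse_header(frame: bytes) -> dict:
    """Decode the 14-byte header at the front of an Ethernet or IEEE 802.3 frame.

    Assumes the frame begins at the destination address (preamble and
    start-of-frame delimiter already removed by the adapter).
    """
    if len(frame) < 14:
        raise ValueError("frame too short to hold a header")
    dest, src, field = struct.unpack("!6s6sH", frame[:14])
    if field <= 1500:
        kind = "IEEE 802.3: field is the data length"
    elif field >= 0x0600:
        kind = "Ethernet version 2: field is the protocol type"
    else:
        kind = "undefined"
    return {"destination": format_mac(dest), "source": format_mac(src),
            "field": field, "kind": kind}


broadcast = bytes.fromhex("ffffffffffff")
sender = bytes.fromhex("020000000001")
print(parse_header(broadcast + sender + (46).to_bytes(2, "big") + bytes(46)))
print(parse_header(broadcast + sender + (0x0800).to_bytes(2, "big") + bytes(46)))
```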

Data Link Layer

The basis of Ethernet is a clever scheme for arbitrating access to the central bus of the system. The protocol, formally known as Carrier Sense, Multiple Access with Collision Detection (CSMA/CD), is often described as being like a party line. It’s not. It’s much more like polite conversation. All the computers in the network patiently listen to everything that’s going on across the network backbone. Only when there is a pause in the conversation will a new computer begin to speak. And if two or more computers start to talk at the same time, all become quiet. They will wait for a random interval (and because it is random, each will probably wait a different interval) and, after the wait, attempt to begin speaking again. One will be lucky and win access to the network. The other, unlucky computers hear the first computer blabbing away and wait for another pause.
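Ethernet picks that random interval with truncated binary exponential backoff. After the nth collision, a station waits a random whole number of slot times from 0 to 2^k − 1, where k is the smaller of n and 10. It abandons the frame after 16 attempts. The contention loop below is a simplified model, not the 802.3 state machine: all colliding stations share one collision count, and the station that picks the uniquely earliest slot wins.

```python
import random

SLOT_TIME_US = 51.2   # one slot = 512 bit times at 10 Mbps, in microseconds
MAX_ATTEMPTS = 16     # a station gives up on a frame after 16 tries
BACKOFF_CAP = 10      # the waiting range stops doubling after 10 collisions


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Truncated binary exponential backoff: 0 .. 2**k - 1 slots, k = min(collisions, 10)."""
    return rng.randrange(2 ** min(collisions, BACKOFF_CAP))


def resolve(stations: int, rng: random.Random) -> tuple[int, int]:
    """Simplified model: colliding stations back off until one goes first, alone.

    Returns (winning station index, number of collisions suffered).
    """
    collisions = 1                        # everyone has just collided once
    while collisions < MAX_ATTEMPTS:
        waits = [backoff_slots(collisions, rng) for _ in range(stations)]
        earliest = min(waits)
        if waits.count(earliest) == 1:    # a unique first speaker wins the wire
            return waits.index(earliest), collisions
        collisions += 1                   # a tie means they collide again
    raise RuntimeError("excessive collisions: frame abandoned")


rng = random.Random(42)                   # fixed seed so runs are repeatable
winner, collisions = resolve(stations=3, rng=rng)
print(f"station {winner} won after {collisions} collision(s)")
```

Ties are possible, which is why the waiting range doubles after each collision: the more crowded the wire, the more spread out the retries become.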

Access to the network line is not guaranteed in any period by the Ethernet protocol. The laws of probability guide the system, and they dictate that eventually every device that desires access will get it. Consequently, Ethernet is described as a probabilistic access system. As a practical matter, when few devices (relative to the capacity of the system) attempt to use the Ethernet system, delays are minimal because it is unlikely that several will try to talk at once. As demand approaches the capacity of the system, however, the efficiency of the probability-based protocol plummets. The size limit of an Ethernet system is set not by the number of computers but by the amount of traffic: the more packets computers send, the more contention, and the more frustrated attempts.
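A toy probability model shows why efficiency collapses as traffic grows. Assume (a simplification, not the 802.3 algorithm) that each of n stations independently tries to send in a given time slot with probability p. A slot carries useful data only when exactly one station sends, which happens with probability n · p · (1 − p)^(n − 1).

```python
def success_probability(stations: int, p: float) -> float:
    """Chance that exactly one of `stations` transmits in a given slot.

    Toy model (an assumption): each station independently tries to send
    in the slot with probability p.
    """
    return stations * p * (1 - p) ** (stations - 1)


# Ten stations, growing traffic: useful slots peak, then fall off sharply.
for p in (0.05, 0.1, 0.3, 0.6):
    print(p, round(success_probability(10, p), 4))
```

With ten stations, the chance of a clean slot peaks near p = 0.1 and then drops steeply as each station becomes more eager to talk.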

Physical Layer

The Ethernet packet protocol has many physical embodiments. These can embrace just about any topology, type of cable, or speed. The IEEE 802.3 specification defines several of these, and it assigns a code name and specification to each. Among today’s Ethernet implementations, the most basic operates at a raw speed of 10MHz. That is, the clock frequency of the signals on the Ethernet (or IEEE 802.3) wire is 10MHz. Actual throughput is lower because packets cannot occupy the full bandwidth of the Ethernet system. Moreover, every packet contains formatting and address information that steals space that could be used for data.
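The cost of that formatting and addressing can be estimated. This sketch assumes standard 802.3 framing: an 8-byte preamble and start-of-frame delimiter, a 14-byte header, a 4-byte frame check sequence, and a 12-byte-time gap between frames. It also assumes the basic system’s 10 million bits per second and a single station sending back-to-back frames with no collisions, so the results are best cases.

```python
PREAMBLE_AND_SFD = 8    # 7-byte preamble + 1-byte start-of-frame delimiter
HEADER = 14             # destination, source, length/type
FCS = 4                 # frame check sequence (CRC-32)
INTERFRAME_GAP = 12     # mandatory idle time, measured in byte times
OVERHEAD = PREAMBLE_AND_SFD + HEADER + FCS + INTERFRAME_GAP   # 38 bytes


def payload_throughput_mbps(payload: int, line_rate_mbps: float = 10.0) -> float:
    """Best-case data rate for back-to-back frames from one station."""
    if not 46 <= payload <= 1500:
        raise ValueError("the 802.3 data field holds 46 to 1500 bytes")
    return line_rate_mbps * payload / (payload + OVERHEAD)


print(round(payload_throughput_mbps(1500), 2))  # 9.75  largest frames
print(round(payload_throughput_mbps(46), 2))    # 5.48  smallest frames
```

Large frames waste only about 2.5 percent of the wire. Minimum-size frames lose nearly half of it to overhead.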

Originally, Ethernet used coaxial cables, and two versions of the 10MHz IEEE version of Ethernet have proved popular: 10Base-5 (which uses thick coaxial cable, about one-half inch in diameter) and 10Base-2 (which uses a thin coaxial cable, about two-tenths of an inch in diameter).

Twisted-pair wiring is now used almost universally in Ethernet systems, except in special applications (for example, to link hubs together or to extend the range of connections). Basically the same kind of wire is used for speeds that span two orders of magnitude.

The base level for twisted-pair Ethernet is 10Base-T, which transfers the same signals as the coaxial systems onto two pairs of ordinary copper wires. Each wire pair carries a 10MHz balanced signal, one carrying data to the device and the other carrying it away (in other words, separate receive and transmit channels), which makes full-duplex operation possible.

The next increment up is 100Base-T, which is likely today’s most popular wired networking standard. The 100Base-T system operates at 100Mbps, fast enough for transferring multimedia and other data-intensive applications across the network. Its speed has made it the system of choice in most new installations.

During its gestation, 100Base-T wasn’t a single system but rather a family of siblings, each designed for different wiring environments. 100Base-TX is the purest implementation and the most enduring, but it’s also the most demanding. It requires Category 5 wiring, twisted-pair cable (usually unshielded) designed for data applications. In return for the cost of the high-class wiring, it permits full-duplex operation so that any network node can both send and receive data simultaneously. The signals on the cable actually run at 125 million code bits per second (125 Mbaud) because the system sends a five-bit code group for every four bits of data, a scheme called 4B/5B encoding. The spare code groups serve as control symbols, such as idle and frame delimiters, and let the receiver spot invalid (corrupted) codes. The current 100Base-T standard is formalized in the IEEE 802.3u specification.
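The 4B/5B step can be sketched directly from the standard table of 16 data code groups. This sketch encodes the low-order nibble of each byte first (an ordering choice for illustration) and omits the control code groups. The function names are hypothetical.

```python
# Standard 4B/5B data code groups, indexed by nibble value 0x0..0xF.
FOUR_B_FIVE_B = [
    "11110", "01001", "10100", "10101", "01010", "01011", "01110", "01111",
    "10010", "10011", "10110", "10111", "11010", "11011", "11100", "11101",
]


def encode_4b5b(data: bytes) -> str:
    """Return the 5-bit code groups for data, low-order nibble of each byte first."""
    groups = []
    for byte in data:
        groups.append(FOUR_B_FIVE_B[byte & 0x0F])   # low nibble
        groups.append(FOUR_B_FIVE_B[byte >> 4])     # high nibble
    return "".join(groups)


def line_rate_mbaud(data_rate_mbps: float) -> float:
    """Five code bits travel for every four data bits."""
    return data_rate_mbps * 5 / 4


print(encode_4b5b(b"\x00\xff"))  # 11110111101110111101
print(line_rate_mbaud(100))      # 125.0
```

Because no data code group starts with more than one zero or ends with more than two, the encoded stream never contains more than three zeros in a row, which keeps enough transitions on the line for the receiver to stay synchronized.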

To make the transition from 10Base-T to higher speeds easier, 100Base-T4 was designed to work with shielded or unshielded voice-grade wiring, but it allows only half-duplex operation across four wire pairs. In addition, 100Base-T2 uses sophisticated data coding to squeeze a 100Mbps data rate onto ordinary voice-grade cable using just two wire pairs. Currently neither of these transitional formats is in general use.

At the highest-speed end, Gigabit Ethernet moves data at a billion bits per second. The first implementations used fiber optic media, but the most popular format uses the same Category 5 twisted-pair wires as slower versions of Ethernet. Commonly called 1000Base-T and officially sanctioned under the IEEE 802.3ab standard, Gigabit Ethernet’s high speed is not a direct upward mapping of 100Mbps technology. Category 5 cables won’t support a 1GHz speed. Instead, 1000Base-T uses all four pairs in a standard Cat 5 cable, each one operating at 125 million symbols per second. To create a 1000Mbps data rate, the 1000Base-T adapter splits incoming data into four streams and then uses a five-level voltage coding scheme (PAM-5) to encode two bits in every clock cycle on each pair. The data code requires only four levels. The fifth provides the redundancy used for forward error correction. Echo-cancellation circuitry lets every pair carry signals in both directions at once, so 1000Base-T runs full duplex over the four pairs of a single Cat 5 cable. The standard also defines half-duplex operation, although it is rarely used.
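The rate arithmetic, and the idea of dealing data out across four pairs, can be sketched as follows. The dibit-to-voltage mapping is a hypothetical illustration using four of the five PAM-5 levels; real 1000Base-T also scrambles the data and applies a four-dimensional trellis code that exploits the redundancy of the fifth level, none of which is modeled here.

```python
PAIRS = 4
SYMBOL_RATE = 125e6   # symbols per second on each pair
BITS_PER_SYMBOL = 2   # data bits carried by each PAM-5 symbol

# Hypothetical mapping of each 2-bit group to one of four PAM-5 levels
# (-2, -1, +1, +2); level 0 is left unused in this sketch.
DIBIT_TO_LEVEL = {0b00: -2, 0b01: -1, 0b11: +1, 0b10: +2}


def aggregate_rate() -> float:
    """Total data rate: pairs x symbols per second x bits per symbol."""
    return PAIRS * SYMBOL_RATE * BITS_PER_SYMBOL


def split_into_pairs(data: bytes) -> list[list[int]]:
    """Deal successive 2-bit groups round-robin across the four pairs."""
    lanes = [[] for _ in range(PAIRS)]
    i = 0
    for byte in data:
        for shift in (6, 4, 2, 0):            # four dibits per byte, high bits first
            dibit = (byte >> shift) & 0b11
            lanes[i % PAIRS].append(DIBIT_TO_LEVEL[dibit])
            i += 1
    return lanes


print(aggregate_rate() / 1e6)        # 1000.0
print(split_into_pairs(b"\x1b"))     # [[-2], [-1], [2], [1]]
```

Each byte yields exactly one symbol per pair, so every 125MHz clock cycle moves one byte across the cable: 125 million bytes per second, or 1000Mbps.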

Wireless

The problem with wiring together a network is the wiring, and the best way to eliminate the headaches of network wiring is to eliminate the wires themselves. True wireless networking systems do exactly that, substituting radio signals for the data line. Once an exotic technology, wireless networking is now mainstream with standardized components both readily available and affordable.

Wireless networking has won great favor, both in homes and businesses, because it allows mobility. Cutting the cable means you can go anywhere, which makes wireless perfect for notebook computers (and is why many new notebooks come with built-in wireless networking). If you want to tote your computer around your house and work anywhere, wireless is the only way to go. Many airports and businesses also use standard wireless connections, so if you equip your portable computer with wireless for your home, you may be able to use it when you’re on the move.

Moreover, wireless networks are easier to install, at least at the hardware end. You slide a PC Card into your notebook computer, plug the hub into a wall outlet, and you’re connected. If you’re afraid of things technical, that makes wireless your first choice. Of course, the hardware technology does nothing to make software installation easier, but wireless doesn’t make software setup any harder than it is with other network technologies.

On the other hand, wireless has a limited range that varies with the standard you use. Government rules restrict the power with which wireless network systems can transmit. Although equipment-makers quote ranges of 300 meters and more, that distance applies only in the open air: great if you’re computing on the football field but misleading should you want to be connected indoors. In practical application, a single wireless network hub might not cover an entire large home.

All wireless networks are also open to security intrusions. Once you put your data on the air, it is open for inspection by anyone capable of receiving the signals. Encryption, if used, will keep your data reasonably secret (although researchers have demonstrated that resourceful snoops can break the code in an hour). More worrisome is that others can tap into your network and take advantage of your Internet connection. When you block them, you sacrifice some of the convenience of wireless.

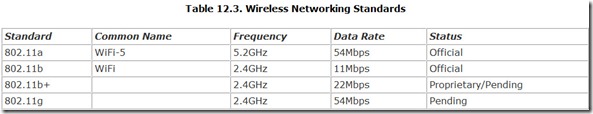

Wireless networks endured a long teething period during which they suffered a nearly fatal malaise—a lack of standardization. Wireless systems were, at one time, all proprietary. But wireless technology proved so useful that the IEEE standardized it and keeps on adding new standards. Two are currently in wide deployment, and several others are nearing approval. Table 12.3 summarizes the current standards situation.

The following discussion covers today’s wireless networking standards and those likely to become standards in order of adoption or introduction.

802.11b

Through the year 2001, IEEE 802.11b was the most popular wireless standard on the market, sold under the name Wireless Fidelity (WiFi), given to it by the industry group, the Wireless Ethernet Compatibility Alliance. That group now uses the name WiFi Alliance. WiFi equipment is also sometimes called 2.4GHz wireless networking because of the frequency band in which it operates.

Until October 2001, WiFi was the only official wireless networking standard. Despite its “b” designation, 802.11b was the first official wireless networking standard to be marketed. Its destiny was set from its conception. It was the one IEEE wireless standard with acceptable performance that could readily be built.

The range of 802.11b is usually given as up to 300 meters—nearly 1000 feet—a claim akin to saying that you’re up to 150 feet tall. Anything that gets in the way—a wall, a drapery, or a cat—cuts down that range. Moreover, the figure is mostly theoretical. Actual equipment varies widely in its reach. With some gear (such as the bargain models I chose), you’ll be lucky to reach the full length of your house.

Range also isn’t what you might think. 802.11b is designed to degrade gracefully. What that engineering nonsense means is that the farther away you get, the slower your connection becomes, stepping down from 11Mbps through 5.5Mbps and 2Mbps. When you’re near the limits of your system, you’ll have a 2Mbps connection, or even a 1Mbps one.
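The standard leaves it to each product to decide when to step down. A common approach, often called Auto Rate Fallback, simply reacts to lost frames. The sketch below is a simplified version of that idea, not something 802.11b mandates; the class name and the thresholds (two consecutive failures to step down, ten consecutive successes to step up) are hypothetical.

```python
RATES_MBPS = [1, 2, 5.5, 11]   # the four 802.11b data rates


class RateFallback:
    """Simplified auto-rate-fallback controller (hypothetical thresholds)."""

    def __init__(self, down_after: int = 2, up_after: int = 10):
        self.index = len(RATES_MBPS) - 1   # start at the top speed
        self.down_after = down_after
        self.up_after = up_after
        self.failures = 0
        self.successes = 0

    @property
    def rate(self) -> float:
        return RATES_MBPS[self.index]

    def record(self, acked: bool) -> None:
        """Update the rate after each frame, based on whether it was acknowledged."""
        if acked:
            self.failures = 0
            self.successes += 1
            if self.successes >= self.up_after and self.index < len(RATES_MBPS) - 1:
                self.index += 1            # link looks healthy: try the next speed up
                self.successes = 0
        else:
            self.successes = 0
            self.failures += 1
            if self.failures >= self.down_after and self.index > 0:
                self.index -= 1            # repeated losses: fall back one speed
                self.failures = 0


link = RateFallback()
for acked in [False, False, False, False]:   # walking out toward the edge of range
    link.record(acked)
print(link.rate)   # 2
```

Reacting only to acknowledgments keeps the logic simple, at the cost of occasionally stepping down because of a brief burst of interference rather than distance.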

Note that the range is set by the least powerful transmitter and weakest receiver. In most cases, the least powerful transmitter is in the PC Card adapter in your notebook computer. Power in these is often purposely kept low to maintain battery life and to stay within the engineering limits of the card housing. You can often achieve longer distances with an external adapter, such as one that plugs into your computer’s USB port.

802.11b is less than ideal for a home wireless network for more reasons than speed. Interference is chief among them. The 2.4GHz band within which 802.11b works is one of the most popular among engineers and designers. In addition to network equipment, it hosts cordless telephones and the Bluetooth interconnection system. Worse, consumer microwave ovens operate at about 2.45GHz, squarely within the same band. Because the band is unlicensed, you can never be sure what you’ll be sharing it with. Although 802.11b uses spread-spectrum modulation, which makes it relatively immune to interference, you can still lose network connections when you switch on your cordless phone.

802.11a

The place to start with 802.11a is with the name. Back-stepping one notch in the alphabet doesn’t mean moving down the technological ladder, as it does with software revisions. When the standards-makers at the IEEE put pen to paper for wireless standards, 802.11a did come first, but it was the dream before the reality. The high speed of 802.11a was what they wanted, but they amended their views to accommodate its slower sibling, which they knew they could deliver sooner. When manufacturers introduced equipment following the 802.11a standard, they initially promoted it as WiFi-5 because of the similarity with the earlier technology. The “5” designated the higher frequency band used by the newer system. In October 2002, however, the WiFi Alliance decided to drop the WiFi-5 term, mostly because people wondered whatever happened to WiFi-2, 3, and 4.

From the beginning, 802.11a was meant by its IEEE devisors as the big brother to 802.11b. It is designed to be faster and provide more channels to support a greater number of simultaneous users. The bit-rate of the system is 54Mbps, although in practical application actual throughput tops out at about half that rate. In addition, as the distance between the computers and access points increases, the 802.11a system is designed to degrade in speed to ensure data integrity, slowing down to about 6Mbps at the farthest reaches of its range.

802.11a fits into the U-NII (Unlicensed National Information Infrastructure) band that’s centered at about 5.2GHz, a band that’s currently little used by other equipment and services. The design of 802.11a provides for eight channels, allowing that number of access points to overlap in coverage without interference problems.

The higher frequency band has a big advantage—bandwidth. There’s more room for multiple channels, which means more equipment can operate in a given space without interference.

But the UN-II band occupied by 802.11a isn’t without its problems. The biggest is physics. All engineering rules of thumb say the higher the frequency, the shorter the range. The 5.2GHz operating frequency puts 802.11a at a severe disadvantage, at least in theory.

Practically, however, the higher intrinsic speed of 802.11a not only wipes out the disadvantage but also puts the faster standard ahead. Because of the digital technology used by the common wireless networking systems, weaker signals mean lower speed rather than an abrupt loss of signal. The farther away from the access point a user ventures, the lower the speed his connection is likely to support.

Although physics doesn’t favor 802.11a’s signals, mathematics does. Because 802.11a starts out faster, it maintains a performance edge no matter the distance. For example, push an 802.11b link to 50 feet, and you can’t possibly get it to operate faster than 11Mbps. Although that range causes some degradation at 802.11a’s higher frequency, under that standard you can expect about 36Mbps, according to one manufacturer of the equipment.

Raw distance is a red herring in figuring how many people can connect to a wireless system. When the reach of a single access point is insufficient, the solution is to add another access point rather than to try to push up the range. Networks with multiple access points can serve any size campus. The eight channels available under 802.11a make adding more access points easier.

The security weakness is part of the plan: 802.11a is designed to match 802.11b at the software level, so it shares 802.11b’s encryption scheme along with its vulnerabilities.

802.11b+

There is no such thing as an 802.11b+ standard, at least a standard adopted by some official-sounding organization such as the IEEE. But you’ll find a wealth of networking gear available that uses the technology that’s masquerading under the name. Meant to fill the gap between slow-but-cheap 802.11b and faster-but-pricier 802.11a, this technology adds a different modulation system to ordinary 802.11b equipment to gain double the speed and about 30 percent more range. Because the technology is, at heart, 802.11b, building dual-mode equipment is a breeze, and most manufacturers do it. As a result, you get two speeds for the price of one (often less, because the 802.11b+ chipset is cheaper than other 802.11b chipsets).

The heart of 802.11b+ is the packet binary convolutional coding system developed by Texas Instruments (TI) under the trademark PBCC-22. (A convolutional code encodes a stream of data so that the symbols representing each bit depend on the previous bits as well.) The “22” in the name refers to the speed. The only previous version was PBCC-11, the forerunner of PBCC-22 and also developed by TI.
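To make the idea concrete, here is a textbook rate-1/2 convolutional encoder with a constraint length of 3 and generator polynomials 7 and 5 (octal). It is a classic teaching example, not TI's PBCC-22 code, whose internals are not described here; the function name and defaults are illustrative.

```python
def conv_encode(bits, g1=0b111, g2=0b101, k=3, flush=True):
    """Rate-1/2 convolutional encoder: two output bits per input bit.

    The shift register holds the current bit plus the k-1 previous bits;
    each output bit is the parity (XOR) of the register positions selected
    by a generator polynomial.
    """
    state = 0                                   # the k-1 previous input bits
    out = []
    tail = [0] * (k - 1) if flush else []       # zeros that return the encoder to state 0
    for u in list(bits) + tail:
        reg = (u << (k - 1)) | state            # current bit in the top position
        out.append(bin(reg & g1).count("1") % 2)
        out.append(bin(reg & g2).count("1") % 2)
        state = reg >> 1                        # drop the oldest bit
    return out


print(conv_encode([1, 0, 1, 1]))   # [1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1]
```

Each output pair depends on the current bit and the two before it, so a single corrupted bit leaves traces in several pairs that a decoder (typically a Viterbi decoder) can use to correct it.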

PBCC-22 is a more efficient coding system than that used by ordinary 802.11b. In addition, the TI system uses eight-phase shift keying instead of 802.11b’s quadrature phase shift keying, but at the same symbol rate (11 million symbols per second). The result is that the system fits twice the data in the same bandwidth as 802.11b. At the same time, the signal stands out better from background noise, which, according to TI, produces 30 percent more linear range or coverage of 70 percent more area. The same technology could be used to increase the speed of the 802.11b signaling system to 33Mbps, although without benefit of the range increase. This aspect of PBCC-22 is not part of the TI chipset now offered for 802.11b+ components and has not received FCC approval.
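The doubling follows from simple arithmetic. This sketch assumes the parameters commonly cited for PBCC (they are assumptions, not figures from the text): 11 million symbols per second in both modes, a rate-1/2 code with QPSK for the 11Mbps mode, and a rate-2/3 code with 8-PSK for PBCC-22. It also checks the range-to-area claim.

```python
import math

SYMBOL_RATE = 11e6   # symbols per second, assumed equal for both schemes


def coded_rate(constellation_points: int, code_rate: float) -> float:
    """Data rate = symbols/s x bits per symbol x fraction of bits that are data."""
    return SYMBOL_RATE * math.log2(constellation_points) * code_rate


qpsk = coded_rate(4, 1 / 2)   # QPSK with a rate-1/2 code
psk8 = coded_rate(8, 2 / 3)   # 8-PSK with a rate-2/3 code
print(f"QPSK: {qpsk / 1e6:.1f} Mbps, 8-PSK: {psk8 / 1e6:.1f} Mbps")

# Coverage area grows with the square of range.
print(round(1.3 ** 2 - 1, 2))   # 0.69, i.e. about 70 percent more area
```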

802.11b+ can be considered a superset of 802.11b. Adapters and hubs meant for the new system also work with all ordinary 802.11b equipment, switching to their high speed when they find a mate operating at the higher speed.

Texas Instruments makes the only chipset to use 802.11b+ technology, so all equipment using the technology uses the TI chipset. TI often prices this chipset below ordinary 802.11b chipsets, so 802.11b+ equipment, despite its higher speed, often costs less than equipment strictly adhering to the official 802.11b standard.

802.11g

As this is written, 802.11g is a proposal rather than an official standard, although its approval is expected in 2003. In effect a “greatest hits” standard, 802.11g combines the frequency band used by 802.11b with the modulation and speed of 802.11a, putting 54Mbps operation in the 2.4GHz Industrial-Scientific-Medical (ISM) band.

Because of the lower frequency of the ISM band, 802.11g automatically gets an edge in range over 802.11a. At any given speed, 802.11g nearly doubles the coverage of 802.11a.

In addition, promoters cite that the ISM band is subject to fewer restrictions throughout the world than the 5GHz band, part of which is parceled out for military use in many countries. Of course, the opposite side of the coin is that the ISM band is more in demand and subject to greater interference. It also does not allow for as many channels as the 5GHz band.

Many of its promoters see 802.11g as a stepping stone to 802.11a. It allows engineers to create dual-speed equipment more easily because the two speeds (11Mbps and 54Mbps) will be able to share the same radio circuits.

Dual-Standard Systems

Nothing restricts a network or its equipment to using only one standard. To ease people who have already installed WiFi equipment into the higher-performance WiFi-5 standard, many manufacturers offer dual-standard equipment, including hubs that can recognize and use either standard to match the signals they receive. They also offer dual-standard network adapters capable of linking at the highest speed to whatever wireless signals are available. Nothing in the WiFi and WiFi-5 standards requires this interoperability.

Other high-speed standards do, however, guarantee interoperability. The proprietary 802.11b+ system is built around and is compatible with standard WiFi. Equipment made to one standard will operate with equipment made to the other, at the highest speed both devices support.

Similarly, 802.11g promises interoperability. In fact, at the time this is written, 802.11b+ has been proposed as an addition to 802.11g, making the proprietary standard official.

Sanctioning Organization

The various 802.11 standards are promulgated by the IEEE but are promoted by the WiFi Alliance. The organization maintains a Web site at www.weca.net.

HomePNA

One of the original design goals of the 10Base-T wiring scheme was for it to use the same kind of wires as used by ordinary telephones. Naturally, some people thought it would be a great idea to use the same wires as their telephones, but they were unwilling to give up the use of their phones when they wanted to network their computers.

Clever engineers, however, realized that normal voice conversations use only a tiny part of the bandwidth of telephone wiring—and voices use an entirely different part of that bandwidth than do computer networks. By selectively blocking the network signals from telephones and the telephone signals from networking components, they could put a network on the regular telephone wiring in a home or office while still using the same wiring for ordinary telephones.

Of course, there’s a big difference between wiring a network and wiring a telephone system. A network runs point to point, from a hub to a network adapter. A telephone system runs every which way, connecting telephones through branches and loops and often leaving stubs of wire trailing off into nowhere. Telephone wiring is like an obstacle course to network signals.

History

Tut Systems devised a way to make it all work by developing the necessary signal-blocking adapters and reducing the data rate to about a megabit per second, to cope with the poor quality of telephone wiring. Tut Systems called this system HomeRun.

In June, 1998, eleven companies, including Tut (the others being 3Com, AMD, AT&T Wireless, Broadcom, Compaq, Conexant, Hewlett-Packard, IBM, Intel, and Lucent Technologies) formed the Home Phoneline Networking Alliance (HomePNA) to promote telephone-line networking. The alliance chose HomeRun as the foundation for its first standard, HomePNA version 1.0.

In September, 2000, the alliance (which had grown to over 150 companies) kicked up the data rate to about 10Mbps with a new HomePNA version—Version 2.0.

On November 12, 2001, HomePNA released market (not technical) specifications for the next generation of telephone-line networking, HomePNA Version 3.0, with a target of 100Mbps speed. The new standard will be backward compatible with HomePNA 1.0 and 2.0 and won’t interfere with normal and advanced telephone services, including POTS, ISDN, and xDSL. A new feature, Voice-over-HomePNA, will allow you to send up to eight high-quality telephone conversations through a single pair of ordinary telephone wires within your home. According to the association, the HomePNA Version 3.0 specification is scheduled for release by the end of 2002; it is not yet available as this is written.

Version 1.0

Version 1.0 is a fairly straightforward adaptation of standard Ethernet, with only the data rate slowed to accommodate the vagaries of the unpredictable telephone wiring environment. The system does not adapt in any way to the telephone line. It provides its standard signal and that’s that. Low speed is the only assurance of signal integrity. When a packet doesn’t get through, the sending device simply sends it again. The whole system is therefore simple, straightforward, and slow.

The design of Version 1.0 allows for modest-sized networks. Its addressing protocol allows for a maximum of 25 nodes networked together. The signaling system has a range of about 150 meters (almost 500 feet), although its range will vary with the actual installation, because some telephone systems are more complex (and less forgiving) than others.

Version 2.0

Although HomePNA Version 1.0 is capable of most routine business chores, such as sharing a printer or DSL connection, it lacks the performance required for the audio and video applications that are becoming popular in home networking systems. Version 2.0 combines several technologies to achieve the necessary speed. It has two designated signaling rates: 2 million baud and 4 million baud (that’s 2MHz and 4MHz, respectively). The signal uses quadrature amplitude modulation (the same technology used by 28.8Kbps modems), but it can use several coding schemes, with constellations ranging from 4 to 256 points (2 to 8 bits per baud). As a result, the standard allows for actual data rates from 4Mbps (2 Mbaud carrier with 4 QAM modulation) to 32Mbps (4 Mbaud at 256 QAM modulation). The devices negotiate the actual speed.
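The relationship between baud rate, constellation size, and data rate is a one-line formula: each symbol carries log2(M) bits for an M-point constellation. The sketch below tabulates it for both signaling rates. The intermediate constellation sizes (16 and 64) are sample points within the 4-to-256 range, not a list of the sizes the standard actually permits.

```python
import math


def homepna2_rate(baud: float, qam_points: int) -> float:
    """Raw data rate = symbols per second x bits per symbol."""
    return baud * math.log2(qam_points)


for baud in (2e6, 4e6):
    for points in (4, 16, 64, 256):   # sample sizes within the 4-to-256 range
        rate = homepna2_rate(baud, points)
        print(f"{baud / 1e6:.0f} Mbaud, {points:3d}-QAM: {rate / 1e6:.0f} Mbps")
```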

Compared to ordinary 10Base-T Ethernet systems, HomePNA Version 2.0 suffers severely from transmission overhead, which cuts its top speed well below its potential. To ensure reliability, HomePNA uses its highest speeds only for the data part of its packets (if at all). The system sends the header and trailer of each data frame, 84 bytes in all, at the lowest rate (4Mbps) to ensure that control information is error free. As a consequence, in every frame, overhead eats almost one-third of the potential bandwidth at the 32Mbps data rate, about one-fifth at the 16Mbps data rate (leaving roughly 80 percent usable), and correspondingly less at lower rates.
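These figures can be checked by computing the share of each frame's airtime spent on the 84 bytes of header and trailer sent at 4Mbps. The sketch assumes a full 1500-byte Ethernet payload (an assumption; shorter packets suffer proportionally more overhead) and ignores gaps between frames.

```python
HEADER_TRAILER_BITS = 84 * 8   # always sent at the base rate
BASE_RATE = 4e6                # bits per second for header and trailer
PAYLOAD_BITS = 1500 * 8        # assumed full-size Ethernet payload


def overhead_fraction(payload_rate: float, payload_bits: int = PAYLOAD_BITS) -> float:
    """Share of each frame's airtime spent on the slow header and trailer."""
    header_time = HEADER_TRAILER_BITS / BASE_RATE
    payload_time = payload_bits / payload_rate
    return header_time / (header_time + payload_time)


for rate in (32e6, 16e6, 8e6, 4e6):
    print(f"{rate / 1e6:.0f} Mbps payload: {overhead_fraction(rate):.0%} overhead")
```

Because the header time is fixed, the faster the payload moves, the larger the slow header's share of each frame becomes.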

Equipment

HomePNA is a peer-to-peer networking system. It does not use a special hub or server. Each computer on the network plugs into a HomePNA adapter (usually through a USB port), which then plugs into a telephone wall jack. If you do not have a wall jack near your computer, you can use extension telephone cables to lengthen the reach of the adapter. No other hardware is required. Once all your computers are plugged in, you set up the network software as you would for any wired network.

Sanctioning Organization

The HomePNA specification is maintained by the Home Phoneline Networking Alliance. The group maintains a Web site at www.homepna.org.

HomePlug

The underlying assumption of the HomePlug networking system is that if you want to network computers in your home, you likely already have power wiring installed. If not, you likely don’t need a network because you don’t have any electricity to run your computers. Those big, fat, juicy electrical wires whisking dozens of amps of house current throughout your house can easily carry an extra milliwatt or two of network signal. Because the power lines inside your walls run to every room of your house, you should be able to plug your network adapter into an outlet and transport the signals everywhere you need them.

You’ll want to be careful if you try such a thing. Unlike computer signals, house current can kill you—and the circuitry of your network adapter. House current is entirely different stuff from network signals. That’s bad if you stick your fingers into a light socket, but it’s good if you want to piggyback network signals on power lines. The big difference between the two means that it’s relatively easy for a special adapter (not an ordinary network adapter!) to separate the power from the data.

On the other hand, although those heavy copper wires are adept at conducting high-current power throughout your home, they are a morass for multimegahertz data signals. At the frequencies needed for practical network operation, home wiring is literally a maze, with multiple paths, most of which lead into dead-ends. Some frequencies can squeak through the maze unscathed, whereas others disappear entirely, never to be seen again. Motors, fluorescent lights, even fish-tank pumps add noise and interference to your power lines, not to mention stuff that sneaks in from the outside, such as bursts of lightning. Radio frequency signals like those a network uses bounce through this booby-trapped electrical maze like a hand grenade caught in a pinball machine.

Transmission Method

The HomePlug solution is to try, try again. Rather than using a single technology to bust through the maze, HomePlug uses several. It is adaptive. It tests the wiring between network nodes and checks what sort of signals will work best to transport data between them. It then switches to those signals to make the transfer. Just in case, everything that HomePlug carries is doused with a good measure of error correction. The result is a single networking standard built using several technologies that seamlessly and invisibly mate, so you can treat your home wiring as a robust data communications channel.

The basic transmission technique in the HomePlug system is called orthogonal frequency division multiplexing (OFDM), similar to that used in some telephone Digital Subscriber Lines. The OFDM system splits the incoming data stream into several with lower bit rates. Each stream then gets its own carrier to modulate, with the set of carriers carefully chosen so that the spacing between adjacent carriers equals the symbol rate on each carrier (that is, the inverse of the symbol duration). This is the principle of orthogonality. Combining the modulated carriers of different frequencies together results in the frequency division multiplexing. Because the carriers are orthogonal, the receiver can mathematically isolate the data on each one from all the others, even though their spectra overlap.
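A toy version shows orthogonality at work. An inverse discrete Fourier transform sums one modulated value per carrier into a block of time samples, and a forward transform pulls each carrier's value back out with the others cancelling exactly. The eight carriers and the plain QPSK mapping are illustrative choices, not HomePlug parameters, and real receivers use the fast Fourier transform rather than the direct sums shown here.

```python
import cmath

# Toy QPSK constellation: two bits per carrier per OFDM symbol.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def idft(freq):
    """Combine one complex value per carrier into time-domain samples."""
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(samples):
    """Recover each carrier's value; orthogonality cancels the other carriers."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


bits = [0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1]   # 16 bits -> 8 carriers
symbols = [QPSK[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]
on_the_line = idft(symbols)     # one OFDM symbol: the sum of 8 modulated carriers
recovered = dft(on_the_line)
print(all(abs(a - b) < 1e-9 for a, b in zip(symbols, recovered)))   # True
```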

Each isolated carrier can use a separate modulation scheme. Under HomePlug, differential quaternary phase shift keying (DQPSK) is the preferred method. It differs from conventional QPSK in that the change in the phase of the carrier, not the absolute phase of the carrier, encodes the data. Alternatively, the system can shift to differential binary phase shift keying (DBPSK) for lower speed but greater reliability when the connection is poor. DQPSK shifts between four phases, whereas DBPSK shifts between two. The data streams use a convolutional code as forward error correction. That is, they add extra bits to the digital code, which help the receiver sort out errors.
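The differential idea fits in a few lines. Phases are counted in quarter turns (0 through 3 standing for 0, 90, 180, and 270 degrees), and each pair of bits selects how far the phase moves from the previous symbol. The Gray-coded step mapping and the function names are illustrative assumptions, not taken from the HomePlug specification.

```python
# Phase change, in quarter turns, for each 2-bit group (illustrative Gray mapping).
DIBIT_TO_STEP = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
STEP_TO_DIBIT = {step: dibit for dibit, step in DIBIT_TO_STEP.items()}


def dqpsk_encode(bits, start_phase=0):
    """Return carrier phases (in quarter turns); the data rides on the changes."""
    if len(bits) % 2:
        raise ValueError("DQPSK carries bits in pairs")
    phases = [start_phase]   # reference symbol
    for i in range(0, len(bits), 2):
        step = DIBIT_TO_STEP[(bits[i], bits[i + 1])]
        phases.append((phases[-1] + step) % 4)
    return phases


def dqpsk_decode(phases):
    """Read the data from the difference between consecutive phases."""
    bits = []
    for prev, cur in zip(phases, phases[1:]):
        bits.extend(STEP_TO_DIBIT[(cur - prev) % 4])
    return bits


data = [1, 0, 0, 1, 1, 1, 0, 0]
sent = dqpsk_encode(data)
rotated = [(p + 1) % 4 for p in sent]   # the wiring shifts every phase by 90 degrees
print(dqpsk_decode(sent) == data, dqpsk_decode(rotated) == data)   # True True
```

Because only differences matter, a constant phase shift introduced by the wiring does not disturb decoding. DBPSK works the same way with two phases (half turns) and one bit per symbol.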

In the ideal case, with all carriers operating at top speed, the HomePlug system delivers a raw data rate of about 20Mbps. Once all the overhead from data preparation and error correction gets stripped out, it yields a clear communication channel for carrying data packets with an effective bit-rate of about 14Mbps.

If two devices cannot communicate with the full potential bandwidth of the HomePlug system, they can negotiate slower transmissions. To best match line conditions, they can selectively drop out error-plagued carrier frequencies, shift from DQPSK to more reliable DBPSK modulation, or increase the error correction applied to the data.

The HomePlug system uses a special robust mode for setting up communication between devices. Robust mode activates all channels but uses DBPSK modulation and heavy error correction. Using all channels ensures that all devices can hear the signals, and the error correction ensures messages get through, if slowly. Once devices have negotiated the connection starting with robust mode, they can shift to higher-speed transmissions.
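The per-carrier negotiation described above can be pictured as a lookup from each carrier's measured signal-to-noise ratio to a modulation choice: DQPSK on clean carriers, DBPSK on marginal ones, and nothing on carriers too noisy to use. The decibel thresholds and function names below are hypothetical, chosen only to show the shape of the decision; the HomePlug specification's actual procedure is not reproduced here.

```python
# Hypothetical SNR thresholds (dB) for each modulation choice.
DQPSK_MIN_SNR = 12.0
DBPSK_MIN_SNR = 6.0
BITS = {"DQPSK": 2, "DBPSK": 1, None: 0}   # raw bits per carrier per symbol


def choose_tone_map(snr_db_per_carrier):
    """Pick a modulation per carrier: DQPSK, DBPSK, or None (carrier dropped)."""
    tone_map = []
    for snr in snr_db_per_carrier:
        if snr >= DQPSK_MIN_SNR:
            tone_map.append("DQPSK")   # faster: 2 bits per symbol
        elif snr >= DBPSK_MIN_SNR:
            tone_map.append("DBPSK")   # slower but more robust: 1 bit per symbol
        else:
            tone_map.append(None)      # too noisy: leave this carrier unused
    return tone_map


def robust_mode(n_carriers):
    """Setup mode: every carrier on, DBPSK only (heavy error correction not modeled)."""
    return ["DBPSK"] * n_carriers


measured = [18.5, 14.0, 9.2, 3.1, 15.7, 7.4]   # e.g. gathered during robust-mode setup
tone_map = choose_tone_map(measured)
print(tone_map)
print(sum(BITS[m] for m in tone_map), "raw bits per OFDM symbol before coding")
```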

The HomePlug system selects its carriers in the range between frequencies of 4.5 and 21MHz, operating with reduced power in the bands in which it might cause interference with amateur radio transmissions. In addition, HomePlug transmits in bursts rather than maintaining a constant signal on the line.

Protocol

The HomePlug system moves packets between nodes much as Ethernet does, but with a Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA) protocol rather than Ethernet’s collision detection. That is, each device listens to the power line and starts to transmit only when it does not hear another device transmitting. To make it less likely that two devices start at the same instant, each waits a random interval after the line falls quiet before transmitting; if a collision happens anyway, each waits another random time before trying again. HomePlug modifies the Ethernet strategy to add priority classes, greater fairness, and faster access.

All packets sent from one device to another must be acknowledged, thus ensuring they have been properly received. If a packet is not acknowledged, the transmitting device acts as if its packet had collided with another, waiting a random period before retransmitting. An acknowledgement sent back also signals to other devices that the transmission is complete and that they can attempt to send their messages.
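A generic sketch of this acknowledge-or-back-off loop follows. Each waiting station picks a random slot within its contention window; the earliest picker sends, and a missing acknowledgment is treated as a collision that doubles the loser's window. The window sizes, the doubling rule, and the function names are hypothetical, and HomePlug's actual backoff rules and priority resolution are more elaborate.

```python
import random

CW_MIN, CW_MAX = 8, 64   # hypothetical contention-window sizes, in slots


def contention_window(failures: int) -> int:
    """Window doubles after each unacknowledged attempt, up to CW_MAX."""
    return min(CW_MIN * 2 ** failures, CW_MAX)


def run_contention(n_stations: int, seed: int = 1) -> int:
    """Simulate until every station has one frame acknowledged; return rounds used."""
    rng = random.Random(seed)
    failures = {s: 0 for s in range(n_stations)}   # stations still waiting
    rounds = 0
    while failures:
        rounds += 1
        # Each waiting station picks a random backoff slot; the lowest slot sends first.
        picks = {s: rng.randrange(contention_window(f)) for s, f in failures.items()}
        first = min(picks.values())
        senders = [s for s, slot in picks.items() if slot == first]
        if len(senders) == 1:
            del failures[senders[0]]      # ACK received: frame delivered
        else:
            for s in senders:             # no ACK: treat as a collision
                failures[s] += 1
    return rounds


print(run_contention(5))
```

Stations that lost out by picking a later slot keep their window unchanged, so only the devices actually involved in a collision back off further.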

According to the HomePlug alliance, the protocol used by the system results in little enough delay that the system can handle time-critical applications such as streaming media and Voice-over-Internet telephone conversations.

To prevent your neighbors from listening in on your network transmissions or from sneaking into your network and stealing Internet service, the HomePlug system uses a 56-bit encryption algorithm. The code is applied to packets at the Protocol layer. All devices in a given network—that is, the HomePlug adapters for all of your computers—use the same key, so all can communicate. Intruders won’t know the key and won’t be recognized by the network.

With HomePlug, such intrusions are more than theoretical. Anyone sharing the same utility transformer with you (typically about six households tap into the same utility transformer) will share signals on the power line. Your HomePlug networking signals will consequently course through part of your neighborhood. That’s bad if you want to keep secrets, but it could allow you and a neighbor to share a single high-speed Internet connection (if your Internet Service Provider allows you to).

Equipment

HomePlug is a peer-to-peer networking system. It does not use a special hub or server. Each computer on the network plugs into a HomePlug adapter (usually through a USB port), which then plugs into a wall outlet. No other hardware is required. Once all your computers are plugged in, you set up the network software as you would for any wired network.

Sanctioning Organization

The specifications for the HomePlug networking system are maintained by the HomePlug Powerline Alliance.

HomePlug Powerline Alliance, Inc.

2694 Bishop Drive, Suite 275

San Ramon, CA 94583

Phone: 925-275-6630

Fax: 925-275-6691

Hardware

To make a network operate under any standard requires actual hardware: pieces of equipment that plug into each other to make the physical network. Most of today’s networking systems require three different kinds of hardware. Network interface cards link your computer to the network wiring system (or wireless signaling system). A hub or access point brings the signals from all the computers and other devices in the network together. And a medium (such as wires or radio waves) links these two together. You build a network from these three components, all made to the same network standard.

Network Interface Cards

The network interface card (NIC) and its associated driver software have the most challenging job in the network. The NIC takes the raw data from your computer, converts it into the proper format for the network you’re using, and then converts the electrical signals to a format compatible with the rest of the network. If it didn’t work properly, your data would be forever trapped in your computer.

Because it converts the stream of data between two different worlds (inside your computer and the outside world of the network), it needs to match two standards: a connection system used by your computer and the connection system used by the network.

Traditionally, a network adapter has plugged into the expansion bus of the host computer. No other connection could provide the speed required for network use. Desktop computers used internal NICs that slid into expansion slots. Portable computers used NICs in the form of PC Cards.

With the advent of USB, however, that situation has changed. Although expansion bus–based NICs remain important, many networks are shifting to NICs that plug into USB ports. The primary reason for this is that USB-based NICs are easier to install. Plug in one connector, and you’re finished. Although early USB-based NICs gave up speed for this convenience, a USB 2.0 port can handle the data rates required even by 100Base-T networking.

The simplified piggyback networking systems HomePlug and HomePNA rely on USB-based NICs for easy installation. In wireless networking, USB-based NICs often have greater range than their PC Card–based peers because they can operate at higher power and with more sophisticated antennae. Compared to installing a PC Card adapter in your desktop computer and then sliding a wireless NIC into the adapter, the USB-based NIC makes more sense, both for the added range as well as the greater convenience and lower cost.

The NIC you choose must match the standard and speed used by the rest of your network. The exception, of course, is that many NICs operate at more than one speed. For example, nearly all of today’s 100Base-T NICs slow down to accommodate 10Base-T networks. Dual-speed 802.11b+ wireless NICs will accommodate either 11 or 22Mbps operation.

Most dual-speed NICs are autosensing. That is, they detect the speed on the network wire to which they are connected and adjust their own operating speed to match. Autosensing makes a NIC easier to set up, particularly if you don’t know (or care about) the speed at which your network operates. You can just plug in the network wire and let your hardware worry about the details.
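On twisted-pair Ethernet, autosensing is usually handled by autonegotiation: each end advertises the modes it supports, and both settle on the best mode they share. The sketch below is a deliberately simplified, hypothetical model of that choice; the priority list is an assumption that ignores duplex settings and the other details real hardware negotiates.

```python
from __future__ import annotations

# Hypothetical, simplified priority list, from most to least preferred.
# Real autonegotiation also weighs duplex and other modes, omitted here.
PRIORITY = ["1000Base-T", "100Base-T", "10Base-T"]


def pick_common_speed(local: set[str], remote: set[str]) -> str | None:
    """Return the most preferred mode both ends support, or None if none."""
    for mode in PRIORITY:
        if mode in local and mode in remote:
            return mode
    return None


nic = {"100Base-T", "10Base-T"}        # a dual-speed NIC
old_hub = {"10Base-T"}                 # a 10-megabit-only hub
print(pick_common_speed(nic, old_hub))  # 10Base-T
```

Plugged into a dual-speed hub instead, the same NIC would settle on 100Base-T, which is the “let your hardware worry about the details” behavior described above.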

Some network adapters accept optional boot ROMs, which let a computer boot up from a remote disk drive. However, this feature is more applicable to larger businesses with dedicated network servers than to a home or small business network.

Hubs and Access Points

A network hub passes signals from one computer to the next. A network access point is the radio base station for a wireless network. Piggyback networks such as HomePlug and HomePNA do not use dedicated hubs, although one of the computers connected to the network acts like a hub.

Hubs

The most basic hub for a wired network has two functions. It provides a place to plug in the network wire from each computer, and it regenerates the signals to guard against errors.

The design of Ethernet requires all the signals in the network loop to be shared. Every computer in the loop—that is, every computer connected to a single hub—sees exactly the same signals. The easiest way to do this would be to short all the wires together. Electrically, such a connection would be an anathema.

The circuitry of the hub prevents such disasters. It combines the signals arriving at all of its ports and sends the result back out through every port, so each computer sees the same signals without the wires ever being shorted together.
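The shared behavior of a hub can be modeled in a few lines. This is a hypothetical toy, not real hub circuitry: time is divided into slots, a frame arriving on one port is repeated to all the others, and two ports transmitting in the same slot produce a garbled mixture (a collision) that nobody can use.

```python
from __future__ import annotations


class Hub:
    """Toy model of a repeater hub (hypothetical; not real hub circuitry).

    A frame arriving on one port is repeated out of every other port, so all
    attached computers see the same traffic. If two ports transmit in the
    same time slot, the signals mix and nobody receives a usable frame."""

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports

    def tick(self, arrivals: dict[int, str]) -> dict[int, str]:
        """Map {port: frame} arriving in one time slot to {port: what it hears}."""
        if not arrivals:
            return {}
        if len(arrivals) > 1:
            # Shared medium: simultaneous transmissions garble each other.
            return {port: "COLLISION" for port in range(self.num_ports)}
        src, frame = next(iter(arrivals.items()))
        # Repeat the frame to everyone except the sender.
        return {port: frame for port in range(self.num_ports) if port != src}


hub = Hub(4)
print(hub.tick({0: "hello"}))      # {1: 'hello', 2: 'hello', 3: 'hello'}
print(hub.tick({0: "a", 2: "b"}))  # every port hears 'COLLISION'
```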

To make the cabling for the system easy, the jacks on a hub are wired the opposite of the jacks on NICs. That is, the send and receive connections are reversed: the connections the NIC uses for sending, the hub uses for receiving, and vice versa.
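The reversal can be checked pin by pin. This sketch uses the 10Base-T and 100Base-TX pin assignments for an 8-pin modular (RJ-45) jack, where a NIC transmits on pins 1 and 2 and receives on pins 3 and 6; the table and function names are illustrative only.

```python
from __future__ import annotations

# Pin roles on an 8-pin modular (RJ-45) jack for 10Base-T and 100Base-TX.
# A NIC port transmits on pins 1 and 2 and receives on pins 3 and 6;
# a hub port has those roles reversed.
NIC_PORT = {1: "TX+", 2: "TX-", 3: "RX+", 6: "RX-"}
HUB_PORT = {1: "RX+", 2: "RX-", 3: "TX+", 6: "TX-"}

# Cable wiring: which pin at the far end each near-end pin connects to.
STRAIGHT_THROUGH = {1: 1, 2: 2, 3: 3, 6: 6}
CROSSOVER = {1: 3, 2: 6, 3: 1, 6: 2}

# A transmit pin must land on the corresponding receive pin, and vice versa.
MATE = {"TX+": "RX+", "TX-": "RX-", "RX+": "TX+", "RX-": "TX-"}


def link_works(near: dict[int, str], far: dict[int, str],
               cable: dict[int, int]) -> bool:
    """True if every signal pin on the near jack meets its mate on the far jack."""
    return all(far[cable[pin]] == MATE[role] for pin, role in near.items())


print(link_works(NIC_PORT, HUB_PORT, STRAIGHT_THROUGH))  # True
print(link_works(NIC_PORT, NIC_PORT, STRAIGHT_THROUGH))  # False
print(link_works(NIC_PORT, NIC_PORT, CROSSOVER))         # True
```

The same arithmetic explains why linking two hubs (or two NICs directly) needs a crossover connection: like jacks wired to like jacks would connect transmitter to transmitter.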

Inexpensive hubs are distinguished primarily by the number and nature of the ports they offer. You need one port on your hub for each computer or other device (such as a network printer or DSL router) in your network. You may want to have a few extra ports to allow for growth, but more ports cost more, too. Some hubs include a crossover jack or coaxial connection that serves as an uplink to tie additional hubs into your network.

Expensive hubs differ from economy models chiefly in their management capabilities, such as remote monitoring and reconfiguration, which are mostly irrelevant to a small network.

As with NICs, hubs can operate at multiple speeds. Although some require each network connection to be individually configured for a single speed (some are even physically set to only one speed), most modern dual-speed hubs are autosensing, much like NICs. Coupling a low-cost autosensing NIC with a low-cost autosensing hub can sometimes be problematic with marginal wiring. The hub may settle on the highest speed the connection appears to support, even though many packets fail to make it across the wiring intact at that speed. Every damaged packet must be sent again, so in such instances performance can fall below that of a low-speed-only network.
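A crude model shows how a faster link can deliver less. This sketch assumes each packet is lost independently and simply resent, so only the surviving fraction counts as useful throughput. It is optimistic: higher-level protocols such as TCP also pause and back off after losses, so in practice far milder error rates than the one shown can drag a fast link below a clean slow one.

```python
def effective_rate(line_rate_mbps: float, frame_loss: float) -> float:
    """Crude goodput estimate: only frames that arrive intact count.

    Assumes each frame is lost independently with probability `frame_loss`
    and lost frames are resent, ignoring timeouts and back-off."""
    if not 0.0 <= frame_loss < 1.0:
        raise ValueError("frame_loss must be in [0, 1)")
    return line_rate_mbps * (1.0 - frame_loss)


print(round(effective_rate(100, 0.95), 1))  # 5.0, below a clean 10Base-T link
print(effective_rate(10, 0.0))              # 10.0
```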

Some hubs are called switches; others have internal switches. A switch divides a network into segments and shifts data between them. Most dual-speed hubs actually act as switches when passing packets between 10Base-T and 100Base-T devices.
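How a switch decides where to send data can be sketched with the usual learning-bridge technique: it remembers which port each sender was last seen on, sends frames for a known destination out of that port alone, and floods frames for an unknown destination as a hub would. This is a hypothetical toy with made-up station names, not real switch firmware.

```python
from __future__ import annotations


class LearningSwitch:
    """Toy model of a learning switch (hypothetical; not real firmware)."""

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        self.table: dict[str, int] = {}  # station address -> port

    def forward(self, in_port: int, src: str, dst: str) -> list[int]:
        """Return the ports a frame from `src` to `dst` is sent out of."""
        self.table[src] = in_port  # learn where the sender lives
        out = self.table.get(dst)
        if out is None:
            # Unknown destination: flood like a hub, except the arrival port.
            return [p for p in range(self.num_ports) if p != in_port]
        if out == in_port:
            return []  # destination is on the same segment as the sender
        return [out]


sw = LearningSwitch(4)
print(sw.forward(0, "A", "B"))  # [1, 2, 3]  (B unknown: flood)
print(sw.forward(1, "B", "A"))  # [0]        (A learned on port 0)
print(sw.forward(0, "A", "B"))  # [1]        (B learned on port 1)
```

Once the table fills in, traffic between two computers stays off the other ports, which is how a switch divides a network into segments.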

When used on a single-speed network, a switch can provide faster connections because the full bandwidth of the network gets switched to service each NIC. When several NICs contend for time on the network, however, the switch must arbitrate between them, and in the end, they share time as they would on a hub-based network.

Switches usually have internal buffers to make it easier to convert between speeds and arbitrate between NICs. In general, the larger the buffer the better, because a large buffer helps the switch operate at a higher speed more of the time.
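A little arithmetic shows how much buffering a speed conversion can demand. This sketch uses a simple fluid approximation: while a burst pours in from a fast port, data drains to the slow port at its lower rate, so the backlog grows by the difference. The burst size below is an arbitrary example.

```python
def peak_buffer_bytes(burst_bytes: int, in_mbps: float, out_mbps: float) -> float:
    """Peak backlog when a burst arrives on a fast port bound for a slower one.

    The burst lasts burst_bytes / in_mbps; during it the backlog grows at
    (in_mbps - out_mbps), giving burst_bytes * (in - out) / in."""
    if out_mbps >= in_mbps:
        return 0.0  # the output keeps up, so nothing piles up
    return burst_bytes * (in_mbps - out_mbps) / in_mbps


# A 1MB burst from a 100Base-T station to a 10Base-T station:
print(peak_buffer_bytes(1_000_000, 100, 10))  # 900000.0
```

If the buffer is smaller than this peak, the switch must drop or hold off traffic, which is why a larger buffer lets it run at full speed more of the time.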

Access Points

Access points earn their name because they provide access to the airwaves. Although in theory you could have a wireless-only hub to link together a small network of computers, such a network would shortchange you. You could not access the Internet or any devices that have built-in wired-networking adapters, such as network printers. In other words, most wireless networks also require a wired connection. The access point provides access to the airwaves for those wired connections.

The centerpiece of the access point is a combination of radio transmitter and receiver (which engineers often call simply the radio). Any adjustment you might possibly make to the radio is hidden from you, so you don’t have to be a radio engineer to use one. The access point’s radio is both functionally and physically a sealed box, permanently set to the frequencies specified by the networking standard the access point supports. Even the power at which the access point operates is fixed. All access points use about the same power because government regulations set the maximum limit.

Access points differ in several ways, however. Because every access point has a wired connection, they differ just as wired networks do: they may support different speed standards. In fact, most access points have multiple wired connections, so they are effectively wired hubs, too. The same considerations that apply to wired hubs therefore apply to the wired circuitry of access points.

Access points also differ in the interface between their radios and the airwaves, namely their antennae. Lower-cost access points have a single, permanently attached antenna. More expensive access points may have two or (rarely) more antennae. By using diversity techniques, multiple-antenna access points can select which of the antennae has the better signal and use it for individual transmissions. Consequently, dual-antenna access points often give better coverage.
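The simplest diversity technique, picking whichever antenna currently hears the stronger signal, fits in a single function. This is a hypothetical sketch: the antenna names and signal readings are made up, and real access points make this choice in hardware, often per packet.

```python
from __future__ import annotations


def pick_antenna(rssi_dbm: dict[str, float]) -> str:
    """Return the antenna reporting the strongest received signal.

    Signal strengths are in dBm, so a value closer to zero (e.g. -58)
    is stronger than a more negative one (e.g. -71)."""
    return max(rssi_dbm, key=lambda name: rssi_dbm[name])


# Hypothetical readings for one incoming transmission:
print(pick_antenna({"left": -71.0, "right": -58.0}))  # right
```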

Some access points make their antennae removable. You can replace a removable antenna with a different one. Some manufacturers offer directional antennae, which increase the range of an access point in one direction by sacrificing the range in all other directions. In other words, they concentrate the radio energy in one direction. Directional antennae are particularly useful in making an access point into a radio link between two nearby buildings.
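How much extra reach a directional antenna buys can be estimated from the free-space rule that path loss grows as 20 log10 of distance. Under that idealized assumption, which ignores walls, obstacles, and any regulatory limits on radiated power, each added decibel of antenna gain in the favored direction stretches range by a factor of 10 raised to (gain / 20).

```python
def range_multiplier(extra_gain_db: float) -> float:
    """Free-space range factor from adding antenna gain at one end of a link.

    Path loss in free space grows as 20 * log10(distance), so extra gain of
    `extra_gain_db` can be traded for distance by 10 ** (extra_gain_db / 20)."""
    return 10 ** (extra_gain_db / 20)


print(round(range_multiplier(6), 2))   # 2.0  (6dB roughly doubles range)
print(round(range_multiplier(12), 2))  # 3.98
```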

Routers

When you want to link more than one network together—for example, you want to have both wired and wireless networks in your office—the device that provides the necessary bridge is called a router because it gives the network signals a route from one system to another. When you want to link a home network to the Internet through a DSL line, you need a router. Routers are more complex than hubs because they must match not only the physical medium but also the protocols used across the different media.
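The basic forwarding decision of a home router connected to DSL can be sketched with Python’s standard `ipaddress` module. The routing table below is hypothetical, and the sketch applies longest-prefix matching, the rule IP routers generally use: the most specific route containing the destination wins, so local addresses stay on the LAN and everything else falls through to the default route.

```python
import ipaddress

# Hypothetical routing table for a home router.
ROUTES = [
    (ipaddress.ip_network("192.168.1.0/24"), "LAN port"),
    (ipaddress.ip_network("0.0.0.0/0"), "DSL line (default route)"),
]


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route containing the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in ROUTES if addr in net]
    # The default route matches every IPv4 address, so matches is never empty.
    net, iface = max(matches, key=lambda m: m[0].prefixlen)
    return iface


print(next_hop("192.168.1.20"))  # LAN port
print(next_hop("203.0.113.5"))   # DSL line (default route)
```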

Sometimes what you buy as a hub actually functions as a router. Most wireless access points include 10Base-T or 100Base-T connections to link with a wired network. Many DSL adapters are sold as hubs and have multiple network connections (or even a wireless access point), even though they are, at heart, routers.

Cabling

Network wires must carry what are essentially radio signals between NICs and hubs, and they have to do this without letting the signals actually become radio waves—broadcasting as interference—or finding and mixing with companion radio waves, thus creating error-causing noise on the network line. Two strategies are commonly used to combat noise and interference in network wiring: shielding the signals with coaxial cable and preventing the problem with balanced signaling on twisted-pair wiring.

Coaxial Cables

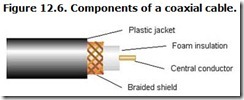

Coaxial cables get their name because they all have a central conductor surrounded by one or more shields that may be a continuous braid or metalized plastic film. Each shield amounts to a long, thin tube, and each shares the same longitudinal axis: the central conductor. The surrounding shield typically operates at ground potential, which keeps stray signals from leaking out of the central conductor and prevents noise from seeping in. Figure 12.6 shows the construction of a simple coaxial cable.

Coaxial cables are designed as transmission lines: signals propagate down the wire and are completely absorbed by matched circuitry (a termination) at the other end, so they do not reflect back along the cable. This allows coaxial cables to carry signals for long distances without degradation. For this reason, they are often used in networks for connecting hubs, which may be widely separated.
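Whether the far end absorbs a signal or echoes it back is captured by the reflection coefficient, (Z_load - Z_line) / (Z_load + Z_line), where the Z values are the impedances of the termination and the cable. The sketch assumes 50-ohm cable, the impedance used by thin (10Base2) Ethernet coax; a matched 50-ohm terminator reflects nothing, while a shorted end reflects everything.

```python
def reflection_coefficient(load_ohms: float, line_ohms: float) -> float:
    """Fraction of a signal's voltage reflected at the end of a line.

    0 means the termination absorbs the signal completely; -1 (short) or
    values near +1 (open end) mean nearly all of it bounces back."""
    return (load_ohms - line_ohms) / (load_ohms + line_ohms)


print(reflection_coefficient(50, 50))   # 0.0  (matched terminator, no echo)
print(reflection_coefficient(0, 50))    # -1.0 (shorted end, full reflection)
print(reflection_coefficient(150, 50))  # 0.5  (mismatch, half the voltage returns)
```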

Twisted-Pair Wires

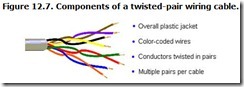

The primary alternative is twisted-pair wiring, which earns its name from being made of two identical insulated conducting wires that are twisted around one another in a loose double-helix. The most common form of twisted-pair wiring lacks the shield of coaxial cable and is often denoted by the acronym UTP, which stands for unshielded twisted pair. Figure 12.7 shows a simplified twisted-pair cable.

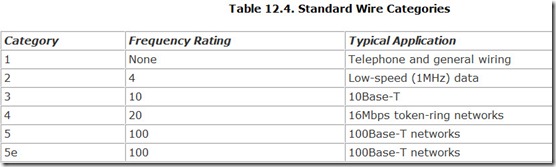

Manufacturers rate their twisted-pair cables by category, which defines the speed of the network they are capable of carrying. Current standards define six categories: 1 through 5 and 5e (which stands for 5 enhanced). Two higher levels, 6 and 7, exist on the periphery (proposed but not yet official standards). As yet, they are unnecessary because Gigabit Ethernet operates with Category 5 wiring. Note that, strictly speaking, until the standards for Levels 6 and 7 are approved, wires of these quality levels are not termed Category 6 and Category 7, because those categories are not yet defined. Instead, they are termed Level 6 and Level 7.
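Choosing cable comes down to checking the category against the network standard. The minimums in this sketch are commonly cited values (Category 3 for 10Base-T, Category 5 for 100Base-TX, the usual form of 100Base-T), plus the chapter’s note that Gigabit Ethernet runs on Category 5; treat the table as illustrative rather than a substitute for the governing standard.

```python
# Minimum cable category per twisted-pair Ethernet standard (illustrative).
MIN_CATEGORY = {
    "10Base-T": "5" if False else "3",
    "100Base-TX": "5",
    "1000Base-T": "5",
}

# Defined categories, in increasing order of capability.
CATEGORY_ORDER = ["1", "2", "3", "4", "5", "5e"]


def cable_ok(standard: str, category: str) -> bool:
    """True if cable of `category` meets the minimum for `standard`."""
    needed = MIN_CATEGORY[standard]
    return CATEGORY_ORDER.index(category) >= CATEGORY_ORDER.index(needed)


print(cable_ok("100Base-TX", "5e"))  # True
print(cable_ok("100Base-TX", "3"))   # False
```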

Those in the know don’t bother with the full word Category. They abbreviate it Cat, so look for Cat 5 or Cat 5e wire when you shop. Table 12.4 lists the standards and applications of wiring categories.

Most UTP wiring is installed in the form of multipair cables with up to several hundred pairs inside a single plastic sheath. The most common varieties have 4 to 25 twisted pairs in a single cable. Standard Cat 5 wire for networking has four pairs, two of which are used by most 10Base-T and 100Base-T systems.

The pairs inside the cable are distinguished from one another by color codes. The body of each wire is one color, broken by a thinner band of another color. In the two wires of a given pair, the background and band colors are swapped; that is, one wire will have a white background with a blue band, and its mate will have a blue background with a white band. Each pair has a different color code.
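In multipair telephone-style cable, the colors follow the standard 25-pair scheme: five primary colors (white, red, black, yellow, violet) combined with five secondary colors (blue, orange, green, brown, slate). The sketch below generates it; the first four pairs, blue, orange, green, and brown, are the ones found in four-pair Cat 5 network cable.

```python
from __future__ import annotations

PRIMARY = ["white", "red", "black", "yellow", "violet"]
SECONDARY = ["blue", "orange", "green", "brown", "slate"]


def pair_colors(pair: int) -> tuple[str, str]:
    """Return the two wire colors (background/band) for pair 1-25.

    One wire has the primary color as background with a secondary band;
    its mate swaps the two."""
    if not 1 <= pair <= 25:
        raise ValueError("pair must be between 1 and 25")
    primary = PRIMARY[(pair - 1) // 5]
    secondary = SECONDARY[(pair - 1) % 5]
    return (f"{primary}/{secondary}", f"{secondary}/{primary}")


for n in range(1, 5):
    print(n, pair_colors(n))
# 1 ('white/blue', 'blue/white')
# 2 ('white/orange', 'orange/white')
# 3 ('white/green', 'green/white')
# 4 ('white/brown', 'brown/white')
```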

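To minimize radiation and interference, most systems that are based on UTP use differential signals: the two wires of a pair carry equal but opposite versions of the signal, and the receiver responds only to the difference between them, so noise that the twisted wires pick up equally cancels out. For extra protection, some twisted-pair wiring is available with shielding. As with coaxial cable, the shielding prevents interference from getting to the signal conductors.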
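Differential signaling is easy to demonstrate numerically. This sketch drives each bit as equal and opposite voltages on a pair, then adds the same noise spike to both wires, the idealized case that tight twisting aims for. A receiver reading one wire alone is fooled, while one that subtracts the two wires recovers every bit. The voltages and noise values are arbitrary.

```python
from __future__ import annotations


def send_differential(bits: list[int], swing: float = 1.0) -> list[tuple[float, float]]:
    """Drive each bit as equal and opposite voltages on the two wires."""
    return [(swing, -swing) if b else (-swing, swing) for b in bits]


def add_common_mode_noise(pairs: list[tuple[float, float]],
                          noise: list[float]) -> list[tuple[float, float]]:
    """Interference that couples equally onto both wires of the pair."""
    return [(a + n, b + n) for (a, b), n in zip(pairs, noise)]


def receive_single_ended(pairs: list[tuple[float, float]]) -> list[int]:
    """Read only the first wire against ground: noise goes straight through."""
    return [1 if a > 0 else 0 for a, _ in pairs]


def receive_differential(pairs: list[tuple[float, float]]) -> list[int]:
    """Subtract the two wires: noise common to both cancels."""
    return [1 if a - b > 0 else 0 for a, b in pairs]


bits = [1, 0, 1, 1, 0]
noise = [3.0, -2.5, 4.0, -3.0, 2.0]  # spikes larger than the signal itself
line = add_common_mode_noise(send_differential(bits), noise)
print(receive_single_ended(line))  # [1, 0, 1, 0, 1]  (two errors)
print(receive_differential(line))  # [1, 0, 1, 1, 0]  (matches bits)
```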

The specifications for most UTP networking systems limit the separation between any NIC and hub to no more than 100 meters (about 328 feet). Longer runs require repeaters or some other cabling system.