TASK CONTROLS

Multitasking concepts

Any task, or process, running on a computer control system requires certain resources to be able to run. The simplest ones merely have a requirement for some time in the CPU and a volume of system memory, while more complex processes may need extra resources (a serial port, perhaps, or a certain file on a hard disk, or a tape driver). The basic concept of multitasking, often called multi-programming, is to allow the operating system to split the time over which each system resource is available into small chunks, so allowing each of the running processes in turn to access its desired resources. Different processes have different requirements, so the essence of a multitasking system is in the cleverness of the algorithm that allocates resources. As microprocessors have become more powerful, so it has become possible to implement increasingly powerful and complex resource allocation algorithms without compromising the speed of the computer itself.

Most mainstream computers probably have a CPU with a single-core microprocessor. However, it can still do more than one task at the same time with very little, if any, drop in speed seeming to be processing two sets of code at the same time. A typical single-core microprocessor cannot process more than one line of code at any given instant, so it is actually switching quickly from one task to the next, creating an illusion of simultaneous processing. On computers with more than one CPU (called multiprocessor machines), multitasking allows many more tasks to be run than there are CPUs by using two basic methods of cooperative multitasking and preemptive multitasking.

(1) Cooperative multitasking

When one task is already occupying the microprocessor, a wait line is formed for others that also need to use the CPU. Each application is programmed such that after a certain number of cycles, the program will step down and allow other tasks to take their processor time. However, this cooperation schema is rather outdated, and is hampered by limitations. Programmers were free to decide whether and when their application would surrender CPU time. In the perfect world, every program would respect the other programs running alongside it, but unfortunately, this is not the case. Even when program A could be using CPU cycles while program B and program C are waiting in line, there is no way to stop program A unless it voluntarily steps down.

(2) Preemptive multitasking

The inefficiency of cooperative multitasking left the computer industry scrambling for different ideas, resulting in a new standard called preemptive multitasking, in which the system has the power to halt or “preempt” one code from hogging CPU time. After forcing an interrupt, the operating system has control and can appropriately hand CPU cycle time to another task. Inconvenient interrupt timing is the greatest drawback of preemptive multitasking, but in the end, it is better that all programs see some CPU time rather than having a single program work very slightly faster. Preemptive and cooperative multitasking are, as mentioned, “illusion” multitasking. There are microprocessors that can physically address two streams of data simultaneously; dual or multiprocessor, dual or multicore, and simultaneous multithreading.

The main problem that multitasking introduces in a system is deadlock, which occurs when two or more processes are unable to continue running because each holds some resources that the other needs. Imagine you have processes A and B and resources X and Y. Each process requires both X and Y to run, but process A is holding onto resource X, and B is holding onto Y. Clearly, neither process can continue until it can access the missing resources so the system is said to be in deadlock.

Fortunately, there are a number of ways of avoiding this problem. Option one is to force each process to request all of the resources it is likely to need to run before it actually begins processing. If all resources are not available, it has to wait and try again later. This works but is inefficient (a process may be allocated a resource but not use it for many seconds or minutes, during which time the resource is unavailable). Alternatively, we could define an ordering for resources, such that processes are required to ask for them in the order stipulated. In our example above, we could say that resources must be requested in alphabetical order. So processes A and B would both have to ask for resource X before asking for Y; we could never reach the situation where process B is holding resource Y, because it would have had to be granted access to resource X first.

Task properties

When an operating system is running, each of its tasks is an executing object. To control them at run-time, an operating system is designed to specify every task by their respective properties at any run-time instance. These task properties are primarily task types, task stack and heap, task states, and task body.

(1) Task types

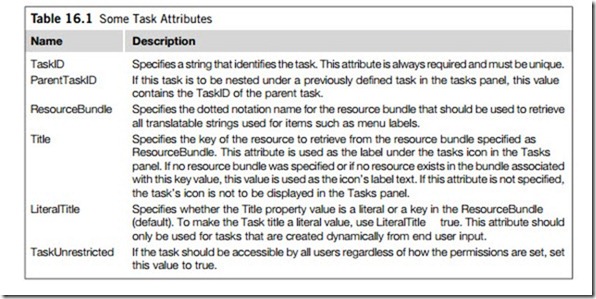

A task type is a limited type that mainly depends on the task attributes given in Table 16.1. Hence, neither assignment nor the predefined comparison for equality and inequality are defined for objects of task types; moreover, the mode out is not allowed for a formal parameter whose type is a task type.

A task object is an object whose type is a task type. The value of a task object designates a task that has the entries of the corresponding task type, whose execution is specified by the corresponding task

body. If a task object is the object, or a subcomponent of the object, declared by an object declaration, then the value of the task object is defined by the elaboration of the object declaration. If a task object is the object, or a subcomponent of the object, created by the evaluation of a scheduler, then the value of the task object is defined by the evaluation of the scheduler. For all parameter modes, if an actual parameter designates a task, the associated formal parameter designates the same task; the same holds for a subcomponent of an actual parameter and the corresponding subcomponent of the associated formal parameter; finally, the same holds for generic parameters.

(2) Task stack and heap

The process or task stack is typically an area of prereserved main storage (system memory) that is used for return addresses, procedure arguments, temporarily saved registers, and locally allocated variables. The microprocessor typically contains a register that points to the top of the stack, called the stack pointer. It is implicitly used by machine code instructions that call a procedure, return from a proce- dure, store or fetch a data item to/from the stack.

The process or task heap is also an area of prereserved main storage (system memory) that a program process can use to store data in some variable amount that is unknown until the program is running. For example, a program may accept different amounts of input from one or more users for processing, and then process all the input data at once. Having a certain amount of heap storage already obtained from the operating system makes it easier for the process to manage storage, and is generally faster than asking the operating system for storage every time it is needed. The process manages its allocated heap by requesting a chunk of it (called a heap block) when needed, returning the blocks when no longer needed, and doing occasional garbage collecting, which makes unused blocks available, and also reorganizes the available space in the heap so that it is not being wasted in small, unused pieces.

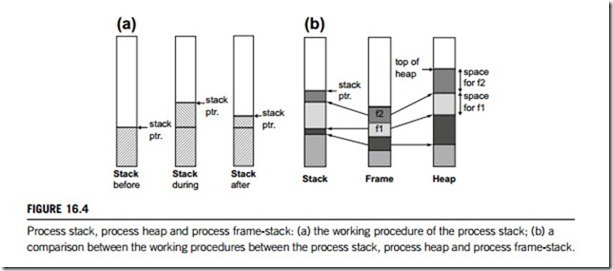

A stack and a heap are similar to each other, except that the blocks are taken out of storage in a certain order and returned in the same way. The following are some descriptions for the working procedure of the stack, heap, and frame-stack that are used in the execution of a process or task.

(a) Process stack

A stack holds the values used and computed during run time. When the machine calls a function, the parameters are pushed on the top of the stack though they are actually merged to form a single list datum. If the function requires any local variable, the machine allocates space for their values. When the function returns, the allocated local variables and the arguments stored on the stack are popped; and the value is returned by pushing it onto the stack. Figure 16.4(a) shows the working procedure of the process stack; at points before a function is called, during a function call, the arguments having been pushed onto the stack, and after the function has returned, the arguments and local variables having been popped, and the answer pushed onto the stack. Since the stack is a fixed-size memory block, a deep function call may cause a stack overflow error.

(b) Process heap

To reduce the frequency of copying the contents of such data structures as strings and lists, another data structure, called the heap, is used to hold the contents of temporary strings or lists during the string and list operations. The heap is a large memory block. Memory is allocated within this block. A pointer keeps track of the top of the heap, in a similar way to the stack pointer of the stack.

The process heap is not affected by the return of the function call. There are normally some “free- heap” instructions that are automatically inserted by the programmer at the points that it thinks safe to free memory. However, if a computation involves long strings or lists, or too deep a function is called, the machine may not have the chance to free the memory and thus causes a heap overflow error. The programmer may have to modify the program to minimize the load on the stack and the heap to avoid memory overflow errors; for instance, an iterative function has less demand in this regard than its recursive equivalent.

(c) Process frame-stack

When returning from a function call, the machine has to store the previous status, for example, stack pointer (pseudo machine) program counter, number of local variables (since local variables were put on the stack), and so on. A frame-stack is a stack that holds this information.

When a function is called, the current machine status is pushed on the frame-stack. For example, if function f1() calls function f2(), the status of stack, heap, and frame-stack is as shown in Figure 16.4 (b). The frame-stack keeps track of the stack and heap pointers. A free-heap instruction causes the top- of-heap to return to the bottom pointed to by the top-most frame-stack (e.g., f2()). On the other hand, the stack pointer is lowered only if the function returns.

A frame-stack is popped when a function returns and the machine status resumes its previous state. Since the frame-stack is implemented as a small stack, say about 64 entries, the number of levels of nested function calls is about 64 in this case. This maximum limit is enough for general use, but if a function is nested too deep, the machine will generate a “nested too deep” error.

(3) Task states

When an application program is executing, at any moment a task is characterized by its state. Nor- mally, the following five task states are defined for tasks in a RTOS:

(a) Running. In the running state, the CPU (or at least one if it is in multiprocessor chipset) is assigned to the task, so that its code instructions can be executed. Only one task can be in this state at any point in time, while all the other states can be adopted simultaneously by several tasks.

(b) Ready. All functional prerequisites for a transition into the running state exist, and the task only waits for allocation of the microprocessor. The scheduler decides which ready task is executed next.

(c) Waiting. A task cannot continue execution because it has to wait for at least one event. Only extended tasks can jump into this state (because they are the only ones that can use events).

(d) Suspended. In the suspended state the task is passive and can be activated.

(e) Terminated. In this task state, the task allocator deletes the corresponding task object and releases its resources.

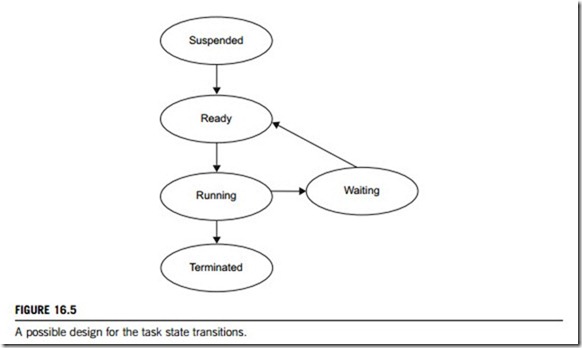

Figure 16.5 is a diagram of a possible design for the transitions between these five task states. Note that basic tasks (also called main or background tasks) have no waiting state: a basic task can only represent a synchronization point at its beginning and end. Application parts with internal synchronization points have to be implemented by more than one basic task. An advantage of extended tasks is that they can handle a coherent job in a single task, no matter which synchronization requests are active. Whenever current information for further processing is missing, the extended task switches over into the waiting state. It exits this state whenever corresponding events signal the receipt or the update of the desired data or events.

Depending on the conformance class a basic task can be activated once or many times. The latter means that activation issued when a task is not in the suspended state will be recorded and then executed when the task finishes the current instance.

The termination of a task instance only happens when it terminates itself (to simplify the RTOS, no explicit task kill primitives are provided).

(4) Task body

The execution of the task is defined by the corresponding task body. A task body documents all the executable contents of code. Task objects and types can be declared in any declarative part, including task bodies themselves. For any task type, the specification and body must be declared together in the same unit, with the body usually being placed at the end of the declarative part.

The simple name at the start of a task body must repeat the task unit identifier. Similarly, if a simple name appears at the end of the task specification or body, it must also repeat this. Within a task body, the name of the corresponding task unit can also be used to refer to the task object that designates the task currently executing the body; furthermore, the use of this name as a type mark is not allowed within the task unit itself.

The elaboration of a task body has no other effect than to establish that the body can, from then on, be used for the execution of tasks designated by objects of the corresponding task type. The execution of a task body is invoked by the activation of a task object of the corresponding type. The optional exception handlers at the end of a task body handle exceptions raised during the execution of the sequence of statements of the task body.

Task creation and termination

A task object can be created, either as part of the elaboration of an object declaration that occurs immediately within some declarative region, or as part of the evaluation of an allocator. All tasks created by the elaboration of object declarations of a single declarative region (including subcomponents of the declared objects) are activated together. Similarly, all tasks created by the evaluation of a single allocator are activated together. The execution of a task object has three main active phases:

1. Activation. The elaboration of the declarative part, if any, of the task body (local variables in the body of the task are created and initialized during activation); the activator identifies the task that created and activated the task.

2. Normal execution. In this phase, the execution of the statements is visible within the body of the

task.

3. Finalization. The execution of any finalization code associated with any objects in its declarative part.

The parent task is the task on which a task depends, determined by the following rules: If the task has been declared by means of an object declaration, its parent is the task which declared the task object. If the task has been declared by means of an allocator, its parent is the task that has the corresponding access declaration. When a parent creates a new task, the parent’s execution is suspended while it waits for the child to finish activating (either immediately, if the child is created by an allocator, or after the elaboration of the associated declarative part). Once the child has finished its activation, parent and child proceed concurrently. If a task creates another during its activation, then it must also wait for its child to activate before it can begin execution.

The master is the execution of a construct that includes finalization of local objects after it is complete (and after waiting for any local task), but before leaving. Each task depends on one or more masters, as follows. If the task is created by the evaluation of an allocator for a given access type, it depends on each master that includes the elaboration of the declaration of the ultimate ancestor of the given access type. If the task is created by the elaboration of an object declaration, it depends on each master that includes its elaboration. Furthermore, if a task depends on a given master, it is defined as depending on the task that executes the master, and (recursively) on any master of that task.

For the finalization of a master, dependent tasks are first awaited. Then each object whose accessibility level is the same as that of the master is finalized if the object was successfully initialized and still exists. Note that any object whose accessibility level is deeper than that of the master would no longer exist; those objects would have been finalized by some inner master. Thus, after leaving a master, the only objects yet to be finalized are those whose accessibility level is not as deep as that of the master.

Task queue

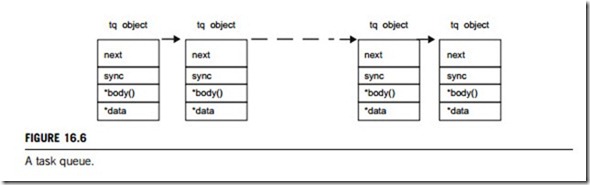

Task queues are the way to defer work until later, in the kernels of the RTOS. A task queue is a simple data structure; see Figure 16.6, which consists of a singly linked list of “tq object” data structures, each of which contains the address of a task body routine and a pointer (of the prefix )) to some data. The routine will be called when the element on the task queue is processed, and it will be passed a pointer to the data. Anything in the kernel, such as a device driver, can create and use task queues but there are four task queues created and managed by the kernel:

(a) Timer. This queue is used to queue work that will be done as soon after the next system clock tick as is possible. At each clock tick, this queue is checked to see whether it contains any entries and, if it does, the timer queue handler is made active. This is processed, along with all the

other handlers, when the scheduler next runs. This queue should not be confused with system timers, which are a much more sophisticated mechanism.

(b) Immediate. This queue is also processed when the scheduler processes the active handlers by means of their priorities. The immediate handler is not as high in priority as the timer queue handler and so these tasks will be run later.

(c) Scheduler. This task queue is processed directly by the scheduler. It is used to support other task queues in the system and, in this case, the task to be run will be a routine that processes a task queue, say, for a device driver or an interface monitor.

(d) Waiting. There are many instances when a process must wait for a system resource before it can carry on. To deal with this, the kernel uses a simple data structure, called a wait queue. When processes are added to the end of a wait queue they can either be interruptible or uninterruptible. Interruptible processes may be interrupted by events such as timers expiring, or signals being delivered whilst they are waiting on a wait queue. Uninterruptible processes can never be interrupted at any time or by any event. When the wait queue is processed, the state of every process in the wait queue is set to “running”, and is moved into corresponding task queue. The next time the scheduler runs, the processes that are on the wait queue are now candidates to be run as they are now no longer waiting. When a process on the wait queue is scheduled, the first thing that it will do is remove itself from the wait queue. Wait queues can be used to synchronize access to system resources and they are used by Linux in its implementation of semaphores.

When task queues are processed, the pointer to the first element in the queue is removed and replaced with a null pointer. In fact, this removal is an atomic operation that cannot be interrupted. Then each element in the queue has its handling routine called in turn. The elements in the queue are often statically allocated data; however, there is no inherent mechanism for discarding allocated memory. The task queue processing routine simply moves onto the next element in the list. It is the job of the task itself to ensure that it properly cleans up any allocated kernel memory.

Task context switch and task scheduler

(1) Task context switch

A context switch (also sometimes referred to as a process or a task switch) is the switching of the CPU from one process or task to another. Sometimes, a context switch is described as the kernel suspending execution of one process on the CPU and resuming execution of some other that had previously been executed. Context switching is an essential feature of multitasking operating systems. The illusion of concurrency is achieved by means of context switches that occur in rapid succession (tens or hundreds of times per second), either as a result of processes voluntarily relinquishing their time in the CPU, or as a result of the scheduler making the switch when a process has used up its CPU time slice.

A context is the contents of a CPU’s registers and program counter at any point in time. A register is a small amount of very fast memory inside a CPU (as opposed to the slower RAM main memory outside the CPU) that is used to speed the execution of computer programs by providing quick access to commonly used values, generally those in the middle of a calculation. A program counter is a specialized register that indicates the position of the CPU in its instruction sequence and holds either the address of the instruction being executed, or the address of the next, depending on the specific system. Context switching can be described in slightly more detail as the operating system or kernel performing the following activities:

(a) The state of the first process must be saved so that, when the scheduler returns to it, this state can be restored and execution can continue normally.

(b) The state of the process includes all the registers in use, especially the program counter, plus any other operating-system-specific data that may be necessary. Often, all the necessary data for state are stored in one data structure, called a switchframe or a process control block.

(c) To switch processes, the switchframe for the first process must be created and saved. They are sometimes stored upon a per-process stack in kernel memory (as opposed to the user-mode stack), or there may be some specific operating-system-defined data structure for this information.

(d) Since the operating system has effectively suspended the execution of the first process, it can now load the switchframe and context of the second. In doing so, the program counter from the switchframe is loaded, and thus execution can continue for the new process.

A context switch can also occur as a result of a hardware interrupt, which is a signal from a hardware device to the kernel that an event has occurred. Some processors, such as the Intel 80386 and higher CPUs, have hardware support for context switches, by making use of a special data segment designated the task state segment (TSS). When a task switch occurs (referring to a task gate or explicitly due to an interrupt or exception) the CPU can automatically load the new state from the TSS. Like other tasks performed in hardware, one would expect this to be rather fast; however, mainstream operating systems, including Windows, do not use this feature. This is partly because hardware context switching does not save all the registers (only general-purpose registers, not floating-point registers), and partly due to associated performance issues.

However, most modern operating systems can perform software context switching, which can be used on any CPU, rather than hardware context switching, in an attempt to obtain improved performance. Software context switching was first implemented in Linux for Intel-compatible processors with the 2.4 kernel. One major advantage claimed is that, while the hardware mechanism saves almost all of the CPU state, software can be more selective and save only that portion that actually needs to be saved and reloaded. However, there is some question as to how important this really is in increasing the efficiency of context switching. Its advocates also claim that software context switching allows the switching code to be improved, thereby further enhancing efficiency, and that it permits better control over the validity of the data being loaded.

(2) Task scheduler of common operating systems

For those operating systems without real-time constraints, the process or task scheduler is that part of the operating system which responds to the requests by programs and interrupts for microprocessor attention. A scheduler can also stand alone to act as a centerpiece to a program that requires moderation between many different tasks. In this capacity, each program task is written so that it can be called by the scheduler as necessary. Most embedded programs can be described as having a specific, discrete response to a stimulus or time interval. These tasks can be ranked in priority, allowing the scheduler to hand control of the microprocessor to each process in turn.

The scheduler itself is a loop that calls one of the other processes each time it executes. Each microprocessor executes the scheduler itself and will select its next task from all runnable processes that are not allocated to a microprocessor. An array of flags for the process, then indicates its need for scheduling. The simplest way to handle the problem is to give each program a turn, then when a process gets its turn, can decide when to return control. This method does not support any notion of importance among processes, so it is not as useful as it could be. The more complicated the scheduler or operating system, the more elaborate is the kind of scheduling information that can be maintained.

The scheduling information of a process or task can be measured with the following metrics:

(1) CPU utilization, which is the percentage of time that the CPU is doing useful work (i.e., not idling); 100% is perfect; (2) wait time, which is the average time a process spends in the run queue;

(3) throughput, which is the number of processes completed per time unit; (4) response time, which is

the average time elapsed from the time the process is submitted until useful output is obtained;

(5) turnaround time, which is the average time elapsed between when a process is submitted and when it has completed. Typically, utilization and throughput are traded-off for better response time. In general, we would like to optimize the average measure of response time. In some cases, minimum and maximum values are optimized; for example, it might be a good idea to minimize the maximum response time.

The types of process or task schedulers depend on the adopted algorithms, which are generally of the following types:

(a) First-come, first-served (FCFS) . The FCFS scheduler simply executes processes to completion in the order they are submitted. They can use a queue data structure, that, given a group of processes to run, will insert them all into the queue and execute them in that order.

(b) Round-robin (RR) . RR is a preemptive scheduler, which is designed especially for time-sharing systems. In other words, it does not wait for a process to finish or give up control. In RR, each process is given a time slot to run. If the process does not finish, it will get back in line and receive another time slot until it has completed. The implementation of RR can use a FIFO queue where new jobs are inserted at the tail end.

(c) Shortest-job-first (SJF). The SJF scheduler is like FCFS, except that instead of choosing the job at the front of the queue, it will always choose the shortest job (i.e., the job that takes the least time) available. It uses a sorted list to order the processes from longest to shortest, and when adding a new process or task, needs to figure out where in the list to insert it.

(d) Priority scheduling (PS). As mentioned, a priority is associated with each process. This priority can be defined in any way: for example, we can think of the shortest job as top priority, so this algorithm can be a special case of PRI. Processes with equal priorities may be scheduled in accordance with FCFS.

(3) The task scheduler of real-time operating systems

The goals for real-time scheduling are to complete tasks within specific time constraints and to prevent simultaneous access to shared resources and devices (critical sections). Although system resource utilization is of interest, it is not a primary driver, and predictability and temporal correctness are the principal concerns of the task scheduler of real-time operating systems. The algorithms used in real- time scheduling vary from relatively simple to extremely complex. The topic of real-time scheduling algorithms can be studied for either single-processor or multiprocessor platforms.

We first study real-time scheduling algorithms for a single-processor CPU. The set of such algo-rithms is divided into two major subsets, namely offline and online scheduling algorithms.

Offline algorithms (pre-run-time scheduling) generate scheduling information prior to system execution, which is utilized by the system during run-time. In systems using offline scheduling, there is generally, if not always, a required ordering of the execution of processes. This can be accommodated by using precedence relations that are enforced during offline scheduling. Preventing simultaneous access to shared resources and devices is also necessary and is accomplished by defining which portion of a process cannot be preempted by another task, and then defining exclusion constraints and enforcing them during the offline algorithm. Another goal that may be desired for offline schedules is reducing the cost of context switches caused by preemption.

Offline algorithms are good for applications where all characteristics are known a priori and change very infrequently, since a fairly complete characterization of all processes involved, such as execution times, deadlines, and ready times, is required. The algorithms need large amounts of offline processing time to produce the final schedule, and hence are quite inflexible. Any change to the system processes requires starting the scheduling problem over from the beginning. In addition, they cannot handle an environment that is not completely predictable. However, a major advantage of offline scheduling is a significant reduction in run-time resources, including processing time, used for scheduling.

Online algorithms generate scheduling information while the system is running. These schedulers do not assume any knowledge of process characteristics which have not arrived yet, and so require a large amount of run-time processing time.

However, if different modes or some form of error handling is desired, multiple offline schedules can be computed, one for each alternative situation, so at run-time, a small online scheduler can choose the correct one. One severe problem that can occur with this approach is priority inversion. This occurs when a lower-priority task is using a resource that is required by a higher-priority task and this causes blocking of the higher-priority task by the lower-priority one. The major advantage of online scheduling is that there is no requirement to know tasks’ characteristics in advance, and so they tend to be flexible and easily adaptable to environment changes.

Online scheduling algorithms can be static or dynamic priority-based algorithms, which are dis- cussed briefly here.

(a) Online static priority-based algorithms may be either preemptive or non-preemptive. The following two non-preemptive algorithms attempt to provide high microprocessor utilization whilst preserving task deadline guarantees.

Parametric dispatching algorithm: this uses a calendar of functions describing the upper and lower bounds of allowable start times for the task. During an offline component, the timing constraints between tasks are analyzed to generate the calendar of functions, then, during system execution, these bounds are passed to the dispatcher, which then determines when to start execution of the task. This decision can be based on whether there are other non-real-time tasks waiting to execute.

Predictive algorithm: this algorithm depends upon known a priori task execution and arrival times. When it is time to schedule a task for execution, the scheduler not only looks at the first task in the ready queue, but also looks at the deadlines for tasks that are predicted to arrive prior to the first task’s completion. If a later task is expected to arrive with an earlier deadline than the current task, the scheduler may insert CPU idle time and wait for the pending arrival, if this will produce a better schedule. In particular, the insertion of idle time may keep the pending task from missing its deadline.

(b) Online dynamic priority-based algorithms require a large amount of resources. There are two subsets of dynamic algorithms: planning-based and best effort. They attempt to provide better response to aperiodic or soft tasks, while still meeting the timing constraints of the hard, periodic tasks. This is often accomplished by utilization of spare microprocessor capacity to service soft and aperiodic tasks.

Planning-based algorithms reject task execution if they cannot guarantee its on-time completion. Most of them can provide higher microprocessor utilization than static priority-based algorithms while still guaranteeing on-time completion of accepted tasks. Earliest deadline first scheduling is one of the first planning-based algorithms proposed, providing the basis for many of those currently being studied and used. The dynamic priority exchange server, dynamic sporadic server, total bandwidth server, earliest deadline late server, and improved priority exchange server are all examples of planning-based algorithms.

Best effort algorithms that can be used by an application task, are based on application-specified benefit functions such as the energy consumption function. There exist many best effort real-time scheduling algorithms. Two of the most prominent are the dependent activity scheduling algorithm (DASA) and the lock best effort scheduling algorithm (LBESA).

DASA and LBESA are equivalent to the earliest deadline first algorithm (EDF) during under-loaded conditions, where EDF is optimal and guarantees that all deadlines are always satisfied. In the event of an overload situation, DASA and LBESA seek to maximize the aggregate task benefit. The DASA algorithm makes scheduling decisions using the concept of benefit densities. The benefit density of a task is the benefit accrued per unit time by the execution of the task. The objective of DASA is to compute a schedule that will maximize the aggregate task benefit, which is the cumulative sum of the benefit accrued by the execution of the tasks. Thus, since task benefit functions are step-benefit functions, a schedule that satisfies all deadlines of all tasks will yield the maximum aggregate benefit. LBESA is another best effort real-time scheduling algorithm, similar to DASA in that both algorithms schedule tasks using the notion of benefit densities and are equivalent to EDF during under-load situations. However, the algorithms differ in the way they reject tasks during overload situations. While DASA examines tasks in the ready queue in decreasing order of their benefit densities for determining feasibility, LBESA examines tasks in increasing order of deadlines. Like DASA, LBESA also inserts each task into a tentative schedule at its deadline-position and checks the feasibility of that schedule. Tasks are maintained in increasing deadline order in the tentative schedule. If the insertion of a task into it results in an infeasible schedule, then, unlike DASA, LBESA removes the task with least benefit density from the schedule. LBESA does this continuously until the schedule becomes feasible. Once all tasks in the ready queue have been examined and a feasible tentative schedule is thus constructed, LBESA selects the earliest deadline task from it.

The scheduling of real-time systems has been much studied on machines in which there is exactly one shared microprocessor available, and all the processes in the system are required to execute on it.

In multiprocessor platforms there are several microprocessors available upon which these processes may execute.

The following assumptions may be made to design a multiprocessor scheduling algorithm. (1) Task pre-emption is permitted. That is, a task executing on a microprocessor may be preempted before completion, and its execution resumed later. It may be assumed that there is no penalty associated with such pre-emption. (2) Task migration is permitted. That is, a task that has been preempted on a particular processor may resume execution on a different one. Once again, we may assume that there is no penalty associated with such migration. (3) Task parallelism is forbidden. That is, each task may execute on at most one microprocessor at any given instant in time. Multiprocessor scheduling techniques fall into the two general categories, as given below.

(a) Global scheduling algorithms store the tasks that have arrived, but not finished their execution, in one queue which is shared among all microprocessors. Suppose there are k microprocessors in the system. At every moment the k highest-priority tasks of the queue are selected for execution using pre-emption and migration if necessary. The focused addressing and bidding algorithm is an example of a global scheduling algorithm, which works as follows. Each microprocessor maintains a status table that indicates the tasks already committed to run. It also maintains a table of the surplus computational capacity of every other microprocessor in the system. The time axis is divided into windows of fixed duration, and each microprocessor regularly sends to its colleagues the fraction of the next window that is currently free. On the other hand, an overloaded microprocessor checks its surplus information and selects a microprocessor that seems to be most likely to be able to successfully execute that task by its deadline. It then ships the tasks out to that microprocessor, which is called the selected microprocessor. However, the surplus information may be outdated, and it is possible that the selected microprocessor will not have the free time to execute the task. In order to avoid this problem, and in parallel with sending out the task to the selected processor, the originating microprocessor asks other lightly loaded microprocessors how quickly they can successfully process the task. The replies are sent to the selected microprocessor, which, if unable to process the task successfully, can review the replies to find one able to do so, and transfers the task to that microprocessor.

(b) Partitioning scheduling algorithms divide the set of tasks such that all tasks in a partition are assigned to the same microprocessor. Tasks are not allowed to migrate hence the multiprocessor scheduling problem is transformed into many single-processor scheduling problems. The next fit algorithm is a multiprocessor scheduling algorithm that works on the basis of a partitioning strategy. A set of classes of the task are defined. Tasks are allocated one by one to the appropriate microprocessor class, then, with this assignment, the scheduling algorithm is running on each microprocessor.

In addition to the above approaches, we can apply hybrid partitioning and global strategies. For instance, each process can be assigned to a single microprocessor, while a task is allowed to migrate.

Task threads

A thread is a single sequential flow of control within a program. Task threads are a way for a program to split a task into two or more simultaneously running subtasks. Multiple threads can be executed in parallel on many control systems. This multithreading generally occurs by time slicing (where a single microprocessor switches between different threads) or by multiprocessing (where threads are executed on separate microprocessors).

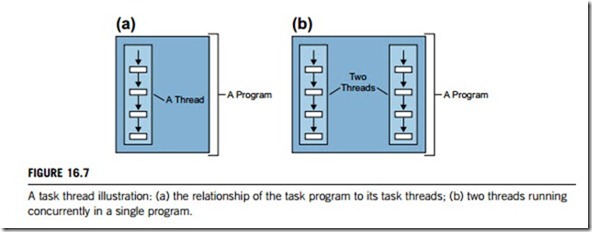

A single thread also has a beginning, a sequence, and an end. At any given time during the run- time of the thread, there is a single point of execution. However, a thread itself is not a program; it cannot run on its own. Rather, a thread runs within a program, as shown in Figure 16.7(a). The real potential for threads is not about a single one, but about the use of multiple threads running at the same time and performing different tasks in a single program. Figure 16.7(b) shows this multi- threading in a program.

Threads are distinguished from traditional multitasking operating system processes in that processes are typically independent, carry considerable state information, have separate address spaces, and interact only through system-provided interprocess communication mechanisms. Multiple threads, on the other hand, typically share the state information of a single process, and share memory and other resources directly. Context switching between threads in the same process is typically faster than context switching between processes.

One advantage of a multithreaded program is that it can operate faster on multiprocessor CPUs that are CPUs with multiple cores, or across a cluster of machines, because the threads of the program naturally lend themselves to truly concurrent execution. In such a case, the programmer needs to be careful to avoid race conditions and other non-intuitive behaviors by implementing correct scheduler algorithms. In order for data to be correctly manipulated, threads will often need to rendezvous in time to process data in the correct order. Threads may also require atomic operations (often implemented using semaphores) to prevent common data from being simultaneously modified, or read while in the process of being modified. Careless use of such primitives can lead to deadlocks.

Many modern operating systems support both time-sliced and multiprocessor threading directly with a process scheduler. Some implementations are called kernel threads or lightweight processes. In the absence of such mechanisms, programs can still implement threading by using timers, signals, or other methods to interrupt their own execution and hence perform a sort of ad hoc time-slicing. These are sometimes called user-space threads. Both standard and real-time operating systems generally implement threads, either by preemptive multithreading or cooperative multithreading. Preemptive multithreading is the same as preemptive tasking, and is generally considered the superior imple- mentation, as it allows the operating system to determine when a context switch should occur.

Cooperative multithreading, on the other hand, relies on the threads themselves to relinquish control once they are at a stopping point, which is also the same as cooperative tasking. This can create problems if a thread is waiting for a resource to become available. The disadvantage to preemptive multithreading is that the system may make a context switch at an inappropriate time, causing priority inversion or other bad effects that may be avoided by cooperative multithreading.

Traditional mainstream computing hardware did not have much support for multithreading, as switching between threads was generally already quicker than full process context switches. Microprocessors in embedded systems, which have higher requirements for real-time behaviors, might support multithreading by decreasing the thread switch time, perhaps by allocating a dedicated register file for each thread instead of saving/restoring a common register file. In the late 1990s, the idea of executing instructions from multiple threads simultaneously became known as simultaneous multithreading. This feature was introduced in Intel’s Pentium 4 processor, with the name Hyper- threading.