Scaling and quantisation

The purpose of scaling, also known as companding, is to improve the signal-to-noise ratio (SNR), which is degraded by quantising errors that are most pronounced with low-level sounds. Quantising errors are inherent in the digitising process itself, in which a number of discrete quantum steps are used as determined by the number of bits. For instance, for three bits, there would be 2³ = 8 quantum steps; 8-bit codes have 2⁸ = 256 steps and so on. Within a given amplitude range, the fewer quantum steps that are available, the larger the height of each step, known as the quantum. Sample levels, which may have any value within the given amplitude range, will invariably fall between these quantum levels, resulting in an element of uncertainty or ambiguity in the logic state of the least significant bit (LSB). This ambiguity is known as the quantising error, which can be as large as half the quantum step. With large signals, so many steps are involved that the quantum, and with it the quantising error, is small relative to the signal. For low-value samples representing low-level sound signals, where fewer steps are needed, the quantising error is relatively large and may rise above the noise level. The quantising error may be reduced by scaling.
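The relationship between word length, quantum and quantising error can be illustrated with a short sketch. The function names and the simple rounding quantiser are illustrative assumptions rather than anything defined by MPEG, and clipping at the limits of the amplitude range is ignored.

```python
def quantum_steps(bits: int) -> int:
    """Number of discrete quantum steps available with the given word length."""
    return 2 ** bits


def quantum(amplitude_range: float, bits: int) -> float:
    """Height of one quantum step for a given amplitude range."""
    return amplitude_range / quantum_steps(bits)


def max_quantising_error(amplitude_range: float, bits: int) -> float:
    """Worst-case quantising error: half the quantum step."""
    return quantum(amplitude_range, bits) / 2


def quantise(sample: float, amplitude_range: float, bits: int) -> float:
    """Round a sample to the nearest quantum level (illustrative, no clipping)."""
    q = quantum(amplitude_range, bits)
    return round(sample / q) * q


if __name__ == "__main__":
    for bits in (3, 8, 16):
        print(bits, quantum_steps(bits), max_quantising_error(2.0, bits))
```

With an assumed amplitude range of 2.0, each additional bit halves both the quantum and the worst-case error, which is why short code words are most damaging for low-level signals.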

Scaling is achieved by amplifying the samples representing low-level sound by a factor known as the scale factor. This increases the number of bits used for the samples, resulting in a smaller quantum and reduced quantising error. The scale factor is specified for each sub-band block, which in the case of Layer I coding contains 12 samples. The amplitudes of the 12 samples are examined and the scale factor is then based on the highest amplitude present in the block. Scaling of a sub-band is determined by the power of each sub-band and its relative strength with respect to its neighbouring sub-bands. If the power in a band is below the masking threshold, the band is not coded.

The number of samples is small enough that the sample amplitudes are unlikely to vary very much within the sub-band block. This technique cannot be used unchanged for Layers II and III, with 36 samples per block, as large variations in sample levels are more likely to occur. Instead, the sample with the highest amplitude in the block is first used to set the scale factor for the whole block. However, if there are vast differences between the amplitudes within each group of 12 samples within the block, a different scale factor may be set for each 12-sample group. The scale factor is identified by a 6-bit code, giving 64 different levels (0–63). This information is included with the audio packet before transmission.
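The selection of a scale factor from the peak amplitude of a 12-sample block might be sketched as follows. The table of 64 levels used here, falling by a factor of the cube root of 2 per index, is an assumption made for illustration, as are the function names; the text above only states that a 6-bit code selects one of 64 levels.

```python
# Hypothetical table of 64 scale levels indexed by the 6-bit code (0-63).
# Values decrease as the index rises, so high indices suit low-level blocks.
SCALE_TABLE = [2.0 * 2.0 ** (-i / 3.0) for i in range(64)]


def choose_scale_index(block):
    """Return the 6-bit index of the smallest level that still covers the peak."""
    peak = max(abs(s) for s in block)
    index = 0
    for i, level in enumerate(SCALE_TABLE):
        if level >= peak:
            index = i      # this level still covers the peak, try a smaller one
        else:
            break          # table is decreasing, so no later level can fit
    return index


def scale_block(block):
    """Amplify a block so that its peak sits just below full scale (1.0)."""
    index = choose_scale_index(block)
    level = SCALE_TABLE[index]
    return index, [s / level for s in block]


if __name__ == "__main__":
    quiet_block = [0.01 * k for k in range(12)]   # 12 low-level samples
    index, scaled = scale_block(quiet_block)
    print(index, max(abs(s) for s in scaled))
```

Dividing by a small level amplifies a quiet block, so the subsequent quantiser sees samples that occupy most of its range; the index is what would be sent alongside the audio data.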

Once the samples in each sub-band block are companded, they are then ‘re-quantised’ in accordance with the predefined masking curve. The principle of MPEG audio masking is based on comparing a spectral analysis of the input signals with a predefined psychoacoustical masking model to determine the relative importance of each audio component of the input. For any combination of audio frequencies, some components will fall below the masking curve, making them redundant as far as human hearing is concerned. They are discarded and only those components falling above the masking curve are re-quantised. The spectral analysis of the input fed into the masking processor may be derived directly from the 32 sub-bands generated by the filter. This is a crude method which is used at Layer I audio encoding. A more accurate analysis of the audio spectrum is obtained with a fast Fourier transform (FFT) processor. Layer I provides an option to use a 512-point FFT. Layer II uses a 1024-point FFT.

Once the components that fall below the hearing threshold are removed by the masking processor, the remaining components are allocated appropriate quantising bits. The masking processor determines the bit allocation for each sub-band block, which is then applied to all samples in the block. Bit allocation is dynamically set with the aim of generating a constant audio bit rate stream over the whole 384-sample (Layer I) or 1152-sample (Layers II and III) blocks. This means that some sub-bands can have long code words provided others in the same block have shorter code words.
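The block sizes quoted above follow from the 32 sub-bands and the 12 or 36 samples per sub-band block, and the trade-off between long and short code words can be shown with simple arithmetic. The sketch below is illustrative only: the allocation lists are invented values, and the bits needed for scale factors and other side information are ignored.

```python
SUB_BANDS = 32
SAMPLES_PER_BLOCK = {"I": 12, "II": 36, "III": 36}


def samples_per_frame(layer: str) -> int:
    """Total samples covered by one set of sub-band blocks."""
    return SUB_BANDS * SAMPLES_PER_BLOCK[layer]


def sample_bits(allocation, layer: str) -> int:
    """Bits spent on samples when sub-band i uses allocation[i] bits per sample."""
    if len(allocation) != SUB_BANDS:
        raise ValueError("one allocation value is needed per sub-band")
    return sum(allocation) * SAMPLES_PER_BLOCK[layer]


if __name__ == "__main__":
    flat = [4] * 32                    # every sub-band gets 4 bits per sample
    skewed = [8] + [4] * 30 + [0]      # band 0 gains what band 31 gives up
    print(samples_per_frame("I"), samples_per_frame("II"))
    print(sample_bits(flat, "I"), sample_bits(skewed, "I"))
```

Both allocations spend the same number of bits, so lengthening the code words in one sub-band is paid for by shortening (or dropping) those of another.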

Formatting

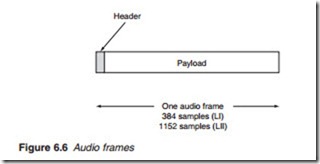

Coded audio information, together with the parameters necessary for the decoding process, is grouped into blocks known as frames. A frame (Figure 6.6) is a block of data with its own header and a payload which contains the coded audio data as well as other information, such as bit allocation and scale factor, that the decoder needs to reproduce the original sound. Figure 6.7 shows the construction of MPEG-1 Layers I, II and III and MPEG-2 audio frames. The cyclic redundancy check (CRC) decoder calculates the CRC checksum of the payload and compares it with the checksum stored in the CRC field. A difference indicates that the data contained in the frame has been altered during transmission, in which case the frame is dropped and replaced with a ‘silent’ frame.
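The check performed by the CRC decoder can be sketched as below. This is a simplified illustration under stated assumptions: a generic bitwise CRC-16 with polynomial 0x8005 and initial value 0xFFFF is applied to the whole payload, and the ‘silent’ frame is represented by a hypothetical all-zero placeholder of the same length. A real decoder follows the coverage and concealment rules of the standard it implements.

```python
def crc16(data: bytes, poly: int = 0x8005, init: int = 0xFFFF) -> int:
    """Bitwise CRC-16, most significant bit first (assumed parameters)."""
    crc = init
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ poly) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def check_frame(payload: bytes, stored_crc: int) -> bytes:
    """Return the payload if its checksum matches, otherwise a silent stand-in."""
    if crc16(payload) != stored_crc:
        # Hypothetical placeholder for a 'silent' frame: zeros of equal length.
        return bytes(len(payload))
    return payload


if __name__ == "__main__":
    payload = b"\x12\x34\x56"
    stored = crc16(payload)                 # checksum written by the encoder
    corrupted = b"\x12\x34\x57"             # one bit altered in transmission
    print(check_frame(payload, stored) == payload)
    print(check_frame(corrupted, stored))
```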

The auxiliary data at the end of the frame is another optional field, which is used for information such as multi-channel sound. In Layer II, an additional field, SCFSI (scale factor select information), is included to determine which of the three scale factors has been sent for each sub-band. In Layer III, all the information regarding scale factor, bit allocation and SCFSI is included in a single field called the side information field, which also includes information about surround sound.

The header is 32 bits (4 bytes) in length with 13 different fields, as illustrated in Figure 6.8. The first field is the frame sync, comprising 11 bits (31-21) which are always set to 1, indicating the start of a new frame. The next field (bits 20-19) indicates the MPEG version (MPEG-1 or MPEG-2), followed by the layer type (bits 18-17). The presence of a CRC field in the payload is indicated by bit 16 set to logic 0. Four bits (15-12) are allocated to indicate the bit rate of the transmission, with the sampling frequency indicated by bits 11 and 10. Bit 9 of the header tells the decoder if padding has been used in the frame. Padding is used if the frame is not completely filled with payload data. Bit 8 is for private use, followed by 2 bits (7, 6) to indicate the channel mode, namely stereo, joint stereo, dual channel or single channel. The next field (5, 4) is only used when the channel mode is joint stereo. The remaining three fields contain information on copyright (bit 3), original media (bit 2) and emphasis (1, 0), which tells the decoder that the data must be de-emphasised.
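The bit positions listed above translate directly into shifts and masks. The following sketch extracts the 13 fields from a 4-byte header as raw integers; the dictionary keys and helper names are illustrative choices, and the bit rate and sampling frequency are left as indices because their lookup tables are not given here.

```python
# Channel mode names in the order of the 2-bit code (0 to 3).
CHANNEL_MODES = ("stereo", "joint stereo", "dual channel", "single channel")


def parse_header(header: bytes) -> dict:
    """Split a 32-bit MPEG audio frame header into its 13 raw fields."""
    if len(header) != 4:
        raise ValueError("header must be exactly 4 bytes")
    word = int.from_bytes(header, "big")

    def bits(high: int, low: int) -> int:
        # Extract bits high..low inclusive (bit 31 is the most significant).
        return (word >> low) & ((1 << (high - low + 1)) - 1)

    fields = {
        "sync": bits(31, 21),
        "version": bits(20, 19),
        "layer": bits(18, 17),
        "protection_bit": bits(16, 16),
        "bitrate_index": bits(15, 12),
        "sampling_index": bits(11, 10),
        "padding": bits(9, 9),
        "private": bits(8, 8),
        "channel_mode": bits(7, 6),
        "mode_extension": bits(5, 4),
        "copyright": bits(3, 3),
        "original": bits(2, 2),
        "emphasis": bits(1, 0),
    }
    if fields["sync"] != 0x7FF:
        raise ValueError("frame sync not found: the first 11 bits must all be 1")
    return fields


def has_crc(fields: dict) -> bool:
    """A CRC field is present when bit 16 is set to logic 0."""
    return fields["protection_bit"] == 0


if __name__ == "__main__":
    example = parse_header(bytes.fromhex("fffb9064"))   # sample header value
    print(example)
    print(CHANNEL_MODES[example["channel_mode"]], has_crc(example))
```

Checking the sync field before trusting the remaining bits is a deliberate choice here: a decoder scanning a byte stream would otherwise misread arbitrary data as a header.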

In the case of Layers I and II, the frames are totally independent of each other. Layer III frames, by contrast, are often dependent on each other. This is due to the use of the buffer reservoir, which ensures a constant bit rate by changing frame sizes. In the worst case, nine frames may be needed before it is possible to decode one frame.