MPEG audio basic elements

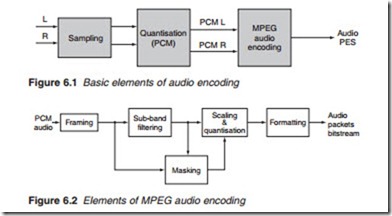

MPEG coding consists of five basic steps as illustrated in Figure 6.2:

● Framing: The process of grouping the PCM audio samples into PCM frames for the purposes of encoding.

● Sub-band filtering: The use of a poly-phase filter bank to divide the audio signal into 32 frequency sub-bands.

● Masking: To determine the amount of redundancy removal for each band using a psychoacoustic model.

● Scaling and quantisation: To determine the number of bits needed to represent the samples such that the quantisation noise stays below the masking threshold.

● Formatting: The organisation of the coded audio bitstream into data packets.

Framing

Before encoding can take place, the PCM audio samples are grouped together into frames, each one containing a set number of samples. MPEG encoding is applied to a fixed-size frame of audio samples: 384 samples for Layer I audio encoding and 1152 samples for Layer II. At a sampling rate of 48 kHz, the 384 samples of a Layer I frame correspond to an audio time span, known as a window, of (1/48) × 384 = 8 ms. In Layer II coding, frames of 1152 samples are used, which correspond to a window size of (1/48) × 1152 = 24 ms. A window of this size is adequate for most sounds, which are normally periodic. However, it fails where short-duration transients are present.

To overcome this, Layer III allows a smaller window to be used whenever necessary. Layer III specifies two different window lengths: long and short. The long window is the same as that used for Layer II, 24 ms (1152 samples at a sampling frequency of 48 kHz). The short window has a duration of 8 ms and contains 384 samples. The long window provides greater frequency resolution and is used for audio signals with stationary or periodic characteristics. The short window provides better time resolution at the expense of frequency resolution and is used in blocks with changing sound levels containing transients.
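The window durations quoted above follow directly from the frame size and the sampling rate. The short Python sketch below reproduces that arithmetic and shows one simple way of grouping samples into frames. The function names are illustrative, and dropping a trailing partial frame is an assumption made here for brevity, not something the standard prescribes.

```python
# Frame sizes in samples, as given in the text.
FRAME_SAMPLES = {"Layer I": 384, "Layer II": 1152}


def frame_duration_ms(samples_per_frame, sample_rate_hz):
    """Time span covered by one frame, in milliseconds."""
    return 1000.0 * samples_per_frame / sample_rate_hz


def split_into_frames(pcm, samples_per_frame):
    """Group PCM samples into complete frames.

    Assumption for this sketch: a trailing partial frame is dropped.
    """
    n_frames = len(pcm) // samples_per_frame
    return [
        pcm[i * samples_per_frame:(i + 1) * samples_per_frame]
        for i in range(n_frames)
    ]


if __name__ == "__main__":
    for layer, n in FRAME_SAMPLES.items():
        print(layer, frame_duration_ms(n, 48_000), "ms")
```

Calling `frame_duration_ms` with a different sampling rate, for example 44 100 Hz, shows that the window duration changes with the rate even though the number of samples per frame stays fixed.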

Sub-band filtering

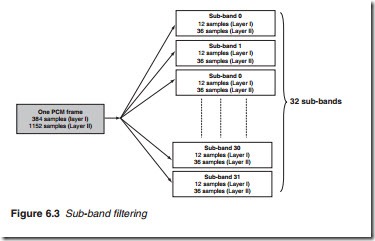

The human auditory system, what we commonly call hearing, has a limited, frequency-dependent resolution (Figure 6.3). This frequency dependency can be expressed in terms of critical frequency bands, which are less than 100 Hz wide at the lowest audible frequencies and more than 4 kHz wide at the highest. The human auditory system blurs the various signal components within these critical bands. MPEG audio encoding attempts to simulate this by using a poly-phase filter bank to divide the audio range into 32 equal-width frequency sub-bands that stand in for these critical bands.

Each sub-band block, known as a bin, consists of 384/32 = 12 PCM samples for Layer I and 1152/32 = 36 samples for Layers II and III.
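To make the numbers concrete, the sketch below computes the edges of the 32 equal-width sub-bands and the number of samples each one holds per frame. It assumes the sub-bands together span the range from 0 Hz to half the sampling rate, which is not stated explicitly in the text; the function names are illustrative.

```python
NUM_SUBBANDS = 32


def subband_edges_hz(sample_rate_hz, num_subbands=NUM_SUBBANDS):
    """Lower and upper edge of each equal-width sub-band.

    Assumption: the bands cover 0 Hz up to half the sampling rate.
    """
    width = (sample_rate_hz / 2) / num_subbands
    return [(k * width, (k + 1) * width) for k in range(num_subbands)]


def samples_per_subband(samples_per_frame, num_subbands=NUM_SUBBANDS):
    """Number of samples each sub-band holds for one frame."""
    return samples_per_frame // num_subbands


if __name__ == "__main__":
    edges = subband_edges_hz(48_000)
    print("first band:", edges[0], "last band:", edges[-1])
    print("Layer I:", samples_per_subband(384), "samples per sub-band")
    print("Layer II/III:", samples_per_subband(1152), "samples per sub-band")
```

Under that assumption each sub-band is 750 Hz wide at a 48 kHz sampling rate, which is far wider than the critical bands of under 100 Hz quoted for the lowest audible frequencies.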

This technique suffers from a number of shortcomings:

● The equal widths of the sub-bands do not accurately reflect the human auditory system’s frequency-dependent behaviour.

● The filter bank and its inverse are not lossless transformations. Even without quantisation, the inverse transformation cannot perfectly recover the original signal.

● Adjacent filter bands have a major frequency overlap. A signal at a single frequency can affect two adjacent filter bank outputs.

These shortcomings are mostly overcome with the introduction of AAC, MPEG AAC and MPEG surround.

Masking

The human auditory system uses two transducers, the ears, one on either side of the head. Disturbances in the equilibrium of the air, which we call sound waves, are picked up by the ears and, through the mechanics of the ear and numerous nerve endings, passed to the brain to produce the sensation of hearing. Our sense of hearing is a very complex system which informs us of the source of a sound, its volume and its distance, as well as its character, such as its pitch. To do this, the hearing system works in three domains: time, frequency and space. The interaction within and between these domains creates a possibility for redundancy, i.e. sounds that are present but not perceived. This is the basis of audio masking.

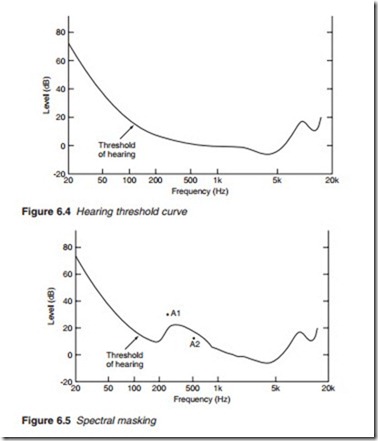

It is well known that the human ear can perceive sound frequencies in the range 20 Hz–20 kHz. However, the sensitivity of the human ear is not linear over the audio frequency range. Experiments show that the human ear has a maximum sensitivity over the range 2–5 kHz and that outside this range its sensitivity decreases. The uppermost audible frequency is 20 kHz and the lowest that can be heard is about 40 Hz. However, reproduction of frequencies down to 20 Hz has been shown to improve the audio ambience, making the experience more natural and real. The manner in which the human ear responds to sound is represented by the threshold curve in Figure 6.4. Only sounds above the threshold are perceived by the human ear; sounds below the curve are not, and therefore they need not be transmitted. However, the hearing threshold curve will be distorted in the presence of multiple audio signals, which could ‘mask’ the presence of one or more sound signals.
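A threshold curve of this general shape can be approximated numerically. The sketch below uses a widely quoted closed-form approximation of the threshold in quiet, attributed to Terhardt; it is brought in here purely for illustration and is not necessarily the curve plotted in Figure 6.4. The function names are illustrative.

```python
import math


def threshold_in_quiet_db(freq_hz):
    """Approximate hearing threshold in quiet, in dB SPL.

    Assumption: Terhardt's closed-form approximation, used here only
    as an illustration of the curve's shape.
    """
    f = freq_hz / 1000.0  # frequency in kHz
    return (
        3.64 * f ** -0.8
        - 6.5 * math.exp(-0.6 * (f - 3.3) ** 2)
        + 1e-3 * f ** 4
    )


def is_audible_in_quiet(freq_hz, level_db_spl):
    """True if a lone tone at this level lies above the threshold curve."""
    return level_db_spl > threshold_in_quiet_db(freq_hz)


if __name__ == "__main__":
    for f in (50, 100, 1000, 3300, 10000, 16000):
        print(f, "Hz:", round(threshold_in_quiet_db(f), 1), "dB SPL")
```

The approximation has its minimum between 3 and 4 kHz, in line with the 2–5 kHz region of maximum sensitivity described above, and rises steeply towards both ends of the audible range.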

There are two types of audio masking:

● spectral (or frequency) masking where two or more signals occur simultaneously and

● temporal (i.e. time-related) masking where two or more signals occur in close time proximity to each other.

It is common experience that a very loud sound, say a car backfiring, will render a low-level sound, such as that from a television or radio receiver, inaudible at the time of the backfiring. A closer examination would show that the softer sound is also made inaudible immediately before and immediately after the louder sound. The first effect is spectral masking and the second is temporal masking. Consider two signals, A1 at 500 Hz and A2 at 300 Hz, having the relative loudness shown in Figure 6.5. Each individual signal is well above the hearing threshold shown in Figure 6.4 and will be easily perceived by the human ear. But if they occur simultaneously, the louder sound A1 will tend to mask the softer sound A2, making it less audible or completely inaudible. This is represented by the loud sound A1 raising the threshold around its own frequency, as Figure 6.5 shows. Two other signals with different frequencies and different relative loudness will distort the threshold curve in a different way.
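The idea of a loud tone raising the threshold around its own frequency can be sketched with a toy model. In the Python example below, every number is an assumption chosen for illustration: the levels given to A1 and A2, the slopes of the raised threshold and the offset below the masker do not come from the text or from the MPEG standard. The Bark conversion is the common Zwicker and Terhardt approximation of the critical-band scale.

```python
import math


def hz_to_bark(freq_hz):
    """Approximate critical-band (Bark) value for a frequency in Hz."""
    return (
        13.0 * math.atan(0.00076 * freq_hz)
        + 3.5 * math.atan((freq_hz / 7500.0) ** 2)
    )


# Illustrative values only (assumptions, not from the text or the standard).
LOWER_SLOPE_DB_PER_BARK = 27.0  # fall-off below the masker frequency
UPPER_SLOPE_DB_PER_BARK = 10.0  # fall-off above the masker frequency
MASKER_OFFSET_DB = 10.0         # threshold peak sits this far below the masker


def masked_threshold_db(freq_hz, masker_hz, masker_db):
    """Toy raised threshold at freq_hz caused by a single masking tone."""
    dz = hz_to_bark(freq_hz) - hz_to_bark(masker_hz)
    slope = UPPER_SLOPE_DB_PER_BARK if dz >= 0 else LOWER_SLOPE_DB_PER_BARK
    return masker_db - MASKER_OFFSET_DB - slope * abs(dz)


def is_masked(freq_hz, level_db, masker_hz, masker_db):
    """True if a tone lies below the threshold raised by the masker."""
    return level_db < masked_threshold_db(freq_hz, masker_hz, masker_db)


if __name__ == "__main__":
    a1_hz, a1_db = 500.0, 90.0  # hypothetical level for A1
    a2_hz, a2_db = 300.0, 25.0  # hypothetical level for A2
    print("raised threshold at A2:", masked_threshold_db(a2_hz, a1_hz, a1_db))
    print("A2 masked by A1:", is_masked(a2_hz, a2_db, a1_hz, a1_db))
```

With these assumed numbers A2 falls below the raised threshold and is treated as masked; raising A2 to 40 dB lifts it back above the threshold. A real psychoacoustic model combines the contributions of many maskers with the threshold in quiet.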

In temporal masking, a sound of high volume will tend to mask sounds immediately preceding it (pre-masking) and immediately following it (post-masking). Temporal masking represents the fact that the ear has a finite time resolution. Sounds arriving over a period of about 30 ms are averaged whereas sounds arriving outside that time period are perceived separately. This is why echoes are only heard when the delay is substantially more than 30 ms.

The effects of spectral and temporal masking can be quantified through subjective experimentation to produce a model of human hearing known as a psychoacoustic model. This model is then applied to each sub-band to determine which sounds are perceived, and therefore need coding and transmitting, and which fall below the threshold and are masked.