Chapter 6: I/O Expansion Buses and Cards

Overview

Expansion buses are a system-level means of connection that allow adapters and controllers to use the computer’s system resources—memory and I/O space, interrupts, DMA channels, and so on—directly. Devices connected to expansion buses can also control these buses themselves, obtaining access to the rest of the computer’s resources (usually, memory space). This kind of direct control, known as “bus mastering,” makes it possible to unload the CPU and to achieve high rates of data exchange. Physically, expansion buses are implemented as slot or pin connectors; they typically have short conductors, which allow high operating frequencies. Buses may not necessarily have external connectors mounted on them, but can be used to connect devices integrated onto motherboards.

Currently, the third generation of I/O expansion bus architecture is becoming dominant; these buses include PCI Express (also known as 3GIO), HyperTransport, and InfiniBand. The ISA bus, an asynchronous parallel bus with low bandwidth (less than 10 MBps) that does not provide exchange robustness or autoconfiguration, belongs to the first generation. The second generation started with the EISA and MCA buses, followed by the PCI bus and its PCI-X extension. This is a generation of synchronous, reliable buses that have autoconfiguration capabilities; some versions are capable of hot-swapping. Transfer rates measured in GBps can be reached. In order to connect a large number of devices, buses are connected into a hierarchical tree-like structure using bridges. The third generation is characterized by the transition from parallel buses to point-to-point connections with a serial interface; multiple clients are connected using a so-called “switching fabric.” In essence, third-generation I/O expansion approaches a very local network (within the limits of the motherboard).

The most commonly used expansion bus in modern computers is the PCI bus, supplemented by the AGP port. In desktop computers, the ISA bus is becoming less popular, but it has maintained its position in industrial and embedded computers, both in its traditional slot version and in the PC/104 “sandwich” type. In notebook computers, PCMCIA slots with PC Card and CardBus buses are extensively used. The LPC bus is a modern, inexpensive way to connect devices on the motherboard that are not resource-intensive. All these buses are considered in detail in this chapter. Information about the obsolete MCA, EISA, and VLB buses can be found in other books.

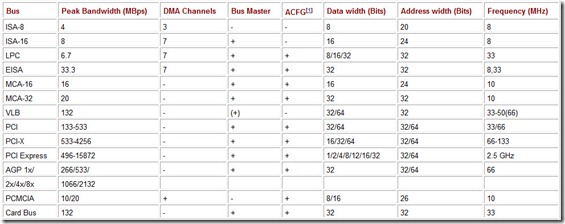

The characteristics of the standard PC expansion buses are given in Table 6.1.

Table 6.1: Characteristics of Expansion Buses

6.1 PCI and PCI-X Buses

The Peripheral Component Interconnect (PCI) local bus is the principal expansion bus in modern computers. It was developed for the Pentium family processors, but it is also well suited to the 486 family processors, as well as to the modern processors.

Currently, PCI is a well-standardized, highly efficient, reliable expansion bus supported by several computer platforms, including PC-compatible computers, PowerPC, and others. The specifications of the PCI bus are updated periodically. This description covers all PCI and PCI-X bus standards, up to and including versions 2.3 and 2.0, respectively:

-

PCI 1.0 (1992): general conception defined; signals and protocol of a 32-bit parallel synchronous bus with clock frequency of up to 33.3 MHz and peak bandwidth of 132 MBps.

-

PCI 2.0 (1993): introduced specification for connectors and expansion cards with possible width extension to 64 bits (speed of up to 264 MBps); 5 V and 3.3 V power supplies provided for.

-

PCI 2.1 (1995): introduced 66 MHz clock frequency (3.3 V only), making it possible to provide peak bandwidth of up to 264 MBps in the 32-bit version and 528 MBps in the 64-bit version.

-

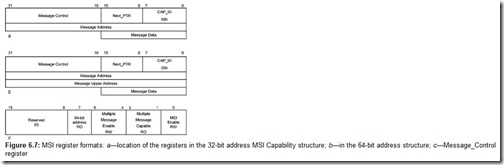

PCI 2.2 (PCI Local Bus Specification, Revision 2.2, of 12/18/1998): specified and clarified some provisions of version 2.1. Also introduced a new interrupt signaling mechanism, MSI (Message Signaled Interrupts).

-

PCI 2.3 (2002): defined bits for interrupts that facilitate identifying the interrupt source; 5 V cards made obsolete (only 3.3 V and universal cards left); low-profile expansion card build introduced; supplementary SMBus signals introduced. This version is described in the PCI Local Bus Specification, Revision 2.3, and is the basis for the current extensions.

-

PCI 3.0: obsoletes 5 V motherboards, leaving only universal and 3.3 V.

In 1999, the PCI-X extension came out, based on PCI 2.3. It is intended to raise peak bus bandwidth significantly by using a higher transfer frequency, and to increase operating efficiency by employing an improved protocol. The protocol also defines split transactions and attributes that allow the exchange parties to plan their actions. The extension provides for mechanical, electrical, and software compatibility of PCI-X devices and motherboards with regular PCI; naturally, however, all devices on the bus adjust to the slowest piece of equipment.

In the 3.3 V interface of PCI-X 1.0, the clock frequency was raised to 133 MHz, producing PCI-X66, PCI-X100, and PCI-X133. Peak bandwidth reaches up to 528 MBps in the 32-bit version, and over 1 GBps in the 64-bit version. PCI-X 1.0 is described in the PCI-X Addendum to the PCI Local Bus Specification, Revision 1.0b (2002).

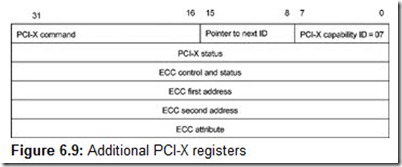

PCI-X 2.0 introduced new clocking modes with doubled (PCI-X266) and quadrupled (PCI-X533) data transfer frequency relative to the base clock frequency of 133 MHz. This high frequency requires a low-voltage interface (1.5 V) and error-correction coding (ECC). In addition to the 32- and 64-bit versions, a 16-bit version is specified for embedded computers. A new type of transaction, Device ID Messages (DIM), was introduced: these are messages that address a device using its identifier. PCI-X 2.0 is described in two documents: PCI-X Protocol Addendum to the PCI Local Bus Specification, Revision 2.0 (PCI-X PT 2.0); and PCI-X Electrical and Mechanical Addendum to the PCI Local Bus Specification, Revision 2.0 (PCI-X EM 2.0).

In addition to the bus specification, there are several specifications for other components:

-

PCI to PCI Bridge Architecture Specification, Revision 1.1 (PCI Bridge 1.1), for bridges interconnecting PCI and other bus types

-

PCI BIOS specification, defining the configuration of PCI devices and interrupt controllers

-

PCI Hot-Plug Specification, Revision 1.1 (PCI HP 1.1), providing for dynamic (hot) device connection and disconnection

-

PCI Power Management Interface Specification, Revision 1.1 (PCI PM 1.1), for controlling power consumption

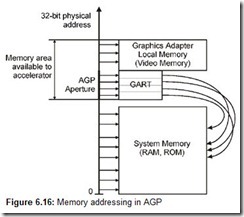

Based on the PCI 2.0 bus, Intel developed the dedicated Accelerated Graphics Port (AGP) interface for connecting a graphics accelerator (see Section 6.2).

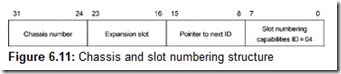

PCI specifications are published and supported by the PCI Special Interest Group (PCI SIG, http://www.pcisig.org). The PCI bus exists in different build variations: Compact PCI (CPCI), Mini-PCI, PXI, CardBus.

The PCI bus was first introduced as a mezzanine bus for systems with the ISA bus, and later became the central bus. It is connected to the processor system bus by the high-performance north bridge, which is a part of the motherboard chipset. The south bridge connects the other I/O expansion buses and devices to the PCI bus. These include the ISA/EISA and MCA buses, as well as the ISA-like X-BUS and the LPC interface to which the motherboard’s integrated circuits (such as the ROM BIOS, the interrupt, keyboard, and DMA controllers, COM and LPT ports, FDD, and so on) are connected. In modern motherboards that use hub architecture, the PCI bus has been taken out of the mainstream: the capacity of its communication channel with the CPU and RAM has not been reduced, but the channel is no longer loaded with transit traffic from devices on the other buses.

The bus is synchronous: All signals are latched at the rising edge of the CLK signal. The nominal synchronization frequency is 33 MHz, but this can be lowered if necessary (on machines with 486 CPUs, frequencies of 20-33 MHz were used). Often, the bus frequency can be overclocked to 41.5 MHz, or half the typical 83 MHz system bus frequency. From the PCI 2.1 revision, the bus frequency can be raised to 66 MHz provided that all devices connected to the bus support it.

The nominal bus width is 32 bits, although the specification also defines a 64-bit bus. At 33 MHz, the theoretical peak throughput of the bus is 132 MBps for the 32-bit bus and 264 MBps for the 64-bit bus. At 66 MHz, it is 264 MBps and 528 MBps for the 32-bit and 64-bit buses, respectively. These peak values, however, are achieved only during burst transmissions. Because of the protocol overhead, the real average aggregate bus throughput across all masters is lower.
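The peak figures quoted here follow from multiplying the bus width in bytes by the clock frequency. A minimal sketch of that arithmetic, using the rounded 33 MHz and 66 MHz values (the function name is illustrative, not taken from any specification):

```python
def peak_bandwidth_mbps(width_bits: int, clock_mhz: float) -> float:
    """Peak burst bandwidth in MBps, assuming one full-width transfer per clock."""
    return width_bits / 8 * clock_mhz


# Print the four combinations discussed in the text.
for width in (32, 64):
    for clock in (33.0, 66.0):
        rate = peak_bandwidth_mbps(width, clock)
        print(f"{width}-bit bus at {clock:.0f} MHz: {rate:.0f} MBps")
```

These values ignore the address phase, turnaround clocks, and wait states, which is why sustained throughput is always lower.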

The CPU can interact with PCI devices by memory and I/O port commands addressed within the ranges allocated to each device at configuration. Devices may generate maskable and nonmaskable interrupt requests. There is no concept of the DMA channel number for the PCI bus, but a bus agent may play the role of master and maintain a high-performance exchange with the memory and other devices without using CPU resources. In this way, for example, DMA exchange with ATA devices connected to the PCI IDE controller can be implemented (see Section 8.2.1).

Operating in the Bus Master role is desirable for all devices that require extensive data exchange, as the device can generate quite lengthy burst transactions, for which the effective data transfer speed approaches the declared peak. For controlling devices, memory-mapped I/O is recommended instead of I/O ports wherever possible.

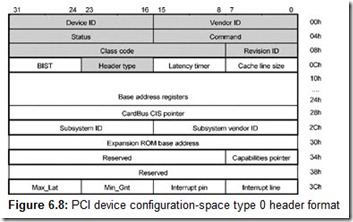

Each PCI device has a standard set of configuration registers located in addressing space separate from the memory and I/O space. Using these registers, devices are identified and configured, and their characteristics are controlled.

The PCI specification requires a device to be capable of moving the resources it uses (the memory and I/O ranges) within the limits of the available address space. This allows conflict-free resource allocation for many devices and/or functions. A device can be configured in two different ways: mapping its registers either onto the memory space or onto the I/O space. The driver can determine its current settings by reading the contents of the device’s base address register. The driver also can determine the interrupt request number used by the device.
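As an illustration of how a driver might interpret a base address register value, the sketch below decodes the low-order flag bits. The bit layout used here (bit 0 selecting I/O or memory space, bits 2:1 giving the memory decoder type, bit 3 marking prefetchable memory) comes from the PCI Local Bus Specification rather than from this section, and the function and key names are hypothetical.

```python
def decode_bar(value: int) -> dict:
    """Decode a 32-bit base address register value (illustrative helper)."""
    if value & 0x1:
        # Bit 0 set: the range is mapped onto the I/O space.
        return {"space": "io", "base": value & 0xFFFFFFFC}
    # Bit 0 clear: the range is mapped onto the memory space.
    type_bits = (value >> 1) & 0x3
    return {
        "space": "memory",
        "base": value & 0xFFFFFFF0,
        "is_64bit": type_bits == 0b10,
        "prefetchable": bool(value & 0x8),
    }


print(decode_bar(0x0000E001))
print(decode_bar(0xFEBF0008))
```

The example register values are made up for demonstration only.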

6.1.1 Device Enumeration

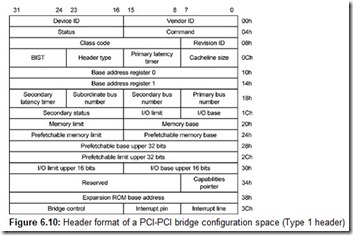

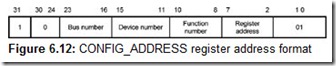

For the PCI bus, a hierarchy of enumeration categories—bus, device, function—has been adopted: The host—the bus master—enumerates and configures all PCI devices. This role is usually played by the central processor, which is connected to the PCI bus by the host (main) bridge, relative to which bus addressing begins.

The PCI bus is a set of signal lines that directly connect the interface pins of a group of devices such as slots or devices integrated onto the motherboard. A system can have several PCI buses interconnected by PCI bridges. The bridges electrically isolate one bus’ interface signals from another’s while connecting them logically. The host bridge connects the main bus with the host: the CPU and RAM. Each bus has its own PCI bus number. Buses are numbered sequentially; the main bus is assigned number 0.

A PCI device is an integrated circuit or expansion card, connected to one of the PCI buses, that uses an IDSEL bus line allocated to it for identifying itself to access the configuration registers. A device can be multifunctional (i.e., consisting of so-called functions numbered from 0 to 7). Every function is allocated a 256-byte configuration space. Multifunctional devices can respond only to the configuration cycles of those function numbers, for which there is configuration space available. A device must always have function 0 (whether a device with the given number is present is determined by the results of accessing this function); other functions are assigned numbers as needed from 1 to 7 by the device vendor. Depending on their implementation, simple, one-function devices can respond to either any function number or only to function 0.

Enumeration categories are involved only when accessing configuration space registers (see Section 6.1.12). These registers are accessed during the configuration state, which involves enumerating the devices detected, allocating them nonconflicting resources (memory and I/O ranges), and assigning hardware interrupt numbers. In the course of further regular operation, the devices will respond to accesses to the memory and I/O addresses allocated to them, which have been conveyed to the software modules associated with them.

Each function is configured. The full function identifier consists of three parts: the bus number, device number, and function number. The short identification form (used in Unix OS messages, for example) is of the PCI0:1:2 type, meaning function 2 of device 1 connected to the main (0) PCI bus. The configuration software must operate with a list of all functions of all devices that have been detected on the PCI buses of the given system.
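The three-part identifier maps naturally onto a small record type. The sketch below formats and parses the short form, for example PCI0:1:2 for bus 0, device 1, function 2. The class and function names are hypothetical, and only the function range (0 to 7) stated in this section is validated.

```python
from typing import NamedTuple


class PciFunctionId(NamedTuple):
    bus: int
    device: int
    function: int

    def short_form(self) -> str:
        """Render the identifier in the PCI<bus>:<device>:<function> form."""
        return f"PCI{self.bus}:{self.device}:{self.function}"


def parse_short_form(text: str) -> PciFunctionId:
    """Parse a short-form identifier such as 'PCI0:1:2'."""
    if not text.startswith("PCI"):
        raise ValueError(f"not a PCI identifier: {text!r}")
    bus, device, function = (int(part) for part in text[3:].split(":"))
    if not 0 <= function <= 7:
        raise ValueError("function number must be in the range 0..7")
    return PciFunctionId(bus, device, function)


ident = parse_short_form("PCI0:1:2")
print(ident, ident.short_form())
```

Configuration software could keep a list of such records, one per detected function.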

The PCI bus employs positional addressing, meaning that the number assigned to a device is determined by where the device is connected to the bus. The device number, or dev, is determined by the AD bus line to which the IDSEL signal line of the given slot is connected: As a rule, adjacent PCI slots use adjacent device numbers; their numbering is determined by the manufacturer of the motherboard (or of the passive backplane for industrial computers). Very often, decreasing device numbers, beginning with 20 or 15, are used for the slots. Groups of adjacent slots may be connected to different buses; devices are numbered independently on each PCI bus (devices may have the same dev numbers, but their bus numbers will be different). PCI devices integrated into the motherboard use the same numbering system. Their numbers are hard-wired, whereas the numbers of expansion cards can be changed by moving them into different slots.

One PCI card can have only one device for the bus to which it is connected, because it is allocated only one IDSEL line for the slot into which it is installed. If a card contains several devices (a 4-port Ethernet card, for example), then it needs a bridge installed on it; the bridge is itself a PCI device, addressed by the IDSEL line allocated to the given card. This bridge creates on the card a supplementary PCI bus to which several devices can be connected. Each of these devices is assigned its own IDSEL line, but this line is now a part of the given card’s supplementary PCI bus.

In terms of memory and I/O space addressing, the positional address (bus and device number) is not important within the limits of one bus. However, the device number determines the interrupt request line number that the given device can use. (See Section 6.2.6 for more information on this subject; here, it is enough to note that devices on the same bus whose numbers differ by 4 will use the same interrupt request line. Assigning them different interrupt request line numbers may be possible only when they are located on different buses, but this depends on the motherboard.) In systems with several PCI buses, installing a device into slots of different buses may affect its performance; this depends on the characteristics of a particular bus and how far away it is from the host bridge.
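The rule that device numbers differing by 4 share a request line is what one gets if the four lines are rotated across device numbers modulo 4. The sketch below assumes that rotation; the exact offset and the handling of the pin index are assumptions, since the actual wiring is decided by the motherboard designer.

```python
def shared_line_index(device: int, pin: int = 0) -> int:
    """Index (0..3) of the system interrupt request line, assuming a modulo-4 rotation.

    `pin` is 0 for INTA# through 3 for INTD# on the device itself (assumed convention).
    """
    return (device + pin) % 4


# Devices 15 and 19 differ by 4, so under this assumption they share a line.
for dev in (15, 16, 17, 18, 19):
    print(f"device {dev}: line {shared_line_index(dev)}")
```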

Figuring out the device numbering and the system for assigning interrupt request line numbers is simple. This can be done by installing a PCI card sequentially into each of the slots (remembering to turn the power off each time) and noting the messages about the PCI devices found that are displayed at the end of POST. PCI devices built into the motherboard and not disabled by the CMOS Setup will also appear in these messages. Although this may all seem to be very clear and simple, some operating systems (especially “smart” ones, such as Windows) are not content with the allocated interrupt request numbers and change them as they see fit; this does not affect line sharing in any way.

All bus devices can be configured only from the host’s side; this is the host’s special role. No master on any PCI bus has access to the configuration registers of all devices; without this access, complete configuration cannot be done. Even from the main PCI bus, the registers of the host bridge are not accessible to a master; without access to these registers, address distribution between the host and the PCI devices cannot be programmed. Access possibilities to the configuration registers are even more modest from the other PCI buses (see Section 6.1.6).

6.1.2 Bus Protocol

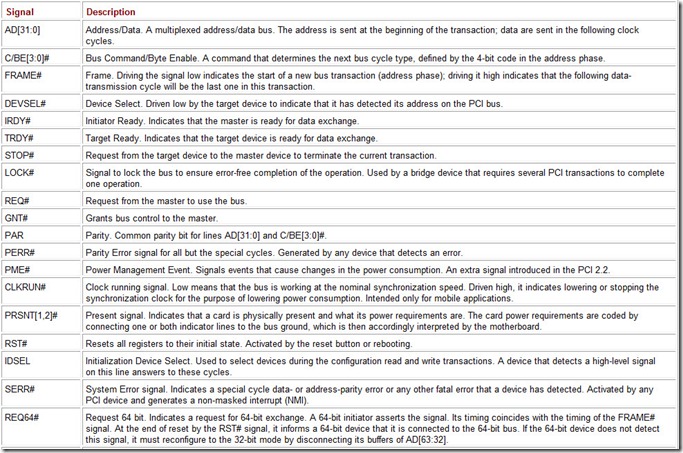

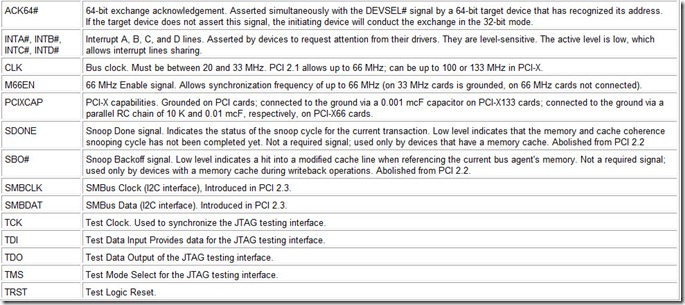

Two devices are involved in every transaction, or bus exchange: the exchange initiator device (or bus master) and a target device (or bus slave). The rules for these devices’ interactions are defined by the PCI bus protocol. A device can monitor the bus transactions without participating in them (i.e., without generating any signals); this mode is called snooping. The Special Cycle is a broadcast transaction; in such a cycle, the initiator does not interact with any particular device using the protocol. The set of bus interface signals and their functions are shown in Table 6.2.

Table 6.2: PCI Bus Signals

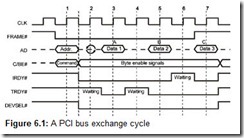

The states of all signal lines are perceived at the positive transition of the CLK signal, and it is precisely these moments that in the further description are meant by “bus cycles” (marked by vertical dotted lines in the drawings). At different points in time, the same signal lines are controlled by different bus devices; for conflict-free handing over of the authority of the bus, some time is needed when no device controls the lines. On the timing diagrams, this event—known as turnaround—is marked by a pair of semicircular arrows. See Fig. 6.1.

At any given moment, the bus can be controlled by only one master device, which has received this right from the arbiter. Each master device has a REQ# signal to request control of the bus and a GNT# signal to acknowledge that bus control has been granted. A device can begin a transaction (i.e., assert the FRAME# signal) only when it has received an active GNT# signal and has waited until the bus is in the idle state. While the device is waiting for the bus to become idle, the arbiter can change its mind and give bus control to another device with higher priority. Deactivating the GNT# signal stops the device from beginning the next transaction and, under certain conditions that are considered later in this chapter, forces it to terminate the transaction in progress. A special unit, which is a part of the bridge that connects the given bus to the computer core, handles the arbitration requests. The priority scheme (whether fixed, cyclic, or combined) is determined by the arbiter’s programming.
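To make the cyclic priority scheme concrete, here is a minimal sketch of a round-robin choice among requesting masters. It is only a model of the idea: real arbiters are implemented in the bridge hardware, and the function name and the list-based representation of the REQ# lines are assumptions.

```python
from typing import List, Optional


def next_grant(requests: List[bool], last_granted: int) -> Optional[int]:
    """Pick the next master to receive GNT# under a cyclic (round-robin) scheme.

    requests[i] is True when master i asserts REQ#; the search starts just
    after the master that was granted the bus most recently.
    """
    count = len(requests)
    for offset in range(1, count + 1):
        candidate = (last_granted + offset) % count
        if requests[candidate]:
            return candidate
    return None  # no master is requesting the bus


print(next_grant([True, False, True], last_granted=0))
```

A fixed-priority arbiter would instead always scan from index 0, so the lowest-numbered requester would win every time.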

Addresses and data are transmitted over common multiplexed AD lines. The four multiplexed lines C/BE[3:0]# encode commands during the address phase and byte enables during the data phase. In write transactions, the C/BE[3:0]# lines enable bytes that are on the AD bus at the same time as their signals; in read transactions, they enable data in the following data phase. At the beginning of a transaction, the master device activates the FRAME# signal, sends the target device address over the AD bus, and sends the information about the transaction type (the command) over the C/BE# lines. The addressed target device responds with a DEVSEL# signal. The master device indicates its readiness to exchange data by the IRDY# signal, which it also may assert before receiving the DEVSEL# signal. When the target device is also ready to exchange data, it asserts the TRDY# signal. Data are sent over the AD bus only when both signals, IRDY# and TRDY#, are asserted. The master and target devices use these signals to coordinate their exchange rates by introducing wait cycles. If both devices asserted their ready signals at the end of the address phase and maintained them until the end of the transfer, then 32 bits of data would be sent in each cycle after the address phase. This would make it possible to reach the maximum exchange efficiency. In read transactions, an extra clock is required for the turnaround after the address phase, during which the initiator releases control of the AD lines. The target device can take over the AD bus only in the next clock. No turnaround is needed in a write transaction, because the data are sent by the initiator.
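The handshake can be modeled clock by clock: a data item moves only on clocks where both IRDY# and TRDY# are asserted. The sketch below counts transfers in such a trace and estimates what fraction of clocks carry data in a burst. It is a simplified model with hypothetical names; idle clocks between transactions and arbitration delays are not counted.

```python
from typing import Sequence


def count_transfers(irdy: Sequence[bool], trdy: Sequence[bool]) -> int:
    """Count clocks on which data move (True means the signal is asserted)."""
    return sum(1 for i, t in zip(irdy, trdy) if i and t)


def burst_efficiency(data_phases: int, wait_clocks: int = 0, is_read: bool = False) -> float:
    """Fraction of clocks in a transaction that carry data.

    One clock is spent on the address phase; a read adds one turnaround clock.
    """
    turnaround = 1 if is_read else 0
    total_clocks = 1 + turnaround + data_phases + wait_clocks
    return data_phases / total_clocks


# The target inserts two wait clocks into a five-clock window.
irdy = [True, True, True, True, True]
trdy = [False, True, True, False, True]
print(count_transfers(irdy, trdy))
print(burst_efficiency(4), burst_efficiency(4, is_read=True))
```

Longer bursts spread the fixed address and turnaround overhead over more data phases, which is why efficiency rises with burst length.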

On the PCI bus, all transactions are of the burst type: Each transaction begins with the address phase, which may be followed by one or more data phases. The number of data phases in a burst is not indicated explicitly, but the master device releases the FRAME# signal before the last data phase, with the IRDY# signal still asserted. In single transactions, the FRAME# signal is active only for the duration of one cycle. If a device does not support burst transactions in the slave mode, then during the first data phase, it must request that the burst transaction be terminated by simultaneously asserting the STOP# and TRDY# signals. In response, the master device will complete the current transaction and will continue the exchange with the following transaction to the next address. After the last data phase, the master device releases the IRDY# signal and the bus goes into the PCI idle state, in which both the FRAME# and the IRDY# signals are inactive.
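Because the burst length is signaled only through FRAME# and IRDY#, an observer can classify each clock from those two signals alone. A small sketch of that classification follows; the function name and the state labels are illustrative.

```python
def bus_state(frame_asserted: bool, irdy_asserted: bool) -> str:
    """Classify a clock from the FRAME# and IRDY# levels (True means asserted)."""
    if frame_asserted:
        # FRAME# active: address phase, or a burst with more data phases to come.
        return "transaction in progress"
    if irdy_asserted:
        # FRAME# released while IRDY# is still asserted marks the last data phase.
        return "final data phase"
    return "idle"


for frame, irdy in [(True, False), (True, True), (False, True), (False, False)]:
    print(frame, irdy, "->", bus_state(frame, irdy))
```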

By asserting the FRAME# signal simultaneously with releasing the IRDY# signal, the initiator can begin the next transaction without going through the bus idle phase. Such fast back-to-back transactions may be directed to the same target device or to different ones. All PCI devices acting as targets support the first type of transactions. Support for the second type, which is optional, is indicated by bit 7 of the status register. An initiator is allowed to use fast back-to-back transactions with different target devices (enabled by bit 9 of the command register, if the initiator is capable of doing so) only if all bus agents are capable of fast transactions. When data exchange is conducted in the PCI-X mode, fast back-to-back transactions are not allowed.

The handshaking protocol makes the exchange reliable, as the master device will always receive information about the target device finishing the transaction. Parity control adds to the reliability and validity of the exchange: the AD[31:0] and C/BE[3:0]# lines are protected by the parity bit on the PAR line; the number of ones on these lines, including the PAR line, must be even. The actual value of PAR appears on the bus with a one-cycle delay with respect to the AD[31:0] and C/BE[3:0]# lines. When a target device detects an error, it asserts the PERR# signal one cycle after the valid parity-bit signal. When the parity is calculated during the data transfer, all bytes are taken into account, including invalid ones (marked by a high level on the corresponding C/BEx# line). The bits’ state, even in invalid data bytes, must remain stable during the data phase.
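The even-parity rule can be written down directly: PAR is chosen so that the total number of ones across the 32 AD lines, the 4 C/BE# lines, and PAR itself is even. A minimal sketch, with hypothetical function names and the line levels passed in as plain integers:

```python
def par_bit(ad: int, cbe: int) -> int:
    """Return the PAR level that makes the count of ones over AD, C/BE#, and PAR even."""
    ones = bin(ad & 0xFFFFFFFF).count("1") + bin(cbe & 0xF).count("1")
    return ones & 1


def parity_ok(ad: int, cbe: int, par: int) -> bool:
    """Check a sampled PAR value against the AD and C/BE# levels of the previous clock."""
    return par_bit(ad, cbe) == (par & 1)


print(par_bit(0x00000003, 0x1))
print(parity_ok(0xFFFFFFFF, 0xF, 0))
```

A receiver that finds `parity_ok` false for a data phase is the one that would report the error through PERR#.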

Each bus transaction must be completed as planned, or terminated with the bus assuming the bus idle state (the FRAME# and IRDY# signals going inactive). Both the master and the slave device may initiate a transaction conclusion.

A master device can conclude a transaction in one of the following ways:

-

Completion is executed when the data exchange ends.

-

A time-out occurs when the master device is deprived of control of the bus (by the GNT# signal being driven high) during a transaction and the time indicated in its Latency Timer has expired. This may happen when the addressed target device turns out to be slower than expected, or the planned transaction is too long. Short transactions (of one or two data phases) complete normally even when the GNT# signal goes high and a time-out is triggered.

-

A master-abort termination takes place when the master device does not receive a response from the target device (DEVSEL#) during the specified length of time.

A transaction may be terminated at the target device’s initiative. The target device can do this by asserting the STOP# signal. Here, three types of termination are possible:

-

Retry. The STOP# signal is asserted while the TRDY# signal is inactive, before the first data phase. This situation arises when the target device is too busy to present the first data within the allowed time period (16 cycles). Retry is an instruction to the master device to execute the same transaction again.

-

Disconnect. The STOP# signal is asserted during or after the first data phase. If the STOP# signal is asserted when the TRDY# signal of the current data phase is active, then these data are transmitted and the transaction is terminated.

If the STOP# signal is asserted when the TRDY# signal is inactive, then the transaction is terminated without transmitting the next phase’s data. Disconnect is executed when a target device cannot send or receive the next portion of the burst’s data in time. The disconnect is a directive to the master device to repeat the transaction but with the modified start address.

-

Target-abort. The STOP# signal is asserted simultaneously with deactivation of the DEVSEL# signal. (In the preceding situations, the DEVSEL# signal was active when the STOP# signal was being asserted.) No data are sent after this termination. A target abort is executed when a target device detects a fatal error or some other conditions (e.g., an unsupported command), because of which it will not be able to service the current request.

Not every target device has to use all three termination types; however, any master device must be capable of terminating a transaction for any of these reasons.
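The three target-initiated terminations differ only in the levels of STOP#, TRDY#, and DEVSEL# and in whether any data phase has completed. The sketch below encodes those rules as described above; the function name, argument names, and returned labels are illustrative.

```python
def classify_target_termination(stop: bool, trdy: bool, devsel: bool,
                                data_phases_done: int) -> str:
    """Classify a target-initiated termination (True means the signal is asserted)."""
    if not stop:
        return "none"
    if not devsel:
        # STOP# asserted together with DEVSEL# being deactivated.
        return "target-abort"
    if data_phases_done == 0 and not trdy:
        # STOP# before any data has been transferred.
        return "retry"
    # STOP# during or after the first data phase.
    return "disconnect with data" if trdy else "disconnect without data"


print(classify_target_termination(True, False, True, 0))
print(classify_target_termination(True, True, True, 3))
```

A master would react to "retry" by repeating the same transaction, and to either disconnect by resuming from the next untransferred address.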

Terminations of the Retry type are used to organize delayed transactions. Delayed transactions are used only by slow target devices and by PCI bridges when transferring transactions to another bus. When terminating a transaction (for the initiator) with a Retry condition, the target device executes the transaction internally. When the initiator repeats this transaction (issues the same command with the same address and the same set of C/BE# signals in the data phase), the target device (or the bridge) will have the result ready (data for a read transaction or execution status for a write transaction), and will promptly return it to the initiator. The target device (or the bridge) must store the results of an executed delayed transaction until they are requested by the initiator. However, due to some abnormal situation, the initiator can “forget” to repeat the transaction. In order to keep the result storage buffer from overflowing, the device has to discard these results. This can be done without detrimental effects only if transactions with prefetchable memory were delayed. The other types of transactions cannot be discarded without the danger of data integrity violation. They can be discarded only if no repeat request is made within 2^15 bus cycles (upon a Discard Timer timeout). Devices can inform their drivers (or the operating system) about this particular situation.

A transaction initiator may request exclusive use of the PCI bus during a whole exchange operation that requires several bus transactions. For example, if the central processor is executing an instruction that modifies the contents of a memory cell in a PCI device, it needs to read the data from the device, modify them in its ALU, and then return the data to the device. In order to prevent other initiators from intruding their transactions into this operation sequence (which is fraught with the danger of data integrity violation), the host bridge executes this operation as locked; i.e., it keeps the LOCK# bus signal asserted during its execution. Regular PCI devices neither use nor generate this signal; only bridges use it to control arbitration.

PCI Bus Commands

PCI commands are defined by the transaction direction and type, as well as the address space to which they pertain. The PCI bus command set includes the following commands:

-

The I/O Read and Write commands are used to access the I/O address space.

-

Memory Read and Write commands are used to perform short, non-burst (as a rule) transactions. Their direct purpose is to access I/O devices that are mapped onto the memory space. For real memory, which allows prefetching, the Memory Read Line, Memory Read Multiple, and Memory Write and Invalidate commands are used.

-

Memory Read Line is employed when reading to the end of a cache line is planned. Separating this type of read allows increased memory exchange efficiency.

-

Memory Read Multiple is used for transactions involving more than one cache line. Using this type of transaction allows the memory controller to prefetch lines, which gives an extra performance increase.

-

Memory Write and Invalidate is used to write an entire cache line; all bytes in all phases must be enabled. This operation saves time by allowing the cache controller to invalidate the “dirty” cache lines corresponding to the written area without writing them back to main memory. The initiator that issues this command must know the cache line size in the given system (it has a special register for this purpose in the configuration area).

-

The Dual Address Cycle (DAC) allows the 32-bit bus to be used to communicate with devices employing 64-bit addressing. In this case, the lower 32 address bits are sent in this cycle simultaneously with this command, after which follows a regular cycle setting the exchange type and carrying the higher 32 address bits. The PCI bus permits 64-bit I/O port addressing. (It is of no use for the x86 machines, but PCI also is used in other platforms.)

-

The Configuration Read and Write commands address the configuration space of the devices. Only aligned double words are used to perform access; bits AD[1:0] are used to identify the cycle type. A special hardware/software mechanism is needed to generate these commands.

-

The Special Cycle command is a broadcast type, which makes it different from all other commands. However, no bus agent responds to it, and the host bridge or another device that starts this cycle always terminates it with a master-abort. It takes 6 bus cycles to complete. The Special Cycle broadcasts messages that any “interested” bus agents can read. The message type is encoded in the contents of the AD[15:0] lines; the data sent in the message may be placed on the AD[31:16] lines. Regular devices ignore the address phase in this cycle, but bridges use the information to control how the message is broadcast. Messages with codes 0000h, 0001h, and 0002h are used to indicate shutdown, processor Halt, or the x86-specific functions pertaining to cache operations and tracing. The codes 0003-FFFFh are reserved. The same hardware/software mechanism that generates configuration cycles may generate the Special Cycle, but with the address having a specific meaning.

-

The Interrupt Acknowledge command reads the interrupt vector. In protocol terms, it looks like a read command addressed to the interrupt controller (PIC or APIC). This command does not send any useful information over the AD bus in the address phase (C/BE[3:0]# sets the vector size), but its initiator, the host bridge, must ensure that the signals are stable and the parity is correct. In the PC, an 8-bit vector in byte 0 is sent when the interrupt controller is ready (upon the TRDY# signal). Interrupts are acknowledged in one cycle; the bridge suppresses the first null cycle that x86 processors perform for backward-compatibility reasons.
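As an illustration of the Special Cycle message layout described in the list above, the sketch below splits a 32-bit AD value into the message code (AD[15:0]) and the optional message data (AD[31:16]). The three defined codes are taken from the text; the function name and the label strings are hypothetical.

```python
from typing import Tuple

# Message codes listed in the text; every other code is reserved.
SPECIAL_CYCLE_MESSAGES = {
    0x0000: "shutdown",
    0x0001: "halt",
    0x0002: "x86-specific",
}


def decode_special_cycle(ad: int) -> Tuple[str, int]:
    """Return (message name, message data) for the AD value of a Special Cycle."""
    code = ad & 0xFFFF
    data = (ad >> 16) & 0xFFFF
    return SPECIAL_CYCLE_MESSAGES.get(code, "reserved"), data


print(decode_special_cycle(0x00000001))
print(decode_special_cycle(0x12340002))
```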

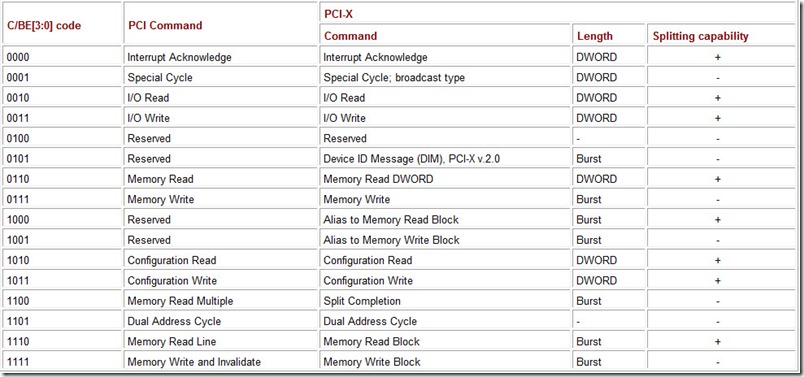

Commands are coded by the C/BE# bits in the address phase (see Table 6.3); PCI-X specific commands are considered in the following sections.

Table 6.3: PCI and PCI-X Bus Command Decoding

Each bus command contains the address of the data that pertain to the first data phase of the burst. The address for every subsequent data phase in the burst is incremented by 4 (the next double word), or by 8 (for 64-bit transfers), but the order may be different in memory-reference commands. The bytes of the AD bus that carry meaningful information are selected in data phases by the C/BE[3:0]# signals. Within a burst, these signals may arbitrarily change their states in different phases. Enabled bytes need not be adjacent on the data bus, and data phases in which not a single byte is enabled are possible. Unlike the ISA bus, the PCI bus cannot change its width dynamically: All devices must connect to the bus in the 32- or 64-bit mode. If a PCI device uses a functional circuit of a different width (e.g., an 8255 integrated circuit, which has an 8-bit data bus and four registers), then conversion circuitry must be employed that maps all registers onto the 32-bit AD bus. The 16-bit connection capability appeared only in the second version of PCI-X.

Addressing is different for each of the three spaces—memory, I/O ports, and configuration registers; the address is ignored in the special cycles.

Memory Addressing

A physical memory address is sent over the PCI bus; in x86 (and other) processors, it is derived from the logical address by page-table translation performed by the MMU. In the memory-access commands, the address aligned at the double-word boundary is transmitted over the AD[31:2] lines; the AD[1:0] lines set the burst addressing mode:

-

00: Linear incrementing. The address of the next phase is obtained by incrementing the preceding address by the bus width in bytes: 4 bytes for a 32-bit bus and 8 bytes for a 64-bit bus.

-

10: Cacheline Wrap mode. In this mode, memory access addresses wrap around the end of the cacheline. In a transaction, each subsequent phase address is incremented until its value reaches the end of the cacheline, after which it wraps around to the beginning of this line and increments up to the value preceding the starting address. If a transaction is longer than the cacheline, then it continues in the next line from the same offset at which it started. Thus, with a 16-byte line and a 32-bit bus, the subsequent data phases of a transaction that began at the address xxxxxx08h will have the addresses xxxxxx0Ch, xxxxxx00h, xxxxxx04h; then in the next cacheline: xxxxxx18h, xxxxxx1Ch, xxxxxx10h, xxxxxx14h. The length of a cacheline is set in the configuration space of the device. If a device does not have the cacheline size register, then it must terminate the transaction after the first data phase, because the order in which the addresses alternate is indeterminate.

-

01 and 11: These combinations are reserved. They may be used as a Disconnect direction after the first data phase.
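The Cacheline Wrap ordering can be reproduced with a few lines of arithmetic. The following is a minimal sketch, assuming the cacheline size is a multiple of the bus width; the function name and parameters are hypothetical and chosen only for illustration.

```python
def wrap_burst_addresses(start, cacheline_bytes, bus_bytes, phases):
    """Return the address of each data phase of a Cacheline Wrap burst."""
    line_base = start - (start % cacheline_bytes)  # first byte of the starting line
    offset = start % cacheline_bytes               # offset at which the burst began
    per_line = cacheline_bytes // bus_bytes        # data phases needed to cover one line
    addresses = []
    for i in range(phases):
        line = i // per_line                       # 0 = starting line, 1 = next line, ...
        step = i % per_line                        # position within that line
        within = (offset + step * bus_bytes) % cacheline_bytes  # wrap inside the line
        addresses.append(line_base + line * cacheline_bytes + within)
    return addresses
```

With a 16-byte line, a 32-bit bus, and a start address ending in 08h, the function yields the low address bytes 08h, 0Ch, 00h, 04h, 18h, 1Ch, 10h, 14h, which is the sequence given in the text.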

If addresses over 4 GB need to be accessed, then a dual address cycle is used: It carries the lower 32 bits of the 64-bit address, and the higher 32 bits are sent together with the command that follows. In regular commands, bits [63:32] are assumed to be zero.
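As a rough illustration of when a dual address cycle is needed, the sketch below splits a memory address into the values driven on AD[31:0] in each address phase. The function name is hypothetical, and the burst-mode meaning of AD[1:0] is ignored here for simplicity.

```python
def address_phases(address):
    """Return the 32-bit values driven on AD[31:0], one per address phase."""
    if not 0 <= address < (1 << 64):
        raise ValueError("address must fit in 64 bits")
    low = address & 0xFFFFFFFF
    high = address >> 32
    if high == 0:
        return [low]       # single address phase; bits [63:32] are implied zero
    return [low, high]     # DAC phase carries the low half, the next phase the high half
```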

A full memory address is sent in PCI-X using all AD[31:0] lines. In burst transactions, the address determines the exact location of the burst’s starting byte, and the addresses are assumed to be incremented in a linear ascending order. All bytes are involved in burst transactions, starting from the specified starting byte and ending with the last as given in the byte counter. Individual bytes cannot be disabled in a PCI-X burst transaction as they can be in PCI. In single DWORD transactions, the AD[1:0] address bits determine the bytes that can be enabled by the C/BE[3:0]# signals. Thus, if:

-

AD[1:0] = 00 then C/BE[3:0] = xxxx

-

AD[1:0] = 01 then C/BE[3:0] = xxx1

-

AD[1:0] = 10 then C/BE[3:0] = xx11

-

AD[1:0] = 11 then C/BE[3:0] = x111 (Only byte 3 is sent, or no bytes are enabled.)

I/O Addressing

In the I/O port-access addressing commands, all AD[31:0] lines are used (decoded) to address any byte. The AD[31:2] address bits point to the address of the double-word data being transmitted. The AD[1:0] address bits define the bytes that can be enabled by the C/BE[3:0]# signals. The rules for the PCI transactions are somewhat different here: When at least one byte is sent, the byte pointed to by the address also must be enabled. Thus, when:

-

AD[1:0] = 01 then C/BE[3:0]# = xx01 or 1111

-

AD[1:0] = 10 then C/BE[3:0]# = x011 or 1111

-

AD[1:0] = 11 then C/BE[3:0]# = 0111 (only byte 3 is sent) or C/BE[3:0]# = 1111 (no bytes are enabled)
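The byte-enable rule for PCI I/O transactions can be expressed as a small validity check. This is a sketch only: the function name is hypothetical, and the case AD[1:0] = 00, which the list above does not show, is derived here from the stated rule (the addressed byte must be enabled) as an assumption.

```python
def io_byte_enables_valid(ad10, cbe_n):
    """Check C/BE[3:0]# (active low, given as a 4-bit value) against AD[1:0]."""
    if not 0 <= ad10 <= 3 or not 0 <= cbe_n <= 0xF:
        raise ValueError("ad10 must be 0..3 and cbe_n a 4-bit value")
    if cbe_n == 0b1111:
        return True                      # no bytes enabled is always permitted
    enabled = ~cbe_n & 0xF               # convert active-low lines to an enable mask
    if not (enabled >> ad10) & 1:
        return False                     # the addressed byte itself must be enabled
    below = (1 << ad10) - 1              # mask of the bytes below the addressed one
    return (enabled & below) == 0        # those lower bytes must stay disabled
```

For example, with AD[1:0] = 01 the value 1101 (only byte 1 enabled) passes, while 1110 (only byte 0 enabled) is rejected.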

These cycles formally can also come in bursts, although this capability is seldom used in practice. All 32 address bits are available for I/O port addressing on the PCI bus, but x86 processors can use only the lower 16 bits.

The same interrelations between the C/BE[3:0]# and address signals for single DWORD memory transactions described in the previous paragraph extend to PCI-X I/O transactions. These transactions are always single DWORD.

Addressing Configuration Registers and Special Cycles

The configuration write/read commands have two address formats, each of which is used for specific situations. To access registers of a device located on the given bus, Type 0 configuration transactions are employed (Fig. 6.2, a). The device (an expansion card) is selected by an individual IDSEL signal generated by the bus’ bridge based on the number of the device. The selected device sees the function number Fun in bits AD[10:8] and the configuration register number Reg in bits AD[7:2]; the value 00 in bits AD[1:0] is the Type 0 indicator. The AD[31:11] lines are used as the source of the IDSEL signals for the devices of the given bus. The bus specification defines the AD11 line as the IDSEL line for device 0 and the AD12 line as the IDSEL line for device 1; the sequence continues in this order, with the AD31 line being the IDSEL line for device 20. The bridge specification features a table in which only lines AD16 (device 0) through AD31 (device 15) are used.

PCI devices combined with a bridge (sharing the same microchip) can also use larger numbers, for which there are not enough AD lines. In PCI-X, the undecoded device number Dev is sent over the AD[15:11] lines: Devices use it as a part of their identifier in the transaction attributes. For devices operating in Mode 1, the AD[31:16] lines are used for IDSEL; only AD[23:16] lines are used in Mode 2, with seven being the largest device number. This allows the function’s configuration space to be expanded to four kilobytes: The AD[27:24] lines are used as the higher bits of the configuration register number UReg (Fig. 6.2, c).

Type 1 configuration transactions are used to access devices on the other buses (Fig. 6.2, d). Here, the bus number Bus of the bus, on which the device being sought is located, is determined by the AD[23:16] bits; the device number Dev is determined by the AD[15:11] bits; bits AD[10:8] contain the function number Fun; bits AD[7:2] carry the register number Reg; the value 01 in bits AD[1:0] is the Type 1 indicator. In PCI-X Mode 2, the higher bits of the register number UReg are sent over the AD[27:24] lines.

Because bits AD[1:0] are used only for identifying the transaction type, the configuration registers are accessed only by double words. The distinctions between the two configuration transaction types are used to construct the hierarchical PCI configuration system. Unlike transactions conducted with memory and I/O addresses, which travel from the initiator to the target device no matter how they are mutually located, the configuration transactions propagate over the bus hierarchy only in one direction: downward, from the host (central processor) through the main bus to the subordinate buses. Consequently, only the host can configure all PCI devices (including bridges).

No information is sent over the AD bus in the address phase of the PCI broadcast command, called the special cycle. Any PCI bus agent can call a special cycle on any specifically indicated bus by using a Type 1 configuration write transaction and indicating the bus number in bits AD[23:16]. The device and function number fields (bits AD[15:8]) must be set to all ones, while the register number field must be zeroed out. This transaction transits the buses independently of the mutual locations of the initiator and the target bus, and only the very last bridge converts it into the actual special cycle.
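The address formats described in this section can be sketched as bit-field packing. The helper names below are hypothetical. The `idsel_base` parameter is an illustrative way to choose between the bus-specification mapping (AD11 for device 0) and the bridge-specification table (AD16 for device 0), and the PCI-X UReg bits are left out.

```python
def type0_address(device, function, register, idsel_base=11):
    """Type 0: one-hot IDSEL on AD[31:idsel_base], Fun in AD[10:8], Reg in AD[7:2]."""
    if not 0 <= device <= 31 - idsel_base:
        raise ValueError("no AD line available as IDSEL for this device number")
    if not 0 <= function <= 7 or not 0 <= register <= 63:
        raise ValueError("function must be 0..7 and register 0..63")
    return (1 << (idsel_base + device)) | (function << 8) | (register << 2) | 0b00


def type1_address(bus, device, function, register):
    """Type 1: Bus in AD[23:16], Dev in AD[15:11], Fun in AD[10:8], Reg in AD[7:2]."""
    if not (0 <= bus <= 255 and 0 <= device <= 31
            and 0 <= function <= 7 and 0 <= register <= 63):
        raise ValueError("field out of range")
    return (bus << 16) | (device << 11) | (function << 8) | (register << 2) | 0b01


def special_cycle_request(bus):
    """Type 1 write that the last bridge converts into a special cycle on 'bus'."""
    return type1_address(bus, device=0x1F, function=0x7, register=0)
```

Here `register` is the double-word register number, not a byte offset, which matches the fact that configuration registers are accessed only by double words.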

PCI-X Protocol Modification

In many respects, the PCI-X bus protocol is the same as described above: the same latching at the CLK transition, the same control signal functions. The changes in the protocol are aimed at raising the efficiency of bus cycle usage. For this purpose, additions were made to the protocol that allow devices to foresee upcoming events and to plan their actions accordingly.

In regular PCI, all transactions begin in the same way (with the address phase), as burst transactions whose length is unknown in advance. In practice, the I/O transactions always have only one data phase; long bursts are efficient (and are used) only to access memory. In PCI-X, there are two transaction types in terms of length:

-

Burst: all memory-access commands except Memory Read DWORD.

-

Single double word (DWORD): all other commands.

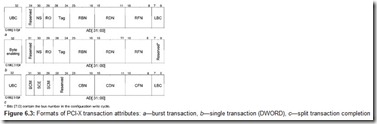

Each transaction has a new attribute phase after the address phase. In this phase, the initiator presents its identifier (RBN: bus number, RDN: device number, and RFN: function number), a 5-bit tag, a 12-bit byte counter (only for burst transactions; UBC: higher bits, LBC: lower bits), and additional characteristics (the RO and NS bits) of the memory area to which the given transaction pertains. The attributes are sent over the AD[31:0] and BE[3:0]# bus lines (Fig. 6.3). The initiator identifier together with the tag defines the Sequence: one or more transactions that provide a logical data transfer scheduled by the initiator. By using a 5-bit tag, each initiator can simultaneously execute up to 32 logical transfers (a tag can be reused for another logical transfer only after the previous transfer using the same tag value has been completed). A logical transfer (sequence) can be up to 4,096 bytes long (byte counter value 00 … 01 corresponds to 1 byte, value 11 … 11 corresponds to 4,095 bytes, and value 00 … 00 corresponds to 4,096 bytes); the number of bytes that must be transferred in the given sequence is indicated in the attributes for each transaction. The number of bytes to be transmitted in the given transaction is not determined in advance (either the initiator or the target device can stop a transaction). However, in order to raise efficiency, stringent requirements are applied to burst transactions.
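The byte counter encoding, in which an all-zero field stands for 4,096 bytes, can be sketched as follows. The function names are hypothetical, and the split of the field into UBC and LBC is not modeled.

```python
def encode_byte_count(nbytes):
    """Map a sequence length of 1..4096 bytes onto the 12-bit byte counter."""
    if not 1 <= nbytes <= 4096:
        raise ValueError("a sequence carries between 1 and 4096 bytes")
    return nbytes & 0xFFF          # 4096 wraps to the all-zero field value


def decode_byte_count(field):
    """Recover the sequence length from the 12-bit byte counter."""
    if not 0 <= field <= 0xFFF:
        raise ValueError("byte counter is a 12-bit field")
    return field if field else 4096
```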

If a transaction has more than one data phase, it can terminate either after all the bytes declared (in the byte counter in the attributes) have been transmitted or only on the cacheline boundaries (on the 128-byte memory address boundaries). If the transaction participants are not ready to meet these requirements, then one of them must stop the transaction after only the first data phase. Only the target device still has the right to terminate a transaction in an emergency at any moment; the initiator is strictly responsible for its actions.

The characteristics of the memory to which a given transaction pertains make it possible to select the optimal method of accessing it when processing the transaction. The characteristics are determined by the device that requests the particular sequence. How the device learns the memory properties is the concern of its driver. The memory characteristic attributes pertain only to burst memory-access transactions (but not to MSI messages):

-

The Relaxed Ordering (RO) flag means that the execution order of individual write or read transactions can be changed.

-

The No Snoop (NS) flag means that the memory area, to which the given transaction pertains, is not cached anywhere.

In PCI-X, Delayed Transactions are replaced by Split Transactions. The target device can complete any transaction, with the exception of memory write transactions, either immediately (using the regular PCI method) or using the split transaction protocol. In the latter case, the target device issues a Split Response signal, executes the command internally, and afterwards initiates its own transaction (a Split Completion command) to send the data or inform the initiator of the completion of the initial (split) transaction. The target device must split the transaction if it cannot answer it before the initial latency period expires. The device that initiates a split transaction is called a Requester.

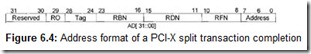

The device that completes a split transaction is called a Completer. To complete the transaction, the completer must request bus control from the arbiter; the requester will play the role of the target device in the completion phase. Even a device that is formally not a bus master (as indicated in its configuration space registers) can complete a transaction by the split method. A Split Completion transaction looks a lot like a burst write transaction, but differs from it in the addressing phase: Instead of the full memory or I/O address, the identifier of the sequence (with the requester’s bus, device, and function numbers), to which this completion pertains, and only the lower six address bits are sent over the AD bus (Fig. 6.4). The completer obtains this identifier from the attributes of the transaction that it splits.

Using this identifier (the number of the requester’s bus), the bridges convey the completion transaction to the requesting device. The completer’s identifier (CBN: bus number, CDN: device number, and CFN: function number; see Fig. 6.3, c) is sent in the attribute phase. The requester must recognize its sequence identifier and respond to the transaction in the regular way (immediately). The sequence may be processed in a series of completion transactions, until the byte counter is exhausted (or terminated by a time-out). The requester itself works out the starting address to which each of the completion transactions belongs (it knows what it asked for and how many bytes have already arrived). A completion transaction can carry either the requested read data or a Split Completion Message.

The requester must always be ready to receive the data of the sequence that it started; moreover, the data of different sequences may arrive in random order. The completer can generate completion transactions for several sequences also in random order. Within the limits of one sequence, the completions must, naturally, be arranged by addresses (which are not sent). The attributes of a completion transaction contain the bus, device, and function numbers and a byte counter. In addition, they contain three flags:

-

Byte Count Modified (BCM): indicates that there will be fewer data bytes sent than the requester asked for (sent with the completion data).

-

Split Completion Error (SCE): indicates a completion error; set when a completion message is sent as an early error indicator (before the message itself has been decoded).

-

Split Completion Message (SCM): message indicator (distinguishes the message from data).

PCI-X 2.0 Data Transfer Distinctions

In addition to the above-described protocol changes, a new operating mode—Mode 2—was introduced in PCI-X 2.0. The new mode allows faster memory block writes and uses ECC control, and can be used only with the low (1.5 V) power supply. It has the following features:

-

The time for address decoding by the target device (the delay in its DEVSEL# response to the command directed to it) has been increased by 1 clock in all transactions.

This extra clock is needed for the ECC control to ascertain the validity of the address and command.

-

In Memory Write Block transactions, data are transferred at two or four times the clock frequency. In these transactions, the BEx# signals are used for strobing by the data source (they are not used for their usual purpose, because it is assumed that all bytes are enabled). Each data transfer (64, 32, or 16 bits) is strobed by the BEx# signals. The BE[1:0]# and BE[3:2]# line pairs provide differential strobe signals for the AD[15:0] and AD[31:16] data lines. There can be two or four data subphases in one bus clock, which at the CLK frequency of 133 MHz gives the PCI-X266 and PCI-X533 modes. Because all control signals are synchronized by the common CLK signal, the transfer granularity becomes two or four data subphases. For a 32-bit bus, this means that during transactions, data can be transferred (as well as transfers halted or suspended) in multiples of 8 or 16 bytes.
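The granularity figures follow directly from the bus width and the number of data subphases per clock. The sketch below is plain arithmetic with hypothetical function names; the peak rate is a nominal figure computed from a rounded 133 MHz clock and ignores all protocol overhead.

```python
def mode2_granularity_bytes(bus_width_bits, subphases_per_clock):
    """Smallest unit in which a Mode 2 block write can be transferred or stopped."""
    if subphases_per_clock not in (2, 4):
        raise ValueError("Mode 2 uses two or four data subphases per clock")
    return (bus_width_bits // 8) * subphases_per_clock


def nominal_peak_mbytes_per_s(clk_mhz, bus_width_bits, subphases_per_clock):
    """Nominal peak rate: bytes moved per clock multiplied by the clock rate."""
    return clk_mhz * mode2_granularity_bytes(bus_width_bits, subphases_per_clock)
```

For a 32-bit bus, the first function returns 8 and 16 bytes for two and four subphases, as stated in the text.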

In the 64-bit version of the bus, the AD[63:32] lines are only used in data phases; only the 32-bit bus is used for the address (even 64-bit) and for the attributes.

Devices operating in Mode 2 have the option of using the 16-bit bus. In this case, the address and attribute phases take 2 clocks each, while the data phases always come in pairs (providing regular granularity). On the address/data bus, the AD[16:31] lines carry bits [0:15] in the first phase of the pair and bits [16:31] in the second phase. Likewise, lines C/BE[2:3]# carry the C/BE[0:1]# information in the first phase and the C/BE[2:3]# information in the second phase. Lines ECC[2:5] are used for ECC control: Bits ECC[0,1,6] and the special E16 control bit are sent over these lines in the first phase, and bits ECC[2:5] in the second. The 16-bit bus is intended only for embedded applications (slots and expansion cards are not provided for).

Message Exchange Between Devices (DIM Command)

The ability to send information (messages) to a device addressing it using the identifier (bus, device, and function numbers) was introduced in PCI-X 2.0. The memory and I/O addressing spaces are not used to address and route these messages, which can be exchanged between any bus devices, including the host bridge. The messages are sent in sequences, in which Device ID Message (DIM) commands are used. This command has specific addresses and attributes. In the address phase (Fig. 6.5, a), the identifier of the message receiver (completer) is sent: the numbers of its bus, device, and function (CBN, CDN, and CFN, respectively). The Route Type (RT) bit indicates the routing type of the message: 0—explicit addressing using the identifier mentioned above, 1—implicit addressing to the host bridge (the identifier is not used in this case). The Silent Drop (SD) bit sets the error handling method when processing the given transaction: 0—regular (as for a memory write), 1—some types of errors are ignored (but not the parity or ECC errors). The Message Class field sets the message class, according to which the lower address byte is interpreted. A transaction can also use a two-address cycle. In this case, the DAC command code is sent over lines C/BE[3:0]# in the first address phase; the contents of bits AD[31:00] correspond to Fig. 6.5, a. The DIM command code is sent over the C/BE[3:0]# lines in the second address phase; bits AD[31:00] are interpreted depending on the message class. Having decoded the DIM command, a device that supports message exchange checks whether the receiver identifier field matches its own.

The message source identifier (RBN, RDN, and RFN), message tag (Tag), the 12-bit byte counter (UBC and LBC), and additional attribute bits are sent in the attribute phase (Fig. 6.5, b). The Initial Request (IR) bit is the start-of-message indicator; the message itself can be broken into parts by the initiator, receiver, or the intermediary bridges (the bit is set to zero in all the following parts). The Relaxed Ordering (RO) bit indicates that the given message can be delivered in any order relative to the other messages and memory writes that are propagated in the same direction (the order in which the fragments of the given message are delivered is always preserved).

The body of the message, which is sent in the data phase, can be up to 4,096 bytes long (this limit is due to the 12-bit byte counter). The contents of the body are determined by the message class; class 0 is used at the manufacturer’s discretion.

Bridges transfer explicit routing messages using the bus number of the receiver. Problems with the transfer may only arise on the host bridges: If there is more than one host bridge, it may be very difficult to link them architecturally (using memory controller buses, for example). It is desirable to have the capability to transfer messages from one bus to another using host bridges (it is simpler than transferring transactions of all types), but it is not mandatory. If this method is supported, the user enjoys more freedom (the entire bus topology does not have to be considered when placing devices). Implicit routing messages are sent only in the direction of the host.

It is not mandatory for PCI-X devices to support DIM, but PCI-X Mode 2 devices are required to support it. If a DIM message is addressed to a device located on a bus operating in the standard PCI mode (or the path to it goes through such a bus), the bridge either discards the message (if SD=1) or aborts the transaction (Target Abort, if SD=0).

Boundaries of Address Ranges and Transactions

The Base Address Registers (BAR) in the configuration space header describe the memory and I/O ranges taken by a device (or, more exactly, by a function). It is assumed that the range length is a power of two, 2^n (n = 0, 1, 2…), and that the range is naturally aligned. In PCI, memory ranges are allocated in units of 2^n paragraphs (a paragraph is 16 bytes; i.e., the minimal range size is 16 bytes). I/O ranges are allocated in units of 2^n double words. PCI-to-PCI bridges have maps of the memory addresses with a granularity of 1 MB; maps of I/O addresses have a granularity of 4 KB.
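The two conditions on a range (power-of-two length and natural alignment) are easy to check in code. The following is a minimal sketch with hypothetical names; it does not model how a BAR is actually probed or programmed.

```python
MEM_MIN_BYTES = 16   # one paragraph, the smallest PCI memory range
IO_MIN_BYTES = 4     # one double word, the smallest PCI I/O range


def is_valid_range(base, size, min_size):
    """True if size is a power of two of at least min_size and base is aligned to size."""
    power_of_two = size >= min_size and (size & (size - 1)) == 0
    return power_of_two and base % size == 0
```

For instance, a 4 KB memory range at 0x1000 is valid, while the same range at 0x1800 is not naturally aligned.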

In PCI, a burst transaction can be interrupted at the boundary of any double word (a quadruple word in 64-bit transactions). In PCI-X, in order to optimize memory accesses, burst transactions can only be interrupted at a special point called the Allowable Disconnect Boundary (ADB). ADB points are located at intervals of 128 bytes: This is a whole number (1, 2, 4, or 8) of cache lines in modern processors. Of course, this limitation applies only to the transaction boundaries inside a sequence. If a sequence has been planned to end somewhere other than on an ADB, then its last transaction will not end on a boundary either. However, this type of situation is avoided by designing data structures that can be properly aligned (sometimes even at the expense of padding).

The term ADB Delimited Quantum (ADQ) is associated with the address boundaries; it denotes the part of a transaction or buffer memory (in bridges and devices) that lies between adjacent allowable disconnect boundaries. For example, a transaction crossing one allowable disconnect boundary consists of two data ADQs and occupies two ADQ buffers in the bridge.
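The relation between a transfer and the ADQs it touches can be illustrated with the sketch below, assuming the 128-byte ADB spacing given above. The function names are hypothetical.

```python
ADB_SPACING = 128  # bytes between allowable disconnect boundaries


def adq_count(start, length):
    """Number of ADQs touched by 'length' bytes beginning at address 'start'."""
    if length <= 0:
        raise ValueError("length must be positive")
    first = start // ADB_SPACING
    last = (start + length - 1) // ADB_SPACING
    return last - first + 1


def split_into_adqs(start, length):
    """Split a transfer into (address, nbytes) pieces that end on an ADB or at the end."""
    pieces = []
    addr, remaining = start, length
    while remaining > 0:
        to_boundary = ADB_SPACING - (addr % ADB_SPACING)  # bytes left before the next ADB
        nbytes = min(to_boundary, remaining)
        pieces.append((addr, nbytes))
        addr += nbytes
        remaining -= nbytes
    return pieces
```

A 32-byte transfer starting at 70h crosses one boundary, so `adq_count` reports two ADQs, in line with the example in the text.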

In accordance with the allowable transaction boundaries, the memory areas that PCI-X devices occupy also must begin and end at ADBs: The memory is allocated in ADQ quanta. Consequently, the minimum memory area allocated to a PCI-X device cannot be less than 128 bytes and, taking into account the area description rules, its size is allowed to be 128 × 2^n bytes.

Transaction Execution Time, Timers, and Buffers

The PCI protocol regulates the time (number of clocks) allowed for different phases of a transaction. Bus operation is controlled by several timers, which do not allow bus cycles to be wasted and make it possible to plan bandwidth distribution.

Each target device must respond to the transaction addressed to it sufficiently rapidly. The reply from the addressed target device (the DEVSEL# signal) must come within 1-3 clocks after the address phase, depending on how fast the particular device is: 1 clock—fast, 2 clocks—medium, 3 clocks—slow decoding. If there is no answer, the next clock is allocated to transaction intercepting by a subtractive address decoding bridge.

Target initial latency (i.e., the delay in the appearance of the TRDY# signal with respect to the FRAME# signal) must not exceed 16 bus cycles. If, because of its technical characteristics, a device sometimes cannot complete its work during this interval, it must assert the STOP# signal, terminating the transaction. This makes the master device repeat the transaction, and the chances are greater that the new attempt will succeed. If a device is slow and often cannot complete a transaction within 16 bus cycles, then it must execute Delayed Transactions. Target devices are equipped with an Incremental Latency Mechanism that does not allow the interval between adjacent data phases in a burst (target subsequent latency) to exceed 8 bus cycles. If a target device cannot maintain this rate, it must terminate the transaction. It is desirable that a device signal that it is falling behind as soon as possible, without waiting out the 16- or 8-cycle limits: This saves bus bandwidth.

The initiator must also not slow down the data flow. The permissible delay from the beginning of the FRAME# signal to the IRDY# signal (master data latency) and between data phases must not exceed 8 cycles. If a target device periodically rejects a memory write operation and requests a repeat (as may happen when writing to video memory, for example), then there is a time limit for the operation to be completed. The maximum complete time timer has a threshold of 10 μsec (334 cycles at 33 MHz or 668 cycles at 66 MHz), during which the initiator must have an opportunity to push through at least one data phase. The timer begins to count from the moment the memory write operation repeat is requested, and is reset when a subsequent memory write transaction other than the requested repeat is completed. Devices that are not capable of complying with the limits on the maximum memory write time must provide the driver with a means of determining in which states sufficiently fast memory write operations are not possible. The driver, naturally, must take such states into consideration and not burden the bus and the device with fruitless write attempts.

Each master device capable of forming a burst more than two data phases long must have its own programmable Latency Timer, which regulates its operation when it loses bus control. This timer effectively limits the length of a burst transaction and, consequently, the portion of the bus bandwidth allotted to this device. The timer starts every time the device asserts the FRAME# signal, and counts bus cycles up to the value specified in the configuration register of the same name. The actions of a master device when the timer reaches the threshold depend on the command type and the states of the FRAME# and GNT# signals at the moment the timer expires:

-

If the master device deasserts the FRAME# signal before the timer expires, the transaction terminates normally.

-

If the GNT# signal is deasserted and the command currently being executed is not a Memory Write and Invalidate command, then the initiator must curtail the transaction by deasserting the FRAME# signal. It is allowed to complete the current data phase and execute one more.

-

If the GNT# signal is deasserted and a Memory Write and Invalidate command is being executed, then the initiator must complete the transaction in one of two ways. If the double word currently being transmitted is not the last in the cacheline, the transaction is terminated at the end of the current cacheline. If the double word is the last in the current cacheline, the transaction is terminated at the end of the next cacheline.
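The rules above amount to a small decision table, sketched below with hypothetical names. The list does not say what happens when the timer expires while GNT# is still asserted; the sketch assumes the master may simply continue in that case, which is an assumption rather than something stated in the text.

```python
def action_on_timer_expiry(frame_asserted, gnt_asserted, is_mwi, last_dword_in_line=False):
    """Return what a master does when its Latency Timer reaches the threshold."""
    if not frame_asserted:
        return "terminate normally"               # FRAME# was released before expiry
    if gnt_asserted:
        return "may continue"                     # assumption: bus grant not withdrawn
    if not is_mwi:
        return "deassert FRAME#, finish current data phase plus one more"
    if last_dword_in_line:
        return "terminate at end of next cacheline"
    return "terminate at end of current cacheline"
```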

Arbitration latency is defined as the number of cycles that lapse from the time the initiator issues a bus-control request by the REQ# signal to the time it is granted this right by the GNT# signal. This latency depends on the activity of the other initiators, operating speeds of the other devices (the fewer wait cycles they introduce, the better), and how fast the arbiter itself is. Depending on the command being executed and the state of the signals, a master device must either curtail the transaction or continue it to the planned completion.

When master devices are configured, they declare their resource requirements, stating the maximum permissible bus access grant delay (Max_Lat) and the minimum time they must have control over the bus (Min_GNT). These requirements are functions of how fast a device is and how it has been designed. However, there is no guarantee that these requirements will actually be satisfied (the arbitration strategy is supposed to be determined based on them).

The arbitration latency is defined as the time that elapses from the moment the master’s REQ# is asserted to the moment it receives a GNT# signal and the bus goes into the Idle state (only from that moment can the specific device begin a transaction). The total latency depends on how many master devices there are on the bus, how active they are, and on the values (in bus clocks) of their latency timers. The greater these values are, the more time other devices have to wait to be given control over the bus when it is considerably loaded.

The bus allows a device to lower its power consumption at the price of decreased performance, by using address/data stepping for the AD[31:0] and PAR lines:

-

In continuous stepping, signals begin to be driven by low-current drivers several cycles before the valid-data qualifying signal is asserted (FRAME# in the address phase; IRDY# or TRDY# in the data phase). During these cycles, the signals “crawl” to the required value using lower current.

-

In discrete stepping, the signal drivers use regular currents, but instead of switching all at the same time they switch in groups (e.g., by bytes), with only one group switching during one cycle. This reduces current surges, as fewer drivers are switched at the same time.

A device does not necessarily use these capabilities (see the description of the command register’s bit 7 functions), but it must “understand” these cycles. If a device delays the FRAME# signal, it risks losing the right to access the bus if the arbiter receives a request from a device that has higher priority. For this reason, stepping was abolished in PCI 2.3 for all transactions except accesses to device configuration areas (Type 0 configuration cycles). In these cycles, a device might not have enough time to recognize, in the very first transaction cycle, the IDSEL selection signal that arrives through a resistor from the corresponding ADx line.

In PCI-X, the requirements on the number of cycles are more stringent:

-

The initiator has no right to generate wait cycles. In write transactions, the initiator places the initial data (DATA0) on the bus two clocks after the attribute phase; if the transaction is of the burst type, the next data (DATA1) are placed two clocks after the device answers with a DEVSEL# signal. If the target device does not indicate that it is ready (by the TRDY# signal), the initiator must alternate DATA0 and DATA1 data in each clock, until the target device gives a ready signal (it is allowed to generate only an even number of wait cycles).

-

The target device can introduce wait cycles only for the initial data phase of the transaction; no wait is allowed in the following data phases.

To take full advantage of the bus’s capabilities, devices must have buffers to accumulate data for burst transmissions. It is recommended that devices with transmission speeds of up to 5 MBps have buffers to hold at least 4 double words. For devices with higher speeds, buffers that hold 32 double words are recommended. For memory exchange operations, transactions that operate on a whole cache line are the most effective, which is also taken into account when the buffer size is determined. However, increasing the buffer size may cause difficulties when processing errors, and may also increase delays in delivering data: Until a device fills the buffer to the predetermined level, it will not begin sending the data, and the devices for which the data are intended will be kept waiting.

The specification gives an example of a Fast Ethernet card design (transmission speed 10 MBps) that has a 64-byte buffer divided into two halves for each transmission direction (a ping-pong buffer). While the adapter is filling one half of the buffer with an incoming frame, it is outputting the accumulated contents of the other half into memory, after which the two halves swap roles. It takes 8 data phases (approximately 0.25 μsec at 33 MHz) to output each half into memory, which corresponds to the MIN_GNT=1 setting. At an incoming data rate of 10 MBps, it takes 3.2 μsec to fill each half, which corresponds to the MAX_LAT=12 setting (in the MIN_GNT and MAX_LAT registers, time is set in 0.25 μsec intervals).
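The arithmetic behind these two settings can be checked with a short sketch. The function names, the 30 nsec clock period (the rounded value used in this chapter), and the rounding directions (the burst time rounded up, the fill time rounded down) are assumptions made for illustration; they are not taken from the specification.

```python
import math

CLOCK_NS = 30.0   # one bus clock at 33 MHz, rounded as elsewhere in the chapter
UNIT_US = 0.25    # granularity of the MIN_GNT and MAX_LAT registers

def min_gnt(data_phases):
    """Burst duration expressed in 0.25 usec units (assumed: rounded up)."""
    burst_us = data_phases * CLOCK_NS / 1000.0
    return math.ceil(burst_us / UNIT_US)

def max_lat(buffer_bytes, rate_mbps):
    """Buffer fill time expressed in 0.25 usec units (assumed: rounded down)."""
    fill_us = buffer_bytes / rate_mbps   # bytes / (MB per sec) = microseconds
    return math.floor(fill_us / UNIT_US)

# Each half of the buffer holds 32 bytes, i.e., 8 double words.
print(min_gnt(8))         # 8 data phases per half
print(max_lat(32, 10.0))  # 32 bytes arriving at 10 MBps
```

With the figures from the example (8 data phases, 32 bytes at 10 MBps), the sketch reproduces the MIN_GNT=1 and MAX_LAT=12 settings.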

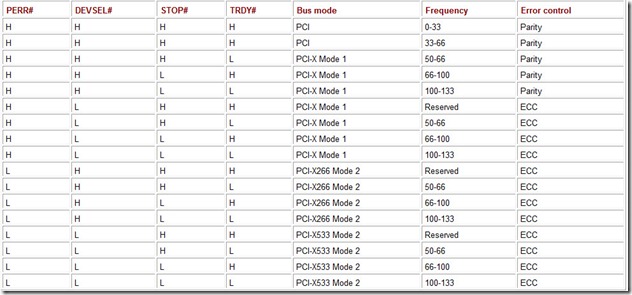

Data Transfer Integrity Control and Error Handling

Parity checking of addresses and data is used to control data transfer integrity on the PCI bus; in PCI-X, ECC with correction of one-bit errors is employed. ECC is mandatory for PCI-X Mode 2 operations; it can also be used when operating in Mode 1. The data transfer integrity control method is communicated by the bridge in the initialization pattern after a hardware reset of the bus. The bridge selects the control method supported by all its bus clients (including itself). Errors are reported by the PERR# signal (protocol signaling between the devices) and the SERR# signal (a fatal error signal that, as a rule, generates a nonmaskable system interrupt).

The PAR and PAR64 signals are used in parity checking; these signals provide even parity on the AD[31:0], C/BE[3:0]#, PAR, and AD[63:32], C/BE[7:4]#, PAR64 sets of lines. The parity signals PAR and PAR64 are generated by the device that controls the AD bus at the given moment (that is, the device that places a command and its address, attributes, or data). Parity signals are generated with a delay of one clock with respect to the lines they check: AD and C/BE#. The rules are somewhat different for read operations in PCI-X: Parity bits in clock N pertain to the data bits of clock N – 1 and the C/BE# signals of clock N – 2. The PERR# and SERR# signals are generated by the information receiver in the clock following the one in which the wrong parity appeared.
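As an illustration of the even-parity rule for the lower half of the bus, the following sketch computes the value PAR must take so that the total number of ones on AD[31:0], C/BE[3:0]#, and PAR is even. The function names are hypothetical, and the sketch models only the logical rule, not the one-clock delay of the parity signal.

```python
def even_parity_bit(ad, cbe):
    """Return PAR so that AD[31:0], C/BE[3:0]#, and PAR together carry
    an even number of ones."""
    ones = bin(ad & 0xFFFFFFFF).count("1") + bin(cbe & 0xF).count("1")
    return ones & 1   # 1 only when the 36 checked lines carry an odd count

def parity_ok(ad, cbe, par):
    """Receiver-side check: does the sampled PAR match the sampled lines?"""
    return even_parity_bit(ad, cbe) == (par & 1)

# Three ones on the checked lines, so PAR must be 1 to make the total even.
print(even_parity_bit(0x00000003, 0x1))
```

The same rule applies to PAR64 with AD[63:32] and C/BE[7:4]# in place of the lower lines.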

With ECC, a 7-bit code on the ECC[6:0] lines is used to check the AD[31:0] and C/BE[3:0]# lines in the 32-bit mode; in the 64-bit mode, an 8-bit code is employed with the ECC[7:0] signals; in the 16-bit mode, a somewhat modified ECC7+1 system is used. In all of the operating modes, the ECC control allows only single-bit errors to be corrected and most multiple-bit errors to be detected. Error correction can be disabled by software (via the ECC control register); in this case, all errors in 1, 2, or 3 bits are detected. In all cases, the diagnostic information is saved in the ECC registers. The ECC bits are placed on the bus following the same rules and with the same latency as the parity bits. However, the PERR# and SERR# signals are generated by the information receiver 1 clock after the valid ECC bits: An extra clock is given to ECC syndrome decoding and an attempt to correct the error.

A detected parity error (the same as an ECC error in more than one bit) is unrecoverable. Information integrity in the address phase, and for PCI-X in the attribute phase, is checked by the target device. If an unrecoverable error is detected in these phases, the target device issues a SERR# signal (one clock long) and sets bit 14 in its status register: Signaled System Error. In the data phase, data integrity is checked by the data receiver; if it detects an unrecoverable error, it issues a PERR# signal and sets bit 15 in its status register: Detected Parity Error.

In the status register of devices, bit 8 (Master Data Parity Error) reflects a transaction (sequence) execution failure because of a detected error. In PCI and PCI-X, the rules for setting it are different:

-

In PCI, it is set only by the transaction initiator when it generates (when doing a read) or detects (when doing a write) a PERR# signal.

-

In PCI-X, it is set by the transaction requester or a bridge: a read transaction initiator detects an error in data; a write transaction initiator detects the PERR# signal; a bridge as a target device receives completion data with an error or a completion message with a write transaction error from one of the devices.
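The three status register bits mentioned above can be decoded with a few lines of code. This is only a sketch: the bit positions and names are those given in the text, while the function name and the sample register value are hypothetical.

```python
# Error-related bits of the status register, as described in the text.
STATUS_ERROR_BITS = {
    8: "Master Data Parity Error",
    14: "Signaled System Error",
    15: "Detected Parity Error",
}

def decode_error_bits(status):
    """Return the names of the error bits set in a 16-bit status value,
    ordered from the lowest bit to the highest."""
    return [name for bit, name in sorted(STATUS_ERROR_BITS.items())
            if status & (1 << bit)]

# Hypothetical value with bits 15 and 8 set.
for name in decode_error_bits(0x8100):
    print(name)
```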

When a data error is detected, a PCI-X device and its driver have two alternatives:

-

Without attempting to undertake any actions to recover and continue working, issue a SERR# signal: This is an unrecoverable error signal that can be interpreted by the operating system as a reason to reboot. For PCI devices, this is the only option.

-

Not issue a SERR# signal and attempt to handle the error by itself. This can be only done by software and taking into account all the potential side effects of the extra operations (a simple repeated read operation may, for example, cause data loss).

The alternative selected is determined by bit 0 (Uncorrectable Data Error Recovery Enable) in the PCI-X Command register. By default (after a reset), this bit is zeroed out, causing a SERR# signal to be generated in case of a data error. The other option must be selected by a driver that is capable of handling errors on its own.

A detected error in the address or attribute phase is always unrecoverable.

The initiator (requester) of a transaction must be able to notify its driver if the transaction is rejected because of a Master Abort (no answer from the target device) or a Target Abort (transaction aborted by the target device) condition; this can be done by using interrupts or other suitable means. If such notification is not possible, the device must issue a SERR# signal.

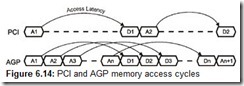

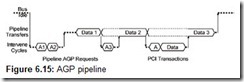

6.1.3 Bus Bandwidth

In modern computers, the PCI bus is the fastest I/O bus; however, even its actual bandwidth is not that high. Here, the most common version of the bus (32 bits wide, clocked at 33 MHz) will be considered. As previously mentioned, the peak data transfer rate within a burst cycle is 132 MBps, i.e., 4 bytes of data are sent in one bus clock (33 x 4 = 132). However, burst cycles are by no means always used. To communicate with PCI devices, the processor uses memory or I/O access instructions. It sends these requests via the host bridge, which translates them into PCI bus transactions. Because the main registers of x86 processors are 32 bits wide, no more than four bytes of data can be transmitted in one PCI transaction produced by a processor instruction, which amounts to a single transmission (a DWORD transaction). But if the address of the transmitted double word is not aligned on the corresponding boundary, then either two single cycles or one cycle with two data phases will be produced. In either case, this access will take longer to execute than if the address were aligned.

However, when writing a data array to a PCI device (a transmission with sequentially incremented addresses), the bridge may try to organize burst cycles. Modern processors, starting with the Pentium, have a 64-bit data bus and use buffers for writing, so two back-to-back 32-bit write requests may be combined into one 64-bit request. If this request is addressed to a 32-bit device, the bridge will try to send it as a burst with two data phases. An “advanced” bridge may also attempt to assemble consecutive requests into a burst, which may produce a burst of considerable length. Burst write cycles may be observed, for example, when using the MOVSD string instruction with the REP repeat prefix to send a data array from main memory to a PCI device. The same effect is also produced by a sequence of LODSW, STOSW, and other memory access instructions.

Because the cores of modern processors execute instructions much faster than the bus can output their results, the processor can execute several other operations between the instructions that produce assembled writes. However, if the data transfer is organized by a high-level-language statement, which for the sake of versatility is much more complex than the primitive assembler instructions mentioned above, the transactions will most likely be executed one at a time, for one of two reasons. First, the processor’s write buffers will not have enough “patience” to hold one 32-bit request until the next one appears. Second, the processor’s or bridge’s write buffers will be forcibly flushed upon a write request (see Section 6.2.10).

Reading from a PCI device in the burst mode is more difficult. Naturally, processors do not buffer the data they read: A read operation may be considered completed only when the actual data are received. Consequently, even string instructions will produce single cycles. However, modern processors can generate requests to read more than 4 bytes. For this purpose, instructions that load data into the MMX or XMM registers (8 and 16 bytes, respectively) may be used. From these registers, the data are then stored in RAM (which works much faster than any PCI device).

String I/O instructions (INSW, OUTSW with the REP repeat prefix) that are used for programmed input/output of data blocks (PIO) produce a series of single transactions because all the data in the block pertain to one PCI address.

It is easy to observe with an oscilloscope how a device is accessed: In single transactions, the FRAME# signal is asserted only for one clock; this is longer for burst transactions. The number of data phases in a burst is the same as the number of cycles during which both the IRDY# and TRDY# signals are asserted.
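The counting rule can be applied to a captured trace in software as well. The sketch below assumes a hypothetical trace format: one tuple of (FRAME#, IRDY#, TRDY#) line levels per bus clock, where 0 means asserted because the signals are active low. The sample trace is invented for illustration and does not come from a real capture.

```python
def summarize(trace):
    """Return (clocks with FRAME# asserted, number of data phases).

    A data phase is counted in every clock in which both IRDY# and TRDY#
    are asserted (at the low level).
    """
    frame_clocks = sum(1 for frame_n, _, _ in trace if frame_n == 0)
    data_phases = sum(1 for _, irdy_n, trdy_n in trace
                      if irdy_n == 0 and trdy_n == 0)
    return frame_clocks, data_phases

# Hypothetical burst write: address phase, one wait cycle, three data phases.
burst = [
    (0, 1, 1),  # address phase
    (0, 0, 1),  # initiator ready, target inserts a wait cycle
    (0, 0, 0),  # data phase
    (0, 0, 0),  # data phase
    (1, 0, 0),  # last data phase, FRAME# already deasserted
]
print(summarize(burst))
```

For a single transaction, the first element of the result would be 1, matching the observation that FRAME# is asserted for only one clock.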

Trying to perform write transactions in the burst mode is advisable only when the PCI device supports burst transactions in the target mode. If it does not, then attempting to write data in the burst mode will even lead to a slight efficiency loss, because the transactions will be completed at the initiative of the slave device (by the STOP# signal) and not by the master device, thereby causing the loss of one bus cycle. Thus, for example, when writing an array into a PCI device memory using a high-level-language statement, a medium-speed device (one that introduces only 3 wait cycles) receives data every 7 cycles, which at 33 MHz gives a speed of 33 x 4/7 = 18.8 MBps. Here, the active part of the transaction (from activation of the FRAME# signal to deactivation of the IRDY# signal) takes 4 cycles, and the pause takes 3 cycles. The same device using the MOVSD instruction receives data every eight bus cycles, giving a speed of 33 x 4/8 = 16.5 MBps.

These data were obtained by observing the operation of a PCI core implemented in an Altera FPGA that does not support burst transactions in the slave mode. The same device works much slower when a PCI device memory is read: Using the REP MOVSW instruction, data could be obtained only once every 19-21 bus cycles, giving an average speed of 33 x 4/20 = 6.6 MBps. Here, the negative factors are the device’s high latency (it presents data only 8 cycles after the FRAME# signal is activated) and the fact that the processor begins its next transmission only after receiving data from the previous one. In this case, despite the loss of a cycle (used by the target device to terminate the transaction), the trick of using the XMM register produces a positive effect. This happens because each 64-bit processor request is executed by a consecutive pair of PCI transactions with only a two-cycle wait between them.

To determine the theoretical bus bandwidth limit, let’s return to Fig. 6.1 to determine the minimal time (number of cycles) for executing a read or write transaction. In the read transaction, the current master of the AD bus changes after the initiator has issued the command and address (cycle 1). This turnaround takes cycle 2 to execute, which is due to the TRDY# signal being delayed by the target device. Then, if the target device is smart enough, a data phase may follow (cycle 3). After the last data phase, one more cycle is needed for the reverse turnaround of the AD bus (in this case, it is cycle 4). Thus, it takes at least 4 cycles of 30 nsec each (at 33 MHz) to read one double word (4 bytes). If these transactions follow each other immediately (if the initiator is capable of operating this way and the bus control is not taken away from it) then, for single transactions, maximum read speeds of 33 MBps can be reached. In write transactions, the initiator always controls the AD bus, so no time is lost on the turnarounds. With a smart target device, which does not insert extra wait cycles, write speeds of 66 MBps may be achieved.

Speeds comparable to the peak speed can be achieved only with burst transmissions, in which the three extra cycles for a read (or the one extra cycle for a write) are added not to each data phase but to the whole burst. Thus, to read a burst with 4 data phases, 7 cycles are needed, producing a speed of V=16/(7×30) bytes/nsec = 76 MBps. Five cycles are needed to write such a burst, giving a speed of V=16/(5×30) bytes/nsec = 106.6 MBps. When the number of data phases equals 16, the read speed may reach 112 MBps, and the write speed may reach 125 MBps.
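These figures follow from one simple formula, which the sketch below evaluates for several burst lengths. It assumes the rounded 30 nsec clock period used above, 4 bytes per data phase, and an overhead of three cycles per read transaction and one cycle per write transaction; the function name is hypothetical.

```python
def burst_rate_mbps(data_phases, overhead_cycles,
                    bytes_per_phase=4, clock_ns=30.0):
    """Transfer rate in MBps for a burst of the given length.

    overhead_cycles: extra cycles per transaction (3 for a read and 1 for
    a write on a bus without wait cycles, as assumed in the text).
    """
    total_ns = (data_phases + overhead_cycles) * clock_ns
    return data_phases * bytes_per_phase / total_ns * 1000.0  # bytes/nsec -> MBps

for phases in (1, 4, 16):
    read = burst_rate_mbps(phases, overhead_cycles=3)
    write = burst_rate_mbps(phases, overhead_cycles=1)
    print(f"{phases:2d} data phases: read {read:6.1f} MBps, write {write:6.1f} MBps")
```

With one data phase, the function gives the single-transaction limits of about 33 MBps and 66 MBps; as the burst grows, both rates approach the 132 MBps peak without ever reaching it.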

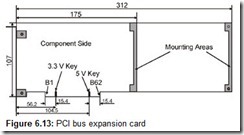

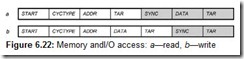

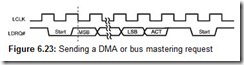

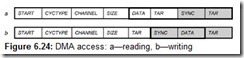

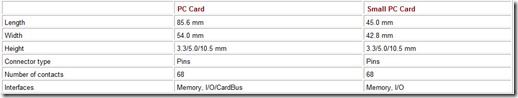

These calculations do not take into account the time lost when the initiator changes. Upon receiving the GNT# signal, the initiator can begin a transaction only after it ascertains that the bus is idle (i.e., that the FRAME# and IRDY# signals are deasserted); recognizing the idle state takes another cycle. As can be seen, a single initiator can grab most of the bus’s bandwidth by increasing the burst length. However, this will delay other devices in obtaining bus control, which is not always acceptable. It should also be noted that by no means all devices can respond to transactions without inserting wait cycles, so the actual figures will be more modest.