Data Storage Systems

Introduction

The astounding volume of data being transmitted between systems today has created an obvious need for data management. As a result, more servers, whether they are PCs, UNIX workstations, minicomputers, or supercomputers, have assumed the role of information providers and managers. The number of networked or connectable systems is increasing by leaps and bounds as well, thanks to the widespread adoption of the client-server computing model. Hard disk storage plays an important role in enabling improvements to networked systems, because the vast and growing ocean of data needs to reside somewhere. It also has to be readily accessible, which places a demand on storage system manufacturers to provide not only high-capacity products, but products that can deliver data as fast as possible to as many users at the same time as possible. Such storage also needs to be secure, which places a premium on reliability features that best ensure data will never be lost or otherwise rendered inaccessible to network system users.

Redundant Arrays of Independent Disks (RAID) Systems

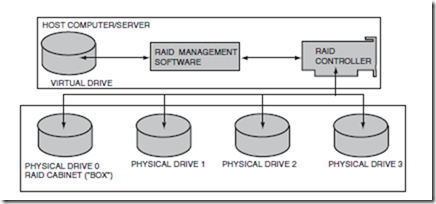

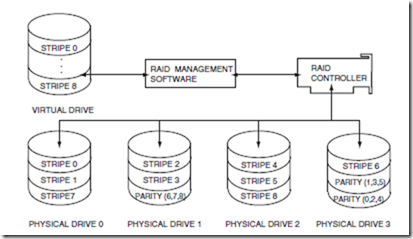

The common solution for giving users fast, reliable access to many gigabytes of data has been to assemble a number of drives together in a gang or array of disks, known as a redundant array of independent disks (RAID) subsystem. Simple RAID subsystems are basically a cluster of up to five or six disk drives assembled in a cabinet and connected to a single controller board. The RAID controller orchestrates read and write activities in the same way a controller for a single disk drive does, and treats the array as if it were in fact a single or virtual drive. RAID management software that resides in the host system provides the means to manage data to be stored on the RAID subsystem. A typical RAID configuration is illustrated in Fig. 24.1.

RAID Elements

Despite its multidrive configuration, the individual disk drives of a RAID subsystem remain hidden from users; the subsystem itself is the virtual drive, though it can be much larger than any single physical drive. The phantom virtual drive is created at a lower level within the host operating system through the RAID management software. Not only does the software set up the system to address the RAID unit as if it were a single drive, it also allows the subsystem to be configured in ways that best suit the general needs of the host system.

RAID subsystems can be optimized for performance, highest capacity, fault tolerance, or a combination of these attributes. Different RAID levels have been defined and standardized in accordance with these general optimization parameters. There are six such standardized RAID levels, called RAID 0, 1, 2, 3, 4, and 5, depending on the performance, redundancy, and other attributes required by the host system.

FIGURE 24.1 A typical RAID configuration. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

The RAID controller board is the hardware element that serves as the backbone for the array of disks; it not only relays the input/output (I/O) commands to specific drives in the array, but provides the physical link to each of the independent drives so that they may easily be removed or replaced. The controller also serves to monitor the integrity of each drive in the array to anticipate the need to move data should it be placed in jeopardy by a faulty or failing disk drive (a feature known as fault tolerance).

RAID Levels

The RAID 0–5 standards offer users and system administrators a host of configuration options. These options permit the arrays to be tailored to their application environments. Each of the various configurations focuses on maximizing the abilities of an array in one or more of the following areas:

- Capacity
- Data availability
- Performance
- Fault tolerance

RAID Level 0

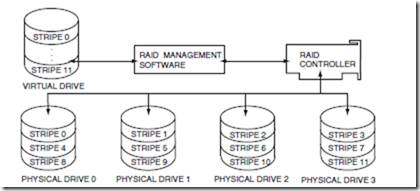

An array configured to RAID level 0 is an array optimized for performance, but at the expense of fault tolerance or data integrity. RAID level 0 is achieved through a method known as striping. The collection of drives (virtual drive) in a RAID level 0 array has data laid down in such a way that it is organized in stripes across the multiple drives. A typical array can contain any number of stripes, usually in multiples of the number of drives present in the array. Take, as an example, a four-drive array configured with 12 stripes (three stripes of designated space per drive). Stripes 0, 1, 2, and 3 would be located on corresponding hard drives 0, 1, 2, and 3. Stripe 4, however, appears on a segment of drive 0 in a different location than stripe 0; stripes 5–7 appear accordingly on drives 1, 2, and 3. The remaining four stripes are allocated in the same even fashion across the same drives such that data would be organized in the manner depicted in Fig. 24.2. Practically any number of stripes can be created on a given RAID subsystem for any number of drives: 200 stripes on two disk drives is just as feasible as 50 stripes across 50 hard drives. Most RAID subsystems, however, tend to have between 3 and 10 stripes.
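The stripe placement described above can be sketched in a few lines of Python. This is only an illustration of the round-robin rule in the four-drive, 12-stripe example; the function names `locate_stripe` and `layout` are hypothetical and do not come from any RAID product.

```python
def locate_stripe(stripe: int, num_drives: int) -> tuple[int, int]:
    """Return (drive index, row on that drive) for a stripe number.

    Assumes plain round-robin placement: consecutive stripes go to
    consecutive drives, wrapping back to drive 0 after the last drive.
    """
    if num_drives < 1:
        raise ValueError("an array needs at least one drive")
    if stripe < 0:
        raise ValueError("stripe numbers start at 0")
    return stripe % num_drives, stripe // num_drives


def layout(num_stripes: int, num_drives: int) -> list[list[int]]:
    """List the stripe numbers held by each drive."""
    drives: list[list[int]] = [[] for _ in range(num_drives)]
    for stripe in range(num_stripes):
        drive, _row = locate_stripe(stripe, num_drives)
        drives[drive].append(stripe)
    return drives


if __name__ == "__main__":
    for drive, stripes in enumerate(layout(12, 4)):
        print(f"drive {drive}: stripes {stripes}")
```

Stripe 4 lands on drive 0 in the second row, matching the description of stripe 4 occupying a different location on drive 0 than stripe 0.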

The reason RAID 0 is a performance-enhancing configuration is that striping enables the array to access data from multiple drives at the same time. In other words, because the data is spread out across a number of drives in the array, it can be accessed faster because it is not bottled up on a single drive. This is especially beneficial for retrieving a very large file, because it can be spread out effectively across multiple drives and accessed as if it were the size of any of the fragments it is organized into on the data stripes.

FIGURE 24.2 In a RAID level 0 configuration, a virtual drive comprises several stripes of information. Each consecutive stripe is located on the next drive in the chain, evenly distributed over the number of drives in the array. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

The downside of a RAID level 0 configuration is that it sacrifices fault tolerance, raising the risk of data loss because no room is made available to store redundant data. If one of the drives in a RAID 0 array fails for any reason, there is no way of retrieving the lost data, as there is in other RAID implementations.

RAID Level 1

The RAID level 1 configuration employs what is known as disk mirroring, which is done to ensure data reliability or a high degree of fault tolerance. RAID 1 also enhances read performance, but the improved performance and fault tolerance come at the expense of available capacity in the drives used. In a RAID level 1 configuration, the RAID management software instructs the subsystem’s controller to store data redundantly across a number of the drives (mirrored set) in the array. In other words, the same data is copied and stored on different disks to ensure that, should a drive fail, the data is available somewhere else within the array. In fact, all but one of the drives in a mirrored set could fail and the data stored to the RAID 1 subsystem would remain intact. A RAID level 1 configuration can consist of multiple mirrored sets, whereby each mirrored set can be a different capacity. Usually the drives making up a mirrored set are of the same capacity. If drives within a mirrored set are of different capacities, the capacity of a mirrored set within the RAID 1 subsystem is limited to the capacity of the smallest capacity drive in the set, hence the sacrifice of available capacity across multiple drives.
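The capacity rule for mirrored sets can be expressed as a short calculation. The sketch below is illustrative only: the function names are hypothetical, capacities are in arbitrary units, and summing the mirrored sets to obtain a subsystem total is an assumption rather than something stated in the text.

```python
def mirrored_set_capacity(drive_capacities: list[float]) -> float:
    """Usable capacity of one mirrored set: that of its smallest drive."""
    if len(drive_capacities) < 2:
        raise ValueError("a mirrored set needs at least two drives")
    return min(drive_capacities)


def tolerable_failures(drive_capacities: list[float]) -> int:
    """All but one drive of a mirrored set may fail without data loss."""
    if len(drive_capacities) < 2:
        raise ValueError("a mirrored set needs at least two drives")
    return len(drive_capacities) - 1


def raid1_capacity(mirrored_sets: list[list[float]]) -> float:
    """Total usable capacity, assuming the sets are simply added together."""
    return sum(mirrored_set_capacity(s) for s in mirrored_sets)


if __name__ == "__main__":
    sets = [[4.0, 4.0], [9.0, 2.0]]
    print(raid1_capacity(sets))            # usable capacity of both sets
    print(tolerable_failures([4.0, 4.0]))  # failures one set can absorb
```

In the second set, pairing a 9-unit drive with a 2-unit drive leaves 7 units of the larger drive unused, which is the capacity sacrifice the paragraph describes.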

The read performance gain can be realized if the redundant data is distributed evenly on all of the drives of a mirrored set within the subsystem. The number of read requests each drive must handle and the total wait-state time both drop significantly; in fact, they are inversely proportional to the number of hard drives in the RAID. To illustrate, suppose three read requests are made to the RAID level 1 subsystem (see Fig. 24.3). The first request looks for data in the first block of the virtual drive; the second request goes to block 0, and the third seeks from block 2. The host-resident RAID management software can assign each read request to an individual drive. Each request is then sent to the various drives, and now, rather than having to handle the flow of each data stream one at a time, the controller can send three data streams almost simultaneously, which in turn reduces system overhead.
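One way to picture how read requests are spread over a mirrored set is a simple round-robin assignment. This is a hedged sketch: the round-robin policy, the function name `assign_reads`, and the block numbers are assumptions chosen for illustration, and real RAID management software may use a different scheduling policy.

```python
from itertools import cycle


def assign_reads(requested_blocks: list[int], num_mirrors: int) -> dict[int, list[int]]:
    """Assign each read request to the next drive of the mirrored set.

    Every drive holds the same data, so any drive can serve any block.
    """
    if num_mirrors < 1:
        raise ValueError("a mirrored set needs at least one drive")
    assignment: dict[int, list[int]] = {d: [] for d in range(num_mirrors)}
    drives = cycle(range(num_mirrors))
    for block in requested_blocks:
        assignment[next(drives)].append(block)
    return assignment


if __name__ == "__main__":
    # Three requests over three mirrored drives: one request per drive.
    print(assign_reads([1, 0, 2], 3))
    # Six requests over three drives: each drive queues two instead of six.
    print(assign_reads([5, 3, 8, 1, 0, 2], 3))
```

With six requests and three drives, each drive queues two requests rather than six, which reflects the inverse relationship between per-drive load and the number of drives.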

RAID Level 2

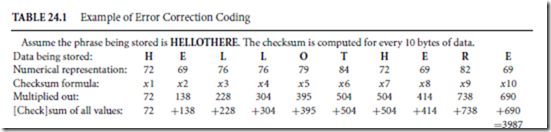

RAID level 2 is rarely used in commercial applications, but is another means of ensuring data is protected in the event drives in the subsystem incur problems or otherwise fail. This level builds fault tolerance around Hamming error correction code (ECC), which is often used in modems and solid-state memory devices as a means of maintaining data integrity. ECC tabulates the numerical values of data stored on specific blocks in the virtual drive using a formula that yields a checksum. The checksum is then appended to the end of the data block for verification of data integrity when needed.

FIGURE 24.3 A RAID level 1 subsystem provides high data reliability by replicating (mirroring) data between physical hard drives. In addition, I/O performance is boosted as the RAID management software allocates simultaneous read requests between several drives. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

As data is read back from the drive, ECC tabulations are again computed, and specific data block checksums are read and compared against the most recent tabulations. If the numbers match, the data is intact; if there is a discrepancy, the lost data can be recalculated using the first or earlier checksum as a reference point, as illustrated in Table 24.1.

This form of ECC is actually different from the ECC technologies employed within the drives themselves. The topological formats for storing data in a RAID level 2 array are somewhat limited, however, compared to the capabilities of other RAID implementations, which is why it is not commonly used in commercial applications.
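To make the idea of a Hamming error correction code concrete, the following sketch implements the textbook Hamming(7,4) code, which protects four data bits with three check bits and can locate and repair any single flipped bit. It is an illustration under stated assumptions: the check bits are interleaved at positions 1, 2, and 4 by textbook convention, the function names are hypothetical, and nothing here is claimed to match the code word layout of an actual RAID level 2 product.

```python
def hamming74_encode(data_bits: list[int]) -> list[int]:
    """Encode 4 data bits as a 7-bit code word [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, 1-based position of the repaired bit).

    A position of 0 means the recomputed check bits matched the stored ones.
    """
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:
        c[error_position - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], error_position


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    damaged = list(word)
    damaged[4] ^= 1  # simulate a single-bit fault at position 5
    print(hamming74_decode(damaged))
```

Recomputing the check bits on read and comparing them with the stored ones is the same compare-and-recover step the text describes; the mismatch pattern identifies which bit to repair.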

RAID Level 3

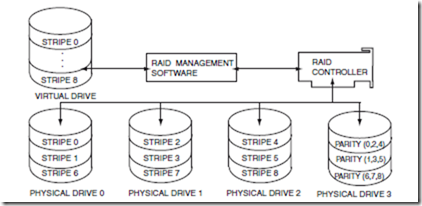

This RAID level is essentially an adaptation of RAID level 0 that sacrifices some capacity, for the same number of drives, but achieves a high level of data integrity or fault tolerance. It takes advantage of RAID level 0 data striping methods, except that data is striped across all but one of the drives in the array. The remaining drive is used to store parity information for maintenance of data integrity across all drives in the subsystem. The parity drive itself is divided into stripes, and each parity drive stripe is used to store parity information for the corresponding data stripes dispersed throughout the array. This method achieves high data transfer performance by reading from or writing to all of the drives in parallel, but retains the means to reconstruct data if a given drive fails, maintaining data integrity for the system. This concept is illustrated in Fig. 24.4. RAID level 3 is an excellent configuration for moving very large sequential files in a timely manner. The stripes of parity information stored on the dedicated drive are calculated using the Exclusive OR function. By using Exclusive OR with a series of data stripes in the RAID, any lost data can easily be recovered. Should a drive in the array fail, the missing information can be determined in a manner similar to solving for a single variable in an equation.
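The Exclusive OR recovery described above can be demonstrated with a minimal sketch. The helper name `xor_blocks` and the sample stripe contents are hypothetical; the point is only that XOR-ing the surviving data stripes with the parity stripe yields the missing stripe, much like solving for one unknown in an equation.

```python
from functools import reduce
from operator import xor


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise Exclusive OR of equally sized blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    length = len(blocks[0])
    if any(len(b) != length for b in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(xor, column) for column in zip(*blocks))


if __name__ == "__main__":
    data_stripes = [b"RAID", b"uses", b"XOR!"]  # one stripe per data drive
    parity = xor_blocks(data_stripes)           # stored on the parity drive

    # Suppose the drive holding the second stripe fails.
    survivors = [data_stripes[0], data_stripes[2], parity]
    rebuilt = xor_blocks(survivors)
    print(rebuilt)
```

Because XOR is its own inverse, the same function computes the parity and rebuilds a lost stripe. Only one missing stripe per parity stripe can be recovered this way.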

FIGURE 24.4 A RAID level 3 configuration is similar to a RAID level 0 in its utilization of data stripes dispersed over a series of hard drives to store data. In addition to these data stripes, a specific drive is configured to hold parity information for the purpose of maintaining data integrity throughout the RAID subsystem. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

RAID Level 4

This level of RAID is similar in concept to RAID level 3, but emphasizes performance for different applications, e.g., database files vs large sequential files. Another difference between the two is that RAID level 4 has a larger stripe depth, usually of two blocks, which allows the RAID management software to operate the disks more independently than RAID level 3 (which controls the disks in unison). This essentially replaces the high data throughput capability of RAID level 3 with faster data access in read-intensive applications. (See Fig. 24.5.)

A shortcoming of RAID level 4 is rooted in an inherent bottleneck on the parity drive. As data is written to the array, the parity encoding scheme tends to be more tedious in write activities than with other RAID topologies. This more or less relegates RAID level 4 to read-intensive applications with little need for similar write performance. As a consequence, like level 3, level 4 does not see much common use in commercial applications.
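The parity drive bottleneck can be made visible by counting physical writes per drive. The sketch below assumes that every write updates exactly one data stripe plus the matching parity stripe, and that the last drive is the dedicated parity drive; both are simplifying assumptions, and the function name `raid4_write_load` is hypothetical.

```python
def raid4_write_load(stripe_writes: list[int], num_drives: int) -> list[int]:
    """Count physical writes per drive for a dedicated-parity array.

    Drives 0 .. num_drives-2 hold data; the last drive holds all parity.
    """
    if num_drives < 2:
        raise ValueError("need at least one data drive and one parity drive")
    data_drives = num_drives - 1
    parity_drive = num_drives - 1
    load = [0] * num_drives
    for stripe in stripe_writes:
        load[stripe % data_drives] += 1  # the data stripe itself
        load[parity_drive] += 1          # parity must be updated every time
    return load


if __name__ == "__main__":
    # Twelve single-stripe writes spread evenly over three data drives.
    print(raid4_write_load(list(range(12)), 4))
```

Under these assumptions the parity drive absorbs as many writes as all data drives combined, which is why its throughput caps the write performance of the whole array.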

RAID Level 5

This is the last of the most common RAID levels in use, and is probably the most frequently implemented. RAID level 5 minimizes the write bottlenecks of RAID level 4 by distributing parity stripes over a series of hard drives. In so doing it provides relief to the concentration of write activity on a single drive, which in turn enhances overall system performance. (See Fig. 24.6.)

FIGURE 24.5 RAID level 4 builds on RAID level 3 technology by configuring parity stripes to store data stripes in a nonconsecutive fashion. This enables independent disk management, ideal for multiple-read-intensive environments. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

FIGURE 24.6 RAID level 5 overcomes the RAID level 4 write bottleneck by distributing parity stripes over two or more drives within the system. This better allocates write activity over the RAID drive members, thus enhancing system performance. (Source: Adapted from Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.)

The way RAID level 5 reduces parity write bottlenecks is relatively simple. Instead of allowing any one drive in the array to assume the risk of a bottleneck, all of the drives in the array assume write activity responsibilities. This distribution relieves the concentration on a single drive, improving overall subsystem throughput. The RAID level 5 parity encoding scheme is the same as that of levels 3 and 4, and maintains the system’s ability to recover lost data should a single drive fail. Recovery is possible as long as no parity stripe on an individual drive stores the information of a data stripe on the same drive. In other words, the parity information for any data stripe must always be located on a drive other than the one on which the data resides.
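A rotating parity layout of this kind can be sketched as follows. The particular rotation (parity starts on the last drive and moves one drive to the left on each row) is an assumption made for illustration, since several rotation schemes exist; the function names are likewise hypothetical.

```python
def parity_drive_for_row(row: int, num_drives: int) -> int:
    """Drive that holds the parity stripe for a given row (assumed rotation)."""
    return (num_drives - 1 - row) % num_drives


def raid5_layout(num_rows: int, num_drives: int) -> list[list[str]]:
    """Return one list per row, labelling each drive's stripe as Dn or Pn."""
    if num_drives < 3:
        raise ValueError("this sketch assumes at least three drives")
    rows = []
    next_stripe = 0
    for row in range(num_rows):
        parity_drive = parity_drive_for_row(row, num_drives)
        labels = []
        for drive in range(num_drives):
            if drive == parity_drive:
                labels.append(f"P{row}")  # parity for this row's data stripes
            else:
                labels.append(f"D{next_stripe}")
                next_stripe += 1
        rows.append(labels)
    return rows


def parity_stripes_per_drive(num_rows: int, num_drives: int) -> list[int]:
    """Count how many parity stripes each drive holds."""
    counts = [0] * num_drives
    for row in range(num_rows):
        counts[parity_drive_for_row(row, num_drives)] += 1
    return counts


if __name__ == "__main__":
    for labels in raid5_layout(4, 4):
        print(" ".join(f"{label:>4}" for label in labels))
    print(parity_stripes_per_drive(4, 4))
```

Each row contains exactly one parity stripe, so the parity for a data stripe never sits on the same drive as that data, and over four rows every drive holds one parity stripe, so parity writes are shared evenly.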

Other RAID Levels

Other, less common RAID levels have been developed as custom solutions by independent vendors. Those levels include

- RAID level 6, which emphasizes ultrahigh data integrity
- RAID level 10, which focuses on high I/O performance and very high data integrity
- RAID level 53, which combines RAID levels 0 and 3 for uniform read and write performance

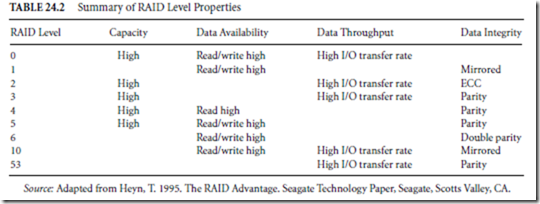

TABLE 24.2 Summary of RAID Level Properties

Custom RAID Systems

Perhaps the greatest advantage of RAID technology is the sheer number of possible adaptations available to users and systems designers. RAID offers the ability to customize an array subsystem to the requirements of its environment and the applications demanded of it. The inherent variety of configuration options provides several ways in which to satisfy specific application requirements, as detailed in Table 24.2. Customization, however, does not stop with a RAID level. Drive models, capacities, and performance levels have to be factored in as well as what connectivity options are available.

Defining Terms

Fiber channel-arbitrated loop (FC-AL): A high-speed interface protocol permitting high data transfer rates, large numbers of attached devices, and long-distance runs to remote devices using a combination of fiber optic and copper components.

Redundant arrays of independent disks (RAID): A configuration of hard disk drives and supporting software for mass storage whose primary properties are high capacity, high speed, and reliability.

RAID level: A standardized configuration of RAID elements, the purpose of which is to achieve a given objective, such as highest reliability or greatest speed.

Single connector attachment (SCA): A cabling convention for certain interface standards that simplifies the interconnection of devices by combining data and power signals as a common, standardized port.

Small computer systems interface (SCSI): A cabling and software protocol used to interface multiple devices to a computer system. These devices may be internal and/or external to the computer itself. Several variations of SCSI exist.

Striping: A hard disk storage organization technique whereby data is stored on multiple physical devices so as to increase write and read speed.

Thermal calibration: A housekeeping function of a hard disk drive used to maintain proper alignment of the head with the disk surface.

Virtual drive: An operational state for a computing device whereby memory is organized to achieve a specific objective that usually does not exist as an actual physical entity. For example, RAM in the computer may be made to appear as a physical drive, or two or more hard disks can be made to appear as a single physical drive.

References

Anderson, D. 1995. Fiber channel-arbitrated loop: The preferred path to higher I/O performance, flexibility in design. Seagate Technology Paper No MN-24, Seagate, Scotts Valley, CA.

Heyn, T. 1995. The RAID Advantage. Seagate Technology Paper, Seagate, Scotts Valley, CA.

Tyson, H. 1995. Barracuda and elite: Disk drive storage for professional audio/video. Seagate Technology Paper No. SV-25, Seagate, Scotts Valley, CA.

Further Information

The technology behind designing and manufacturing hard disk drives is beyond the scope of this chapter, and because most of the applied use of disks involves treating the drive as an operational component (black box, if you will), the best source of current information is usually drive manufacturers. Technical application notes and detailed product specifications are typically available at little or no cost.