This chapter covers the Intel 8086 in detail. Intel’s 32- and 64-bit microprocessors are based on the 8086, so the 8086 provides an excellent educational tool for understanding them. Because the 8086 and its peripheral chips are inexpensive, the implementation costs of 8086-based systems are low. This makes the 8086 appropriate for thorough coverage in a first course on microprocessors.

9.1 Introduction

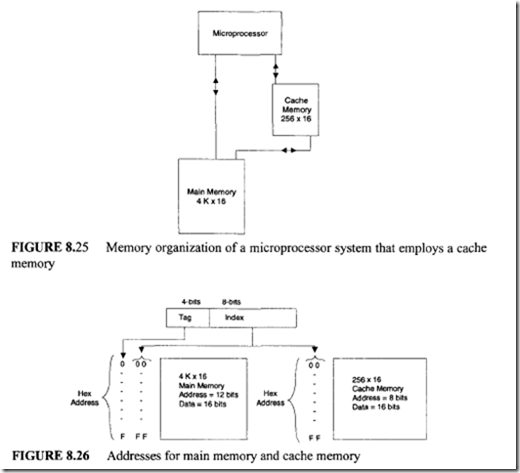

The 8086 was Intel’s first 16-bit microprocessor. This means that the 8086 has a 16-bit ALU. The 8086 has 20 address pins; therefore, it has a main (directly addressable) memory of one megabyte (2²⁰ bytes).

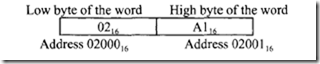

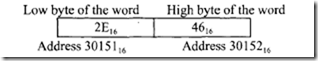

The memory of an 8086-based microcomputer is organized as bytes. Each byte is uniquely addressed by a 20-bit address in the range 00000₁₆, 00001₁₆, ..., FFFFF₁₆. An 8086 word in memory consists of any two consecutive bytes: the low-addressed byte holds the low byte of the word, and the high-addressed byte holds the high byte.

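For example, if location 02000₁₆ contains 02₁₆ and location 02001₁₆ contains A1₁₆, the 16-bit word at the even address 02000₁₆ is A102₁₆. Next, consider a word stored at address 30151₁₆, where location 30151₁₆ contains 2E₁₆ and location 30152₁₆ contains 46₁₆.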


The 16-bit word stored at the odd address 30151₁₆ is 462E₁₆.

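The little-endian rule can be checked with a short Python sketch. It models memory as a dictionary mapping byte addresses to byte values (a hypothetical representation chosen only for illustration) and assembles a word from the byte at the given address (low byte) and the byte after it (high byte).

```python
def read_word(memory: dict, address: int) -> int:
    """Return the 16-bit word whose low byte is at `address` (little-endian)."""
    low = memory[address]        # low-addressed byte -> low byte of the word
    high = memory[address + 1]   # high-addressed byte -> high byte of the word
    return (high << 8) | low


# Byte contents consistent with the two examples in the text.
memory = {
    0x02000: 0x02, 0x02001: 0xA1,  # word at the even address 02000
    0x30151: 0x2E, 0x30152: 0x46,  # word at the odd address 30151
}

print(f"{read_word(memory, 0x02000):04X}")  # A102
print(f"{read_word(memory, 0x30151):04X}")  # 462E
```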

The 8086 always reads a 16-bit word from memory. This means that a word instruction accessing a word that starts at an even address can perform its function with one memory read. A word instruction accessing a word that starts at an odd address N, however, must perform two memory accesses, to the words at the even addresses N − 1 and N + 1, discarding the unwanted byte of each. For a byte read at an odd address N, the byte at the previous even address N − 1 is also accessed but discarded. Similarly, for a byte read at an even address N, the byte at the odd address N + 1 is also accessed but discarded.

For the 8086, a register name ending in X, H, or L in an instruction that transfers data between a register and memory specifies whether the transfer is 16-bit or 8-bit. For example, consider MOV AX, [START]. If the 20-bit address START is an even number such as 02212₁₆, this instruction loads the low (AL) and high (AH) bytes of the 8086 16-bit register AX with the contents of memory locations 02212₁₆ and 02213₁₆, respectively, in a single access. If START is an odd number such as 02213₁₆, the MOV AX, [START] instruction loads AL and AH with the contents of memory locations 02213₁₆ and 02214₁₆, respectively, in two accesses. The 8086 also accesses memory locations 02212₁₆ and 02215₁₆ but ignores their contents.

Next, consider MOV AL, [START]. If START is an even number such as 30156₁₆, this instruction accesses both addresses 30156₁₆ and 30157₁₆, but loads AL with the contents of 30156₁₆ and ignores the contents of 30157₁₆. If START is an odd number such as 30157₁₆, MOV AL, [START] loads AL with the contents of 30157₁₆; in this case, the 8086 also reads the contents of 30156₁₆ but discards them.
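The four MOV examples above can be reproduced with a small Python sketch of the text's access model, in which memory is always read as aligned 16-bit words (an even address plus the following odd address). The function name `word_accesses` and its return format are hypothetical; it reports the number of accesses, every byte address touched, and the bytes that are discarded.

```python
def word_accesses(address: int, size: int) -> tuple:
    """Model the rule that the 8086 reads memory as aligned 16-bit words.

    size is 1 for a byte operand (e.g. MOV AL, [START]) or 2 for a word
    operand (e.g. MOV AX, [START]). Returns (number_of_accesses,
    addresses_touched, addresses_discarded).
    """
    if size not in (1, 2):
        raise ValueError("size must be 1 (byte) or 2 (word)")
    wanted = set(range(address, address + size))
    # Each access covers one even address and the odd address after it.
    evens = sorted({a & ~1 for a in wanted})
    touched = [a for even in evens for a in (even, even + 1)]
    discarded = [a for a in touched if a not in wanted]
    return len(evens), touched, discarded


for label, start, size in [
    ("MOV AX, [02212]", 0x02212, 2),
    ("MOV AX, [02213]", 0x02213, 2),
    ("MOV AL, [30156]", 0x30156, 1),
    ("MOV AL, [30157]", 0x30157, 1),
]:
    n, touched, discarded = word_accesses(start, size)
    print(label, n, [f"{a:05X}" for a in touched], [f"{a:05X}" for a in discarded])
```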

The 8086 is packaged in a 40-pin chip. A single +5 V power supply is required.

The clock input signal is generated by the 8284 clock generator/driver chip. Instruction execution times vary between 2 and 30 clock cycles.

There are four versions of the 8086: the 8086, 8086-1, 8086-2, and 8086-4. They differ only in their maximum allowed clock frequencies: 5 MHz for the 8086, and 10 MHz, 8 MHz, and 4 MHz for the 8086-1, 8086-2, and 8086-4, respectively.
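To relate these clock rates to the 2- to 30-cycle instruction execution times quoted above, note that the clock period is 1/f and an instruction taking n cycles lasts n/f. The Python sketch below just does this arithmetic; the helper names are illustrative.

```python
# Maximum clock frequencies (MHz) of the four 8086 versions, as listed in the text.
MAX_CLOCK_MHZ = {"8086": 5, "8086-1": 10, "8086-2": 8, "8086-4": 4}


def clock_period_ns(mhz: float) -> float:
    """Clock period in nanoseconds: 1 / f, with f given in MHz."""
    return 1000.0 / mhz


def execution_time_us(cycles: int, mhz: float) -> float:
    """Time in microseconds for an instruction taking `cycles` clock cycles."""
    return cycles / mhz  # f in MHz equals cycles per microsecond


for version, mhz in MAX_CLOCK_MHZ.items():
    print(f"{version}: {clock_period_ns(mhz):.1f} ns per cycle, "
          f"{execution_time_us(2, mhz):.2f} to {execution_time_us(30, mhz):.2f} us")
```

At 5 MHz, for example, one clock period is 200 ns, so 2 to 30 cycles correspond to 0.4 to 6 µs.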

The 8086 family consists of two types of 16-bit microprocessors, the 8086 and the 8088. The main difference is how the processors communicate with the outside world: the 8088 has an 8-bit external data path to memory and I/O, whereas the 8086 has a 16-bit external data path. Consequently, the 8088 must perform two read operations to read a 16-bit word from memory, and two write operations to write a 16-bit word into memory. In most other respects, the processors are identical. Note that the 8088 accesses memory in bytes. No alterations are needed to run software written for one microprocessor on the other. Because of these similarities, only the 8086 is considered here. The 8088 was used in IBM’s first personal computer.
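The effect of the external data path width can be expressed as a count of bus cycles per operand. This Python sketch is a simplified model, not a timing-accurate one: it assumes the 16-bit 8086 transfers one aligned word per bus cycle and the 8-bit 8088 transfers one byte per bus cycle. The function name is hypothetical.

```python
def bus_cycles(address: int, size: int, data_bus_bits: int) -> int:
    """Bus cycles needed to read or write one operand of `size` bytes (1 or 2).

    Simplified model: a 16-bit data bus (8086) moves one aligned word per
    cycle; an 8-bit data bus (8088) moves one byte per cycle.
    """
    if size not in (1, 2):
        raise ValueError("size must be 1 or 2")
    if data_bus_bits == 8:
        return size
    if data_bus_bits == 16:
        first_word = address // 2
        last_word = (address + size - 1) // 2
        return last_word - first_word + 1
    raise ValueError("data_bus_bits must be 8 or 16")


print(bus_cycles(0x02000, 2, 16))  # 8086, word at an even address: 1
print(bus_cycles(0x30151, 2, 16))  # 8086, word at an odd address: 2
print(bus_cycles(0x02000, 2, 8))   # 8088, any word: 2
```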

An 8086 can be configured as a small uniprocessor system (minimum mode, with the MN/MX pin tied HIGH) or as a multiprocessor system (maximum mode, with the MN/MX pin tied LOW). In a given system, the MN/MX pin is permanently tied either HIGH or LOW. Some of the 8086 pins have dual functions that depend on the level of the MN/MX pin.

In the minimum mode (MN/MX pin HIGH), these pins transfer control signals directly to memory and I/O devices; in the maximum mode (MN/MX pin LOW), these same pins have different functions that facilitate multiprocessor systems. In the maximum mode, the control functions normally present in minimum mode are assumed by a support chip, the 8288 bus controller.

Due to technological advances, Intel introduced the high-performance 80186 and 80188, which are enhanced versions of the 8086 and 8088, respectively. The 8-MHz 80186/80188 provides about twice the throughput of the standard 5-MHz 8086/8088. Both integrate several new peripheral functional units, such as a DMA controller, a 16-bit timer unit, and an interrupt controller unit, into a single chip. Just like the 8086 and 8088, the 80186 has a 16-bit data bus and the 80188 has an 8-bit data bus; otherwise, the architecture and instruction set of the 80186 and 80188 are identical. The 80186/80188 has an on-chip clock generator, so only an external crystal is required to generate the clock. The 80186/80188 can operate at an internal clock frequency of either 6 or 8 MHz. Because the crystal frequency is divided by 2 internally, an external crystal of 12 or 16 MHz must be connected to obtain a 6- or 8-MHz internal clock. The 80186/80188 is fabricated in a 68-pin package. Both processors have on-chip priority interrupt controller circuits that provide five interrupt pins. Like the 8086/8088, the 80186/80188 can directly address one megabyte of memory. The 80186/80188 provides 10 new instructions beyond the 8086/8088 instruction set; examples include INS and OUTS for inputting and outputting a string byte or string word.

The 80286, on the other hand, adds memory protection and management capabilities to the basic 8086 architecture. An 8-MHz 80286 provides up to six times the throughput of the 5-MHz 8086. The 80286 is fabricated in a 68-pin package and can be operated at a clock frequency of 4, 6, or 8 MHz. An external 82284 clock generator chip is required to generate the clock. The 82284 divides the external clock by 2 to generate the internal clock. The 80286 can be operated in two modes: real address mode and protected virtual address mode. Real address mode emulates a very high-performance 8086; in this mode, the 80286 can directly address one megabyte of memory. In protected virtual address mode, the 80286 can directly address 16 megabytes of memory. In addition to the real address mode capabilities, protected virtual address mode provides virtual memory management as well as task management and protection. The programmer selects the mode by loading appropriate data into the 16-bit machine status word (MSW) register with the LMSW (load machine status word) instruction.

The 80286 was used as the microprocessor of the IBM PC/AT personal computer.

An enhanced version of the 80286 is the 32-bit 80386 microprocessor. The 80386 was used as the microprocessor in the IBM 386PC. The 80486 is another 32-bit microprocessor. It is based on the Intel 80386 and includes on-chip floating-point circuitry. IBM’s 486 PC contains the 80486 chip. Other 32-bit and 64-bit Intel microprocessors include Pentium, Pentium Pro, Pentium II, Celeron, Pentium III, Pentium 4 and Merced.

Although the 8086 may seem obsolete, it is expected to remain available from second sources for some time. Therefore, detailed coverage of the 8086 is included, followed by a summary of the 32- and 64-bit microprocessors.

9.2 8086 Main Memory

The 8086 uses a segmented memory, which offers some advantages. First, after the 16-bit segment registers are initialized, the 8086 has to deal only with 16-bit effective addresses; that is, it has to manipulate and store only 16-bit address components. Second, because of memory segmentation, the 8086 can be used effectively in time-shared systems, in which several users share one 8086. Suppose that the 8086 works on one user’s program for, say, 5 milliseconds, then spends 5 milliseconds on each of the other users’ programs before returning to the first user’s program. Each time the 8086 switches from one user’s program to the next, it must execute a new section of code and use new sections of data. Segmentation makes this switching easy, because the new code and data can be reached simply by changing the contents of the segment registers.

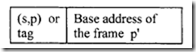

The 8086’s main memory can be divided into 16 segments of 64K bytes each (16 × 64 KB = 1 MB). A segment may contain code or data. The 8086 uses 16-bit registers to address segments. For example, to address code, the code segment register must be initialized in some manner (to be discussed later). A 16-bit 8086 register called the instruction pointer (IP), which is similar to the program counter of a typical microprocessor, linearly addresses each location in a code segment. Because the IP is 16 bits wide, the segment size is 64K bytes (2¹⁶). Similarly, a 16-bit data segment register must be initialized to hold the segment value of a data segment. Certain 16-bit registers are designed to hold a 16-bit address within a 64-Kbyte data segment; once the data segment is initialized by an instruction, one of these address registers can be used to address each location in it linearly. Finally, to access the stack segment, the 8086 16-bit stack segment (SS) register must be initialized; the 64-Kbyte stack is addressed linearly by a 16-bit stack pointer register. Note that the stack memory must be read/write memory (RAM). Whenever the programmer reads from or writes to the 8086 memory or stack, two components of a memory address must be considered: a segment value and an offset (also called an address or displacement value). The 8086 assembly language program works with these two components while accessing memory. Together, these two 16-bit components (the contents of a 16-bit segment register and a 16-bit offset or IP) form a logical address. The programmer writes assembly language programs using these logical addresses.

The 8086 includes on-chip hardware that translates these two 16-bit components of a memory address into a 20-bit address called the physical address: it shifts the contents of the segment register four bit positions to the left (multiplying it by 16) and then adds the contents of the IP or offset. Because the 8086 has 20 address pins, the physical address is 20 bits wide.

Consider, for example, a logical address with a 16-bit code segment register value of 2050₁₆ and a 16-bit instruction pointer value of 0004₁₆. Suppose that the programmer writes an 8086 assembly language program using this logical address and assembles it to obtain the object (machine) code. When the 8086 executes this program and encounters the logical address, it generates the 20-bit physical address as follows: the code segment value 2050₁₆ shifted logically four bits to the left is 20500₁₆, and adding the IP value 0004₁₆ gives the 20-bit physical address 20504₁₆, which the 8086 places on its 20 address pins. Note that for 16-bit transfers between the 8086 and main memory, the 8086 assigns the low address to the low byte of a 16-bit register and the high address to the high byte. This is called little-endian byte ordering.
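The translation from a logical to a physical address amounts to physical = segment × 16 + offset. The Python sketch below implements it; masking the sum to 20 bits reflects the 20 address pins, so a sum above FFFFF₁₆ wraps around to the bottom of memory. The function name is hypothetical.

```python
def physical_address(segment: int, offset: int) -> int:
    """Map a 16-bit segment and a 16-bit offset to a 20-bit physical address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    shifted = segment << 4                # shift left four bits (multiply by 16)
    return (shifted + offset) & 0xFFFFF   # keep 20 bits for the 20 address pins


print(f"{physical_address(0x2050, 0x0004):05X}")  # 20504
```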

9.3 8086 Registers

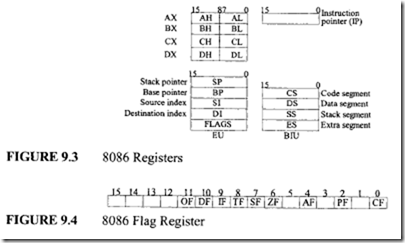

As mentioned in Chapter 6, the 8086 is divided internally into two independent units: the bus interface unit (BIU) and the execution unit (EU). The BIU reads (fetches) instructions, reads operands, and writes results. The EU executes instructions already fetched by the BIU. To speed up instruction execution, the 8086 prefetches up to six instruction bytes from external memory and queues them in a FIFO (first-in, first-out) memory in the BIU. The BIU contains a dedicated adder to produce the 20-bit address. The bus control logic of the BIU generates all the bus control signals, such as the READ and WRITE signals, for memory and I/O. The BIU also has four 16-bit segment registers: the code segment (CS), data segment (DS), stack segment (SS), and extra segment (ES) registers.
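The prefetch queue can be illustrated with a simplified Python model. This is a sketch under stated assumptions, not a cycle-accurate description of the BIU: the class and method names are hypothetical, the BIU is assumed to fetch two bytes whenever at least two queue positions are free, and a jump is modeled as flushing the queue.

```python
from collections import deque


class PrefetchQueue:
    """Simplified, hypothetical model of the BIU's 6-byte instruction queue."""

    SIZE = 6  # the 8086 queue holds up to six instruction bytes

    def __init__(self, code: bytes, start: int = 0) -> None:
        self.code = code          # stand-in for the code segment contents
        self.next_fetch = start   # offset the BIU will fetch from next
        self.queue = deque()      # FIFO of prefetched instruction bytes

    def biu_fill(self) -> None:
        # Assumption: fetch two bytes at a time while two or more slots are free.
        while self.SIZE - len(self.queue) >= 2 and self.next_fetch < len(self.code):
            chunk = self.code[self.next_fetch:self.next_fetch + 2]
            self.queue.extend(chunk)
            self.next_fetch += len(chunk)

    def eu_take(self, n: int) -> bytes:
        # The EU removes bytes from the front of the queue (first in, first out).
        return bytes(self.queue.popleft() for _ in range(n))

    def flush(self, target: int) -> None:
        # After a jump the prefetched bytes are useless; refetch from the target.
        self.queue.clear()
        self.next_fetch = target


q = PrefetchQueue(bytes(range(10)))
q.biu_fill()
print(len(q.queue))   # 6
print(q.eu_take(3))   # b'\x00\x01\x02'
q.biu_fill()          # three free slots: one more two-byte fetch
print(len(q.queue))   # 5
```

While the EU is busy executing, the BIU can keep the queue filled, which is the overlap that makes prefetching worthwhile.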

All program instructions must be located in main memory, pointed to by the 16-bit CS register with a 16-bit offset contained in the 16-bit instruction pointer (IP). Note that immediate data are considered part of the code segment. The SS register points to the current stack. The 20-bit physical stack address is calculated from the SS and SP (stack pointer) for stack instructions such as PUSH and POP. The programmer can create a programmer’s stack with the BP (base pointer) instead of the SP for accessing the stack using the based addressing mode; in this case, the 20-bit physical stack address is calculated from the BP and SS. The DS register points to the current data segment; operands for most instructions are fetched from this segment. The 16-bit contents of a register such as the SI (source index) or DI (destination index), or a 16-bit displacement, are used as offsets for computing the 20-bit physical address.
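Stack addressing through SS and SP can be sketched as follows. On the 8086, PUSH first decrements SP by 2 and then stores the word at SS:SP (low byte at the lower address), and POP reads the word at SS:SP and then increments SP by 2. The `Stack8086` class and its dictionary-based memory are hypothetical illustrations that handle word operands only.

```python
class Stack8086:
    """Hypothetical model of word PUSH/POP using SS:SP."""

    def __init__(self, ss: int, sp: int) -> None:
        self.ss = ss
        self.sp = sp
        self.memory = {}  # byte address -> byte value

    def _top(self) -> int:
        # 20-bit physical address of the current top of stack.
        return ((self.ss << 4) + self.sp) & 0xFFFFF

    def push(self, value: int) -> None:
        self.sp = (self.sp - 2) & 0xFFFF          # decrement SP by 2 first
        addr = self._top()
        self.memory[addr] = value & 0xFF          # low byte at the lower address
        self.memory[addr + 1] = (value >> 8) & 0xFF

    def pop(self) -> int:
        addr = self._top()
        value = self.memory[addr] | (self.memory[addr + 1] << 8)
        self.sp = (self.sp + 2) & 0xFFFF          # then increment SP by 2
        return value


s = Stack8086(ss=0x3000, sp=0x0100)
s.push(0x1234)
print(f"SP={s.sp:04X}")   # SP=00FE
print(f"{s.pop():04X}")   # 1234
```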

The ES register points to the extra segment in which data (in excess of 64 KB pointed to by the DS) is stored. String instructions always use the ES and DI to determine the 20-bit physical address for the destination.
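The default pairing of segment and offset registers described above can be summarized in a table-driven Python sketch. The names `DEFAULT_SEGMENT` and `operand_address` are hypothetical; the sketch ignores displacements and segment override prefixes, and uses ES:DI only when a string-instruction destination is being modeled.

```python
# Default segment register for each offset register, as described in the text.
DEFAULT_SEGMENT = {
    "IP": "CS",  # instruction fetches
    "SP": "SS",  # PUSH and POP
    "BP": "SS",  # based addressing into the stack
    "BX": "DS",  # base register
    "SI": "DS",  # source index
    "DI": "DS",  # destination index (non-string instructions)
}


def operand_address(regs: dict, offset_reg: str, string_destination: bool = False) -> int:
    """20-bit physical address formed from an offset register and its segment.

    For a string-instruction destination, the segment is always ES and the
    offset register must be DI.
    """
    if string_destination:
        if offset_reg != "DI":
            raise ValueError("string destinations use ES:DI")
        segment_reg = "ES"
    else:
        segment_reg = DEFAULT_SEGMENT[offset_reg]
    return ((regs[segment_reg] << 4) + regs[offset_reg]) & 0xFFFFF


regs = {"CS": 0x2050, "IP": 0x0004, "SS": 0x3000, "SP": 0x0100, "BP": 0x0010,
        "DS": 0x1000, "ES": 0x2000, "BX": 0x0040, "SI": 0x0020, "DI": 0x0020}
print(f"{operand_address(regs, 'IP'):05X}")                           # 20504
print(f"{operand_address(regs, 'DI', string_destination=True):05X}")  # 20020
```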

The segments can be contiguous, partially overlapped, fully overlapped, or disjoint. Figure 9.1 shows an example of how five segments (SEGMENT 0 through SEGMENT 4) may be stored in physical memory. In this example, SEGMENTs 0 and 1 are contiguous (adjacent), SEGMENTs 1 and 2 are partially overlapped, SEGMENTs 2 and 3 are fully overlapped, and SEGMENTs 2 and 4 are disjoint.
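Under the simplifying assumption that every segment occupies the full 64 KB, the relationship between two segments follows from the distance between their starting physical addresses (segment value × 16). The Python function below is a hypothetical illustration; the segment values in the usage lines are made up and are not the values in Figure 9.1.

```python
SEGMENT_SIZE = 0x10000  # assumption: every segment spans the full 64 KB


def relationship(seg_a: int, seg_b: int) -> str:
    """Classify two segments from their 16-bit segment register values."""
    start_a, start_b = sorted((seg_a << 4, seg_b << 4))  # physical start addresses
    gap = start_b - start_a
    if gap == 0:
        return "fully overlapped"
    if gap < SEGMENT_SIZE:
        return "partially overlapped"
    if gap == SEGMENT_SIZE:
        return "contiguous"
    return "disjoint"


print(relationship(0x1000, 0x2000))  # contiguous
print(relationship(0x2000, 0x2800))  # partially overlapped
print(relationship(0x3000, 0x3000))  # fully overlapped
print(relationship(0x1000, 0x4000))  # disjoint
```

Segments that use less than 64 KB would need their actual sizes in the comparison; the full-size assumption keeps the sketch short.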

Every segment must start on a 16-byte memory boundary. Segment values should therefore be selected so that segments start at physical addresses such as 00000₁₆, 00010₁₆, 00020₁₆, 00030₁₆, ..., FFFF0₁₆. A physical memory location may be mapped into (contained in) one or more logical segments. Many applications can be written to simply initialize the segment registers and then forget them.
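The 16-byte alignment rule and the many-to-one mapping from logical to physical addresses can be demonstrated with two small Python helpers (hypothetical names). `segment_value` converts a 16-byte-aligned starting address into the value loaded into a segment register, and `logical_aliases` lists every segment:offset pair that reaches a given physical address without wraparound.

```python
def segment_value(start: int) -> int:
    """Segment register value for a segment beginning at physical address `start`."""
    if start % 16:
        raise ValueError("a segment must start on a 16-byte boundary")
    return start >> 4


def logical_aliases(physical: int) -> list:
    """Every segment:offset pair (no wraparound) that maps to `physical`."""
    pairs = []
    for segment in range(0x10000):
        offset = physical - (segment << 4)
        if 0 <= offset <= 0xFFFF:
            pairs.append((segment, offset))
    return pairs


print(f"{segment_value(0x20500):04X}")  # 2050
aliases = logical_aliases(0x20504)
print(len(aliases))                      # 4096
print((0x2050, 0x0004) in aliases, (0x2000, 0x0504) in aliases)  # True True
```

For the physical address 20504₁₆ used earlier, both 2050:0004 and 2000:0504 (segment:offset) reach the same byte.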

A segment can be pointed to by more than one segment register. For example, the DS and ES may point to the same segment in memory if a string located in that segment is used as a source segment in one string instruction and a destination segment in another string instruction. Note that, for string instructions, a destination segment must be pointed to by the ES. One example of four currently addressable segments is shown in Figure 9.2.

The EU decodes and executes instructions. It has a 16-bit ALU for performing arithmetic and logic operations. The EU has nine 16-bit registers: AX, BX, CX, DX, SP, BP, SI, DI, and the flag register. The 16-bit general registers AX, BX, CX, and DX can each be used as two 8-bit registers (AH, AL; BH, BL; CH, CL; DH, DL). For example, the 16-bit register DX can be considered as two 8-bit registers, DH (high byte of DX) and DL (low byte of DX). The EU registers perform the following functions:


- The AX register is 16 bits wide, whereas AH and AL are 8 bits wide. Some instructions assume the use of AX or AL. The I/O instructions (IN and OUT) always use AX or AL to input or output 16- or 8-bit data to or from an I/O port. Multiplication and division instructions also use AX or AL.

- The BX register is called the base register. It is the only one of the general-purpose registers AX, BX, CX, and DX whose contents can be used for addressing 8086 memory. All memory references that use the contents of this register for addressing use DS as the default segment register.

- The CX register is known as the counter register because some instructions use its contents as a counter: shift and rotate instructions can take their count from CL (the low byte of CX), and LOOP uses CX. For example, the instruction LOOP START automatically decrements CX by 1 without affecting the flags and checks whether (CX) = 0. If it is zero, the 8086 executes the next instruction; otherwise, the 8086 branches to the label START.

- The DX register, or data register, holds the high 16 bits of the result after a 16 × 16 multiplication (the low 16 bits are in AX). For a 32 ÷ 16 division, DX holds the high 16 bits of the dividend before the division (the low 16 bits are in AX) and the 16-bit remainder after the division (the 16-bit quotient is in AX).

- The two pointer registers, SP (stack pointer) and BP (base pointer), are used to access data in the stack segment. The SP is used as an offset from the current SS during execution of instructions that involve the stack segment in external memory. The SP contents are automatically updated (incremented or decremented) by execution of a POP or PUSH instruction. The BP contains an offset address in the current SS; this offset is used by instructions that use the based addressing mode.

- The two index registers, SI (source index) and DI (destination index), are used in indexed addressing. Note that instructions that process data strings use the SI and DI index registers together with the DS and ES, respectively, in order to distinguish between the source and destination addresses.

- The flag register in the EU holds the status flags, typically after an ALU operation. The EU sets or resets these flags to reflect the results of arithmetic and logic operations.

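The roles of AX and DX in 16-bit multiplication and division can be sketched in Python for the unsigned MUL and DIV cases. The function names are hypothetical. On the real 8086, a zero divisor or a quotient too large for AX causes a divide-error (type 0) interrupt; the sketch raises Python exceptions instead.

```python
def mul16(ax: int, src: int) -> tuple:
    """Unsigned 16 x 16 multiply: returns (DX, AX) holding the 32-bit product."""
    product = (ax & 0xFFFF) * (src & 0xFFFF)
    return product >> 16, product & 0xFFFF


def div32by16(dx: int, ax: int, divisor: int) -> tuple:
    """Unsigned 32 / 16 divide of DX:AX: returns (AX, DX) = (quotient, remainder)."""
    dividend = ((dx & 0xFFFF) << 16) | (ax & 0xFFFF)
    divisor &= 0xFFFF
    if divisor == 0:
        raise ZeroDivisionError("divide error: zero divisor")
    quotient, remainder = divmod(dividend, divisor)
    if quotient > 0xFFFF:
        raise OverflowError("divide error: quotient does not fit in AX")
    return quotient, remainder


dx, ax = mul16(0x1234, 0x0100)
print(f"DX={dx:04X} AX={ax:04X}")   # DX=0012 AX=3400
q, r = div32by16(dx, ax, 0x0100)
print(f"AX={q:04X} DX={r:04X}")     # AX=1234 DX=0000
```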

Figure 9.3 depicts the 8086 registers. It shows the nine 16-bit registers in the EU. As described earlier, each of the AX, BX, CX, and DX registers can be used as two 8-bit registers or as one 16-bit register. The other registers can be accessed only as 16-bit registers. Also shown are the four 16-bit segment registers and the 16-bit IP in the BIU. The IP is similar to a program counter. The CS register points to the current code segment, from which instructions are fetched; the 20-bit physical address is derived from CS and IP. The SS register points to the current stack; the physical address is obtained from SS and SP. The DS register points to the current data segment. The ES register points to the current extra segment, where data is usually stored.
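Using a 16-bit general register as two 8-bit halves amounts to simple shifting and masking. In this Python sketch (hypothetical helper names), writing a new value into AL leaves AH unchanged.

```python
def split16(value: int) -> tuple:
    """Return (high byte, low byte) of a 16-bit register, e.g. (AH, AL) for AX."""
    return (value >> 8) & 0xFF, value & 0xFF


def join16(high: int, low: int) -> int:
    """Combine two 8-bit halves into a 16-bit register value."""
    return ((high & 0xFF) << 8) | (low & 0xFF)


ax = 0x1234
ah, al = split16(ax)
print(f"AH={ah:02X} AL={al:02X}")   # AH=12 AL=34
ax = join16(ah, 0xFF)               # write FF into AL only
print(f"AX={ax:04X}")               # AX=12FF
```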

Figure 9.4 shows the 8086 flag register. The 8086 has six one-bit status flags. Let us now explain these flags.

- AF (auxiliary carry flag) is set if there is a carry from the low nibble into the high nibble during addition, or a borrow from the high nibble into the low nibble during subtraction; otherwise, AF is zero. This flag is used by BCD arithmetic instructions.

- CF (carry flag) is set if there is a carry from addition or a borrow from subtraction.

- OF (overflow flag) is set if there is an arithmetic overflow (i.e., if the signed result exceeds the capacity of the destination location); otherwise, it is zero. An interrupt-on-overflow instruction (INTO) is available to generate an interrupt in this situation.

- SF (sign flag) is set if the most significant bit of the result is one; otherwise, it is zero.

- PF (parity flag) is set if the low-order eight bits of the result have even parity (an even number of 1s); PF is zero for odd parity.

- ZF (zero flag) is set if the result is zero; ZF is zero for a nonzero result.
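The six status flags can be illustrated for an 8-bit addition. The Python sketch below computes them following the definitions above; the function name is hypothetical and only byte-sized addition is modeled. OF is computed as signed overflow: it is set when both operands have the same sign and the sign of the result differs.

```python
def add8_flags(a: int, b: int) -> tuple:
    """Result and status flags for an 8-bit addition a + b."""
    a &= 0xFF
    b &= 0xFF
    total = a + b
    result = total & 0xFF
    flags = {
        "CF": int(total > 0xFF),                               # carry out of bit 7
        "AF": int(((a & 0x0F) + (b & 0x0F)) > 0x0F),           # carry from low nibble into high nibble
        "OF": int(((a ^ result) & (b ^ result) & 0x80) != 0),  # signed overflow
        "SF": (result >> 7) & 1,                               # most significant bit of the result
        "PF": int(bin(result).count("1") % 2 == 0),            # even parity of the result byte
        "ZF": int(result == 0),                                # result is zero
    }
    return result, flags


result, flags = add8_flags(0x7F, 0x01)
print(f"{result:02X}", flags)  # 80 {'CF': 0, 'AF': 1, 'OF': 1, 'SF': 1, 'PF': 0, 'ZF': 0}
```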

The 8086 has three control bits in the flag register that can be set or cleared by the programmer:

1. Setting DF (direction flag) causes string instructions to auto-decrement; clearing DF causes string instructions to auto-increment.

2. Setting IF (interrupt flag) causes the 8086 to recognize external maskable interrupts; clearing IF disables these interrupts.

3. Setting TF (trap flag) puts the 8086 in single-step mode. In this mode, the 8086 generates an internal interrupt after the execution of each instruction. The user can write a service routine, located at the address given by the corresponding interrupt vector, to display the desired registers and memory locations, and can thus debug a program.
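The effect of DF on string instructions can be sketched with one step of a byte move from DS:SI to ES:DI. The function `movsb_step` below is a hypothetical model of a single MOVSB-style step using a dictionary as memory; it copies one byte and then increments SI and DI when DF = 0 or decrements them when DF = 1.

```python
def movsb_step(memory: dict, ds: int, si: int, es: int, di: int, df: int) -> tuple:
    """Copy one byte from DS:SI to ES:DI, then step SI and DI according to DF."""
    src = ((ds << 4) + si) & 0xFFFFF
    dst = ((es << 4) + di) & 0xFFFFF
    memory[dst] = memory[src]
    step = -1 if df else 1          # DF = 1: auto-decrement; DF = 0: auto-increment
    return (si + step) & 0xFFFF, (di + step) & 0xFFFF


mem = {0x10000: 0x41, 0x10001: 0x42, 0x10002: 0x43}   # source bytes at DS:0000
si = di = 0x0000
for _ in range(3):                                   # DF = 0: copy forward
    si, di = movsb_step(mem, ds=0x1000, si=si, es=0x2000, di=di, df=0)
print([hex(mem[0x20000 + i]) for i in range(3)], si, di)  # ['0x41', '0x42', '0x43'] 3 3
```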