MESSAGE QUEUE

Message passing

An operating system provides inter-process communication (IPC) to allow processes to exchange information, which is useful for creating cooperating processes. The most popular form of IPC involves message passing. A process may send information to a channel (or port), from which another process may receive it. The sending and receiving processes can be on the same or different computers connected through a communication medium.

One reason for the popularity of message passing is its ability to support client server inter- action. A server is a process that offers a set of services to client processes, which are invoked in response to messages from the clients and results are returned in messages to them. We are particularly interested in servers that offer operating system services. With such servers, part of the operating system functionality can be transferred from the kernel to utility processes, for instance, file management can be handled by a file server, which offers services such as open, read, write, and seek.

There are several issues involved in message passing. We discuss some of these below.

(1) Reliability and order

Messages sent between computers can fail to arrive or can be garbled because of noise and contention for the communication line. There are techniques to increase the reliability of data transfer, but they cost both extra space (longer messages to increase redundancy, more code to check the messages) and time. Message-passing techniques can be distinguished by the reliability with which they deliver messages.

Another issue is whether messages sent to a channel are received in the order in which they are sent. Differential buffering delays and routings in a network environment can place messages out of order. It takes extra effort (in the form of sequence number, and more generally, time stamps) to ensure order.

(2) Access

An important issue is how many readers and writers can exchange information through a channel. Different approaches impose various restrictions on the access to channels. A bound channel is the most restrictive: there may be only one reader and writer. At the other extreme, a free channel allows any number of readers and writers. These are suitable for programming client-server interactions based on a family of servers providing a common service. This is associated with a single channel; clients send their service requests to this channel and servers providing the requested service receive service requests from the channel. Unfortunately, implementing free channels can be quite costly if in-order messages are to be supported.

The message queue associated with the channel is kept at a site which, in general, is remote to both sender and receiver. Thus both send and receive requests-result in messages being sent to this site the former put messages in this queue and the latter request messages from it. Between these two extremes are input channels and output channels. An input channel has only one reader but any number of writers modelling the fairly typical many-client, one-server situation. They are easy to implement since all receivers that designate a channel occur in the same process. Thus, the message queue associated with a channel can be kept with the receiver. Output channels, in contrast, allow any number of readers but only one writer. They are easier to implement than free channels since the message queue can be kept with the sender, but they are not much used since one-client and many-server situations are very unusual.

Several applications can use more than one kind of channel. For instance, a client can enclose a bound channel in a request message to the input port of a file server. This bound channel can represent the opened file, and subsequent read and write requests can be directed to this channel.

(3) Synchronous and asynchronous

The send, receive, and reply operations may be synchronous or asynchronous. A synchronous operation blocks a process till the operation completes, whereas an asynchronous operation is non-blocking and only initiates the operation. The caller could discover completion by some other mechanism. Note that both synchronous and asynchronous imply blocking and not blocking but not vice versa, that is, not every blocking operation is synchronous and not every non-blocking operation is asynchronous. For instance, a send that blocks till the receiver machine has received the message is blocking but not synchronous, since the receiver process may not have received it. These definitions of both synchronous and asynchronous operations are similar but not identical to the ones given in textbooks, which tend to equate synchronous with blocking.

Asynchronous message passing allows more parallelism. Since a process does not block, it can do some computation while the message is in transit. In the receive case, a process can express its interest in receiving messages on multiple channels simultaneously. In a synchronous system, such parallelism can be achieved by forking a separate process for each concurrent operation, but this approach incurs the cost of extra process management.

Asynchronous message passing introduces several problems. What happens if a message cannot be delivered? The sender may not wait for delivery of the message, and thus never hear about the error. Similarly, a mechanism is needed to notify an asynchronous receiver that a message has arrived. The operation invoker could learn about completion or errors by polling, getting a software interrupt, or by waiting explicitly for completion later using a special synchronous wait call. An asynchronous operation needs to return a call ID if the application needs to be later notified about the operation. At notification time, this ID would be placed in some global location or passed as an argument to a handler or wait call.

Another problem related to asynchronous message passing has to do with buffering. If messages sent asynchronously are buffered in a space managed by the operating system, then a process may fill this space by flooding the system with a large number of messages.

Message queue types

Message queues allow one or more processes to write messages, which will be read by one or more reading processes. Most operating systems or kernels maintain a list of message queues; a vector keeps the messages, each element of which points to a data structure that fully describes the message queue. When message queues are created, a new data structure is allocated from system memory and inserted into the vector.

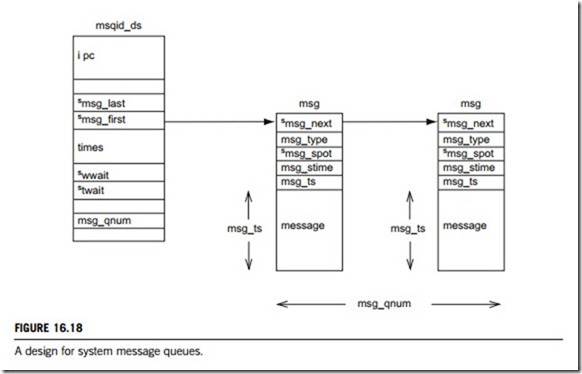

Figure 16.18 displays a possible design of system message queue. In Figure 16.18, each “msqid ds” data structure contains an “ipc ” data structure and pointers to the messages entered onto this queue. In addition, it keeps queue modification times such as the last time that this queue was written to and so on. The “msqid ds” also contains two wait queues, one for the writers to the queue and one for the readers of the message queue.

Each time a process attempts to write a message to the write queue, its effective user and group identifiers are compared with the mode in this queue’s “ipc ” data structure. If the process can write to the queue then the message may be copied from the process’s address space into the “a msg” data structure and put at the end of this message queue. Each message is tagged with an application-specific

type, agreed between the cooperating processes. However, there may be no room for the message as the operating system may restrict the number and length of messages that can be written. In this case, the process will be added to this message queue’s write wait queue and the scheduler will be called to select a new process to run. It will be woken up when one or more messages have been read from this message queue.

Reading from the queue is a similar process. Again, the process’s access rights to the write queue are checked. A reading process may choose to either get the first message in the queue regardless of its type, or select messages of a particular type. If no messages match these criteria, the reading process will be added to the message queue’s read wait queue and the scheduler run. When a new message is written to the queue this process will be woken up and run again.

Pipes

In implementations of IPC, one solution to some of the buffering problems of asynchronous sending is to provide an intermediate degree of synchrony. We can treat the set of message buffers as a “tradi- tional bounded buffer” that blocks the sending process when there are no more buffers available. That is exactly the kind of message passing supported by pipes.

Pipes also allow the output of one process to become the input of another. A pipe is like a file opened for reading and writing. They are constructed by the service call pipe, which opens a new pipe and returns two descriptors for it, one for reading and another for writing. Reading from a pipe advances the read buffer, and writing to it advances the write buffer. The operating system may only wish to buffer a limited amount of data for each pipe, so an attempt to write to a full pipe may block the writer. Similarly, an attempt to read from an empty buffer will block the reader.

Though a pipe may have several readers and writers, it is really intended for one reader and writer. Pipes are used to unify both the input and output mechanisms and IPC. Processes expect that they will have two descriptors when they start, one called standard input and another called standard output. Typically, the first is a descriptor for the terminal open for input, and the second is a similar descriptor for output. However, the command interpreter, which starts most processes, can arrange for these descriptors to be different. If the standard output descriptor happens to be a file descriptor, the output of the process will go to the file, and not to the terminal. Similarly, the command interpreter can arrange for the standard output of one process to be one end of a pipe and for the other end of the pipe to be standard input for a second process. Thus, a listing program can be piped to a sorting program, which in turn directs its output to a file.

Conceptually, a pipe can be thought of much like a hose-pipe, in that it is a conduit where we pour data in at one end and they flow out at the other. A pipe looks a lot like a file, in that it is treated as a sequential data stream. Unlike a file, there is no physical storage of data when we close a pipe; anything that was written in one end but not read out from the other end will be lost.

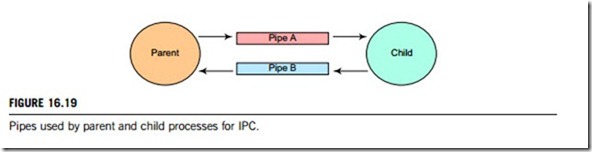

The use of pipes as conduits between processes is shown in the diagram given in Figure 16.19. Here we see two processes that are called parent and child, for reasons that will become apparent shortly. The parent can write to Pipe A and read from Pipe B. The child can read from Pipe A and write to Pipe B.

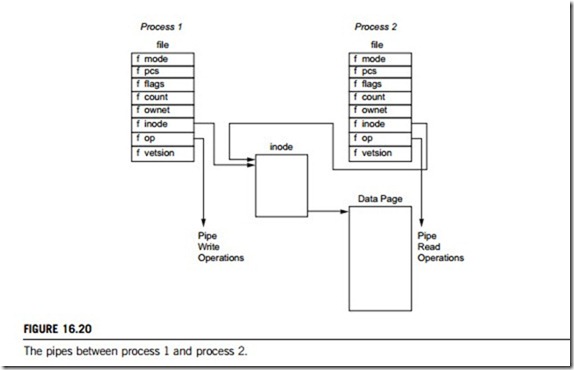

A pipe can be implemented using two file data structures that both point at the same temporary inode, which itself points at a physical page within memory. Figure 16.20 shows that each file data structure contains pointers to two different file operation routine vectors: one for writing to the pipe, the other for reading from the pipe. This hides the underlying differences from generic system calls that read and write to ordinary files. As the writing process writes to the pipe, bytes are copied into the shared data page and when the reading process reads from the pipe, bytes are copied from its shared data page. The operating system must synchronize access to the pipe. It must make sure that the reader and the writer of the pipe are in step and to do this it uses locks, wait queues, and signals.

When the writer wants to write to the pipe it uses the standard write library functions. These all pass file descriptors that are indices into the process’s set of file data structures, each one representing an open file, or, as in this case, an open pipe. The operating system call uses the write routine pointed at by

the file data structure describing this pipe, which in turn uses information held in the inode representing the pipe to manage the write request.

If there is enough room to write all of the bytes into the pipe then, so long as the pipe is not locked by its reader, the operating system locks it for the writer and copies the bytes to be written from the process’s address space into the shared data page. If the pipe is locked by the reader, or if there is not enough room for the data, then the current process is made to sleep in the pipe inode’s wait queue and the scheduler is called so that another process can run. It is interruptible, so it can receive signals and will be woken by the reader when there is enough room for the write data, or when the pipe is unlocked. When the data have been written, the pipe’s inode is unlocked and any waiting readers sleeping in the wait queue of the inode will themselves be woken up. Reading data from the pipe is a very similar process to writing to it.

Some operating systems also support named pipes, also known as FIFOs because pipes operate on a first in, first out principle; the first data written into the pipe are the first read from it. Unlike pipes, FIFOs are not temporary objects; they are entities in the file system and can be created using system commands. Processes are free to use a FIFO so long as they have appropriate access rights to it. The way that FIFOs are opened is a little different from pipes. A pipe (its two file data structures, its inode, and the shared data page) is created in one go whereas a FIFO already exists and is opened and closed by its users. It must handle readers opening the FIFO before writers open it, as well as readers reading before any writers have written to it. That aside, FIFOs are handled almost exactly the same way as pipes and they use the same data structures and operations.