Optical Storage Systems

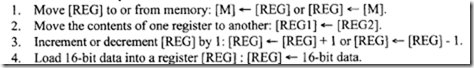

Introduction

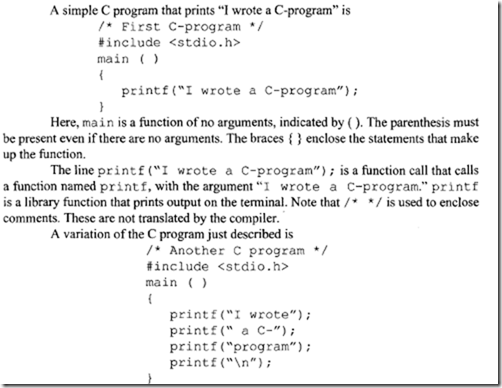

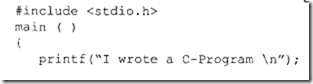

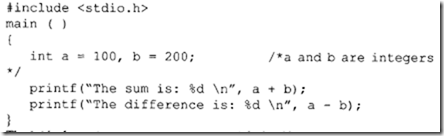

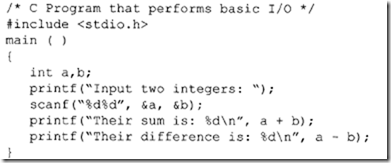

Recordable optical disk drive technology provides a well-matched solution to the increasing demands for removable storage. An optical disk drive provides, in a sense, infinite storage capacity: extra storage space is easily acquired by using additional media cartridges, which are relatively inexpensive. Such cost-effective storage is welcome in storage-intensive modern computer applications such as desktop publishing, computer-aided design/computer-aided manufacturing (CAD/CAM), and multimedia authoring.

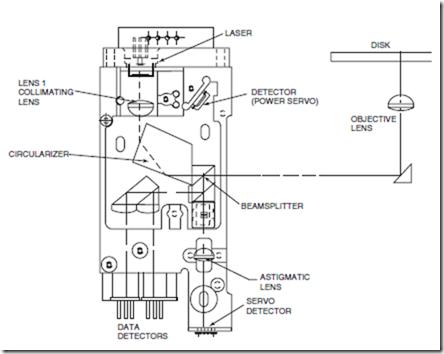

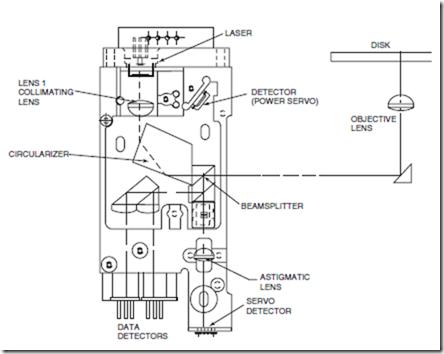

The purposes of the optical head are to transmit the laser beam to the optical disk, to focus the laser beam to a diffraction-limited spot, and to transmit the readout signal information from the optical disk to the data and servo detectors.

The laser diode is a key component in optical storage, whether the recording technology is magneto-optic, ablative WORM, or phase change. Early generations of optical drives used infrared lasers emitting at 780 nm or 830 nm. Later generations of drives use red lasers emitting at around 690 nm. The lasers are typically rated for a maximum continuous output power in the 40-mW range and are index guided to ensure good wavefront quality.

In a laser diode, light is emitted from the facets, which are the cleaved ends of the waveguide region of an index-guided laser. The facet dimensions are small enough (on the order of a few micrometers) for diffraction to take place as light leaves the facet. As a result, the output beam has a significant divergence angle. In many commercial laser diodes, the width of the facet (i.e., its dimension parallel to the pn-junction plane) is much larger than its height (its dimension perpendicular to the junction plane), which causes the divergence angles to be unequal in the directions parallel and perpendicular to the laser junction. The spatial profile of the laser beam some distance from the laser is thus elliptical.

FIGURE 25.1 Drawing of the optical head in a typical magneto-optic disk drive. Shown is a split-optics design, which consists of a fixed set of components (the laser, detectors, and polarizing optics) and a movable system consisting of a beam bender and an objective lens that focuses the light on the disk. (Source: Asthana, P. 1994. Laser Focus World, Jan., p. 75. Penwell Publishing Co., Nashua, N.H. Used by permission.)

The basic layout of the optical head of a magneto-optic drive is shown in Fig. 25.1. The laser is mounted in a heat sink designed to achieve an athermal response. The output from the laser diode is collimated by lens 1. A prismlike optical element called a circularizer is then used to reduce the ellipticity of the laser beam. The beam then passes through a polarizing beamsplitter, which reflects part (30%) of the beam toward a detector and transmits the rest toward the disk.

The output of the laser is linearly polarized in the direction parallel to the junction (referred to here as P-polarization). The ratio of the intensity of the P-polarized component of the emitted light to that of the S-polarized component exceeds 25:1. The polarizing beamsplitter is designed to transmit 70% of the P-polarized light and none of the S-polarized light, which it reflects entirely. The reflected light is incident on a detector that is part of a power servoloop designed to keep the laser at constant power. Without a power servoloop, the laser power would fluctuate with time as the laser junction heats up, which can adversely affect read performance.
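The effect of the power servoloop can be illustrated with a toy simulation. This is a minimal sketch, not a drive's actual control law: it assumes a linear power-current curve whose threshold current rises exponentially toward a new value as the junction heats, and a discrete proportional-integral controller acting on the signal from the monitor detector, which receives the 30% of the light reflected by the beamsplitter. The slope efficiency, threshold drift, thermal time constant, and controller gains are all hypothetical values.

```python
import math

# Hypothetical laser and loop parameters (illustrative only).
SLOPE_MW_PER_MA = 0.8      # slope efficiency of the power-current curve
ITH0_MA = 40.0             # threshold current of the cold laser
ITH_RISE_MA = 8.0          # eventual threshold increase as the junction heats
THERMAL_TAU_STEPS = 300.0  # thermal time constant, in control-loop steps
MONITOR_FRACTION = 0.30    # share of the output reflected to the monitor detector
KP, KI = 0.5, 0.05         # proportional and integral gains (mA per monitor mW)


def laser_power_mw(current_ma: float, step: int) -> float:
    """Linear power-current curve whose threshold drifts up as the laser warms."""
    ith = ITH0_MA + ITH_RISE_MA * (1.0 - math.exp(-step / THERMAL_TAU_STEPS))
    return max(0.0, SLOPE_MW_PER_MA * (current_ma - ith))


def run(target_mw: float = 30.0, steps: int = 2000, closed_loop: bool = True) -> float:
    """Return the laser output power (mW) after `steps` control-loop iterations."""
    feedforward_ma = ITH0_MA + target_mw / SLOPE_MW_PER_MA  # exact for a cold laser
    current_ma, integral, power = feedforward_ma, 0.0, 0.0
    for step in range(steps):
        power = laser_power_mw(current_ma, step)
        if closed_loop:
            # The loop only sees the monitor detector's share of the light.
            error = MONITOR_FRACTION * (target_mw - power)
            integral += error
            current_ma = feedforward_ma + KP * error + KI * integral
    return power


print(f"open loop:   {run(closed_loop=False):.2f} mW")
print(f"closed loop: {run(closed_loop=True):.2f} mW")
```

With these made-up numbers the open-loop power sags by more than 6 mW as the threshold rises, while the integral term pulls the closed-loop power back to within a small fraction of a milliwatt of the 30-mW setpoint.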

The beam transmitted by the beamsplitter travels to a turning (90°) mirror, called a beam bender, which is mounted on a movable actuator. During track-seeking operations, this actuator can move radially across the disk. The beam reflected by the turning mirror is incident on an objective lens (also mounted on the actuator), which focuses the light on the disk. This type of optical head design, in which the laser, the detectors, and most of the optical components are stationary while the objective lens and beam bender are movable, is called a split-optics design. In early optical drive designs, the entire optical head was mounted on an actuator and moved during seeking operations. The resulting mass on the actuator led to slow seek times (about 200 ms). A split-optics design, which is possible because coherent light can be made highly collimated, lowers the mass on the actuator and thus allows much faster seek times.

The size of the focal spot formed by the objective lens on the disk depends on the numerical aperture (NA) of the lens and the amount of overfill of the lens (i.e., the amount by which the diameter of the incident collimated beam exceeds the aperture of the objective lens). The numerical aperture is given by

$$\mathrm{NA} = n \sin\theta_{\max},$$

in which n is the refractive index of the medium on the focus side of the lens (usually air) and θmax is the angle that a ray focused through the margin of the lens makes with the optical axis. The beam of light incident on the objective lens usually has a Gaussian electric field (or intensity) profile. The profile of the focused spot is set by diffraction of this beam at the lens aperture: the focal-plane field is the Fourier transform of the incident field truncated by the aperture (Goodman, 1968). Overfilling the lens aperture reduces the size of the focused spot at the cost of losing optical energy outside the aperture and increasing the size of the side lobes. Optimization of the amount of overfill (Marchant, 1990) yields an approximate spot diameter of

in which λ is the wavelength of light and NA is the numerical aperture of the objective lens. The depth of focus z of the focal spot is given by

$$z = \frac{0.8\,\lambda}{(\mathrm{NA})^2}.$$
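The depth-of-focus expression can be evaluated numerically to show how quickly the focus tolerance tightens as NA grows. The sketch below simply implements NA = n sin θmax and z = 0.8λ/NA²; the wavelengths are the ones mentioned earlier, while the NA values and the 35° marginal-ray angle are illustrative choices, and the lens is assumed to work in air (n = 1).

```python
import math


def numerical_aperture(n: float, theta_max_deg: float) -> float:
    """NA = n * sin(theta_max), with theta_max in degrees."""
    return n * math.sin(math.radians(theta_max_deg))


def depth_of_focus_um(wavelength_nm: float, na: float) -> float:
    """Depth of focus z = 0.8 * lambda / NA**2, returned in micrometers."""
    return 0.8 * (wavelength_nm / 1000.0) / na ** 2


# A lens in air (n = 1) whose marginal ray is at 35 degrees has NA ~ 0.57.
print(f"NA = {numerical_aperture(1.0, 35.0):.2f}")
for wavelength in (830, 780, 690):
    for na in (0.50, 0.55, 0.60):
        z = depth_of_focus_um(wavelength, na)
        print(f"{wavelength} nm, NA {na:.2f}: z = {z:.2f} um")
```

Because z scales as 1/NA² while a diffraction-limited spot shrinks only as 1/NA, going from NA 0.50 to 0.60 cuts the depth of focus by about 30% (a factor of (0.5/0.6)² ≈ 0.69) for any wavelength.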

The depth of focus defines the accuracy with which the objective lens position must be held with respect to the disk surface. The smaller the depth of focus, the less tolerance the system has for media tilt and the more difficult the job of the focus servosystem. Because the depth of focus shrinks as 1/NA² while the spot diameter shrinks only as 1/NA, reducing the spot size (always a goal, as a smaller spot allows a higher storage density) by increasing the NA of the lens becomes impractical beyond an NA of about 0.6. The objective lens also acts as a collector lens for the light reflected from the disk. This reflected light, used by the servosystems and during reading, contains the readout information. The reflected light follows the incident path back to the fixed optical element. Beamsplitter 1 reflects all of the S-polarized light and 30% of the P-polarized light toward the servo and data detectors.

The portion of the light that is transmitted by the beamsplitter is, unfortunately, focused by the collimating lens back into the facet of the laser. This feedback light causes a number of problems in the laser, even though the net amount of feedback does not exceed about 7% of the output light for most magneto-optic media. Optical feedback causes the laser to mode hop randomly, which results in random amplitude fluctuations in the output. This amplitude noise can be a serious problem, so techniques such as injection of high-frequency current, known as high-frequency modulation (HFM), must be used to control it (Arimoto, 1986). Increasing the HFM current generally decreases the noise, but as a practical matter the injection current cannot be made arbitrarily large, because it may then violate limits on allowable radiation from computer accessories. Optical feedback also decreases the threshold and increases the slope of the power-current (PI) curve of the laser, but these effects are usually not a problem.

The light reflected by beamsplitter 1 is further split by beamsplitter 2 into servo and data components. The light reflected by beamsplitter 2 is incident on the data detectors. For magneto-optic readback, two detectors are used in a technique known as differential detection (i.e., the difference between the signals from the two detectors is taken). The light transmitted through beamsplitter 2 is incident on a special multielement servo detector and is used to generate the servo signals. The mechanism by which these signals are generated is the subject of the following discussion of the servosystem.

The Servosystem

The servosystem enables the focused laser spot to be positioned accurately on any of the tracks on the disk and ensures that it can be moved to any track as required. The high track densities (18,000 tracks/in, or a track pitch of about 1.4 µm) on optical disks require that the laser spot position be controlled to within a fraction of a micrometer. Moving across the entire disk surface requires a large actuator, but such an actuator is too massive to respond quickly to the rapid changes in track position (due to disk runout) as the disk spins. Therefore, a compound actuator consisting of a coarse actuator and a fine actuator is used to control the radial position of the laser beam on the disk. The fine actuator, which has a very low mass, can change the spot position rapidly over a limited range. The coarse actuator has a slower response but a much wider range of motion and is used for long seek operations. Optical disks have a continuous spiral groove (as in a phonograph record) to provide information on the relative track location.
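The division of labor between the two actuators can be sketched with an idealized discrete-time model. This is an illustration under strong simplifying assumptions, not a drive's servo design: both actuators respond instantly, the fine actuator's travel is clamped to a hypothetical ±50 µm, and the coarse actuator is driven by a fraction of the fine actuator's displacement so that it slowly re-centers the fine actuator (one common way to coordinate a dual-stage actuator; the text does not say which scheme drives use).

```python
import math


def track_compound(targets_um, fine_range_um=50.0, coarse_gain=0.05):
    """Idealized compound actuator; returns (coarse, fine, error) per sample."""
    coarse = 0.0
    log = []
    for target in targets_um:
        # Fine actuator: fast, but its travel is limited to +/- fine_range_um.
        fine = max(-fine_range_um, min(fine_range_um, target - coarse))
        error = target - (coarse + fine)
        log.append((coarse, fine, error))
        # Coarse actuator: slow and wide-ranging, it moves to re-center the fine one.
        coarse += coarse_gain * fine
    return log


# Hypothetical scenario: a 1000-um seek, then +/-20 um of sinusoidal runout
# sampled 50 times per revolution.
targets = [1000.0 + 20.0 * math.sin(2 * math.pi * k / 50) for k in range(1500)]
log = track_compound(targets)
coarse, fine, error = log[-1]
print(f"coarse = {coarse:.1f} um, fine = {fine:.1f} um, error = {error:.1e} um")
```

During the long seek the fine actuator sits at its limit while the coarse actuator does the traveling; once the target is within reach, the fine actuator absorbs the once-around runout and the coarse actuator follows only its slow average.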

In addition to tracking and seeking, the laser spot in an optical drive must be kept in perfect focus on the disk regardless of the motion of the disk (there can be quite a lot of vertical motion if the disk has tilt or is slightly warped). To do this, the objective lens must be constantly adjusted to correct for the axial motion of the disk surface as the media spins. The lens position is controlled by a focus servomechanism. Figure 25.2 shows a block diagram of an optical drive servocontrol system (combined tracking and focusing). The return beam contains information on the focus and position of the spot, which is processed by the servo detectors. The feedback signals derived from the detectors allow the system to maintain control of the beam.

The focus control system requires a feedback signal that accurately indicates the degree and direction of focus error (Braat and Bouwhuis, 1978; Earman, 1982). To generate a focus error signal, an astigmatic lens focuses a portion of the light reflected from the disk onto a quadrant detector. In perfect focus, the spot on the detector is circular and its light is equally distributed on the four elements of the quad detector (as shown in Fig. 25.3). If the objective lens is not in focus, however, the spot on the detector becomes elliptical because of the astigmatism of the lens, with its long axis along one diagonal or the other depending on the direction of the focus error. The resulting unequal distribution of light on the detector quadrants generates a focus error signal (FES).

This signal is normalized with respect to light level to make it independent of laser power and disk reflectivity. The focus actuator typically consists of an objective lens positioned by a small linear voice coil motor.
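The astigmatic focus error signal and its normalization can be written in a few lines. The sketch assumes the four quadrants are labeled A, B, C, D in order around the detector, so that A and C, and B and D, are diagonal pairs; the elliptical spot of an out-of-focus beam then favors one diagonal pair or the other. The labeling and the sign convention are assumptions, not taken from a particular drive.

```python
def focus_error_signal(a: float, b: float, c: float, d: float) -> float:
    """Normalized astigmatic FES from quadrant currents A, B, C, D.

    A/C and B/D are diagonal pairs. The difference of the diagonal sums gives
    the direction and degree of defocus; dividing by the total removes the
    dependence on laser power and disk reflectivity.
    """
    total = a + b + c + d
    if total <= 0.0:
        raise ValueError("no light on the quadrant detector")
    return ((a + c) - (b + d)) / total


print(focus_error_signal(1.0, 1.0, 1.0, 1.0))  # in focus: circular spot, FES = 0
print(focus_error_signal(2.0, 1.0, 2.0, 1.0))  # spot elongated along A-C diagonal
print(focus_error_signal(4.0, 2.0, 4.0, 2.0))  # same defocus, twice the light
```

The last two calls return the same value, which is the point of the normalization: a change in laser power or disk reflectivity does not masquerade as a focus error.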

The coils are preferably mounted with the lens to reduce moving mass, while the permanent magnets are stationary. The lens can be supported by either a bobbin on a sliding pin or elastic flexures. The critical factors in the design are range of motion, acceleration, freedom from resonances, and thermal considerations.

Once the spot is focused on the active surface, it must find and maintain position along the desired track. This is the role of the tracking servo. The same quadrant detector that is used to generate the focus signal can be used to generate the tracking error signal (TES). The beam returning from the disk contains first-order diffraction components from the grooves; their interference with the undiffracted beam depends on the position of the spot relative to the tracks and shifts light between the two halves of the quadrant detector on either side of one of its axes. The TES is the normalized difference of the currents from the two halves of the detector, and it peaks when the spot passes over the edge between a land and a groove (Mansuripur, 1987; Braat and Bouwhuis, 1978).
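A push-pull tracking error signal can be sketched the same way. Here the two detector halves are taken as A+B and C+D (quadrants labeled in order around the detector, an assumed convention), and the light shifted between halves is modeled as proportional to sin(2πx/p), where x is the radial offset of the spot and p the track pitch. That sinusoidal model and its 0.3 modulation depth are simplifying assumptions; the pitch follows from the 18,000 tracks/in quoted for the servosystem.

```python
import math

TRACK_PITCH_UM = 25_400.0 / 18_000.0  # 18,000 tracks/in -> about 1.41 um


def tracking_error_signal(a: float, b: float, c: float, d: float) -> float:
    """Normalized difference between the two detector halves (A+B vs C+D)."""
    total = a + b + c + d
    if total <= 0.0:
        raise ValueError("no light on the quadrant detector")
    return ((a + b) - (c + d)) / total


def quadrant_currents(offset_um: float, depth: float = 0.3,
                      pitch_um: float = TRACK_PITCH_UM) -> tuple[float, ...]:
    """Toy model: diffraction moves light between halves as sin(2*pi*x/p)."""
    shift = depth * math.sin(2.0 * math.pi * offset_um / pitch_um)
    half_1, half_2 = 0.5 * (1.0 + shift), 0.5 * (1.0 - shift)
    return half_1 / 2, half_1 / 2, half_2 / 2, half_2 / 2


for fraction in (0.0, 0.125, 0.25, 0.375, 0.5):
    tes = tracking_error_signal(*quadrant_currents(fraction * TRACK_PITCH_UM))
    print(f"offset = {fraction:.3f} pitch -> TES = {tes:+.3f}")
```

In this model the TES is zero on track center, changes sign with the direction of the offset, and reaches its extreme a quarter pitch away, which is the land/groove edge when lands and grooves are equally wide.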

As a matter of terminology, the seek time usually refers to the actual move time of the actuator. The time to get to data, however, is called the access time, which includes the latency of the spinning disk in addition to the seek time. For example, a drive spinning a disk at 3600 rpm has an average latency of 0.5 × (60/3600) s, or about 8 ms. Thus, a 3600-rpm drive with a seek time of 30 ms will have an access time of about 38 ms. The standard way to measure seek time is as the time it takes the actuator to cover one-third of its full stroke (i.e., one-third of the disk). This is a historical artifact from the days of early hard disk drives.

FIGURE 25.3 Focus control system: (a) the quad detector, (b) the spot of light focused on the quad by the astigmatic lens (circular implies objective lens is in focus), and (c) the spot of light on the quad when the objective lens is out of focus.
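The latency arithmetic generalizes directly: average rotational latency is the time for half a revolution, and access time is the seek time plus that latency. A minimal sketch, with illustrative function names:

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half a revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def access_time_ms(rpm: float, seek_ms: float) -> float:
    """Access time = actuator seek time + average rotational latency."""
    return seek_ms + rotational_latency_ms(rpm)


print(f"latency at 3600 rpm: {rotational_latency_ms(3600):.1f} ms")   # 8.3 ms
print(f"access time, 30-ms seek: {access_time_ms(3600, 30):.1f} ms")  # 38.3 ms
```

Doubling the spindle speed to 7200 rpm would halve the latency to about 4.2 ms, which is why faster spindles shorten access time even when the actuator is unchanged.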

The ability to accurately follow the radial and axial motions of the spinning disk results directly from the quality of the focus and tracking actuators. To reject the errors due to shock, vibration, and media runout, the servosystem must have high bandwidth. The limitation to achieving high bandwidth is usually the resonance modes of the actuator. As the actuators are reduced in size, the frequencies of the resonances become higher and the achievable bandwidth of the system rises. Servosystems in optical drives face additional challenges because they have to handle removable media, which has variations between different disks (such as media tilt).

Optical Recording and Read Channel

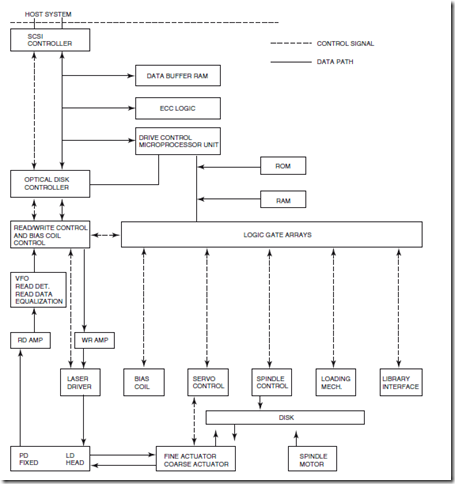

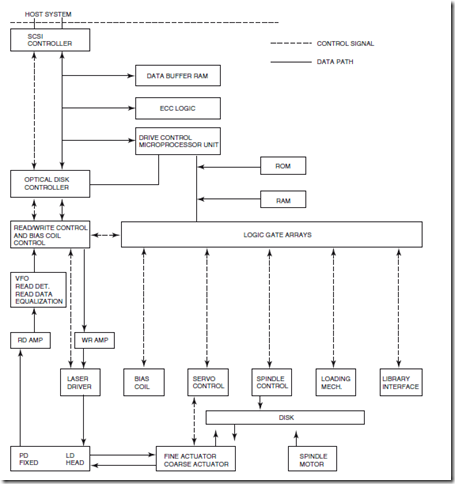

A schematic block diagram of the functions in an optical drive is shown in Fig. 25.4. The SCSI controller handles the flow of information to and from the host (including commands). The optical disk controller is a key controller of the data path. It interprets the commands from the SCSI controller and channels data appropriately through the buffer random access memory (RAM) to the write channel, or from the read channel to the output. The drive control microprocessor unit controls, through the logic gate arrays, all the functions of the optical drive including the servo control, spindle motor, actuators, laser driver, etc.

Data that are input to the drive over the SCSI interface for recording are first broken up into blocks of fixed size (for example, 512 or 1024 bytes long) and then stored in the data buffer RAM. Magneto-optic, phase-change, and WORM drives can be classified as fixed block architecture technologies, in which data blocks are recorded much as in hard drives (Marchant, 1990). Blocks of data can be placed anywhere on the disk in any sequence. The current CD-recordable drive, on the other hand, is an example of a non-fixed block architecture (because its roots are in CD audio). In common CD-R drives, input data are recorded sequentially (like a tape recorder) and can be of any continuous length.

Error correction code (ECC) bytes are added to each block of data. Optical drives use Reed–Solomon codes, which are able to reduce the error rate from 1E-5 to about 1E-13 (Golomb, 1986). After the addition of ECC information, the data are encoded with run-length-limited (RLL) modulation codes (Tarzaiski, 1983; Treves and Bloomberg, 1986) in order to increase efficiency and improve detection. Special characters, such as a synchronization character that marks where the data begin, are inserted in the bit stream. All in all, the overhead required to store customer data is about 20%.
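To make the idea of an RLL code concrete, the sketch below implements the rate-1/2 (2,7) code, one widely cited RLL code: in the channel bit stream, every 1 is separated from the next by at least 2 and at most 7 zeros. The text does not say which RLL code a given drive uses, so treat the choice of (2,7) as an illustrative assumption. Data words are parsed greedily; because they form a complete prefix code, any input parses except for a trailing fragment, which a real encoder would pad.

```python
# Rate-1/2 (2,7) RLL code: variable-length data words map to channel words twice as long.
RLL27_ENCODE = {
    "10": "0100",
    "11": "1000",
    "000": "000100",
    "010": "100100",
    "011": "001000",
    "0010": "00100100",
    "0011": "00001000",
}
RLL27_DECODE = {code: data for data, code in RLL27_ENCODE.items()}


def rll27_encode(data: str) -> str:
    """Encode a string of '0'/'1' data bits into (2,7) channel bits."""
    out, i = [], 0
    while i < len(data):
        for n in (2, 3, 4):  # data words are prefix-free, so at most one matches
            word = data[i:i + n]
            if len(word) == n and word in RLL27_ENCODE:
                out.append(RLL27_ENCODE[word])
                i += n
                break
        else:
            raise ValueError(f"incomplete data word at bit {i}; pad the input")
    return "".join(out)


def rll27_decode(channel: str) -> str:
    """Invert rll27_encode by matching the (prefix-free) channel words."""
    out, i = [], 0
    while i < len(channel):
        for n in (4, 6, 8):
            word = channel[i:i + n]
            if len(word) == n and word in RLL27_DECODE:
                out.append(RLL27_DECODE[word])
                i += n
                break
        else:
            raise ValueError(f"invalid channel word at bit {i}")
    return "".join(out)


def satisfies_dk(bits: str, d: int, k: int) -> bool:
    """True if every run of 0s between consecutive 1s has length in [d, k]."""
    ones = [i for i, bit in enumerate(bits) if bit == "1"]
    return all(d <= b - a - 1 <= k for a, b in zip(ones, ones[1:]))


channel = rll27_encode("1011000010")
print(channel)                      # 01001000000100100100
print(satisfies_dk(channel, 2, 7))  # True
print(rll27_decode(channel))        # 1011000010
```

The d = 2 constraint keeps transitions far enough apart to limit intersymbol interference, while k = 7 guarantees transitions often enough for the read clock to stay synchronized; the price is that each data bit costs two channel bits.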

In most current optical drives, recording is based on pulse position modulation (PPM) techniques. In basic PPM recording, the presence of a mark signifies a one and the absence signifies a zero bit. Thus, a 1001 bit sequence would be recorded as mark-space-space-mark. In pulse width modulation (PWM) recording, the edges of the mark represent the one bits (Sukeda et al., 1987). Thus a 1001 bit sequence would be recorded as a single mark of a certain size. A longer bit sequence such as 100001 would be represented by a longer mark, hence the term pulse width modulation. The use of pulse width modulation allows a higher linear density of recording than pulse position modulation.
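The difference between the two schemes can be expressed as a mapping from bits to mark intervals. In this simplified sketch, positions are measured in bit cells, a PPM mark is drawn as filling its bit cell, and a PWM mark runs from one 1-bit edge to the next; real mark shapes and write strategies are more complicated.

```python
def ppm_marks(bits: str) -> list[tuple[int, int]]:
    """PPM: each 1 bit is a mark occupying its own bit cell, as (start, end)."""
    return [(i, i + 1) for i, bit in enumerate(bits) if bit == "1"]


def pwm_marks(bits: str) -> list[tuple[int, int]]:
    """PWM: each 1 bit is a mark edge; a mark runs from one edge to the next."""
    edges = [i for i, bit in enumerate(bits) if bit == "1"]
    if len(edges) % 2:           # a mark still open at the end: close it there
        edges.append(len(bits))
    return list(zip(edges[0::2], edges[1::2]))


print(ppm_marks("1001"))    # [(0, 1), (3, 4)]  mark-space-space-mark
print(pwm_marks("1001"))    # [(0, 3)]          one mark whose edges are the 1s
print(pwm_marks("100001"))  # [(0, 5)]          a longer mark
```

Because PWM encodes information in both edges of each mark, the same number of marks carries more bits, which is the source of its higher linear density.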

A comparison of PWM recording with the current technique of PPM is shown in Fig. 25.5. It is more difficult to implement PWM technology (a more complicated channel is required), and PWM writing has greater sensitivity to thermal effects. Thus implementing PWM is a challenging task for drive vendors.

On readback, the light reflects from the disk and travels to a photodetector (or two detectors in the case of magneto-optic drives). For WORM, phase-change, and CD-R disks, the signal is intensity modulated and thus can be converted directly to a current by the detectors and amplified prior to processing. These disks have a high contrast ratio between mark and no-mark areas and thus provide a good signal-to-noise ratio. In magneto-optic drives, the read signal reflected off the disk is not intensity modulated but polarization modulated. Thus polarization optics and the technique of differential detection must be used to convert the polarization modulation to intensity modulation (as is discussed later in the section on magneto-optic recording).

FIGURE 25.4 A functional block diagram of an optical disk drive describing the key electrical functions and interfaces. Some of the abbreviations are: VFO is the variable frequency oscillator, which is used to synchronize the data; RD amp is the read signal amplifier; WR amp is the write signal amplifier; PD fixed is the photodetector assembly; and LD head is the laser diode.

To extract the 1s and 0s from the noisy analog signal derived from the photodetectors, optical drives use a number of techniques, such as equalization, which boosts the high frequencies and thus provides greater discrimination between marks. Using an analog-to-digital converter, the analog data signal is converted into channel bits. The channel bits are converted back into customer data bytes using essentially the reverse of the encoding process. The data are clocked into the decoder, which removes the modulation code. The remaining special characters are removed from the data, which are then fed into the ECC alignment buffer to correct any errors (up to 40 bytes long). Once data have been read from the disk, they are stored in a RAM buffer and then sent over the SCSI interface to the host. With this basic understanding of how data are recorded on a spinning disk, we can turn to specific items, such as recording physics, that delineate the various recording technologies.

FIGURE 25.5 Schematic showing the mark spacing for PPM and PWM recording. The PPM recording is used in most current optical drives. The PWM recording increases capacity by as much as 50% and will be used in almost all forthcoming writable optical drives. PWM recording, however, requires much tighter tolerances than PPM recording.
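Equalization can be illustrated with about the simplest high-frequency boost filter: a symmetric three-tap FIR filter whose taps sum to 1, so slowly varying signal levels pass unchanged while rapid transitions are amplified. The tap value alpha and the edge handling (repeating the end samples) are assumptions for illustration; drive read channels use their own, more elaborate equalizers.

```python
def equalize(samples: list[float], alpha: float = 0.25) -> list[float]:
    """Apply the 3-tap FIR filter (-alpha, 1 + 2*alpha, -alpha).

    DC gain is 1; a signal alternating every sample is boosted by 1 + 4*alpha.
    The first and last samples are repeated past the ends of the input.
    """
    out = []
    for n, x in enumerate(samples):
        prev = samples[n - 1] if n > 0 else x
        nxt = samples[n + 1] if n + 1 < len(samples) else x
        out.append(-alpha * prev + (1 + 2 * alpha) * x - alpha * nxt)
    return out


print(equalize([1.0, 1.0, 1.0, 1.0]))               # flat level passes unchanged
print(equalize([1.0, -1.0, 1.0, -1.0, 1.0, -1.0]))  # interior samples become +/-2
```

With alpha = 0.25 the highest-frequency pattern is doubled in amplitude, which widens the gap between closely spaced marks; the cost is that high-frequency noise is boosted by the same factor.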

Phase-Change Recording

Phase-change recording takes advantage of the fact that certain materials can exist in multiple metastable (i.e., normally stable) phases, typically amorphous and crystalline, each of which has different optical properties (such as reflectivity).

Thermal energy (as supplied by the focused beam of a high-power laser) above some threshold can be used to switch from one metastable state to another (Ovshinsky, 1970; Takenaga et al., 1983). Energy below the switching threshold should have no effect. In this way a low-power focused spot can be used to read out the recorded information without affecting it.

To achieve such multiple metastable states, phase-change materials are typically a mixture of several elements, such as germanium, antimony, and tellurium (Ge2Sb2Te5). Phase-change materials are available that are suited for either rewritable or write-once recording. In an erasable material, recording is effected by melting the material under the focused spot and then cooling it quickly enough to freeze it in an amorphous phase. Rapid cooling is critical, and thus the heat-sinking design of the medium is important.

Erasure of the phase-change material is achieved by an annealing process, that is, heating the material to just below the melting point for a long enough period to recrystallise the material and erase any amorphous marks.
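The write, erase, and read behavior described above amounts to a threshold rule, which the toy model below captures. It is a deliberately crude sketch: the temperature thresholds and minimum anneal time are hypothetical round numbers, cooling after a melt is assumed to be fast enough to quench the material into the amorphous phase, and real materials depend on the full thermal history rather than on a peak temperature and a duration.

```python
# Hypothetical thresholds for a rewritable phase-change film (illustrative only).
MELT_C = 600.0         # above this the spot melts; fast cooling leaves it amorphous
CRYSTALLIZE_C = 170.0  # between this and MELT_C the film can anneal
MIN_ANNEAL_NS = 50.0   # an anneal must last at least this long to recrystallize


def apply_pulse(phase: str, peak_temp_c: float, duration_ns: float) -> str:
    """Return the phase of a spot after a laser pulse of given peak temperature."""
    if peak_temp_c >= MELT_C:
        return "amorphous"      # write: melt, then quench (fast cooling assumed)
    if peak_temp_c >= CRYSTALLIZE_C and duration_ns >= MIN_ANNEAL_NS:
        return "crystalline"    # erase: anneal just below the melting point
    return phase                # read: below threshold, state unchanged


spot = "crystalline"
spot = apply_pulse(spot, 650.0, 20.0)    # short high-power write pulse
print(spot)                              # amorphous
spot = apply_pulse(spot, 60.0, 1000.0)   # low-power read
print(spot)                              # amorphous
spot = apply_pulse(spot, 450.0, 100.0)   # longer medium-power erase pulse
print(spot)                              # crystalline
```

The model makes the asymmetry explicit: writing needs a short, hot pulse, while erasing needs a cooler pulse held long enough for the atoms to reorder.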

The fact that phase-change materials are a mixture of several elements makes cyclability difficult to achieve. The melting and annealing processes increase phase segregation and thus reduce the number of cycles that can be achieved. Early phase-change materials could achieve only a few thousand cycles, which is one of the primary reasons they were passed over by many companies in favor of magneto-optic products when rewritable drives were being introduced. However, the cyclability of phase-change materials has increased substantially.

The advantage of phase-change recording over magneto-optic recording is that a simpler head design is possible (since no bias magnet and fewer polarizing optics are needed). The disadvantages include the fact

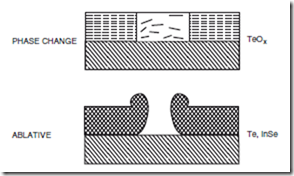

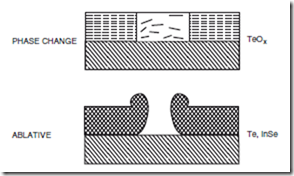

FIGURE 25.6 Permanent WORM recording provides the highest level of data security available in a removable storage device. In ablative WORM recording, marks are physically burned into the material. In phase-change worm, the recording process is a physical change in the material, which results in a change in reflectivity.

that there is less standardization support for the phase-change format, and fewer companies produce the drives. For the consumer this means there is less interchange with phase-change than with magneto-optic.

WORM Technology

The earliest writable optical drives were, in fact, WORM drives, and although the rewritable drive is more popular for daily storage needs, the WORM technology has a clear place in data storage because it allows permanent archiving capability.

There are a number of different types of write-once technologies that are found in commercial products. Ablative WORM disks consist of tellurium-based alloys. Writing of data is accomplished by using a high-powered laser to burn a hole in the material (Kivits et al., 1982). A second type of WORM material is what is known as textured material, such as in a moth's eye pattern. The actual material is usually a platinum film. Writing is accomplished by melting the textured film to a smooth film and thus changing the reflectivity. Phase-change technology provides a third type of WORM technology using materials such as tellurium oxide. In the writing process, amorphous (dark) material is converted to crystalline (light) material by application of the heat from a focused laser beam (Wrobel et al., 1982). The change cannot be reversed. A comparison of phase-change and ablative WORM recording is shown schematically in Fig. 25.6.

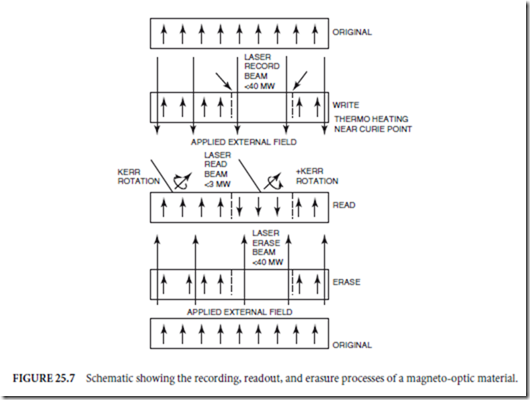

Magneto-Optic Technology

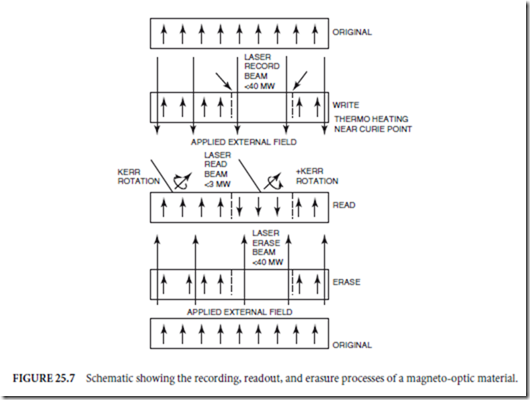

In a magneto-optic drive, data recording is achieved through a thermomagnetic process (Mayer, 1958), also known as Curie point writing as it relies on the threshold properties of the Curie temperature of magnetic materials. In this process, the energy within the focused optical spot heats the recording material past its Curie point (about 200°C), a threshold above which the magnetic domains of the material are susceptible to moderate (about 300 G) external magnetic fields. Application of an external magnetic field is used to set the state of the magnetization vector (which represents the polarization of the magnetic domains) in the heated region to either up (a one bit) or down (a zero bit). When the material is cooled to below the Curie point, this orientation of the magnetic domains is fixed. An illustration of the magneto-optic recording process is given in Fig. 25.7. This recording cycle has been shown to be highly repeatable (>1 million cycles) in any given region without degradation of the material. This is an important aspect if the material is to be claimed as fully rewritable.

In any practical recording process, it is necessary to have a sharp threshold for recording. This ensures the stability of the recorded information both under environmental conditions and during readout. Thermomagnetic recording is an extremely stable process. Unless heated to high temperatures (>100°C), the magnetic domains in magneto-optic recording films are not affected by fields under several kilogauss in strength (in comparison, the information stored on a magnetic floppy is affected by magnetic fields as low as 100 G). The coercivity of a magneto-optic material remains high until very close to the Curie temperature. Near the Curie temperature (about 200°C), the coercivity rapidly drops by two or three orders of magnitude as the magnetic domain structure becomes disordered.

Readout of the recorded information can safely be achieved with a laser beam of about 2-mW power at the disk, a power level which is high enough to provide good signal strength at the detectors, but low enough not to affect the recorded information because any media heating from it is far below the Curie threshold. During readout, the magnetic state of the recorded bits of information is sensed through the polar Kerr effect by a low-power linearly polarized readout beam. In this effect the plane of polarization of the light beam is rotated slightly (0.5°) by the magnetic vector. The direction of rotation, which defines whether the bit is a one or a zero, is detected by the readout detectors and channel. Although the tiny amount of Kerr rotation results in a very small amount of signal modulation riding on a large DC bias, the technique of differential detection permits acceptable signal-to-noise ratio (SNR) to be achieved.

The output signal in an MO recording system is the signal from the light falling on one detector minus the signal from the light falling on the other detector. By placing a polarizing beamsplitter at 45° to the incident polarization, the two data detectors get the signals (Mansuripur, 1982)

in which d1 and d2 refer to the detector signals, I0 is the incident intensity, and θk/2, the rotation angle, is assumed to be small. The readout signal is taken as

As can be seen, this signal does not contain intensity noise from either the laser or from reflectivity variations of the disk. But the signal is very sensitive to polarization noise, which may be introduced by polarization-sensitive diffraction, by substrate birefringence effects, by inhomogeneities in the magneto-optic films, or by other polarization-sensitive components.
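The benefit of differential detection can be checked numerically with an idealized model. The sketch assumes a perfect analyzer oriented at 45° to the incident polarization, a polarization rotation of ±θk/2 for the two magnetization states (the convention used above), and Malus's law for the power reaching each detector; the 0.5° rotation is the figure quoted for the Kerr effect, while the 5% laser-power fluctuation is hypothetical.

```python
import math


def detector_signals(i0: float, theta_k: float) -> tuple[float, float]:
    """Ideal powers on the two data detectors behind a 45-degree analyzer.

    The readout polarization is rotated by theta_k / 2 (radians; the sign
    encodes the bit). By Malus's law d1, d2 = i0 * cos^2(45 deg -/+ theta_k/2),
    which equals (i0 / 2) * (1 +/- sin(theta_k)).
    """
    half = theta_k / 2.0
    d1 = i0 * math.cos(math.pi / 4 - half) ** 2
    d2 = i0 * math.cos(math.pi / 4 + half) ** 2
    return d1, d2


theta_k = math.radians(2 * 0.5)   # 0.5-degree rotation, so theta_k = 1 degree
for bit, sign in (("1", +1), ("0", -1)):
    for i0 in (1.0, 1.05):        # nominal and +5% laser power
        d1, d2 = detector_signals(i0, sign * theta_k)
        print(f"bit {bit}, I0 = {i0:.2f}: d1 = {d1:.4f}, d2 = {d2:.4f}, "
              f"d1 - d2 = {d1 - d2:+.4f}")
```

Each detector alone sees a DC level near I0/2 with a bit-dependent swing of only I0 sin θk ≈ 0.017 I0, so a 5% power change (about 0.025 I0 on each detector) would swamp the data. In the difference the DC level cancels, and the same power change alters d1 − d2 by only 5% of its own small value.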

Early MO recording media, such as manganese bismuth (MnBi) thin films (Chen et al., 1968), were generally crystalline in nature. The magnetic domains followed the crystalline boundaries and thus were irregular in shape (Marchant, 1990). The crystalline nature of the films caused optical scattering of the readout signal, and the irregular domains led to noise in the recorded signal. The combination degraded the SNR sufficiently to make polycrystalline magneto-optic media impractical.

The discovery in 1976 of magneto-optic materials based on rare earth/transition metal (RE/TM) alloys (Choudhari et al., 1976) provided a practical material system for rewritable magneto-optic recording. These materials are amorphous and thus allow an acceptable signal-to-noise ratio to be obtained. Most commercial magneto-optic films today are based on terbium iron cobalt (TbFeCo).

Compact Disk-Recordable (CD-R)

CD-R, the writable version of the popular CD, looks very much like a stamped CD-ROM disk and can be played in most CD-ROM players.

One of the reasons CD-audio and CD-ROM have become so successful is the strict, uniform standards to which all of the manufacturers of these products have adhered. The standards were drawn up by Philips and Sony and are known by the colors of the books in which they were first printed. The standards describing CD-audio are found in the Red Book, those describing CD-ROM in the Yellow Book, and those describing CD-R in the Orange Book. These books describe the physical attributes that the disks must meet (such as reflectivity and track pitch), as well as the layout of recorded data (Bouwhuis et al., 1985).

In prerecorded CD-ROM disks, the information is stamped as low reflectivity pits on a high reflectivity background. The disk has a reflectivity of 70% (achieved by using an aluminum layer), whereas the pits have a reflectivity of 30% (these reflectivity specifications have been defined in the Red Book). The CD-ROM drive uses this difference in reflectivity to sense the information stamped on the disk. To be compatible with CD-ROM readers, a CD-R disk must also use this reflectivity difference when recording data. To accomplish this, a CD-R disk is coated with an organic polymer that can change its local reflectivity permanently upon sufficient heating by a laser spot. The structure of a CD-R disk is shown in Fig. 25.8.

When the organic dye polymer is locally heated by the focused spot of a laser beam, polymeric bonds are broken or altered, resulting in a change in the complex refractive index within the region. This refractive index change results in a change in the material reflectivity. About half a dozen organic dye polymers are in commercial use; two examples are phthalocyanine and polymethine cyanine.

Like CD-ROM drives, CD-R drives have relatively low performance (when compared with other optical drives or hard drives). The seek times are on the order of a few hundred milliseconds, whereas the maximum data rate for a 4X speed drive is about 600 kilobytes/s. The seek time is slow because CD-R drives spin the disks in constant linear velocity (CLV) mode as defined in the Red Book standards. Constant linear velocity means that the disk rotation speed varies with the radius at which the read head is positioned in such a way as to keep the linear velocity constant with radius.

Pure data devices such as hard disks and optical WORM drives, however, can handle constant angular velocity (CAV) operation in which the linear velocity and data rate increase with radial position. The disadvantage of CLV operation is that it results in a very slow access time. When a seek of the optical head is performed, the motor speed has to be adjusted according to the radial position of the head. This takes time and thus lengthens the seek operation to 250–300 ms. In contrast, constant angular velocity devices like optical WORM disks have seek times on the order of 40 ms.
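The spindle-speed penalty of CLV operation follows from the relation v = 2πr × (revolutions per second). The sketch below assumes CD-like values that the text does not give: a 1X scanning velocity of 1.3 m/s and a program area running from about 25 mm to 58 mm radius. Under those assumptions a full-stroke CLV seek requires the spindle to change speed by a factor of 58/25 ≈ 2.3, whereas a CAV drive keeps its rpm fixed and lets the linear velocity (and data rate) rise with radius.

```python
import math


def clv_rpm(linear_velocity_m_s: float, radius_m: float) -> float:
    """Spindle speed (rpm) that gives the required linear velocity at a radius."""
    return linear_velocity_m_s / (2.0 * math.pi * radius_m) * 60.0


def cav_linear_velocity_m_s(rpm: float, radius_m: float) -> float:
    """Linear velocity (m/s) at a radius for a disk spinning at constant rpm."""
    return 2.0 * math.pi * radius_m * rpm / 60.0


V_1X = 1.3                 # assumed 1X CD scanning velocity, m/s
for r_mm in (25, 40, 58):  # assumed inner, middle, and outer radii of the data area
    r = r_mm / 1000.0
    print(f"r = {r_mm} mm: CLV at 4X needs {clv_rpm(4 * V_1X, r):5.0f} rpm; "
          f"CAV at 3600 rpm gives {cav_linear_velocity_m_s(3600, r):5.1f} m/s")
```

Every long CLV seek therefore ends with the spindle motor accelerating or braking by a large fraction of its speed before data can be read, which is the delay the text attributes to CLV.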

The roots of CD-R are found in audio CD, and thus some of the parameters, recording formats, and performance features are based on the Red Book standard (which has tended to be a handicap). The CD format (Bouwhuis et al., 1985) is not well suited to random-access, block-oriented recording; it leads to a sequential recording system, much like a tape recorder.

Recording Modes of CD-R

To understand the attributes and limitations of CD-R, it is important to understand the various recording modes that it can operate in. For fixed block architecture devices, the question of recording modes never comes up as there is only one mode, but in CD-R, there are four modes (Erlanger, 1994).

The four recording methods in CD-R drives are disk-at-once, multisession, track-at-once, and packet recording.

In disk-at-once recording, only one recording session is allowed on the disk, whether it fills the whole disk or just a fraction of it. The data area in a single-session disk consists of a lead-in track, the data field, and a lead-out track. The lead-in track contains information such as the table of contents (TOC). The lead-in and lead-out are particularly important for interchange with CD-ROM drives. In single-session writing, once the lead-in and lead-out areas are written, the disk is considered finalized and further recording cannot take place (even if there are blank areas on the disk). After the disk is finalized, it can be played back on a CD-ROM player (which needs the lead-in and lead-out tracks to be present just to read the disk).

Having only the capability of recording a single session can be quite a limitation, and so the concept of multisession recording was introduced. An early proponent of multisession recording was Kodak, which wanted multisession capability for its Photo CD products. In multisession recording, each session is recorded with its own lead-in and lead-out areas. Multisession disks can be played back in CD-ROM drives that are marked multisession compatible (assuming that each session on the disk has been finalized with lead-in and lead-out areas). Unfortunately, the lead-in and lead-out areas for each session take up a great deal of overhead (about 15 megabytes). With this kind of overhead, the maximum number of sessions that can be recorded on a 650-megabyte disk is 45.
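The session ceiling is a simple division of disk capacity by per-session overhead. The helper below is a hypothetical illustration; it shows that the result is sensitive to the overhead figure, since exactly 15 MB per session gives 43 sessions, while the quoted 45 corresponds to an overhead nearer 14.4 MB.

```python
def max_sessions(capacity_mb: float, overhead_mb: float, data_mb_per_session: float = 0.0) -> int:
    """Upper bound on sessions: each one costs its lead-in/lead-out overhead plus its own data."""
    per_session = overhead_mb + data_mb_per_session
    return int(capacity_mb // per_session)

print(max_sessions(650, 15))       # 43: overhead of exactly 15 MB, no user data
print(max_sessions(650, 14.4))     # 45: overhead of about 14.4 MB, no user data
print(max_sessions(650, 15, 10))   # 26: each session also carries 10 MB of data
```

The "ultimate maximum" assumes sessions that hold essentially no data; any real data per session lowers the count further, as the last line shows.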

Rather than do multisession recording, the user may choose track-at-once recording. In this type of recording, a number of tracks (which could represent distinct instances of writing) could be written within each session. The maximum number of tracks that can be written on the whole disk is 99. However, the disk or session must be finalized before it can be read on a CD-ROM drive.

Because of the way input data are encoded and spread out, it is imperative to maintain a constant stream of information when recording. If there is an interruption in the data stream, it affects the whole file being recorded (not just a sector as in MO or WORM drives). If the interruption is long enough, it will cause a blank region on the disk and will usually lead to the disk being rendered useless.
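The need for an unbroken data stream can be illustrated with a toy buffer model. This is a minimal sketch under assumed numbers: one-second ticks, a 2x write speed of 300 KB/s, and a 1024 KB drive buffer that starts full. The function name and parameters are hypothetical; real drives manage their buffers in firmware.

```python
def first_underrun(host_kb_per_tick, write_kb_per_tick, buffer_kb, prefill_kb):
    """Return the tick at which the drive buffer runs dry, or None if recording completes.

    host_kb_per_tick: data the host delivers during each tick (0 while the host is busy)
    write_kb_per_tick: constant amount the drive must record every tick once writing starts
    """
    level = min(prefill_kb, buffer_kb)
    for tick, delivered in enumerate(host_kb_per_tick):
        level = min(buffer_kb, level + delivered)  # the host can only fill free buffer space
        if level < write_kb_per_tick:
            return tick  # the data stream to the laser is interrupted
        level -= write_kb_per_tick
    return None

# Assumed figures: 1-second ticks, 2x writing at 300 KB/s, a 1024 KB buffer that starts full.
short_stall = [300] * 3 + [0] * 2 + [300] * 3
long_stall = [300] * 3 + [0] * 5 + [300] * 3
print(first_underrun(short_stall, 300, 1024, 1024))  # None: the buffer rides out the stall
print(first_underrun(long_stall, 300, 1024, 1024))   # 5: the buffer empties during the stall
```

A two-second host stall is absorbed by the buffer, but a five-second stall empties it and interrupts the write, which on CD-R damages the whole file being recorded.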

Many of these problems or inconveniences can be alleviated through a recording method called packet recording. In packet recording, the input data are broken up into packets of a specified size (for example, 128 kilobytes or 1 megabyte). Each packet consists of a link block, four run-in blocks, the data area, and two run-out blocks. The run-in and run-out blocks help delineate packets and allow some room for stitching, that is, they provide some space for overlap if perfect synchronization is not achieved when an adjacent packet is recorded in a different CD-R drive.
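The packet structure fixes seven framing blocks (one link, four run-in, two run-out) per packet, so the packet size determines how much of the disk goes to user data. This sketch assumes 2048 bytes of user data per block (the CD-ROM Mode 1 figure, which this section does not state); the function name is illustrative.

```python
LINK_BLOCKS = 1
RUN_IN_BLOCKS = 4
RUN_OUT_BLOCKS = 2
USER_BYTES_PER_BLOCK = 2048  # assumption: CD-ROM Mode 1 user data per block

def packet_layout(packet_bytes: int) -> dict:
    """Count data and total blocks for one packet and the fraction used by user data."""
    data_blocks = -(-packet_bytes // USER_BYTES_PER_BLOCK)  # ceiling division
    total = LINK_BLOCKS + RUN_IN_BLOCKS + data_blocks + RUN_OUT_BLOCKS
    return {"data_blocks": data_blocks, "total_blocks": total, "efficiency": data_blocks / total}

for size in (128 * 1024, 1024 * 1024):
    layout = packet_layout(size)
    print(f"{size // 1024} KB packet: {layout['data_blocks']} data blocks in "
          f"{layout['total_blocks']} total ({layout['efficiency']:.1%} efficient)")
```

Under these assumptions a 128-kilobyte packet is 64 data blocks in 71 total (about 90% efficient) and a 1-megabyte packet is 512 in 519 (about 99%). Larger packets waste less space on framing but need a larger drive buffer to hold a whole packet.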

Packet recording has several advantages. To begin with, there is no limit to the number of packets that can be recorded (up to the space available on the disk, of course), and so the limitations imposed by track-at-once, multisession, or disk-at-once recording can be avoided. Also, if the packet size is smaller than the drive buffer size (as is likely to be the case), a dedicated hard drive is not needed while recording. Once a packet has been transferred to the drive buffer, the computer can disengage and do other tasks while the CD-R drive performs the recording operation.

With the advent of packet recording, CD-R technology becomes much more flexible than in the past and thus more attractive as a general-purpose removable data storage device. It can be used for backup purposes as well as for the storage of smaller files. However, there is a problem of interchange with CD-ROM players. Some CD-ROM players cannot read a packet-written CD-R disk because they post a hard error when they encounter the link block at the beginning of each packet. Packet-written CD-R disks can be read on CD-R drives and on CD-ROM drives that are packet enabled.

Optical Disk Systems

Disks

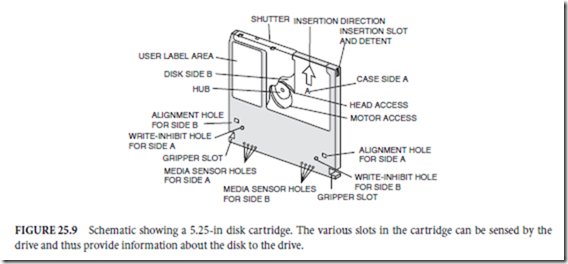

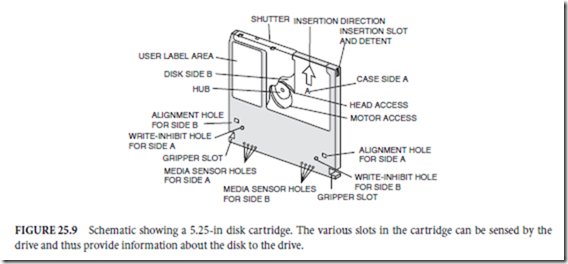

An important component of the optical storage system is, of course, the media cartridge. In fact, the greatest attraction of optical storage is that the storage media is removable and easily transported, much like a floppy disk. Most of the writable media that is available comes in cartridges of either 3.5- or 5.25-in. form factors. An example of the 5.25-in. cartridge is shown in Fig. 25.9. The cartridge has sensing holes that allow the drive to recognize its media type easily. The tracks on the media are arranged in a spiral. The first block of data is recorded on the innermost track (i.e., near the center). Recordable CD media is cartridgeless and is played in CD-R/CD-ROM drives either using a caddy or through tray loading (like audio CD players).

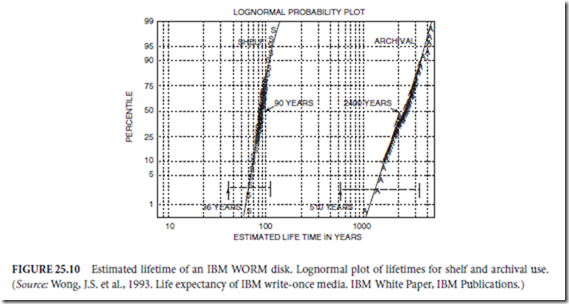

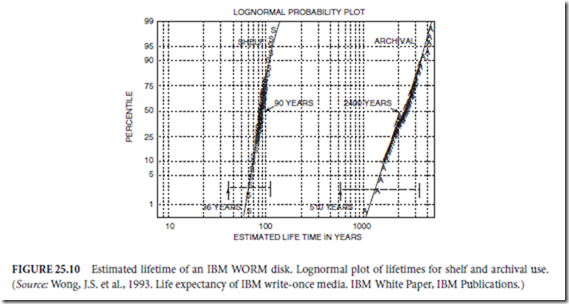

Cartridged media has been designed to have a long shelf and archival life (without special and expensive environmental control) and to be robust enough to survive the rigors of robotic jukeboxes and customer handling. Magneto-optic and WORM media are extremely stable, and so data can be left on the media with great confidence (Okino, 1987). A chart showing projected lifetimes (after accelerated aging) of IBM WORM media is given in Fig. 25.10, which indicates that the lifetimes for 97.5% of the media surfaces for shelf and archival use are projected to exceed 36 and 510 years, respectively, for storage at 30°C/80% relative humidity. Shelf life is the length of time that data can be effectively written, whereas archival life is the length of time that data can be effectively read.

Optical media is perhaps the most stable digital storage technology: magnetic drives are prone to head crashes, tape deteriorates or suffers from print through (in which information on one layer is transferred to another layer in the tightly wound tape coil), and paper or microfiche also deteriorate with time (Rothenberg, 1995).

Automated Optical Storage Systems

Optical drives can be extended to automated storage systems, which are essentially jukeboxes consisting of one or more optical drives and a large number of optical disk cartridges. Optical libraries can provide on-line, direct-access, high-capacity storage.

Applications of Optical Library Systems

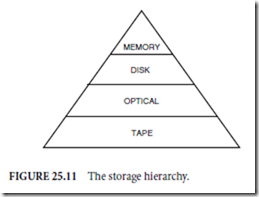

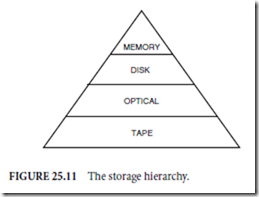

Optical library systems fit well into a large computer-based environment, such as client-server systems, peer-to-peer local area networks, or mainframe-based systems. In such environments, there is a distinct storage hierarchy based on the cost/access trade-off of various types of data. This hierarchy is shown schematically in Fig. 25.11 as a pyramid. The highest section of the pyramid contains the highest performance and highest cost type of memory. The least expensive storage on a cost-per-megabyte basis, and also the lowest in performance, is tape. Optical libraries occupy an important place in this hierarchy because they provide storage with performance approaching that of magnetic disk, but at a cost approaching that of tape.

An optical library contains a cartridge transport mechanism called an autochanger. The autochanger moves optical cartridges between an input/output slot (through which cartridges can be inserted into the library), the drives (where the cartridges are read or written), and the cartridge storage cells.

Sophisticated storage systems provide a hierarchical storage management capability in which data are automatically migrated between the various layers of the pyramid depending on the access needs of the data. For example, a telephone company can keep current billing information on magnetic storage, whereas billing information older than a month can be stored in optical libraries, and information older than six months can be stored on tape. It does not make sense to keep data that the system does not frequently access on expensive storage systems such as semiconductor memory or high-performance magnetic drives.
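The telephone-billing example amounts to an age-based migration policy. The sketch below encodes it directly; the 30-day and 182-day cutoffs are assumptions standing in for "a month" and "six months", the record IDs are hypothetical, and a real hierarchical storage manager would also consider how often data is accessed, as the text notes.

```python
from datetime import date

# Assumed cutoffs approximating "a month" and "six months" from the billing example.
MAGNETIC_MAX_DAYS = 30
OPTICAL_MAX_DAYS = 182

def storage_tier(record_date: date, today: date) -> str:
    """Return the pyramid layer a billing record should live on, based only on its age."""
    age_days = (today - record_date).days
    if age_days < MAGNETIC_MAX_DAYS:
        return "magnetic disk"
    if age_days < OPTICAL_MAX_DAYS:
        return "optical library"
    return "tape"

def plan_migrations(records: dict, today: date) -> dict:
    """Map each record ID to its target tier; an HSM would then move records whose tier changed."""
    return {rid: storage_tier(d, today) for rid, d in records.items()}

today = date(1995, 7, 1)
print(plan_migrations({"bill-A": date(1995, 6, 20),
                       "bill-B": date(1995, 3, 1),
                       "bill-C": date(1994, 11, 15)}, today))
```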

One of the applications for which automated optical storage is ideally suited is document imaging. Document imaging directly addresses the substantial paper management problem in society today.

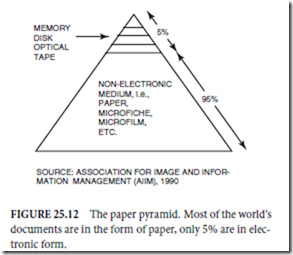

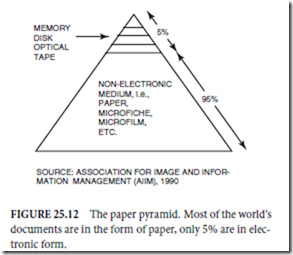

The way we store and manage paper documents has not changed significantly in over a century, and largely does not take advantage of the widespread availability of computers in the workplace. Trying to retrieve old paper documents is one of the most inefficient of business activities today (some businesses have stockrooms full of filing cabinets or document boxes). The way information is currently stored can be represented by a pyramid (as shown in Fig. 25.12), in which paper accounts for almost all of the document storage.

An application aimed squarely at re-engineering the way we handle and store documents is document imaging. In document imaging, documents are scanned into the computer and stored as computer-readable documents. Storing documents in computer format has many advantages, including rapid access to the information. Recall of the stored documents is as easy as typing in a few key search words. Document imaging, however, is very storage intensive and, thus, a high-capacity, low-cost storage technology is required, ideally with fast random access. Optical storage is now recognized as the optimal storage technology in document imaging. Optical libraries can accommodate the huge capacities required to store large numbers of document images (text and/or photos), and optical drives can provide random access to any of the stored images. Figure 25.13 illustrates the equivalent storage capacity of a single 1.3 gigabyte optical disk.
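The capacity of a 1.3 gigabyte disk in document terms can be estimated by dividing disk capacity by the size of one stored page image. Page sizes vary widely with scan resolution, color depth, and compression, so the two per-page figures below are assumptions chosen only to show the arithmetic.

```python
def pages_per_disk(capacity_bytes: int, bytes_per_page: int) -> int:
    """Number of whole page images that fit on one disk."""
    return capacity_bytes // bytes_per_page

DISK_BYTES = 1_300_000_000  # the 1.3 GB disk from the text, taking 1 GB = 10**9 bytes

# Hypothetical per-page sizes; real values depend on scan resolution and compression.
ASSUMED_PAGE_SIZES = {
    "compressed black-and-white text page": 50_000,
    "grayscale photo page": 500_000,
}

for kind, page_bytes in ASSUMED_PAGE_SIZES.items():
    print(f"{kind}: about {pages_per_disk(DISK_BYTES, page_bytes):,} pages")
```

Under these assumptions one disk holds on the order of 26,000 compressed text pages, or a tenth as many photo pages.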

Future Technology and Improvements in Optical Storage

Optical drives and libraries, like any high-technology products, will see two types of improvement processes. The first is an incremental improvement process in which quality and functionality will continuously improve. The second is a more dramatic improvement process in which disk capacity and drive performance will improve in distinct steps every few years.

For the library, the improvements will be in the speed of the automated picker mechanism as well as the implementation of more sophisticated algorithms for data and cartridge management. Most of the improvements in the library systems will arise out of improvements in the drives themselves.

For the optical drive, the incremental improvement process will concentrate on the four core technology elements: the laser, the media, the recording channel, and the optomechanics. The laser will see continual improvements in its beam quality (reduction of wavefront aberrations and astigmatism), lifetime (which will allow drive makers to offer longer warranty periods), and power (which will allow disks to spin faster). The media will see improvements in substrates (reduction of tilt and birefringence), active layers (improved sensitivity), and passivation (increased lifetimes). The optics and actuator system will see improvements in the servosystem to allow finer positioning, reductions in noise, smaller optical components, and lighter actuators for faster seek operations. The recording channel and electronics will see an increase in the level of electronics integration, a reduction in electronic noise, the use of lower power electronics, and better signal processing and ECC to improve data reliability. One of the paramount directions for improvement will be in the continuous reduction of cost of the drives and media. This step is necessary to increase the penetration of optical drives in the marketplace.

In terms of radical improvements, there is a considerable amount of technical growth that is possible for optical drives. The two primary directions for future work on optical drives are (1) increasing capacity and (2) improving performance specifications (such as data rate and seek time). The techniques to achieve these are shown schematically in Fig. 25.14.

The performance improvements will include spinning the disk at much higher revolutions per minute to get higher data rates, radically improving the seek times for faster access to data, and implementing direct overwrite and immediate verify techniques to reduce the number of passes required when recording. Finally, the use of parallel recording (simultaneous recording or readback of more than one track), either through the use of multielement lasers or through special optics, will improve the data rates significantly.
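The data-rate improvements above follow from a simple relation for a disk spinning at constant angular velocity: user data rate equals revolutions per second times bytes per track times the number of tracks accessed in parallel. The 17-sector, 1024-byte track and the spindle speeds below are hypothetical, and the formula ignores formatting overhead and seek time.

```python
def data_rate_bytes_per_s(rpm: float, bytes_per_track: int, parallel_tracks: int = 1) -> float:
    """User data rate for CAV operation: revolutions/s x bytes per track x tracks accessed at once."""
    return rpm / 60 * bytes_per_track * parallel_tracks

BYTES_PER_TRACK = 17 * 1024  # hypothetical track of 17 sectors of 1024 bytes

base = data_rate_bytes_per_s(3600, BYTES_PER_TRACK)
faster_spin = data_rate_bytes_per_s(7200, BYTES_PER_TRACK)
parallel = data_rate_bytes_per_s(3600, BYTES_PER_TRACK, parallel_tracks=2)
print(f"{base / 1e6:.2f} MB/s baseline, {faster_spin / 1e6:.2f} MB/s at double speed, "
      f"{parallel / 1e6:.2f} MB/s with two tracks in parallel")
```

Doubling the spindle speed and reading two tracks at once each double the rate; the two approaches can also be combined.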

In optical disk products, higher capacities can only be achieved by higher areal densities since the size of the disk cannot increase from presently established standard sizes. There are a number of techniques that are being considered for improving the storage capacity of optical drives. These include

- Use of shorter wavelength lasers or superresolution techniques to achieve smaller spot sizes

- Putting the data tracks closer together for greater areal density

- Reducing the sensitivity to focus misregistration (FMR) (i.e., misfocus) and tracking misregistration (TMR) (i.e., when the focused spot is not centered on the track)

- Reducing the sensitivity to media tilt
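The first two capacity techniques can be quantified with a standard optics rule of thumb that this section does not spell out: the focused spot diameter scales as wavelength divided by numerical aperture (NA), so if track pitch and mark length both shrink in proportion to the spot, areal density scales as the inverse square of the spot size. The 780 nm and 690 nm wavelengths are the drive generations mentioned earlier in the chapter; the NA values and track pitches are hypothetical.

```python
def spot_density_gain(wavelength_old_nm: float, wavelength_new_nm: float,
                      na_old: float = 1.0, na_new: float = 1.0) -> float:
    """Areal-density gain if spot size scales as wavelength/NA and track pitch and
    mark length both shrink in proportion to the spot."""
    spot_old = wavelength_old_nm / na_old
    spot_new = wavelength_new_nm / na_new
    return (spot_old / spot_new) ** 2

def track_pitch_density_gain(pitch_old_um: float, pitch_new_um: float) -> float:
    """Gain from narrowing the track pitch alone, with mark length unchanged."""
    return pitch_old_um / pitch_new_um

# 780 nm -> 690 nm laser at an unchanged (assumed) numerical aperture.
print(f"{spot_density_gain(780, 690):.3f}x from the shorter wavelength")
# Hypothetical pitch reduction from 1.6 um to 1.4 um.
print(f"{track_pitch_density_gain(1.6, 1.4):.3f}x from closer tracks")
```

The wavelength change alone gives roughly a 28% density increase in this model; raising the NA would add to it, at the cost of greater sensitivity to focus errors and media tilt, which is why the last two items in the list matter.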

Finally, improvements in the read channel, such as the use of partial-response maximum-likelihood (PRML) detection, will enable marks in high-density recording to be detected in the presence of noise.
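To show what maximum-likelihood detection adds, here is a minimal Viterbi detector for a partial-response channel. The choices are assumptions for illustration, not the read channel of any particular optical drive: a PR4 target (1 - D²), bipolar ±1 write symbols, a channel that starts from a known run of -1 symbols, and a squared-error branch metric suited to additive noise.

```python
import itertools

def pr_channel(bits, target):
    """Noise-free PR output y_k = sum_i target[i] * a_(k-i), with a = +1 for bit 1, -1 for bit 0.
    Symbols before k = 0 are assumed to be -1."""
    a = [2 * b - 1 for b in bits]
    out = []
    for k in range(len(a)):
        y = 0.0
        for i, h in enumerate(target):
            y += h * (a[k - i] if k - i >= 0 else -1)
        out.append(y)
    return out

def viterbi_pr(samples, target):
    """Maximum-likelihood bit sequence for noisy samples of a binary PR channel."""
    m = len(target) - 1                                   # channel memory
    states = list(itertools.product((-1, 1), repeat=m))   # last m symbols, newest first
    inf = float("inf")
    start = tuple([-1] * m)                               # matches the history in pr_channel
    metric = {s: (0.0 if s == start else inf) for s in states}
    paths = {start: []}
    for y in samples:
        new_metric = {s: inf for s in states}
        new_paths = {}
        for s in states:
            if metric[s] == inf:
                continue
            for a in (-1, 1):
                expected = target[0] * a + sum(h * s[i] for i, h in enumerate(target[1:]))
                cost = metric[s] + (y - expected) ** 2    # squared-error branch metric
                nxt = ((a,) + s)[:m]                      # shift the new symbol into the state
                if cost < new_metric[nxt]:
                    new_metric[nxt] = cost
                    new_paths[nxt] = paths[s] + [1 if a == 1 else 0]
        metric, paths = new_metric, new_paths
    best = min(metric, key=metric.get)
    return paths[best]

# PR4 target 1 - D^2, with a small deterministic perturbation standing in for noise.
PR4 = [1, 0, -1]
bits = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
noisy = [y + n for y, n in zip(pr_channel(bits, PR4), [0.2, -0.2] * 5)]
print(viterbi_pr(noisy, PR4) == bits)
```

In this model any two candidate bit sequences first differ by 2 in some sample, so small noise cannot make a wrong sequence look closer than the right one. A hardware detector would use a fixed traceback depth rather than storing every survivor path in full.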

Acknowledgments

The author is grateful to two IBM Tucson colleagues: Blair Finkelstein for discussions and suggestions on the read channel section and Alan Fennema for writing some of the paragraphs in the servo section.

Defining Terms

Compact disk erasable/compact disk recordable (CD-E/CD-R): These define the writable versions of the compact disk. CD-E is a rewritable media and CD-R is a write-once media.

Data block size: The data to be recorded on an optical disk is formatted into blocks of a minimum size. The standard block size, which defines a single sector of information, is 512 bytes; many DOS/Windows programs expect this block size. If the block size can be made larger, storage usage becomes more efficient. The next step up in block size is 1024 bytes, often used in Unix applications.

Device driver: This is a piece of software that enables the host computer to talk to the optical drive. Without this piece of software, you cannot attach an optical drive to a PC and expect it to work. As optical drives grow in popularity, the device driver will be incorporated in the operating system itself. For example, the device driver for CD-ROM drives is already embodied in current operating systems.

Error correction code (ECC): These are codes (patterns of data bits) that are added to raw data bits to enable detection and correction of errors. There are many types of error-correcting codes. Compact disk drives, for example, use cross-interleaved Reed–Solomon codes.

Magneto-optical media: An optical recording material in which marks are recorded using a thermomagnetic process. That is, the material is heated until its magnetic domains can be changed by the application of a modest magnetic field. This material is rewritable.

Optical jukebox: This is very similar to a traditional jukebox in concept. A large number of disks are contained in a jukebox and can be accessed at random for reading or writing.

Phase-change media: An optical recording material consisting of an alloy that has two metastable phases with different optical properties. Phase-change media can be rewritable or write-once.

Pulse-position modulation (PPM): A recording technique in which a mark on the disk signifies a binary 1 and its absence signifies a binary 0. A 1001 bit sequence is mark-space-space-mark.

Pulse-width modulation (PWM): A recording technique in which the edges of the mark represent the ones and the length of the mark represents the number of zeros. Thus, a 1001 sequence is represented by one mark.

Seek time/access time: The two terms are often used interchangeably, which is incorrect. The seek time is, by convention, defined as the length of time taken to seek across one-third of the full stroke of the actuator (which is from the inner data band to the outer data band on the disk). The access time is the seek time plus the time for the actuator to settle and for the disk to rotate the target sector under the focused spot (rotational latency). The access time really states how quickly you can get to data.

Servosystem: The mechanism, which through feedback and control, keeps the laser beam on track and in focus on the disk—no easy task on a disk spinning at 4000 rpm.

Tracking: The means by which the optical stylus (focused laser beam) is kept in the center of the data tracks on an optical disk.
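Two of the definitions above reduce to small calculations. The sketch below models PPM as one mark per 1 and PWM as NRZI-style toggling, where every 1 marks an edge; each bit cell is shown as 'M' (mark) or 'S' (space). The access-time helper assumes the common convention that average rotational latency is half a revolution; the 40 ms seek and 4000 rpm figures come from earlier in the text, and the 2 ms settling time is hypothetical.

```python
def encode_ppm(bits: str) -> str:
    """PPM: each 1 is written as a mark ('M'), each 0 as a space ('S')."""
    return "".join("M" if b == "1" else "S" for b in bits)

def encode_pwm(bits: str) -> str:
    """PWM modelled NRZI-style: each 1 toggles between mark and space, so 1s become mark edges."""
    level = "S"
    cells = []
    for b in bits:
        if b == "1":
            level = "M" if level == "S" else "S"
        cells.append(level)
    return "".join(cells)

def count_marks(cells: str) -> int:
    """Count maximal runs of 'M' cells, i.e. the number of physical marks."""
    return sum(1 for i, c in enumerate(cells) if c == "M" and (i == 0 or cells[i - 1] != "M"))

def access_time_ms(seek_ms: float, settle_ms: float, rpm: float) -> float:
    """Access time = seek + settle + average rotational latency (half a revolution, assumed)."""
    half_revolution_ms = 0.5 * 60_000 / rpm
    return seek_ms + settle_ms + half_revolution_ms

print(encode_ppm("1001"), count_marks(encode_ppm("1001")))  # MSSM 2
print(encode_pwm("1001"), count_marks(encode_pwm("1001")))  # MMMS 1
print(access_time_ms(40, 2, 4000))  # 49.5, with the hypothetical 2 ms settle
```

In this model the PWM version of 1001 is a single mark three cells long whose leading and trailing edges carry the two 1s, matching the definition, while PPM needs two marks. At 4000 rpm, rotational latency alone adds 7.5 ms on average to every access.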

References

Arimoto, A. et al. 1986. Optimum conditions for high frequency noise reduction method in optical videodisk players. Appl. Opt. 25(9):1.

Asthana, P. 1994. A long road to overnight success. IEEE Spectrum 31(10):60.

Bouwhuis, G., Braat, J., Huijser, A., Pasman, J., van Rosmalen, G., and Schouhamer Immink, K. 1985. Principles of Optical Disc Systems. Adam Hilger, Bristol, England, UK.

Braat, J. and Bouwhuis, G. 1978. Position sensing in video disk read-out. Appl. Opt. 17:2013.

Chen, D., Ready, J., and Bernal, G. 1968. MnBi thin films: Physical properties and memory applications. J. Appl. Phys. 39:3916.

Choudhari, P., Cuomo, J., Gambino, R., and McGuire, T. 1976. U.S. Patent #3,949,387.

Earman, A. 1982. Optical focus servo for optical disk mass data storage system application. SPIE Proc. 329:89.

Erlanger, L. 1994. Roll your own CD. PC Mag. (May 17):155.

Golomb, S. 1986. Optical disk error correction. BYTE (May):203.

Goodman, J. 1968. Introduction to Fourier Optics. McGraw-Hill, San Francisco.

Inoue, A. and Muramatsu, E. 1994. Wavelength dependency of CD-R. Proceedings of the Optical Data Storage Conference. Optical Society of America, May, p. 6.

Kivits, P., de Bont, R., Jacobs, B., and Zalm, P. 1982. The hole formation process in tellurium layers for optical data storage. Thin Solid Films 87:215.

Mansuripur, M., Connell, G., and Goodman, J.W. 1982. Signal and noise in magneto-optical readout. J. Appl. Phys. 53:4485.

Mansuripur, M. 1987. Analysis of astigmatic focusing and push-pull tracking error signals in magneto-optical disk systems. Appl. Opt. 26:3981.

Marchant, A. 1990. Optical Recording. Addison-Wesley, Reading, MA.

Mayer, L. 1958. Curie point writing on magnetic films. J. Appl. Phys. 29:1003.

Okino, Y. 1987. Reliability test of write-once optical disk. Japanese J. Appl. Phys. 26.

Ovshinsky, S. 1970. Method and apparatus for storing and retrieving information. U.S. Patent #3,530,441.

Rothenberg, J. 1995. Ensuring the longevity of digital documents. Sci. Am. (Jan.).

Sukeda, H., Ojima, M., Takahashi, M., and Maeda, T. 1987. High density magneto-optic disk using highly controlled pit-edge recording. Japanese J. Appl. Phys. 26:243.

Takenaga, M. et al. 1983. New optical erasable medium using tellurium suboxide thin film. SPIE Proc. 420:173.

Tarzaiski, R. 1983. Selection of 3f (1,7) code for improving packaging density on optical disk recorders. SPIE Proc. 421:113.

Treves, D. and Bloomberg, D. 1986. Signal, noise, and codes in optical memories. Optical Eng. 25:881.

Wrobel, J., Marchant, A., and Howe, D. 1982. Laser marking of thin organic films. Appl. Phys. Lett. 40:928.

Further Information

There are a number of excellent books that provide an overview of optical disk systems. A classic is Optical Recording by Alan Marchant (Addison-Wesley, Reading, MA, 1990), which provides an overview of the various types of recording as well as the basic functioning of an optical drive. A more detailed study of optical disk drives and their opto-mechanical aspects is provided in Principles of Optical Disc Systems by G. Bouwhuis, J. Braat, A. Huijser, J. Pasman, G. van Rosmalen, and K. Schouhamer Immink (Adam Hilger Ltd., Bristol, England, 1985). An extensive study of magneto-optical recording is presented in The Physical Properties of Magneto-Optical Recording by Masud Mansuripur (Cambridge University Press, London, 1994).

For recent developments in the field of optical storage, the reader is advised to attend the meetings of the International Symposium on Optical Memory (ISOM) or the Optical Data Storage (ODS) Conferences (held under the auspices of the IEEE or the Optical Society of America).

For information on optical storage systems and their applications, a good trade journal is the Computer Technology Review. Imaging Magazine usually has a number of good articles on the applications of optical libraries to document imaging, as well as periodic reviews of commercial optical products.

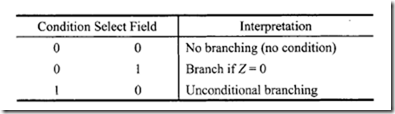

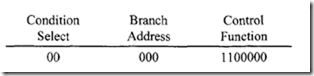

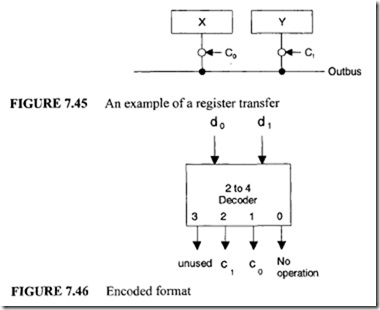

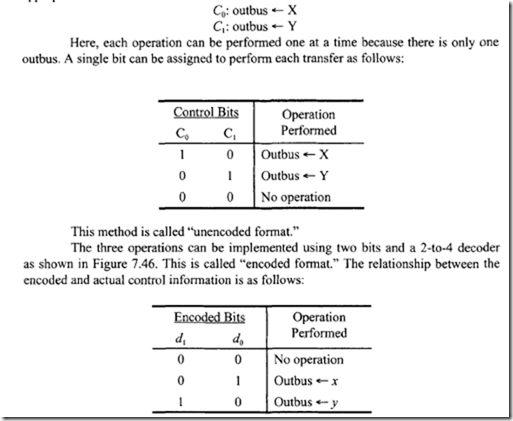

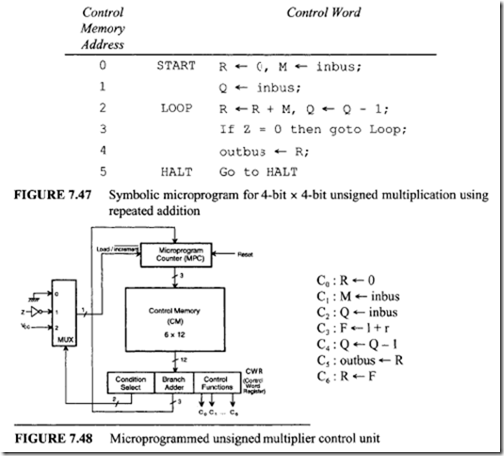

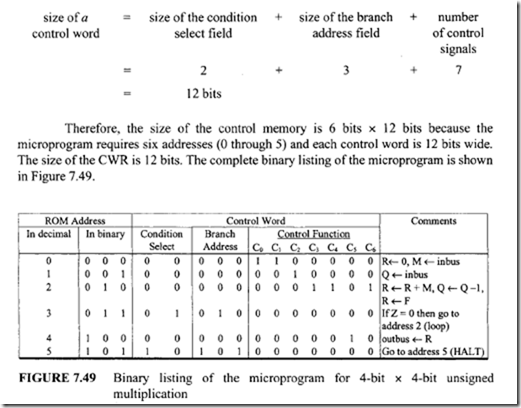

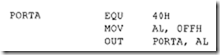

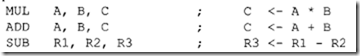

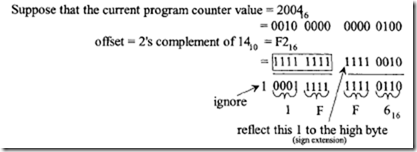

From Figure 7.47, six control memory addresses (addresses 0 through 5) are required to store the microprogram. Therefore, a 3-bit address is necessary for each microinstruction, and so three bits are required for the branch address field. From Figure 7.48, seven control signals (C0 through C6) are required; therefore, the control function field is 7 bits wide. Thus, the size of each control word can be determined as follows:

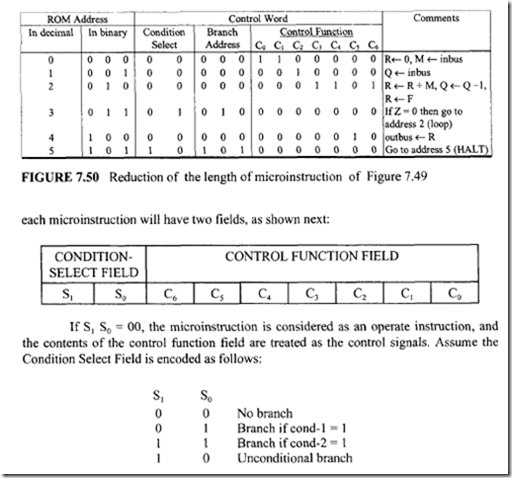

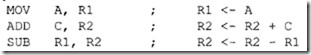

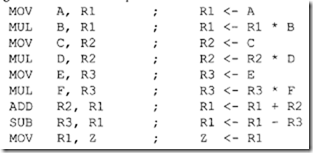
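The field-width reasoning can be checked with a few lines of code. The sketch assumes the control word consists of exactly three fields, takes the condition select field to be 2 bits wide because its code for Z = 0 is 01, and uses a hypothetical field order (condition select, control function, branch address), since the actual order is given only in the figure.

```python
import math

def field_width(num_values: int) -> int:
    """Bits needed to give each of num_values items a distinct binary code."""
    return max(1, math.ceil(math.log2(num_values)))

ADDRESS_BITS = field_width(6)   # control memory addresses 0 through 5 -> 3 bits
CONTROL_BITS = 7                # one bit per control signal, C0 through C6
CONDITION_BITS = 2              # assumption: inferred from the two-bit code 01 for Z = 0

def microinstruction(condition: str, control: str, branch_address: int) -> str:
    """Assemble a control word; the field order used here is hypothetical."""
    if len(condition) != CONDITION_BITS or len(control) != CONTROL_BITS:
        raise ValueError("field has the wrong width")
    if not 0 <= branch_address < 2 ** ADDRESS_BITS:
        raise ValueError("branch address out of range")
    return condition + control + format(branch_address, f"0{ADDRESS_BITS}b")

# "If Z = 0 then go to address 2": no control signals active, condition select 01.
word = microinstruction("01", "0" * CONTROL_BITS, 2)
print(len(word), word)
```

Under these assumptions each control word is 2 + 7 + 3 = 12 bits, and the branch address 2 appears as 010 in the last field.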


Next, consider the conditional branch instruction of Figure 7.49. This microinstruction implements the conditional instruction "If Z = 0 then go to address 2." In this case, the microinstruction does not have to activate any control signal of the control function field; therefore, C0 through C6 are zero. The condition select field is 01 because the condition is based on Z = 0. Also, if the condition is true (Z = 0), the program branches to address 2; therefore, the branch address field contains 010₂. Thus, the following binary microinstruction is obtained:

![image_thumb[1] image_thumb[1]](http://lh3.ggpht.com/-7vzW76NvbI8/VI8H5WgCjFI/AAAAAAAA8Sc/RJRpGnamLz8/image_thumb%25255B1%25255D_thumb.png?imgmax=800)

![image_thumb[2] image_thumb[2]](http://lh6.ggpht.com/-sv8bw9qeJ8s/VI8H8UpFuqI/AAAAAAAA8Ss/I7LzXSxcz-I/image_thumb%25255B2%25255D_thumb.png?imgmax=800)

![image_thumb[3] image_thumb[3]](http://lh4.ggpht.com/-_DjNmmoC72Q/VI8IAcKnVGI/AAAAAAAA8S8/ZlJUjrj-APk/image_thumb%25255B3%25255D_thumb.png?imgmax=800)

![image_thumb[4] image_thumb[4]](http://lh3.ggpht.com/-mlC44ycrNL4/VI8IDndxS2I/AAAAAAAA8TM/ixMNVthTTvE/image_thumb%25255B4%25255D_thumb.png?imgmax=800)

![image_thumb[5] image_thumb[5]](http://lh6.ggpht.com/-yuuLpC0Gtgc/VI8IG0tz9WI/AAAAAAAA8Tc/Rzav4YkjYv4/image_thumb%25255B5%25255D_thumb.png?imgmax=800)

![image_thumb[6] image_thumb[6]](http://lh3.ggpht.com/-vsEwAfisvDc/VI8IJz3YqVI/AAAAAAAA8Ts/J-MtUxsEaO8/image_thumb%25255B6%25255D_thumb.png?imgmax=800)

![image_thumb[7] image_thumb[7]](http://lh5.ggpht.com/-QKomt_BeIJA/VI8IS2AOjlI/AAAAAAAA8T8/1KY1IFo8Vss/image_thumb%25255B7%25255D_thumb.png?imgmax=800)

![image_thumb[8] image_thumb[8]](http://lh3.ggpht.com/-XqzG1b9oyKU/VI8IWE9LvKI/AAAAAAAA8UM/i41241CAB34/image_thumb%25255B8%25255D_thumb.png?imgmax=800)

![image_thumb[9] image_thumb[9]](http://lh4.ggpht.com/-D8bumeRZ500/VI8IZkAWuSI/AAAAAAAA8Uc/_9X2q4_5YXw/image_thumb%25255B9%25255D_thumb.png?imgmax=800)

![image_thumb[10] image_thumb[10]](http://lh5.ggpht.com/-V1VFQkOVkoM/VI8Icvb3NwI/AAAAAAAA8Us/KkNqeA3u8fY/image_thumb%25255B10%25255D_thumb.png?imgmax=800)

![image_thumb[1] image_thumb[1]](http://lh4.ggpht.com/-N1FLY7lv9gk/VI3PuPFmKPI/AAAAAAAA76k/xXFiAMsC_K0/image_thumb%25255B1%25255D_thumb.png?imgmax=800)

![image_thumb[2] image_thumb[2]](http://lh4.ggpht.com/-bTi4DTHHt6E/VI3P73b_1RI/AAAAAAAA760/zk1bfRBhGoo/image_thumb%25255B2%25255D_thumb.png?imgmax=800)

![image_thumb[3] image_thumb[3]](http://lh6.ggpht.com/-XHbicwYpzfU/VI3QJedPXjI/AAAAAAAA77I/TElwdln2fcs/image_thumb%25255B3%25255D_thumb.png?imgmax=800)

![image_thumb[4] image_thumb[4]](http://lh6.ggpht.com/-nVvIPIRBdLE/VI3QN51ZBOI/AAAAAAAA77Y/Z_KPCkM7faQ/image_thumb%25255B4%25255D_thumb.png?imgmax=800)

![image_thumb[5] image_thumb[5]](http://lh4.ggpht.com/-Ji4fPe09mHs/VI3QRJguo4I/AAAAAAAA77o/AwAjbsP7ldw/image_thumb%25255B5%25255D_thumb.png?imgmax=800)

![image_thumb[6] image_thumb[6]](http://lh3.ggpht.com/-Qq6-jI3g0Qo/VI3QUfcsmrI/AAAAAAAA774/3qwv_qs-Pw0/image_thumb%25255B6%25255D_thumb.png?imgmax=800)

![image_thumb[7] image_thumb[7]](http://lh5.ggpht.com/-ZTcA5J8bi88/VI3QcETHamI/AAAAAAAA78I/0enWBb9wJ8w/image_thumb%25255B7%25255D_thumb.png?imgmax=800)

![image_thumb[8] image_thumb[8]](http://lh6.ggpht.com/-0ohpKwGJLWA/VI3QhVTvO_I/AAAAAAAA78Y/x2ElhnPSb0k/image_thumb%25255B8%25255D_thumb.png?imgmax=800)

![image_thumb[9] image_thumb[9]](http://lh5.ggpht.com/-aNAfhtfGF5s/VI3QlRrSmeI/AAAAAAAA78o/G94-AP6kHck/image_thumb%25255B9%25255D_thumb.png?imgmax=800)

![image_thumb[10] image_thumb[10]](http://lh4.ggpht.com/-aIGK7LiSkqg/VI3QpmM4RGI/AAAAAAAA784/_dZu2x_U2LM/image_thumb%25255B10%25255D_thumb.png?imgmax=800)

![image_thumb[11] image_thumb[11]](http://lh5.ggpht.com/-D6usWuVJBfs/VI3QyojhfRI/AAAAAAAA79M/p7W0UGsIV0c/image_thumb%25255B11%25255D_thumb.png?imgmax=800)

![image_thumb[12] image_thumb[12]](http://lh4.ggpht.com/-ULPESsykduE/VI3Q28Zr9gI/AAAAAAAA79c/2WPrcSYQb_c/image_thumb%25255B12%25255D_thumb.png?imgmax=800)