16.5 MEMORY MANAGEMENT

Memory management is one of the most important subsystems of any operating system for computer control systems, and is even more critical in an RTOS than in standard operating systems. Firstly, the speed of memory allocation is important in an RTOS. A standard memory allocation scheme scans a linked list of indeterminate length to find a free memory block; however, memory allocation has to occur in a fixed time in an RTOS. Secondly, memory can become fragmented as free regions become separated by regions that are in use, causing a program to stall, unable to get memory, even though there is theoretically enough available. Memory allocation algorithms that slowly accumulate fragmentation may work perfectly well for desktop machines rebooted every day or so but are unacceptable for embedded systems that often run for months without rebooting.

Memory management is the process by which a computer control system allocates a limited amount of physical memory among its various processes (or tasks) in a way that optimizes performance. Each process has its own private address space, initially divided into three logical segments: text, data, and stack. The text segment is read-only and contains the machine instructions of a program; the data and stack segments are both readable and writable. The data segment contains the initialized and non-initialized data portions of a program, whereas the stack segment holds the application’s run-time stack. On most machines, the stack segment is extended automatically by the kernel as the process executes. A process can change the size of its data segment by making a system call, but the size of a text segment changes only when its contents are overlaid with data from the file system, or when debugging takes place. The initial contents of the segments of a child process are duplicates of the segments of its parent.

The contents of a process address space do not need to be completely in place for a process to execute. If a process references a part of its address space that is not resident in main memory, the system pages the necessary information into memory. When system resources are scarce, the system uses a two-level approach to maintain available resources. If a modest amount of memory is available, the system will take memory resources away from processes if these resources have not been used recently. Should there be a severe resource shortage, the system will resort to swapping the entire context of a process to secondary storage. This paging and swapping done by the system are effectively transparent to processes, but a process may advise the system about expected future memory utilization as a performance aid.

A common technique for doing the above is virtual memory, which simulates a much larger address space than is actually available, using a reserved disk area for objects that are not in physical memory. The operating system’s kernel often performs memory allocations that are needed for only the duration of a single system call. In a user process, such short-term memory would be allocated on the run-time stack. Because the kernel has a limited run-time stack, it is not feasible to allocate even moderately sized blocks of memory on it, so a more dynamic mechanism is needed. For example, when the system must translate a path name, it must allocate a 1-kbyte buffer to hold the name. Other blocks of memory must be more persistent than a single system call, and thus could not be allocated on the stack even if there were space. An example is protocol-control blocks that remain throughout the duration of a network connection.

This section discusses virtual memory techniques, memory allocation and deallocation, memory protection and memory access control.

Virtual memory

When it is executing a program, the microprocessor reads an instruction from memory and decodes it. At this point it may need to fetch or store the contents of a location in memory, so it executes the instruction and then moves on to the next. In this way the microprocessor is always accessing memory, either to fetch instructions or to fetch and store data. In a virtual memory system all of these addresses are virtual, and not physical addresses. They are converted into physical addresses by the microprocessor, based on information held in a set of tables maintained by the operating system.

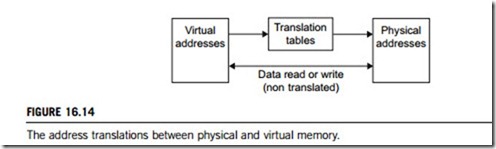

The operating system uses virtual memory to manage the memory requirements of its processes by combining physical memory with secondary memory (swap space) on a disk, usually located on a hard disk drive. Diskless systems use a page server, which maintains their swap areas on its own local disk (extended memory). The translation from virtual to physical addresses is implemented by a memory management unit (MMU), which may be either a module of the CPU, or an auxiliary, closely coupled chip. The operating system is responsible for deciding which parts of the program’s simulated main memory are kept in physical memory, and also maintains the translation tables that map between virtual and physical addresses. This is shown in Figure 16.14.

We will now discuss three techniques for implementing virtual memory: paging, swapping and segmentation.

(1) Paging

Almost all implementations of virtual memory divide the virtual address space of an application program into pages; a page is a block of contiguous virtual memory addresses. Here, the low-order bits of the binary representation of the virtual address are preserved, and used directly as the low-order bits of the actual physical address; the high-order bits are treated as a key to one or more address translation tables, which provide the high-order bits of the actual physical address. For this reason, a range of consecutive addresses in the virtual address space, whose size is a power of two, will be translated to a corresponding range of consecutive physical addresses. The memory referenced by such a range is called a page. The page size is typically in the range 512 to 8192 bytes (with 4 kB currently being very common), though 4 MB or even larger may be used for special purposes. (Using the same or a related mechanism, contiguous regions of virtual memory larger than a page are often mappable to contiguous physical memory for purposes other than virtualization, such as setting access and caching control bits.)
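The split between preserved low-order bits and translated high-order bits can be sketched in a few lines of C. This is only an illustration under stated assumptions: 32-bit addresses, a 4 kB page size, and the hypothetical names `PAGE_SHIFT`, `page_number`, `page_offset` and `make_paddr`, none of which come from the text.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12u                    /* assumption: 4 kB pages (2^12 bytes) */
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define PAGE_MASK  (PAGE_SIZE - 1u)

/* High-order bits: the key used to look up the translation tables. */
static uint32_t page_number(uint32_t vaddr)
{
    return vaddr >> PAGE_SHIFT;
}

/* Low-order bits: preserved unchanged in the physical address. */
static uint32_t page_offset(uint32_t vaddr)
{
    return vaddr & PAGE_MASK;
}

/* Combine the frame number supplied by the tables with the preserved offset. */
static uint32_t make_paddr(uint32_t frame, uint32_t offset)
{
    assert(offset < PAGE_SIZE);
    return (frame << PAGE_SHIFT) | offset;
}
```

With these definitions, the virtual address 0x3ABC lies in virtual page 3 at offset 0xABC; if the tables map that page to frame 7, the physical address is 0x7ABC.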

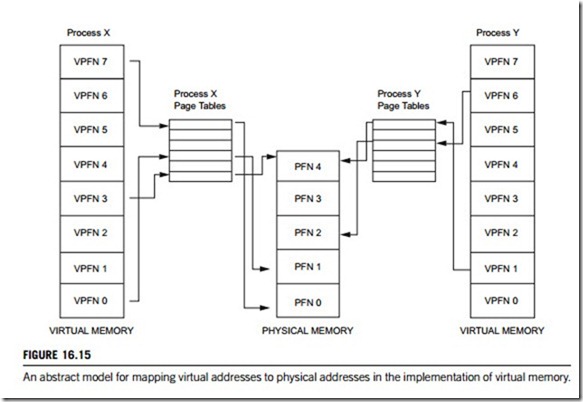

Almost all implementations use page tables to translate the virtual addresses seen by the application into physical addresses (also referred to as real addresses) used by the hardware. The operating system stores the address translation tables, i.e. the mappings from virtual to physical page numbers, in a data structure known as a page table. When the CPU tries to reference a memory location that is marked as unavailable, the MMU responds by raising an exception (commonly called a page fault) with the CPU, which then jumps to a routine in the operating system. If the page is in the swap area, this routine invokes an operation called a page swap, to bring in the required page.

An operating system can have one page table or a separate page table for each application. If there is only one, different applications running at the same time will share a single virtual address space, i.e. they use different parts of a single range of virtual addresses. Operating systems that use multiple page tables provide multiple virtual address spaces, so concurrent applications seem to use the same range of virtual addresses, but their separate page tables redirect to different real addresses.

Figure 16.15 shows the virtual address spaces of two processes, X and Y, each with their own page tables, which map each process’s virtual pages into physical pages in memory. This shows that process X’s virtual page frame number 0 is mapped into memory at physical page frame number 1 and that process Y’s virtual page frame number 1 is mapped into physical page frame number 4. Each entry in the theoretical page table contains the following information: (1) a valid flag that indicates whether this page table entry is valid; (2) the physical page frame number that this entry describes; (3) the access control information that describes how the page may be used.

(2) Swapping

Swap space is a portion of hard disk used for virtual memory that is usually a dedicated partition (i.e., a logically independent section of a hard disk drive), created during the installation of the operating system. Such a partition is also referred to as a swap partition. However, swap space can also be a special file. Although it is generally preferable to use a swap partition rather than a file, sometimes it is not practical to add or expand a partition when the amount of RAM is being increased. In such cases, a new swap file can be created and marked as swap space with a system call.

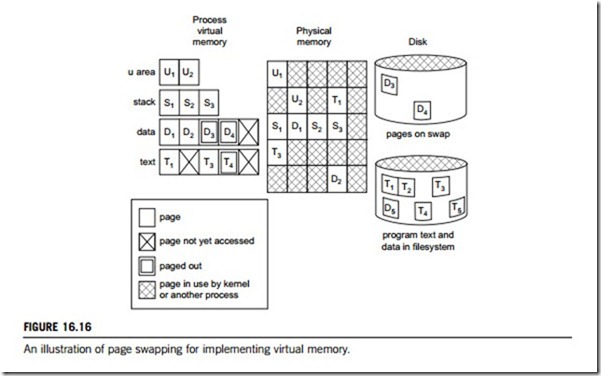

It is also possible for a virtual page to be marked as unavailable because the page was never previously allocated. In such cases, a page of physical memory is allocated and filled with zeros, the page table is modified to describe it, and the program is restarted as above. Figure 16.16 illustrates how the virtual memory of a process might correspond to what exists in physical memory, on swap, and in the file system. The U-area of a process consists of two 4 kB pages (displayed here as U1 and U2) of virtual memory containing information about the process that is needed by the system during execution. In this example, these pages are shown in physical memory, and the data pages, D3 and D4, are shown as being paged out to the swap area on disk. The text page, T4, has also been paged out, but it is not written to the swap area as it exists in the file system. Those pages that have not yet been accessed by the process (D5, T2, and T5) do not occupy any resources in physical memory or in the swap area.

The page swap operation involves a series of steps. Firstly, it selects a page in memory; for example, a page that has not been recently accessed and (preferably) has not been modified since it was last read. If the page has been modified, the routine writes the modified page to the swap area. The next step is to read in the needed page (the page corresponding to the virtual address the original program was trying to reference when the exception occurred) from the swap file. When the page has been read in, the tables for translating virtual addresses to physical addresses are updated to reflect the revised contents of physical memory. Once the page swap completes, the routine exits, and the program is restarted at the point that caused the exception.
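The victim selection and write-back steps of a page swap can be sketched as a small simulation. The ranking used here (prefer pages that are neither recently accessed nor modified) is one possible policy chosen for illustration, not necessarily the one a given kernel uses; `frame_t`, `select_victim` and `swap_in` are hypothetical names, and the disk transfers themselves are not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    int  vpage;        /* virtual page currently held in this frame */
    bool referenced;   /* accessed recently? */
    bool dirty;        /* modified since it was last read in? */
} frame_t;

/* Pick the frame with the lowest rank:
 * 0 = not referenced, clean   1 = not referenced, dirty
 * 2 = referenced, clean       3 = referenced, dirty
 * Ties go to the lowest frame index. */
static size_t select_victim(const frame_t *frames, size_t n)
{
    assert(n > 0);
    size_t best = 0;
    int best_rank = 4;
    for (size_t i = 0; i < n; i++) {
        int rank = (frames[i].referenced ? 2 : 0) + (frames[i].dirty ? 1 : 0);
        if (rank < best_rank) {
            best_rank = rank;
            best = i;
        }
    }
    return best;
}

/* Bring needed_vpage into memory. Returns true if the victim had to be
 * written back to the swap area first. */
static bool swap_in(frame_t *frames, size_t n, int needed_vpage, size_t *slot)
{
    size_t v = select_victim(frames, n);       /* step 1: select a page */
    bool wrote_back = frames[v].dirty;         /* step 2: write back if modified */
    frames[v].vpage = needed_vpage;            /* step 3: read in the needed page */
    frames[v].referenced = true;               /* step 4: update the tables */
    frames[v].dirty = false;
    *slot = v;
    return wrote_back;
}
```

A clean, unreferenced page is the cheapest victim because it can simply be discarded; a dirty victim costs an extra write to the swap area before its frame can be reused.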

(3) Segmentation

Some operating systems do not use paging to implement virtual memory, but use segmentation instead. For an application process, segmentation divides its virtual address space into variable-length segments, so a virtual address consists of a segment number and an offset within the segment.

Memory is always physically addressed with a single number (called absolute or linear address). To obtain it, the microprocessor looks up the segment number in a table to find a segment descriptor. This contains a flag indicating whether the segment is present in main memory and, if so, the address of its starting point (segment’s base address) and its length. It checks whether the offset within the segment is less than the length of the segment and, if not, generates an interrupt. If a segment is not present in main memory, a hardware interrupt is raised to the operating system, which may try to read the segment into main memory, or to swap it in. The operating system may need to remove other segments (swap out) in order to make space for the segment to be read in.
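The descriptor lookup and limit check just described might look like this in C. The descriptor layout and the names `seg_desc_t` and `seg_translate` are assumptions for illustration; real processors define their own descriptor formats and raise interrupts rather than returning status codes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    bool     present;   /* is the segment in main memory? */
    uint32_t base;      /* linear address of the start of the segment */
    uint32_t length;    /* segment length in bytes */
} seg_desc_t;

typedef enum {
    SEG_OK,
    SEG_BAD_SELECTOR,     /* segment number not in the table */
    SEG_NOT_PRESENT,      /* would raise an interrupt so the OS can swap it in */
    SEG_LIMIT_VIOLATION   /* offset is not less than the segment length */
} seg_status;

/* Turn (segment number, offset) into a single linear address. */
static seg_status seg_translate(const seg_desc_t *table, size_t nsegs,
                                uint32_t seg, uint32_t offset, uint32_t *linear)
{
    assert(linear != NULL);
    if (seg >= nsegs)
        return SEG_BAD_SELECTOR;
    if (!table[seg].present)
        return SEG_NOT_PRESENT;
    if (offset >= table[seg].length)
        return SEG_LIMIT_VIOLATION;
    *linear = table[seg].base + offset;
    return SEG_OK;
}
```

For a segment with base 0x1000 and length 0x200, offset 0x1FF translates to linear address 0x11FF, while offset 0x200 fails the limit check.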

The difference between virtual memory implementations that use pages and those using segments is not only about the memory division. Sometimes the segmentation is actually visible to the user processes, as part of the semantics of the memory model. In other words, instead of a process just having a memory which looks like a single large vector of bytes or words, it is more structured. This is different from using pages, which does not change the model visible to the process. This has important consequences.

It is possible to combine segmentation and paging, usually by dividing each segment into pages. In such systems, virtual memory is usually implemented by paging, with segmentation used to provide memory protection. The segments reside in a 32-bit linear paged address space, which segments can be moved into and out of, and pages in that linear address space can be moved in and out of main memory, providing two levels of virtual memory. This combination is quite rare, however; most systems use only paging.

Memory allocation and deallocation

The inefficient allocation or deallocation of memory can be detrimental to system performance. The presence of wasted memory in a block is called internal fragmentation, and it occurs because the size that was requested was smaller than that allocated. The result is a block of unusable memory, which is counted as allocated even though it is not being used. The reverse situation is called external fragmentation, when blocks of memory are freed, leaving non-contiguous holes. If these holes are not large, they may not be usable because further requests for memory may call for larger blocks. Both internal and external fragmentation result in unusable memory.

Memory allocation and deallocation is a process that has several layers of application. If one layer fails, another operates to attempt to resolve the request. This whole process is called dynamic memory management in C++ or C. Memory allocation is controlled by a subsystem called malloc, which controls the heap, a region of memory in which memory allocation and deallocation occur. The reallocation of memory is also under the control of malloc. In malloc, the allocation of memory is performed by two subroutines, malloc and calloc. Deallocation is performed by the free subroutine, and reallocation is performed by the subroutine known as realloc. In implementations that track free blocks in a binary tree, deallocated memory blocks are returned to the tree at its base. Such a tree can thus be envisioned as a sort of river of information, with deallocated memory flowing in at the base and allocated memory flowing out from the tips.
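The four subroutines named above are part of the standard C library (`<stdlib.h>`). The short function below, whose name `heap_demo` is hypothetical, exercises each of them once and checks every return value, since any allocation may fail.

```c
#include <assert.h>
#include <stdlib.h>

/* Allocates, grows and releases heap memory; returns the sum of the
 * stored values, or -1 if any allocation fails. */
static int heap_demo(void)
{
    int *a = malloc(4 * sizeof *a);   /* contents are uninitialized */
    int *z = calloc(4, sizeof *z);    /* contents are zero-filled */
    if (a == NULL || z == NULL) {
        free(a);                      /* free(NULL) is a harmless no-op */
        free(z);
        return -1;
    }
    assert(z[0] == 0);

    for (int i = 0; i < 4; i++)
        a[i] = i + 1;                 /* 1, 2, 3, 4 */

    /* Keep the old pointer until realloc succeeds; on failure the
     * original block is still valid and must still be freed. */
    int *bigger = realloc(a, 8 * sizeof *bigger);
    if (bigger == NULL) {
        free(a);
        free(z);
        return -1;
    }
    a = bigger;                       /* first four values are preserved */

    for (int i = 4; i < 8; i++)
        a[i] = z[i - 4];              /* copy the zeros from calloc */

    int sum = 0;
    for (int i = 0; i < 8; i++)
        sum += a[i];

    free(a);
    free(z);
    return sum;
}
```

Assigning the result of `realloc` to a temporary first avoids leaking the original block when the reallocation fails.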

Garbage collection is another term associated with deallocation of memory. This refers to an automated process that determines what memory is no longer in use, and so recycles it. The automation of garbage collection relieves the user of time-consuming and error-prone tasks. There are a number of algorithms for the garbage collection process, all of which operate independently of malloc.

Memory allocation and deallocation can be categorized as static or dynamic.

(1) Static memory allocation

Static memory allocation refers to the process of allocating memory at compile-time, before execution.

One way to use this technique involves a program module (e.g., function or subroutine) declaring static data locally, such that these data are inaccessible to other modules unless references to them are passed as parameters or returned. A single copy of this static data is retained and is accessible through many calls to the function in which it is declared. Static memory allocation therefore has the advantage of modularizing data within a program so that it can be used throughout run-time. The use of static variables within a class in object-oriented programming creates a single copy of such data to be shared among all the objects of that class.
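In C, this technique corresponds to a `static` variable declared inside a function. The two functions below are hypothetical examples: `next_id` keeps a private counter that persists across calls, and `scratch_buffer` hands out a reference to static data that other modules could not otherwise reach.

```c
#include <assert.h>
#include <limits.h>

/* The counter lives for the whole run of the program, but is visible
 * only inside this function. */
static unsigned next_id(void)
{
    static unsigned counter = 0;   /* initialized once, before execution */
    assert(counter < UINT_MAX);    /* guard against wrap-around */
    return ++counter;
}

/* Other modules can use the static buffer only through the pointer
 * returned here; every call returns the same single copy. */
static int *scratch_buffer(void)
{
    static int buffer[16];         /* zero-initialized static storage */
    return buffer;
}
```

Successive calls to `next_id` return 1, 2, 3 and so on, because the single copy of `counter` is retained between calls rather than being recreated on the stack.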

(2) Dynamic memory allocation

Dynamic memory allocation is the allocation of memory storage for use during the run-time of a program, and is a way of distributing ownership of limited memory resources among many pieces of data and code. A dynamically allocated object remains allocated until it is deallocated explicitly, either by the programmer or by a garbage collector; this is notably different from automatic and static memory allocation. It is said that such an object has dynamic lifetime.

Memory pools allow dynamic memory allocation comparable to malloc, or the operator “new” in C++. Because malloc and new suffer from fragmentation caused by variable block sizes, it can be impossible to use them in a real-time system due to performance problems. A more efficient solution is to pre-allocate a number of memory blocks of the same size, called the memory pool. The application can allocate, access, and free blocks represented by handles at run-time.
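A handle-based pool of equally sized blocks might be sketched as follows. The pool dimensions, the integer handle type and the names `mem_pool`, `pool_alloc`, `pool_access` and `pool_free` are assumptions for illustration. Free handles are kept on a small stack, so allocation and release each take constant time, which is the property a real-time system needs.

```c
#include <assert.h>
#include <stddef.h>

#define POOL_BLOCKS    4u      /* hypothetical pool dimensions */
#define BLOCK_SIZE     32u
#define INVALID_HANDLE (-1)

typedef int pool_handle;       /* a handle is simply a block index */

typedef struct {
    unsigned char storage[POOL_BLOCKS][BLOCK_SIZE];  /* pre-allocated blocks */
    pool_handle   free_stack[POOL_BLOCKS];           /* handles not in use */
    size_t        free_count;
} mem_pool;

static void pool_init(mem_pool *p)
{
    for (size_t i = 0; i < POOL_BLOCKS; i++)
        p->free_stack[i] = (pool_handle)i;
    p->free_count = POOL_BLOCKS;
}

/* Constant time: pop a handle, or report exhaustion. */
static pool_handle pool_alloc(mem_pool *p)
{
    if (p->free_count == 0)
        return INVALID_HANDLE;
    return p->free_stack[--p->free_count];
}

/* Convert a handle into a pointer to its block; NULL if out of range. */
static void *pool_access(mem_pool *p, pool_handle h)
{
    if (h < 0 || (size_t)h >= POOL_BLOCKS)
        return NULL;
    return p->storage[h];
}

/* Constant time: push the handle back. Double frees are not detected
 * in this sketch beyond the overflow check. */
static void pool_free(mem_pool *p, pool_handle h)
{
    assert(h >= 0 && (size_t)h < POOL_BLOCKS);
    assert(p->free_count < POOL_BLOCKS);
    p->free_stack[p->free_count++] = h;
}
```

Because every block has the same size, any free block satisfies any request, so the pool cannot suffer external fragmentation; the cost is internal fragmentation whenever a request is smaller than `BLOCK_SIZE`.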

Fulfilling an allocation request, which involves finding a block of unused memory of a certain size in the heap, is a difficult problem. A wide variety of solutions have been proposed, and some of the most commonly used are discussed here.

(a) Free lists

A free list is a data structure used in a scheme for dynamic memory allocation that operates by connecting unallocated regions of memory together in a linked list, using the first word of each unallocated region as a pointer to the next. It is most suitable for allocating from a memory pool, where all objects have the same size.

Free lists make the allocation and deallocation operations very simple. To free a region, we simply add it to the free list. To allocate a region, we simply remove a single region from the end of the free list and use it. If the regions are variable-sized, we may have to search for a large enough region, which can be expensive.

Free lists have the disadvantage, inherited from linked lists, of poor locality of reference and thus poor data cache utilization, and they provide no way of consolidating adjacent regions to fulfill allocation requests for large regions. Nevertheless, they are still useful in a variety of simple applications where a full-blown memory allocator is unnecessary, or requires too much overhead.
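A free list over fixed-size regions, with the link stored in the first word of each unallocated region, can be sketched as below. The region size and count and the names `region_t`, `fl_init`, `fl_alloc` and `fl_free` are assumptions for illustration; this version adds and removes regions at the head of the list.

```c
#include <assert.h>
#include <stddef.h>

#define REGION_SIZE 64u     /* hypothetical sizes */
#define NUM_REGIONS 4u

/* While a region is unallocated, its first word holds the link to the
 * next free region; once allocated, all bytes belong to the caller. */
typedef union region {
    union region *next;
    unsigned char bytes[REGION_SIZE];
} region_t;

typedef struct {
    region_t  regions[NUM_REGIONS];
    region_t *head;         /* first free region, or NULL if none */
} free_list_t;

/* Thread every region onto the list, lowest index first. */
static void fl_init(free_list_t *fl)
{
    fl->head = NULL;
    for (size_t i = NUM_REGIONS; i-- > 0; ) {
        fl->regions[i].next = fl->head;
        fl->head = &fl->regions[i];
    }
}

/* Allocate: unlink the first free region. */
static void *fl_alloc(free_list_t *fl)
{
    region_t *r = fl->head;
    if (r != NULL)
        fl->head = r->next;
    return r;
}

/* Free: link the region back in at the head. */
static void fl_free(free_list_t *fl, void *ptr)
{
    assert(ptr != NULL);
    region_t *r = ptr;
    r->next = fl->head;
    fl->head = r;
}
```

No memory is needed for bookkeeping beyond the `head` pointer, because the links live inside the free regions themselves.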

(b) Paging

As mentioned earlier, the memory access part of paging is done at the hardware level through page tables, and is handled by the MMU. Physical memory is divided into small blocks called pages (typically 4 kB or less in size), and each block is assigned a page number. The operating system may keep a list of free pages in its memory, or may choose to probe the memory each time a request is made (though most modern operating systems do the former). In either case, when a program makes a request for memory, the operating system allocates a number of pages to it, and keeps a list of allocated pages for that particular program in memory.
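Because memory is handed out in whole pages, a request is rounded up to the next page boundary. The arithmetic is sketched below, assuming 4 kB pages; `pages_needed` and `internal_waste` are hypothetical helper names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE ((size_t)4096)   /* assumption: 4 kB pages */

/* Number of whole pages required to hold `bytes` bytes (rounds up). */
static size_t pages_needed(size_t bytes)
{
    assert(bytes <= SIZE_MAX - (PAGE_SIZE - 1));   /* avoid overflow */
    return (bytes + PAGE_SIZE - 1) / PAGE_SIZE;
}

/* Bytes allocated but not requested: internal fragmentation. */
static size_t internal_waste(size_t bytes)
{
    return pages_needed(bytes) * PAGE_SIZE - bytes;
}
```

A request for 4097 bytes, for example, needs two pages and leaves 4095 bytes unused in the second one.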

Memory protection

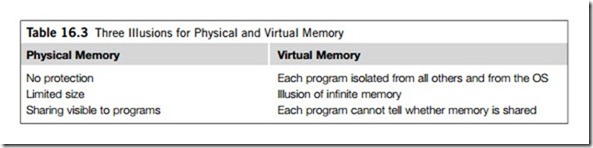

The topic of memory management in this section addresses a different set of constructs related to physical and virtual memory: protected memory, infinite amount of memory, and transparent sharing. Table 16.3 shows these illusions for physical memory and virtual memory.

Perhaps the simplest model for using memory is to provide single programming without memory protection, where each process (or task) runs with a range of physical memory addresses. Given that a single-programming environment allows only one process to run at a time, this can use the same physical addresses every time, even across reboots. Typically, processes use the lower memory addresses (low memory), and an operating system uses the higher memory addresses (high memory). An application process can address any physical memory location.

One step beyond the single-programming model is to provide multiprogramming without memory protection. When a program is copied into memory, a linker-loader alters the code of the program (loads, stores, jumps) to use the address of where the program lands in memory. In this environment, bugs in any program can cause other programs to crash, even the operating system.

The third model is to have a multitasking operating system with memory protection, which keeps user programs from crashing one another and the operating system. Typically, this is achieved by two hardware-supported mechanisms: address translation and dual-mode operation.

(1) Address translation

Each process is associated with an address space, or all the physical addresses it can touch. However, each process appears to own the entire memory, with the starting virtual address of 0. The missing piece is a translation table that translates every memory reference from virtual addresses to physical addresses.

Translation provides protection because there is no way for a process to name the addresses of another process, and it has no way of touching the code or data of the operating system. The operating system uses physical addresses directly, and involves no translation. When an exception occurs, the operating system is responsible for allocating an area of physical memory to hold the missing information (and possibly in the process pushing something else out to disk), bringing the relevant information in from the disk, updating the translation tables, and finally resuming execution of the software that incurred the exception.

(2) Dual-mode operation

Translation tables can offer protection only if a process cannot alter their content. Therefore, a user process is restricted to only touching its address space under the user mode. Hardware requires the CPU to be in the kernel mode to modify the address translation tables.

A CPU can change from kernel to user mode when starting a program, or vice versa through either voluntary or involuntary mechanisms. The voluntary mechanism uses system calls, where a user application asks the operating system to do something on its behalf. A system call passes arguments to an operating system, either through registers or copying from the user memory to the kernel memory. A CPU can also be switched from user to kernel mode involuntarily by hardware interrupts (e.g., I/O) and program exceptions (e.g., segmentation fault).

On system calls, interrupts, or exceptions, hardware atomically performs the following steps:

(1) sets the CPU to kernel mode; (2) saves the current program counter; (3) jumps to the handler in the kernel (the handler saves old register values).

Unlike threads, context switching among processes also involves saving and restoring pointers to translation tables. To resume execution, the kernel reloads old register values, sets the CPU to user mode, and jumps to the old program counter.
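The mode switch and the rule that only kernel mode may change the translation tables can be illustrated with a small software model. This is a simulation only: on real hardware these steps are performed atomically by the CPU, and the structure `cpu_t` and the functions `trap_enter`, `trap_return` and `set_page_table` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef enum { MODE_USER, MODE_KERNEL } cpu_mode;

typedef struct {
    cpu_mode mode;
    uint32_t pc;                /* current program counter */
    uint32_t saved_pc;          /* where to resume after the handler */
    uint32_t page_table_base;   /* pointer to the translation tables */
} cpu_t;

/* System call, interrupt or exception: enter kernel mode, save the
 * program counter, jump to the handler. */
static void trap_enter(cpu_t *cpu, uint32_t handler)
{
    cpu->mode = MODE_KERNEL;
    cpu->saved_pc = cpu->pc;
    cpu->pc = handler;
}

/* Resume: return to user mode and jump back to the old program counter. */
static void trap_return(cpu_t *cpu)
{
    assert(cpu->mode == MODE_KERNEL);
    cpu->mode = MODE_USER;
    cpu->pc = cpu->saved_pc;
}

/* Changing the translation tables is refused outside kernel mode. */
static bool set_page_table(cpu_t *cpu, uint32_t base)
{
    if (cpu->mode != MODE_KERNEL)
        return false;
    cpu->page_table_base = base;
    return true;
}
```

A process context switch in this model would call `set_page_table` between `trap_enter` and `trap_return`, which is the extra step that distinguishes it from a switch between threads sharing one address space.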

Communication among address spaces is required in this operation. Since address spaces do not share memory, processes have to perform inter-process communication (IPC) through the kernel, which can allow bugs to propagate from one program to another.

Protection by hardware can be prohibitively slow, since applications have to be structured to run in separate address spaces to achieve fault isolation. In the case of complex applications built by multiple vendors, it may be desirable for two different programs to run in the same address space, with guarantees that they cannot trash each other’s code or data. Strong typing and software fault isolation are used to ensure this.

(a) Protection via strong typing

If a programming language disallows the misuse of data structures, a program cannot trash another, even in the same address space. With some object-oriented programming languages, programs can be downloaded over the net and run safely because the language, compiler, and run-time system prevent the program from doing bad things (e.g., making system calls). For example, Java defines a separate virtual machine layer, so a Java program can run on different hardware and operating systems. The downside of this protection mechanism is the requirement to learn a new language.

(b) Protection via software fault isolation

A language-independent approach is to have compilers generate code that is proven safe (e.g., a pointer cannot reference illegal addresses). For example, a pointer can be checked before it is used in some applications.
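Two common forms of such a pointer check are sketched below: testing whether an address falls inside the region assigned to a module, and forcing it into that region by masking. The region base and size are hypothetical values chosen so that the size is a power of two and the base is aligned to it; `sfi_check` and `sfi_mask` are illustrative names, standing in for code a compiler or rewriting tool would insert before each memory access.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SANDBOX_BASE 0x20000000u   /* hypothetical region start (1 MB aligned) */
#define SANDBOX_SIZE 0x00100000u   /* hypothetical region size: 1 MB */

/* Check: is addr inside [SANDBOX_BASE, SANDBOX_BASE + SANDBOX_SIZE)?
 * Unsigned subtraction wraps for addresses below the base, so a single
 * comparison covers both bounds. */
static bool sfi_check(uint32_t addr)
{
    return (uint32_t)(addr - SANDBOX_BASE) < SANDBOX_SIZE;
}

/* Mask: keep only the offset bits and force the region's high bits, so
 * the result always lies inside the sandbox. */
static uint32_t sfi_mask(uint32_t addr)
{
    uint32_t safe = SANDBOX_BASE | (addr & (SANDBOX_SIZE - 1u));
    assert(sfi_check(safe));
    return safe;
}
```

Checking lets the run-time system report a faulty access, whereas masking silently redirects it; masking is cheaper, but it only contains the damage within the module's own region.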

Memory access control

Dealing with race conditions is also one of the difficult aspects of memory management. To manage memory access requests coming from the system, a scheduler is necessary in the application layer or in the kernel, in addition to the MMU as a hardware manager. The most common way of protecting data from concurrent access by the memory access request scheduler is memory request contention. The semantics and methodologies of memory access request contention should be the same as for I/O request contention. Section 16.3.3 can be referred to for this topic.