Based on the control functions performed, a distributed control system can be architected into these hardware components: operator interfaces, I/O subsystem, connection buses, and field control units; and into these software modules: history modules, control modules, I/O modules, and network modules. These were discussed in detail in section 1.3 of this textbook.

In advanced industrial controls, the control units in distributed systems are digital, intelligent controllers or computers, containing microprocessors. From the computer point of view, both distributed control systems and distributed computer systems have similar hardware and software architectures. Therefore, distributed operating systems are required by both distributed control and computer systems. The term distributed system is therefore used here for both distributed control systems and distributed computer systems.

A distributed system consists of a set of computers that communicate with each other using hardware and software interconnecting devices. Generally, distributed systems exist in two types of hardware architectures. The first type is multiprocessor architecture, in which two or more micro- processors or CPUs are fully connected with buses or switches and share a common memory. In this arrangement, every microprocessor or CPU has equal access to the entire physical memory, and communication among them uses the shared memory model. It is found in some supercomputers such as the Cray-T3E, and in some equipment such as multifunction copiers.

The second type is multicomputer architecture, in which several independent computers are physically connected with hardware, and dynamically coupled with software to make up a computer network. LANs and computer clusters within a corporation. building or office are typical networks where multicomputer architectures are used.

All the software for distributed systems requires a kernel that plays the same role as that played by the operating system in single-processor or centralized systems. This is defined as a distributed operating system, and it manages the shared resources, schedules the processes and coordinates communications between the elements of the distributed system. In fact, distributed operating systems are just an extension of the distributed system architecture of multitasking operating systems applied to centralized system architectures.

There are two categories of such systems. The first is the multiprocessor operating system, often just a regular operating system. Nevertheless, they some have unique features including process synchronization, resource management, and scheduling.

The second kind is the multicomputer operating system, which may be considered to be either a computer network (loosely coupled computers) or a computer cluster (tightly coupled computers). This textbook explains three types of multicomputer operating systems: cluster operating systems, network operating systems, and parallel operating systems.

There are four mechanisms behind distributed operating systems: (1) distributed and parallel process management; (2) distributed and parallel file systems; (3) distributed and shared memory allocations; (4) interior, local and remote communications see section 17.3 for further details.

MULTIPROCESSOR OPERATING SYSTEMS

17.1.1 Multiprocessor hardware and software models

One main property of a multiprocessor is its interconnection network, which links the processors, memory modules and the other devices in a system. An interconnection network, which may be static or dynamic, facilitates communication among processors and memory modules. A few sample interconnection networks are: time-shared or common buses, crossbar switches, hierarchical switches, and multistage networks.Although in all multiprocessors, every CPU can address all memory words, in some, every memory word can be read as equally quickly. Depending on the coupling of processors and memory, multiprocessors may be broadly divided into two major categories: shared-memory, and non-remote memory access (NORMA) multiprocessors.

(1) Shared-memory multiprocessors

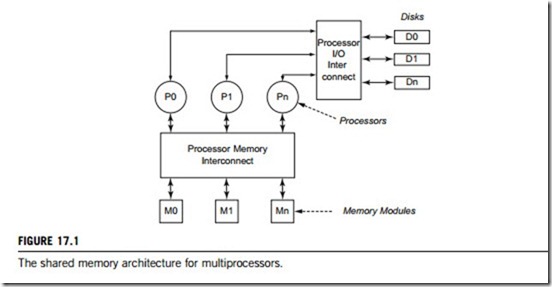

In a shared-memory multiprocessor, all main memory is accessible to and shared by all processors, as shown in Figure 17.1. On the basis of the cost of accessing shared memory, shared memory multi- processors are classified as:

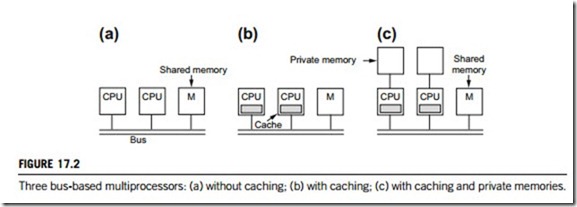

(a) Uniform memory access (UMA) multiprocessors. In the UMA architecture, the access time to shared memory is the same for all processors. The simplest have bus-based architecture, as illustrated in Figure 17.2. Figure 17.2(a) is a single-bus architecture in which two or more CPUs and one or more memory modules all use the same bus for communication. In Figure 17.2(b), each CPU has an additional cache, which can be inside the CPU chip, next to it, on the processor board, or some combination of all three. Figure 17.2(c) shows a bus with caching and memories, in which each CPU has not only a cache, but also a local, private memory which it accesses over a dedicated (private) bus. In addition to the bus-based

architectures, there are two other designs, which use crossbar switches, or multistage switching networks. However, these two designs may not require different mechanisms and semantics from the bus-based architectures in their operating systems.

(b) Non-uniform memory access (NUMA) multiprocessors. In the NUMA architecture, all physical memory in the system is partitioned into modules, each of which is local to and associated with a specific processor. As a result, access time for local memory is less than that for nonlocal memory. Nearly all CPU architectures use a small amount of very fast non-shared memory, known as cache, to exploit locality of reference in memory accesses. With NUMA, maintaining cache coherence across shared memory has a significant overhead. One can view NUMA as a very tightly coupled form of cluster computing. The addition of virtual memory paging to cluster architecture can allow the implementation of NUMA entirely in software where no NUMA hardware exists. However, the inter-node latency of software-based NUMA remains several orders of magnitude greater than that of hardware-based NUMA.

UMA architectures are the most common parallel machines, in part because most such machines are simply used as high-throughput multiple-programmed, multiple-user timesharing machines, rather than as execution vehicles for single, large-scale parallel programs. Interestingly, although all memory is accessed via a single shared bus, even UMA machines often have NUMA characteristics, because individual processors access shared memory via local caches. Cache misses and cache flushing can result in effectively non-uniform memory access times. Furthermore, bus contention may aggravate variability in memory access times, and scalability is limited, in that the shared global bus imposes limits on the maximum number of processors and memory modules it can accommodate.

NUMA architecture addresses the scalability problem by attaching local memory to each processor. Processors directly access local memory and communicate with each other and with remote memory modules through an interconnection switch. One type of switch is an interconnection network consisting of multiple levels of internal nodes, where systems are scaled by addition of internal switch nodes. A second type of switch consists of a hierarchical set of buses, where access times to remote memory depend on either the number of internal switch nodes on the access path between the processor and the memory or on the number of traversed system buses. Because NUMA architecture allows a large number of processors in a single machine, many experimental, large-scale multipro- cessors are NUMA machines.

(2) Non-remote memory access (NORMA) multiprocessors

In this class of architectures, each processor has its own local memory that is not shared by other processors in the system. Some modern Intel multiprocessors and HP workstation clusters are examples of non-shared memory multiprocessors. Workstation clusters differ from hypercube or mesh machines, in that the latter typically offer specialized hardware for low-latency inter-machine communication and also for implementation of selected global operations such as global synchroni- zation, addition, or broadcast.

NORMA multiprocessors are the simplest to design and build, and have become the architecture of choice for current supercomputers such as the Intel Paragon, the Cray series of high-performance machines, and others. In the simplest case, a collection of workstations on a local area network constitutes a NORMA multiprocessor. A typical NORMA multiprocessor consists of a number of processors interconnected on a high-speed bus or network cables; the topology of interconnection varies between applications.

One major difference between NORMA multiprocessors and NUMA is that there is no hardware support for direct access to remote memory modules. As a result, the formes are more loosely coupled. However, recent advances are leading to trade-offs in remote to local memory access times for NORMA machines (e.g., roughly 1:500 for local vs. remote memory access times) that can approximate those achieved for shared-memory machines (roughly 1:100). This suggests that future NUMA or NORMA parallel machines will require similar operating system and programming tool support in order to achieve high-performance parallelism.

The variety of different kinds of multiprocessor architectures, coupled with diverse application requirements, have resulted in many different designs, goals, features, and implementations of multiprocessor operating systems, both in university research projects and in the commercial domain. To introduce these, it is necessary to understand three important concepts: processor symmetry, processor instruction, and processor data-stream.

In a multiprocessing system, all CPUs may be equal, or some may be reserved for special purposes. A combination of multiprocessor hardware and operating system software design determines the symmetry (or lack thereof) in a given system. For example, hardware or software considerations may require that only one CPU responds to all hardware interrupts, whereas all other work in the system may be distributed equally among the remainder, or execution of kernel-mode code may be restricted to only one processor (either a specific processor, or only one processor at a time), whereas user-mode code may be executed in any combination of processors. Multiprocessing systems are often easier to design if such restrictions are imposed, but they tend to be less efficient than systems in which all CPUs are utilized equally.

Systems that treat all CPUs equally are called symmetric multiprocessing (SMP) systems. In systems where all CPUs are not equal, resources may be divided in a number of ways, including asymmetric multiprocessing (ASMP), non-uniform memory access (NUMA) multiprocessing, and clustered multiprocessing.

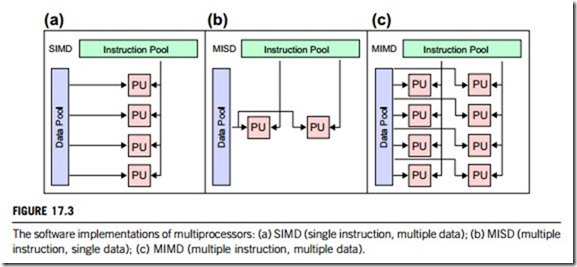

In multiprocessing, the processors can be used to execute a single sequence of instructions in multiple contexts (single instruction, multiple data or SIMD, often used in vector processing); or multiple sequences of instructions in a single context (multiple instruction, single data or MISD, used for redundancy in fail-safe systems and sometimes applied to describe pipelined processors or hyperthreading); or multiple sequences of instructions in multiple contexts (multiple instruction, multiple data or MIMD).

(a) SIMD multiprocessing is well suited to parallel or vector processing, as mentioned, since a very large set of data can be divided into parts that are individually subject to identical but independent operations. A single instruction stream directs the operation of multiple processing units to perform the same manipulations simultaneously on potentially large amounts of data. Figure 17.3(a) provides an illustration of SIMD multiprocessing.

(b) MISD is a type of parallel computing architecture where many functional units perform different operations on the same data. Pipeline architectures belong to this type, though a purist might say that the data are different after processing by each stage in the pipeline. Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may also be considered to belong to this type. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques. Specifically, they allow better scaling and use of computational resources than MISD does. Figure 17.3(b) provides an illustration of MISD multiprocessing.

(c) MIMD is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. Such machine may be used in a number of application areas, such as computer-aided design and computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared-memory or distributed-memory categories based on how their processors access memory. Shared-memory machines may be of the bus-based, extended, or hierarchical type, while distributed-memory machines may have hypercube or mesh interconnection schemes. Figure 17.3(c) provides an illustration of MIMD multiprocessing.

Processor scheduling

The basic functionality of a multiprocessor operating system must include most of what is present in a multitasking operating systems for single-processor machines. However, complexities arise due to

the additional capabilities of multiprocessor hardware and, more importantly, due to the extreme requirements of performance imposed on the multiprocessor operating system. The classic functions of an operating system include the creation and management of dynamic entities such as jobs, processes and tasks (or threads, as they may be termed hereafter). The effectiveness of multiprocessor computing depends on the performance of the primitives used to manage processors and to share resources.

(1) Heavyweight processes to lightweight processes

One way to express parallelism is by using UNIX-like processes sharing parts of their address spaces. Such a process consists of a single address space and a single task of control. Those operating systems’ kernels which support such processes do not distinguish between a task and its address space; they are sometimes referred to as heavyweight tasks. The parallelism expressed using heavyweight tasks is coarse-grained and is too inefficient for general-purpose parallel programming because of the following reasons:

(a) Since the operating system kernel treats a task and its address space as a single entity, they are created, scheduled, and destroyed together. As a result, the creation and deletion of heavyweight tasks is expensive.

(b) Reallocating a processor to a different address space (this operation is a context-switch) is expensive. There is an initial scheduling cost to decide the address space to which the processor should be reallocated, and next, there is a cost for updating the virtual memory mapping registers and transferring the processor between address spaces. Finally, there is a long-term cost associated with cache due to the address space change.

In many operating system kernels, therefore, address spaces and tasks are decoupled, so that a single address space can have more than one execution task. Such tasks are referred to as middleweight or kernel-level tasks when they are managed by the operating system kernel. The advantages of middleweight tasks are: (1) the kernel can directly schedule an application’s task on the available physical processors; (2) these kernel-level tasks offer a general programming interface to the application.

However, kernel-level tasks also exhibit some problems that can make them impractical for use in fine-grained parallel programs. The first of these is that the cost of generality of kernel-level tasks is not acceptable for fine-grained parallel applications. For example, saving and restoring the floating point context in a context switch are expensive and may be unnecessary for a specific application program. The second problem is that a relatively costly protected kernel call is required to invoke any task management operation, including task synchronization, and thirdly a single model represented by one style of kernel-level task is unlikely to have an implementation that is efficient for all parallel programs.

To address the above, some operating system designers have turned to user-level tasks, also known as lightweight tasks. These are managed by run-time library routines linked into each application. A management operation on a user-level task does not require an expensive kernel call, and such tasks enable an application program to use a task management system most appropriate to the problem domain under development.

A lightweight task generally executes in the context of a middleweight or a heavyweight task. Specifically, the task library schedules lightweight tasks on top of middleweight or heavyweight ones, which in turn are scheduled by the kernel on the available physical processors. Such a two-level scheduling policy has some inherent problems:

(a) User-level tasks, typically, do not have any knowledge of kernel events (e.g., processor preemption, I/O blocking and resuming, etc.). As a result, the application library cannot schedule a task on a “just idle” processor.

(b) When the number of runnable kernel-level tasks in a single address space is greater than the number of available processors, these tasks must be multiplexed. This implies that user-level tasks built on top of kernel-level tasks are actually scheduled by the kernel’s task scheduler, which nevertheless has little or no knowledge of the application’s scheduling requirements or current state.

Problems with multi-level scheduling arise from the lack of information flow between the different levels. For two-level scheduling, they can be solved by explicit vectoring of kernel events to the user- level task scheduler, using up-calls called scheduler activations, and by notifying the kernel of user- level events affecting processor allocation. Another solution dynamically controls the number of processes used by applications, which will be discussed later on.

Similarly, a set of kernel mechanisms have been proposed to implement “first-class user-level” tasks addressing the above problem. These mechanisms include shared kernel and user data structures (for asynchronous communication between the kernel and the user), software interrupts (for events that might require action on the part of a user-level scheduler), and a scheduler interface convention that facilitates interactions in user space between dissimilar kinds of tasks. There is another solution that also explores data structure alternatives when implementing user-level task packages. Here, alternative implementations are evaluated in performance for task run queues, idle processor queues, and for spinlock management.

We are not aware of general solutions to the multi-level scheduling problem, other than the actual exchange or configuration of the operating system’s tasks scheduler by application programs, as is often done in real-time systems.

(2) Scheduling policies

A scheduling policy is required by a multiprocessor operating system to allocate available time and processors to a job or a process, either statically or dynamically. Processor load balancing is considered to be a part of a scheduling policy.

These are operated by multiprocessor schedulers. As with other operating system services for parallel machines, schedulers themselves must be structured to be scalable to different-size target machines and to different application requirements.

There are two scheduling methods: static and dynamic. A static scheduler makes a one-time decision per job of how many processors to allocate. Once decided, the job is guaranteed to have exactly that number of processors whenever it is active. This approach offers low run-time scheduling overhead, but it also assumes a stable parallel application. This is a reasonable assumption for many large-scale scientific applications in which parallelism is derived by decomposition of regular data domains.

Recent work, however, is focusing more on dynamic scheduling because most complex large- scale parallel applications exhibit irregular data domains or changes in domain decompositions over time, so that a static processor allocation rapidly becomes inefficient; and also because large-scale parallel machines are often used in multi-user mode, so that scheduling must take into account the requirements of multiple parallel applications sharing a single machine. The dynamic policy occa- sionally exhibits a performance penalty when overhead values are very large. One reason for such performance degradation is a possible high rate of processor reallocation. Hence, some solutions have been suggested for dampening the rate of processor allocation and release, thereby reducing the rate of “useless processor exchange”. However, such a modification to the dynamic policy was found to be detrimental to performance. As for single-processor schedulers, multiprocessor schedulers can be classified as preemptive or non-preemptive. A scheduler can also be classified according to its scheduling granularity, which is determined by the executable unit being scheduled (for example, schedulers differ in that they may schedule individual or groups of processes).

In this subsection, we focus on dynamic scheduling, and on scheduling for shared memory machines, where variations in distances between different processors on the parallel machine are not considered. A few well-accepted multiprocessor scheduling policies are now reviewed.

(a) Single shared ready queue

Research addressing UMA multiprocessors has typically assumed the use of a single ready queue shared by all processors. With this queue, scheduling policies such as first-come first-served (FCFS) or shortest job first (SJF) are easily implemented, and have been evaluated in the literature. More interesting to us are schedulers and scheduling policies directly addressing the primary requirement of a parallel program: if performance improvements are to be attained by use of parallelism, then the program’s processes must be scheduled to execute in parallel.

(b) Co-scheduling

The goal of co-scheduling (or gang scheduling) is to achieve a high degree of simultaneous execution of processes belonging to a single job. This is particularly useful for a parallel application with cooperating processes that communicate frequently. A co-scheduling policy schedules the runnable processes of a job to run simultaneously on different processors. Job preemption implies the simul- taneous preemption of all of its processes. Effectively, the system context-switches between jobs. The scheduling algorithms used in the co-scheduling policy will be explained later in this subsection.

(c) Round-robin (RR) scheduling

Two versions of RR scheduling exist for multiprocessors. The first is a straightforward extension of the single-processor round-robin scheduling policy, which appends its processes to the end of the shared process queue. A round-robin scheduling policy is then invoked on the process queue. The second version uses jobs rather than processes as the scheduling unit, so the shared process queue is replaced by a shared job queue. Each entry of this queue itself contains a queue holding its processes. The scheduling algorithms supporting the RR policy are discussed in section (3) below.

(d) Dynamic partitioning

Dynamic partitioning (also known as the process control with processor partitioning) policy has a goal of minimizing context switches, so that less time is spent rebuilding a processor’s cache. This approach is based on the hypothesis that an application performs best when the number of runnable processes is the same as the number of processors. As a result, each job is dynamically allocated an equal fraction of the total number of processors, but none is allocated more processors than it has runnable processes.

Each application program periodically polls a scheduling server to determine the number of processes it should ideally run. If the ideal number is less than the actual number, the process suspends some of its processes, if possible. If the ideal number is greater than the actual number, a process wakes up a previously suspended process. This policy has limited generality since it requires interactions between user processes and the operating system scheduler, and it also requires user programs to be written such that their processes can be suspended and woken up during execution.

(e) Hand-off scheduling

A kernel level scheduler accepts user hints. Two kinds of hints exist: (1) discouragement hints, which is used to discourage the scheduler from running the current task; it may be either mild and strong, or weak; (2) hand-off hints, used to suggest that the scheduler runs a specific task. When using a hand-off hint, the current task hands off the processor to another task without intermediate scheduler interference. Such schedulers are better known as hand-off schedulers. Experiments with scheduling hints have shown that they can be used to improve program performance, particularly when program synchronization is exploited (e.g., the requester task hands off the processor to the holder of the lock) and when interprocess communication takes place (e.g., the sender hands the processor off to the receiver).

(3) Scheduling algorithms

There are three different co-scheduling algorithms: matrix, continuous and undivided. In a matrix algorithm, processes of arriving jobs are arranged in a matrix with P columns and a certain number of rows, where P is the total number of processors in the system. This arrangement is such that all the processes in a job reside in a same row. The scheduling algorithm uses a round-robin mechanism to multiplex the system between different rows of the matrix, so that all the processes in a row are co- scheduled.

A problem with the matrix algorithm is that a hole in the matrix may result in a processor being idle even though there are runnable processes. The continuous algorithm addresses this problem by arranging all processes in a linear sequence of activity slots. The algorithm considers a window of P consecutive positions in the sequence at a particular moment. When a new job arrives, the window is checked to see whether there are enough empty slots to satisfy its requirements. If not, the window is moved one or more positions to the right, until the leftmost activity slot in the window is empty but the slot just outside the window to the left is full. This process is repeated until a suitable window position is found to contain the entire job, or the end of the linear sequence is reached. Scheduling consists of moving the window to the right at the beginning of each time slice until the leftmost process in the window is the leftmost process of a job that was not co-scheduled in the previous time slice.

The most serious problem with the continuous algorithm is analogous to external fragmentation in a segmentation system. A new job may be split into fragments, which can result in unfair scheduling for large, split jobs compared to small contiguous jobs. This issue has been addressed by designing an undivided algorithm, which is identical to the continuous algorithm except that all of the processes of each new job are required to be contiguous in the linear activity sequence. This algorithm can be slightly modified to eliminate some of its performance problems: when a job arrives, its processes are appended to the end of a linked list of processes. In this case, scheduling is done by moving a window of length equal to the number of processors over the linked list. Each process in the window receives one quantum of service on a processor. At the end of this, the window is moved down the linked list until its first slot is over the first process of a job that was not completely co-scheduled in the previous quantum. When a process within the window is not runnable, the window is extended by one process and the non-runnable process is not scheduled. All processors that switch processes at the end of a quantum do so at the same time. A second algorithm modification improves expected performance for correlated workloads. This modification applies to the movement of the window. At the end of each of quantum, the window is only moved to the first process of the next job, even if it was co-scheduled in the previous time slice.

Scheduling policy with a round robin is done on the jobs. The job in the front of the queue receives P quanta of size Q, where P is the number of processors in the system and Q is the quantum size. If a job has fewer processes than P, then the total quanta size, which is equal to PQ, is divided equally among the processes. If the number of processes in a job exceeds P, then there are two choices. The first choice is same as the previous case, i.e., divide the total quanta size PQ equally among all processes. Alternatively, one can choose P processes from the job in a round-robin fashion, each process executing for one quantum. The first alternative has more scheduling overhead than the second.

(4) Some remarks

The performance of an application worsens considerably when the number of processes exceeds the total number of processors. This decreased performance may come from several factors:

(a) A process may be preempted while inside a spinlock-controlled critical section, while the other processes of the same application “busy wait” to enter the critical section. This problem is particularly acute for fine-grain parallel programs. Identical problems arise when programs’ processes are engaged in producer/consumer relationships.

(b) Frequent context switches occur when the number of processes greatly exceeds the number of processors.

(c) When a processor is interleaved between multiple address spaces, cache misses can be a major source of performance degradation. Careful application design and co-scheduling may handle problems associated with spinlock-controlled critical sections, and those with producer- consumer processes, but they do not address performance degradation due to cache corruption or frequent context switches.

A more direct solution is proposed, which describes a task scheduler that avoids preempting processes inside critical sections. This approach combines co-scheduling and preemption avoidance for critical sections and combines multiple processes to form a group. The scheduling policy of a group can be set so that either all processes in the group are scheduled and preempted simultaneously, or individual processes are scheduled and preempted normally, or processes in the group are never preempted. An individual process may choose to override its group scheduling policy, which is flexible, but leaves specific solutions of the critical section problem to user code. Problems with cache corruption and context-switch frequency are addressed by evaluating the performance of several multiprocessor scheduling policies based on the notion of processor affinity. A process’s processor affinity is based on the contents of the processor’s cache. The basic policy schedules a process on a processor on which it last executed, hoping that a large percentage of its working set is still present in the processor’s cache. Since the policy inherently discourages process migration, it may lead to severe load imbalance. Similarly, affinity (for local memory) also plays a vital role in scheduling processes in a NUMA machine; the context of a process resides mostly near the processor on which the process executed last. The effect of cache affinity on kernel processor scheduling discipline for multiple-programmed, shared-memory multiprocessors shows that the cache effects due to processor reallocation can be significant.

Memory management

Memory management for UMA multiprocessors is conceptually similar to that in a multitasking operating system for a single-processor machine. As mentioned earlier, in UMA architecture, memory access times are equal for all processors, but the underlying architecture typically supports some degree of parallelism in global memory access. As a result, even for UMA machines, operating system writers must exploit the available hardware parallelism when implementing efficient memory management. More interesting problems arise for NUMA and NORMA machines.

(1) Shared virtual memory

Memory management services and implementations are generally dependent on the operating system, as well as the underlying machine architecture. Some effort has focused on designing memory management functionalities and interfaces which are independent of the machine architecture and the operating system kernel. For example, virtual memory management is machine- and operating- system-independent. In the operating systems that follow this design, the machine-dependent portion of the virtual memory subsystem is implemented as a separate module. All information necessary for the management of virtual memory is maintained in machine-independent data structures that contain only the mappings necessary to run the current mix of programs. Similarly, a scalable, kernel- independent, generic memory management interface is suitable for various architectures (e.g. paged and or segmented) and implementation schemes.

Some operating systems allow applications to specify the protection level (inaccessible, read-only, read-write) of pages, and allow user programs to handle protection violations. Several user-level algorithms have been developed that make use of page-protection techniques, and analyze their common characteristics, in an attempt to identify the virtual memory primitives the operating system should provide to user processes.

(2) NUMA and NORMA memory management

A NUMA multiprocessor organization leads to memory management design choices that differ markedly from those that are common in systems designed for single processors and UMA multi- processors. NUMA architectures implementing a shared-memory programming model typically expose the existing memory access hierarchy to the application program.

We will briefly discuss several memory management algorithms for NUMA multiprocessors. Those described below are categorized by whether they migrate and/or replicate data. An algorithm would migrate data to the site where they are accessed in an attempt to exploit locality in data accesses, and decrease the number of remote accesses. The others replicate data so that multiple read accesses can happen at the same time, using local accesses.

(a) Migration algorithm

In the migration algorithm, the data are always migrated to the local memory of the processor which accesses them. If an application exhibits a high locality of reference, the cost of data migration is amortized over multiple accesses. Such an algorithm may cause thrashing of pages between local memories. One disadvantage of the migration algorithm is that only the tasks on one processor can access the data efficiently. An access from a second processor may cause another migration.

(b) Read-replication algorithm

Replication reduces the average cost of read operations, since it allows a read to be simultaneously executed locally at multiple processors. However, a few write operations become more expensive, since a replica may have to be invalidated or updated to maintain consistency. If the ratio of reads over writes is large, the extra expense of the write operation may be offset. Replication can be naturally added to the migration algorithm for better performance.

(c) Full-replication algorithm

Full replication allows data blocks to be replicated even while being written to, so keeping the data copies consistent is a major concern. A number of algorithms are available for this purpose. One of them is similar to the write-update algorithm for cache consistency. Some specific NUMA memory management schemes are described for individual parallel operating systems in section 17.2.

(d) Page placement

A page placement mechanism is implemented to automatically assign pages of virtual memory to appropriately located physical memory in the operating system on multiprocessor machines. A simple strategy uses local memory as a cache over global, managing consistency with a directory-based ownership protocol similar to that used for distributed shared virtual memory. Dynamic page replacement policies for NUMA multiprocessors support both migration and replication, use a directory-based invalidation scheme to ensure the coherence of replicated pages, and use a freeze and defrost strategy to control page bouncing. Such a parameterized NUMA memory management policy can be tuned for architectural as well as application differences.

(e) Weak memory

Some research has explored how shared memory may be represented on multiprocessor machines such that its performance can approximate that of message-passing systems. For example, some memory models exploit the fact that synchronization is used to control access to a shared state, which allows the underlying system to weaken memory consistency requirements. The resulting, weakened shared memory abstraction presented to programmers may be implemented efficiently, because strong consistency, and therefore, inter-processor communication is not required for all memory accesses. Other models of shared memory that were developed for distributed architectures exploit programmer directives to reduce the cost of coherence maintenance, or they provide explicit primitives with which users can maintain application-specific notions of coherence of shared state.

Process control

When multiple cooperating processes execute simultaneously within a multiprocessor, synchroniza- tion primitives are needed for concurrency control. When multiple processes share an address space, synchronization is required for shared memory consistency. Let us now take a close look at how this synchronization actually works in a multiprocessor. To start with, if a process on a single processor makes a system call that requires accessing some critical kernel table, the kernel code can simply disable interrupts before touching the table. It can then do its work knowing that it will be able to finish without any other process sneaking in and touching the table before it is finished. On a multiprocessor, disabling interrupts affects only the CPU doing the disable. Other CPUs continue to run and can still touch the critical table. As a consequence, a proper protocol must be used and respected by all CPUs to guarantee that mutual exclusion works.

Two fundamental semantics for single-processor operating systems also work for multiprocessor synchronization: mutual exclusion and event broker, discussed in detail in Chapter 16. This subsection briefly reviews some common and efficient synchronization constructs supported by recent multi- processor operating systems, where the purpose of mutual exclusion is that when one process in one processor locks a critical section, all other processes, running anywhere, also have access blocked to this critical section.

(1) Locks

Multiprocessor operating systems typically support multiple types of lock.

(a) Spin and blocking locks

Spin locks are the most primitive type, and can be based on different mechanisms including queue locking, ticket lock and priority locking. When a lock is busy, a waiting process spins (busy-waits) until it is released. Most hardware supports spin locks by specific instructions in their instruction sets; each of these instructions allows one indivisible blocking operation (atomic operation). When using a blocking lock, a waiting process (also called a contender process) blocks until awakened by the process releasing the lock. Such locks are also known as mutex locks.

(b) Read-write locks

A read-write lock maintains a pair of associated locks, one for read-only operations and one for write-only. The read lock may be held simultaneously by multiple reader threads, as long as there are no writers. The write lock is exclusive. A read-write lock allows either multiple readers or a single writer to enter a critical section at the same time. The waiting processes may either spin or block depending on whether the lock is implemented as a spinning read-write lock or a blocking read-write lock.

(c) Configurable locks

These locks allow applications to alter the waiting (spin, block or both) mechanism dynamically and as well as the request-handling mechanism (how the lock is scheduled). Configurable locks demonstrate that combined locks (locks that both spin and block while waiting) improve application performance considerably, compared to simple spin or blocking locks. Furthermore, hints from lock owners may be used to configure a lock, and improve its waiting strategy (advisory or speculative locks).

(d) Barrier locks

A barrier lock implements a barrier in a parallel program, which provide a means of ensuring that no processes advance beyond a particular point until all have arrived there. Once a process reaches a barrier, it is allowed to proceed if and only if all other cooperating processes reach the barrier. With a barrier lock, a waiting process may either spin or block depending on the implementation of the lock. There are three main barrier constructs: centralized barrier, three barrier, and dissemination barrier.

(e) Structured locks

In distributed-memory machines, multiprocessor operating system constructs (e.g., I/O, exception handling, multicast communications, etc.) are physically distributed in order to offer efficient access to the global operating system functionalities. Synchronization is no exception because it is a computa- tion that must be performed globally for many physically distributed processes and processors. As a result, this must be performed using explicit communication structures such as rings or spanning trees, which touch upon all members of the group of processes to be synchronized. In essence, a lock in a distributed-memory machine is a fragmented and distributed abstraction shared among several independently executable processes, where its importance is demonstrated by explicit support in hardware in several parallel machines. However, in contrast to the operating systems for UMA and NUMA multiprocessors, synchronization abstractions for distributed-memory machines can often be optimized substantially if they can be made programmable, or if it can be combined with other communications being performed in application programs.

(2) Other synchronization constructs

There are three further synchronization constructs, as explained below, used for multiprocessor operating systems.

(a) Test-and-set instructions

The test-and-set instruction is used to both test and (conditionally) write to a memory location as part of a single indivisible (atomic) operation. This means setting a value, but first performing some test (such as, is the value equal to another given value). If the test fails, the value is not set. If multiple processes may access the same memory and if a process is currently performing a test-and-set, no other process may begin another test-and-set until the first process is done. CPUs may use test-and-set instructions offered by other electronic components, such as dual-port RAM (DPRAM); CPUs may also offer such an instruction themselves.

(b) Condition variables

Condition variables make it possible for a task (or thread) to suspend its execution while awaiting an action by some other task. A condition variable is associated with some shared variables protected by a mutex and a predicate (based on the shared variables). A process acquires the mutex and evaluates the predicate. If it is not satisfied, the process waits on the condition variable. Such a wait atomically releases the mutex and suspends execution of the process. After a process has changed the shared variables so that the predicate is satisfied, it may signal a waiting task. This allows blocked tasks to resume action, to re-acquire the mutex, and to re-evaluate the predicate to determine whether to proceed or wait.

(c) Event brokers

Events are mainly used to control task orderings. A process may wait on an event; it blocks until the event occurs. Upon event occurrence, a signal wakes up one or all waiting processes. Events come in different flavors. A state (happened or not happened) may or may not be associated with an event. An account may be associated with an event, which enables a process to wait for a particular occurrence of an event. More complicated event structures have been shown to be useful for several application domains and target machines, most prominently including the event-handling facilities for active messages or the synchronization points designed for real-time applications.

(3) Interprocess communications

Cooperating processes or tasks in a multiprocessor environment often communicate to help with synchronization and concurrency. Such communication employs one of two schemes: shared variables or message passing. As mentioned earlier, when two processes communicate using shared memory, synchronization is required to guarantee memory consistency. The previous section described a few popular synchronization primitives, whilst this one focuses on interprocess communication without using explicit shared variables.

In a shared memory multiprocessor, message-passing primitives between disjoint address spaces may be implemented using global memory. Exchange of messages is a more abstract form of communication than accessing shared memory locations. Message passing subsumes communication, buffering, and synchronization. Multiprocessor operating systems have experimented with a large variety of different communication abstractions, including ports, mailboxes, links, etc.

From an implementation point of view, such abstractions are kernel-handled message buffers, which may be either unidirectional or bidirectional. A process may send to or may receive messages from them. There may be rights (such as send, receive, or ownership rights) associated with these entities. Different operating systems define different semantics on these abstractions.

The two basic communication primitives in all such abstractions are both send and receive, which can again come in many different flavors. Sends and receives may be blocking (a process invoking a primitive blocks until the operation is complete), or they may be non-blocking (a process does not wait for the communication to be complete), or they may be conditional vs. unconditional. Communication between processes using these primitives may be synchronous or asynchronous, etc.

Many issues must be considered when designing an interprocess communication mechanism; they are reviewed in numerous surveys of distributed operating systems, and are not discussed in detail here. Issues include (1) whether the underlying hardware supports reliable or unreliable communi- cation, (2) whether send and receives are blocking or non-blocking, (3) whether messages are typed or un-typed and of variable or fixed length, (4) how message queues can be kept short, (5) how to handle queue overflows, (6) how to support message priority (it may be necessary that some messages are handled at higher priorities than others), (7) how to transmit names, (8) protection issues, and (9) how kernel and user programs must interact to result in efficient message transfers. Communication issues specific to hypercube or mesh machines are reviewed elsewhere.

Most operating systems for shared-memory multiprocessor support cross-address space remote procedure calls (RPC) as a means of interprocess communication. RPC is a higher-level abstraction than message passing, that hides the message communication layer beneath a procedure call layer. It allows efficient and secure communications. Furthermore, cross-address space RPC can be made to look identical to cross-machine RPC, except that messages do not go out over the network and, in most cases, only one operating system kernel is involved in RPC processing. Otherwise, the same basic paradigm for control and data transfer is used. Messages are sent by way of the kernel between independent tasks bound to different address spaces. The use and performance of cross-address space RPC is discussed extensively in section 17.2.

Related posts:

Incoming search terms:

- difference between multiprocessor and distributed system

- classification of multiprocessor system in advance operating system design

- introduction to multiprocessor and distributed operating system

- multiprocessor and distributed os

- multiprocessor systems in advance operating system design

- difference between multiprocessor and distributed System in tabular form

- multiprocessor and distributed operating system

- Multiprocessor and distributed system

- difference between distributed system and multiprocessor system

- Multiprocessor system in ds

- multiprocessor system in advance operating system design unit

- compare and contrast the multiprocessor and distributed operating system

- multi processor and distributed operating system

- multi processor and distributed o/s

- multiprocessor system of classification in advance operating system design

- Difference between multiprocessor and distributed operating system

- switched multiprocessor in distributed operating system

- difference between multiprocessor and distributed os

- Multiprocessor and distributed oprating systems

- multiprocessor in distributed systems

- multiprocessor OS functions & requirements in advance operating system design

- Comparison between multiprocessor and distributed systems in a tabular form

- bus multiprocessor in distributed systems

- multiprocessor and distrubution system different between in o/s

- Multi processing os vs distributed os

- explain the similarties of multiprocessor and distributed system

- explain the similaries and difference between multi processes and distributed os

- general architecture of multi processor and the distributed operating system

- explain numa algorithm in distributed operating system

- differentiate distributed system from multiprocessor system

- differnce between multiprocessing and distrubutive system

- discuss in detail the architecture and organization of multiprocessor and distributed os ?

- distributed operating system vs multiprosser operating system

- distributed system multiprocessor operation system

- explain general architecture of multiprocessor and the distributed operating system

- explain in detail multiprocessoe and distributed system in os

- explain in detail architecture and orgainization of multiprocessor and distributed system

- define uma numa norma multiprocessing

- write about bus multiprocessor in distributed systems

- basic issues in multiprocessor and distributed operating systems

- brief study to multiprocessor and distributed operating systems

- compare and contrast multiprocessor and distributed operating system

- compare and contrast multiprocessor and distributed system

- compare distributed and multiprocessor

- diff between distributed operating system and multiprocessor operating system

- differenc between multiprocessor and distributed operating system

- difference between distributed and multiprocessor operating system

- difference between distributed system and multiprocessor system in tabular form

- difference between multiprocesses and distributedoperatingsystem

- difference between multiprocessor and multicomputer based on architecture resource sharing intrrprocessor communications in tabular form