Chapter 9. Expansion Buses

Given an old house, closets packed tighter than the economy section of a Boeing 737, and a charge card, most people will broach the idea of adding to their homes. After all, when you need more room, nothing seems more natural than building an addition. If you have more ambition than second thoughts, the most humble bungalow can become a manse of overwhelming grandeur with a mortgage to match.

Making the same magic on your computer might not seem as natural, but it is. Adding to your computer is easier than adding to your house—and less likely to lead to bankruptcy and divorce—because, unlike a house, computers are designed to be added to. They sprout connectors and ports like a Chia pet does hair.

Computers are different, however, in that the best way to add to them is not on the outside. Odd as it may seem, you can effectively increase the power and capabilities of most computers by expanding them on the inside.

The design feature key to this interior growth is the expansion bus. By plugging a card into your computer’s expansion bus, you can make the machine into almost anything you want it to be—within reason, of course. You can’t expect to turn your computer into a flying boat, but a video production system, international communications center, cryptographic analyzer, and medical instrument interface are all easily within the power of your expansion bus. Even if your aim is more modest, you’ll need to become familiar with this bus. All the most common options for customizing your computer—modems, network interface cards, and television tuners—plug into it. Even if your computer has a modem, sound, and video built in to its motherboard, forever fixed and irremovable, these features invisibly connect to the computer’s expansion bus.

The expansion bus is your computer’s electrical umbilical cord, a direct connection with the computer’s logical circulatory system that enables whatever expansion brainchild you have to link to your system. The purpose of the expansion bus is straightforward: It enables you to plug things into the machine and, hopefully, enhance the computer’s operation.

Background

Expansion buses are much more than the simple electrical connections you make when plugging in a lamp. Through the bus circuits, your computer transfers not only electricity but also information. Like all the data your computer must deal with, that information is defined by a special coding in the sequence and pattern of digital bits.

The bus connection must flawlessly transfer that data. To prevent mistakes, every bus design also includes extra signals to control the flow of that information, adjust its rate to accommodate the speed limits of your computer and its expansion accessories, and adjust the digital pattern itself to match design variations. Each different bus design takes its own approach to the signals required for control and translation. Some use extra hardware signals to control transfers across the bus. Others use extensive signaling systems that package information into blocks or packets and route them across the bus by adding addresses and identification bytes to these blocks.

Expansion bus standards define how your computer and the cards you add to it negotiate their data transfers. As a result, the standard that your computer’s bus follows is a primary determinant of what enhancement products work with it. Nearly all of today’s computers follow a single standard, the oft-mentioned Peripheral Component Interconnect (PCI), which ensures that the most popular computer cards will work inside them.

Older computers often had two expansion buses: a high-speed bus (inevitably PCI) that was the preferred expansion connection and a compatibility bus to accommodate older add-in devices that frugal-minded computer owners refused to discard. The compatibility bus followed the Industry Standard Architecture design, often called the PC bus. PCI replaced ISA because of speed: PCI was about eight times faster than ISA. In addition, most new computers have an accelerated graphics port (AGP) for their video cards. Although AGP is a high-speed variation on PCI, it is not compatible with ordinary expansion cards. But time has taken its toll on PCI as well, and engineers are now eyeing higher-performance alternatives. Some of these, such as PCI-X, are straightforward extrapolations of the basic PCI design. Others, such as HyperTransport and InfiniBand, represent new ways of thinking about the interconnections inside a computer.

Notebook computers have their own style of expansion buses that use PC Cards rather than the slide-in circuit boards used by PCI. This design follows the standards set by the Personal Computer Memory Card International Association (PCMCIA). The basic PC Card design allows for performance and operation patterned after the old ISA design, but the CardBus design expands on PC Card with PCI-style bus connections that are backward compatible. That means a CardBus slot will accept either a CardBus or PC Card expansion card (with one exception—fat “Type 3” cards won’t fit in ordinary skinny slots), so your old cards will happily work even in the newest of computers.

Unlike those of desktop computers, the expansion buses of notebook machines are externally accessible—you don’t have to open up the computer to slide in a card. External upgrades are, in fact, a necessity for notebook computers in that cracking open the case of a portable computer is about as messy as opening an egg and, for most people, an equally irreversible process. In addition, both the PC Card and CardBus standards allow for hot-swapping. That is, you can plug in a card or unplug it while the electricity is on to your computer and the machine is running. The expansion slots inside desktop computers generally do not allow for hot-swapping.

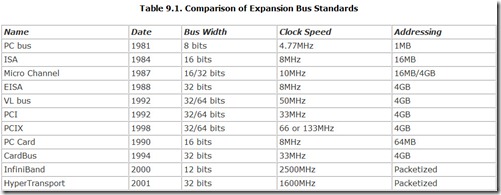

Expansion board technology is mature, at least for now. The necessary standards are in place and stable. You can take it for granted that you can slide a PCI expansion board in a PCI slot and have it automatically configure itself and operate in your computer. You can even select from a wealth of PC Card and CardBus modules for your notebook computer—all without any concern about compatibility. Although those statements sound so obvious as to be trivial, reaching this point has been a long journey marked by pragmatism and insight, cooperation and argument, and even battles between industry giants. At least 11 major standards for computer expansion have been the result, as summarized in Table 9.1.

The PC bus, so called because it was the expansion bus used by the first IBM personal computer, was little more than an extension of the connections from the 8088 microprocessor, with a few control signals thrown in. The AT bus was IBM’s extrapolation on the PC bus, adding the signals needed to match the 80286 microprocessor used in the IBM personal computer AT (for Advanced Technology). Compaq Corporation altered the design by moving memory to a separate bus when the company introduced its Deskpro 386 in 1986. This basic design was made into an official standard by the Institute of Electrical and Electronics Engineers as Industry Standard Architecture. Micro Channel Architecture represented IBM’s take on a new, revolutionary bus design, but did not survive poor marketing. EISA, short for Extended ISA, was an industry response to Micro Channel that also found little acceptance. Neither of the new buses (Micro Channel nor EISA) made a visible improvement on performance. The VESA Local Bus (or VL Bus, created by the Video Electronics Standards Association) did offer a visible improvement because it allowed for faster video displays. It was a promising new bus standard, but it was out-promoted (and out-developed) by Intel’s Peripheral Component Interconnect (PCI), which became the most popular expansion bus ever used by the computer industry.

Peripheral Component Interconnect

No one might have predicted that Peripheral Component Interconnect would become the most popular expansion bus in the world when Intel Corporation introduced it in July 1992. PCI was long awaited as a high-speed expansion bus specification, but the initial announcement proved to be more and less than the industry hoped for. The first PCI standard defined mandatory design rules, including hardware guidelines to help ensure proper circuit operation of motherboards at high speeds with a minimum of design complications. It showed how to link together computer circuits—including the expansion bus—for high-speed operation but failed to provide a standard for the actual signals and connections that would make it a real expansion bus.

The design first took the form of a true expansion bus with Intel’s development of PCI Release 2.0 in May 1993, which added the missing pieces to create a full 64-bit expansion bus. Although it got off to a rocky start when the initial chipsets for empowering it proved flawed, computer-makers have almost universally announced support. The standard has been revised many times since then. By the time this book appears, Version 3.0 should be the reigning standard.

Architectural Overview

The original explicit purpose of the PCI design was to make the lives of those who engineer chipsets and motherboards easier. It wasn’t so much an expansion bus as an interconnection system, hence its pompous name (Peripheral Component is just a haughty way of saying chip, and Interconnect means simply link). And that is what PCI is meant to be—a fast and easy chip link.

Even when PCI was without pretensions of being a bus standard, its streamlined linking capabilities held promise for revolutionizing computer designs. Whereas each new Intel microprocessor family required the makers of chipsets and motherboards to completely redesign their products with every new generation of microprocessor, PCI promised a common standard, one independent of the microprocessor generation or family. As originally envisioned, PCI would allow designers to link together entire universes of processors, coprocessors, and support chips without glue logic—the pesky profusion of chips needed to match the signals between different integrated circuits—using a connection whose speed was unfettered by frequency (and clock) limits. All computer chips that follow the PCI standard can be connected together on a circuit board without the need for glue logic. In itself, this could lower computer prices by making designs more economical while increasing reliability by minimizing the number of circuit components.

A key tenet of the PCI design is processor independence; that is, its circuits and signals are not tied to the requirements of a specific microprocessor or family. Even though the standard was developed by Intel, the PCI design is not limited to Intel microprocessors. In fact, Apple’s PowerMac computers use PCI.

Bus Speed

PCI can operate synchronously or asynchronously. In the former case, the speed of operation of the PCI bus is dependent on the host microprocessor’s clock, and PCI components are synchronized with the host microprocessor. Typically the PCI bus will operate at a fraction of the speed of the host microprocessor’s external interface. With today’s high microprocessor speeds, however, the bus speed often is synchronized to the system bus or front-side bus, which may operate at 66, 100, or 133MHz. (The 400 and 533MHz buses used by the latest Pentium 4 chips actually run at 100 and 133MHz, respectively, and transfer data four times per clock cycle to achieve their high data rates.) The PCI bus can operate at speeds up to 66MHz under the revised PCI 2.1 (and later) standards. PCI-bus derivations, such as PCI-X, use this higher speed.

PCI is designed to maintain data integrity at operating speeds down to 0 Hz, a dead stop. Although it won’t pass data at 0 Hz, the design allows notebook computers to freely shift to standby mode or suspend mode.

Although all PCI peripherals should be able to operate at 33MHz, the PCI design allows you to connect slower peripherals. To accommodate PCI devices that cannot operate at the full speed of the PCI bus, the design incorporates three flow-control signals that indicate when a given peripheral or board is ready to send or receive data. One of these signals halts the current transaction. Consequently, PCI transactions can take place at a rate far lower than the maximum 33MHz bus speed implies.

The PCI design provides for expansion connectors extending the bus off the motherboard, but it limits such expansion to a maximum of three connectors (none are required by the standard). As with VL bus, this limit is imposed by the high operating frequency of the PCI bus. More connectors would increase bus capacitance and make full-speed operation less reliable.

To attain reliable operation at high speeds without the need for terminations (as required by the SCSI bus), Intel chose a reflected rather than direct signaling system for PCI. To activate a bus signal, a device raises (or lowers) the signal on the bus only to half its required activation level. As with any bus, the high-frequency signals meant for the slots propagate down the bus lines and are reflected back by the unterminated ends of the conductors. The reflected signal combines with the original signal, doubling its value up to the required activation voltage.

The basic PCI interface requires only 47 discrete connections for slave boards (or devices), with two more on bus-mastering boards. To accommodate multiple power supply and ground signals and blanked off spaces to key the connectors for proper insertion, the physical 32-bit PCI bus connector actually includes 124 pins. Every active signal on the PCI bus is adjacent to (either next to or on the opposite side of the board from) a power supply or ground signal to minimize extraneous radiation.

Multiplexing

Although the number of connections used by the PCI system sounds high, Intel actually had to resort to a powerful trick to keep the number of bus pins manageable. The address and data signals on the PCI bus are time-multiplexed on the same 32 pins. That is, the address and data signals share the same bus connections (AD00 through AD31). On one clock cycle, the combined address/data lines carry the address values and set up the location to move information to or from. On the next cycle, the same lines switch to carrying the actual data.

This address/data cycling of the bus does not slow the bus. Even in nonmultiplexed designs, the address lines are used on one bus cycle and then the data lines are used on the next. Moreover, PCI has its own burst mode that eliminates the need for alternation between address and data cycles.

PCI achieves its multiplexing using a special bus signal called Cycle Frame (FRAME#). The appearance of the Cycle Frame signal identifies the beginning of a transfer cycle and indicates the address/data bus holds a valid address. The Cycle Frame signal is then held active for the duration of the data transfer.

Burst Mode

During burst mode transfers, a single address cycle can be followed by multiple data cycles that access sequential memory locations, limited only by the needs of other devices to use the bus and other system functions (such as memory refresh). The burst can continue as long as the Cycle Frame signal remains active. With each clock cycle that Cycle Frame is active, new data is placed on the bus. If Cycle Frame is active only for one data cycle, an ordinary transfer takes place. When it stays active across multiple data cycles, a burst occurs.
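The multiplexing and burst behavior described above can be sketched as a sequence of bus phases. This is a simplified model under stated assumptions: it assumes zero wait states, linear (incrementing) addressing, and 32-bit transfers, and it ignores the FRAME#/IRDY#/TRDY# handshake and bus turnaround clocks. The `Phase` type and `burst_phases` function are hypothetical names for illustration only.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Phase:
    clock: int        # clock number within the transaction (0 = address phase)
    kind: str         # "address" or "data"
    ad: int           # value driven on the shared AD[31:0] lines this clock
    target_addr: int  # memory location the target associates with this clock


def burst_phases(start_addr: int, words: Sequence[int]) -> List[Phase]:
    """Model one PCI burst: a single address phase followed by one data
    phase per 32-bit word, all carried on the same multiplexed AD lines."""
    if start_addr % 4:
        raise ValueError("start address must be doubleword aligned")
    # Clock 0: the AD lines carry the starting address.
    phases = [Phase(0, "address", start_addr, start_addr)]
    # Later clocks: the same lines carry data; the target advances the
    # address on its own, so no further address phases are needed.
    for i, word in enumerate(words):
        phases.append(Phase(i + 1, "data", word & 0xFFFFFFFF, start_addr + 4 * i))
    return phases


for p in burst_phases(0x1000, [0xAAAA, 0xBBBB, 0xCCCC]):
    print(p.clock, p.kind, hex(p.ad), hex(p.target_addr))
```

A single-word transfer yields one address phase and one data phase; a longer burst spreads that one address phase across many data phases.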

This burst mode underlies the 132MBps throughput claimed for the 32-bit PCI design. (With the 64-bit extension, PCI claims a peak transfer rate of 264MBps.) Of course, PCI attains that rate only during the burst. The initial address cycle steals away a bit of time and lowers the data rate (the penalty for which declines with the increasing length of the burst). System overhead, however, holds down the ultimate throughput.
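The 132MBps and 264MBps figures follow from multiplying the bus clock by the bus width, and the address-cycle penalty can be estimated the same way. This sketch assumes an exact 33MHz clock (the nominal PCI clock is 33.33MHz, which gives about 133MBps), one transfer per clock, and a single clock of overhead per burst for the address phase; real transactions carry additional overhead. The function names are illustrative.

```python
PCI_CLOCK_HZ = 33_000_000  # assumed exact 33MHz clock, matching the 132MBps figure


def peak_mbps(bus_bits: int, clock_hz: int = PCI_CLOCK_HZ) -> float:
    """Peak rate in MBps (10**6 bytes/s): one bus-width transfer per clock."""
    return clock_hz * (bus_bits // 8) / 1e6


def burst_mbps(bus_bits: int, data_phases: int, overhead_clocks: int = 1,
               clock_hz: int = PCI_CLOCK_HZ) -> float:
    """Average rate over one burst: data clocks divided by all clocks used,
    where overhead_clocks stands for the address phase (assumed one clock)."""
    total_clocks = overhead_clocks + data_phases
    return peak_mbps(bus_bits, clock_hz) * data_phases / total_clocks


for n in (1, 4, 16, 64):
    print(f"{n:3d}-word burst: {burst_mbps(32, n):6.1f} MBps")
```

A one-word transfer spends half its clocks on the address, while a long burst approaches, but never reaches, the peak rate.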

PCI need not use all 32 (or 64) bits of the bus’s data lines. Four Byte Enable signals (C/BE0# through C/BE3#) indicate which of the four byte-wide lanes of PCI’s 32-bit bus contain valid data. In 64-bit systems, another four signals (C/BE4# through C/BE7#) indicate which of the additional four byte lanes are active.
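How a receiver might apply the Byte Enable signals can be sketched for the 32-bit case. The sketch relies on two properties of the PCI signals: each C/BEn# line governs byte lane n (AD[8n+7:8n]), and, as the # suffix indicates, the lines are active low, so a 0 bit enables its lane. The helper names are hypothetical.

```python
from typing import List


def enabled_lanes(cbe_n: int) -> List[int]:
    """Byte lanes (0 = AD[7:0] ... 3 = AD[31:24]) that carry valid data.

    C/BE[3:0]# are active low: a 0 in bit n enables byte lane n.
    """
    if not 0 <= cbe_n <= 0xF:
        raise ValueError("C/BE[3:0]# is a 4-bit value")
    return [lane for lane in range(4) if not (cbe_n >> lane) & 1]


def valid_data(ad: int, cbe_n: int) -> int:
    """Keep only the enabled bytes of a 32-bit AD value; zero the rest."""
    mask = 0
    for lane in enabled_lanes(cbe_n):
        mask |= 0xFF << (8 * lane)
    return ad & mask


# Only the lower 16 bits are valid (C/BE1# and C/BE0# driven low).
print(hex(valid_data(0x12345678, 0b1100)))
```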

To accommodate devices that cannot operate at the full speed of the PCI bus, the design incorporates three flow-control signals: Initiator Ready (IRDY#, at pin B35), Target Ready (TRDY#, at pin A36), and Stop (STOP#, at pin A38). Target Ready is activated to indicate that a bus device is ready to supply data during a read cycle or accept it during a write cycle. When Initiator Ready is activated, it signals that a bus master is ready to complete an ongoing transaction. A Stop signal is sent from a target device to a master to stop the current transaction.

Data Integrity Signals

To ensure the integrity of information traversing the bus, the PCI specification makes mandatory the parity-checking of both the address and data cycles. One bit (signal PAR) is used to confirm parity across 32 address/data lines and the four associated Byte Enable signals. A second parity signal (PAR64) is used in 64-bit implementations. The parity signal lags the data it verifies by one cycle, and its state is set for even parity: the total number of 1s across the 32 address/data lines, the four Byte Enable lines, and PAR itself is always even.
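The even-parity rule for PAR can be sketched for one 32-bit address or data phase. The sender computes PAR from the 36 lines; the receiver repeats the count when PAR arrives one clock later. The function names are illustrative, and the sketch omits PAR64 and all timing.

```python
def popcount(value: int) -> int:
    """Number of 1 bits in a non-negative integer."""
    return bin(value).count("1")


def pci_par(ad: int, cbe_n: int) -> int:
    """PAR for one phase: even parity over AD[31:0] and C/BE[3:0]#, so the
    36 lines plus PAR always hold an even number of 1s."""
    return (popcount(ad & 0xFFFFFFFF) + popcount(cbe_n & 0xF)) & 1


def parity_ok(ad: int, cbe_n: int, par: int) -> bool:
    """Receiver-side check, made once PAR arrives one clock after the data."""
    return (popcount(ad & 0xFFFFFFFF) + popcount(cbe_n & 0xF) + par) % 2 == 0


sent = pci_par(0x12345678, 0b0000)
print(parity_ok(0x12345678, 0b0000, sent))  # data arrived unchanged
print(parity_ok(0x12345679, 0b0000, sent))  # one bit flipped in transit
```

A single parity bit catches any odd number of flipped bits but misses an even number, which is why parity detects errors rather than correcting them.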

If a parity error is detected during a data transfer, the device receiving the data asserts the Parity Error signal (PERR#). The action taken on error detection (for example, resending data) depends on how the system is configured. Another signal, System Error (SERR#), handles address parity and other errors.

Parity-checking of the data bus becomes particularly important as the bus width and speed grow. Every increase in bus complexity also raises the chance of errors creeping in. Parity-checking catches such errors so that they can be reported and handled rather than silently corrupting the information transferred across the bus.

Serialized Interrupts

Because of the design of the PCI system, the lack of the old IRQ signals poses a problem. Under standard PCI architecture, the compatibility expansion bus (ISA) links to the host microprocessor through the PCI bus and its host bridge. The IRQ signals cannot be passed directly through this channel because the PCI specification does not define them. To accommodate the old IRQ system under PCI architecture, several chipset and computer makers, including Compaq, Cirrus Logic, National Semiconductor, OPTi, Standard Microsystems, Texas Instruments, and VLSI Technology, developed a standard they called Serialized IRQ Support for PCI Systems.

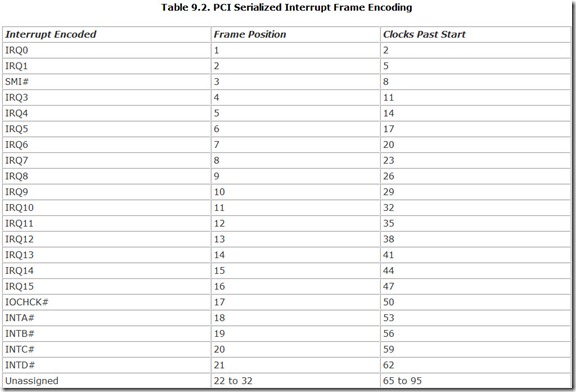

The serialized IRQ system relies on a special signal called IRQSER that encodes all available interrupts as pulses in a series. One long series of pulses, called an IRQSER cycle, sends data about the state of all interrupts in the system across the PCI channel.

The IRQSER cycle begins with an extended pulse of the IRQSER signal, lasting from four to eight cycles of the PCI clock (nominally 33MHz, but possibly slower in systems with slower bus clocks). After a delay of two PCI clock cycles, the IRQSER cycle is divided into frames, each of which is three PCI clock cycles long. Each frame encodes the state of one interrupt: if the IRQSER signal pulses during the first third of the frame, the interrupt assigned to that frame is active. Table 9.2 lists which interrupts are assigned to each frame position.

In addition to the 16 IRQ signals used by the old interrupt system, the PCI serialized interrupt scheme also carries data about the state of the system management interrupt (SMI#) and the I/O check (IOCHCK#) signals as well as the four native PCI interrupts and 10 unassigned values that may be used by system designers. According to the serialized interrupt scheme, support for the last 14 frames is optional.

The IRQSER cycle ends with a Stop signal, a pulse of the IRQSER signal that lasts two or three PCI clocks, depending on the operating mode of the serialized interrupt system.
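Decoding an IRQSER cycle can be sketched from the timing just described. The sketch makes several simplifying assumptions: the line has already been sampled once per PCI clock into a list of booleans (True meaning a pulse is present, which abstracts away the actual electrical polarity), the number of frames in the cycle is known in advance, and only the first clock of each frame is examined. It does not reproduce the frame-to-interrupt assignments of Table 9.2; it simply reports frame numbers. `encode_irqser` and `decode_irqser` are hypothetical helpers.

```python
from typing import List, Sequence, Set

START_MIN, START_MAX = 4, 8  # start pulse length, in PCI clocks
GAP_CLOCKS = 2               # delay between the start pulse and the first frame
FRAME_CLOCKS = 3             # each frame spans three PCI clocks
STOP_LENGTHS = (2, 3)        # stop pulse length, depending on operating mode


def encode_irqser(active: Set[int], num_frames: int,
                  start_len: int = 4, stop_len: int = 2) -> List[bool]:
    """Build one idealized IRQSER cycle, one sample per PCI clock."""
    samples = [True] * start_len + [False] * GAP_CLOCKS
    for frame in range(num_frames):
        # A pulse in the first third of a frame marks its interrupt active.
        samples += [frame in active, False, False]
    return samples + [True] * stop_len


def decode_irqser(samples: Sequence[bool], num_frames: int) -> List[int]:
    """Return the numbers of the frames whose interrupt is signalled active."""
    start_len = 0
    while start_len < len(samples) and samples[start_len]:
        start_len += 1
    if not START_MIN <= start_len <= START_MAX:
        raise ValueError(f"start pulse of {start_len} clocks is out of range")

    first_frame = start_len + GAP_CLOCKS
    stop_at = first_frame + num_frames * FRAME_CLOCKS
    if stop_at > len(samples):
        raise ValueError("cycle ended before all frames arrived")
    active = [f for f in range(num_frames)
              if samples[first_frame + f * FRAME_CLOCKS]]

    stop_len = 0
    while stop_at + stop_len < len(samples) and samples[stop_at + stop_len]:
        stop_len += 1
    if stop_len not in STOP_LENGTHS:
        raise ValueError(f"stop pulse of {stop_len} clocks is out of range")
    return active


cycle = encode_irqser({1, 12}, num_frames=21)
print(decode_irqser(cycle, num_frames=21))
```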

The PCI serialized interrupt system is only a means of data transportation. It carries the information across the PCI bus and delivers it to the microprocessor and its support circuitry. The information about the old IRQ signals gets delivered to a conventional 8259A interrupt controller or its equivalent in the microprocessor support chipset. Once at the controller, the interrupts are handled conventionally.

Although the PCI interrupt-sharing scheme helps eliminate setup problems, some systems demonstrate their own difficulties. For example, some computers force the video and audio systems to share interrupts. Any video routine that generates an interrupt, such as scrolling a window, will briefly halt the playing of audio. The audio effects can be unlistenable. The cure is to reassign one of the interrupts, if your system allows it.

Bus-Mastering and Arbitration

The basic PCI design supports arbitrated bus-mastering like that of other advanced expansion buses, but PCI has its own bus command language (a four-bit code) and supports secondary cache memory.
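The four-bit command language is not listed in this chapter. For reference, the standard PCI command encodings, which an initiator drives on the C/BE[3:0]# lines during the address phase, can be expressed as a lookup table. The table follows the PCI Local Bus Specification; `decode_command` is an illustrative name.

```python
PCI_COMMANDS = {
    0b0000: "Interrupt Acknowledge",
    0b0001: "Special Cycle",
    0b0010: "I/O Read",
    0b0011: "I/O Write",
    0b0110: "Memory Read",
    0b0111: "Memory Write",
    0b1010: "Configuration Read",
    0b1011: "Configuration Write",
    0b1100: "Memory Read Multiple",
    0b1101: "Dual Address Cycle",
    0b1110: "Memory Read Line",
    0b1111: "Memory Write and Invalidate",
}


def decode_command(cbe: int) -> str:
    """Name the bus command carried on C/BE[3:0]# during an address phase.
    Codes 0100, 0101, 1000, and 1001 are reserved."""
    if not 0 <= cbe <= 0xF:
        raise ValueError("bus commands are four bits wide")
    return PCI_COMMANDS.get(cbe, "Reserved")


print(decode_command(0b1010))
```

Because the command travels on the Byte Enable lines during the address phase, those same four lines are free to serve as byte enables during the data phases that follow.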

In operation, a bus master board sends a signal to its host to request control of the bus and starts to transfer when it receives a confirmation. Each PCI board gets its own slot-specific signals to request bus control and receive confirmation that control has been granted. This approach allows great flexibility in assigning the priorities, even the arbitration protocol, of the complete computer system. The designer of a PCI-based computer can adapt the arbitration procedure to suit his needs rather than having to adapt to the ideas of the obscure engineers who conceived the original bus specification.

Bus mastering across the PCI bus is achieved with two special signals: Request (REQ#) and Grant (GNT#). A master asserts its Request signal when it wants to take control of the bus. In return, the central resource (Intel’s name for the circuitry shared by all bus devices on the motherboard, including the bus control logic) sends a Grant signal to the master to give it permission to take control. Each PCI device gets its own dedicated Request and Grant signal.
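Because each device has its own Request and Grant pair and the specification leaves the arbitration policy to the system designer, a central arbiter can be as simple as a rotating (round-robin) priority scheme. The sketch below shows one hypothetical policy, not one mandated by PCI. It models REQ# and GNT# as booleans where True means asserted (the real signals are active low) and ignores timing details such as bus-idle detection.

```python
from typing import Optional, Sequence


class RoundRobinArbiter:
    """Hypothetical central-resource arbiter with one REQ/GNT pair per device.

    After each grant, the device just served drops to the lowest priority,
    so every requester is eventually granted the bus.
    """

    def __init__(self, num_devices: int):
        if num_devices < 1:
            raise ValueError("need at least one device")
        self.num_devices = num_devices
        self.last_granted = num_devices - 1  # device 0 is considered first

    def arbitrate(self, req: Sequence[bool]) -> Optional[int]:
        """Given each device's REQ (True = asserted), return the device whose
        GNT should be asserted, or None if no device is requesting."""
        if len(req) != self.num_devices:
            raise ValueError("expected one REQ signal per device")
        for offset in range(1, self.num_devices + 1):
            device = (self.last_granted + offset) % self.num_devices
            if req[device]:
                self.last_granted = device
                return device
        return None


arbiter = RoundRobinArbiter(3)
print([arbiter.arbitrate([True, True, True]) for _ in range(4)])
```

A fixed-priority arbiter would be even simpler but could starve low-priority masters; rotation trades that risk for less control over which device goes first.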

As a self-contained expansion bus, PCI naturally provides for hardware interrupts. PCI includes four level-sensitive interrupts (INTA# through INTD#, at pins A6, B7, A7, and B8, respectively) that enable interrupt sharing. The specification does not itself define what the interrupts are or how they are to be shared. Even the relationship between the four signals is left to the designer (for example, each can indicate its own interrupt, or they can define up to 16 separate interrupts as binary values). Typically, these details are implemented in a device driver for the PCI board. The interrupt lines are not synchronized to the other bus signals and may therefore be activated at any time during a bus cycle.

Low-Voltage Evolution

As the world shifts to lower-power systems and lower-voltage operation, PCI has been adapted to fit. Although the early incarnations of the standard provided for 3.3-volt operation in addition to the then-standard 5-volt level, the acceptance of the lower voltage standard became official only with PCI version 2.3. Version 3.0 (not yet released at the time of this writing) takes the next step and eliminates the 5-volt connector from the standard.

Slot Limits

High frequencies, radiation, and other electrical effects also conspire to limit the number of expansion slots that can be attached in a given bus system. These limits become especially apparent with local bus systems that operate at high clock speeds. All current local bus standards limit to three the number of high-speed devices that can be connected to a single bus.

Note that the limit is measured in devices and not slots. Many local bus systems use a local bus connection for their motherboard-based display systems. These circuits count as one local bus device, so computers with local bus video on the motherboard can offer at most two local bus expansion slots.

The three-device limit results from speed considerations. The larger the bus, the higher the capacitance between its circuits (because they have a longer distance over which to interact). Every connector adds more capacitance. As speed increases, circuit capacitance increasingly degrades the signals. The only way to overcome the capacitive losses is to start with stronger signals. To keep local bus signals at reasonable levels and yet maintain high speeds, the standards enforce the three-device limit.

A single computer can accommodate multiple PCI expansion buses bridged together to allow more than three slots. Each of these sub-buses then uses its own bus-control circuitry. From an expansion standpoint—or from the standpoint of an expansion board—splitting the system into multiple buses makes no difference. The signals get where they are supposed to, and that’s all that counts. The only worries are for the engineer who has to design the system to begin with—and even that is no big deal. The chipset takes care of most expansion bus issues.

Hot-Plugging

Standard PCI cards do not allow for hot-plugging. That is, you cannot and should not remove or insert a PCI expansion board into a connector in a running computer. Try it, and you risk damage to both the board and the computer.

In some applications, however, hot-plugging is desirable. For example, in fault-tolerant computers you can replace defective boards without shutting down the host system. You can also add new boards to a system while it is operating.

To facilitate using PCI expansion boards in such circumstances, engineers developed a variation on the PCI standard called PCI Hot Plug and published a specification that defines the requirements for expansion cards, computers, and their software to make it all work. The specification, now available as revision 1.1, is a supplement to the ordinary PCI Specification.

Unless you have all three—the board, computer, and software—you should not attempt hot-plugging with PCI boards. Products that meet the standard are identifiable by a special PCI Hot Plug logo.

Setup

PCI builds upon the Plug-and-Play system to automatically configure itself and the devices connected to it without the need to set jumpers or DIP switches. Under the PCI specification, expansion boards include Plug-and-Play registers to store configuration information that can be tapped into for automatic configuration. Each PCI device (each function, on a multifunction board) gets a 256-byte configuration space to hold these registers. This configuration space is tightly defined by the PCI specification to ensure compatibility. A special signal, Initialization Device Select (IDSEL), dedicated to each slot, activates the configuration read and write operations as required by the Plug-and-Play system.
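The standard header at the start of each configuration space is what Plug-and-Play software reads first. This sketch decodes a few of its fields from a captured 256-byte image, using the offsets defined by the PCI specification: little-endian Vendor ID at 00h, Device ID at 02h, Command at 04h, Status at 06h, Revision ID at 08h, the class code at 09h through 0Bh, and Header Type at 0Eh. How the bytes are fetched (through IDSEL-qualified configuration cycles) is outside the sketch, and `parse_config_header` is a hypothetical name.

```python
import struct
from typing import Dict

CONFIG_SPACE_SIZE = 256  # bytes of configuration space per PCI function


def parse_config_header(space: bytes) -> Dict[str, object]:
    """Decode the common fields at the start of a PCI configuration space."""
    if len(space) != CONFIG_SPACE_SIZE:
        raise ValueError("expected a 256-byte configuration space image")
    # Four little-endian 16-bit fields starting at offset 0.
    vendor_id, device_id, command, status = struct.unpack_from("<4H", space, 0x00)
    header_type = space[0x0E]
    return {
        "present": vendor_id != 0xFFFF,  # reads of an empty slot return all 1s
        "vendor_id": vendor_id,
        "device_id": device_id,
        "command": command,
        "status": status,
        "revision_id": space[0x08],
        "prog_if": space[0x09],
        "subclass": space[0x0A],
        "base_class": space[0x0B],
        "layout": header_type & 0x7F,               # 0 = ordinary device header
        "multifunction": bool(header_type & 0x80),  # bit 7 flags extra functions
    }


empty_slot = b"\xff" * CONFIG_SPACE_SIZE
print(parse_config_header(empty_slot)["present"])
```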

PCI-X

In the late 1990s, engineers at Compaq, Hewlett-Packard, and IBM realized that microprocessor speeds were quickly outrunning the throughput capabilities of the PCI bus, so they began to develop a new, higher-speed alternative aimed particularly at servers. Working jointly, they developed a new bus specification, which they submitted to the PCI Special Interest Group in September 1998. After evaluating the specification for a year, the PCI SIG adopted it in September 1999 and published it as the official PCI-X Version 1.0 standard.

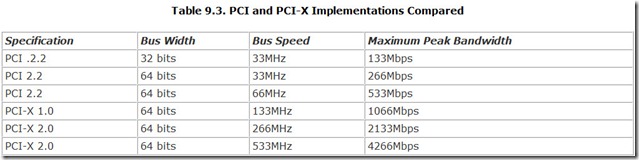

The PCI-X design not only increases the potential bus speed of PCI but also adds a new kind of transfer that makes the higher speeds practical. On July 23, 2002, PCI-X Version 2.0 was released to provide a further upgrade path built upon PCI-X technology. Table 9.3 compares the speed and bandwidth available under various existing and proposed PCI and PCI-X specifications.

The PCI-X design follows the PCI specification in regard to signal assignments on the bus. It supports both 32-bit and 64-bit bus designs. In fact, a PCI card will function normally in a PCI-X expansion slot, and a PCI-X expansion board will work in a standard PCI slot, although at less than its full potential speed.

The speed of the PCI-X expansion bus is not always the 133MHz of the specifications. It depends on how many slots are connected in a single circuit with the bus controller and what’s in the slots. To accommodate ordinary PCI boards, for example, all interconnected PCI-X slots automatically slow to the highest speed the PCI board will accept—typically 33MHz. In addition, motherboard layouts limit the high-frequency capabilities of any bus design. Under PCI-X, only a single slot is possible at 133MHz. Two-slot designs require retarding the bus to 100MHz. With four slots, the practical top speed falls to 66MHz. The higher speeds possible under PCI-X 2.0 keep the clock at 133MHz but switch to double-clocking or quad-clocking the data to achieve higher transfer rates.
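The slot and board rules above can be sketched as a small calculation. The slot limits come from the text; the text gives no figure for three slots, so this sketch assumes a three-slot segment falls to the four-slot limit. Peak rates use the round 133MHz figure (the nominal 133.33MHz clock gives slightly more). Function names are illustrative.

```python
from typing import Sequence

# PCI-X 1.0 slot limits described above, in MHz. The three-slot entry is an
# assumption: the text only gives figures for one, two, and four slots.
SLOT_LIMIT_MHZ = {1: 133, 2: 100, 3: 66, 4: 66}


def pcix_clock_mhz(num_slots: int, board_max_mhz: Sequence[int]) -> int:
    """Clock for one PCI-X segment: the slot-count limit, lowered to the
    fastest speed every installed board accepts (e.g. 33 for plain PCI)."""
    if num_slots not in SLOT_LIMIT_MHZ:
        raise ValueError("a PCI-X segment holds one to four slots")
    if len(board_max_mhz) > num_slots:
        raise ValueError("more boards than slots")
    return min([SLOT_LIMIT_MHZ[num_slots], *board_max_mhz])


def peak_mbps(clock_mhz: int, bus_bits: int = 64, transfers_per_clock: int = 1) -> int:
    """Peak rate in MBps; PCI-X 2.0 keeps the 133MHz clock but moves data
    two or four times per clock (double- or quad-clocking)."""
    return clock_mhz * (bus_bits // 8) * transfers_per_clock


print(pcix_clock_mhz(2, [133, 133]))     # two PCI-X boards
print(pcix_clock_mhz(4, [133, 66, 33]))  # one conventional PCI board present
print(peak_mbps(133, 64, 4))             # quad-clocked 64-bit PCI-X 2.0
```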

To reach even the 133MHz rate (and more reliable operation at 66MHz), PCI-X adds a new twist to the bus design, known as register-to-register transfers. On the PCI bus, cards read data directly from the bus, but the timing of signals on the bus leaves only a short window for valid data. At 33MHz, a PCI card has a window of about 7 nanoseconds to read from the bus; at 66MHz, only about 3 nanoseconds is available. In contrast, PCI-X uses a register to latch the signals on the bus. When a signal appears on the bus, the PCI-X registers lock down that value until data appears during the next clock cycle. A PCI-X card therefore has a full clock cycle to read the data: about 15 nanoseconds at 66MHz and about 7.5 nanoseconds at 133MHz.
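The PCI-X read windows are whole clock periods, so they follow directly from the clock rate: one cycle lasts 1/f, or 1,000/f nanoseconds with f in megahertz. (The shorter windows for ordinary PCI come from the specification's signal-timing budget rather than from the clock period alone, so this arithmetic does not reproduce them.) The function name is illustrative.

```python
def clock_period_ns(freq_mhz: float) -> float:
    """Length of one clock cycle in nanoseconds, given a frequency in MHz."""
    return 1_000.0 / freq_mhz


# Nominal PCI and PCI-X clocks are 33.33, 66.67, and 133.33MHz.
for mhz in (33.33, 66.67, 133.33):
    print(f"{mhz:6.2f}MHz -> {clock_period_ns(mhz):5.2f} ns per clock")
```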

Although register-to-register transfers make higher bus speeds feasible, at any given speed they actually slow the throughput of the bus by adding one cycle of delay to each transfer. Because transfers require multiple clock cycles, however, the penalty is not great, especially for bursts of data. But PCI-X incorporates several improvements in transfer efficiency that help make the real-world throughput of the PCI-X bus actually higher than PCI at the same speed.

One such addition is attribute phase, an additional phase in data transfers that allows devices to send each other a 36-bit attribute field that adds a detailed description to the transfer. The field contains a transaction byte count that describes the total size of the transfer to permit more efficient use of bursts. Transfers can be designated to allow relaxed ordering so that transactions do not have to be completed in the order they are requested. For example, a transfer of time-critical data can zoom around previously requested transfers. Relaxed ordering can keep video streaming without interruption. Transactions that are flagged as non-cache coherent in the attribute field tell the system that it need not waste time snooping through its cache for changes if the transfer won’t affect the cache. By applying a sequence number in the attribute field, bus transfers in the same sequence can be managed together, which can improve the efficiency in caching algorithms.

In addition to the attribute phase, PCI-X allows for split transactions. If a device starts a bus transaction but delays in finishing it, the bus controller can use the intervening idle time for other transactions. The PCI-X bus also eliminates wait states (except for the inevitable lag at the beginning of a transaction) through split transactions by disconnecting idle devices from the bus to free up bandwidth. All PCI-X transactions are one standard size that matches the 128-byte cache line used by Intel microprocessors, permitting more efficient operation of the cache. When all the new features are taken together, the net result is that PCI-X throughput may be 10 percent or more higher than using standard PCI technology.

Because PCI-X is designed for servers, the integrity of data and the system are paramount concerns. Although PCI allows for parity-checking transfers across the bus, its error-handling mechanism is both simple and inelegant—an error would shut down the host system. PCI-X allows for more graceful recovery. For example, the controller can request that an erroneous transmission be repeated, it can reinitialize the device that erred or disable it entirely, or it can notify the operator that the error occurred.
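The parity check that conventional PCI performs is simple enough to show directly. PCI uses even parity: the PAR line is driven so that AD[31:0], C/BE#[3:0], and PAR together carry an even number of 1s. The function names below are hypothetical, and the sketch shows only the detection step, not the recovery choices PCI-X adds.

```python
def pci_par(ad: int, cbe: int) -> int:
    """PAR bit for one PCI phase (even parity over AD[31:0] and C/BE#[3:0])."""
    ones = bin(ad & 0xFFFFFFFF).count("1") + bin(cbe & 0xF).count("1")
    return ones & 1  # 1 when the other 36 lines hold an odd number of 1s


def parity_error(ad: int, cbe: int, par: int) -> bool:
    """Receiver's check: recompute PAR and compare it with what arrived."""
    return pci_par(ad, cbe) != par


sent_ad, sent_cbe = 0x0000_00F1, 0x0
par = pci_par(sent_ad, sent_cbe)
print(parity_error(sent_ad, sent_cbe, par))          # False: clean transfer
print(parity_error(sent_ad ^ 0x100, sent_cbe, par))  # True: one bit flipped
```

A single parity bit detects any odd number of flipped bits but cannot say which bit went bad, which is why the interesting part of PCI-X error handling is what the controller does next.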

PCI-X cards can operate at either 5.0 or 3.3 volts. However, high-speed operation is allowed only at the lower operating voltage. The PCI-X design allows for 128 bus segments in a given computer system. A segment runs from the PCI-X controller to a PCI-X bridge or between the bridge and an actual expansion bus encompassing one to four slots, so a single PCI-X system can handle any practical number of devices.

PCI Express

According to the PCI Special Interest Group, the designated successor to today’s PCI-X (and PCI) expansion buses is PCI Express. Under development for years as 3GIO (indicating it was to be the third generation input/output bus design), PCI Express represents a radical change from previous expansion bus architectures. Instead of using relatively low-speed parallel data lines, it opts for high-speed serial signaling. Instead of operating as a bus, it uses a switched design for point-to-point communications between devices—each gets the full bandwidth of the system during transfers. Instead of special signals for service functions such as interrupts, it uses a packet-based system to exchange both data and commands. The PCI-SIG announced the first PCI Express specification at the same time as PCI-X 2.0, on July 23, 2002.

In its initial implementation, PCI Express uses a four-wire interconnection system, two wires each (a balanced pair) for separate sending and receiving channels. Each channel signals at 2.5Gbps (often quoted as 2.5GHz). The system uses the 8b/10b encoding scheme, which embeds the clock in the data stream so that no additional clock signal is required, but which sends 10 bits on the wire for every 8 bits of data. The result is a peak throughput of 250MBps in each direction (ignoring packet overhead). The initial design contemplates future increases in speed, up to 10GHz, the theoretical maximum speed that can be achieved in standard copper circuits.

To accommodate devices that require higher data rates, PCI Express allows for multiple lanes within a single channel. In effect, each lane is a parallel signal path between two devices with its own four-wire connection. The PCI Express hardware divides the data between the multiple lanes for transmission and reconstructs it at the other end of the connection. The PCI Express specifications allow for channels with 1, 2, 4, 8, 12, 16, or 32 lanes (effectively boosting the speed of the connection by the equivalent factor). A 32-lane system at today’s 2.5Gbps signaling rate would deliver throughput of 8000MBps in each direction.
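The arithmetic behind these figures, and the idea of dividing data among lanes, fit in a short sketch. It assumes the first-generation 2.5Gbps signaling rate and simple byte-by-byte round-robin striping across lanes; real links also add framing, scrambling, and packet overhead that the sketch ignores, and the function names are hypothetical.

```python
LINE_RATE_MBIT = 2500  # first-generation signaling rate per lane, each direction
VALID_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def lane_data_mbit() -> int:
    """8b/10b sends 10 bits on the wire for every 8 data bits."""
    return LINE_RATE_MBIT * 8 // 10


def link_throughput_mbyte(lanes: int) -> int:
    """Peak MBps in one direction, ignoring packet overhead."""
    if lanes not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width: x{lanes}")
    return lane_data_mbit() * lanes // 8


def stripe(data: bytes, lanes: int) -> list:
    """Deal bytes out round-robin, one stream per lane."""
    return [data[i::lanes] for i in range(lanes)]


def unstripe(streams: list) -> bytes:
    """Reassemble the original byte order at the receiving end."""
    n = len(streams)
    total = sum(len(s) for s in streams)
    return bytes(streams[i % n][i // n] for i in range(total))


for width in (1, 4, 16, 32):
    print(f"x{width}: {link_throughput_mbyte(width)} MBps")
# x1: 250 MBps, x4: 1000 MBps, x16: 4000 MBps, x32: 8000 MBps

lanes = stripe(b"PCI Express", 4)
assert unstripe(lanes) == b"PCI Express"
```

Because each lane carries every fourth (or eighth, or sixteenth) byte, widening the link multiplies throughput without raising the signaling rate of any single pair.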

The switched design is integral to the high-speed operation of PCI Express. It eliminates most of the electrical problems inherent in a bus, such as changes in termination and the unpredictable loading that occur as different cards are installed. Each PCI Express expansion connector links a single circuit designed for high-speed operation, so sliding a card into one slot affects no other. The wiring from each PCI Express connector runs directly back to a single centralized switch that selects which device has access to the system functions, much like a bus controller in older PCI designs. This switch can be either a standalone circuit or a part of the host computer’s chipset.

All data and commands for PCI Express devices are contained in packets, which carry error-detection codes so that corrupted transfers can be caught and sent again. Even interrupts are packetized, using the Message Signaled Interrupt (MSI) system introduced with PCI version 2.2; MSI is optional for ordinary PCI but mandatory for PCI Express. PCI Express packets support both 32-bit and extended 64-bit addressing.

The primary challenge in creating PCI Express was to reconcile the conflicting demands of compatibility and advancing technology. Consequently, the designers chose a layered approach: the top software layers match current PCI protocols, while the lowest layer, the physical layer, permits multiple variations.

In the PCI Express scheme, there are five layers, designated as follows:

- Config/OS: Handles configuration at the operating system level based on the current PCI Plug-and-Play specifications for initializing, enumerating, and configuring expansion devices.
- S/W: The main software layer, which uses the same drivers as the standard PCI bus and interacts with the host operating system.
- Transaction: A packet-based protocol for passing data between the devices. This layer handles the send and receive functions.
- Data Link: Ensures the integrity of the transfers with full error-checking using a cyclic redundancy check (CRC) code.
- Physical: The lowest layer, which carries the actual serial signals over each lane and is the layer that permits multiple variations.
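The division of labor between the Transaction and Data Link layers can be sketched as successive wrapping of a packet. This is a simplified model under stated assumptions: the header bytes are arbitrary placeholders rather than a real transaction header, the 12-bit sequence number and trailing 32-bit CRC reflect only the general shape of the Data Link layer’s framing, and Python’s `zlib.crc32` stands in for the link CRC without reproducing its exact bit ordering.

```python
import struct
import zlib
from typing import Optional


def transaction_layer(header: bytes, payload: bytes) -> bytes:
    """Build a transaction packet; the header format here is a placeholder."""
    return header + payload


def data_link_wrap(packet: bytes, seq: int) -> bytes:
    """Prepend a 12-bit sequence number and append a 32-bit CRC."""
    body = struct.pack(">H", seq & 0x0FFF) + packet
    return body + struct.pack(">I", zlib.crc32(body))


def data_link_unwrap(frame: bytes) -> Optional[bytes]:
    """Return the transaction packet, or None if the CRC check fails."""
    body, (crc,) = frame[:-4], struct.unpack(">I", frame[-4:])
    if zlib.crc32(body) != crc:
        return None  # a real link would ask the sender to retransmit
    return body[2:]


packet = transaction_layer(b"\x40\x00\x00\x01", b"data")
frame = data_link_wrap(packet, seq=7)
assert data_link_unwrap(frame) == packet

damaged = bytearray(frame)
damaged[5] ^= 0x01  # flip a single bit in transit
assert data_link_unwrap(bytes(damaged)) is None
```

The upper layers never see the sequence number or CRC, which is why the top layers can stay identical to ordinary PCI.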

The top two layers, Config/OS and S/W, require no change from ordinary PCI. In other words, the high-speed innovations of PCI Express are invisible to the host computer’s operating system.

As to the actual hardware, PCI Express retains the standard PCI board design and dimensions. It envisions dual-standard boards that have both conventional and high-speed PCI Express connections. Such dual-standard boards are restricted to one or two lanes on an extra edge connector that’s collinear with the standard PCI expansion connector but mounted between it and the back of the computer. Devices requiring a greater number of lanes need a new PCI Express connector system.

Standards and Coordination

The PCI, PCI-X, and PCI Express standards are managed and maintained by the PCI Special Interest Group. The latest revision of each specification is available from the following address:

PCI Special Interest Group

2575 NE Kathryn St. #17

Hillsboro, OR 97124

Fax: 503-693-8344

Web site: http://www.pcisig.com

InfiniBand

At one time, engineers (who are as unlikely to gamble as the parish priest) put their money on InfiniBand Architecture as the likely successor to PCI and PCI-X, the next interconnection standard for servers and, eventually, individual computers. The standard had wide industry backing and proven advantages in moving data around inside computers. Its success seemed all but assured by its mixed parentage that pulled together factions once as friendly as the Montagues and Capulets. But as this is written, some industry insiders believe that the introduction of a new PCI variation, PCI Express, may usurp the role originally reserved for InfiniBand.

InfiniBand Architecture (also known as IBA) marks a major design change. It hardly resembles what’s come to be known as an expansion bus. Instead of being loaded with clocks and control signals as well as data links, InfiniBand more resembles a network wire. It’s stripped down to nothing but the connections carrying the data signals and, if necessary, some power lines to run peripherals. As with a network connection, InfiniBand packages data into packets. The control signals usually carried over that forest of extra bus connections are put into packets, too. Moreover, InfiniBand has no shared bus but rather operates as a switched fabric. In fact, InfiniBand sounds more like a network than an expansion system. In truth, it’s both. And it also operates as an interconnection system that links together motherboard components.

Designed to overcome many of the inherent limitations of PCI and all other bus designs, IBA uses data-switching technology instead of a shared bus. Not only does this design increase the potential speed of each connection because the bandwidth is never shared, it also allows more effective management of individual connected devices and almost unlimited scalability. That is, unlike PCI, it has no fixed device limit; it allows you to link together as many peripherals as you want, both as expansion boards inside a computer and as external devices.

As with PCI, InfiniBand starts as an interconnection system to link components together on motherboards. But the specification doesn’t stop there. It defines a complete system that includes expansion boards and an entire network.

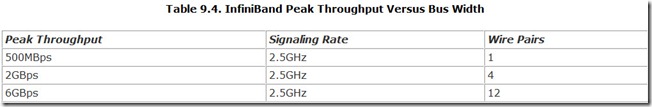

The initial implementation of InfiniBand operates with a base frequency of 2.5GHz. Because it is a packetized communication system, it suffers from some signaling overhead. As a result, the maximum throughput of an InfiniBand circuit is 250MBps or 2.0Gbps. The speed is limited by necessity. Affordable wiring systems simply cannot handle higher rates.

To achieve better performance, InfiniBand defines a multicircuit communication system. In effect, it allows a sort of parallel channel capable of boosting performance by a factor of 12.

InfiniBand is a radical change for system expansion, as different from PCI as PCI was from ISA. Good as it is, however, don’t expect to find an InfiniBand expansion bus in new personal computers. As an expansion bus, it is expensive, designed foremost for servers. You’ll find the physical dimensions for InfiniBand expansion in Chapter 30, “Expansion Boards.”

As an interconnection system for motherboard circuits, however, InfiniBand will find its way into personal computers, along with the next generation of Intel microprocessors, those that use 64-bit architecture.

History

The shortcomings of PCI architecture were apparent to engineers almost by the time the computer industry adopted the standard. It was quicker than ISA but not all that swift. Within a few years, high-bandwidth applications outgrew the PCI design, resulting in the AGP design for video subsystems (and eventually 2x, 4x, and 8x versions of AGP).

To the engineers working on InfiniBand, PCI-X was only a band-aid to the bandwidth problems suffered by high-powered systems. Most major manufacturers were already aware that PCI-X shared the same shortcomings as PCI, burdened with its heritage of interrupts and hardware-mediated flow control. Increasing the PCI speed exacerbated the problems rather than curing them.

Two separate groups sought to create a radically different expansion system to supersede PCI. One group sought a standard that would be able to link all the devices in a company without limit and without regard to cost. The group working on this initiative called it Future I/O. Another group sought a lower-cost alternative that would break through the barriers of the PCI design inside individual computers. This group called its initiative Next Generation I/O.

On August 31, 1999, the two groups announced they had joined together to work on a single new standard, which became InfiniBand. To create and support the new standard, the two groups formed the InfiniBand Trade Association (ITA) with seven founding members serving as the organization’s steering committee: Compaq, Dell, Hewlett-Packard, IBM, Intel, Microsoft, and Sun Microsystems. Other companies from the FI/O and NGI/O initiatives joined as sponsoring members, including 3Com, Adaptec, Cisco, Fujitsu-Siemens, Hitachi, Lucent, NEC, and Nortel Networks. On November 30, 2000, the ITA announced that three new members had joined: Agilent Technologies Inc., Brocade Communications Systems Inc., and EMC Corporation.

The group released the initial version of the InfiniBand Architecture specification (version 1.0) on October 23, 2000. The current version, 1.0.a, was released on June 19, 2001. The two-volume specification is distributed in electronic form without cost from the InfiniBand Trade Association Web site, www.infinibandta.com.

Communications

In traditional terms, InfiniBand is a serial communication system. Unlike other expansion designs, it is not a bus but rather a switched fabric. That means it weaves together devices that must communicate together, providing each one with a full-bandwidth channel. InfiniBand works more like the telephone system than a traditional bus. A controller routes the high-speed InfiniBand signals to the appropriate device. In technical terms, it is a point-to-point interconnection system.

InfiniBand moves information as packets across its channel. As with other packet-based designs, each block of data contains addressing, control signals, and information. InfiniBand uses a packet design based on the Internet Protocol so that engineers will have an easier time designing links between InfiniBand and external networks (including, of course, the Internet itself).

All InfiniBand connections use the same signaling rate, 2.5GHz. Because of the 8b/10b data coding used by the system, this signaling rate amounts to an actual throughput of about 250MBps in each direction, or 500MBps if the two directions are added together. The InfiniBand system achieves even higher throughputs by moving to a form of parallel technology, moving its signals through 4 or 12 separate connections simultaneously. Table 9.4 summarizes the three current transfer speeds of the InfiniBand Architecture.

These connections are full-duplex, so devices can transfer information at the same data rate in either direction. The signals are differential (the system uses two wires for each of its signals) to help minimize noise and interference at the high frequencies it uses.

The InfiniBand design allows the system to use copper traces on a printed circuit board like a conventional expansion bus. In addition, the same signaling scheme works on copper wires like a conventional networking system or through optical fiber.

As with modern interconnection designs, InfiniBand uses intelligent controllers that handle most of the work of passing information around so that it requires a minimum of intervention from the host computer and its operating system. The controller packages the data into packets, adds all the necessary routing and control information to each one, and sends them on their way. The host computer or other device need only send raw data to the controller, so it loses a minimal share of its processing power in data transfers.

The InfiniBand design is inherently modular. Its design enables you to add devices up to the limit of a switch or to add more switches to increase its capacity without limit. Individual switches create subnetworks, which exchange data through routers, much like a conventional network.

Structure

InfiniBand is a system area network (SAN), which simply means a network that lives inside a computer system. It can also reach outside the computer to act as a real network. In any of its applications (internal network, expansion system, or true local network), InfiniBand uses the same signaling scheme and the same protocol. In fact, its scheme for addressing and routing data is modeled on Internet Protocol, version 6 (IPv6), the next generation of the protocol currently used by the Internet.

In InfiniBand terminology, a complete IBA system is a network. The individual endpoints that connect to hardware devices, such as microprocessors and output devices, are called nodes. The wiring and other hardware that ties the network together make up the IBA fabric.

The hardware link between a device and the InfiniBand network that makes up a node is a channel adapter. The channel adapter translates the logic signals of the device connected to it into the form that will be passed along the InfiniBand fabric. For example, a channel adapter may convert 32-bit parallel data into a serial data stream spread across four differential channels. It also includes enough intelligence to manage communications, providing functions similar to the handshaking of a conventional serial connection.

InfiniBand uses two types of channel adapters: the Host Channel Adapter (HCA) and the Target Channel Adapter (TCA). As the name implies, the HCA resides in the host computer. More specifically, it is typically built in to the north bridge in the computer’s chipset to take full advantage of the host’s performance. (Traditionally, Ethernet would link to a system through its south bridge and suffer the speed restrictions of the host’s expansion bus circuitry.) The distinction serves only to identify the two ends of a communications channel: the HCA connects a microprocessor or similar device, and the TCA connects an input/output device such as a storage system. Either the HCA or the TCA can originate and control the flow of data.

The InfiniBand fabric separates the system from conventional expansion buses. Instead of using a bus structure to connect multiple devices into the expansion system, the InfiniBand fabric uses a switch, which sets up a direct channel from one device to another. Switching signals rather than busing them together ensures higher speed—every device has available to it the full bandwidth of the system. The switch design also improves reliability. Because multiple connections are not shared, a problem in one device or channel does not affect others. Based on the address information in the header of each packet, the switch directs the packet toward its destination, a journey that may pass through several switches.
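How a switch steers a packet by the address in its header can be modeled with a forwarding table at each switch. Within a subnet, InfiniBand switches forward on the destination local identifier (LID) carried in the packet header; the two-switch fabric, LID values, and device names below are hypothetical, chosen only to show a packet crossing more than one switch.

```python
# Hypothetical fabric: each switch maps a destination LID to its next hop.
FORWARDING = {
    "switch-A": {1: ("node", "HCA in server"),
                 2: ("switch", "switch-B"),
                 3: ("switch", "switch-B")},
    "switch-B": {1: ("switch", "switch-A"),
                 2: ("node", "TCA for disk array"),
                 3: ("node", "TCA for network")},
}


def route(first_switch: str, dest_lid: int):
    """Follow forwarding tables until the packet reaches its node."""
    path = [first_switch]
    switch = first_switch
    while True:
        kind, target = FORWARDING[switch][dest_lid]
        if kind == "node":
            return path, target
        switch = target
        path.append(switch)
        if len(path) > len(FORWARDING):
            raise RuntimeError("forwarding loop")


print(route("switch-A", 3))
# prints (['switch-A', 'switch-B'], 'TCA for network')
```

Each switch makes only a local decision from its own table, so adding a switch means adding a table rather than redesigning a shared bus.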

A switch directs packets only within an InfiniBand network (or within a device such as a server). The IPv6 nature of InfiniBand’s packets allows easy interfacing of an individual InfiniBand network with external networks (which may, in turn, be linked to other InfiniBand systems). A router serves as the connection between an InfiniBand network and another network.

The physical connections within the InfiniBand network (between channel adapters, switches, and routers) are termed links in IBA parlance. The fabric of an InfiniBand network may also include one or more repeaters that clean up and boost the signals in a link to allow greater range.

In a simple InfiniBand system, two nodes connect through links to a switch. The links and the switch are the fabric of the network. A complex InfiniBand system may involve multiple switches, routers, and repeaters knitted into a far-reaching web.

Standards and Coordination

The InfiniBand specification is managed and maintained by the InfiniBand Trade Association. The latest revision of the specification is available from the following address:

InfiniBand Trade Association

5440 SW Westgate Drive, Suite 217

Portland, OR 97221

Telephone: 503-291-2565

Fax: 503-297-1090

E-mail: administration@infinibandta.org

Web site: www.infinibandta.org

HyperTransport

The fastest way to send signals inside a computer is via HyperTransport, but HyperTransport is not an expansion bus. It isn’t a bus at all. Strictly defined, it is a point-to-point communications system. The HyperTransport specification makes no provision for removable expansion boards. However, HyperTransport is destined to play a major role in the expansion of high-performance computers because it ties the circuitry of the computer together. For example, it can link the microprocessor to the bus control circuitry, serving as a bridge between the north and south bridges in the motherboard chipset. It may also link the video system to the north bridge. Anywhere signals need to move their quickest, HyperTransport can move them.

Quick? HyperTransport tops out with a claimed peak data rate of 12.8GBps. That’s well beyond the bandwidth of today’s microprocessor memory buses, the fastest connections currently in use. It easily eclipses the InfiniBand Architecture connection system, which peaks at 3GBps in each direction (6GBps counting both directions) in its widest, 12-circuit form.

But that’s it. Unlike InfiniBand, which does everything from hosting expansion boards to connecting to the World Wide Web, HyperTransport is nothing but fast. It is limited to the confines of a single circuit board, albeit a board that may span several square feet of real estate. As far as your operating system or applications are concerned, HyperTransport is invisible. It works without any change in a computer’s software and requires no special drivers or other attention at all.

On the other hand, fast is enough. HyperTransport breaks the interconnection bottlenecks in common system designs. A system with HyperTransport may be several times faster than one without it on chores that reach beyond the microprocessor and its memory.

History

HyperTransport was the brainchild of Advanced Micro Devices (AMD). The chip-maker created the interconnection design to complement its highest-performance microprocessors, initially codenamed Hammer.

Originally named Lightning Data Transport, the design gained the HyperTransport name when AMD shared its design with other companies (primarily the designers of peripherals) who were facing similar bandwidth problems. On July 23, 2001, AMD and a handful of other interested companies created the HyperTransport Technology Consortium to further standardize, develop, and promote the interface. The charter members of the HyperTransport Consortium included Advanced Micro Devices, API NetWorks, Apple Computer, PMC-Sierra, Cisco Systems, NVIDIA, and Sun Microsystems.

The Consortium publishes an official HyperTransport I/O Link Specification, which is currently available for download without charge from the group’s Web site at www.hypertransport.org. The current version of the specification is 1.03 and was released on October 10, 2001.

Performance

In terms of data transport speed, HyperTransport is the fastest interface currently in use. Its top speed is nearly 100 times quicker than the standard PCI expansion bus and more than twice as fast as the widest link the InfiniBand specification currently allows. Grand as those numbers look, they are also misleading. Both InfiniBand and HyperTransport are duplex interfaces, which means they can transfer data in two directions simultaneously. Optimistic engineers calculate throughput by adding the two duplex channels together. Information actually moves from one device to another through the InfiniBand and HyperTransport interfaces at half the maximum rate shown, at most. Overhead required by the packet-based interfaces eats up more of the actual throughput performance.

Not all HyperTransport systems operate at these hyperspeeds. Most run more slowly. In any electronic design, higher speeds make parts layout more critical and add increased worries about interference. Consequently, HyperTransport operates at a variety of speeds. The current specification allows for six discrete speeds, only the fastest of which reaches the maximum value.

The speed of a HyperTransport connection is measured in the number of bits transferred through a single data path in a second. The HyperTransport clock runs at up to 800MHz. The link achieves its higher bit rates by transferring two bits per clock cycle on each data path, much as double data-rate memory does: one transfer is keyed to each rise and one to each fall of the clock signal. The six speeds are 400, 600, 800, 1000, 1200, and 1600Mbps.

In addition, HyperTransport uses several connections in parallel for each of its links. It achieves its maximum data rate only through its widest connection with 32 parallel circuits. The design also supports bus widths of 16, 8, 4, and 2 bits.

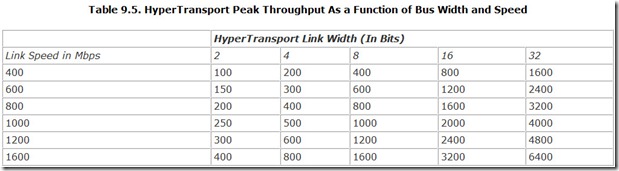

Table 9.5 lists the link speeds as well as the maximum bus throughputs in megabytes per second at each of the allowed bus widths.
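The figures in such a table follow from simple arithmetic: bits per second on one data path, times the number of paths, divided by 8. A sketch of that calculation, including the doubling that comes from adding the two directions together (the way the 12.8GBps peak is reached); the function names are illustrative, and packet overhead is ignored.

```python
RATES_MBIT = (400, 600, 800, 1000, 1200, 1600)  # per data path
WIDTHS = (2, 4, 8, 16, 32)


def clock_mhz(rate_mbit: int) -> int:
    """Double data rate: two transfers per clock cycle."""
    return rate_mbit // 2


def throughput_mbyte(rate_mbit: int, width: int, both_directions: bool = False) -> int:
    """Peak MBps for one link, optionally summing the two duplex directions."""
    per_direction = rate_mbit * width // 8
    return per_direction * 2 if both_directions else per_direction


print("Mbps  " + "".join(f"{w:>8}-bit" for w in WIDTHS))
for rate in RATES_MBIT:
    print(f"{rate:<6}" + "".join(f"{throughput_mbyte(rate, w):>12}" for w in WIDTHS))
```

At 1600Mbps on a 32-bit link the result is 6400MBps in each direction; only by adding both directions does the total reach 12,800MBps.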

Certainly, a wider bus gives a performance edge, but the wider connections also rapidly increase in complexity because of the structure of the interface.

Structure

HyperTransport is a point-to-point communications system. Functionally, it is equivalent to a wire with two ends. The signals on the HyperTransport channel cannot be shared with multiple devices. However, a device can have several HyperTransport channels leading to several different peripheral devices.

The basic HyperTransport design is a duplex communications system based on differential signals. Duplex means that HyperTransport uses separate channels for sending and receiving data. Differential means that HyperTransport uses two wires for each signal, sending the same digital code down both wires at the same time but with the polarity of the two signals opposite. The system registers the difference between the two signals. Any noise picked up along the way should, in theory, be the same on the two lines because they are so close together, so it does not register on the differential receiver.
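The noise-cancelling effect of differential signaling can be demonstrated numerically. In this sketch the voltages are illustrative (each wire swings 0.3 volt from its midpoint, so the two wires of a pair differ by 0.6 volt), and the noise values are made up; the same noise is added to both wires to model interference picked up equally by a closely spaced pair.

```python
SWING = 0.3  # illustrative volts each wire moves away from its midpoint


def drive(bits):
    """Send each bit as a pair of opposite-polarity voltages."""
    plus = [SWING if b else -SWING for b in bits]
    minus = [-v for v in plus]
    return plus, minus


def add_common_noise(wire, noise):
    """Noise picked up equally by both wires of a closely spaced pair."""
    return [v + n for v, n in zip(wire, noise)]


def differential_receive(plus, minus):
    """Register only the difference between the two wires."""
    return [1 if p - m > 0 else 0 for p, m in zip(plus, minus)]


def single_ended_receive(wire):
    """Compare one wire against a fixed threshold, for contrast."""
    return [1 if v > 0 else 0 for v in wire]


bits = [1, 0, 1, 1, 0]
noise = [-0.5, 0.5, -0.4, 0.2, 0.45]
plus, minus = drive(bits)
plus, minus = add_common_noise(plus, noise), add_common_noise(minus, noise)

print(differential_receive(plus, minus))  # [1, 0, 1, 1, 0]
print(single_ended_receive(plus))         # [0, 1, 0, 1, 1]
```

Noise larger than the signal itself corrupts four of five bits on a single wire, yet vanishes when the receiver subtracts one wire from the other.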

HyperTransport uses multiple sets of differential wire pairs for each communications channel. In its most basic form, HyperTransport uses two pairs for sending and two for receiving, effectively creating two parallel paths in the channel. That is, the most basic form of HyperTransport moves data two bits at a time. The HyperTransport specification allows for channel widths of 2, 4, 8, 16, or 32 parallel paths to move data the corresponding number of bits at a time. Of course, the more data paths in a channel, the faster the channel can move data. The two ends of the connection negotiate the width of the channel when they power up, and the negotiated width remains set until the next power up or reset. The channel width cannot change dynamically.

The HyperTransport specification allows for asymmetrical channels. That is, the specification allows for systems that might have a 32-bit data path in one direction but only 2 bits in return.
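Width setup and asymmetry can be captured in a minimal model. It assumes each end advertises the widest channel it can send and the widest it can receive, and that each direction settles on the narrower of the two; the class and function names are hypothetical, and the chosen widths stay fixed until the next power up or reset, as described above.

```python
from dataclasses import dataclass

VALID_WIDTHS = (2, 4, 8, 16, 32)


@dataclass(frozen=True)
class LinkEnd:
    """Widths one end of a link can drive and can receive (hypothetical model)."""
    max_out: int
    max_in: int


def settle_widths(a: LinkEnd, b: LinkEnd):
    """Pick each direction's width once, at power-up or reset."""
    a_to_b = min(a.max_out, b.max_in)
    b_to_a = min(b.max_out, a.max_in)
    for width in (a_to_b, b_to_a):
        if width not in VALID_WIDTHS:
            raise ValueError(f"invalid width: {width}")
    return a_to_b, b_to_a


processor = LinkEnd(max_out=32, max_in=32)
peripheral = LinkEnd(max_out=2, max_in=8)
print(settle_widths(processor, peripheral))  # (8, 2)
```

The result is an asymmetrical channel: 8 bits wide toward the peripheral and only 2 bits wide in return.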

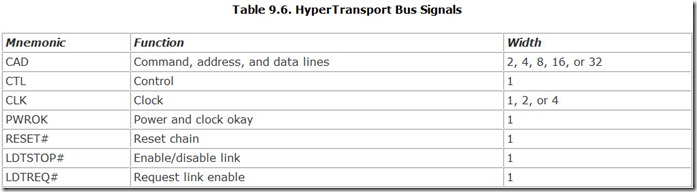

Although HyperTransport moves data in packets, it also uses several control lines to help reduce software overhead and maintain the integrity of the network. A clock signal (CLK) indicates when data on the channel is valid and may be read. Channels 2, 4, or 8 bits wide have a single clock signal; 16-bit channels have two synchronized clock signals; 32-bit channels have four. A control signal (CTL) indicates whether the bits on the data lines are data or control information; when CTL is active, the channel is carrying control information. CTL can be activated even in the middle of a data packet, allowing control information to take immediate control. A separate signal (POWEROK) ensures the integrity of the connection, indicating that the power to the system and the clock are operating properly. A reset signal (RESET#) does exactly what you’d expect: it resets the system so that it reloads its configuration.

For compatibility with power-managed computers based on Intel architecture, the HyperTransport interface also includes two signals to allow a connection to be set up again after the system goes into standby or hibernate mode. The LDTSTOP# signal, when present, enables the data link. When it shifts off, the link is disabled. The host system or a device can request the link be reenabled (which also reconfigures the link) with the LDTREQ# signal. Table 9.6 summarizes the signals in a HyperTransport link.

The design of the HyperTransport mechanical bus is meant to minimize the number of connections used because more connections need more power, generate more heat, and reduce the overall reliability of the system. Even so, a HyperTransport channel requires a large number of connections—each CAD bit requires four conductors, a two-wire differential pair for each of the two duplex signals. A 32-bit HyperTransport channel therefore requires 128 conductors for its CAD signals. At a minimum, the six control signals, which are single ended, require six more conductors, increasing to nine in a 32-bit system because of the four differential clock signals.

Each differential pair uses a variation on the low-voltage differential signaling (LVDS) standard. The voltage difference between the two wires of a pair is only 600 millivolts.

Standards and Coordination

The HyperTransport specification is maintained by the HyperTransport Technology Consortium, which can be reached at the following addresses:

HyperTransport Technology Consortium

1030 E. El Camino Real #447

Sunnyvale, CA 94087

Phone: 800-538-8450 (Ext. 47739)

E-mail: info@hypertransport.org

Web site: www.hypertransport.org

PCMCIA

While the desktop remains a battlefield for bus designers, notebook computer–makers have selected a single standard to rally around: PC Card, promulgated by the Personal Computer Memory Card International Association (PCMCIA). Moreover, the PC Card bus is flexible and cooperative. Because it is operating system and device independent, you can plug the same PC Card peripheral into a computer, Mac, Newton, or whatever the next generation holds in store. The PC Card expansion system can cohabitate in a computer with a desktop bus such as ISA or PCI. What’s more, it will work in devices that aren’t even computers—from calculators to hair curlers, from CAD workstations to auto-everything cameras. Someday you may even find a PC Card lurking in your toaster oven or your music synthesizer.

The PC Card system is self-configuring, so you do not have to deal with DIP switches, fiddle with jumpers, or search for a reference diskette. PC Card differs from other bus standards in that it allows for external expansion—you don’t have to open up your computer to add a PC Card.

The design is so robust that you can insert or remove a PC Card with the power on without worrying that you will damage it, your computer, or the data stored on the card. In other words, PC Cards are designed for hot-swapping. The system is designed to notify your operating system what you’ve done, so it can reallocate its resources as you switch cards. The operating system sets up its own rules and may complain if you switch cards without first warning it, although the resulting damage accrues mostly to your pride.

The engineers who created the PC Card standard originally envisioned only a system that put memory in credit-card format—hence the name, Personal Computer Memory Card International Association. The U.S.-based association drew on the work of the Japan Electronics and Information Technology Industries Association, which had previously developed four memory-only card specifications.

They saw PC Card as an easy way to put programs into portable computers without the need for disk drives. Since then the standard has grown to embrace nearly any expansion option—modems, network interface cards, SCSI host adapters, video subsystems, all the way to hard disk drives. Along the way, the PCMCIA and JEITIA standards merged, so one specification now governs PC Cards worldwide.

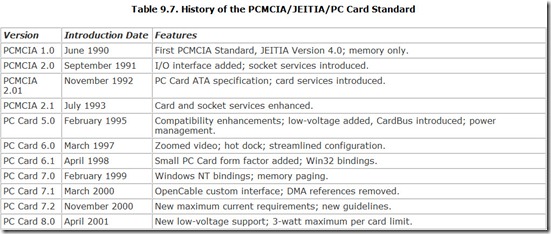

The first release of the PC Card standard allowed only for memory and came in September 1990. In September 1991, it was updated to Release 2.0 to include storage and input/output devices as well as thicker cards. After some minor changes, the standard jumped to Release 5.0 in February 1995. The big jump in numbering (from Release 2.1 to Release 5.0) put the PCMCIA and JEITIA number schemes on equal footing—the specification was number 5.0 for both. Since that event, the numbering of the standard as issued by both organizations has tracked, culminating in the current standard, Release 8.0, published in April 2001. Note, too, the specification title changed from PCMCIA Release 2.1 to PC Card Release 5.0. Table 9.7 summarizes the history of the PCMCIA standard.

The PCMCIA/JEITIA specifications cover every aspect of the cards you slide into your computer (or other device—the standard envisions you using the same cards in other kinds of electronic gear). It details the size and shape of cards, how they are protected, how they are connected (including the physical connector), the firmware the cards use to identify themselves, and the software your computer wields to recognize and use the cards. Moreover, the specifications don’t describe a hard-and-fast hardware interface. The same connector and software system can embrace a wide variety of different purposes and interfaces. The PC Card was only the first of the interconnection schemes documented by the PCMCIA/JEITIA specifications. By redefining the signals on the PC Card connector, a high-speed 32-bit expansion bus called CardBus, a dedicated high-speed video system called Zoomed Video, and a cable television–oriented video system called OpenCable all fit within the system.

All types of PC Cards use the same 68-pin connector, whose contacts are arranged in two parallel rows of 34. The contacts are spaced at 1.27 mm (0.050-inch) intervals, both between rows and between adjacent contacts in the same row. Female contacts in the end of the card engage male pins in a single molded connector on the host. We’ll look at the PCMCIA packages in more detail in Chapter 30.

To ensure that a card powers up properly, the pins are arranged so that the power and ground connections are longer (3.6 mm) than the signal leads (3.2 mm). Because of their greater length, the power leads engage first, so potentially damaging signals are never applied to unpowered circuits. The two card-detect pins (36 and 67), which signal that the card has been inserted all the way, are shorter (2.6 mm) than the signal leads. They therefore engage last, so the host sees a fully inserted card only after every other contact has mated.
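
The staggered lengths amount to a sort by length. The short Python sketch below is a simplified model, not a mechanical specification: it uses only the three lengths quoted above and assumes every contact starts from a common reference plane, so a contact mates once the card has traveled the difference between the longest contact's length and its own. The function names are hypothetical.

```python
# Contact lengths quoted in the text, in millimeters.
CONTACT_LENGTHS_MM = {
    "power/ground": 3.6,
    "signal": 3.2,
    "card detect (pins 36, 67)": 2.6,
}

def mating_order(lengths: dict) -> list:
    """Contact groups in the order they touch during insertion.

    Longer contacts reach their partners first, so sorting by length,
    longest first, gives the engagement sequence.
    """
    return sorted(lengths, key=lengths.get, reverse=True)

def engaged_after(lengths: dict, travel_mm: float) -> list:
    """Groups mated once the card has moved travel_mm past first contact.

    Simplified model: every contact starts from the same plane, so a
    contact engages after (longest length - its length) of extra travel.
    """
    longest = max(lengths.values())
    return [name for name, length in lengths.items()
            if travel_mm >= longest - length]

print(mating_order(CONTACT_LENGTHS_MM))
# ['power/ground', 'signal', 'card detect (pins 36, 67)']
print(engaged_after(CONTACT_LENGTHS_MM, 0.5))
# ['power/ground', 'signal']
```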

PC Card

The original and still most popular of the PCMCIA card specifications is PC Card. Based on the ISA design, the standard expansion bus at the time the specifications were written, PC Card is a 16-bit interface. Unlike modern expansion buses, it lacks such advanced features as bus-mastering. However, the PC Card standard is more than the old bus. It is a system designed specifically for removable cards that includes both hardware and software support.

The hardware side of the standard sets its limits so high that engineers are unlikely to bump into them. For example, it allows for a single system to use from 1 to 255 PCMCIA adapters (that is, circuits that match the signals of PC Cards to the host). Up to 16 separate PC Card sockets can be connected to each adapter, so under the PCMCIA specifications you could potentially plug up to 4080 PC Cards into one system. Most computers shortchange you a bit on that—typically you get only one or two slots.

The memory and I/O registers of each PC Card are individually mapped into a window in the address range of the host device. The entire memory on a PC Card can be mapped into a single large window (for simple memory expansion, for example), or it can be paged through one or more windows. The PC Card itself determines the access method through configuration information it stores in its own memory.
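
Window mapping is just an offset calculation. Here is a minimal sketch; the `Window` class, the 16KB window size, and the host address 0xD0000 are hypothetical choices for illustration, since real adapters set up their windows through their own registers or through Socket Services.

```python
from dataclasses import dataclass

@dataclass
class Window:
    host_base: int    # first host address covered by the window
    size: int         # window length in bytes
    card_offset: int  # card address that appears at host_base

    def translate(self, host_addr: int) -> int:
        """Convert a host address inside the window to a card address."""
        if not self.host_base <= host_addr < self.host_base + self.size:
            raise ValueError(f"address {host_addr:#x} is outside this window")
        return self.card_offset + (host_addr - self.host_base)

    def page_to(self, card_offset: int) -> None:
        """Slide the window to expose a different region of card memory."""
        self.card_offset = card_offset

# A hypothetical 16KB window at host address 0xD0000 paging through a card.
win = Window(host_base=0xD0000, size=0x4000, card_offset=0)
print(hex(win.translate(0xD0010)))  # 0x10
win.page_to(0x4000)                 # expose the card's second 16KB
print(hex(win.translate(0xD0010)))  # 0x4010
```

Mapping all of a small card's memory at once is the same code with a window as large as the card and `page_to` never called.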

Signals and Operation

The PCMCIA specifications allow for three PC Card variations: memory-only (which essentially conforms to the Release 1.0 standard), I/O cards, and multimedia cards.

When a PCMCIA connector hosts PC Cards, all but 10 pins of the standard 68 serve the same functions on memory and I/O cards. Four memory card signals are redefined for I/O cards (pins 16, 33, 62, and 63); three memory card signals are modified for I/O functions (pins 18, 52, and 61); and three pins reserved on memory cards are used by I/O cards (pins 44, 45, and 60).
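
The ten mode-dependent pins fit naturally into a lookup table. In this sketch the grouping follows the text; the signal mnemonics (such as IREQ# and IOIS16#) are an addition taken from the commonly published PC Card pinout, and `pin_signal` is a hypothetical helper.

```python
# The ten pins whose meaning differs between memory and I/O cards,
# grouped as in the text. Mnemonics follow the commonly published pinout.
REDEFINED = {  # pin: (memory-card signal, I/O-card signal)
    16: ("RDY/BSY#", "IREQ#"),
    33: ("WP", "IOIS16#"),
    62: ("BVD2", "SPKR#"),
    63: ("BVD1", "STSCHG#"),
}
MODIFIED = {18: "VPP1", 52: "VPP2", 61: "REG#"}          # same pin, adjusted role
RESERVED_ON_MEMORY = {44: "IORD#", 45: "IOWR#", 60: "INPACK#"}

def pin_signal(pin: int, io_mode: bool) -> str:
    """Name the signal carried by one of the ten mode-dependent pins."""
    if pin in REDEFINED:
        return REDEFINED[pin][1 if io_mode else 0]
    if pin in MODIFIED:
        return MODIFIED[pin]
    if pin in RESERVED_ON_MEMORY:
        return RESERVED_ON_MEMORY[pin] if io_mode else "reserved"
    raise KeyError(f"pin {pin} has the same function on both card types")

assert len(REDEFINED) + len(MODIFIED) + len(RESERVED_ON_MEMORY) == 10
print(pin_signal(16, io_mode=False), pin_signal(16, io_mode=True))
# RDY/BSY# IREQ#
```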

The PC Card specifications allow for card implementations that use either 8- or 16-bit data buses. In memory operations, two Card Enable signals (pins 7 and 42) set the bus width: pin 7 enables even-numbered address bytes, and pin 42 enables odd bytes. An 8-bit system can read every byte by activating pin 7 but not pin 42 and toggling the lowest address line (A0, pin 29) to step to the next byte.
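
The card-enable rules reduce to a small decoder. This sketch models pins 7 and 42 as booleans that are True when asserted (the real lines are active-low), and `byte_lanes` is a hypothetical name. The case where pin 42 alone places the odd byte on D8 through D15 follows the usual PC Card byte-lane convention and goes slightly beyond the text.

```python
def byte_lanes(ce1: bool, ce2: bool, a0: int) -> dict:
    """Map card-enable states to the byte carried on each data lane.

    ce1 is pin 7 and ce2 is pin 42, True when asserted.
    Returns {data lane: "even" or "odd" byte}.
    """
    if ce1 and ce2:        # 16-bit access: even byte low, odd byte high
        return {"D0-D7": "even", "D8-D15": "odd"}
    if ce1:                # 8-bit access: A0 picks the byte on D0-D7
        return {"D0-D7": "odd" if a0 else "even"}
    if ce2:                # odd byte only, on the high lane
        return {"D8-D15": "odd"}
    return {}              # card not enabled

# An 8-bit host keeps pin 7 active and steps A0 to walk through every byte.
for a0 in (0, 1):
    print(a0, byte_lanes(ce1=True, ce2=False, a0=a0))
# 0 {'D0-D7': 'even'}
# 1 {'D0-D7': 'odd'}
```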

Memory Control

The original PC Card specifications allowed the use of 26 address lines, which permit direct addressing of up to 64MB (2 to the 26th power, or 67,108,864 bytes) of data. The memory areas on each card are independent. That is, each PC Card can define its own 64MB address range as its common memory. In addition to common memory, each card has a second 64MB address space devoted to attribute memory, which holds the card’s setup information. The typical PC Card devotes only a few kilobytes of this range to actual Card Information Structure (CIS) storage.

Activating the Register Select signal (pin 61) redirects the 26 address lines, normally used to address common memory, to locations in attribute memory instead. The address space assigned to attribute memory need not correspond to a block of memory separate from common memory. To avoid the need for two distinct memory systems, a PC Card can be designed so that activating the Register Select signal simply points to a block of common memory devoted to storing setup information. All PC Cards limit access to attribute memory to an 8-bit path over the eight least-significant data lines.
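
A toy model makes the two address spaces concrete: the same 26-bit address lands in common or attribute memory depending on Register Select. The class and method names are hypothetical, and keeping the bytes in dictionaries is simply a way to avoid allocating two real 64MB arrays.

```python
ADDRESS_LINES = 26
SPACE_SIZE = 1 << ADDRESS_LINES   # 67,108,864 bytes, or 64MB

class CardMemory:
    """Toy PC Card with independent common and attribute spaces.

    `reg` stands for the Register Select line (pin 61), modeled as True
    when asserted. Dicts keep the two 64MB spaces sparse.
    """
    def __init__(self) -> None:
        self.common: dict = {}
        self.attribute: dict = {}   # setup information (CIS) lives here

    def _space(self, addr: int, reg: bool) -> dict:
        if not 0 <= addr < SPACE_SIZE:
            raise ValueError(f"{addr:#x} needs more than {ADDRESS_LINES} address lines")
        return self.attribute if reg else self.common

    def write(self, addr: int, value: int, reg: bool = False) -> None:
        # Store bytes only; attribute memory travels over D0-D7 anyway.
        self._space(addr, reg)[addr] = value & 0xFF

    def read(self, addr: int, reg: bool = False) -> int:
        return self._space(addr, reg).get(addr, 0)   # unset bytes read as 0 here

card = CardMemory()
card.write(0x0, 0x41)             # common memory, address 0
card.write(0x0, 0x01, reg=True)   # attribute memory, same address
print(SPACE_SIZE, card.read(0x0), card.read(0x0, reg=True))
# 67108864 65 1
```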

To accommodate more storage, the specifications provide address extension registers, a bank-switching system that enables the computer host to page through much larger memory areas on PC Cards. A number of different address extension register options are permitted, all the way up to 16 bits, which extends the total card common memory to four terabytes (4TB, which is 2 to the 42nd power: 26 address lines combined with 65,536, or 2 to the 16th power, possible pages).
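
The extension register simply supplies the high-order bits above the 26 connector address lines. A minimal sketch of the arithmetic, assuming the widest (16-bit) register the text mentions; `extended_address` is a hypothetical helper.

```python
OFFSET_BITS = 26   # address lines on the connector
PAGE_BITS = 16     # widest address extension register permitted

def extended_address(page: int, offset: int) -> int:
    """Combine an extension-register page number with a 26-bit offset."""
    if not 0 <= page < (1 << PAGE_BITS):
        raise ValueError("page does not fit in the extension register")
    if not 0 <= offset < (1 << OFFSET_BITS):
        raise ValueError("offset needs more than 26 address lines")
    return (page << OFFSET_BITS) | offset

total = 1 << (OFFSET_BITS + PAGE_BITS)
print(total, total // 2**40)                        # 4398046511104 4
print(hex(extended_address(page=3, offset=0x10)))  # 0xc000010
```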

Memory cards that use EPROM memory often require higher-than-normal voltages to reprogram their chips. Pins 18 and 52 on the PCMCIA interface provide these voltages when needed.

Data Transfers

To open or close access to data read from a PC Card, the host computer activates or deactivates the card’s Output Enable line (pin 9). A Ready/Busy line (pin 16) on memory cards allows the card to signal when it is busy processing and cannot accept a data-transfer operation. The same pin is used on I/O cards to make interrupt requests to the host system. During setup, however, an I/O card can redefine pin 16 back to its Ready/Busy function. Since Release 2.0, both memory and I/O PC Cards also can delay the completion of an operation in progress (in effect, slowing the host to accommodate the card’s timing needs) by activating an Extend Bus Cycle signal on pin 59.

The Write Protect pin (pin 33) relays the status of the write-protect switch on memory cards to the computer host. On I/O cards, this pin indicates that a given I/O port has a 16-bit width.

The same 26 lines used for addressing common and attribute memory serve as port-selection addresses on I/O cards. Two pins, I/O read (44) and I/O write (45), signal both that the address lines are identifying a port and whether the operation is a read or a write.

Unlike memory, however, which each card addresses in its own 64MB ranges, the I/O ports of all PC Cards in a system share “only” one 67,108,864-byte (64MB) range of port addresses. Although small compared to total memory addressing, this allotment of I/O ports is generous indeed. When the specification was first drafted, the most popular computer expansion bus (the AT bus) allowed only 64KB of I/O ports, of which some systems recognized a mere 16KB. Whether a port is 8 or 16 bits wide is indicated by the signal on pin 33.
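
Pin 33's role in I/O cycles can be sketched as a host that sizes each access. The sketch assumes, as on the AT bus, that the host issues one 16-bit cycle when the card flags a port as 16 bits wide and otherwise splits the access into two 8-bit cycles; the classes and the port number 0x300 are hypothetical.

```python
IO_SPACE = 1 << 26       # port addresses shared by every PC Card
AT_IO_SPACE = 1 << 16    # 64KB of ports on the AT bus, per the text

class IOCard:
    """Toy I/O card: port contents plus the ports that report 16-bit width."""
    def __init__(self, ports: dict, wide_ports: set) -> None:
        self.ports = ports            # port address -> byte value
        self.wide_ports = wide_ports  # ports for which pin 33 is asserted

    def reports_16bit(self, port: int) -> bool:
        return port in self.wide_ports

def host_read_word(card: IOCard, port: int) -> tuple:
    """Read 16 bits from a port; return (value, bus cycles used).

    Assumed host behavior: one 16-bit cycle when the card flags the port
    as 16 bits wide, otherwise two 8-bit cycles at port and port + 1.
    """
    if not 0 <= port < IO_SPACE - 1:
        raise ValueError("port outside the PC Card I/O range")
    value = card.ports.get(port, 0) | (card.ports.get(port + 1, 0) << 8)
    return value, (1 if card.reports_16bit(port) else 2)

card = IOCard({0x300: 0x34, 0x301: 0x12}, wide_ports={0x300})
print(IO_SPACE // AT_IO_SPACE)       # 1024
print(host_read_word(card, 0x300))   # (4660, 1)
```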

I/O PC Cards each have a single interrupt request signal. The signal is mapped to one of the computer interrupt lines by the computer host. In other words, the PC Card generates a generic interrupt, and it is the responsibility of the host computer’s software and operating system to route each interrupt to the appropriate channel.
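
A host-side routing table captures the idea. In this sketch the class name, IRQ 11, and the handler are hypothetical, and refusing to share an IRQ between sockets is a simplification that real systems do not always impose.

```python
from typing import Callable, Dict

class InterruptRouter:
    """Host-side table mapping each socket's single interrupt to a system IRQ."""
    def __init__(self) -> None:
        self.irq_for_socket: Dict[int, int] = {}
        self.handlers: Dict[int, Callable[[], str]] = {}

    def assign(self, socket: int, irq: int, handler: Callable[[], str]) -> None:
        if irq in self.handlers:
            raise ValueError(f"IRQ {irq} is already in use")
        self.irq_for_socket[socket] = irq
        self.handlers[irq] = handler

    def card_interrupt(self, socket: int) -> str:
        """The card only says 'interrupt'; the host decides which IRQ it becomes."""
        irq = self.irq_for_socket[socket]
        return self.handlers[irq]()

router = InterruptRouter()
router.assign(socket=0, irq=11, handler=lambda: "modem driver")  # hypothetical IRQ
print(router.card_interrupt(0))   # modem driver
```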

Configuration

The PC Card specifications provide for automatic setup of each card. When the host computer activates the Reset signal on pin 58 of the PCMCIA socket, the card returns to its preinitialization settings, with I/O cards returning to their power-on memory card emulation. The host can then read from the card’s memory to identify it and run the appropriate configuration software.

When a PC Card is plugged into a slot, the host computer’s PCMCIA adapter circuitry initially assumes that it is a memory card. The card defines itself as an I/O card through its onboard CIS data, which the host computer reads upon initializing the PC Card. Multimedia cards similarly identify themselves through their onboard data and automatically reconfigure the socket to accept their special signals.
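
The configuration sequence behaves like a two-state machine. In this minimal sketch, the `cis_declares_io` flag stands in for actually reading and parsing the card's CIS, and the class names are hypothetical.

```python
from enum import Enum

class Mode(Enum):
    MEMORY = "memory card"
    IO = "I/O card"

class Socket:
    """Host's view of one slot. `cis_declares_io` stands in for parsing the CIS."""
    def __init__(self, cis_declares_io: bool) -> None:
        self.cis_declares_io = cis_declares_io
        self.mode = Mode.MEMORY          # every new card starts as a memory card

    def reset(self) -> None:
        """Reset (pin 58): the card returns to memory-card emulation."""
        self.mode = Mode.MEMORY

    def configure(self) -> Mode:
        """Reset, read the card's self-description, then switch modes if asked."""
        self.reset()
        if self.cis_declares_io:
            self.mode = Mode.IO
        return self.mode

modem = Socket(cis_declares_io=True)
print(modem.mode.value, "->", modem.configure().value)   # memory card -> I/O card
```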

Audio

An audio output line also is available from I/O PC Cards. This connection is not intended for high-quality sound, however, because it carries only binary digital (on/off) signals, much like the basic speaker in early desktop computers. The audio lines of all PC Cards in a system are combined by an XOR (exclusive OR) logic gate that drives a single common loudspeaker, equivalent to the sound system of a primitive computer lacking a sound board. Under the CardBus standard (discussed later in this chapter), the same socket supports high-quality digital audio.
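
The XOR gate's behavior is easy to simulate on one-bit samples. A minimal sketch with hypothetical function names; note the consequence that two cards driving the line high at the same instant cancel each other rather than adding.

```python
from functools import reduce
from operator import xor

def speaker_level(card_outputs: list) -> int:
    """Combine the one-bit audio outputs of all cards with exclusive OR."""
    return reduce(xor, card_outputs, 0)

def mix(*streams: list) -> list:
    """Apply the XOR gate sample by sample to several one-bit streams."""
    return [speaker_level(list(samples)) for samples in zip(*streams)]

print(speaker_level([1, 0, 1]))          # 0: two cards that are both on cancel
print(mix([1, 1, 0, 0], [1, 0, 1, 0]))   # [0, 1, 1, 0]
```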

Power

Pins 62 and 63 on memory cards output two battery status signals. Pin 63 indicates the status of the battery: When activated, the battery is in good condition; when not activated, it indicates that the battery needs to be replaced. Pin 62 refines this to indicate that the battery level is sufficient to maintain card memory without errors; if this signal is not activated, it indicates that the integrity of on-card memory may already be compromised by low battery power.
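
The two battery signals can be read as a small decision table. This sketch encodes the text's description literally (pin 62 reporting whether memory is still safe, pin 63 whether the battery is healthy), treating "activated" as True. The published specification names these lines BVD2 and BVD1, and real host software should follow its exact encoding rather than this simplification; `battery_status` is a hypothetical name.

```python
def battery_status(pin63_active: bool, pin62_active: bool) -> str:
    """Interpret the two battery signals as the text describes them."""
    if not pin62_active:
        # Pin 62 inactive: the battery may already be too weak to hold data.
        return "memory contents may be compromised"
    if not pin63_active:
        # Memory is still safe, but the battery is due for replacement.
        return "battery needs replacement"
    return "battery good"

for pins in [(True, True), (False, True), (True, False)]:
    print(pins, battery_status(*pins))
# (True, True) battery good
# (False, True) battery needs replacement
# (True, False) memory contents may be compromised
```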

Although the PCMCIA specifications were originally written to accommodate only the 5-volt TTL signaling that was the industry standard at the time of their conception, the standard has evolved. It now supports cards using 3.3-volt signaling and makes provisions for future lower operating voltages. Cards are protected so you cannot harm a card or your computer by mismatching voltages.

Software Interface

The PC Card specifications require layers of software to ensure compatibility across device architectures. Most computers, for example, require two layers of software drivers, Socket Services and Card Services, to manage the card slots, in addition to whatever drivers an individual card requires (for example, a modem requires a modem driver).

As with other hardware advances, Windows 95 helped out the PC Card. Support for the expansion standard is built in to Windows 95 as well as Windows 98. Although Windows NT (through version 4.0) lacks integral PC Card support, Windows 2000 embraced the standard. Most systems accept cards as soon as you slide them in, automatically installing the required software. Unusual or obscure boards usually require only that you install drivers for their manufacturer-specific features using the built-in Windows installation process.

Socket Services

To link the PC Card to an Intel-architecture computer host, PCMCIA has defined a software interface called Socket Services. By using a set of function calls under interrupt 1A (which Socket Services shares with the CMOS real-time clock), software can access PC Card features without specific knowledge of the underlying hardware. In other words, Socket Services make access to the PC Card hardware-independent, much like the BIOS of a computer. In fact, Socket Services are designed so that they can be built in to the computer BIOS. However, Socket Services also can be implemented in the form of a device driver so that PCMCIA functionality can be added to existing computers.

Using Socket Services, the host establishes the windows used by the PC Card for access. Memory or registers then can be directly addressed by the host. Alternatively, individual or multiple bytes can be read or written through Socket Services function calls.

Card Services

With Release 2.01, PCMCIA approved a Card Services standard that defines a program interface for accessing PC Cards. This standard establishes a set of program calls that link to Socket Services independently of the host operating system. Like the Socket Services associated with interrupt 1A, Card Services can either be implemented as a driver or be built in as part of an operating system. (Protected-mode operating systems such as OS/2 and Windows NT require the latter implementation.)
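
The layering can be sketched as two objects, one wrapping the other. The classes and methods here are hypothetical stand-ins chosen for illustration, not the actual Socket Services or Card Services function calls; the point is only that Card Services never touches the hardware directly.

```python
class SocketServices:
    """Hardware-specific layer: the only code that knows the adapter."""
    def __init__(self, card_present: list) -> None:
        self._card_present = card_present   # stands in for adapter registers

    def socket_count(self) -> int:
        return len(self._card_present)

    def card_inserted(self, socket: int) -> bool:
        return self._card_present[socket]

class CardServices:
    """OS-facing layer: reaches the hardware only through Socket Services."""
    def __init__(self, socket_services: SocketServices) -> None:
        self.ss = socket_services

    def occupied_sockets(self) -> list:
        return [s for s in range(self.ss.socket_count())
                if self.ss.card_inserted(s)]

# A hypothetical two-slot notebook with a card in the second slot.
cs = CardServices(SocketServices([False, True]))
print(cs.occupied_sockets())   # [1]
```

Porting to a different adapter means replacing only `SocketServices`; everything above it stays the same.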

Setup

For an advanced PC Card system to work effectively, each PC Card must be able to identify itself and its characteristics to its computer host. Specifically, it must be able to tell the computer how much storage it contains; the device type (solid-state memory, disk, I/O devices, or other peripherals); the format of the data; the speed capabilities of the card; and any of a multitude of other variables about how the card operates.

Card Information Structure

Asking you to enter all the required data every time you install a PC Card would be both inconvenient and dangerous. Considerable typing would be required, and a single errant keystroke could forever erase the data off the card. Therefore, PCMCIA developed a self-contained system through which the basic card setup information can be passed to the host regardless of either the data structure of the on-card storage or the operating system of the host.

Called the Card Information Structure (CIS) or metaformat of the card, the PCMCIA configuration system works through a succession of compatibility layers to establish the necessary link between the PC Card and its host. As with the hardware interface, each layer of the CIS is increasingly device specific.

Only the first layer, the Basic Compatibility Layer, is mandatory. This layer indicates how the card’s storage is organized. Only two kinds of information are relevant here: the data structures used by the layer itself and standard physical-device information, such as the number of heads, cylinders, and sectors of a physical or emulated disk. Since Release 5.0, each card must bear a Card Information Structure so it can identify itself to the host system.
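
The text doesn't spell out the byte layout, but in the published PC Card metaformat the CIS is a chain of tuples: a code byte, a link byte giving the length of the body that follows, then the body. Code FFh ends the chain, code 00h is one-byte filler with no link field, and CIS bytes sit only at even addresses of attribute memory. A minimal walker built on that layout follows; the function names and example bytes are made up, and it ignores long links that jump to other memory areas.

```python
CISTPL_NULL = 0x00   # one-byte filler, no link field
CISTPL_END = 0xFF    # end of the tuple chain

def attribute_bytes(raw: bytes) -> bytes:
    """CIS bytes occupy even addresses of attribute memory; drop the odd ones."""
    return raw[0::2]

def walk_tuples(cis: bytes):
    """Yield (code, body) pairs from a chain of CIS tuples."""
    i = 0
    while i < len(cis):
        code = cis[i]
        if code == CISTPL_END:
            return
        if code == CISTPL_NULL:
            i += 1
            continue
        if i + 1 >= len(cis):
            raise ValueError("truncated tuple header")
        link = cis[i + 1]
        body = cis[i + 2:i + 2 + link]
        if len(body) < link:
            raise ValueError("truncated tuple body")
        yield code, body
        i += 2 + link

# Example chain: one tuple (code 21h, two-byte body), a filler byte, the end mark.
cis = bytes([0x21, 0x02, 0x02, 0x00, 0x00, 0xFF])
raw = bytes(b for byte in cis for b in (byte, 0))   # odd addresses hold junk
print(list(walk_tuples(attribute_bytes(raw))))
# [(33, b'\x02\x00')]
```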

Data Recording Format Layer

The next layer up is the Data Recording Format Layer, which specifies how the stored data is organized at the block level. Four data formats are supported under Release 2.0: unchecked blocks, blocks with checksum error detection, blocks with cyclic redundancy error checking, and unblocked data that does not correspond to disk organization (for example, data accessed at random, as memory is).
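
The difference between the checksum and CRC formats shows up with a simple transposition. This sketch assumes an 8-bit modular sum for the checksum and uses the standard library's CRC-16/CCITT (`binascii.crc_hqx`) for the CRC; the text does not say which algorithms the specification uses, so both are stand-ins.

```python
import binascii

def checksum8(block: bytes) -> int:
    """Modular sum of the block's bytes (assumed form of the checksum)."""
    return sum(block) & 0xFF

def crc16(block: bytes) -> int:
    """CRC-16/CCITT from the standard library (assumed polynomial)."""
    return binascii.crc_hqx(block, 0)

block = b"PC Card data"
swapped = b"CP Card data"      # first two bytes transposed
print(checksum8(block) == checksum8(swapped))   # True: the sum misses it
print(crc16(block) == crc16(swapped))           # False: the CRC catches it
```

A sum ignores byte order, while a CRC weights every bit by its position, which is why the second format catches errors the first lets through.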

Data Organization Layer

The third CIS layer, the Data Organization Layer, specifies how information is logically organized on the card; that is, it specifies the operating system format to which the data conforms. PCMCIA recognizes four possibilities: DOS, Microsoft’s Flash File System for Flash RAM, PCMCIA’s own Execute-in-Place (XIP) ROM image, and application-specific organization. Microsoft’s Flash File System is a file system designed specifically for the constraints of Flash memory. It minimizes rewriting specific memory areas, both to extend the limited life of the medium and to speed up updates that require block writes.
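
The general idea behind limiting rewrites, usually called wear leveling, can be sketched by always writing updated data to the least-worn free block instead of erasing the same block in place. This is a generic illustration with hypothetical names, not a description of how Microsoft's Flash File System actually allocates blocks.

```python
def pick_block(erase_counts: dict, free_blocks: set) -> int:
    """Choose the free block erased the fewest times (ties go to the lowest number)."""
    if not free_blocks:
        raise RuntimeError("no free blocks; reclaim space first")
    return min(free_blocks, key=lambda b: (erase_counts[b], b))

def rewrite(erase_counts: dict, free_blocks: set, old_block: int) -> int:
    """Write updated data to a fresh block, then erase and recycle the old one."""
    new_block = pick_block(erase_counts, free_blocks)
    free_blocks.remove(new_block)
    erase_counts[old_block] += 1      # the stale copy is erased
    free_blocks.add(old_block)
    return new_block

counts = {0: 5, 1: 2, 2: 9, 3: 2}
free = {1, 2, 3}                      # data currently lives in block 0
first = rewrite(counts, free, old_block=0)
second = rewrite(counts, free, old_block=first)
print(first, second, counts)          # 1 3 {0: 6, 1: 3, 2: 9, 3: 2}
```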

System-Specific Standards

The fourth CIS layer is assigned to system-specific standards that comply with particular operating environments. For example, the Execute-in-Place (XIP) standard defines how programs encoded on ROM cards are to be read and executed.