Servo Systems

Introduction

During the past 60 years, many methods have been developed for accurately controlling dynamic systems. The vast majority of these systems employ sensors to measure what the system is actually doing. Then, based on these measurements, the input to the system is modified. These concepts were initially used in applications where only a single output was measured, and that measurement was used to modify a single input. For instance, position sensors measured the actual position of a motor, and the current or voltage put into the motor windings was modified based on these position readings. An early definition of these systems is the following:

A servosystem is defined as a combination of elements for the control of a source of power in which the output of the system, or some function of the output, is fed back for comparison with the input and the difference between these quantities is used in controlling the power (James, Nichols, and Phillips, 1947).

This definition is extremely broad and encompasses most of the concepts that are usually referred to today as the technical area of control systems. However, the technology and theory of control systems have changed significantly since that 1947 definition. There are now methods for controlling systems with multiple inputs and multiple outputs, many different design and optimization techniques, and intelligent control methodologies sometimes using neural networks and fuzzy logic. As these new theories have evolved, the definition of a servo system has gradually changed. Today, the term servo system often refers to motion control systems, usually employing electric motors. This change may have occurred simply because one of the most important applications of the early servo system theories was in rapid and accurate positioning. For the sake of brevity, this discussion will confine its treatment to motion control systems utilizing electric motors.

Principles of Operation

To obtain rapid and accurate motion control, servo systems employ the same basic principle just outlined, the principle of feedback. By measuring the output (usually the position or velocity of the motor) and feeding it back to the input, the performance can be improved. This output measurement is often subtracted

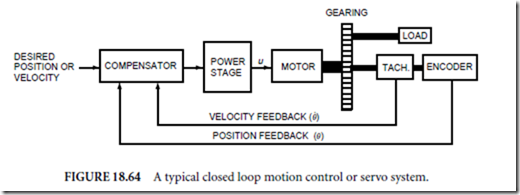

from a desired output signal to form an error signal. This error signal is then used to drive the motor. Figure 18.64 illustrates the components present in a typical system.

As the figure illustrates, each servo system consists of several separate components. First, a compensator takes the desired response (usually in terms of a position or velocity of the motor) and compares it to that measured by the sensing system. Based on this measurement, a new input for the motor is calculated. The compensator may be implemented with either analog or digital computing hardware. Since the compensator consists of computing electronics, it rarely has sufficient power to drive the motor directly. Consequently, the compensator’s output is used to command a power stage. The power stage amplifies that signal and drives the motor. There are a number of motors that can be used in servo systems. This chapter will briefly discuss DC motors, brushless DC motors, and hybrid stepping motors. To achieve a mechanical advantage, the motor shaft is usually connected to some gears that move the actual load. The performance of the motor is measured by either a single sensor or multiple sensors. Two common sensors, a tachometer (for velocity) and an encoder (for position), are shown. Finally, those measurements are fed back to the compensator.

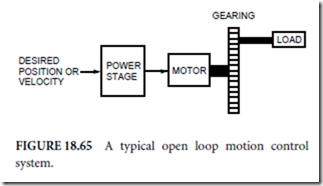

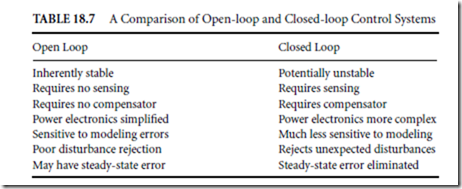

Servo systems are called closed-loop devices because the feedback elements form a loop, as can be seen in Fig. 18.64. In contrast, open-loop motion control devices, which do not require sensors, have become popular for some applications. Figure 18.65 depicts an open-loop motion control system. Obviously, it is much easier and less expensive to implement an open-loop control system because far fewer components are involved. The compensator and sensors have been eliminated completely.

In addition, the power stage is often simplified because the signals it receives may not be as complex. For instance, switching transistors may replace linear amplifiers. On the other hand, it is difficult to construct an open-loop system with capabilities equal to a servo system’s capabilities. Table 18.7 compares open-loop and closed-loop systems.

The following sections will briefly describe the components that together are used to create a servo system. The emphasis will be on motor control applications.

Compensator Design

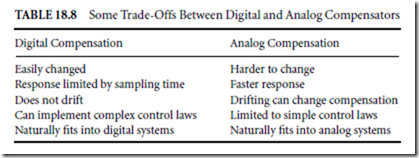

The compensator in Fig. 18.64 is in some ways the brains of a servo system. It takes a desired position or velocity trajectory that the motor system needs to move through, and, by comparing it to the actual movement measured by the sensors, calculates the appropriate input. This calculation can be performed using either digital or analog computing technology. Unlike other areas of computing, in which digital computers long ago replaced analog devices, analog means still are useful today for servo systems. This is mainly because the calculations required are usually simple (a few additions, multiplications, and perhaps integrations) and they must be performed with the utmost speed. On the other hand, digital compensators are increasingly popular because they are easy to change and are capable of more sophisticated control strategies. Table 18.8 compares digital and analog control.

As Table 18.8 indicates, the choice of digital vs. analog compensation depends in part on the control law chosen, since more complex algorithms can be easily implemented on digital computers. If a digital compensator is chosen, then digital control design techniques should be used. These techniques are able to account for the sampling effects inherent in digital compensators. As a rule of thumb, analog design techniques may be used even for digital compensators as long as the sampling frequency used by that digital compensator is at least five times higher than the bandwidth of the servo system.
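The rule of thumb above reduces to a single comparison. The following is a minimal sketch of that check; the function name and the example frequencies are illustrative assumptions, not values from the text.

```python
def analog_design_is_reasonable(sample_rate_hz, bandwidth_hz, ratio=5.0):
    """Return True when the sampling rate is at least `ratio` times the bandwidth.

    The default ratio of 5 is the rule of thumb quoted in the text; both
    frequencies must use the same units.
    """
    if sample_rate_hz <= 0 or bandwidth_hz <= 0:
        raise ValueError("frequencies must be positive")
    return sample_rate_hz >= ratio * bandwidth_hz


if __name__ == "__main__":
    # Hypothetical servo with a 50 Hz bandwidth.
    print(analog_design_is_reasonable(1000.0, 50.0))  # sampled at 1 kHz
    print(analog_design_is_reasonable(200.0, 50.0))   # sampled at only 4x the bandwidth
```

When the check fails, the text's recommendation applies: use digital control design techniques that account for sampling.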

Once the hardware used to implement the compensator has been selected, a control algorithm, which combines the desired position and/or velocity trajectories with the measurements, must be found. Many design techniques have been developed for determining a control law. In general, these techniques require that the dynamics of the system be identified. This dynamic model may come from a physical understanding of the device. For instance, suppose our servo system is used to move a heavy camera. Since the camera is fairly rigid, it might be modeled accurately as a lumped mass. Similarly, the motor’s rotor as well as the gearing will yield a mostly inertial load. The motor itself may be modeled as a device that inputs current and outputs torque. By putting these three observations together and writing elementary dynamics equations (e.g., Newton’s laws) a set of differential equations that model the actual system can be found. Alternatively, a similar set of differential equations may be found by gathering input and output data (e.g., the motor’s current input and the position and velocity output) and employing identification algorithms directly.
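As an illustration of the physical modeling route, the three observations for the camera example can be combined into one differential equation. The symbols are assumptions introduced here: $J_c$ is the camera inertia, $J_m$ the combined rotor and gearing inertia (both referred to the same shaft), $K_t$ the motor torque constant, $i$ the motor current, and $\theta$ the shaft angle. Friction and flexibility are neglected in this sketch.

```latex
\begin{aligned}
\tau &= K_t\, i
  && \text{(motor: current in, torque out)} \\
(J_m + J_c)\,\ddot{\theta} &= \tau
  && \text{(Newton's second law for the lumped inertia)} \\
\Rightarrow\quad \ddot{\theta} &= \frac{K_t}{J_m + J_c}\, i
\end{aligned}
```

Under these assumptions the system behaves as a double integrator from current to position, which is the kind of linear model the design techniques discussed next can work with.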

Once an adequate dynamic model has been found, it is possible to design a control law that will improve the servo system’s behavior in some manner. There are many ways of measuring the performance. Some of the most commonly used and oldest performance measures describe the system’s ability to respond to an instantaneous change, or a step. For instance, suppose you are covering a sports event and want to suddenly move the camera to catch the latest action. The camera should reach the desired angle as quickly as possible. The time it does take is termed rise time, $t_r$. In addition, it should stop as quickly as possible so that jitter in the picture will be minimized. The time required to stop is termed settling time, $t_s$. Finally, any excess oscillations will also increase jitter. The magnitude of the worst oscillation is called the overshoot. The overshoot divided by the total desired movement and multiplied by 100 gives the percent overshoot. These characteristics are depicted in Fig. 18.66.
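These three measures can be computed directly from a sampled step response. The sketch below assumes the response starts at zero, the step command is positive and applied at the first sample, and settling is judged with a 2% band (the convention given under Defining Terms). The function name and the sample data are illustrative only.

```python
def step_metrics(t, y, target, tol=0.02):
    """Return (rise_time, settling_time, percent_overshoot) for a step response.

    t, y   : equal-length lists of sample times and measured outputs
    target : commanded level (assumed positive, response starting from zero)
    tol    : settling band as a fraction of the commanded level
    Rise or settling time is None if the data never reaches that condition.
    """
    if len(t) != len(y) or not t:
        raise ValueError("t and y must be non-empty and of equal length")

    # Rise time: first sample at which the output reaches the commanded level.
    rise = next((ti - t[0] for ti, yi in zip(t, y) if yi >= target), None)

    # Settling time: first sample after the last excursion outside the band.
    band = tol * abs(target)
    last_outside = -1
    for k, yk in enumerate(y):
        if abs(yk - target) > band:
            last_outside = k
    settle = None if last_outside == len(y) - 1 else t[last_outside + 1] - t[0]

    # Percent overshoot: worst excursion past the target, relative to the move.
    overshoot = max(max(y) - target, 0.0)
    return rise, settle, 100.0 * overshoot / target


if __name__ == "__main__":
    times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    outputs = [0.0, 0.6, 1.1, 1.2, 0.95, 1.01, 1.0]  # made-up response
    print(step_metrics(times, outputs, target=1.0))
```

With coarse sampling the results are only as fine as the sample spacing; interpolating between samples would sharpen the rise-time estimate.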

A vast number of techniques have been developed for achieving these desirable characteristics. As an example, one particular method known as proportional integral derivative (PID) control will be considered.

This method is selected because it is intuitive and fairly easy to tune. First, an error signal is formed by subtracting the actual position from the desired position. The error is then

$$e = \theta_d - \theta$$

where $\theta_d$ is the desired position and $\theta$ is the actual position.

Next, this error signal is operated on mathematically to determine the input to the motor. The simplest form of this controller uses the following logic:

If the motor starts falling behind so that $\theta_d > \theta$ and $e > 0$, then increase the input to catch up. If the motor is getting ahead, decrease the input to slow down.

The logic can be mathematically written in the formula

$$u = K_P e \tag{18.15}$$

where $K_P$ is a constant number called the proportional gain because the input is made proportional to the error.

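To make the motor respond faster, it is helpful to know how quickly the motor is falling behind (or getting ahead). This information is contained in the derivative of the error, $\dot{e}$. Adding a term proportional to this derivative gives

$$u = K_P e + K_D \dot{e}$$

where $K_D$ is a constant called the derivative gain.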

Finally, it is often necessary that the servo system exhibit zero steady-state error. This quality can be achieved by adding a term due to the integral of the error. The reason this works can be understood by considering what would happen should a steady-state error occur. The integrator would keep increasing the input ad infinitum, so a nonzero error cannot persist. Instead, an equilibrium point is reached with zero error. The complete PID control law is then

$$u = K_P e + K_I \int e \, dt + K_D \dot{e}$$

with $K_I$ a constant called the integral gain.

The choice of the controller gains ($K_P$, $K_I$, and $K_D$) greatly influences the behavior of the servo system.
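A digital compensator typically evaluates the PID law once per sample period. The following is a minimal sketch of one such discrete form, assuming a fixed sample period `dt`, rectangular integration, and a backward-difference derivative. The class name and gain values are illustrative, and practical refinements such as integrator limits or derivative filtering are left out.

```python
class PID:
    """Discrete PID law: u = Kp*e + Ki*integral(e) + Kd*de/dt, with e = desired - measured."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0      # running approximation of the integral of the error
        self.prev_error = None   # no previous sample yet

    def update(self, desired, measured):
        error = desired - measured
        # Rectangular (Euler) approximation of the integral term.
        self.integral += error * self.dt
        # Backward-difference derivative; taken as zero on the very first sample.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    pid = PID(kp=2.0, ki=0.5, kd=0.1, dt=0.1)  # illustrative gains
    print(pid.update(desired=1.0, measured=0.0))
    print(pid.update(desired=1.0, measured=0.5))
```

Setting `ki` and `kd` to zero recovers the proportional law of Eq. (18.15).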

If a good dynamic model has been found and it consists of linear time invariant differential (or difference) equations, then several methods may be employed for finding gains that best meet the performance requirements (the textbooks listed in the Further Information section provide an excellent and detailed treatment).

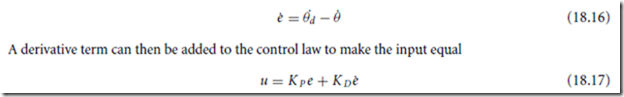

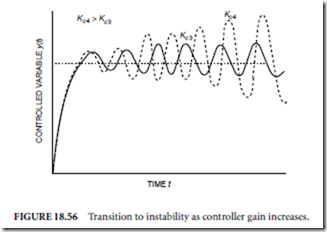

If not, the gains may be experimentally tuned using, for instance, the Ziegler–Nichols method (Franklin, Powell, and Workman, 1990). Care must be exercised when adjusting the gains because the system may become unstable. Figure 18.67 shows the response to a step change at t = 5 in the desired position for a properly tuned servo system. The position response quickly tracks the change. Figure 18.68 plots an unstable response resulting from incorrectly tuned gains. The position error increases rapidly. Obviously, this undesirable behavior can be very destructive.
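The contrast between a tuned and an unstable loop can be reproduced with a small simulation. This sketch assumes the load is a pure inertia driven directly by the controller output (so the controller output equals inertia times acceleration) and applies a unit step at t = 0. The gain sets are illustrative choices, not the ones behind Figs. 18.67 and 18.68. For this assumed plant, the Routh criterion gives stability only when $K_P K_D > K_I J$, which the second gain set deliberately violates.

```python
def simulate_pid_step(kp, ki, kd, inertia=1.0, dt=0.001, t_end=10.0, target=1.0):
    """Simulate PID position control of a pure inertia; return the error history."""
    theta, omega = 0.0, 0.0          # position and velocity
    integral = 0.0
    prev_error = target - theta      # makes the first derivative sample zero
    errors = []
    for _ in range(int(round(t_end / dt))):
        error = target - theta
        integral += error * dt
        derivative = (error - prev_error) / dt
        prev_error = error
        u = kp * error + ki * integral + kd * derivative
        # Semi-implicit Euler integration of inertia * d(omega)/dt = u.
        omega += (u / inertia) * dt
        theta += omega * dt
        errors.append(error)
    return errors


if __name__ == "__main__":
    tuned = simulate_pid_step(kp=20.0, ki=10.0, kd=8.0)     # Kp*Kd > Ki*J
    unstable = simulate_pid_step(kp=1.0, ki=10.0, kd=1.0)   # Kp*Kd < Ki*J
    print("final error, tuned gains:", tuned[-1])
    print("largest error, unstable gains:", max(abs(e) for e in unstable))
```

The tuned run settles near zero error, while the second run oscillates with growing amplitude, which is the behavior the text warns about.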

Power Stage

A variety of devices are used to implement the power stage, depending on the actuator used to move the servo system. These range from small DC motors to larger AC motors and even more powerful hydraulic systems. This section will briefly discuss the power stage options for the smaller, electric driven servo systems.

There are two distinct methods for electronically implementing the power stage: linear amplifiers and switching amplifiers. Either of these methods typically takes a low-power signal (usually a voltage) as input, and outputs either a desired voltage or current level. This output is regulated so that the voltage or current level remains virtually unchanged despite large changes in the loading caused by the motor.

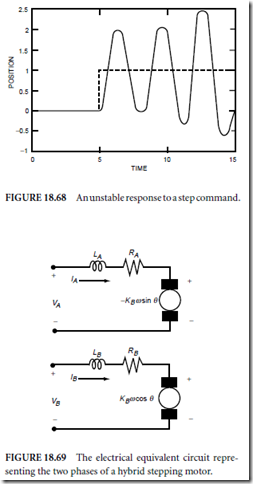

The motor loading does change dramatically as the motor moves because the back electromotive force (EMF) is proportional to the motor velocity, $\omega$. For hybrid stepping motors, it is also modulated by either the sine or cosine of the motor position, $\theta$. Figure 18.69 shows the equivalent electric circuit for the two phases of a hybrid stepping motor.

Despite their similarities, linear and switching amplifiers differ markedly in how they achieve voltage or current regulation. Linear amplifiers use a feedback strategy very similar to that described in the previous section. The result is a smooth, continuous output voltage or current. Switching amplifiers, on the other hand, can only turn the voltage to the motor fully on or off (or positive/negative). Consequently, every few microseconds the circuit checks a low-pass filtered version of the motor’s voltage or current level. If it is too high, the voltage is turned off. If it is too low, the voltage is turned on. This method works because the sampling is done on a microsecond scale, whereas the motor’s current or voltage can only change in a millisecond scale. All motors contain resistance and inductance due to the winding, and this resistor-inductor circuit acts as a low-pass filter. Thus, the motor filters out the high-frequency switching, and responds only to the low-frequency components contained within the switching. Care must be taken when using this technique to perform the switching at a frequency well beyond the bandwidth of the mechanical components so that the motor does not respond to the high-frequency switching.

If the desired output is a voltage, the amplifier is called a pulse-width-modulated (PWM) voltage amplifier. If the desired output is a current, the amplifier is usually termed a current chopper.
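The on/off regulation described above can be sketched for a current chopper driving a single winding modeled as a series resistor and inductor. Everything numeric here is an assumption for illustration: a 24 V supply, 1 Ω and 1 mH (a 1 ms electrical time constant), and a decision every 5 µs. For simplicity the sketch compares the winding current itself with the target instead of a separately filtered measurement, ignores back EMF, and treats the off state as zero applied voltage.

```python
def simulate_current_chopper(target_a, supply_v=24.0, r_ohm=1.0, l_h=1e-3,
                             check_s=5e-6, t_end=2e-3):
    """Bang-bang current regulation of an R-L winding; returns the current trace."""
    current = 0.0
    trace = []
    for _ in range(int(round(t_end / check_s))):
        # Switching decision: on if the current is too low, off if too high.
        voltage = supply_v if current < target_a else 0.0
        # Euler step of L * di/dt = v - R * i over one checking interval.
        current += (voltage - r_ohm * current) / l_h * check_s
        trace.append(current)
    return trace


if __name__ == "__main__":
    trace = simulate_current_chopper(target_a=2.0)
    settled = trace[len(trace) // 2:]
    print("current range after settling:", min(settled), max(settled))
```

Because the checking interval is far shorter than the winding's time constant, the current can move only slightly between decisions, so it stays in a narrow band around the target.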

Both linear and switching amplifiers remain in use because each has particular advantages. Linear amplifiers do not introduce high-frequency harmonics to the system; thus, they can provide more accurate control with less vibration. This is especially true if the servo system contains components that are somewhat flexible. On the other hand, if the system is rigid, the switching amplifier will provide satisfactory results at a lower cost.

Motor Choice

The choice of motor for a servo system can impact cost, complexity, and even the precision. Three motors will be described in this section. Although this is not by any means an exhaustive coverage, it will suffice for a variety of applications.

DC servomotors are the oldest and probably the most commonly used actuator for servo systems. The name arises because a constant (or DC) voltage applied to the motor input will produce motor movement. Over a linear range, these motors exhibit a torque that is proportional to the quantity of current flowing into the device. This can make the design of control laws particularly convenient since torque can often be easily included into the dynamic equations. The back EMF generated by the motor is strictly proportional to the motor velocity, and so a large input voltage is required to overcome this effect at large velocities. The chief disadvantage of the DC motor is caused by the brushes. Inside the motor, mechanical switching is performed with brushes. As you might imagine, these brushes are the first part of the motor to fail, since they are constantly rubbing and switching current. Consequently, they severely limit the reliability of DC motors as compared to other, more modern actuators. The brushes also limit the low-speed performance because the switching effects are not filtered out when the motor is turning slowly. Instead, the motor actually has time to react to each individual switching. For this reason, DC motors are normally run only at high speeds, and gearing is used to convert the speed to that needed for a particular application. Only very specialized designs of DC motors are suitable for highly precise direct drive applications in which no gearing is allowed.

One way to eliminate some of these problems is to use a brushless DC motor rather than an ordinary DC motor. The brushless DC operates in a manner identical to the DC motor. However, the motor construction is quite different. A typical brushless DC motor actually has three phases. Much like the DC motor, these phases are switched either on or off depending on the motor position. Whereas the DC motor accomplishes this switching mechanically with the brushes, the brushless DC contains Hall effect sensors. These sensors determine the position of the motor, then based on these measurements electronically perform the switching. The advantage is that brushes are no longer necessary. As a result, the brushless DC motor will last much longer than an ordinary DC motor. Unfortunately, since Hall effect sensors and more sophisticated switching electronics are required, brushless DC motors are more expensive than DC motors. In addition, since the basic principle still involves switching phases fully on or off, brushless DC motors also exhibit poor performance at low speeds.

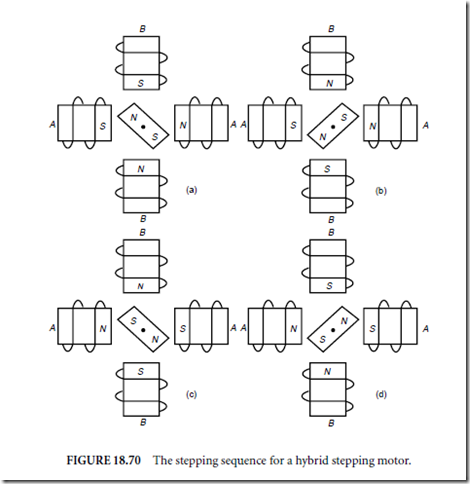

Both types of DC motors have become popular because they respond linearly (over a nominal operating range) to an input current command in that the output torque is roughly proportional to the input current. This is convenient because it allows the direct use of linear control design techniques. It was also especially important during early servo system development because it is easy to implement with analog computing devices. Advances in microcontroller computing technology and nonlinear control design techniques now provide many more options. One of these is the use of hybrid stepping motors in servo systems. These motors were originally developed for open-loop operation. They have shown considerable promise for closed-loop operation as well.

Hybrid stepping motors typically have two phases. These windings are labeled A and B in Fig. 18.70(a). These two windings are energized in the sequence shown in Fig. 18.70(a) to Fig. 18.70(d), thus causing the permanent magnet rotor to turn. Although Fig. 18.70 shows only four separate energizations (called steps) of the windings to complete a revolution, clever mechanical arrangements allow the number of steps per revolution to be greatly increased. Typical hybrid stepping motors have 200 steps/revolution. Consequently, a complete revolution is performed by repeating the four-step sequence shown in Fig. 18.70 a total of 50 times.
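The step arithmetic can be checked with a few lines of code. The four-entry energization table below is an assumption (a one-phase-on sequence A+, B+, A−, B−), since the exact pattern of Fig. 18.70 is not reproduced in the text. The function names are illustrative.

```python
# Assumed (I_A, I_B) polarity for each of the four steps: A+, B+, A-, B-.
SEQUENCE = [(+1, 0), (0, +1), (-1, 0), (0, -1)]


def phase_pattern(step_index):
    """Winding polarities for a given step count; the table repeats every four steps."""
    return SEQUENCE[step_index % len(SEQUENCE)]


def step_angle_deg(steps_per_rev=200):
    """Mechanical rotation produced by one step, in degrees."""
    return 360.0 / steps_per_rev


def sequence_repeats_per_rev(steps_per_rev=200):
    """Number of times the four-step sequence repeats in one revolution."""
    return steps_per_rev // len(SEQUENCE)


if __name__ == "__main__":
    print(step_angle_deg())            # degrees per step for a 200-step motor
    print(sequence_repeats_per_rev())  # repetitions of the sequence per revolution
    print([phase_pattern(k) for k in range(6)])
```

Reversing the order in which the table is traversed reverses the direction of rotation.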

One advantage of these motors is that they allow fairly accurate positioning in open loop. As long as the motor has sufficient torque capabilities to resist any external torques, the motor position remains within the commanded step. Since open-loop systems are far less complicated (and therefore less expensive), these motors offer an economical alternative to the traditional closed-loop servo system. On the other hand, there are some accompanying disadvantages to open-loop operation. First, since the winding currents are switched on and off to energize the coils in the proper sequence, the motor exhibits a jerky motion at low speeds, much like the DC motors. Second, if an unexpectedly large disturbance torque occurs, the motor can be knocked into the wrong position. Since the operation is open loop and therefore does not employ any position sensor, the system will not correct the position, but will keep running. Finally, the speed of response and damping can be improved using closed-loop techniques.

In addition to the open-loop uses of hybrid stepping motors, methods exist for operating these motors in a closed-loop fashion (Lofthus et al., 1994). The principal difficulty arises because hybrid stepping motors are nonlinear devices, so the plethora of design techniques available in linear control theory cannot be directly applied. Fortunately, these motors can be made to behave in a linear fashion by modulating the input currents by sinusoidal functions of the motor position. For instance, the currents through the two windings ($I_A$ and $I_B$) can be made equal to

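$$I_A = -I_C \sin(N\theta), \qquad I_B = I_C \cos(N\theta)$$

where $N$ is the number of motor pole pairs (50 for the typical 200-step/revolution motor) and $\theta$ is the motor’s angular position. (The signs shown assume the common model in which the torque is proportional to $-I_A \sin(N\theta) + I_B \cos(N\theta)$; other winding conventions change the signs.) Then the torque output by the motor is proportional to the new input, $I_C$, which can be determined using standard linear control designs such as the PID method outlined earlier.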
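The linearizing effect of sinusoidal modulation can be verified numerically. The sketch assumes a commonly used two-phase torque model, torque $= K_m(-I_A \sin N\theta + I_B \cos N\theta)$, together with the matching commutation $I_A = -I_C \sin N\theta$, $I_B = I_C \cos N\theta$. The sign convention, the constant `k_m`, and the function names are assumptions made for illustration.

```python
import math


def commutate(i_c, theta, n=50):
    """Phase currents (I_A, I_B) for a commanded current I_C at rotor angle theta (rad)."""
    return -i_c * math.sin(n * theta), i_c * math.cos(n * theta)


def torque(i_a, i_b, theta, n=50, k_m=1.0):
    """Assumed two-phase torque model: k_m * (-I_A sin(N theta) + I_B cos(N theta))."""
    return k_m * (-i_a * math.sin(n * theta) + i_b * math.cos(n * theta))


if __name__ == "__main__":
    i_c = 0.8  # illustrative commanded current
    for theta in (0.0, 0.01, 0.5, 2.0):
        i_a, i_b = commutate(i_c, theta)
        # With this commutation the torque stays at k_m * i_c at every angle.
        print(theta, torque(i_a, i_b, theta))
```

Since $\sin^2 + \cos^2 = 1$, the position dependence cancels and the motor looks like a linear current-to-torque device to the outer control loop.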

In contrast to the DC motors, which employ current switching and therefore perform poorly at low speeds, this closed-loop method of controlling stepping motors performs quite accurately even at very low velocities. On the other hand, the generation of the sinusoidally modulated input currents requires both accurate position measurement and separate current choppers for each phase. Thus the implementation cost is somewhat higher than a servo system utilizing DC motors.

Gearing

As alluded to in the previous section, gearing is often used in servo systems so that the motor can operate in its optimum speed range while simultaneously obtaining the required speeds for the application. In addition, it allows the use of a much smaller motor due to the mechanical advantage. Along with these advantages come some disadvantages, the principal one being backlash. Backlash is caused by the small gaps that always exist between a pair of mating gears. As a result of the gaps, the driven gear can wiggle independently of the driving gear. This wiggling is a nonlinear phenomenon that can cause vibrations and decrease the accuracy that can be obtained. A similar effect occurs when using belt-driven gearing because the belt stretches.
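The nonlinearity of backlash is easy to see in a simple dead-zone model. The sketch below assumes a 1:1 gear pair with a total gap of `gap`, ignores inertia and impact, and treats the driven gear as moving only when the driving gear has taken up the gap. The function name and the sample positions are illustrative.

```python
def backlash(drive_positions, gap, start=0.0):
    """Driven-gear positions for a sequence of driving-gear positions.

    The driven gear stays put while the driving gear moves inside the gap and
    is pushed along once the gap on that side has closed.
    """
    half = gap / 2.0
    driven = start
    result = []
    for x in drive_positions:
        if x - driven > half:        # driving gear pushes forward
            driven = x - half
        elif driven - x > half:      # driving gear pushes backward
            driven = x + half
        result.append(driven)        # otherwise: inside the gap, no motion
    return result


if __name__ == "__main__":
    drive = [0.0, 0.5, 1.0, 0.9, 0.5, 0.0]   # forward, then reversing
    print(backlash(drive, gap=0.2))
```

In the sample data the driving gear reverses from 1.0 to 0.9 without moving the driven gear at all, which is the lost motion that limits accuracy.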

These inherent imperfections in gearing can become major problems if extreme accuracy is required in the servo system. Since these effects increase as the gear ratio increases, one way to reduce the effect of the gearing imperfections is to make the gear ratio as small as possible. Unfortunately, DC motors run smoother at higher speeds, so smaller gear ratios tend to accentuate the motor imperfections caused by switching. Consequently, a tradeoff between the inaccuracies caused by the gearing and the inaccuracies caused by the motor must be made. For highly precise systems, the gearing is sometimes completely eliminated. For such applications, a closed-loop hybrid stepping motor or a specialized DC motor is usually necessary to obtain good motion quality.

A Simple Example Application

To clarify some of the engineering choices that must be made when designing a servo system, a simple example of a servo system design for moving a camera will be discussed.

First, suppose the camera will be used in a surveillance application, to constantly pan back and forth across a store. For this application, an actual servo system employing feedback is probably not necessary and would prove too costly. The required precision is fairly low, and low cost is of the utmost importance. Consequently, an open-loop stepping motor powered by transistors switching on or off will probably be adequate. Alternatively, even a DC motor operated in open loop, with microswitches to determine when to reverse direction, will suffice.

Now suppose the camera is used to visually inspect components as part of a robotic workcell. In this case, the requirements on precision are significantly higher. Consequently, a servo system using a geared DC motor and powered by a PWM voltage amplifier may be an appropriate choice. If the robotic workcell is used extensively, a more reliable system may be in order. Then a brushless DC motor may be substituted for a higher initial cost.

Finally, suppose the camera system is used to provide feedback to a surgeon performing intricate eye surgeries. In this case, an extremely precise and reliable system is required, and cost is a secondary consideration. To achieve the high accuracy, a direct drive system that does not use any gearing may provide exceptional results. Since the motor must move precisely at low speeds, simultaneously remaining very reliable, a closed-loop hybrid stepping motor may be used. To drive the hybrid stepping motor, either linear current amplifiers or current choppers (one for each of the two phases) will suffice. For this particular application, the hybrid stepping motor offers another advantage because it can be operated fairly accurately in open loop. Thus it offers redundancy if the compensator or sensors fail.

Defining Terms

Backlash: The errors introduced into geared systems due to unavoidable gaps between two mating gears.

Bandwidth: The frequency range over which the Bode plot of a system remains within 3 dB of its nominal value.

Closed-loop system: A system that measures its performance and uses that information to alter the input to the system.

Current chopper: A current amplifier that employs pulse-width-modulation.

Open-loop system: A system that does not use any measurements to alter the input to the system.

Overshoot: The peak magnitude of oscillations when responding to a step command.

Pulse-width-modulation: A means of digital-to-analog conversion that uses the average of high-frequency switchings. This can be performed at high-power levels.

Rise time: The time between an initial step command and the first instance of the system reaching that commanded level.

Settling time: The time between an initial step command and the system reaching equilibrium at the commanded level. Usually this is defined as staying within 2% of the commanded level.

References

Franklin, G.F., Powell, J.D., and Workman, M.L. 1990. Digital Control of Dynamic Systems, 2nd ed. Addison-Wesley, Reading, MA.

James, H.M., Nichols, N.B., and Phillips, R.S. 1947. Theory of Servomechanisms, Vol. 25, MIT Radiation Laboratory Series. McGraw-Hill, New York.

Lofthus, R.M., Schweid, S.A., McInroy, J.E., and Ota, Y. 1994. Processing back EMF signals of hybrid step motors. Control Engineering Practice, a journal of the International Federation of Automatic Control 3(1):1–10. (Updated in Vol. 3, No. 1, (Jan.) 1995, pp. 1–10.)

Further Information

Recent research results on the topic of servo systems and control systems in general are available from the following sources:

Control Systems magazine, a monthly periodical highlighting advanced control systems. It is published by the IEEE Press.

The IEEE Transactions on Automatic Control is a scholarly journal featuring new theoretical developments in the field of control systems.

Control Engineering Practice, a journal of the International Federation of Automatic Control, strives to meet the needs of industrial practitioners and industrially related academics and researchers. It is published by Pergamon Press.

In addition, the following books are recommended:

Brogan, W.L. 1985. Modern Control Theory. Prentice-Hall, Englewood Cliffs, NJ.

Dorf, R.C. Modern Control Systems. Addison-Wesley, Reading, MA.

Jacquot, R.G. 1981. Modern Digital Control Systems. Marcel Dekker, New York.