TRENDS IN COMPUTER ARCHITECTURE

In the earlier chapters, the fetch-execute cycle is described in the form: “fetch an instruction, execute that instruction, fetch the next instruction, etc.” This gives the impression of a straight-line, linear progression of program execution. In fact, the processor architectures of today have many advanced features that go beyond this simple paradigm. These features include pipelining, in which several instructions sharing the same hardware can simultaneously be in various phases of execution; superscalar execution, in which several instructions are executed simultaneously using different portions of the hardware, with possibly only some of the results contributing to the overall computation; very long instruction word (VLIW) architectures, in which each instruction word specifies multiple instructions (of smaller widths) that are executed simultaneously; and parallel processing, in which multiple processors are coordinated to work on a single problem.

In this chapter we cover issues that relate to these features. The discussion begins with issues that led to the emergence of the reduced instruction set computer (RISC) and examples of RISC features and characteristics. Following that, we cover an advanced feature used specifically in SPARC architectures: overlapping register windows. We then cover two important architectural features, superscalar execution and VLIW architectures, and move into the topic of parallel processing, touching on both parallel architectures and program decomposition. The chapter concludes with case studies covering Intel’s Merced architecture, the PowerPC 601, and an example of a pervasive parallel architecture that can be found in a home videogame system.

10.1 Quantitative Analyses of Program Execution

Prior to the late 1970s, computer architects exploited improvements in integrated circuit technology by increasing the complexity of instructions and addressing modes, as the benefits of such improvements were thought to be obvious. It became an effective selling strategy to have more complex instructions and more complex addressing modes than a competing processor. Increases in architectural complexity catered to the belief that a significant barrier to better machine performance was the semantic gap, that is, the gap between the meanings of high-level language statements and the meanings of machine-level instructions.

Unfortunately, as computer architects attempted to close the semantic gap, they sometimes made it worse. The IBM 360 architecture has the MVC (move character) instruction that copies a string of up to 256 bytes between two arbitrary locations. If the source and destination strings overlap, then the overlapped portion is copied one byte at a time. The runtime analysis that determines the degree of overlap adds a significant overhead to the execution time of the MVC instruction. Measurements show that overlaps occur only a few percent of the time, and that the average string size is only eight bytes. In general, faster execution results when the MVC instruction is entirely ignored, and instead, its function is synthesized with simpler instructions. Although a greater number of instructions may be executed without the MVC instruction, on average, fewer clock cycles are needed to implement the copy operation without using MVC than by using it.

Long-held views began to change in 1971, when Donald Knuth published a landmark analysis of typical FORTRAN programs, showing that most of the statements are simple assignments. Later research by John Hennessy at Stanford University and David Patterson at the University of California at Berkeley confirmed that most complex instructions and addressing modes went largely unused by compilers. These researchers popularized the use of program analysis and benchmark programs to evaluate the impact of architecture upon performance.

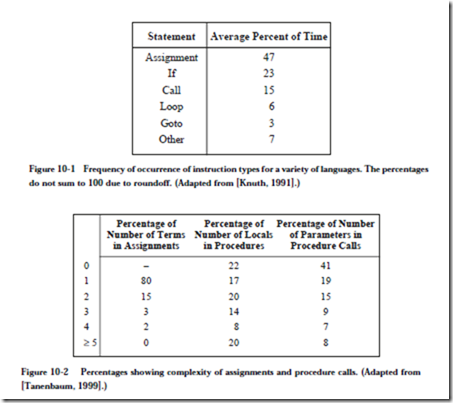

Figure 10-1, taken from (Knuth, 1971), summarizes the frequency of occurrence of instructions in a mix of programs written in a variety of languages. Nearly half of all instructions are assignment statements. Interestingly, arithmetic and other “more powerful” operations account for only 7% of all instructions. Thus, if we want to improve the performance of a computer, our efforts would be better spent optimizing instructions that account for the greatest percentage of execution time rather than focusing on instructions that are inherently complex but rarely occur.

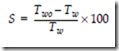

Related metrics are shown in Figure 10-2. From the figure, the number of terms in an assignment statement is normally just a few. The most frequent case (80%) is the simple variable assignment, X ← Y. There are only a few local variables in each procedure, and only a few arguments are normally passed to a procedure.

We can see from these measurements that the bulk of computer programs are very simple at the instruction level, even though more complex programs could potentially be created. This means that there may be little or no payoff in increasing the complexity of the instructions.

Discouragingly, analyses of compiled code showed that compilers usually did not take advantage of the complex instructions and addressing modes made available by computer architects eager to close the semantic gap. One important reason for this phenomenon is that it is difficult for a compiler to analyze the code in sufficient detail to locate areas where the new instructions can be used effectively, because of the great difference in meaning between most high-level language constructs and the expression of those constructs in assembly language. This observation, and the ever increasing speed and capacity of integrated circuit technology, converged to bring about an evolution from complex instruction set computer (CISC) machines to RISC machines.

A basic tenet of current computer architecture is to make the frequent case fast, and this often means making it simple. Since the assignment statement happens so frequently, we should concentrate on making it fast (and simple, as a consequence). One way to simplify assignments is to force all communication with memory into just two commands: LOAD and STORE. The LOAD/STORE model is typical of RISC architectures. We see the LOAD/STORE concept in Chapter 4 with the ld and st instructions for the ARC.

By restricting memory accesses to LOAD/STORE instructions only, other instructions can only access data that is stored in registers. This has two consequences, one good and one seemingly bad: (1) accesses to memory can be easily overlapped, since there are fewer side effects than would occur if different instruction types could access memory (this is good); and (2) there is a need for a large number of registers (this seems bad, but read on).
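As a minimal sketch of the LOAD/STORE model, the toy Python machine below lets only `ld` and `st` touch memory, while `add` works purely on registers. The helper functions, the register names, and the variables `x`, `y`, and `z` are hypothetical and chosen only for illustration; they are loosely modeled on the idea behind the ARC ld and st instructions and are not ARC assembly.

```python
# Toy load/store machine (illustrative assumption, not a real ISA).
memory = {"x": 0, "y": 7, "z": 5}  # hypothetical variables in main memory
regs = {}                          # register file, filled on demand


def ld(addr, rd):
    """LOAD: copy a memory word into a register."""
    regs[rd] = memory[addr]


def st(rs, addr):
    """STORE: copy a register into a memory word."""
    memory[addr] = regs[rs]


def add(rs1, rs2, rd):
    """Arithmetic sees registers only; it never touches memory."""
    regs[rd] = regs[rs1] + regs[rs2]


# Simple assignment x <- y: one load, one store.
ld("y", "r1")
st("r1", "x")

# x <- y + z: both operands must first be brought into registers.
ld("y", "r1")
ld("z", "r2")
add("r1", "r2", "r3")
st("r3", "x")

print(memory["x"])  # 12
```

Even the simple assignment passes through a register, and the sum needs three, which hints at why a LOAD/STORE machine benefits from a large register file.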

A simpler instruction set results in a simpler and typically smaller CPU, which frees up space on a microprocessor to be used for something else, like registers. Thus, the need for more registers is balanced to a degree by the newly vacant circuit area, or chip real estate as it is sometimes called. A key problem lies in how to manage these registers, which is discussed in Section 10.4.

10.1.1 QUANTITATIVE PERFORMANCE ANALYSIS

When we estimate machine performance, the measure that is generally most important is execution time, T. When considering the impact of some performance improvement, the effect of the improvement is usually expressed in terms of the speedup, S, taken as the ratio of the execution time without the improvement ($T_{wo}$) to the execution time with the improvement ($T_w$):

$$S = \frac{T_{wo}}{T_w}$$

For example, if adding a 1 MB cache module to a computer system lowers the execution time of some benchmark program from 12 seconds to 8 seconds, then the speedup is 12/8 = 1.5, which is a 50% improvement. Speedup can also be calculated directly as a percentage:

$$S = \frac{T_{wo} - T_w}{T_w} \times 100$$

For the cache example, this gives (12 − 8)/8 × 100 = 50%.
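The two ways of expressing speedup can be sketched in a few lines of Python. The function names are assumptions made for this illustration; the numbers are those of the cache example in the text.

```python
def speedup_ratio(t_without, t_with):
    """Speedup as the ratio of execution time without the improvement to time with it."""
    return t_without / t_with


def speedup_percent(t_without, t_with):
    """Speedup expressed directly as a percentage improvement."""
    return (t_without - t_with) / t_with * 100.0


# Benchmark time drops from 12 seconds to 8 seconds after adding the cache.
print(speedup_ratio(12.0, 8.0))    # 1.5
print(speedup_percent(12.0, 8.0))  # 50.0
```

Note that the percentage form is simply (ratio − 1) × 100, so a ratio of 1.5 and a speedup of 50% describe the same improvement.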

We can develop a more fine-grained equation for estimating T if we have information about the machine’s clock period, t, the number of clock cycles per instruction, CPI, and a count of the number of instructions executed by the program during its execution, IC. In this case the total execution time for the program is given by:

$$T = IC \times CPI \times t$$

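CPI and IC can be expressed either as an average CPI over the instruction set and a total instruction count, or as a sum, over each kind of instruction, of the number of times it is executed multiplied by its CPI. Substituting the latter equation into the former, we get:

$$S = \frac{IC_{wo} \times CPI_{wo} \times t_{wo} - IC_w \times CPI_w \times t_w}{IC_w \times CPI_w \times t_w} \times 100$$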

These equations, and others derived from them, are useful in computing and estimating the impact of changes in instructions and architecture upon performance.
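As a rough sketch, the execution time can be computed either from an average CPI or by summing over instruction classes, and the two approaches should agree. The instruction mix and the 10 ns clock period below are hypothetical values invented for illustration, not measurements, and the function names are likewise assumptions.

```python
def execution_time(ic, cpi, t):
    """Execution time from a total instruction count, an average CPI, and clock period t."""
    return ic * cpi * t


def execution_time_by_class(mix, t):
    """Execution time summed over instruction classes.

    mix is a list of (instruction count, CPI) pairs, one per class.
    """
    total_cycles = sum(count * cpi for count, cpi in mix)
    return total_cycles * t


# Hypothetical mix: 500 one-cycle, 250 two-cycle, and 250 four-cycle instructions.
mix = [(500, 1), (250, 2), (250, 4)]
t_ns = 10  # hypothetical clock period, in nanoseconds

ic = sum(count for count, _ in mix)                    # total instruction count: 1000
avg_cpi = sum(count * cpi for count, cpi in mix) / ic  # average CPI: 2.0

print(execution_time_by_class(mix, t_ns))  # 20000 (ns)
print(execution_time(ic, avg_cpi, t_ns))   # 20000.0 (ns)
```

The summed form is the more useful one when a proposed change affects only some instruction classes, since the average CPI hides which instructions dominate the cycle count.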

EXAMPLE: CALCULATING SPEEDUP FOR A NEW INSTRUCTION SET

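Suppose we wish to estimate the speedup obtained by replacing a CPU having an average CPI of 5 with another CPU having an average CPI of 3.5, with the clock period increased from 100 ns to 120 ns. Assuming the instruction count is the same on both CPUs, so that IC cancels, the equation above becomes:

$$S = \frac{5 \times 100 - 3.5 \times 120}{3.5 \times 120} \times 100 = \frac{500 - 420}{420} \times 100 \approx 19\%$$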

Thus, without actually running a benchmark program we can estimate the impact of an architectural change upon performance.
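The estimate in this example can be checked with a short Python sketch. It assumes that both CPUs execute the same number of instructions (the value of `IC` below is arbitrary and cancels out); the function name is an assumption made for illustration.

```python
def speedup_percent_from_parameters(ic_wo, cpi_wo, t_wo, ic_w, cpi_w, t_w):
    """Percentage speedup computed from instruction count, CPI, and clock period."""
    time_wo = ic_wo * cpi_wo * t_wo  # execution time without the improvement
    time_w = ic_w * cpi_w * t_w      # execution time with the improvement
    return (time_wo - time_w) / time_w * 100.0


IC = 1_000_000  # assumed equal for both CPUs; any positive value gives the same result

s = speedup_percent_from_parameters(IC, 5, 100, IC, 3.5, 120)
print(f"{s:.1f}%")  # 19.0%
```

The lower CPI more than offsets the longer clock period, so the new CPU is about 19% faster despite its slower clock.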