Entropy & Information Theory

Why Does Entropy Seem So Complicated?

Students and authors alike dread the subject of entropy. To be sure, entropy, denoted ‘S’, is a state function like no other. Equally clear is that it is of central importance to thermodynamics—being intimately associated with two of the four Laws. Yet many individuals—even science professionals who use entropy every day—wrestle with the basic question of what it actually is, exactly.

It is an interesting exercise to review how different authors address this question. Among the reference textbooks, entropy (or its explanation) has been described, sometimes apologetically, as follows: “mysterious;” “uncomfortable;” “the most misunderstood property of matter;” “less than satisfying;” “less than rigorous;” “confused;” “rather abstract;” “rather subjective;” “difficult to fully comprehend…physical implications that are hard to grasp;” “not a household word, like energy;” etc. Perhaps Cengel puts it most poignantly and directly:

This does not mean that we know entropy well, because we do not. In fact, we cannot even give an adequate answer to the question, “what is entropy?”

It does not have to be this way.

First and foremost, entropy is a quantity. This means that any description of entropy that purports to be a definition had better have a number attached to it. Moreover, the physical meaning of that number should be clear; e.g., we must know precisely what it means to “add one” to it, in the same way that we know precisely what it means to add one calorie of heat to a gram of water.

⊳⊳⊳ To Ponder… We laugh at a certain fictional rocker, for putting his faith in a particular amplifier because it goes “one louder.” But we scientists are really no better, if we cannot clearly explain just exactly what it means to go “one more entropic.” Can you do this? Can your professor? If not, don’t worry; you will by the end of this chapter.

As entropy is a quantity, the usual descriptions of it as a measure of molecular “disorder,” “randomness,” “chaos,” etc., though conceptually useful, are clearly not sufficient. Next in the hierarchy of less-than-fully-satisfactory explanations is the statement that entropy is “the quantity that increases under a spontaneous irreversible change” (Section 12.3), which, of course, still does not really tell us what it is.

The most standard definition of entropy is the oldest one, known as the thermodynamic definition. It stems from a purely macroscopic approach that does not rely on a molecular description at all. This definition is a bit “funny,” in that it is usually expressed in differential form—specifically, via a relation involving the temperature and the reversible heat, or heat absorbed during a reversible change:

$$dQ = T\,dS \qquad \text{[reversible, infinitesimal]} \tag{10.1}$$

The thermodynamic definition has the huge advantage of being quantitative. However, it, too, has some significant limitations.

The first limitation, obvious though not always acknowledged, is that it technically applies only to reversible changes. The usual workaround is to prove that dS is an exact differential and therefore path-independent. Nevertheless, given that the most important application of entropy, the Second Law (see Chapter 12), concerns only irreversible processes, it seems strange that the definition itself should be restricted to reversible processes only!

The second limitation of the thermodynamic definition is that, being a differential, it is only capable of predicting entropy changes, ΔS, and not absolute entropy values, S. This distinction is an important one that we will encounter often, most notably in the Third Law (see Section 13.3).

However, by far the most widely acknowledged limitation of the thermodynamic definition of entropy is that, in and of itself, it provides very little physical understanding. It is presumably for this reason that even advanced textbooks still retain some qualitative “disorder”-type descriptions, in addition to Equation (10.1). Evidently for this reason, also, it has in recent years become fashionable for textbooks to include a brief blurb on the statistical definition of entropy. This approach, developed by Boltzmann and exemplified in his famous formula [Equation (10.2), p. 84], offers a molecular origin of entropy, and is one of the most rigorous.

On the other hand, at first glance the Boltzmann approach seems to have nothing to do with thermodynamic entropy. It is, in fact, quite a challenge to quantitatively relate the two definitions using straightforward means; one traditionally resorts to complicated statistical mechanical proofs that all but the advanced physics texts would rather avoid. As Raff puts it:

…we lack the tools required to rigorously demonstrate the connection between the [two types of] entropy…

In this book, we adopt the information theory approach to entropy, whose advantages in this regard will become clear as we go along.

Entropy as Unknown Molecular Information

Without further ado, here is a qualitative version of the information definition of entropy:

Definition 10.1 (qualitative) Entropy is the amount of molecular information that a macroscopic observer does not know about the system.

Recall from Section 3.1 that thermodynamics provides a complete description of reality at the macroscopic scale only; at the molecular scale, it is largely incomplete. Thus, precise knowledge of the thermodynamic state of the system [e.g., of the thermodynamic variables (T, V)] tells us very little about the molecular state. The details of the latter (i.e., the positions, velocities, and internal states of every constituent molecule) must therefore be considered information that is mostly unknown to a macroscopic observer.

According to Definition 10.1, the amount of this missing molecular information is the entropy.

Some comments are in order.

First, note that entropy is the fundamental link (the “medulla oblongata,” if you will) between the macroscopic and molecular descriptions of nature, i.e., between thermodynamics and statistical mechanics, as per Section 2.3. Thus, rather than favoring either the macroscopic or molecular viewpoint, it behooves us to recognize at the outset that both are necessary in order to achieve a full understanding of this quantity.

Second, Definition 10.1 has some very interesting ramifications for the Second Law of Thermodynamics. However, a full discussion on this point will have to wait until Section 12.4.

Third, note that even the qualitative Definition 10.1 already suffices to prove that entropy is a thermodynamic state function. The set of molecular states associated with a given thermodynamic state clearly depends only on the thermodynamic state itself. Moreover, the entropy value evidently varies from one thermodynamic state to another; i.e., S = S(T, V) is not constant. This can be understood as follows.

Recall from Figure 3.1 that there are many possible molecular states associated with each thermodynamic state. It is reasonable to assume that some thermodynamic states have more molecular states associated with them than do others. Presumably, these thermodynamic states are characterized by more unknown molecular information—and therefore, greater entropy. Intuitively, we expect larger T and V to correspond to a larger number of possible molecular states, and thus, to higher S values. This is, in fact, almost always the case.

It should be mentioned that the state function aspect of entropy is something that other treatments, particularly those based on the thermodynamic definition of Equation (10.1), must go to a fair amount of trouble to validate. The usual proof has several stages, invoking the rather complex Carnot cycle (which we also consider, in Section 13.2, but for different reasons). Here, the desired result is seen to follow immediately from Definition 10.1, without any additional effort.

On the other hand, in order to demonstrate that the information entropy as defined here is indeed the same as the usual thermodynamic entropy, we are obliged to prove that the former satisfies Equation (10.1). Curiously, though a number of the reference textbooks now include both the thermodynamic definition of Equation (10.1) and the statistical (quantitative information) definition of Equation (10.2), in most of these, no real attempt is made to prove the equivalence of the two. We will do so in Section 11.5 of this book, for the special case of the ideal gas.

Amount of Information

So far, our information definition of entropy is only qualitative (and therefore, according to Lord Kelvin, unscientific; see quote on p. 75), because we have not yet specified exactly what is meant by “amount of information.” Fortunately, the field of information theory provides us with exactly the quantitative formula that we need.

⊳⊳⊳ To Ponder… In fact, it is the same basic formula that is used in computer science—to determine, e.g., how much information can be stored on your computer, or how many bytes are needed to store the MP3 file for your favorite song.

The framework is that of a hypothetical observer, who gains information by performing a measurement that yields one outcome from a number of possibilities.

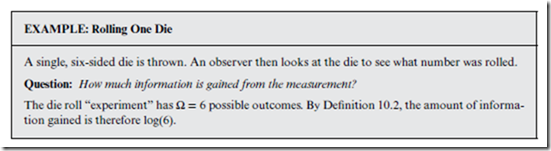

Definition 10.2 (quantitative) The amount of information gained from any measurement is the logarithm of Ω, where Ω is the number of possible outcomes.

Definition 10.2 is technically only valid if the possible outcomes are all equally likely; if not, we need a means of estimating the “effective” number of possible or available outcomes—e.g., by ignoring those that are extremely unlikely.

It makes intuitive sense that the amount of information should increase with Ω. Why is it that log(Ω) is the amount of information, though, and not simply Ω itself? This is so that the amount of information gained from separate, independent measurements is additive. By “additive,” we mean that the total amount of information gained from independent measurements is just the sum of the amounts of information gained from each measurement separately.

Some instructive examples may be found in the rolling of dice. In the boxes below, six-sided dice are presumed, and each die roll is considered to be independent of (i.e., unrelated to, or unaffected by) the others.

Note that the amount of information gained from rolling two dice is exactly twice that of a single die roll, which makes good intuitive sense. The extension to N dice is straightforward:
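As a worked sketch of these dice examples under Definition 10.2 (the symbol I_N, for the amount of information gained from rolling N dice, is our own notation here, and the base of the logarithm is left unspecified):

```latex
\begin{align*}
I_1 &= \log(6) && \text{(one die: 6 outcomes)} \\
I_2 &= \log(6 \times 6) = \log(36) = 2\log(6) = 2 I_1 && \text{(two dice: 36 outcomes)} \\
I_N &= \log(6^N) = N\log(6) = N I_1 && \text{($N$ dice: $6^N$ outcomes)}
\end{align*}
```

The number of outcomes multiplies with each added die, whereas the amount of information simply adds.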

In general, the numerical value of the amount of information depends on the base of the logarithm, which is left unspecified in Definition 10.2 (p. 81). Different bases correspond to different “units” of information (see Log Blog post and Correspondence on p. 83).
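To make the role of the base concrete, here is a minimal Python sketch of Definition 10.2 applied to the dice examples. The function name `information` and the unit labels "nats" (natural log) and "bits" (base 2) are our own choices for illustration, and equally likely outcomes are assumed throughout.

```python
import math


def information(num_outcomes: int, base: float = math.e) -> float:
    """Amount of information, log(Omega), for equally likely outcomes."""
    return math.log(num_outcomes, base)


SIDES = 6  # six-sided dice, as in the text

one_die_nats = information(SIDES)           # natural-log units
two_dice_nats = information(SIDES ** 2)     # 36 equally likely outcomes
one_die_bits = information(SIDES, base=2)   # base-2 units

# Additivity: two independent dice give twice the information of one die.
print(one_die_nats, two_dice_nats)

# Changing the base only rescales the value by a constant factor, 1/ln(2).
print(one_die_bits, one_die_nats / math.log(2))
```

In this sketch, switching bases behaves like switching units: the underlying quantity is the same, and only the numerical value changes.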

Application to Thermodynamics

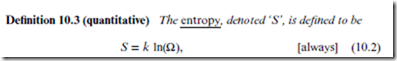

Combining the qualitative Definition 10.1 (p. 79) with the information theory results from Section 10.3, we are now in a position to provide a quantitative information definition of the entropy for a thermodynamic system, as follows:

$$S = k \ln(\Omega) \tag{10.2}$$

where ‘ln’ denotes the natural log, Ω is the number of possible or available molecular states (of the whole system) associated with the thermodynamic state, and k is the Boltzmann constant.

Equation (10.2) is the famous Boltzmann entropy formula, i.e., the statistical definition developed by Boltzmann and by Gibbs.

In principle, computing the entropy for a given thermodynamic state reduces to simply counting the number of corresponding molecular states, Ω. However, this is not at all easy to do in practice. For one thing, Ω is absolutely enormous: much, much larger than Avogadro’s number, NA, and in fact much closer to exp(NA). The logarithm in Equation (10.2) helps a lot, by reducing the mind-bogglingly huge Ω down to something that is “merely” on the order of NA. The factor of k then converts this molecular-scale number to macroscopic units, leaving us in the end with typically quite reasonable SI values for S.
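The orders of magnitude involved can be checked with a short Python sketch. Purely as an illustrative assumption, ln(Ω) is set exactly equal to NA; a real system would differ by some numerical factor. The variable names are ours.

```python
import math

K_B = 1.380649e-23    # Boltzmann constant, J/K (exact SI value)
N_A = 6.02214076e23   # Avogadro's number, 1/mol (exact SI value)

# Illustrative assumption only: ln(Omega) is taken to be exactly N_A.
ln_omega = N_A

# Omega = exp(N_A) would overflow any floating-point type,
# so we only ever work with ln(Omega).
entropy = K_B * ln_omega  # S = k ln(Omega), in J/K

# Approximate number of decimal digits needed just to write Omega down.
digits_in_omega = ln_omega / math.log(10)

print(f"S = {entropy:.3f} J/K")
print(f"Omega has roughly {digits_in_omega:.2e} decimal digits")
```

Under this assumption, S comes out equal to the product of k and NA, which is the molar gas constant, about 8.314 J/K. That is a perfectly ordinary laboratory-scale number, even though Ω itself is far too large to represent directly.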

Nevertheless, there is still the problem of actually counting Ω. This is essentially impossible in the non-ideal case when N is on the order of NA; we must therefore make do with the largest N values that can be achieved using the latest computers, and hope that these are “large enough” to obtain reasonable results.

However, in the special case of the ideal gas, Ω can be computed exactly, by exploiting the fact that all molecules behave independently. In Chapter 11, the thermodynamic state function for S (i.e., the explicit function, S = S(T, V)) is derived for the ideal gas from Equation (10.2). In addition, the corresponding expression for the entropy change, ΔS, matches exactly the standard result obtained by integrating the thermodynamic differential form of Equation (10.1).
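As a rough preview, restricted to a volume change at fixed temperature, the agreement can be sketched as follows. The sketch assumes only that each of the N independent molecules has a number of available positions proportional to V, so that Ω is proportional to V^N. It also uses the ideal gas law, PV = NkT, and the fact that dQ = P dV for a reversible isothermal expansion of an ideal gas. The full treatment belongs to Chapter 11 and may proceed differently.

```latex
\begin{align*}
\Omega &\propto V^N
  && \text{(assumed: independent molecules)} \\
\Delta S &= k\ln(\Omega_2) - k\ln(\Omega_1)
          = k\ln\!\left(\frac{V_2^N}{V_1^N}\right)
          = Nk\ln\!\left(\frac{V_2}{V_1}\right)
  && \text{[from Equation (10.2)]} \\
\Delta S &= \int \frac{dQ}{T}
          = \int_{V_1}^{V_2} \frac{P\,dV}{T}
          = \int_{V_1}^{V_2} \frac{Nk}{V}\,dV
          = Nk\ln\!\left(\frac{V_2}{V_1}\right)
  && \text{[from Equation (10.1)]}
\end{align*}
```

The unknown proportionality constant in Ω cancels in the ratio, which is why only the entropy change, and not the absolute entropy, can be obtained this way.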

Before moving on, we briefly address the issue of dimensions. As per the second Helpful Hint in the Log Blog post on p. 63, the argument of the logarithm in Equation (10.2), i.e., Ω itself, is dimensionless. The amount of information is also dimensionless, although the factor of k that appears in Equation (10.2) results ultimately in S dimensions of energy over temperature (J/K, in SI units). As discussed in Section 4.4, however, k is simply a conversion factor between temperature and energy, introduced essentially just for convenience. Some authors therefore prefer to regard the dimensionless quantity, σ = ln(Ω) = S∕k, as the “fundamental entropy.” The conjugate variable, i.e., τ = kT, is then called the “fundamental temperature.” Note that τ has dimensions of energy.

When working with σ and τ, the contrived kelvin unit no longer plays a direct role. Such an approach, already eminently sensible even without the information definition of entropy, becomes highly compelling when the latter is adopted, given that σ itself now becomes the amount of information as directly measured in natural log units. In any event, the J/K units of S can perhaps best be regarded as an arbitrary convention, or historical accident.
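The conversion between the conventional and fundamental quantities can be sketched in a few lines of Python. Here the fundamental entropy S/k is written as `sigma` and the fundamental temperature kT as `tau`. These names, the helper functions, and the sample values of S and T are all assumptions made for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def fundamental_entropy(S: float) -> float:
    """Dimensionless entropy S/k, i.e. ln(Omega) in natural log units."""
    return S / K_B


def fundamental_temperature(T: float) -> float:
    """Temperature expressed as an energy, kT, in joules."""
    return K_B * T


S = 1.0    # hypothetical entropy, J/K
T = 300.0  # hypothetical temperature, K

sigma = fundamental_entropy(S)
tau = fundamental_temperature(T)

print(f"sigma = {sigma:.3e} (dimensionless)")
print(f"tau   = {tau:.3e} J")

# The conjugate product is unchanged by the change of variables.
print(math.isclose(tau * sigma, T * S, rel_tol=1e-12))
```

The factor of k cancels in the product, so the energy obtained from temperature times entropy is the same in either set of variables.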

⊳⊳⊳ To Ponder…at a deeper level. Even so, some authors have turned this situation on its head, reasoning that the information definition of entropy cannot be physically correct, because it leads naturally to a dimensionless quantity. Entropy, it is argued, is physically related to energy, and must therefore evidently possess energy-like dimensions. The first part of this claim is undeniably true. As for the second part…would anyone argue that heat capacity and specific heat, or molality and molarity, must be unrelated because they have different dimensions? In reality, the only dimensional requirement here is that the temperature-entropy conjugate variable product must have dimensions of energy, which is true whether one uses TS or τσ = (kT)(S∕k).

Unit issues aside, what would be most compelling and useful, from both the practical and pedagogical standpoints, is a direct, quantitative connection between the thermodynamic and information definitions of entropy: say, a simple derivation of Equation (10.1), directly from Equation (10.2), which does not explicitly require the heavy machinery of statistical mechanics such as configurations, ensembles, and the like. Such a connection will be provided in Chapter 11, for the special case of the ideal gas.

⊳⊳⊳ To Ponder… We conclude this chapter by reconsidering the question posed at the beginning: what does it really mean to go “one more entropic”? Adopting the fundamental entropy, σ = S∕k, and the “bit” (p. 83) as the unit of information, this simply means doubling the number of molecular states available to the whole system.
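A short Python sketch makes the "one more bit" statement concrete. The starting value of Ω below is an arbitrary, hypothetical number chosen only so that the arithmetic is easy to follow; real values are vastly larger.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

omega = 10 ** 30           # hypothetical number of molecular states
omega_doubled = 2 * omega  # double the number of available states

# Information measured in bits is the base-2 logarithm of Omega.
bits_before = math.log2(omega)
bits_after = math.log2(omega_doubled)
delta_bits = bits_after - bits_before

# The same change expressed in conventional units: Delta S = k ln(2).
delta_S = K_B * math.log(2)

print(f"change in information: {delta_bits:.6f} bit")
print(f"change in entropy:     {delta_S:.3e} J/K")
```

Doubling Ω adds one bit regardless of the starting value, and in conventional units that single bit corresponds to k ln(2), a little under 1e-23 J/K.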