Essential Guide to Microprocessors

In this chapter…

- Overview of Microprocessors
- Microprocessor History and Evolution
- What’s a Processor Architecture?
- Microprocessor Anatomy and Gazetteer
- What Do 4-Bit, 8-Bit, 16-Bit, and 32-Bit Mean?
- Performance, Benchmarks, and Gigahertz
- What Is Software?
- Choosing Microprocessors
- Microprocessor Future Trends

Overview of Microprocessors

A musical greeting card has more computing power than the entire world did in 1950. Today even the cheapest microprocessor chips are more powerful than those big government computers from old science-fiction movies. A Palm Pilot is a better computer than the one in NASA’s first lunar lander. Microprocessors have totally changed our society, culture, spending habits, and leisure time. They’re incredibly cheap and ever-present and this trend shows no sign of letting up.

They’re the peak, the top, the Mona Lisa. Microprocessors are the most complex, most expensive, and most innovative of all the semiconductors. They’re also largely misunderstood, victims of mainstream journalism and stilted advertising claims. Of all the types of semiconductors we’ve covered in this book, microprocessors are the most likely to spark debate instead of yawns, both among engineers and normal people.

Microprocessors are called many things, so pick your favorite term. “Computer-on-a-chip” is much beloved by the popular press, and it’s even partially correct. “Electronic brain” is another one. Microprocessors are essentially basic computer systems reduced to a single silicon chip. Microprocessors aren’t exactly the same as computers, and the term will make engineers wince, but it’s close enough for our purposes.

Central processing unit (CPU) and microprocessing (or microprocessor) unit (MPU) are technically accurate and interchangeable terms for microprocessors. Sometimes the name is shortened to “processor” and sometimes even just to “micro.” “Microcontroller” is also popular, although that term has slight shades of meaning to industry insiders and might not always be strictly accurate.

No matter what you call them, microprocessors sit at the top of the semiconductor hierarchy. Among engineers, designing microprocessors is a plum job, a career goal, and a primo adornment for your résumé. Manufacturing microprocessor chips is a status symbol among semiconductor companies, sometimes even a mark of national pride. “Real companies build microprocessors,” avowed one Silicon Valley chairman.

So just what are these things? Basically, microprocessors are chips that run software. If it runs a program, it’s a microprocessor; if it doesn’t, it’s not. Microprocessors are the only type of chip that follows instructions. Those instructions, called either programs or software, might calculate equations, open garage doors, or zap space aliens on your TV screen. The biggest and fastest microprocessors run our computers, predict our weather, and guide our spacecraft.

Microprocessors are traditionally named with unfriendly and inscrutable four- or five-digit numbers. As with German sports cars, the model numbers are meaningful to the cognoscenti but are pretty meaningless to the rest of us. As an example, some popular microprocessors of the recent past are the 68000, the 80386, and the 29020. For the less technically inclined, these happen to be the processor chips inside the first Apple Macintosh, the IBM PCs of the early 1990s, and several Hewlett-Packard laser printers.

Microprocessor companies have recently started giving their chips made-up names, such as Pentium, Athlon, or UltraSPARC. These names are easier to remember than numbers, and when microprocessors and PCs started to become household products, that was an important marketing consideration. In Intel’s case, the name Pentium was created to prevent rivals from making competitive chips with the same part number. It’s easier to trademark “Pentium” than the number “80586.” (By the way, IBM attempted to trademark “/2” shortly after it created its PS/2 line of computers. Fortunately, the trademark office decided the number 2 was in the public domain.)

Microprocessors Everywhere

Every year the world’s microprocessor vendors produce and sell about 10 billion microprocessor chips (give or take). That’s well over one new microprocessor for every man, woman, and child living on Earth. Or if you prefer, that’s about 35 microprocessors for every U.S. resident, every year.

Who could possibly be consuming all these shiny new chips?

It’s probably you. The average middle-class Western household contains about 30 to 40 microprocessors, including chips in the television, VCR, microwave oven, thermostat, garage-door opener, each remote control, washer, dryer, sprinkler timer, and a dozen other everyday items. The average car has about a dozen microprocessors; a 2001 Mercedes-Benz S-Class has 65. You might be carrying or wearing a number of microprocessors in your cellular telephone, pager, personal digital assistant (PDA), digital watch, electronic organizer, or wireless e-mail gadget.

Do you have a personal computer? It probably has a dozen different microprocessors in it, in addition to the big one you see in the advertisements. There are separate microprocessor chips for the floppy disk drive, the CD-ROM or DVD-ROM drive, the network, the modem, the graphics, the keyboard, the mouse, the monitor, and still more features that are buried inside. Microprocessors are everywhere and their ubiquity has changed our world.

As the chart in Figure 6.1 shows, microprocessor sales grew pretty steadily all through the decade of the 1990s. (Again, only a small fraction of this was because of the growth of PCs and other personal computers.) As you can see, the vast majority of microprocessors sold are low-end 8-bit processors (more about the nn-bits terminology later). Annual sales of microprocessors surpassed the world population back around 1995. Ever since then we’ve been effectively doubling the population of the world every year in new microprocessor chips.

The average selling price for microprocessor chips is about $5. There are a lot of low-end chips in that volume to make up for the few very expensive microprocessors that go into PCs. The vast majority of microprocessor chips do not go into PCs; they’re used in everyday items and they are called embedded processors.

What Are Embedded Processors?

If you shout “microprocessor” in a crowded theater, most people will think of Pentium or of Intel, the company that manufactures the chip by that name. In fact, Intel and its Pentium processor line have become household names, an odd turn of events for such a technical product. Yet at no point in its history did Pentium ever have more than a 2 percent share of the microprocessor market. This is because only about 2 percent of the world’s microprocessors are used in PCs at all. Even if Intel (or any other company) cornered the market for microprocessors powering personal computers, it would still only have about 2 percent of total world microprocessor volume.

The lion’s share of the microprocessor market is called, oddly, the embedded market. Embedded in this sense means the chips are embedded into electronic products like an ancient insect embedded in amber. The metaphor is an odd one but unfortunately it has stuck. Engineers, managers, executives, and public relations professionals constantly have to explain, or apologize for, the “embedded” moniker.

It’s also frustrating that the 98 percent of the market that’s not involved in PCs should have to explain itself to the comparatively insignificant 2 percent that is. It’s a bit like labeling the majority of the population “people who aren’t astronauts.”

A somewhat more mellifluous description of embedded processors is “the invisible computer,” a term coined by Donald A. Norman in his 1998 book by the same name. As Norman pointed out, the embedded processors in our toys, games, and appliances have affected modern living conditions more than PCs.

What makes a microprocessor an embedded microprocessor? Nothing, other than the fact that it’s not driving a PC. There is nothing technical or objective that separates an embedded processor from a nonembedded processor (i.e., one that drives a computer). Embedded processors are not faster or slower, cheaper or more expensive, or bigger or smaller than other microprocessors. PCs and Macintoshes have more than one microprocessor inside. One is famous and expensive; the others are embedded processors.

If there’s nothing technically separating embedded and nonembedded (computer) processors, the difference is all a matter of context. Embedded describes how a chip is used, not the chip itself. Any microprocessor can be an embedded microprocessor if it’s used that way. A single chip could occupy both camps. When PCs built around Intel’s 386 processor were giving way to newer PCs with the 486 chip, many engineers put 386 chips into embedded systems, a case where the 386 was both an embedded processor and a computer processor.

Microprocessor History and Evolution

The first microprocessor chip is generally regarded to be the 4004, which Intel first sold to Busicom in February 1971 for use in the latter’s calculator. The 4004 was pitifully slow and simple by today’s standards: It had only 2,300 transistors and ran at a pedestrian 750 kHz (that’s 0.00075 GHz). Over the next several years, Intel followed the 4004 chip with the 8008, the 4040, the 8080, and then the 8086, which is still in use today (albeit heavily modified and updated). The 4004 microprocessor was simple enough that you can actually see the transistors and wires, and even a tiny logo, in the (enlarged) photograph shown in Figure 6.2.

At about the same time Intel’s 4004 chip debuted, Texas Instruments released its TMC1795 chip. It, too, led to other better chips throughout the early 1970s, but the family eventually died off. Rockwell, RCA, Data General, MOS Technology, Motorola, and other companies also entered the nascent microprocessor market during this period, although few of those companies are still active (or even in existence) today.

The first microprocessors were not created for computers. The very idea was ludicrous in the 1970s, like making rockets in your garage and selling them to NASA. Instead, the first microprocessors were intended for very humble tasks such as running early digital calculators. (Digital calculators existed before 1971, but they used many separate logic chips rather than a single programmable microprocessor chip.)

Around 1980, both Intel and Motorola radically redesigned their microprocessors and came up with the i432 chip and the 68000 chip, respectively. Motorola’s new processor was a success but Intel’s i432 failed miserably. Although it was a fine design from an engineering perspective, it wasn’t successful in the market. Intel quickly resurrected one of its older processors, the 8086, and freshened it up to create the 80186 and the 80286. Also about this time, a small IBM facility in Boca Raton, Florida, selected Intel’s 8088 processor (yet another variation on the 8086 chip) to use in its Model 5150 Personal Computer: the first IBM PC. That single decision eventually made Intel the most profitable semiconductor maker in the world as it rode wave after wave of personal computers patterned after IBM’s machines.

Motorola and Intel have extended, enhanced, and enlarged their two chip families many times over the years. Intel is still at it: The 386, 486, Pentium I, II, III, and 4 are all direct descendants of the original 4004 microprocessor, circa 1971. Motorola let its 68000 dynasty peter out in the mid-1990s, making another radical redesign to a new chip family called PowerPC.

The 1980s saw a number of old microprocessor companies drop out and new ones join in. National Semiconductor, Western Electric, Digital Equipment Corporation (DEC), Fairchild, Inmos, and others all joined the fray. This decade also saw the rise of the RISC design philosophy from the University of California at Berkeley and from Stanford University, located just across San Francisco Bay from each other. Berkeley’s RISC-I and RISC-II chips eventually metamorphosed into Sun Microsystems’s SPARC processors, which are still used today. Stanford’s MIPS project begat MIPS Computer Systems, which was later acquired by Silicon Graphics.

This period also saw the rise of x86 clone makers like AMD, Centaur, Cyrix, NexGen, Rise, Transmeta, UMC, and others too numerous to mention. Intel was enjoying such a bonanza as the PC market skyrocketed that it was inevitable the company would attract imitators. Throughout the late 1980s and early 1990s several companies did their level best to clone, or copy (legally or not), the Intel chips that were used in almost all PCs of the period. These were collectively called x86 processors because Intel’s model numbers all ended with the digits 86 (e.g., 8086, 80386, and 80486). Most of these x86 clones were quite good; some were even technically superior to Intel’s own processors. However, the clone makers never made a significant dent in Intel’s PC-processor monopoly (or, as the U.S. Federal Trade Commission called it in a 1999 consent decree, a “dominant market share,” narrowly avoiding the dreaded and legally binding M-word). Most of the clone makers eventually went out of business, unable to compete against Intel’s vast legal, financial, and marketing resources.

The later 1990s saw fewer new companies enter the microprocessor market and some old ones drop off. Cyrix, Centaur, and NexGen were absorbed by other companies, Rise and UMC exited the market, and Hewlett-Packard abandoned its own PA-RISC processor design and began collaborating with Intel on a project code-named Tahoe that would eventually become IA-64 (Itanium). Digital’s ultra-fast Alpha processors pegged out in 2001, after leading the performance race for nearly 10 years.

Strangely, nearly all the world’s significant microprocessors have come from American companies. Although the European and Japanese semiconductor companies were robust, healthy, and active, very few of them ever introduced a successful microprocessor. Some exceptions were the ARM processors (from England) and the V800 and SuperH families (from Japan). Despite the fact that England and Japan were both leaders in computer science and design, neither country made much of a dent in microprocessors.

What’s a Processor Architecture?

Architecture is the slightly pretentious word engineers use to describe how a microprocessor is designed. For simple semiconductor chips like AND gates or inverters, there aren’t many different ways to make one work. A brick is a brick is a brick. However, when you get to more complex and elaborate chips like microprocessors, there are thousands of different ways to design one, and the differences have a big effect on the chip’s performance, cost, and power usage. All bricks might be the same, but brick buildings can be firehouses, palaces, or skyscrapers. Hence, the microprocessor architecture describes these differences.

RISC and CISC Architecture

The two most common schools of processor architecture are called complex instruction-set computer (CISC) and reduced instruction-set computer (RISC). Like WWI and WWII, the first term didn’t become popular until the second term provided something to compare it to.

Neither RISC nor CISC is fundamentally faster or better than the other, all marketing claims to the contrary. RISC is the newer style of the two, but it has been much less successful in commercial terms. Until RISC came along, all microprocessor chips were CISC designs. Even today, CISC chips outsell RISC chips by a hefty margin. RISC is newer, flashier, and sexier (to an engineer, perhaps), but not necessarily better. (For reference, CISC is pronounced like the penultimate syllable in San Francisco; RISC is just what it looks like.)

Briefly, CISC chips include complex circuitry that allows them to execute complex instructions (hence the name), such as adding several numbers together at the same time. RISC chips, on the other hand, have a much simpler set of instructions. Anything that’s not absolutely necessary is jettisoned from the chip design, streamlining the chip and making it smaller, faster, and cheaper to manufacture (because it uses less silicon). The trade-off is that it takes several RISC instructions to do the same work as one CISC instruction, which offsets much of the advantage of the faster chip.

Microprocessor designers argue like cooks in a kitchen. You might design or remodel a kitchen to include every modern appliance and gadget known to humankind. The kitchen would be expensive and large, but no recipe would be beyond your ability. You could also go to the opposite extreme and design only the most rudimentary and spartan kitchen. You’d spend a lot less money on hardware and the kitchen would be smaller (and easier to clean). Theoretically, you could prepare the same dishes in both kitchens, but in the “reduced” kitchen you’d have to put in more manual labor (e.g., no automatic pureeing of egg whites) and the recipes in your cookbook would have to be longer and more detailed. Is one better than the other? That depends on your taste and personal style. RISC promotes simple hardware and detailed instructions; CISC endorses complex hardware and fewer instructions.

DSP Architecture

A third major category of microprocessor designs is called digital signal processor (DSP). Like RISC and CISC, DSP is a design style, a way of organizing a chip to efficiently accomplish certain tasks. In the case of DSP chips, those tasks generally involve handling audio, video, and wireless information.

DSPs are like other microprocessors in most respects, but they are more adept at certain complex mathematical equations. In particular, DSPs are good at compressing and decompressing digitized audio, which is useful in cellular telephones and other wireless products. They’re also good at compressing and decompressing video, such as for cable or satellite TV receivers. Finally, DSPs are good at converting these forms of digital information into a form suitable for high-speed transmission, which makes them good for cellular, terrestrial, and satellite communications.

Programming a DSP chip is a bit different from programming a RISC or CISC chip. DSP programmers tend to have a background in mathematics and understand arcane subjects like information theory, signal-to-noise ratios, and Shannon’s Law. DSP programmers and RISC/CISC programmers often find little in common to talk about, even at technical gatherings.

Superscalar Architecture

Superscalar is the microprocessor equivalent of having several cooks in the kitchen. A superscalar microprocessor can run two or more instructions at the same time, like two or more cooks working on different recipes. Just as in a crowded kitchen, there are often resource constraints or other conflicts that reduce efficiency. Two cooks do not necessarily work twice as fast as one.

Some superscalar microprocessors can handle two instructions at a time, some can handle five or six, and some can do even more. The more instructions a chip can handle (the more “massively superscalar,” in computer parlance), the less efficient the whole operation becomes. The point of diminishing returns generally comes after about six simultaneous instructions on one chip.

VLIW Architecture

A microprocessor technique that’s related to superscalar is called very long instruction word (VLIW), which doesn’t say very much about how it works. All VLIW processors are superscalar but not all superscalar processors use VLIW.

To reuse our cooks-and-kitchens analogy, a VLIW processor works by splitting the instructions from a recipe into perhaps six different subrecipes, one for each cook. Six different cooks would all read from their part of the same cookbook and cooperate on the recipe together. This would be a strange arrangement, but it might avoid some of the haphazard confusion of six different cooks all running in different directions with no cooperation. The writer of this six-part cookbook would, we hope, organize the cooking instructions to maximize cooperation and avoid six-way squabbles over the paring knife.

In theory, VLIW microprocessors avoid many of the diminishing-return problems that plague other superscalar microprocessors. However, they put a great burden on computer programmers to maximize efficiency. Unless the programmers can find ways to gracefully orchestrate multiple “cooks,” they’re no better off than before.

Microprocessor Anatomy and Gazetteer

Without becoming a computer scientist or microprocessor engineer, it’s useful to know some of the terms for various parts of a microprocessor chip. Don’t feel you need to memorize these; use them as reference if you get cornered at a party.

Figure 6.3 shows a simplified block diagram of a microprocessor. This could be anything from a simple 4-bit processor to a big 64-bit machine; the fundamentals are the same for all processor chips.

Decoder

Instructions come into the chip from the top of the diagram. Each microprocessor understands a different set of binary patterns called its instruction set. Like people, different processors speak different languages, and even though all languages express the same concepts and ideas, they use different words.

No matter what instruction set the microprocessor “speaks,” those instructions need to be decoded. The instruction decoder decides what the instruction is telling the microprocessor to do. It might be instructing the microprocessor to add two numbers together, subtract one number from another, compare two numbers to see which is larger, or any of a hundred or so other instructions. Most microprocessors understand 100 to 200 different instructions. Any unintelligible instructions are either ignored or cause the microprocessor chip to enter a failsafe mode until it’s given good instructions.

In our example microprocessor, the decoder decides that the current instruction should be handled by one of the three execution units. Some microprocessors have only one execution unit; others have a dozen or more.
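The decoding step can be sketched in a few lines of Python. This is only an illustration: the 8-bit instruction format, the three opcodes, and the unit names below are made up for the example and do not correspond to any real microprocessor.

```python
# Hypothetical instruction format (an assumption for illustration only):
# the high 4 bits select the operation, the low 4 bits carry a small operand.
OPCODES = {
    0x1: ("ADD", "arithmetic unit"),
    0x2: ("SUB", "arithmetic unit"),
    0x3: ("CMP", "comparison unit"),
}


def decode(instruction):
    """Split one 8-bit instruction into (name, execution unit, operand)."""
    opcode = (instruction >> 4) & 0xF   # top four bits
    operand = instruction & 0xF         # bottom four bits
    if opcode not in OPCODES:
        # Unintelligible bit pattern: flag it rather than guess.
        return ("INVALID", None, operand)
    name, unit = OPCODES[opcode]
    return (name, unit, operand)


print(decode(0x15))  # ('ADD', 'arithmetic unit', 5)
print(decode(0xF0))  # ('INVALID', None, 0)
```

A real decoder is built from logic gates rather than a lookup table, but the job is the same: turn a bit pattern into a decision about which part of the chip does the work.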

Execution Unit

Execution unit is a grisly-sounding term that simply means that all the instructions are executed, or carried out, in this part of the processor. It’s the part of the chip where all the heavy lifting takes place. There might be more than one execution unit in a microprocessor chip (our example in Figure 6.3 has three). Having more execution units is like adding more hamsters to the wheel: It goes faster, but you also have to feed it more (instructions) to make it go.

If there are multiple execution units in a microprocessor, they’re probably designed to specialize in certain instructions. One execution unit might be built to perform arithmetic (adding, subtracting, multiplying, and dividing numbers) whereas another makes logical comparisons (is this number bigger than that number?).

Some execution units are big and some are small because some instructions are harder to execute than others. Multiplication, for example, is harder than addition (just like it is for people), so the multiplier unit will have more transistors than the adder unit. It will take up more space on the microprocessor’s silicon chip and use more electricity when it runs. In the early days, engineers could actually get a microprocessor chip to melt by giving it just the “right” combination of math problems to figure out.

Floating-Point Unit

A floating-point unit (FPU) is just one of many different kinds of execution units. Microprocessors have no trouble working with numbers, as long as they’re whole numbers. Like a typical third-grade student, microprocessors get a headache from fractions.

Numbers with fractions or decimal values are called floating-point numbers. Don’t worry about where this term came from; just treat it as nerd-speak for fractions. Fractions are so hard for microprocessors to deal with that some chips don’t do it at all. They completely avoid fractions or decimal values and treat everything as an integer (a counting number with no fractions). Unfortunately for computer programmers, the world is full of fractions, so this presents a problem.

One approach is to round all numbers off and drop the fractions. A few bank robbery movies have been based on this premise. Another approach is to multiply all numbers by, say, 1 million, and shift the decimal point to the right six places. The third, and best, approach is to use a chip that can actually handle floating-point numbers.
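The second approach, scaling everything by 1 million so that only integers are involved, might look something like the following sketch. The function names and the sample prices are invented for the example; the scale factor of six decimal places follows the text above.

```python
from decimal import Decimal

SCALE = 1_000_000  # shift the decimal point six places to the right


def to_fixed(text):
    """Convert a decimal string such as '19.99' into a scaled integer."""
    return int(Decimal(text) * SCALE)


def fixed_to_text(value):
    """Convert a scaled integer back into a readable decimal string."""
    sign = "-" if value < 0 else ""
    whole, fraction = divmod(abs(value), SCALE)
    return f"{sign}{whole}.{fraction:06d}"


price = to_fixed("19.99")   # stored as the integer 19990000
tax = to_fixed("1.65")      # stored as the integer 1650000
total = price + tax         # ordinary integer addition, no fractions involved

print(fixed_to_text(total))  # 21.640000
```

The price of this trick is that the programmer, not the chip, has to remember where the decimal point really belongs.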

An FPU takes up a large amount of silicon space on the chip because it requires a lot of electronic circuitry. It’s also often the slowest part of the chip, sometimes to the point of slowing down the rest of the microprocessor. As in a typical adventure story, the egghead in the white lab coat is often the slowest person in the party. Finally, FPUs are complicated to design and complex to manufacture. This makes them error-prone, as Intel discovered to its everlasting misery when its famous Pentium processor was discovered to have a bug in its FPU.

Registers

Each microprocessor contains little cubbyholes for it to store numbers as it works on them. These are called registers (after the electrical circuit used to build them) and there might be anywhere from 4 to 500 registers, depending on the particular microprocessor. Registers are small and they take up almost no space on the microprocessor’s silicon chip.

Execution units take data from the registers to do their work. When they’re done, the execution units store their results back into the registers. Registers are like a busy executive’s desk: a place to store a few often-needed items close at hand. Like desk space, the number of registers is limited, so computer programmers prefer microprocessors with lots of registers. It’s like having a big desk to work on.

Cache

A secret cache helped Robinson Crusoe through lean times, and it can do the same for a microprocessor. A cache is a memory, pure and simple, but a memory that’s built onto the microprocessor chip instead of being outside in another chip. Caches speed up the processor by keeping often-used bits of data close by. If registers are the programmer’s desktop, caches are the programmer’s file drawer: close, but not quite as close as the registers. Without a cache (or sometimes even multiple caches), the microprocessor might have to go looking for data it needs, slowing down the program.

Caches started appearing on microprocessor chips in the late 1980s when engineers noticed that microprocessor chips were speeding up faster than memory chips were. (This is still going on, and the gap is getting wider.) Making the microprocessor wait for comparatively slow memory chips is like making a highly paid chef wait around for the grocery-delivery boy to show up on his bicycle.

In the kitchen we alleviate this problem by keeping a cache, or store, of food in the cupboards and refrigerator. Occasional trips to the grocery store replenish this local cache. Without them, we’d be making trips to the grocery store (or worse, the farm) for every meal. Caches work by keeping some data physically close to the processor’s execution units so it doesn’t have to go “shopping” for data quite so often.
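The effect is easy to see in a toy simulation. The sketch below is a deliberate simplification: the class names, the four-entry cache size, and the rule for discarding the oldest entry when the cache fills are all assumptions made for the example, and real caches use more sophisticated schemes.

```python
class SlowMemory:
    """Stands in for off-chip memory and counts every trip made to it."""

    def __init__(self, contents):
        self.contents = contents
        self.trips = 0

    def read(self, address):
        self.trips += 1
        return self.contents[address]


class CachedProcessor:
    """Keeps recently fetched data close by so repeat requests stay local."""

    def __init__(self, memory, cache_size=4):
        self.memory = memory
        self.cache_size = cache_size
        self.cache = {}  # address -> data; dicts remember insertion order

    def read(self, address):
        if address in self.cache:
            return self.cache[address]      # hit: no trip to memory
        data = self.memory.read(address)    # miss: go "shopping"
        if len(self.cache) >= self.cache_size:
            oldest = next(iter(self.cache))  # simplest policy: drop the oldest
            del self.cache[oldest]
        self.cache[address] = data
        return data


memory = SlowMemory({address: address * 10 for address in range(100)})
cpu = CachedProcessor(memory)
for address in [1, 2, 1, 1, 2, 3, 1]:
    cpu.read(address)

print(memory.trips)  # 3
```

Seven requests cost only three trips to the slow memory, because four of them were for data already in the cupboard.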

There’s some serious magic involved in designing caches so they hold the most useful data. A cache isn’t big enough to hold everything, so it’s important to make the best use of the limited space. That sort of design magic is beyond our scope here, but it’s an area of microprocessor design that’s closely watched by insiders.

Bus

Sometimes an execution unit won’t store the result of a calculation in a register; instead, it will be instructed to send the result to another chip. In that case, the data will be sent off the microprocessor over a set of wires called the data bus. In our example microprocessor, the data bus is at the bottom of Figure 6.3, on the far side of the data cache. This means that any time our microprocessor sends data to another chip over the data bus, it also stores a copy of that data in its local data cache. If our processor ever needs to refer to that data again, it’ll have a local copy close at hand. It’s much faster to refer to the cache than to request the data from another chip.

The data bus connects the microprocessor chip to all the other chips that need to share data with it. At a minimum, that would include a few memory chips and maybe a communications chip or a graphics chip. The microprocessor might also be connected to other microprocessors so they can share the workload. There could be several chips attached to the same set of bus wires, or only a few.

Because these chips all need to communicate with each other over the same set of wires, some standards need to be established. Like cars sharing the same streets, there need to be some rules of the road that all participants can agree to. In the electronics business, there are several such bus standards, each one incompatible with the others. Engineers therefore need to choose their chips carefully, making sure that all the chips are designed to adhere to the same bus standards. Otherwise, they won’t be able to communicate with each other.

Tech Talk

One Little, Two Little Endians

Suppose someone wrote a very big number on a piece of paper to mail it to you. However, the paper is too big to fit in an envelope, so your friend cuts the paper in half and sends the pieces in two different envelopes. When the two envelopes arrive, how do you know which way the papers go back together? If you tape them together backwards, you’ll get the wrong number.

Computers solve this problem by sending large numbers either “big end first” or “little end first.” Big end first, or big-endian, means the first envelope to arrive holds the big end of the number (first or leftmost part) and that subsequent parts should be pasted on after (to the right of) that one. Little-endian computers work just the opposite way.
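Python’s built-in integer methods make the two orderings easy to see. In this sketch the sample number is arbitrary; `to_bytes` and `from_bytes` are standard methods on Python integers.

```python
number = 0x12345678  # an arbitrary four-byte number

# Split the number into four "envelopes" (bytes) in each order.
big = number.to_bytes(4, byteorder="big")
little = number.to_bytes(4, byteorder="little")

print(big.hex())     # 12345678  (big end arrives first)
print(little.hex())  # 78563412  (little end arrives first)

# Taping the pieces back together the wrong way round gives the wrong number.
wrong = int.from_bytes(big, byteorder="little")
print(hex(wrong))    # 0x78563412
```

Both sides get the right answer only if they agree beforehand on which end comes first.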

There’s no advantage or disadvantage to either method. Unfortunately, most microprocessors (and therefore, most computers) are permanently fixed one way or the other. One of the original challenges of creating the Internet was getting different computers to exchange data without endian problems.

Before you ask, the words for big- and little-endian come from Gulliver’s Travels, the book by Jonathan Swift in which the fictional hero encounters the folk of Lilliput, who are warring over whether to break open their eggs from the big end or the little end.

Pipeline

Although Figure 6.3 doesn’t explicitly show it, every microprocessor has a pipeline. Each individual step that a microprocessor performs, such as decoding, accessing registers, and executing instructions, is one stage of the pipeline. Three stages make a very simple pipeline. Complex microprocessors have pipelines that are more than a dozen stages long. Longer pipelines allow a microprocessor to break up its work into smaller stages. The smaller stages, in turn, allow the microprocessor to run faster.

Pipelines are like assembly lines. If it takes four hours to assemble a television, and the assembly line is broken up into four one-hour tasks, then four different workers can be working on four different TVs at once. A new TV rolls off the assembly line every hour, even though it still takes four hours to make each TV. In the same way, a microprocessor with a four-stage pipeline will have four different instructions in various stages of completion at once. The chip only finishes one instruction at a time, but the next three instructions will be ready right behind it.
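The assembly-line arithmetic can be checked with a short calculation. This sketch assumes an idealized pipeline in which every stage takes the same time and nothing ever stalls; the function names are invented for the example.

```python
def pipelined_hours(items, stages, hours_per_stage=1):
    """Hours to finish `items` when each stage works on a different item."""
    if items == 0:
        return 0
    # The first item needs every stage; each later item finishes one stage-time
    # after the one ahead of it.
    return (stages + items - 1) * hours_per_stage


def one_at_a_time_hours(items, stages, hours_per_stage=1):
    """Hours to finish `items` when each is fully built before the next starts."""
    return items * stages * hours_per_stage


print(pipelined_hours(1, 4))       # 4   (one TV still takes four hours)
print(pipelined_hours(10, 4))      # 13  (ten TVs on the four-stage line)
print(one_at_a_time_hours(10, 4))  # 40  (ten TVs built one after another)
```

The time to build any single TV never changes; what improves is how many TVs are finished per hour once the line is full.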

What Do 4-Bit, 8-Bit, 16-Bit, and 32-Bit Mean?

The “bittedness” of a microprocessor tells you how fast and powerful it is in very broad terms. If you’re a car buff, the number of bits is like the number of cylinders in the engine. A 4-bit processor is the very low end and 64-bit processors (for today, at least) are at the very high end. Most microprocessor chips fall somewhere in between. A PC or Macintosh has a 32-bit microprocessor; microwave ovens generally have an 8-bit processor, and a TV remote control is likely to use a 4-bit processor. The average late-model car includes about a dozen 16-bit microprocessors spread throughout the engine, the transmission, and the dashboard.

More technically, these numbers describe the size, or width, of the processor’s data bus. A 16-bit processor can digest 16 bits of data at once. (For more background on data bits, bytes, and related buzzwords, turn to Chapter 9, “Theory.”) Having a wider data bus (e.g., 16 bits compared to 8 bits) is like drinking through a wider straw: You can get more through in the same amount of time.

In microprocessors, as in engines, the number of bits (or engine cylinders) doesn’t tell the whole story; it only gives a rough idea of performance. A 32-bit processor doesn’t necessarily solve complex equations faster than a 16-bit processor. The only thing for sure is that bigger numbers make for better advertising, for cars as well as microprocessor chips. When Sony introduced its PlayStation 2 video game console, the company trumpeted the console’s 128-bit processor. Few potential customers had any idea what that meant, and the claim was only marginally true, but it sounded good in the ads.

Figure 6.4 illustrates the difference between an 8-bit processor and a 16-bit processor. As you can see, the 8-bit processor has eight wires that bring it data from (and send data to) other chips, like eight fire hoses pumping water from a fire truck. The 16-bit processor has twice as many wires so it can move twice as much data in the same amount of time.

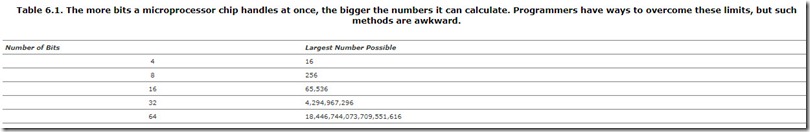

Twice as many wires not only means faster data, it means bigger data. A 16-bit processor can crunch bigger numbers than an 8-bit processor. Imagine you had a calculator that was so small it could show only four digits on the screen. You couldn’t work with any numbers bigger than 9,999, so you’d have to be careful not to add too many numbers together. A calculator with a bigger display is more useful because you aren’t so limited. By the same token, 4-bit processors are limited to working only with small numbers, lest they “overflow” and cut off part of the answer. Table 6.1 shows what the limits are for 4-bit, 8-bit, 16-bit, 32-bit, and 64-bit processors. The bigger processors have much more headroom, making them useful for a bigger class of computing tasks.

Some processors can also “split their bits.” If a 16-bit processor doesn’t need to calculate numbers as big as 65,535 it might be able to do two 8-bit calculations at once, as long as neither one generates an answer bigger than 255. This double-barreled approach allows the chip to do twice as much work in the same amount of time. For 32-bit processors, and especially 64-bit processors, this is actually very common. Even your neighborhood nuclear physicist hardly ever needs 64-bit arithmetic; few quantities call for counting past 18 quintillion. Today’s 64-bit microprocessors are more often used for doing four 16-bit calculations (or eight 8-bit calculations, or two 32-bit calculations) at once.
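
To make the bit-splitting idea concrete, here is a small Python sketch that packs two 8-bit numbers into one 16-bit word and adds both pairs with a single addition. The helper names are invented for this example, and it models the simplest possible case: an ordinary 16-bit add with no special hardware support. Real processors that split their bits typically block the carry between the two halves; this sketch does not, which is exactly why the answers have to stay small.

```python
def pack(high, low):
    """Pack two 8-bit values into one 16-bit word."""
    assert 0 <= high <= 0xFF and 0 <= low <= 0xFF
    return (high << 8) | low


def unpack(word):
    """Split a 16-bit word back into its two 8-bit halves."""
    return (word >> 8) & 0xFF, word & 0xFF


def add_16bit(a, b):
    """One ordinary 16-bit addition, wrapping around as 16-bit hardware would."""
    return (a + b) & 0xFFFF


# 10 + 20 and 100 + 50, both computed by a single 16-bit addition.
fine = unpack(add_16bit(pack(10, 100), pack(20, 50)))
print(fine)      # (30, 150)

# 200 + 100 = 300 does not fit in 8 bits, so the carry spills into the
# neighboring half and spoils both answers.
spoiled = unpack(add_16bit(pack(1, 200), pack(1, 100)))
print(spoiled)   # (3, 44) instead of (2, 300)
```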

Tech Talk

If you’re mathematically inclined, you might have noticed the pattern in Table 6.1. The number on the right is 2 raised to the power of the number on the left. So, a 4-bit processor can handle 2⁴, or 16, different values; 2⁸ equals 256; and so on. The reasons for this are detailed in Chapter 9, “Theory,” but it has to do with the fact that computers use binary, or base 2, arithmetic.
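
The powers-of-two pattern is easy to check for yourself. The short Python sketch below (the function names are ours, not part of any standard) prints how many different values fit in a given number of bits, along with the largest one when counting starts at zero.

```python
def distinct_values(bits):
    """How many different values a field of this many bits can hold."""
    return 2 ** bits


def largest_unsigned(bits):
    """The largest value, counting up from zero."""
    return 2 ** bits - 1


for bits in (4, 8, 16, 32, 64):
    print(f"{bits:>2}-bit: {distinct_values(bits):,} values, "
          f"largest is {largest_unsigned(bits):,}")
```

Because counting starts at zero, the largest number is always one less than the number of values: an 8-bit processor has 256 values to work with, and the biggest of them is 255.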

Performance, Benchmarks, and Gigahertz

It’s said in the computer world that there are lies, damn lies, and benchmarks. As with the original quip about statistics that Mark Twain made famous, the numbers don’t tell the whole story, or even the half of it. Measuring the speed of a microprocessor chip might seem to be a simple, straightforward task, but it’s anything but.

As an example of the problem, try defining the world’s best car. Do you measure top speed? Trunk space? Fuel efficiency? Price? Resale value? You could combine and weight all these factors, but other people will inevitably argue that you’ve given too much importance to speed and not enough to passenger comfort, for example. You can’t please everyone with a single score. This is the problem with measuring microprocessor chips (and computers). Everyone would like to see a single score for microprocessor “goodness,” but nobody agrees on what that should measure.

Tech Talk

Megahertz, Gigahertz, and Beyond

The speed of microprocessors (or any type of digital chip) is often measured in hertz. One hertz means one “change per second.” If you flip a coin every second, that coin is changing at the rate of 1 hertz. If you can somehow do that trick 1,000 times per second you’d be flipping a coin at 1,000 hertz, or 1 kilohertz (kHz). One million hertz is a megahertz (MHz) and 1 billion hertz is a gigahertz (GHz).

Inexpensive microprocessors might run at 20 MHz or so; more expensive processors for PCs run at more than 1 GHz. The unit of measurement is named after Heinrich Hertz, a German scientist who first demonstrated and measured radio waves back in the late 1880s.

There is no theoretical limit to how high these chip frequencies can go; they’ve been creeping up year after year with advances in silicon manufacturing technology. If you’re a radio operator, you might recognize some of these frequencies from the radio spectrum. Today’s microprocessors do indeed broadcast tiny radio signals in the megahertz and gigahertz bands, which is why computers are shielded in metal boxes to minimize interference with TVs and radios.

The easiest thing to measure is the clock speed of a microprocessor, also called its frequency. A chip might run at 5 MHz, 500 MHz, or 5,000 MHz, but at least it’s a verifiable number. Unfortunately, it’s also pretty meaningless. The clock speed of a microprocessor is like the revolutions per minute (RPM) of a car’s engine; it makes a nice “zoom, zoom” noise when you rev it up, but it doesn’t tell you anything about real horsepower. Big frequency numbers add sizzle to advertisements and marketing literature, but they’re not the steak.

To try to bring order to this chaos, a number of people have created special computer programs called benchmarks. Benchmarks instruct a microprocessor to perform repetitive tasks and then measure the time it took to finish them. They are the microprocessor equivalent of drag racing. (The word benchmark comes from surveying, where it means a mark cut into stone as a fixed reference point for measuring elevation.)

Benchmarks are fine, as far as they go, but people often read too much into them. Like the miles per gallon (MPG) sticker on a car, benchmarks are only an estimate. Your mileage may vary. Even if the estimate is correct, one number can’t tell you very much about the whole product.

Tech Talk

Measuring Dhrystone MIPS

In the late 1970s, DEC made a computer called the VAX 11/780, which was billed as the first computer to perform 1 million instructions per second. (This turned out to be untrue, but that’s another story.) It wasn’t long before other computer companies wanted to prove their products were as fast as the VAX.

They looked for a program that was easy to translate for different types of computers, and Dhrystone was selected. The VAX happened to run the Dhrystone program 1,757 times per second. This set the bar. Now any computer that could do more than 1,757 runs per second was judged to be faster than a VAX.

Bizarrely, the magic number of 1,757 Dhrystone runs per second began to be used as the definition of 1 MIPS. Whether a computer was really performing 1 million instructions per second (1 MIPS) or not, the Dhrystone score was used as proof. Even computers that were faster than 1 MIPS were downgraded to 1 MIPS based on their Dhrystone performance. The same scale is still used today.
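
The conversion described here is nothing more than a division. As a minimal sketch (the function name is ours, and the only fact taken from the text is the VAX score of 1,757):

```python
VAX_DHRYSTONES_PER_SECOND = 1757  # the VAX 11/780 score quoted above


def dhrystone_mips(dhrystones_per_second):
    """Convert a raw Dhrystone score into so-called Dhrystone MIPS."""
    return dhrystones_per_second / VAX_DHRYSTONES_PER_SECOND


print(dhrystone_mips(1757))     # 1.0, by definition
print(dhrystone_mips(175700))   # 100.0
```

Notice that the calculation never counts instructions at all. A chip that scores 175,700 Dhrystone runs per second is rated at 100 MIPS regardless of how many instructions it actually executed to get there.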

Misleading MIPS

If you’re optimistic, MIPS stands for “millions of instructions per second.” If you’re cynical, it’s “meaningless indicator of performance for salesmen.” The MIPS number was for a long time the accepted way to measure a microprocessor’s performance. Over time it has slowly outlived its usefulness, and it certainly does not measure anything useful anymore.

Early computer engineers naturally liked to compare their latest computers with their rivals’ machines. To do this, they’d count the number of instructions each computer could execute per second. The more instructions per second, the faster the computer. In the 1970s, performing at 1 MIPS was a big achievement, like breaking the sound barrier.

Today’s microprocessors in PCs and games can easily polish off hundreds of millions of instructions per second, and for less money than those early computers. However, they aren’t the same kind of instructions, so comparing today’s computers to yesterday’s isn’t really fair. It’s like comparing a modern Formula 1 auto race to a historic bicycle race. Of course the modern racers go around the track faster, but what does that prove?

By the same token, it’s not relevant, or fair, to compare the MIPS ratings of different microprocessors. All microprocessors execute different kinds of instructions (called their instruction set), and they’re not directly comparable. Person A might be able to read Japanese faster than Person B can read French, but that doesn’t tell us anything useful about either one of them.

Tech Talk

How to Pronounce—and Spell—MIPS

If you really want to impress your colleagues, be sure you always pronounce and spell MIPS with the capital S on the end. Don’t get caught saying “one MIP” because there’s no such thing. It’s always “one MIPS” because the final S stands for “second,” sort of like the H in miles per hour (MPH). Even though it sounds funny, “one MIPS” is correct.

The Mythical MIPS-per-MHz Ratio

One favorite trick of chip makers is to brag about the “efficiency” of their processors with a MIPS-per-MHz ratio. Obviously this number is derived by taking the number of MIPS (which might be suspect, as described earlier) and dividing by the chip’s clock speed measured in megahertz. The larger this number is, the more “efficient” the chip is judged to be.

There are a few problems with this approach. First, nobody cares. Of what value is it to a consumer to know that one chip executes more instructions (whatever those are) per tick of its clock? Isn’t the end result what matters? Multiplying the two numbers (MIPS times megahertz) might make sense. Dividing one by the other is pointless.

Second, there’s the squishy definition of MIPS itself. It might measure how many millions of instructions the chip executes per second, but probably not. More likely it’s based on Dhrystone, or another benchmark, or drawn from a hat containing competitors’ scores. Even if the true MIPS number is used, that’s not a useful indication of work, efficiency, or forward progress.

Finally, the ratio is self-defining; it’s a circular reference. Chip makers often define the MIPS rating for their microprocessor by multiplying its frequency (megahertz) by some secret number, essentially reversing the MIPS/MHz equation. With only two numbers, and one of them suspect, the MIPS/MHz ratio is really the “megahertz multiplied by some number we made up in the marketing department” ratio.
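
The circularity is easy to see once it is written down. In the Python sketch below, `marketing_mips` is a hypothetical stand-in for the way a chip maker might derive its MIPS rating from clock speed, and the 400 MHz and 1.5 figures are invented for illustration.

```python
def mips_per_mhz(mips, mhz):
    """The advertised 'efficiency' ratio."""
    return mips / mhz


def marketing_mips(mhz, multiplier):
    """Hypothetical: a MIPS rating defined as clock speed times a chosen number."""
    return mhz * multiplier


rating = marketing_mips(400, 1.5)   # a 400 MHz chip is declared to be 600 MIPS
print(rating)                       # 600.0
print(mips_per_mhz(rating, 400))    # 1.5, the same number that went in
```

When the MIPS figure is produced this way, the ratio measures nothing about the chip. It simply hands back whatever multiplier was chosen in the first place.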

Power Consumption and MIPS/Watt

Lately it’s become fashionable to design microprocessor chips so that they use as little electricity as possible. For battery-operated devices like laptop computers, the motivation for this is obvious. The longer the battery lasts, the happier the customer is. Lower power consumption also means less heat, and sometimes heat is a bigger problem than battery life. Again, customers won’t be happy with a laptop computer that gets too hot to touch.

For these reasons and others, engineers have made rapid progress toward reducing the power consumption of high-end microprocessors to manageable levels. It naturally follows, then, that these same chip makers want to trumpet their advancements to their customers and the press. Alas, measuring power consumption is fraught with as many difficulties as measuring performance. The seemingly straightforward task of measuring electrical consumption has become a battlefield among warring microprocessor companies.

The most common measure of power efficiency is the MIPS-per-watt ratio. As we just saw with MIPS-per-MHz, the first of these two numbers is unreliable to begin with. Sadly, the second number can be just as erratic. Measuring the power usage of a processor isn’t easy because it changes all the time. The amount of electricity the chip uses depends on what it’s doing. It’s easy to invent a situation where the processor is relatively idle—vacationing, if you will—and consuming little energy. It’s also easy to concoct situations where the processor is fantastically busy and consuming far more electricity. This shouldn’t be surprising. Your TV uses more power when it’s turned on than when it’s turned off. Which condition do you measure?

That depends on whether it’s turned on or turned off most of the time. (Let’s hope it’s the latter.) It also depends on whether you want to know overall power consumption (e.g., over the duration of a month) or instantaneous power consumption (e.g., for a period of 10 seconds). In the same way, a processor’s power consumption can be dialed in to nearly any number that suits its promoters.

So we’re left with one suspect number (MIPS) divided by another suspect number (watts of power) to produce a monumentally suspect number. Bearing, as it does, no relation to anything tangible or useful, chip makers might as well be measuring furlongs per fortnight.
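
A little arithmetic shows how far the MIPS-per-watt number can be stretched. The figures below describe a made-up chip (1,000 MIPS, 20 watts when busy, 2 watts when idle), and the function names are ours; the point is only that the same chip yields very different ratios depending on which power figure is chosen.

```python
def average_watts(busy_watts, idle_watts, busy_fraction):
    """Average power for a chip that is busy for the given fraction of the time."""
    return busy_watts * busy_fraction + idle_watts * (1.0 - busy_fraction)


def mips_per_watt(mips, watts):
    return mips / watts


# Hypothetical chip: 1,000 MIPS, 20 W flat out, 2 W idle.
flat_out = mips_per_watt(1000, average_watts(20, 2, 1.0))
half_idle = mips_per_watt(1000, average_watts(20, 2, 0.5))
mostly_idle = mips_per_watt(1000, average_watts(20, 2, 0.1))

print(flat_out)      # measured while fully busy
print(half_idle)     # measured over a period that is half idle
print(mostly_idle)   # measured over a period that is mostly idle
```

Quoting peak MIPS against mostly idle power makes this imaginary chip look more than five times as efficient as quoting peak MIPS against peak power, and neither figure is strictly a lie.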

What Is Software?

Software, or programming, is the essence of what makes microprocessors interesting. Software is a made-up word meant to indicate it’s somehow the opposite of hardware. Everybody knows what hardware is: It’s the nuts and bolts of a computer, television, or little red wagon. In semiconductor terms, hardware is silicon, plastic, and other things you can touch. Software, on the other hand, is like the music on a CD or the programming on your television. It might not be physical but it’s definitely real.

The TV set, the CD player, the cookbook, and the leaflet printed in 12 languages (none of them your own) are just examples of how the software is delivered. For microprocessor chips, software usually comes on floppy disks, CD-ROMs, over the airwaves (like TV and radio), over the Internet, or permanently stored in a ROM chip. Regardless of the form, software is the programming for microprocessors, just like TV shows are the programming for TVs.

Like TV shows, programs have to be created by someone. Usually a team of programmers works together. Some programming teams even have directors and producers, just like studio productions on a movie lot. Other times a single programmer works alone, like an independent film auteur. Like filmmaking, photography, or recording music, some people write programs as a paying profession, whereas others do it in their spare time just because they love it.

Tech Talk

You can call programs software, but saying “software program” is redundant. Creating software is called programming, but programmers sometimes call themselves software engineers. Programmers (or software engineers) say they spend the day “writing code” or simply “coding.” At the end of a day of coding, a programmer might have written a certain number of “lines of code,” meaning a certain number of instructions to the microprocessor.

In a related vein, there’s also “firmware.” Firmware is software stored in a chip (which is hardware), blurring the distinction between hardware and software. Finally, “wetware” is simply the human brain.

One difference between programs and movies is that movies can be played on any TV (or cinema screen) around the world; this is not so for programs. Computer programs are always created for a specific type of computer. Programs written for an Apple Macintosh, for example, don’t work on a PC running Microsoft Windows. A programmer has to decide early on whether the program should work on a PC, a Macintosh, a UNIX workstation, a Palm Pilot, a Nintendo video game, an antilock brake system, a cellular telephone, or any of a thousand other platforms for running software. You can play music CDs on any CD player, but you can only “play” computer programs on the right kind of computer.

What’s the reason for all this incompatibility? The world might be a better place if programs could all work on any microprocessor, but that’s not the way it is. Each different microprocessor chip is designed to understand a different set of instructions (called, obviously enough, its instruction set). Like speaking Russian or Swahili, different chips simply speak different languages, and none of them are bilingual. Programs created for one type of microprocessor chip are interpreted as gibberish by any other type of microprocessor chip.
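
A toy example shows why one chip’s program is another chip’s gibberish. The two instruction sets below are entirely invented and vastly simpler than anything real, but they capture the essential problem: the same numbers stand for different operations on different chips.

```python
# Two invented instruction sets that give the same numbers different meanings.
CHIP_A = {1: "LOAD", 2: "ADD", 3: "STORE"}
CHIP_B = {1: "JUMP", 2: "STORE", 3: "SUBTRACT"}


def decode(program, instruction_set):
    """Translate a list of instruction numbers into the operations a chip would perform."""
    return [instruction_set.get(number, "???") for number in program]


program_for_a = [1, 2, 3]   # written with chip A in mind

print(decode(program_for_a, CHIP_A))   # ['LOAD', 'ADD', 'STORE']
print(decode(program_for_a, CHIP_B))   # ['JUMP', 'STORE', 'SUBTRACT']
```

Chip B doesn’t reject the program. It cheerfully does something, just not what the programmer intended.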

There are families of chips that all understand the same language and run the same programs. Chips in the Pentium family, for example, all run the same programs. That’s why old and new PCs can run the same PC programs. Microprocessors from AMD’s Athlon and Opteron product lines understand the same instruction set as the Pentium family (known in the industry as x86), so they can also run PC programs. However, chips from the SPARC family (to pick just one example) cannot run PC programs because SPARC microprocessors have a different instruction set from Pentium-family microprocessors.

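If a team of programmers wants their program to work both on Pentium-family processors and SPARC-family processors, they have to either write the program twice or write it once in a higher level programming language and translate it into each instruction set using an automated tool called a compiler. When the compiler runs on one kind of computer but produces programs for another, it’s called a cross-compiler.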

Choosing Microprocessors

The market for microprocessors is enormously competitive. Well over 100 different 32-bit microprocessors are available, with even more 16-bit, 8-bit, and 4-bit chips competing for the low end. In all, about 1,000 different processors are competing over millions of different systems, from thermostats to thermonuclear weapons.

Paradoxically, this most technical of products competes on very nontechnical grounds. At the high end of the market, perception and advertising have a big influence on buying decisions and market success. The ultimate example is PC processors: Television advertisements urge (presumably) nontechnical viewers to buy a product they don’t understand. The ads don’t contain even a hint of technical material. They’re all about image and brand-name recognition. When information fails, image prevails.

The 32-bit embedded microprocessor market is only a little less image-conscious. Processor families like MIPS and ARM are successful because they’re perceived as safe choices, used and adopted by dozens of clients with no apparent complaints. Even though many other 32-bit alternatives exist, some of which might even be technically superior, their advantages aren’t always compelling enough to break through the image of the market leaders.

Software Compatibility

Most of all, the microprocessor business is determined by software. Most buyers choose a microprocessor not by the merits of its hardware, but by the advantages of its software. Because every microprocessor family has its own special instruction set, and programs written for one microprocessor family cannot run on another, it follows that choosing a microprocessor also means choosing the software that’s available for it. For most buyers, that’s putting the cart before the horse. They’d rather decide what software they want, and then choose a microprocessor that works with that software.

Choosing a microprocessor is a bit like choosing a new language to study. Any new language requires about the same amount of effort to master, so you generally choose one that’s widely used (Spanish, French, or Mandarin), one that’s related to your area of interest (Italian for singers), or one that’s locally popular (German in Milwaukee or Vietnamese in San Jose). The choice has nothing to do with the expressive power of the language or its other qualities; it has more to do with its place in the outside world. Similarly, buyers choose microprocessors that are in wide use, suited to their applications, or entrenched within their company or industry. This has little to do with bittedness, architecture, or any other intrinsic qualities of the hardware.

The downside of this herd instinct is that it makes it harder to differentiate a new product. If you’ve chosen a particular microprocessor because others in your industry have done so, you’re no different from your competitors. You’ve gained a software foundation but lost a hardware advantage. In the worst case, you differentiate your product by the color of its plastic (literally true in some cases). More optimistically, you can create new software for the product that’s original. The additional software can add features your competitors don’t have, masking the underlying similarities in the hardware.

Microprocessor Future Trends

There’s no question that microprocessors will continue to grow more complex over time. We’re nowhere near reaching any natural limit to microprocessor growth. Where once it seemed silly to design a computer-on-a-chip—after all, how many computers does the world need—now it seems just as absurd to stop.

The actual size of microprocessor chips is growing only slowly, but the complexity within them is growing much faster because semiconductor technology permits engineers to squeeze more transistors into less space. Microprocessors with 1 million transistors were surpassed by 10-million-transistor processors after only a few years. Microprocessors with 100 million transistors weren’t far behind. This trend continues with no end in sight.

The bigger question is this: How will engineers fill the space? How will they spend their 500-million-transistor budgets? The answer has historically come from above, from mainframe systems and supercomputers. For decades, microprocessors shadowed the developments of high-end computers, first lagging by several generations but now lagging the high-end machines by only a few years. Microprocessors have been nipping at the heels of the computer makers for years, which is why so many big computer companies went out of business in the 1990s. Rather than fight the trend, some computer makers jumped on the bandwagon and designed their machines around commercial microprocessors, but then had a hard time differentiating their products from those of other companies with the same strategy and access to the same chips.

Future trends point toward cooperation. Even though microprocessors get about 30 percent faster every year, they’re not doing that much more useful work. Instead of performing single tasks even faster, future chips are likely to perform more simultaneous tasks, but slower. A team of 12 bricklayers is more productive than one really fast bricklayer working alone. By the same token, teams of microprocessors working on a problem together will likely generate a better return on the investment of transistors.

Cooperation within a single chip is one way to go. Using a technique called instruction-level parallelism (ILP), microprocessors today can execute a few instructions in parallel. This approach can’t grow forever, though. Superscalar processors, as chips that work this way are known, can become so complex that they don’t deliver enough extra performance to be worth the extra silicon. ILP has already moved microprocessors about as far forward as it can. It has worked for the past few years, but it’s an approach that’s running out of steam.

Taking the problem outside the chip is another approach. Chip-level symmetric multiprocessing (SMP) promises to keep many microprocessors working in concert. Large supercomputers do this already, on the theory that two (or more) heads are better than one. Using several chips together has economic advantages, too. Individual microprocessors won’t have to be so large (read: expensive) and it might be possible to add or remove processors from a system when upgrading. The problems lie mainly in the software. It’s not trivial to program a computer with multiple processors, any more than it is to command multiple cooks working in the same kitchen. Orchestrating that much computing horsepower is a talent few programmers have thus far demonstrated.
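
For a taste of what programming for several workers involves, here is a minimal Python sketch that splits one job into chunks, hands each chunk to a separate worker, and combines the partial answers. The function names are ours. One caveat: in the standard Python interpreter, threads typically don’t speed up pure arithmetic like this, so treat it as an illustration of the divide, dispatch, and combine structure rather than a demonstration of faster results.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The work one worker does on its share of the numbers."""
    return sum(n * n for n in chunk)


def split(numbers, parts):
    """Divide the list into at most `parts` roughly equal chunks."""
    size = max(1, -(-len(numbers) // parts))  # ceiling division, never zero
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def parallel_sum_of_squares(numbers, workers=4):
    """Split the job, dispatch each chunk to a worker, then combine the results."""
    chunks = split(numbers, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


print(parallel_sum_of_squares(list(range(1, 101))))   # 338350
```

Even in this tiny case, the programmer has to decide how to divide the work and how to put the answers back together. Most real programs don’t split apart nearly so neatly, which is where the difficulty lies.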

RISC concepts seem to be slowly losing currency as chip complexity increases. Paradoxically, the RISC design philosophy arose just as transistor budgets were expanding. Why economize on silicon when transistors are almost free? Why put the burden on software when programmers are scarce and highly paid? Chip designers have learned they can make their microprocessors more complex and still have them run faster. We get to have our cake and eat it, too. Whichever architectural approach gains prominence, tomorrow’s microprocessors are guaranteed to be more complex than today’s.

The other guarantee is that microprocessors will become even more ubiquitous. In the early 1900s electric motors were rare and precious commodities (so was home electricity). Catalogs and dry-good stores sold myriad attachments and add-ons for householders who presumably had only one electrical motor in the home, if that. Today, electric motors are so prevalent in blenders, tape players, cooling fans, and executive toys that we don’t notice them. If they wear out or break we throw them away because it’s not worth the effort to repair them.

Tomorrow’s microprocessors will go from being in everything to being in everything many times over. The average cellular telephone won’t have one or two microprocessors in it; it will have 15. Cars will become rolling computer farms. Children’s toys will be the envy of the NASA space program. Gumball machines will dispense 64-bit souvenirs, after you wave your computerized debit card in its general direction. Microprocessors will be like pennies on the sidewalk: shiny and interesting but not worth picking up off the ground.