Part 2: Processing

The difference between a computer and a television set at first seems obvious—one has a keyboard and the other doesn’t. Take a look at the modern remote control, however, and you’ll see the difference is more subtle. It’s something that’s going on behind the screens. When you sit around watching the television, you’re gradually putting down roots as a couch potato or some less respected vegetable, but when you sit at the computer, it watches you. It wants to know what you want. It listens to your thoughts, relayed through the keyboard, to carry out your commands—calculating, organizing, alphabetizing, filing away, drawing, and editing. No other machine takes information from you and handles it so adroitly. That’s the computer’s power as a data-processing machine, a thinking machine.

As a physical entity, the processing part of a desktop computer is inside the big box you may call the system unit, CPU (for central processing unit), or simply “the computer.” But the processing part of the computer only takes up a fraction of the space occupied by the system unit. All the essential processing power of the computer fits into a handful of circuit components—the microprocessor, the chipset, and the BIOS. Each has its own function in bringing the processing power of the computer to life, and each is substantially different in concept, design, and operation.

The microprocessor, the chipset, and the BIOS—and the rest of the computer as well—are themselves built the same. Each is a tiny slice of nearly pure silicon that has been carefully fabricated into a digital electronic circuit. Because all three processing components of a computer have this same silicon/electrical foundation—indeed, all of today’s electronic devices share these same design elements—we’ll talk about the underlying technologies that make the processing of a computer possible before looking at the individual components themselves.

Chapter 4. Digital Electronics

First and foremost, the computer is a thinking machine—and that implies all sorts of preposterous nonsense. The thinking machine could be a devious device, hatching plots against you as it sits on your desk. A thinking machine must work the same unfathomable way as the human mind, something so complicated that in thousands of years of attempts by the best geniuses, no one has yet satisfactorily explained how it works. A thinking machine has a brain, so you might suppose that opening it up and working inside it is brain surgery, and the electronic patient is likely to suffer irreversible damage at the hands of an unskilled operator.

But computers don’t think—at least not in the same way you and I do or Albert Einstein did. The computer has no emotions or motivations. The impulses traveling through it are no odd mixture of chemicals and electrical activity, of activation and repression. The computer deals in simple pulses of electricity, well understood and carefully controlled. The intimate workings of the computer are probably better understood than the seemingly simple flame that inhabits the internal combustion engine inside your car. Nothing mysterious lurks inside the thinking machine called the computer.

What gives those electrical pulses their thinking power is a powerful logic system that allows electrical states to represent propositions and combinations of them. Engineers and philosophers tailored this logic system to precisely match the capabilities of a computer while, at the same time, approximating the way you think and express yourself—or at least how they thought you would think and express yourself. When you work with a computer, you bend your thoughts to fit into this clever logical system; then the computer manipulates your ideas with the speed and precision of digital electronics.

This logic system doesn’t deal with full-fledged ideas such as life, liberty, and the pursuit of happiness. It works at a much smaller level, dealing with concepts no more complex than whether a light switch is on or off. But stacking those tiny logical operations together in more and more complex combinations, building them layer after layer, you can approach an approximation of human language with which to control the thoughts of the computer. This language-like control system is the computer program, the software that makes the computer work.
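The layering described above can be sketched in a few lines of Python. This is only an illustration, assuming a single primitive operation (NAND) as the "light switch" level; the function names are invented for the example and do not come from any particular computer design.

```python
def nand(a: int, b: int) -> int:
    """The only primitive: the output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1

# Layer 1: familiar logic operations built purely from NAND.
def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor_(a: int, b: int) -> int:
    return and_(or_(a, b), nand(a, b))

# Layer 2: a full adder sums two bits plus a carry from the previous column.
def full_adder(a: int, b: int, carry_in: int) -> tuple:
    partial = xor_(a, b)
    total = xor_(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out

# Layer 3: chain full adders, one per bit, to add whole numbers.
def add(x: int, y: int, width: int = 8) -> int:
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # wraps around once the sum no longer fits in `width` bits

print(add(19, 23))
```

Each layer uses only the layer beneath it, so the whole adder ultimately reduces to on/off decisions.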

The logic and electronics of computer circuitry are intimately combined. Engineers designed the electronics of computers exactly to suit the needs of binary logic. Through that design, your computer comes to life.

Electronic Design

Computers are thought fearsome because they are based on electrical circuits. Electricity can be dangerous, as anyone struck by lightning will attest. But inside the computer, the danger is low. At its worst, it measures 12 volts, which makes the inside of a computer as safe as playing with an electric train. Nothing that’s readily accessible inside the computer will shock you, straighten your hair, or shorten your life.

Personal computers could not exist—at least in their current, wildly successful form—were it not for two concepts: binary logic and digital circuitry. The binary approach reduces data to its most minimalist form, essentially an information quantum. A binary data bit simply indicates whether something is or is not. Binary logic provides rules for manipulating those bits to allow them to represent and act like real-world information we care about, such as numbers, names, and images. The binary approach involves both digitization (using binary data to represent information) and Boolean algebra (the rules for carrying out the manipulations of binary data).
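As a rough illustration of the two halves of the binary approach, the sketch below digitizes a name into bits and then manipulates those bits with a Boolean operation. It assumes 8-bit ASCII text, which is a choice made for the example rather than anything the discussion above requires, and the function names are hypothetical.

```python
def to_bits(text: str) -> str:
    """Digitization: each character becomes a group of 8 bits (ASCII assumed)."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))

def from_bits(bits: str) -> str:
    """Reverse the process: turn groups of 8 bits back into characters."""
    return bytes(int(group, 2) for group in bits.split()).decode("ascii")

def to_upper_with_boolean_ops(text: str) -> str:
    """Boolean algebra at work: in ASCII, AND-ing a letter with NOT 0b00100000
    clears one bit, which turns a lowercase letter into its uppercase form."""
    return "".join(chr(ord(c) & ~0x20) if c.isalpha() else c for c in text)

print(to_bits("PC"))
print(from_bits(to_bits("Hello")))
print(to_upper_with_boolean_ops("bit 7"))
```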

The electrical circuits mimic the logic electrically. Binary logic involves two states, which a computer’s digital logic circuitry mimics with two voltage levels.

The logic isn’t only what the computer works with; it’s also what controls the computer. The same voltages used to represent values in binary logic act as signals that control the circuits. That means the signals flowing through the computer can control the computer—and the computer can control the signals. In other words, the computer can control itself. This design gives the computer its power.

Digital Logic Circuitry

The essence of the digital logic that underlies the operation of the microprocessor and motherboard is the ability to use one electrical signal to control another.

Certainly there are a myriad of ways of using one electrical signal to control another, as any student of Rube Goldberg can attest. As interesting and amusing as interspersing cats, bellows, and bowling balls in the flow of control may be, most engineers have opted for a more direct system based on time-proved electrical technologies.

In modern digital logic circuitry, the basis of this control is amplification, the process of using a small current to control a larger current (or a small voltage to control a larger voltage). The large current (or voltage) exactly mimics the controlling current (or voltage) but is stronger or amplified. In that every change in the large signal is exactly analogous to each one in the small signal, devices that amplify in this way are called analog. The intensity of the control signal can represent continuously variable information—for example, a sound level in stereo equipment. The electrical signal in this kind of equipment is therefore an analogy to the sound that it represents.

In the early years of the evolution of electronic technology, improving this analog amplification process was the primary goal of engineers. After all, without amplification, signals eventually deteriorated into nothingness. The advent of digital information and the earliest computers led engineers to use the power of amplification differently.

The limiting case of amplification occurs when the control signal causes the larger signal to go from its lowest value, typically zero, to its highest value. In other words, the large signal goes off and on—switches—under control of the smaller signal. The two states of the output signal (on and off) can be used as part of a binary code that represents information. For example, the switch could be used to produce a series of seven pulses to represent the number 7. Because information can be coded as groups of such numbers (digits), electrical devices that use this switching technology are described as digital. Note that this switching directly corresponds to other, more direct control of on/off information, such as pounding on a telegraph key, a concept we’ll return to in later chapters.
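To make the pulse example concrete, here is a small sketch that encodes a number two ways: as a train of pulses (seven pulses for 7) and as a fixed group of on/off states read as binary digits. The positional binary encoding and the 4-bit width are assumptions added for comparison, and the function names are made up for the example.

```python
def as_pulses(n: int) -> list:
    """One 'on' pulse per count: the number 7 becomes seven pulses."""
    return [1] * n

def count_pulses(pulses: list) -> int:
    """The receiver recovers the number by counting the pulses."""
    return sum(pulses)

def as_binary_states(n: int, width: int = 4) -> list:
    """The same number as a fixed group of on/off states, highest digit first."""
    return [(n >> i) & 1 for i in reversed(range(width))]

def from_binary_states(states: list) -> int:
    """Rebuild the number by reading the states as binary digits."""
    value = 0
    for state in states:
        value = (value << 1) | state
    return value

print(as_pulses(7))
print(as_binary_states(7))
```

Either way, the underlying signal only ever takes two values, on and off.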

Strictly speaking, an electronic digital system works with signals called high and low, corresponding to a digital one and zero. In formal logic systems, these same values are often termed true and false. In general, a digital one or logical true corresponds to an electronic high. Sometimes, however, special digital codes reverse this relationship.

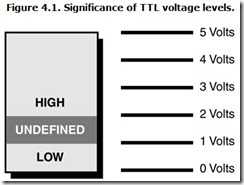

In practical electrical circuits, the high and low signals only roughly correspond to on and off. Standard digital logic systems define both the high and low signals as ranges of voltages. High is a voltage range near the maximum voltage accepted by the system, and low is a voltage range near (but not necessarily exactly at or including) zero. A wide range of undefined voltages spreads between the two, lower than the lowest edge of high but higher than the highest edge of low. The digital system ignores the voltages in the undefined range. Figure 4.1 shows how the ranges of voltages interrelate.

Perhaps the most widely known standard is called TTL (for transistor-transistor logic). In the TTL system, which is still common inside computer equipment, a logical low is any voltage below 0.8 volts. A logical high is any level above 2.0 volts. The range between 0.8 and 2.0 volts is undefined.
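The TTL ranges just described can be expressed as a short classification routine. This is a minimal sketch using the 0.8-volt and 2.0-volt thresholds given above; treating a voltage exactly on a threshold as undefined is a choice made here, and the `active_low` option is a hypothetical way of modeling codes that reverse the usual high-means-one relationship.

```python
from enum import Enum
from typing import Optional

class Level(Enum):
    LOW = "low"
    HIGH = "high"
    UNDEFINED = "undefined"

TTL_LOW_MAX_VOLTS = 0.8   # below this is a logical low
TTL_HIGH_MIN_VOLTS = 2.0  # above this is a logical high

def classify_ttl(volts: float) -> Level:
    """Map a measured voltage onto the three TTL ranges."""
    if volts < TTL_LOW_MAX_VOLTS:
        return Level.LOW
    if volts > TTL_HIGH_MIN_VOLTS:
        return Level.HIGH
    return Level.UNDEFINED

def to_bit(volts: float, active_low: bool = False) -> Optional[int]:
    """Return 1 or 0 for a valid level, or None for the undefined range."""
    level = classify_ttl(volts)
    if level is Level.UNDEFINED:
        return None
    bit = 1 if level is Level.HIGH else 0
    return 1 - bit if active_low else bit

for sample in (0.2, 1.4, 3.3, 5.0):
    print(sample, classify_ttl(sample).value, to_bit(sample))
```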

As modern computers shift to lower voltages, the top level of the logical high shifts downward—for example, from the old standard of 5.0 volts to the 3.3 volts of the latest computer equipment (and even lower voltages of new power-conserving microprocessors)—but the relationship between the high and low voltages, along with the undefined range in between, remains the same. Modern systems usually retain the same low and undefined ranges—they just lop the top off the figure showing the TTL voltage levels.

Electronics

Modern electronics are filled with mysterious acronyms and even stranger-sounding names. Computers are filled with circuits made from these things, unfamiliar terms such as CMOS and NMOS, semiconductors, and integrated circuits. A bit of historical perspective will show you what the names mean and where (and how) the technologies they describe originated.

Over the years, electrical engineers have developed a number of ways one signal can control another. The first approach to the electrical control of electrical flow evolved from the rattling telegraph key. When a telegrapher jammed down on his key to make dots and dashes, he actually closed an electrical circuit, which sent a voltage down the telegraph line. At the far end of the connection, this signal powered an electromagnet that snapped against a piece of iron to create the dot and dash sound.

In 1835 Joseph Henry saw that the electromagnet could be adapted to operate a switch rather than just pull on a hunk of iron. The electromagnetically actuated switch allowed a feeble current to switch on a more powerful current. The resulting device, the electrical relay, was key to the development of the long-distance telegraph. When telegraph signals got too weak from traveling a great distance, a relay could revitalize them.

In operation, a relay is just a switch that’s controlled by an electromagnet. Activating the electromagnet with a small current moves a set of contacts that switch the flow of a larger current. The relay doesn’t care whether the control current starts off in the same box as the relay or from a continent away. As simple in concept as the relay is, its ability to use one signal to control another proved very powerful, powerful enough that relays served as the foundation of some of the first computers (or electrical calculators), such as Bell Labs’ 1946 Model V computer. Relays are still used in modern electrical equipment.
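A relay's behavior is simple enough to model directly. The sketch below treats a relay as a switch that passes a supply current only while its coil is energized, then wires relays in series and in parallel to show how they can carry out logic. The wiring arrangements and function names are illustrative assumptions, not a description of any particular relay computer.

```python
def relay(coil_on: bool, supply: bool, normally_closed: bool = False) -> bool:
    """A switch worked by an electromagnet. Energizing the coil closes the
    contacts (or opens them, for a normally closed relay)."""
    contacts_closed = (not coil_on) if normally_closed else coil_on
    return supply and contacts_closed

def and_gate(a: bool, b: bool) -> bool:
    """Two relays in series: current gets through only if both coils are on."""
    return relay(b, relay(a, True))

def or_gate(a: bool, b: bool) -> bool:
    """Two relays in parallel: either path can deliver the current."""
    return relay(a, True) or relay(b, True)

def not_gate(a: bool) -> bool:
    """A normally closed relay passes current until its coil is energized."""
    return relay(a, True, normally_closed=True)

def repeater(weak_pulses: list) -> list:
    """Telegraph use: each feeble incoming pulse switches on a fresh supply."""
    return [relay(pulse, True) for pulse in weak_pulses]

print(and_gate(True, False), or_gate(True, False), not_gate(True))
```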

Vacuum Tubes

The vacuum tube improved on the relay design for computers by eliminating the mechanical part of the remote-action switch. Using electronics only, a tube could switch and perform logic operations faster, thousands of times faster, than relays.

Vacuum tubes developed out of Thomas Edison’s 1879 invention of the incandescent light bulb. After the public demonstration of the bulb, Edison continued to work with and improve it. Along the way, he made a discovery in 1883 (which some historians credit as Edison’s sole original contribution to pure scientific research) of what has come to be called the Edison Effect. Edison noted he could make a current flow through the vacuum in the bulb from the filament to a metal plate he had introduced inside.

The Edison Effect remained a curiosity until 1904 when John Ambrose Fleming created the diode vacuum tube. Fleming found that electrons would flow from the negatively charged hot filament of the light bulb to a positively charged cold collector plate, but not in the other direction. Fleming made an electrical one-way street that could operate as a rectifier to change alternating current into direct current or as a detector that pulled modulation from carrier waves (by stripping off the carrier wave’s alternating cycles).

In 1907 Lee De Forest created the Audion, now known as the triode tube. De Forest introduced an element of control to the bulb-cum-diode. He interposed a control grid between the hot filament (the cathode) and the cold plate (the anode). De Forest found that he could control the electron flow by varying the voltage he applied to the control grid.

The grid allowed the Audion to harness the power of the attraction of unlike electrical charges and the repulsion of like charges, enabling a small charge to control the flow of electrons through the vacuum inside the tube. In the Audion, as with the relay, a small voltage could control a much larger voltage. De Forest created the first electronic amplifier, the basis of all modern electronics.

The advantage of the vacuum tube over the relay in controlling signals is speed. The relay operates at mechanical rates, perhaps a few thousand operations per second. The vacuum tube can switch millions of times per second. The first recognizable computers (such as ENIAC) were built from thousands of tubes, each configured as a digital logic gate.

Semiconductors

Using tube-based electronics in computers is fraught with problems. First is the space-heater effect: Tubes have to glow like light bulbs to work, and they generate heat along the way, enough to smelt rather than process data. And, like light bulbs, tubes burn out. Large tube-based computers required daily shutdown and maintenance as well as several technicians on the payroll. ENIAC was reported to have a mean time between failures of 5.6 hours.

In addition, tube circuits are big. ENIAC filled a room, yet the house-sized computers of 1950s vintage science fiction would easily be outclassed in computing power by today’s desktop machines. In the typical tube-based computer design, one logic gate required one tube that took up considerably more space than a single microprocessor with tens of millions of logic gates. Moreover, physical size isn’t only a matter of housing. The bigger the computer, the longer it takes its thoughts to travel through its circuits—even at the speed of light—and the more slowly it thinks.
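The cost of physical size is easy to estimate. The sketch below computes how long a signal needs to cross a machine at the speed of light; the 10-meter room and 1-centimeter chip are assumed dimensions chosen for illustration, and real signals in wires travel somewhat slower than this best case.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def crossing_time_ns(distance_m: float) -> float:
    """Best-case time, in nanoseconds, for a signal to travel the distance."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1e9

def max_crossings_per_second(distance_m: float) -> float:
    """Upper bound on how many one-way trips fit into a second."""
    return SPEED_OF_LIGHT_M_PER_S / distance_m

ROOM_M = 10.0   # assumed span of a room-sized tube computer
CHIP_M = 0.01   # assumed span of a single chip

print(f"room: {crossing_time_ns(ROOM_M):.2f} ns per crossing")
print(f"chip: {crossing_time_ns(CHIP_M):.4f} ns per crossing")
```

Under these assumptions the room-sized machine pays a thousand times more delay per crossing than the chip, simply because it is a thousand times wider.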

Making today’s practical computers took another true breakthrough in electronics: the transistor, first created at Bell Laboratories in 1947 and announced in 1948 by the team of John Bardeen, Walter Brattain, and William Shockley. A tiny fleck of germanium (later, silicon) formed into three layers, the transistor was endowed with the capability to let one electrical current applied to one layer alter the flow of another, larger current between the other two layers. Unlike the vacuum tube, the transistor needed no hot electrons because the current flowed entirely through a solid material—the germanium or silicon—hence, the common name for tubeless technology, solid-state electronics.

Germanium and silicon are special materials (actually, metalloids) called semiconductors. The term describes how these materials resist the flow of electrical currents. A true electrical conductor (such as the copper in wires) hardly resists the flow of electricity, whereas a non-conductor (or insulator, such as the plastic wrapped around the wires) almost totally prevents the flow of electricity. Semiconductors allow some, but not much, electricity to flow.

By itself, being a poor but not awful electrical conductor is as remarkable as lukewarm water. However, infusing atoms of impurities into the semiconductor’s microscopic lattice structure dramatically alters the electrical characteristics of the material and makes solid-state electronics possible.

This process of adding impurities is called doping. Some impurities add extra electrons (carriers of negative charges) to the crystal. A semiconductor doped to be rich in electrons is called an N-type semiconductor. Other impurities in the lattice leave holes where electrons would ordinarily be, and these holes act as positive charge carriers. A semiconductor doped to be rich in holes is called a P-type semiconductor.

Electricity easily flows across the junction between the two materials when an N-type semiconductor on one side passes electrons to the holes in a P-type semiconductor on the other side. The empty holes willingly accept the electrons. They just fit right in. But electricity doesn’t flow well in the opposite direction. If the P-type semiconductor’s holes ferry electrons to the N-type material at the junction, the N-type semiconductor will refuse delivery. It already has all the electrons it needs. It has no place to put any more. In other words, electricity flows only in one direction through the semiconductor junction, just as it flows only one way through a vacuum-tube diode.

The original transistor incorporated three layers with two junctions between dissimilar materials, stacked in layers as N-P-N or P-N-P. Each layer has its own name: The top is the emitter, the middle of the sandwich is the base, and the bottom is the collector. (The structure of a transistor isn’t usually a three-layer cake with top and bottom, but that’s effectively how it works.)

Ordinarily no electricity could pass through such a stack from emitter to collector because the two junctions in the middle are oriented in opposite directions. One blocks electrical flow one way, and the second blocks the flow in the other direction.

The neat trick that makes a transistor work is changing the voltage on the middle layer. Say you have a P-N-P transistor. Your wire dumps electrons into the holes in the P-layer, and they travel to the junction with the N-layer. The N-layer is full of electrons, so it won’t let the current flow further. But if you drain off some of those electrons through the base, current can flow through the junction, and it can keep flowing through the next junction as well. It only takes a small current to drain electrons from the base to permit a large current to flow from emitter to collector.

The design of transistor circuits is more complicated than our simple example. Electrical flow through the transistor depends on the complex relationships between voltages on its junctions, and the electricity doesn’t necessarily have to flow from emitter to collector. In fact, the junction transistor design, although essential to the first transistors, is rarely used in computer circuits today. But the junction transistor best illustrates the core principles of all solid-state electronics.

Modern computer circuits mostly rely on a kind of transistor in which the electrical current flow through a narrow channel of semiconductor material is controlled by a voltage applied to a gate, a metal electrode separated from the channel by a thin insulating layer of oxide. The most common variety of these transistors is made from N-type material and results in a technology called NMOS, an acronym for N-channel Metal Oxide Semiconductor. A related technology combines both N-channel and P-channel devices and is called CMOS (Complementary Metal Oxide Semiconductor) because the N- and P-type materials are complements (opposites) of one another. These names, CMOS particularly, pop up occasionally in discussions of electronic circuits.

The typical microprocessor once was built from NMOS technology. Although NMOS designs are distinguished by their simplicity and small size (even on a microchip level), they have a severe shortcoming: They constantly use electricity whenever their gates are turned on. Because about half of the tens or hundreds of thousands of gates in a microprocessor are switched on at any given time, an NMOS chip can draw a lot of current. This current flow creates heat and wastes power, making NMOS unsuitable for miniaturized computers (which can be difficult to cool) and battery-operated equipment, such as notebook computers.

Some earlier and most contemporary microprocessors now use CMOS designs. CMOS is inherently more complex than NMOS because each gate requires more transistors, at least a pair per gate. But this complexity brings a benefit: When one transistor in a CMOS gate is turned on, its complementary partner is switched off, thus minimizing the current flow through the complementary pair that make up the circuit. When a CMOS gate is idle, just maintaining its state, it requires almost no power. During a state change, the current flow is large but brief. Consequently, the faster the CMOS gate changes state, the more current that flows through it and the more heat it generates. In other words, the faster a CMOS circuit operates, the hotter it becomes. This speed-induced temperature rise is one of the limits on the operating speed of many microprocessors.
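The complementary behavior can be sketched with a switch-level model. The example below assumes the conventional arrangement of a CMOS NAND gate (two P-channel transistors in parallel pulling the output high, two N-channel transistors in series pulling it low); that arrangement and the function names are assumptions for illustration. It also counts output state changes, the moments when, as described above, current briefly flows.

```python
def nmos_conducts(gate: int) -> bool:
    """An N-channel transistor switches on when its gate is high."""
    return gate == 1

def pmos_conducts(gate: int) -> bool:
    """A P-channel transistor switches on when its gate is low."""
    return gate == 0

def cmos_nand(a: int, b: int) -> int:
    pull_up = pmos_conducts(a) or pmos_conducts(b)     # parallel path to supply
    pull_down = nmos_conducts(a) and nmos_conducts(b)  # series path to ground
    # Exactly one network conducts, so no steady current runs supply-to-ground.
    assert pull_up != pull_down
    return 1 if pull_up else 0

def count_state_changes(input_pairs: list) -> int:
    """Count how often the gate's output flips across a sequence of inputs."""
    outputs = [cmos_nand(a, b) for a, b in input_pairs]
    return sum(1 for prev, cur in zip(outputs, outputs[1:]) if prev != cur)

sequence = [(0, 0), (1, 1), (1, 1), (0, 1)]
print([cmos_nand(a, b) for a, b in sequence])
print(count_state_changes(sequence))
```

The more often the inputs force the output to flip in a given time, the more of those brief current surges occur, which is the link between speed and heat.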

CMOS technology can duplicate every logic function made with NMOS but with a substantial saving of electricity. On the other hand, manufacturing costs somewhat more because of the added circuit complexity.

Integrated Circuits

The transistor overcomes several of the problems with using tubes to make a computer. Transistors are smaller than tubes and give off less heat because they don’t need to glow to work. But every logic gate still requires one or more transistors (as well as several other electronic components) to build. If you allocated a mere square inch to every logic gate, the number of logic gates in a personal computer microprocessor such as the Pentium 4 (about fifty million) would require a circuit board roughly 600 feet on a side, about 350,000 square feet.
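The board-size estimate is simple arithmetic: total area in square inches, converted to square feet, and then the side length of a square board with that area. The sketch below uses the gate count and the one-square-inch allowance from the paragraph above; the function names are made up for the example.

```python
import math

SQ_INCHES_PER_SQ_FOOT = 12 * 12

def board_area_sq_ft(gate_count: int, sq_inches_per_gate: float = 1.0) -> float:
    """Total board area if every logic gate gets its own patch of board."""
    return gate_count * sq_inches_per_gate / SQ_INCHES_PER_SQ_FOOT

def square_side_ft(area_sq_ft: float) -> float:
    """Side length of a square board with the given area."""
    return math.sqrt(area_sq_ft)

area = board_area_sq_ft(50_000_000)
print(f"area: {area:,.0f} square feet")
print(f"side: {square_side_ft(area):,.0f} feet")
```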

At the very end of the 1950s, Robert N. Noyce at Fairchild Semiconductor and Jack S. Kilby of Texas Instruments independently came up with the same brilliant idea of putting multiple semiconductor devices into a single package. Transistors are typically fabricated on thin-cut slices of crystalline silicon called wafers. Typically, thousands of transistors are made at the same time on the same wafer. Instead of carving the wafer into separate transistors, the engineers linked them together (integrated them) to create a complete electronic circuit all on one wafer. Kilby linked the devices with micro-wires; Noyce envisioned fabricating the interconnecting circuits between devices on the silicon itself. The resulting electronic device, for which Noyce applied for a patent on July 30, 1959, became known as the integrated circuit, or IC. Such devices now are often called chips because of their construction from a single small piece of silicon, a chip off the old crystal. Integrated circuit technology has been adapted to both analog and digital circuitry. Its grandest development, however, is the microprocessor.

Partly because of the Noyce invention, Fairchild flourished as a semiconductor manufacturer throughout the 1960s. The company was acquired by Schlumberger Ltd. in 1979, which sold it to National Semiconductor in 1987. In 1996, National spun off Fairchild as an independent manufacturer once again, and the developer of the integrated circuit continues to operate as an independent business, Fairchild Semiconductor Corporation, based in South Portland, Maine.

The IC has several advantages over circuits built from individual (or discrete) transistors, most resulting from miniaturization. Most importantly, integration reduces the amount of packaging. Instead of one metal or plastic transistor case per logic gate, multiple gates (even millions of them) can be combined into one chip package.

Because the current inside the chip need not interact with external circuits, the chips can be made arbitrarily small, enabling the circuits to be made smaller, too. In fact, today the limit on the size of elements inside an integrated circuit is mostly determined by fabrication technology; internal circuitry is as small as today’s manufacturing equipment can make it affordably. The latest Intel microprocessors, which use integrated circuit technology, incorporate the equivalent of over 50 million transistors using interconnections that measure less than 0.13 of a micron (millionths of a meter) across.

In the past, a hierarchy of names was given to ICs depending on the size of circuit elements. Ordinary ICs were the coarsest in construction. Large-scale integration (LSI) put between 500 and 20,000 circuit elements together; very large-scale integration (VLSI) put more than 20,000 circuit elements onto a single chip. All microprocessors use VLSI technology, although the most recent products have become so complex (Intel’s Pentium 4, for example, has the equivalent of about 50 million transistors inside) that a new term has been coined for them, ultra large-scale integration (ULSI).
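The naming hierarchy amounts to a lookup by element count. The sketch below encodes only the thresholds stated above (500 and 20,000); no cutoff is given for ULSI, so the example deliberately leaves it out rather than invent one, and the function name is hypothetical.

```python
def integration_scale(element_count: int) -> str:
    """Name the integration level for a chip with this many circuit elements."""
    if element_count > 20_000:
        return "VLSI"          # very large-scale integration
    if element_count >= 500:
        return "LSI"           # large-scale integration
    return "ordinary IC"

for count in (120, 5_000, 50_000_000):
    print(f"{count:>12,} elements: {integration_scale(count)}")
```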

Moore’s Law

The development of the microprocessor often is summed up by quoting Moore’s Law, which sounds authoritative and predicts an exponential acceleration in computer power. At least that’s how Moore’s Law is most often described when you stumble over it in books and magazines. The most common interpretation occurring these days is that Moore’s Law holds that computer power doubles every 18 months.

In truth, you’ll never find the law concisely quoted anywhere. That’s because it’s a slippery thing. It doesn’t say what most people think. As originally formulated, it doesn’t even apply to microprocessors. That’s because Gordon E. Moore actually created his “law” well before the microprocessor was invented. In its original form, Moore’s Law describes only how quickly the number of transistors in integrated circuits was growing.

What became known as Moore’s Law was first published in the industry journal Electronics on April 19, 1965, in a paper titled “Cramming More Components onto Integrated Circuits,” written when Moore was director of the research and development laboratories at Fairchild Semiconductor. His observation, and what Moore’s Law really says, was that the number of transistors in the most complex circuits tended to double every year. Moore’s conclusion was that by 1975, ten years after he was writing, economics would force semiconductor makers to squeeze as many as 65,000 transistors onto a single silicon chip.

Although Moore’s fundamental premise, that integrated circuit complexity increases exponentially, was accurate (although not entirely obvious at the time), the actual numbers given in Moore’s predictions in the paper missed the mark. He was quite optimistic. For example, over the 31-year history of the microprocessor from 4004 to Pentium 4, the transistor count has increased by a factor of 18,667 (from 2250 to 42 million). That’s approximately a doubling every two years.

That’s more of a difference than you might think. At Moore’s rate, a microprocessor today would have over four trillion transistors inside. Even the 18-month doubling rate so often quoted would put about 3.7 billion transistors in every chip. In either case, you’re talking real computer power.
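The doubling figures can be checked with a short calculation. The sketch below takes the transistor counts and the 31-year span quoted above as given, derives the doubling period they imply, and projects what other doubling periods would have produced over the same span. The function names are invented for the example.

```python
import math

FIRST_COUNT = 2_250          # transistor count quoted for the 4004
LATEST_COUNT = 42_000_000    # transistor count quoted for the Pentium 4
YEARS = 31

def doubling_period_years(start: float, end: float, years: float) -> float:
    """Years per doubling implied by growth from start to end."""
    return years / math.log2(end / start)

def projected_count(start: float, years: float, period_years: float) -> float:
    """Count reached after `years` if it doubles once every `period_years`."""
    return start * 2 ** (years / period_years)

observed = doubling_period_years(FIRST_COUNT, LATEST_COUNT, YEARS)
print(f"observed doubling period: {observed:.2f} years")
print(f"doubling every 12 months: {projected_count(FIRST_COUNT, YEARS, 1.0):,.0f}")
print(f"doubling every 18 months: {projected_count(FIRST_COUNT, YEARS, 1.5):,.0f}")
```

Because the growth is exponential, a modest change in the doubling period changes the end result by orders of magnitude.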

Over the years, people have gradually adapted Moore’s Law to better suit the facts—in other words, Moore’s Law doesn’t predict how many transistors will be in future circuits. It only describes how circuit complexity has increased.

The future of the “law” is cloudier still. A constant doubling of transistor count requires ever-accelerating spending on circuit design research, which was possible during the 20 years of the personal computer boom but may suffer as the rapid growth of the industry falters. Moreover, the law—or even a linear increase in the number of transistors on a chip—ultimately bumps into fundamental limits imposed by the laws of physics. At some point the increase in complexity of integrated circuits, taken to extreme, will require circuit elements smaller than atoms or quarks, which most physicists believe is not possible.

Printed Circuits

An integrated circuit is like a gear of a complex machine. By itself it does nothing. It must be connected to the rest of the mechanism to perform its appointed task. The integrated circuit needs a means to acquire and send out the logic and electrical signals it manipulates. In other words, each integrated circuit in a computer must be logically and electrically connected—essentially that means linked by wires.

In early electrical devices, wires in fact provided the necessary link. Each wire carried one signal from one point to another, creating a technology called point-to-point wiring. Because people routinely soldered together these point-to-point connections by hand using a soldering iron, they were sometimes called hand-wired. This was a workable, if not particularly cost-effective, technology in the days of tubes, when even a simple circuit spanned a few inches of physical space. Today, point-to-point wiring is virtually inconceivable because a computer crams the equivalent of half a million tube circuits into a few square inches of space. Connecting them with old-fashioned wiring would take a careful hand and some very fine wire. The time required to cut, strip, and solder in place each wire would make building a single computer a lifetime endeavor.

Long before the introduction of the first computer, engineers found a better way of wiring together electrical devices—the printed circuit board. The term is sometimes confusingly shortened to computer board, even when the board is part of some other, non-computer device. Today, printed circuit boards are the standard from which nearly all electronic devices are made. The “board” in the name “motherboard” results from the assembly being a printed circuit board.

Fabrication

Printed circuit board technology allows all the wiring for an entire circuit assembly to be fabricated together in a quick process that can be entirely mechanized. The wires themselves are reduced to copper traces, a pattern of copper foil bonded to the substrate that makes up the support structure of the printed circuit board. In computers, this substrate is usually a green composite material called glass-epoxy, because it has a woven glass fiber base that’s filled and reinforced with an epoxy plastic. Less-critical electronic devices (read “cheap”) substitute a simple brownish substrate of phenolic plastic for the glass-epoxy.

The simplest printed circuit boards start life as a sheet of thin copper foil bonded to a substrate. The copper is coated with a compound called photo-resist, a light-sensitive material. When exposed to light, the photo-resist becomes resistant to the effects of compounds, such as nitric acid, that strongly react with copper. A negative image of the desired final circuit pattern is placed over the photo-resist covered copper and exposed to a strong light source. This process is akin to making a print of a photograph. The exposed board is then immersed in an etchant, one of those nasty compounds that etch or eat away the copper that is not protected by the light-exposed photo-resist. The result is a pattern of copper on the substrate corresponding to the photographic original. The copper traces can then be used to connect the various electronic components that will make up the final circuit. All the wiring on a circuit board is thus fabricated in a single step.

When the electronic design on a printed circuit board is too complex to be successfully fabricated on one side of the substrate, engineers can switch to a slightly more complex technology to make two-sided boards. The traces on each side are separately exposed but etched during the same bath in etchant. In general, the circuit traces on one side of the board run parallel in one direction, and the traces on the other side run generally perpendicular. The two sides get connected together by components inserted through the board or through plated-through holes, holes drilled through the board and then plated with metal (and often filled with solder) to provide an electrical connection.

To accommodate even more complex designs, engineers have designed multilayer circuit boards. These are essentially two or more thin double-sided boards tightly glued together into a single assembly. Most computer system boards use multilayer technology, both to accommodate complex designs and to improve signal characteristics. Sometimes a layer is left nearly covered with copper to shield the signals in adjacent layers from interacting with one another. These shielding layers are typically held at ground potential and are consequently called ground planes.

One of the biggest problems with the multilayer design (besides the difficulty in fabrication) is the difficulty in repair. Abnormally flexing a multilayer board can break one of the traces hidden in the center of the board. No reasonable amount of work can repair such damage.

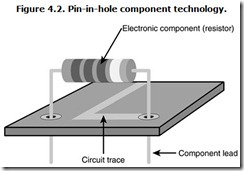

Pin-in-Hole Technology

Two technologies are in wide use for attaching components to the printed circuit board. The older technology is called pin-in-hole. Electric drills bore holes in the circuit board at the points where the electronic components are to attach. Machines (usually) push the leads (wires that come out of the electronic components) into and through the circuit board holes and bend them slightly so that they hold firmly in place. The components are then permanently fixed in place with solder, which forms both a physical and electrical connection. Figure 4.2 shows the installation of a pin-in-hole electronic component.

Most mass-produced pin-in-hole boards use wave-soldering to attach pin-in-hole components. In wave-soldering, a conveyer belt slides the entire board over a pool of molten solder (a tin and lead alloy), and a wave on the solder pool extends up to the board, coating the leads and the circuit traces. When cool, the solder holds all the components firmly in place.

Workers can also push pin-in-hole components into circuit boards and solder them individually in place by hand. Although hand fabrication is time consuming and expensive, it can be effective when a manufacturer requires only a small number of boards. Automatic machinery cuts labor costs and speeds production on long runs; assembly workers typically make prototypes and small production runs or provide the sole means of assembly for tiny companies that can afford neither automatic machinery nor farming out their circuit board work.

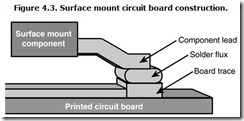

Surface Mount Technology

The newer method of attaching components, called surface-mount technology, promises greater miniaturization and lower costs than pin-in-hole. Instead of holes to secure them, surface-mount components are glued to circuit boards using solder flux or paste, which temporarily holds them in place. After all the components are affixed to a circuit board in their proper places, the entire board assembly runs through a temperature-controlled oven, which melts the solder paste and firmly solders each component to the board. Figure 4.3 illustrates surface-mount construction.

Surface-mount components are smaller than their pin-in-hole kin because they don’t need large leads, so more components will fit in a given space with surface-mount technology. Manufacturing is simpler because there’s no need to drill holes in the circuit boards.

On the downside, surface-mount fabrication doesn’t lend itself to small production runs or prototyping. It can also be a headache for repair workers. They have to squint and peer at components that are often too small to be handled without tweezers and a lot of luck. Moreover, many surface-mount boards also incorporate some pin-in-hole components, so they still need drilling and wave-soldering.

Logic Design

Reduced to its fundamental principles, the workings of a modern silicon-based microprocessor are not difficult to understand. They are simply the electronic equivalent of a knee-jerk. Every time you hit the microprocessor with an electronic hammer blow (the proper digital input), it reacts by doing a specific something. Like a knee-jerk reflex, the microprocessor’s reaction is always the same. When hammered by the same input and conditions, it kicks out the same function.

The complexity of the microprocessor and what it does arises from the wealth of inputs it can react to and the interaction between successive inputs. Although the microprocessor’s function is precisely defined by its input, the output from that function varies with what the microprocessor has to work on, and that depends on previous inputs. For example, the result of you carrying out a specific command (“Simon says lift your left leg”) will differ dramatically depending on whether the previous command was “Simon says sit down” or “Simon says lift your right leg.”

The rules for controlling the knee-jerks inside a computer are the rules of logic, and not just any logic. Computers use a special symbolic logic system that was created about a century and a half ago in the belief that human thinking could be mechanized much as the production of goods had been mechanized in the Industrial Revolution.

Boolean Logic

As people began to learn to think again after the Dark Ages, they began exploring mathematics, first in the Arabian world, then Europe. They developed a rigorous, objective system—one that was reassuring in its ability to replicate results. Carry out the same operation on the same numbers, and you always got the same answer. Mathematics delivered a certainty that was absent from the rest of the world, one in which dragons inhabited the unknown areas of maps and people had no conception that micro-organisms might cause disease.

Applying the same rigor and structure, scientific methods first pioneered by the Greeks were rediscovered. The objective scientific method found truth, the answers that eluded the world of superstition. Science led to an understanding of the world, new processes, new machines, and medicine.

In Victorian England, philosophers wondered whether the same objectivity and rigor could be applied to all of human thought. A mathematician, George Boole, first proposed applying the rigorous approach of algebra to logical decision-making. In 1847, Boole founded the system of modern symbolic logic that we now term Boolean logic (alternately, Boolean algebra). In his system, Boole reduced propositions to symbols and formal operators that followed the strict rules of mathematics. Using his rigorous approach, logical propositions could be proven with the same certainty as mathematical equations.

Philosophers, including Ludwig Wittgenstein and Bertrand Russell, further developed the concept of symbolic logic and showed that anything that could be known could be expressed in its symbols. By translating what you knew and wanted to know into symbols, you could apply the rules of Boolean logic and find an answer. Knowledge was reduced to a mechanical process, and that made it the province of machines.

In concept, Babbage’s Analytical Engine could have deployed Boole’s symbolic logic and become the first thinking machine. However, neither the logic nor the hardware was up to the task at the time. But when fast calculations became possible, Boolean logic proved key to programming the computers that carried out the tasks.

Logic Gates

Giving an electrical circuit the power to make a decision isn’t as hard as you might think. Start with that same remote signaling of the telegraph but add a mechanical arm that links it to a light switch on your wall. As the telegraph pounds, the light flashes on and off. Certainly you’ll have done a lot of work for a little return, in that the electricity could be used to directly light the bulb. There are other possibilities, however, that produce intriguing results. You could, for example, pair two weak telegraph arms so that their joint effort would be required to throw the switch to turn on the light. Or you could link the two telegraphs so that a signal on either one would switch on the light. Or you could install the switch backwards so that when the telegraph is activated, the light would go out instead of come on.

These three telegraph-based design examples actually provide the basis for three different types of computer circuits, called logic gates (the AND, OR, and NOT gates, respectively). These electrical circuits are called gates because they regulate the flow of electricity, allowing it to pass through or cutting it off, much as a gate in a fence allows or impedes your own progress. These logic gates endow the electrical assembly with decision-making power. In the light example, the decision is necessarily simple: when to switch on the light. But these same simple gates can be formed into elaborate combinations that make up a computer that can make complex logical decisions.

The three logic gates can perform the function of all the operators in Boolean logic. They form the basis of the decision-making capabilities of the computer as well as other logic circuitry. You’ll encounter other kinds of gates, such as NAND (short for “Not AND”), NOR (short for “Not OR”), and XOR (for “Exclusive OR”), but you can build any one of the others from the basic three: AND, OR, and NOT.
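The claim that the other gates can be built from the basic three is easy to check for yourself. The following Python sketch is only an illustration; the function names are our own, and Boolean values stand in for the presence or absence of an electrical signal.

```python
# The three basic gates, modeled on Boolean values instead of voltages.
def AND(a, b):
    return a and b

def OR(a, b):
    return a or b

def NOT(a):
    return not a

# The derived gates, each built only from AND, OR, and NOT.
def NAND(a, b):
    return NOT(AND(a, b))

def NOR(a, b):
    return NOT(OR(a, b))

def XOR(a, b):
    # True when either input is on, but not when both are on.
    return AND(OR(a, b), NOT(AND(a, b)))

def truth_table(gate):
    """List the gate's output for inputs 00, 01, 10, and 11, in that order."""
    return [gate(a, b) for a in (False, True) for b in (False, True)]

for gate in (AND, OR, NAND, NOR, XOR):
    print(gate.__name__, [int(v) for v in truth_table(gate)])
```

Each derived gate calls nothing but the three basic functions, which mirrors the way a circuit designer would wire basic gates together to get the same result.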

In computer circuits, each gate requires at least one transistor. A microprocessor with ten million transistors may have nearly that many gates.

Memory

These same gates also can be arranged to form memory. Start with the familiar telegraph. Instead of operating the current for a light bulb, however, reroute the wires from the switch so that they, too, link to the telegraph’s electromagnet. In other words, when the telegraph moves, it throws a switch that supplies itself with electricity. Once the telegraph is supplying itself with electricity, it will stay on using that power even if you switch off the original power that first made the switch. In effect, this simple system remembers whether it has once been activated. You can go back at any time and see if someone has ever sent a signal to the telegraph memory system.

This basic form of memory has one shortcoming: It’s elephantine and never forgets. Resetting this memory system requires manually switching off both the control voltage and the main voltage source.

A more useful form of memory takes two control signals: One switches it on, the other switches it off. In simplest form, each cell of this kind of memory is made from two latches connected at cross-purposes so that switching one latch on cuts the other off. Because one signal sets this memory to hold data and the other one resets it, this circuit is sometimes called set-reset memory. A more common term is flip-flop because it alternately flips between its two states. In computer circuits, this kind of memory is often simply called a latch. Although the main memory of your computer uses a type of memory that works on a different electrical principle, latch memory remains important in circuit design.
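A set-reset cell can be modeled in a few lines. The sketch below assumes one common realization, two NOR gates with each output fed back to the other gate's input; the text does not say which gates a real cell uses, and the class and method names are our own.

```python
def nor(a, b):
    """NOR gate on 0/1 values: output is 1 only when both inputs are 0."""
    return int(not (a or b))

class SRLatch:
    """Set-reset memory modeled as two cross-coupled NOR gates (an assumption)."""

    def __init__(self):
        self.q = 0       # the stored bit
        self.q_bar = 1   # its complement, fed back into the other gate

    def apply(self, s, r):
        """Apply the set and reset inputs and let the feedback loop settle."""
        for _ in range(4):
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break  # outputs stopped changing, so the latch is stable
            self.q, self.q_bar = q, q_bar
        return self.q

latch = SRLatch()
print(latch.apply(1, 0))  # set: prints 1
print(latch.apply(0, 0))  # both inputs off, the bit is remembered: prints 1
print(latch.apply(0, 1))  # reset: prints 0
print(latch.apply(0, 0))  # still remembered: prints 0
```

The calls with both inputs off are the interesting ones: the output depends on what happened earlier, not on the present inputs. That is what makes the circuit a memory.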

Instructions

Although the millions of gates in a microprocessor are so tiny that you can’t even discern them with an optical microscope (you need at least an electron microscope), they act exactly like elemental, telegraph-based circuits. They use electrical signals to control other signals. The signals are just more complicated, reflecting the more elaborate nature of the computer.

Today’s microprocessors don’t use a single signal to control their operations; rather, they use complex combinations of signals. Each microprocessor command is coded as a pattern of signals, the presence or absence of an electrical signal at one of the pins of the microprocessor’s package. The signal at each pin represents one bit of digital information.

The designers of a microprocessor give certain patterns of these bit-signals specific meanings. Each pattern is a command called a microprocessor instruction that tells the microprocessor to carry out a specific operation. The bit pattern 0010110, for example, is the instruction that tells an Intel 8086-family microprocessor to subtract in a very explicit manner. Other instructions tell the microprocessor to add, multiply, divide, move bits or bytes around, change individual bits, or just wait around for another instruction.

Microprocessor designers can add instructions to do just about anything—from matrix calculations to brewing coffee (that is, if the designers wanted to, if the instructions actually did something useful, and if they had unlimited time and resources to engineer the chip). Practical concerns such as keeping the design work and the chip manageable constrain the range of commands given to a microprocessor.

The entire repertoire of commands that a given microprocessor model understands and can react to is called that microprocessor’s instruction set or its command set. The designer of the microprocessor chooses which pattern to assign to a given function. As a result, different microprocessor designs recognize different instruction sets, just as different board games have different rules.
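To see how a bit pattern becomes a command, consider a made-up instruction set. The encoding below is purely hypothetical (it is not the 8086 encoding): the upper four bits of an eight-bit pattern select the operation, and the lower four bits carry a small operand.

```python
# A hypothetical four-entry instruction set; a real chip defines its own patterns.
OPCODES = {
    0b0001: "LOAD",
    0b0010: "ADD",
    0b0011: "SUB",
    0b1111: "HALT",
}

def decode(word):
    """Split an 8-bit pattern into an operation name and a 4-bit operand."""
    opcode = (word >> 4) & 0xF   # upper four bits choose the operation
    operand = word & 0xF         # lower four bits are the operand
    if opcode not in OPCODES:
        raise ValueError(f"pattern {word:08b} is not in this instruction set")
    return OPCODES[opcode], operand

print(decode(0b00110101))  # ('SUB', 5)
print(decode(0b00010010))  # ('LOAD', 2)
```

A different designer could assign the same patterns to entirely different operations, which is why a program encoded for one instruction set is meaningless to a chip built around another.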

Despite their pragmatic limits, microprocessor instruction sets can be incredibly rich and diverse, and the individual instructions incredibly specific. The designers of the original 8086-style microprocessor, for example, felt that a simple command to subtract was not enough by itself. They believed that the microprocessor also needed to know what to subtract from what and what it should do with the result. Consequently, they added a rich variety of subtraction instructions to the 8086 family of chips that persists into today’s Athlon and Pentium 4 chips. Each different subtraction instruction tells the microprocessor to take numbers from different places and find the difference in a slightly different manner.

Some microprocessor instructions require a series of steps to be carried out. These multistep commands are sometimes called complex instructions because of their composite nature. Although a complex instruction looks like a simple command, it may involve much work. A simple instruction would be something such as “pound a nail.” A complex instruction may be as far ranging as “frame a house.” Simple subtraction or addition of two numbers may actually involve dozens of steps, including the conversion of the numbers from decimal to the binary (ones and zeros) notation that the microprocessor understands. For instance, the previous sample subtraction instruction tells one kind of microprocessor that it should subtract a number in memory from another number in the microprocessor’s accumulator, a place that’s favored for calculations in today’s most popular microprocessors.

Registers

Before the microprocessor can work on numbers or any other data, it first must know what numbers to work on. The most straightforward method of giving the chip the variables it needs would seem to be supplying more coded signals at the same time the instruction is given. You could dump in the numbers 6 and 3 along with the subtract instruction, just as you would load laundry detergent along with shirts and sheets into your washing machine. This simple method has its shortcomings, however. Somehow the proper numbers must be routed to the right microprocessor inputs. The microprocessor needs to know whether to subtract 6 from 3 or 3 from 6 (the difference could be significant, particularly when you’re balancing your checkbook).

Just as you distinguish the numbers in a subtraction problem by where you put them in the equation (6–3 versus 3–6), a microprocessor distinguishes the numbers on which it works by their position (where they are found). Two memory addresses might suffice were it not for the way most microprocessors are designed. They have only one pathway to memory, so they can effectively “see” only one memory value at a time. So instead, a microprocessor loads at least one number to an internal storage area called a register. It can then simultaneously reach both the number in memory and the value in its internal register. Alternatively (and more commonly today), both values on which the microprocessor is to work can be loaded into separate internal registers.

Part of the function of each microprocessor instruction is to tell the chip which registers to use for data and where to put the answers it comes up with. Other instructions tell the chip to load numbers into its registers to be worked on later or to move information from a register someplace else (for instance, to memory or an output port).
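The role of registers is easier to follow in a toy model. The following sketch assumes an imaginary chip with two registers, A and B, and three instructions of our own invention (LOAD, SUB, and STORE); it shows how the registers named in an instruction decide whether the chip computes 6 minus 3 or 3 minus 6.

```python
def run(program, memory):
    """Execute a list of (operation, operands...) tuples on a two-register toy chip."""
    regs = {"A": 0, "B": 0}
    for op, *args in program:
        if op == "LOAD":        # LOAD reg, address: copy a memory value into a register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "SUB":       # SUB dst, src: dst = dst - src
            dst, src = args
            regs[dst] = regs[dst] - regs[src]
        elif op == "STORE":     # STORE reg, address: copy a register back to memory
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction {op}")
    return regs

memory = [6, 3, 0]
run([("LOAD", "A", 0), ("LOAD", "B", 1), ("SUB", "A", "B"), ("STORE", "A", 2)], memory)
print(memory[2])  # 3, because A held 6 and B held 3

memory = [6, 3, 0]
run([("LOAD", "A", 0), ("LOAD", "B", 1), ("SUB", "B", "A"), ("STORE", "B", 2)], memory)
print(memory[2])  # -3, the same numbers subtracted the other way around
```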

A register functions both as memory and a workbench. It holds bit-patterns until they can be worked on or sent out of the chip. The register is also connected with the processing circuits of the microprocessor so that the changes ordered by instructions actually appear in the register. Most microprocessors have several registers, some dedicated to specific functions (such as remembering which step in a function the chip is currently carrying out; this register is called a counter or instruction pointer) and some designed for general purposes. At one time, the accumulator was the only register in a microprocessor that could manage calculations. In modern microprocessors, all registers are more nearly equal (in some of the latest designs, all registers are equal, even interchangeable), so the accumulator is now little more than a colorful term left over from a bygone era.

Not only do microprocessors have differing numbers of registers, but the registers may also be of different sizes. Registers are measured by the number of bits that they can work with at one time. A 16-bit microprocessor, for example, should have one or more registers that each holds 16 bits of data at a time. Today’s microprocessors have 32- or 64-bit registers.

Adding more registers to a microprocessor does not make it inherently faster. When a microprocessor lacks advanced features such as pipelining or superscalar technology (discussed later in this chapter), it can perform only one operation at a time. More than two registers would seem superfluous. After all, most math operations involve only two numbers at a time (or can be reduced to a series of two-number operations). Even with old-technology microprocessors, however, having more registers helps the software writer create more efficient programs. With more places to put data, a program needs to move information in and out of the microprocessor less often, which can potentially save several program steps and clock cycles.

Modern microprocessor designs, particularly those influenced by the latest research into design efficiency, demand more registers. Because microprocessors run much faster than memory, every time the microprocessor has to go to memory, it must slow down. Therefore, minimizing memory accessing helps improve performance. Keeping data in registers instead of memory speeds things up.

The width of the registers also has a substantial effect on the performance of a microprocessor. The more bits assigned to each register, the more information that the microprocessor can process in every cycle. Consequently, a 64-bit register in the next generation of microprocessor chips holds the potential of calculating eight times as fast as an 8-bit register of a first generation microprocessor—all else being equal.
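A rough way to picture the eight-to-one figure is to add two 64-bit numbers using registers of different widths. The sketch below is a simplified model with a function name of our own: it counts one step per register-sized chunk and passes the carry from each chunk to the next, ignoring every other factor that affects real performance.

```python
def add_in_chunks(x, y, width_bits=8, total_bits=64):
    """Add two numbers one register-width chunk at a time, returning (sum, steps)."""
    mask = (1 << width_bits) - 1
    carry = 0
    result = 0
    steps = 0
    for shift in range(0, total_bits, width_bits):
        a = (x >> shift) & mask            # the chunk of x that fits in the register
        b = (y >> shift) & mask            # the matching chunk of y
        s = a + b + carry
        result |= (s & mask) << shift      # keep the bits that fit
        carry = s >> width_bits            # pass the overflow to the next chunk
        steps += 1
    return result, steps

x, y = 0x0123456789ABCDEF, 0x1111111111111111
print(add_in_chunks(x, y, width_bits=8)[1])   # 8 additions with 8-bit registers
print(add_in_chunks(x, y, width_bits=64)[1])  # 1 addition with a 64-bit register
```

Both calls produce the same sum; only the number of additions differs.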

Programs

A computer program is nothing more than a list of instructions. The computer goes through the instruction list of the program step by step, executing each one in turn. Each builds on the previous instructions to carry out a complex function. The program is essentially a recipe for a microprocessor or the step-by-step instructions in a how-to manual.

The challenge for the programmer is to figure out into which steps to break a complex job and to arrange those steps in the best possible order. It can be a big job. Although a program can be as simple as a single step (say, stop), a modern program or software package may comprise millions or tens of millions of steps. They are quite literally too complex for a single human being to understand, let alone write. They are joint efforts: not just the work of many people but the work of people and machines using development environments to divide up the work and take advantage of routines and libraries created by other teams. A modern software package is the result of years of work in putting together simple microprocessor instructions.

One of the most important concepts in the use of modern personal computers is multitasking, the ability to run multiple programs at the same time, shifting your focus from one to another. You can, for example, type a term paper on L. Frank Baum and the real meaning of the Wizard of Oz using your word processor while your MP3 program churns out a techno version of “Over the Rainbow” through your computer’s speakers. Today, you take that kind of thing for granted. But thinking about it, this doesn’t make sense in the context of computer programs being simple lists of instructions and your microprocessor executing the instructions, one by one, in order. How can a computer do two things at the same time?

The answer is easy. It cannot. Computers do, in fact, process instructions as a single list. Computers can, however, switch between lists of instructions. They can execute a series of instructions from one list, shift to another list for a time, and then shift back to the first list. You get the illusion that the computer is doing several things at the same time because it shifts between instruction lists very quickly, dozens of times a second. Just as the separate frames of an animated cartoon blur together into an illusion of continuous motion, the computer switches so fast you cannot perceive the changes.

Multitasking is not an ability of a microprocessor. Even when you’re doing six things at once on your computer, the microprocessor is still doing one thing at a time. It just runs all those instructions. The mediator of the multitasking is your operating system, basically a master program. It keeps track of every program (and subprogram) that’s running—including itself—and decides which gets a given moment of the microprocessor’s time for executing instructions.
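The switching between instruction lists can be sketched in a few lines. The example below assumes the simplest possible policy, in which each program gets a fixed number of instructions before the next one takes its turn; real operating systems use far more elaborate rules, and the function name, program names, and instruction labels here are invented for illustration.

```python
from collections import deque

def run_round_robin(programs, time_slice=2):
    """Interleave several instruction lists, a few instructions at a time."""
    queue = deque((name, list(instrs)) for name, instrs in programs.items())
    trace = []  # the single stream of instructions the processor actually sees
    while queue:
        name, remaining = queue.popleft()
        for instr in remaining[:time_slice]:
            trace.append((name, instr))
        remaining = remaining[time_slice:]
        if remaining:
            queue.append((name, remaining))  # unfinished programs rejoin the line
    return trace

programs = {
    "editor": ["e1", "e2", "e3"],
    "player": ["p1", "p2", "p3", "p4"],
}
for name, instr in run_round_robin(programs):
    print(name, instr)
```

The trace is still one list, executed one instruction at a time. Only the order reveals that two programs are sharing the processor.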

Interrupts

Give a computer a program, and it’s like a runaway freight train. Nothing can stop it. It keeps churning through instructions until it reaches the last one. That’s great if what you want is the answer, whenever it may arrive. But if you have a task that needs immediate attention—say a block of data has just arrived from the Internet—you don’t want to wait forever for one program to end before you can start another.

To add immediacy and interactivity to microprocessors, chip designers incorporate a feature called the interrupt. An interrupt is basically a signal to the microprocessor to stop what it is doing and turn its attention to something else. Intel microprocessors understand two kinds of interrupts: software and hardware.

A software interrupt is simply a special instruction in a program that’s controlling the microprocessor. Instead of adding, subtracting, or whatever, the software interrupt causes program execution to temporarily shift to another section of code in memory.

A hardware interrupt causes the same effect but is controlled by special signals outside of the normal data stream. The only problem is that the microprocessors recognize far fewer interrupts than would be useful—only two interrupt signal lines are provided. One of these is a special case, the Non-Maskable Interrupt. The other line is shared by all system interrupts. The support hardware of your computer multiplies the number of hardware interrupts so that all devices that need them have the ability to interrupt the microprocessor.
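In outline, handling an interrupt means checking for a pending signal between instructions, running the code that services it, and then picking up where the program left off. The following sketch is a loose model under that assumption; the function, the device name, and the idea of keying interrupts to a step number are all invented for illustration.

```python
def run_with_interrupts(program, interrupts, handler):
    """Run a program, diverting to a handler whenever an interrupt is pending.

    interrupts maps a step number to the name of the device that raises
    an interrupt just before that step executes.
    """
    log = []
    for step, instr in enumerate(program):
        if step in interrupts:
            # Set the program aside, service the device, then resume.
            log.extend(handler(interrupts[step]))
        log.append(instr)
    return log

def handler(device):
    """A stand-in for the section of code that services one device."""
    return [f"service {device}"]

print(run_with_interrupts(["i0", "i1", "i2"], {1: "network"}, handler))
# ['i0', 'service network', 'i1', 'i2']
```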

Clocked Logic

Microprocessors do not carry out instructions as soon as the instruction code signals reach the pins that connect the microprocessor to your computer’s circuitry. If chips did react immediately, they would quickly become confused. Electrical signals cannot change state instantly; they always go through a brief, though measurable, transition period—a period of indeterminate voltage level during which the signals would probably perplex a microprocessor into a crash. Moreover, all signals do not necessarily change at the same rate, so when some signals reach the right values, others may still be at odd values. As a result, a microprocessor must live through long periods of confusion during which its signals are at best meaningless, at worst dangerous.

To prevent the microprocessor from reacting to these invalid signals, the chip waits for an indication that it has a valid command to carry out. It waits until it gets a “Simon says” signal. In today’s computers, this indication is provided by the system clock. The clock sends out regular voltage pulses, the electronic equivalent of the ticking of a grandfather clock. The microprocessor checks the instructions given to it each time it receives a clock pulse, providing it is not already busy carrying out another instruction.
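The effect of the clock can be imitated by looking at a signal only at fixed intervals. In this sketch, which is a deliberately crude model, the value None marks a moment when the signal is still in transition, and the clock pulse is assumed to arrive in the last time slot of each period, after the signal has settled.

```python
def sample_on_clock(signal, clock_period):
    """Return the signal values seen at each clock pulse, ignoring everything between."""
    return [
        value
        for t, value in enumerate(signal)
        if t % clock_period == clock_period - 1  # the pulse ends each period
    ]

# None stands for an indeterminate voltage while the signal changes state.
signal = [None, None, 1, 1, None, None, None, 0, None, 1, 1, 1]
print(sample_on_clock(signal, clock_period=4))  # [1, 0, 1]
```

Because the chip looks only when the clock says so, the indeterminate moments never reach it, provided the clock is slow enough for every signal to settle.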

Early microprocessors were unable to carry out even one instruction every clock cycle. Vintage microprocessors may require as many as 100 discrete steps (and clock pulses) to carry out a single instruction. The number of cycles required to carry out instructions varies with the instruction and the microprocessor design. Some instructions take a few cycles, others dozens. Moreover, some microprocessors are more efficient than others in carrying out their instructions. The trend today is to minimize and equalize the number of clock cycles needed to carry out a typical instruction.

When you want to squeeze every last bit of performance from a computer, you can sometimes tinker with its timing settings. You can up the pace at which its circuits operate, thus making the system faster. This technique also forces circuits to operate at speeds higher than they were intended, thus compromising the reliability of the computer’s operations. Tinkerers don’t worry about such things, believing that most circuits have such a wide safety margin that a little boost will do no harm. The results of their work may delight them (they might eke 10 percent or more extra performance from a computer), but these results might also surprise them when the system operates erratically and shuts down randomly. This game is called overclocking because it forces the microprocessor in the computer to operate at a clock speed that’s over its ratings.

Overclocking also takes a more insidious form. Unscrupulous semiconductor dealers sometimes buy microprocessors (or memory chips or other speed-rated devices) and change their labels to reflect higher-speed potentials (for example, buying a 2.2GHz Pentium 4 and altering its markings to say 2.53GHz). A little white paint increases the market value of some chips by hundreds of dollars. It also creates a product that is likely to be operated out of its reliable range. Intel introduced internal chip serial numbers with the Pentium III to help prevent this form of fraud. From the unalterable serial number of the chip, the circuitry of a computer can figure out the factory-issue speed rating of the chip and automatically adjust itself to the proper speed.