Architectures and elements

Time is an important factor that should be taken into account in an industrial environment, and is key to the wide adoption of real-time control systems in industrial plants and workshops. Real-time control systems are defined as those systems in which the correctness of the system depends not only on the logical result of controls, but also on the time at which the results are produced. A real-time control system in industry requires both dedicated hardware and software to implement real-time algorithms. Many explanations of the architecture of real-time control systems use reference model terminologies; these seem unnecessary for understanding the architecture of real-time control systems. Advanced real-time control systems are based on real-time algorithms. This textbook will focus on advanced computer-based real-time control systems, which require the following hardware and software to operate real-time controls.

(1) Real-time control system hardware

Real-time hardware includes both microprocessor unit chipsets and programmable peripherals. Microprocessor units are necessary because they are the core of modern digital computers and perform the actual calculations; programmable peripheral interfaces are also necessary because they are important auxiliary hardware of modern digital computers and provide the means of receiving and communicating data.

(a) Microprocessor units

A microprocessor unit is a single semiconductor device, built from electronic integrated circuits, which performs the central processing functions of a computer. A microprocessor unit comprises a CPU (central processing unit), buffer memories, an interrupt control unit, input/output interface units and an internal bus system. Chapter 5 of this textbook discusses this topic in detail.

(b) Programmable peripheral devices (memory chips)

Programmable peripheral interfaces consist of several programmable integrated-circuit units attached to microprocessor units to allow the microprocessor to perform interrupt control and timer control, and also to control dynamic memory volumes and input/output ports. In industrial controls, controllers need a large quantity of information about the controlled systems to perform their function. It is important that real-time controllers can request information about the controlled systems dynamically, as a resource. To store this information dynamically, such microprocessors must expand their memories using dedicated peripherals such as direct memory access (DMA), non-volatile memory (NVM), etc. Chapter 6 of this textbook discusses this topic in detail.

(c) Industrial computers and motherboards

Nowadays, most industrial computers are able to perform real-time controls because they have the necessary microprocessor units and peripherals. An industrial motherboard is a single-board computer, normally of a single type. Both industrial computers and motherboards will be explained in Chapter 9 of this textbook.

(d) Real-time controllers

Some controllers are dedicated to real-time control. The PLC (programmable logic controller) is a typical example. Other examples of real-time controllers are CNC (computer numerical control) controllers, PID (proportional-integral-derivative) controllers, servo controllers and batch controllers. Most modern SCADA (supervisory control and data acquisition) control systems also contain real-time controllers. In this textbook, Part 4 (Chapters 7 and 8) discusses most types of real-time controllers and robots.

(2) Real-time control system software

As mentioned earlier, a real-time control system should be a computerized system that supports real-time control algorithms in its hardware and software. There seems to be no single definition of a real-time algorithm, or of what a real-time algorithm includes. Many have been developed for particular control applications; thus different real-time algorithms are adapted to different systems.

In a real-time control system, the operating system is responsible for performing these algorithms. An operating system that can handle real-time algorithms is known as a real-time operating system (RTOS). Although Chapter 16 of this textbook is devoted to this topic, a brief explanation is provided below.

(a) Multitask scheduling

There is a task dispatcher and a task scheduler in an RTOS, both of which handle multitask scheduling. The dispatcher is responsible for the context switch from the current task to a new task. Once a new task arrives (by means of the interrupt handling mechanism), the context switch function first saves the status and parameters of the current task into memory and then terminates; control then passes to the scheduler, which selects a task from the system task queue. The selected task is then loaded and run by the system CPU.
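The division of labour between dispatcher and scheduler can be sketched as a small simulation. This is only an illustration under stated assumptions: the names `Task`, `scheduler` and `dispatcher` are hypothetical, the scheduler uses a plain first-in-first-out rule, and the pre-empted task is placed back on the task queue, which the text above does not specify.

```python
from collections import deque


class Task:
    def __init__(self, name):
        self.name = name
        self.saved_context = None  # stands in for saved status and parameters


def scheduler(task_queue):
    """Select the next task from the queue (FIFO, purely for illustration)."""
    return task_queue.popleft()


def dispatcher(current, context, task_queue):
    """Save the current task's context, then hand over to the scheduler."""
    current.saved_context = context  # context switch: save state to memory
    task_queue.append(current)       # assumption: the old task is requeued
    return scheduler(task_queue)     # the scheduler picks the task to run next


task_queue = deque([Task("B"), Task("C")])
running = Task("A")
running = dispatcher(running, {"pc": 0x40}, task_queue)
print(running.name)  # B
```

A real RTOS performs the save and restore in a few machine instructions on the CPU registers; the dictionary used here merely marks where that state would be kept.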

The scheduler has the job of selecting the task from the system task queue. Two factors determine the difference between an RTOS and a non-RTOS: the first is the algorithm which selects this task from the system queue, and the second is the algorithm that deals with multitasking concurrency.

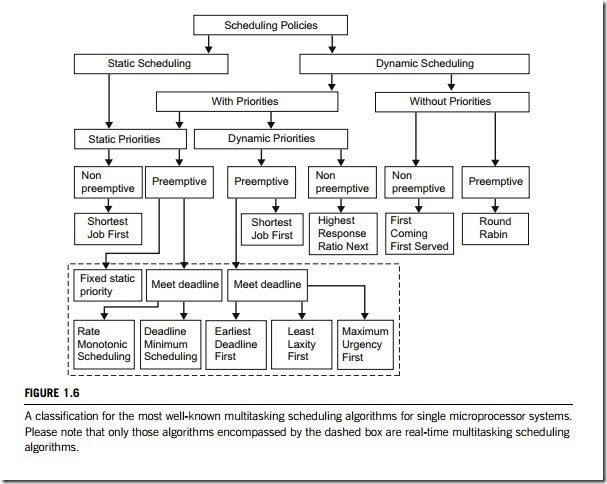

There are two types of algorithms for task selection: static and dynamic scheduling. Static scheduling requires that complete information about the system task queue is available before the scheduler starts working. This includes the number of tasks and, for each task, its deadline, its priority, whether it is periodic or aperiodic, etc. Consequently, a static schedule must always be planned while the system is off-line. The advantage of off-line scheduling is its determinism, and its disadvantage is its inflexibility. In contrast, dynamic scheduling can be planned when the system is either off-line or on-line, and can change its plan as the system status changes. The advantage of dynamic scheduling is its flexibility, and its disadvantage is its poor determinism.

The term “concurrency” in an RTOS means several tasks executing in parallel at the same time. Concurrency is supported in a broad range of microprocessor systems, from tightly coupled and largely synchronous parallel systems to loosely coupled and largely asynchronous distributed systems. The chief difficulty of implementing concurrency is race conditions over shared system resources, which can lead to problems such as deadlock and starvation. A mutual exclusion algorithm is generally used to solve the problems arising from race conditions.
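As a minimal sketch of mutual exclusion, the following uses Python threads to stand in for RTOS tasks and a `threading.Lock` to stand in for whatever mutual exclusion primitive the RTOS provides. The names `shared`, `worker` and the iteration counts are hypothetical.

```python
import threading

shared = {"count": 0}      # the shared resource
lock = threading.Lock()    # mutual exclusion primitive guarding it


def worker(iterations):
    for _ in range(iterations):
        with lock:         # critical section: only one task at a time
            shared["count"] += 1


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(shared["count"])  # 40000
```

Without the lock, the read-modify-write on `shared["count"]` could interleave between tasks and lose updates, which is the race condition described above.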

An RTOS must guarantee that all deadlines are met. If any deadline is not met, the system suffers overload. Two kinds of algorithm are used to meet deadlines in an RTOS: pre-emptive-based algorithms and priority-based algorithms.

At any specific time, tasks can be grouped into two types: those waiting for input or output resources such as memory and disks (called I/O bound tasks), and those fully utilizing the CPU (CPU bound tasks). In a non-RTOS, tasks would often poll, or busy-wait, while waiting for requested input and output resources; such I/O bound tasks keep complete control of the CPU even though they are doing no useful work. In an RTOS, with the implementation of interrupts and pre-emptive multitasking, these I/O bound tasks can be blocked, or put on hold, pending the arrival of the necessary data, allowing other tasks to utilize the CPU. As the arrival of the requested data generates an interrupt, blocked tasks can be guaranteed a timely return to execution.

The period for which a task is allowed to run in a pre-emptive multitasking system is generally called a time slice. The scheduler is run once for each time slice to choose the next task to run. If the time slice is too short, the scheduler will consume too much processing time, but if it is too long, tasks may not be able to respond to external events quickly enough. An interrupt is scheduled to allow the operating system kernel to switch between tasks when their time slices expire, allowing the microprocessor’s time to be shared effectively between a number of tasks and giving the illusion that it is dealing with these tasks simultaneously. Pre-emptive multitasking allows the computer system to guarantee each task a regular slice of operating time more reliably. It also allows the system to deal rapidly with external events such as incoming data, which might require the immediate attention of one task or another. Pre-emptive multitasking relies on an interrupt mechanism, which suspends the currently executing task and invokes the scheduler to determine which task should execute next. Therefore all tasks will get some amount of CPU time over any given period.
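Time slicing can be illustrated with a small round-robin simulation. This is a sketch only: the function name `round_robin`, the task names and the burst times are hypothetical, and the cost of the scheduler itself is ignored.

```python
from collections import deque


def round_robin(burst_times, time_slice):
    """Return the sequence of (task, time run) slices under round-robin."""
    ready = deque(burst_times.items())
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(time_slice, remaining)   # run until the slice expires
        timeline.append((name, run))
        if remaining > run:                # unfinished: back of the queue
            ready.append((name, remaining - run))
    return timeline


timeline = round_robin({"A": 5, "B": 2, "C": 4}, time_slice=2)
print(timeline)
# [('A', 2), ('B', 2), ('C', 2), ('A', 2), ('C', 2), ('A', 1)]
```

Halving `time_slice` doubles the number of scheduler invocations for the same work, which is the overhead trade-off noted above.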

In an RTOS, a priority can be assigned to a task either pre-run-time or on the basis of its deadline.

In the approach that uses pre-run-time-based priority, each task has a fixed, static priority which is computed pre-run-time. The tasks in the CPU task queue are then executed in the order determined by their priorities. If all tasks are periodic, a simple priority assignment can be done according to the rule: the shorter the period, the higher the priority.
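The shorter-period rule (commonly known as rate-monotonic assignment) can be sketched in a few lines. The function name, the task names and the periods are hypothetical, and the convention that 0 is the highest priority is an assumption made for this example.

```python
def rate_monotonic_priorities(periods):
    """Map task name -> priority rank (0 = highest); shorter period first."""
    ordered = sorted(periods, key=lambda name: periods[name])
    return {name: rank for rank, name in enumerate(ordered)}


# Periods in milliseconds (illustrative values only).
priorities = rate_monotonic_priorities({"logger": 100, "sensor": 10, "control": 20})
print(priorities)  # {'sensor': 0, 'control': 1, 'logger': 2}
```

Because the periods are known before the system starts, this table can be computed once off-line and never changes at run-time.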

There are some dynamic scheduling algorithms which assign priorities based on the tasks’ respective deadlines. The simplest of this type is the earliest deadline first rule, where the task with the earliest (shortest) deadline has the highest priority. Thus, the resulting priorities of tasks are naturally dynamic. This algorithm can be used for both dynamic and static scheduling. However, absolute deadlines are normally computed at run-time, and hence the algorithm is presented as dynamic. If all tasks are periodic and pre-emptive, this algorithm is optimal. A disadvantage of this algorithm is that the execution time is not taken into account in the assignment of priorities.
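A minimal sketch of earliest deadline first selection follows, using a heap keyed on the absolute deadline. The function name `edf_order`, the task names and the deadline values are hypothetical.

```python
import heapq


def edf_order(tasks):
    """tasks: list of (name, absolute_deadline). Return names in EDF order."""
    heap = [(deadline, name) for name, deadline in tasks]
    heapq.heapify(heap)                # smallest deadline at the top
    order = []
    while heap:
        _, name = heapq.heappop(heap)  # earliest deadline runs first
        order.append(name)
    return order


order = edf_order([("valve", 30), ("alarm", 5), ("report", 120)])
print(order)  # ['alarm', 'valve', 'report']
```

In a running system new tasks would be pushed onto the heap as they arrive, so the ordering is re-evaluated at run-time rather than fixed in advance.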

The third approach to assigning priorities to tasks is the minimum laxity first algorithm (also known as least laxity first). This algorithm is for scheduling pre-emptive tasks on single-microprocessor systems. At any given time, the laxity (or slack) of a task with a given deadline is equal to:

Laxity = deadline - remaining execution time

This algorithm assigns priorities to tasks based on their laxities: the smaller the laxity, the higher the priority. It needs to know the current execution time; the laxity is essentially a measure of the flexibility available for scheduling a task. Thus, this algorithm takes into consideration the execution time of a task, and this is its advantage over the earliest deadline first rule.
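The laxity formula and the smallest-laxity rule can be checked with a short worked example. The function names and task values are hypothetical, and deadlines are assumed to be measured from the current instant.

```python
def laxity(deadline, remaining_execution_time):
    """Laxity (slack) as defined above: deadline minus remaining execution time."""
    return deadline - remaining_execution_time


def pick_smallest_laxity(tasks):
    """tasks: dict name -> (deadline, remaining). Return the most urgent task."""
    return min(tasks, key=lambda name: laxity(*tasks[name]))


tasks = {"A": (20, 5), "B": (12, 10), "C": (8, 3)}
print({name: laxity(*values) for name, values in tasks.items()})
# {'A': 15, 'B': 2, 'C': 5}
print(pick_smallest_laxity(tasks))  # B
```

Task C has the earliest deadline, so a deadline-only rule would run it first; the laxity rule instead picks B, which has only 2 time units of slack because most of its time budget is still needed for execution.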

Every commercial RTOS employs a priority-based pre-emptive scheduler. This is despite the fact that real-time systems vary in their requirements and real-time scheduling is not always needed. Multitasking and meeting deadlines is certainly not a one-size-fits-all problem. Figure 1.6 presents a classification for the most well-known dynamic scheduling algorithms for single microprocessor systems.

(b) Intertask communications

An RTOS requires intertask communication, as system resources such as data, interfaces, hardware and memories must be shared by multiple tasks. When more than one task concurrently requests the same resource, synchronization is required to coordinate these requests. Synchronization can be carried out in an RTOS by using various message queue program objects.

Two or more tasks can exchange information via their common message queue. A task can send (or put) a data frame called a message into this queue; the message is held in the queue until the intended task retrieves it. In an RTOS, the message queue can be of the mailbox, pipe or shared memory type.
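The exchange of messages through a common queue can be sketched with Python's standard `queue.Queue`, with threads standing in for RTOS tasks. The names `mailbox`, `producer` and `consumer`, the sample readings, and the use of `None` as an end-of-stream marker are all assumptions of this example.

```python
import queue
import threading

mailbox = queue.Queue()   # the common message queue
received = []


def producer():
    for reading in (21.5, 22.0, 22.4):
        mailbox.put(reading)      # send (put) a message
    mailbox.put(None)             # sentinel: no more messages


def consumer():
    while True:
        message = mailbox.get()   # blocks until a message is available
        if message is None:
            break
        received.append(message)


threads = [threading.Thread(target=consumer), threading.Thread(target=producer)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(received)  # [21.5, 22.0, 22.4]
```

The consumer is started first on purpose: it simply blocks on the empty queue until the producer has put something into it.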

The system common message queue is also a critical section which cannot be accessed by more than one task at a time. Mutual exclusion logic is used to synchronize tasks requesting access to this queue. All types of system message queue use mutual exclusion mechanisms, including busy-waiting, semaphore and blocked task queue protocols, to synchronize access requests to the queue.

(c) Interrupt handling

It can be said that the interrupt handler is a key function of an RTOS. Usually, the RTOS allocates this interrupt handling responsibility to its kernel (or nucleus).

General-purpose operating systems do not usually allow user programs to disable interrupts, because the user program could then control the CPU for as long as it wished. Modern CPUs make the interrupt disable control bit (or instruction) inaccessible in user mode, in order to allow operating systems to prevent user tasks from doing this. Many embedded systems and RTOSs allow the user program itself to run in kernel mode for greater system call efficiency, and also to permit the application to have greater control of the operating environment without requiring RTOS intervention.

A typical way for the RTOS to handle an interrupt is to acknowledge or disable the interrupt, so that it does not occur again when the interrupt handler returns. The interrupt handler then queues work to be done at a lower priority level. When the next task needs to be started, the interrupt handler often unblocks a driver task by releasing a semaphore or sending a message to the system common message queue.
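The pattern of a short interrupt handler that defers work to a driver task can be sketched as follows. Python has no hardware interrupts, so `interrupt_handler` is simply called from the main thread; that substitution, and the names `work_ready`, `pending`, `handled` and `driver_task`, are assumptions of this example.

```python
import threading
from collections import deque

work_ready = threading.Semaphore(0)  # starts locked: no work pending
pending = deque()                    # work queued by the handler
handled = []


def interrupt_handler(data):
    """Keep the handler short: queue the work, then release the semaphore."""
    pending.append(data)
    work_ready.release()             # unblocks the driver task


def driver_task(expected):
    """Lower-priority task that does the real processing."""
    for _ in range(expected):
        work_ready.acquire()         # blocked until the handler signals
        handled.append(pending.popleft().upper())


driver = threading.Thread(target=driver_task, args=(2,))
driver.start()
interrupt_handler("rx")              # simulated interrupts
interrupt_handler("tx")
driver.join()

print(handled)  # ['RX', 'TX']
```

The handler appends to the queue before releasing the semaphore, so each successful `acquire` is guaranteed to find an item waiting.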

(d) Resource sharing

In a real-time system, system resources are shared among multiple tasks, and so they are called shared resources. Thus, algorithms must exist for the RTOS to manage the sharing of data and hardware resources among multiple tasks, because it is dangerous for two or more tasks to access the same specific data or hardware resource simultaneously. There are three common approaches to resolving this problem: temporarily masking (or disabling) interrupts; binary semaphores; and message passing.

In temporarily masking (or disabling) interrupts, if the task can run in system mode and can mask (disable) interrupts, this is often the best (lowest overhead) solution to preventing simultaneous access to a shared resource. While interrupts are masked, the current task has exclusive use of the CPU; no other task or interrupt can take control, so the shared resource is effectively protected. When the task releases its shared resources, it must unmask interrupts. Any pending interrupts will then execute. Temporarily masking interrupts should only be done when the longest path through the shared resource is shorter than the desired maximum interrupt latency, or else this method will increase the system’s maximum interrupt latency. Typically this method of protection is used only when the shared resource is just a few source code lines long and contains no loops. This method is ideal for protecting hardware bitmapped registers when the bits are controlled by different tasks.

When access to the resource involves lengthy looping, the RTOS must resort to mechanisms such as semaphores and intertask message-passing. Such mechanisms involve system calls, and usually invoke the dispatcher code on exit, so they can take many hundreds of CPU instructions to execute, whereas masking interrupts may take very few instructions on some microprocessors. But for longer critical sections there may be no choice; interrupts cannot be masked for long periods without increasing the system’s interrupt latency.

At run-time, a binary semaphore is either locked or unlocked. When it is locked, a queue of tasks can wait for the semaphore. Typically a task can set a timeout on its wait for a semaphore. The problems with semaphores are well known: priority inversion and deadlock. In priority inversion, a high-priority task waits because a low-priority task holds a semaphore. A typical solution for this problem is to have the task that holds the semaphore run at (inherit) the priority of the highest-priority waiting task.
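Priority inheritance can be sketched as a small bookkeeping model rather than a real kernel object. The class names `Task` and `InheritanceSemaphore` are hypothetical, and the convention that a larger number means a higher priority is an assumption of this example.

```python
class Task:
    def __init__(self, name, priority):
        self.name = name
        self.base_priority = priority
        self.priority = priority  # assumption: larger number = higher priority


class InheritanceSemaphore:
    """Binary semaphore model whose owner inherits the top waiter's priority."""

    def __init__(self):
        self.owner = None
        self.waiters = []

    def acquire(self, task):
        if self.owner is None:
            self.owner = task
            return True
        self.waiters.append(task)
        # Boost the owner so it cannot be pre-empted by mid-priority tasks.
        self.owner.priority = max(self.owner.priority, task.priority)
        return False

    def release(self):
        self.owner.priority = self.owner.base_priority  # drop the boost
        self.waiters.sort(key=lambda t: t.priority, reverse=True)
        self.owner = self.waiters.pop(0) if self.waiters else None


low, high = Task("low", 1), Task("high", 9)
sem = InheritanceSemaphore()
sem.acquire(low)          # low-priority task takes the semaphore
sem.acquire(high)         # high-priority task must wait
print(low.priority)       # 9
sem.release()
print(low.priority, sem.owner.name)  # 1 high
```

While boosted, the low-priority task finishes its critical section ahead of any medium-priority work, which shortens the time the high-priority task spends waiting.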

In a deadlock, two or more tasks lock a number of binary semaphores and then wait forever (no timeout) for other binary semaphores, creating a cyclic dependency graph. The simplest deadlock scenario occurs when two tasks lock two semaphores in lockstep, but in the opposite order. Deadlock is usually prevented by careful design, or by having floored semaphores. A floored semaphore can free a deadlock by passing the control of the semaphore to a higher (or the highest) priority task predefined in an RTOS when some conditions for deadlock are satisfied.
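One form of the careful design mentioned above is to make every task acquire its semaphores in the same fixed order, so that the opposite-order scenario cannot arise. The sketch below uses `threading.Lock` objects in place of binary semaphores; the names `sem_a`, `sem_b`, `LOCK_ORDER` and `use_both` are hypothetical.

```python
import threading

sem_a = threading.Lock()
sem_b = threading.Lock()
LOCK_ORDER = [sem_a, sem_b]   # every task must lock in this order
completed = []


def use_both(name):
    for lock in LOCK_ORDER:                 # always A first, then B
        lock.acquire()
    try:
        completed.append(name)              # work needing both resources
    finally:
        for lock in reversed(LOCK_ORDER):   # release in reverse order
            lock.release()


threads = [threading.Thread(target=use_both, args=(n,)) for n in ("task1", "task2")]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(completed))  # ['task1', 'task2']
```

With a single global order no cycle can form in the dependency graph, because a task holding `sem_b` never waits for `sem_a`.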

The other approach to resource sharing is message-passing. In this paradigm, the resource is managed directly by only one task; when another task wants to interrogate or manipulate the resource, it sends a message, via the system message queue, to the managing task. This paradigm suffers from problems similar to those with binary semaphores: priority inversion occurs when a task is working on a low-priority message, and ignores a higher-priority message or a message originating indirectly from a high-priority task in its in-box. Protocol deadlocks occur when two or more tasks wait for each other to send response messages.
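A minimal sketch of the message-passing paradigm follows: one manager task owns the resource, and other tasks send it requests and wait for a reply. The message layout `(operation, value, reply_queue)` and the names `requests`, `manager` and `client_add` are assumptions of this example.

```python
import queue
import threading

requests = queue.Queue()   # in-box of the managing task


def manager():
    register = 0           # the resource: touched only by this task
    while True:
        operation, value, reply = requests.get()
        if operation == "stop":
            break
        if operation == "add":
            register += value
        reply.put(register)            # send the response message


def client_add(value):
    reply = queue.Queue(maxsize=1)     # private reply queue for this request
    requests.put(("add", value, reply))
    return reply.get()                 # wait for the manager's response


thread = threading.Thread(target=manager)
thread.start()
first = client_add(5)
second = client_add(7)
requests.put(("stop", None, None))
thread.join()

print(first, second)  # 5 12
```

Because only the manager ever touches `register`, no lock is needed around it; the protocol deadlock described above would arise if the manager itself blocked waiting on a reply from one of its clients.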