Creating Digital Electronic Circuits

In the previous chapters, I introduced you to the basic Boolean arithmetic theory behind decoding and designing combinatorial circuits, in which binary data is manipulated by simple operations to produce a desired output. Before going on and showing you how these basic operations are extended to create complicated functions and products, I want to take a step back and look at basic electrical theory and semiconductor operation and how they are applied to digital electronics. While digital electronics works with ‘‘ones and zeros’’, it is still built from the basic electronic devices that are outlined at the beginning of this chapter. It is impossible to work successfully with digital electronics without understanding basic electrical theory and how simple electronic devices work.

For many people, this chapter will be a review, but I still urge you to read through this chapter and answer the quiz at the end of it. While you may be familiar with electrical rules and device operation, you may not be so comfortable understanding how they are used to create digital electronics.

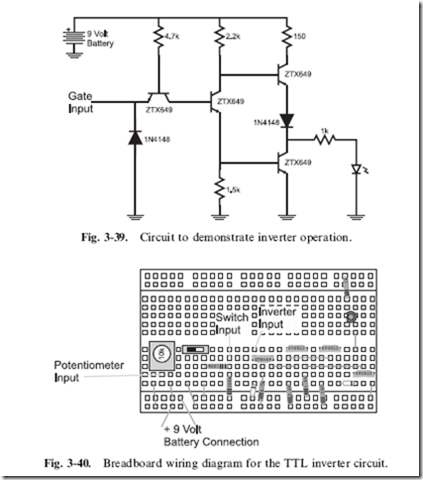

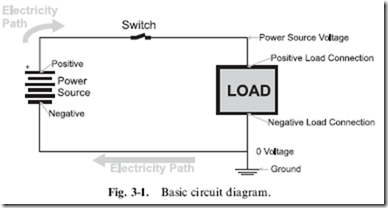

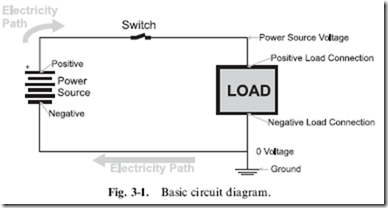

The most basic rule of electricity is that it can only move in a ‘‘closed circuit’’ (Fig. 3-1) in which a ‘‘power source’’ passes electricity to a load and then pulls it back from the load. The power source has two connections marked ‘‘+’’ (‘‘positive’’) and ‘‘-’’ (‘‘negative’’) to indicate its ‘‘polarity’’. The power source symbol consists of a number of pairs of parallel lines, with the longer line in each pair representing the positive connection. The black lines connecting the power source to the load represent wires. When basic electricity is presented, this ‘‘load’’ is most often a lightbulb, because it turns on when electricity passes through it. Besides a lightbulb, the load can be an electric motor, a heater element, a digital electronic chip or any combination of these devices.

In the ‘‘electrical circuit’’ (or ‘‘schematic diagram’’) shown in Fig. 3-1, you can see that I have included a switch, which will open or close the circuit. When the switch is closed, electricity will flow from the power source, through the load and back. If the switch is open or the wires connecting the power source to the load are broken, electricity will not flow through the load.

As you are probably aware, electricity consists of electrons moving from the power source through the wires to the load and back to the power source. There are two properties of electricity that you should be aware of, and they are analogous to two properties of water flowing through a pipe. Voltage is the term given to the pressure placed on the electrons to move, and current is the number of electrons passing a point in a given amount of time.

In the early days of electrical experimentation, it was Benjamin Franklin who postulated that electricity was a fluid, similar to water. As part of this supposition, he suggested that electrical current flowed from the positive power supply connection to the negative. Having suggested that electrical current flowed from positive to negative, he drew electrical wiring diagrams or schematics (like the one in Fig. 3-1) with the electrical energy at the positive power supply connection being at the highest state. As the electrical current ‘‘flowed down’’ the page to the negative connection of the power supply, the energy of the electricity decreased. This method of drawing electrical circuits is clever and intuitive, and it caught on because it matched the way people pictured electricity moving through a circuit.

Unfortunately, Franklin’s suggestion that electrical current flowed from the positive to the negative connection of the power source through the load was wrong. As we now know, the electrons that make up electricity flow from the negative to the positive connection of the power supply. This discovery was made about 150 years after his kite-in-a-lightning-storm experiment, and by then the notion that electrical current flowed from positive to negative was so widely accepted that it was never really challenged. For this reason, you should keep in mind that ‘‘electrical current flow’’ takes place in the opposite direction to ‘‘electron flow’’ in electrical circuits. This point trips up many people new to electronics, so I want to state emphatically that the direction of current flow follows Franklin’s convention.

Looking at the bottom right hand corner of Fig. 3-1, you will see a funny set of lines attached to the wiring lines – this is the circuit’s ‘‘ground’’ connection. The circuit ground is another invention of Benjamin Franklin. If there is ever a large amount of electricity that finds its way into the circuit, it will have an ‘‘escape route’’ to prevent damage to the circuit’s components or hurting anybody working with the circuit. The ground connection was literally a metal spike driven into the ground and connected to a home or barn’s lightning rod. In modern structures, the ‘‘ground’’ is a connection to the metal pipe bringing in water.

Another term commonly used for a circuit’s wire connections or wiring lines is ‘‘nets’’. The term originated when circuit analysis was first done on complex networks of wiring, and it is used to describe the individual wiring connections in a circuit. In this book, I will use this term interchangeably with ‘‘wiring’’ and ‘‘lines’’.

Like power supplies, many load devices also have connections that are marked positive (‘‘+’’) and negative (‘‘-’’). When discussing the positive and negative connections of a basic two-wire load device, I like to use the terms anode and cathode for the positive and negative connections of the load, respectively. The load’s anode must always be connected to the positive terminal of the power supply and the load’s cathode must always be connected to the negative terminal of the power supply. Reversing these connections may cause the device not to work, or even to literally ‘‘burn out’’. To keep the terms anode and cathode straight, I remember that a ‘‘cathode ray tube’’ (i.e. the picture tube in a traditional TV set) fires electrons, which are negative, at a phosphor screen.

More complex load devices, like logic chips, also have positive and negative connections, but these connections are normally called Vcc or Vdd for the positive connection and Gnd or Vss for the negative (ground) connection.

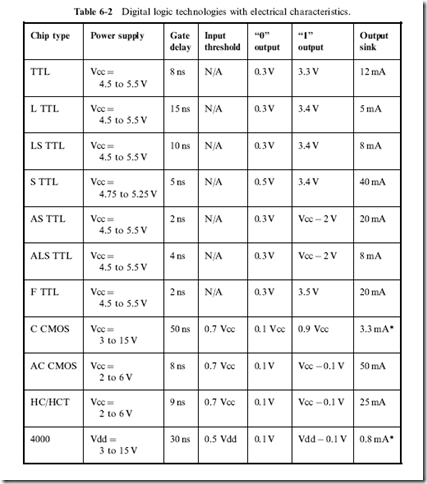

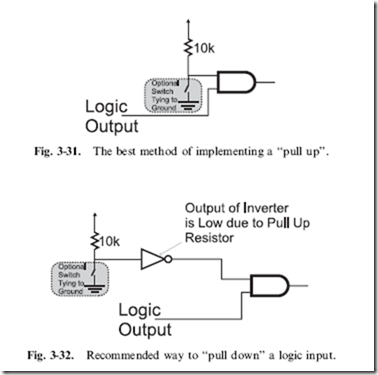

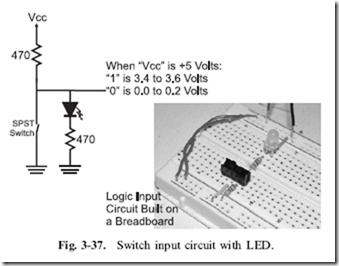

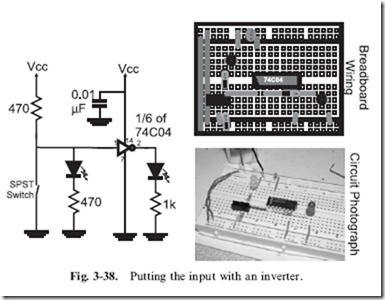

When working with most basic digital electronic circuits, the binary value ‘‘1’’ is represented by a high, positive voltage (usually close to the voltage applied to the Vcc or Vdd pin of the chip). The binary value ‘‘0’’ is represented by a low voltage (very close to the ground voltage level of the chip). This convention is intuitive and easy to remember: a ‘‘1’’ input is the same as connecting an input to the power supply, and a ‘‘0’’ input is the same as connecting an input to ground (resulting in ‘‘0’’ volts). Similarly for outputs, when a ‘‘1’’ is output, you can think of the chip as being able to turn on a light. These conventions are true for virtually all basic electronic logic technologies; when you get into some advanced, very high-speed logic, you may find that chips are designed with different operating conditions.

To simplify wiring diagrams, you will often see the positive and negative power connections drawn as terminal symbols. This avoids the additional complexity of power and ground lines running across the page and getting confused with the circuit’s ‘‘signal’’ lines.

When you are wondering how to connect an electronic device to its power supply, you can use Table 3-1 as a quick reference.

Table 3-1 Power wiring reference.

| Positive (‘‘+’’) connection | Negative (‘‘-’’) connection | Comments |
|---|---|---|
| Red wire | Black wire | Wires connected to and between devices |
| Anode | Cathode | Diodes and capacitors |
| Vcc | Gnd | TTL |
| Vdd | Vss | CMOS |

Basic Electronic Laws

Before starting to build your own digital electronics circuits, you should make sure that you are very familiar with the basic direct current electricity laws that govern how electricity flows through them. Don’t worry if you have not yet been exposed to any direct current electrical theory; it’s actually pretty simple, and in the introduction to this chapter, I gave you a quick rundown of how direct current circuits operate. I’m sure you were able to get through that without too many problems.

To be clear, direct current (also known as ‘‘DC’’) consists of electricity flowing in a single direction without any changes. Alternating current (‘‘AC’’) continuously changes from positive to negative (as shown in Fig. 3-2). AC is primarily used for high-power circuitry and not for any kind of digital electronics, except as something that digital electronics controls. Digital electronics is powered by direct current, which, unlike AC, has a fixed voltage that does not change level or polarity.

As I indicated in the introduction, there are two components to electricity: voltage is the ‘‘pressure’’ applied to the electrons, and current is the number of electrons that flow past a point in a set amount of time. I use the terms ‘‘pressure’’ and ‘‘flow’’ to help you visualize electricity moving in a wire as being the same as water flowing through a pipe. A water/pipe analogy can help you visualize how electricity moves and changes according to the conditions it is subjected to.

It should be obvious that the more pressure you apply to water in a pipe, the more water will pass through it. You can demonstrate this with a garden hose and a tap. By partially closing the tap, you restrict the flow of the water coming from it: the stream will not go very far from the end of the hose and very little water will flow out. When you completely open the tap, the water will spray out considerably further and a lot more water will pass out the end of the hose. Instead of saying that you are closing the tap, why don’t you think of the closing tap as resisting the flow of water through the pipe and into the hose? This is exactly analogous to the load in a circuit converting electrical energy into something else. Electricity coming out of the load will be at a lower pressure (or voltage) than the electricity going into the load, and the load’s resistance limits how much current can flow through the whole circuit.

When you visualized the pipe/tap/hose analogy, you probably assumed that all the resistance in the circuit was provided by the tap, and that the pipe and the hose did not impede the water’s flow in any way. This is also how we model wires in an electrical circuit: the wires do not cause a drop in voltage and do not restrict the amount of current that is flowing in them. If you think about it for a moment, you will probably realize that this assumption means that the wires are ‘‘superconductors’’; any amount of electricity at any voltage could be carried in the wires without any loss.

The wires that you use are certainly not superconductors, but the assumption that the wires do not impede the flow of electricity is a good one as their resistance in most circuits is usually negligible. By assuming that the wires are superconductors, you can apply some simple rules to understand the behavior of electricity in a circuit.

Going back to the original schematic diagram in this chapter (see Fig. 3-1), we can relate it to the pipe/tap/hose example of this section. The circuit’s power supply is analogous to the pipe supplying water to the tap (which itself is analogous to the electrical circuit’s load). The hose provides the same function as the wires bringing the electrical current back to the power supply.

In the pipe/tap/hose example, you should be able to visualize that the amount of water coming through the hose is dependent on how much the tap impedes the water flow from the pipe. It should be obvious that the less the tap impedes the water flow, the more water will come out the hose. Exactly the same thing happens in an electrical circuit; the ‘‘load’’ will impede or ‘‘resist’’ the flow of electricity through it and, in the process, take energy from the electricity to do something with it.

The most basic load that can be present in a circuit is known as the ‘‘resistor’’ (Fig. 3-3), which provides a specified amount of resistance, measured in ‘‘ohms’’, to electricity. The resistor’s ‘‘schematic symbol’’ is the jagged line you will see in various schematic diagrams in this book and in other sources. A schematic symbol is the graphic representation of a component and can be used alongside the graphic symbols for gates in a schematic diagram.

In traditional resistors, the amount of resistance is specified by a number of colored bands that are painted on its sides – the values specified by these bands are calculated using the formula below and the values for each of the colors listed in Table 3-2.
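The color-band arithmetic can be sketched in a few lines of Python. This is a minimal sketch assuming the common four-band layout (two significant-digit bands, then a power-of-ten multiplier band, then a tolerance band), with digit and tolerance values taken from Table 3-2; the function name `decode_four_band` and its lookup tables are illustrative only.

```python
# Digit values from Table 3-2 (black = 0 ... white = 9).
DIGITS = {
    "black": 0, "brown": 1, "red": 2, "orange": 3, "yellow": 4,
    "green": 5, "blue": 6, "violet": 7, "gray": 8, "white": 9,
}

# Tolerance values (percent) from Table 3-2; colors marked N/A are omitted.
TOLERANCES = {
    "brown": 1.0, "red": 2.0, "green": 0.5, "blue": 0.25,
    "violet": 0.1, "gray": 0.05, "gold": 5.0, "silver": 10.0,
}


def decode_four_band(band1, band2, multiplier, tolerance):
    """Return (resistance_in_ohms, tolerance_percent) for a four-band resistor.

    Assumed layout: two significant digits, a power-of-ten multiplier and a
    tolerance band. Gold and silver have no digit value in Table 3-2, so this
    sketch only accepts them as tolerance bands.
    """
    significant = 10 * DIGITS[band1.lower()] + DIGITS[band2.lower()]
    ohms = significant * 10 ** DIGITS[multiplier.lower()]
    return ohms, TOLERANCES[tolerance.lower()]


# Brown, black, orange, gold: 10 x 10^3 = 10,000 ohms, +/-5%
print(decode_four_band("brown", "black", "orange", "gold"))
```

Keeping the color values in dictionaries mirrors the table directly, so checking the code against Table 3-2 is a line-by-line comparison.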

In the introduction to the chapter, I stated that power supplies provide electrons with a specific ‘‘pressure’’ called voltage. Knowing the voltage applied

Table 3-2 Resistor color code values.

|

Color

|

Band color value

|

Tolerance

|

|

Black

|

0

|

N/A

|

|

Brown

|

1

|

1%

|

|

Red

|

2

|

2%

|

|

Orange

|

3

|

N/A

|

|

Yellow

|

4

|

N/A

|

|

Green

|

5

|

0.5%

|

|

Blue

|

6

|

0.25%

|

|

Violet

|

7

|

0.1%

|

|

Gray

|

8

|

0.05%

|

|

White

|

9

|

N/A

|

|

Gold

|

N/A

|

5%

|

|

Silver

|

N/A

|

10%

|

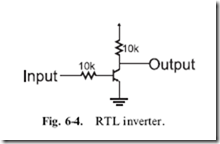

to a load (or resistor), you can calculate the electrical current using Ohm’s law which states:

The voltage applied to a load is equal to the product of its resistance and the current passing through it.

This can be expressed mathematically as:

$$V = i \times R$$

where ‘‘V’’ is voltage, ‘‘R’’ is resistance and ‘‘i’’ is current. The letter ‘‘i’’ is used to represent current instead of the more obvious ‘‘C’’ because that character was already used for specifying capacitance, as I will explain below. Voltage is measured in ‘‘volts’’, resistance in ‘‘ohms’’ and current in ‘‘amperes’’. For the work done in this book, you can assume that ohms have the units of volts per ampere and are given the symbol Ω; you can look up how these units are derived, but for now just take them as I’ve presented them here. With a bit of basic algebra, once you know two of the values used in Ohm’s law, you can calculate the third.
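The ‘‘know two, find the third’’ rearrangement of Ohm’s law can be written as a small helper. This is a hedged sketch: the function name `ohms_law` and its keyword arguments are hypothetical, and values are assumed to be in base units (volts, amperes, ohms).

```python
def ohms_law(v=None, i=None, r=None):
    """Given exactly two of voltage (V), current (A) and resistance (ohms),
    return the missing one using V = i x R."""
    if sum(x is not None for x in (v, i, r)) != 2:
        raise ValueError("supply exactly two of v, i and r")
    if v is None:
        return i * r   # V = i x R
    if i is None:
        return v / r   # i = V / R
    return v / i       # R = V / i


print(ohms_law(v=5.0, r=1000.0))   # current through 1000 ohms at 5 V, in amperes
print(ohms_law(i=0.002, r=2200.0)) # voltage across 2200 ohms carrying 2 mA, in volts
```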

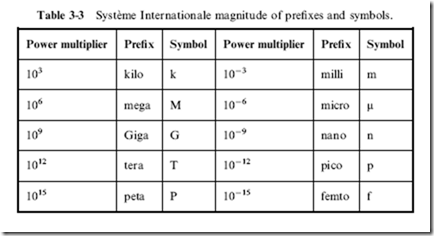

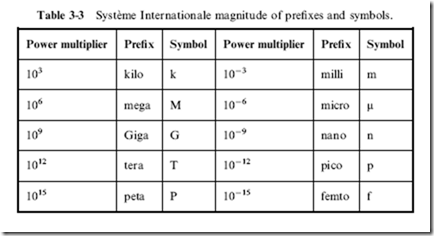

Voltage, current, resistance and, indeed, all the electrical values that you will see are part of the ‘‘SI’’ (Système International) units, and their values are governed by SI standards. Each time a unit deviates by three orders of magnitude from the base value, the unit is given a prefix that indicates the magnitude multiplier; these multipliers are listed in Table 3-3. For example, one thousandth of a volt is known as a ‘‘millivolt’’. Component values are normally written with a single-letter symbol for this prefix. Most electronic devices, like resistors, are given a two-digit value that is multiplied by the power of ten that the symbol indicates. For example, thousands of units are given the prefix ‘‘k’’, so a resistor having a value of 10,000 ohms is usually referred to as having a value of ‘‘10 kohms’’, or most popularly ‘‘10 k’’.
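Choosing the right prefix is a matter of finding the multiple of three orders of magnitude that leaves a value between 1 and 1000. A minimal sketch, assuming only the common prefixes from pico to giga (the full SI list is longer); the name `si_format` is hypothetical.

```python
import math

# Common SI prefixes, one per three orders of magnitude.
PREFIXES = {-12: "p", -9: "n", -6: "µ", -3: "m", 0: "", 3: "k", 6: "M", 9: "G"}


def si_format(value, unit=""):
    """Format a value with the prefix that keeps 1 <= mantissa < 1000."""
    if value == 0:
        return f"0 {unit}".strip()
    exponent = 3 * math.floor(math.log10(abs(value)) / 3)
    exponent = max(-12, min(9, exponent))  # clamp to the prefixes listed above
    mantissa = value / 10 ** exponent
    return f"{mantissa:g} {PREFIXES[exponent]}{unit}".strip()


print(si_format(10000, "ohms"))      # the 10,000-ohm resistor from the text
print(si_format(2_200_000, "ohms"))
```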

Looking at more complex circuits, such as the two-resistor ‘‘series’’ circuit shown in Fig. 3-4, you must remember that individual measurements are taken across each resistor’s two terminals; you do NOT make measurements relative to a common point. I make this point because the voltage across a resistor, which is also known as the ‘‘voltage drop’’, depends on the current flowing through that resistor.

Using this knowledge, you can understand how electricity flows through the two series resistors in Fig. 3-4. The voltage applied to the circuit causes current to flow through both of the resistors, and the amount of current is equal to the current that would pass through a single resistor whose value is the sum of the two resistors. Knowing this current and an individual resistor’s value, you can calculate the voltage drop across each one. If you do the calculations, you will discover that the voltage drops across the two resistors add up to the applied voltage.

This may be a bit hard to understand, but go back to the pipe/tap/hose example and think about the situation where you had a pipe/tap/pipe/tap/hose. In this case, there would be a pressure drop across the first tap and then another pressure drop across the second tap. This is exactly what happens in Fig. 3-4: some voltage ‘‘drops’’ across Resistor 1 and the rest drops across Resistor 2. The amount of the drop across each resistor is proportional to its value relative to the total resistance in the circuit.

To demonstrate this, consider the case where Resistor 1 in Fig. 3-4 is 5 ohms and Resistor 2 is 8 ohms. Current has to flow through Resistor 1 followed by Resistor 2, which means that the total resistance it experiences is equivalent to the sum of the two resistances (13 ohms). The current through the two resistors can be calculated using Ohm’s law as the applied voltage divided by the sum of Resistor 1 and Resistor 2. The general formula for calculating the equivalent resistance of a series circuit is the sum of the resistances, which is written out as:

$$R_e = R_1 + R_2 + \cdots$$

Knowing the resistor values, the voltage drop across each resistor can be calculated from its fraction of the total resistance; the voltage across Resistor 1 would be 5/13ths of the applied voltage, while the voltage across Resistor 2 would be 8/13ths of the applied voltage. Dividing each resistor’s voltage drop by its resistance yields the same current as dividing the applied voltage by the total resistance of the circuit.

Adding the two resistor voltage drops together, you will see that they total the applied voltage. This is a useful test to remember when you are checking your calculations, to make sure they are correct.
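The 5-ohm/8-ohm example can be checked numerically. The text does not give an applied voltage, so this sketch assumes a hypothetical 13 V supply, which makes the numbers come out evenly; `series_drops` is an illustrative name.

```python
def series_drops(v_applied, resistors):
    """Return (current, list of voltage drops) for resistors in series."""
    r_total = sum(resistors)                  # Re = R1 + R2 + ...
    current = v_applied / r_total             # Ohm's law on the equivalent resistance
    drops = [current * r for r in resistors]  # V = i x R for each resistor
    return current, drops


current, drops = series_drops(13.0, [5.0, 8.0])  # 13 V is an assumed supply voltage
print(current, drops, sum(drops))                # 1.0 [5.0, 8.0] 13.0
```

The drops are 5/13ths and 8/13ths of the 13 V supply, and their sum equals the applied voltage, which is the check described above.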

The properties of series resistance circuits are summed up quite well by Kirchhoff’s voltage law, which states that ‘‘the sum of the voltage drops in a series circuit is equivalent to the applied voltage and current is the same at all points in the circuit.’’

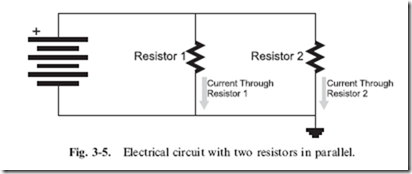

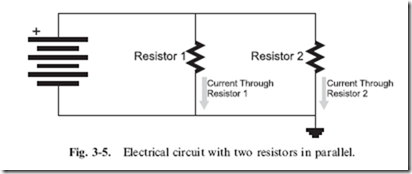

Along with calculating the current passing through a series resistor circuit and the voltage drop across each resistor, you can also calculate the voltage across each resistor in a parallel resistor circuit like Fig. 3-5, as well as the current through each of the resistors. To do this, you have to remember Kirchhoff’s current law, which states that ‘‘the sum of the currents through each resistance is equivalent to the total current drawn by the circuit and the voltage drop across each resistor is the same as the applied voltage.’’

With each resistor in parallel, it should be fairly obvious that the voltage drop across each one is the same as the applied voltage, and the current flowing through each one can be calculated using Ohm’s law. It should also be obvious that the current drawn from the power source is equivalent to the sum of the currents passing through each resistor.
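Here is a short sketch of those two rules applied to a parallel circuit. The 12 V supply and the 6-ohm and 4-ohm resistors are hypothetical values chosen for easy arithmetic, not values from Fig. 3-5.

```python
def parallel_currents(v_applied, resistors):
    """Each branch sees the full applied voltage; branch currents add up."""
    branch = [v_applied / r for r in resistors]  # i = V / R for each branch
    total = sum(branch)                          # Kirchhoff's current law
    return branch, total


branch, total = parallel_currents(12.0, [6.0, 4.0])
print(branch, total)  # [2.0, 3.0] 5.0
print(12.0 / total)   # resistance the supply "sees": 2.4
```

Dividing the applied voltage by the total current gives the circuit’s equivalent resistance, which is less than either individual resistor.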

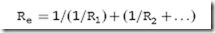

If you were to calculate the currents for some different combinations of resistances, you would discover that the general formula for the equivalent resistance of a parallel circuit is:

$$\frac{1}{R_e} = \frac{1}{R_1} + \frac{1}{R_2} + \cdots$$

For the simple case of two resistors in parallel, the equivalent resistance can be expressed using the formula:

$$R_e = \frac{R_1 \times R_2}{R_1 + R_2}$$

Complex resistor circuits, made up of resistors wired in both series and parallel, like the one shown in Fig. 3-6, can be simplified to a single equivalent resistor by applying the series and parallel formulas that I have presented so far in this section. When doing this, I recommend first finding the equivalent of the series resistances, then the equivalent of the parallel resistances, and repeating the process until you are left with one single equivalent resistance.

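The series and parallel formulas compose naturally, so a mixed network can be reduced step by step. This sketch uses a hypothetical network (not the values in Fig. 3-6): a 100-ohm resistor in series with a parallel pair of 200 ohms and 300 ohms. The helper names `series` and `parallel` are illustrative.

```python
def series(*rs):
    """Equivalent of resistors in series: Re = R1 + R2 + ..."""
    return sum(rs)


def parallel(*rs):
    """Equivalent of resistors in parallel: 1/Re = 1/R1 + 1/R2 + ..."""
    return 1 / sum(1 / r for r in rs)


# Hypothetical network: R1 = 100 ohms in series with (R2 = 200 ohms || R3 = 300 ohms).
r_pair = parallel(200.0, 300.0)  # 200 x 300 / (200 + 300) = 120 ohms
r_total = series(100.0, r_pair)  # 100 + 120 = 220 ohms
print(round(r_pair, 3), round(r_total, 3))  # 120.0 220.0
```

Rounding the printed values hides the tiny floating-point error introduced by the reciprocal arithmetic.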

The last piece of basic electrical theory that I would like to leave you with is how to calculate the power dissipated by a resistor. When you took Newtonian physics, you were told that power was the product of the rate at which something was moving and the force applied to it. In electrical circuits, we have both these quantities, voltage being the force applied to the electrons and current being the rate of movement. To find the power being dissipated (in watts), you can use the simple formula:

$$P = V \times i$$

or, if you don’t know one of the two input quantities, you can apply Ohm’s law and the formula becomes:

$$P = \frac{V^2}{R} = i^2 \times R$$

I must point out that when you are working with digital electronics, most currents in the circuits are somewhere between 100 µA and 20 mA. This seemingly small amount of current minimizes the amount of power that is dissipated (or used) in digital electronic circuits. I’m pointing this out because if you were to get a book on basic electronics, you would discover that the examples and questions usually involve full amperes of current, not thousandths or ten-thousandths of an ampere as I have noted here. Basic electronics books work with full amps because it is easier for students to do the calculations, and they don’t have to worry about working with different orders of magnitude.
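The three forms of the power formula should always agree. This sketch checks them at digital-electronics current levels, using a hypothetical 5 V across a 500-ohm load (10 mA); the function names are illustrative.

```python
def power_from_vi(v, i):
    """P = V x i."""
    return v * i


def power_from_vr(v, r):
    """P = V^2 / R, found by substituting i = V / R."""
    return v ** 2 / r


def power_from_ir(i, r):
    """P = i^2 x R, found by substituting V = i x R."""
    return i ** 2 * r


# Hypothetical example: 5 V across a 500-ohm load draws 10 mA.
v, r = 5.0, 500.0
i = v / r
for p in (power_from_vi(v, i), power_from_vr(v, r), power_from_ir(i, r)):
    print(f"{p * 1000:.1f} mW")  # each form gives 50.0 mW
```

At 10 mA the dissipation is only 50 mW, which illustrates why digital circuits running in the milliamp range stay cool.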

So far in these few initial pages of this chapter, I have gone through the same amount of material that is presented in multiple courses in electrical theory. Much of the background material has been left out as well as derivations of the various formulas. For the purposes of working with digital electronics, you should be familiar with the following concepts:

1. Electricity flows like water in a closed circuit.

2. The amount of current flow in a circuit is inversely proportional to the amount of resistance it encounters.

3. Voltage across a load or resistance is measured at its two terminals.

4. Voltage is current times resistance (Ohm’s law).

5. Power is simply voltage times current in a DC circuit.

The other rules are derivations of these basic concepts and while I don’t recommend trying to work them out in an exam, what you do remember can be checked against the basic concepts listed above.

Capacitors

When working with digital electronic circuits, it is very important for you to understand the purpose and operation of the capacitor. Many people shy away from understanding the role of capacitors in digital electronics because the formulas that define their response to an applied voltage do not seem to be intuitive and many of them are quite complex. Further reducing the appeal of understanding capacitors is that they do not seem to be a basic component of digital electronics, and when they are used, their value and wiring seem to be simply specified by a datasheet or an application note. I must confess that these criteria used to apply to me, and I never understood the importance of capacitors in digital electronics until I was reviewing the failure analysis of a 4-Mbit memory chip. As I will show, a dynamic RAM memory element (along with a MOSFET transistor) is essentially a capacitor, and the failure analysis of the chips showed how the differences in these capacitors affected their operation. One of the major conclusions of the failure analysis was that the memory chip wasn’t so much a digital electronic device as a massive array of four million capacitors. This example is meant to show the importance of understanding the operation of capacitors and how they influence digital electronic circuits. Being comfortable with the information in this section is more than good enough to use and successfully specify capacitors in digital electronic circuits.

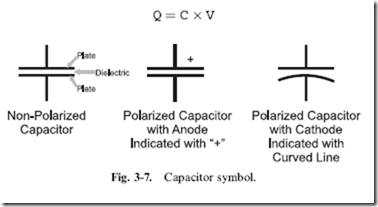

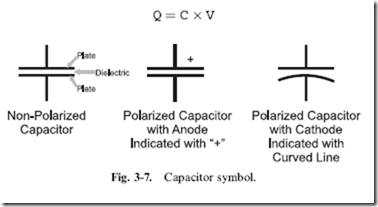

The capacitor itself is a very simple energy storage device; two metal plates (as shown in the leftmost capacitor symbol in Fig. 3-7) are physically separated by a ‘‘dielectric’’ which prevents current from flowing between them. The dielectric is an insulator (‘‘dielectric’’ is a synonym for ‘‘insulator’’) material which enhances the metal plates’ ability to store an electric charge.

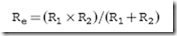

A capacitor is specified by the amount of charge it is able to store for each volt applied across it. This ‘‘capacitance’’ (which has the symbol ‘‘C’’) is measured in ‘‘farads’’, which are ‘‘coulombs’’ per volt. One coulomb of electrons is a very large number (roughly 6.2 × 10¹⁸), and you will find that for the most part you will only be working with capacitors that can store a very small fraction of a coulomb of electrons.

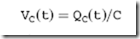

Knowing that farads are in the units of coulombs per volt, you can find the amount of charge (which has the symbol ‘‘Q’’) in a capacitor by using the formula:

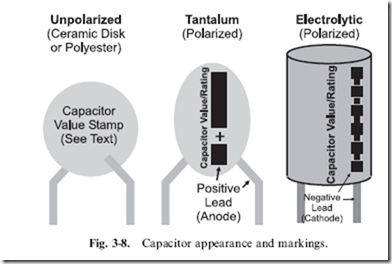

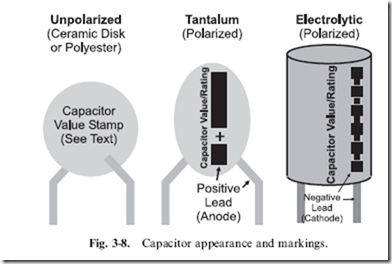
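Because farads are coulombs per volt, the stored charge is Q = C × V. This sketch applies that to a hypothetical 0.1 µF capacitor charged to 5 V and converts the result to a count of electrons using the elementary charge (1 / 1.602176634 × 10⁻¹⁹ is the ‘‘roughly 6.2 × 10¹⁸’’ electrons per coulomb mentioned above). The function name `stored_charge` is illustrative.

```python
# Charge of one electron in coulombs (exact by definition in the current SI).
ELEMENTARY_CHARGE = 1.602176634e-19


def stored_charge(c_farads, v_volts):
    """Q = C x V: charge in coulombs stored at a given voltage."""
    return c_farads * v_volts


# Hypothetical example: a 0.1 µF capacitor charged to 5 V.
q = stored_charge(0.1e-6, 5.0)
electrons = q / ELEMENTARY_CHARGE
print(f"{q:.2e} C, about {electrons:.2e} electrons")
```

The result, about half a microcoulomb or roughly 3 × 10¹² electrons, shows how small a fraction of a coulomb typical capacitors hold.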

The fraction of a coulomb that is stored in a capacitor is so small that the most popularly used capacitors are rated in millionths (‘‘microfarads’’ or ‘‘µF’’) or trillionths (‘‘picofarads’’ or ‘‘pF’’) of a farad. Microfarads are commonly referred to as ‘‘mikes’’ and picofarads are often known by the term ‘‘puffs’’. Using standard materials (such as mica, polyester and ceramics), it is possible to build capacitors of a reasonable size up to about 1 microfarad (one millionth of a farad), but more exotic materials are required for larger value capacitors. For larger capacitors, the dielectric is often a liquid and the capacitor must be wired according to the polarity markings stamped on it, as I have indicated in Fig. 3-8. These are known as ‘‘polarized’’ capacitors, and either a ‘‘+’’ marking or a curved plate (as shown in Fig. 3-7) is used to indicate how the capacitor is wired in the schematic. Like other polarized components, the positive connection is called an ‘‘anode’’ and the negative a ‘‘cathode’’. Along with the markings, you should remember that the anode of a polarized two-lead component is normally longer than the cathode. The different lead lengths allow automated assembly equipment to distinguish between the two leads and determine the component’s polarity.

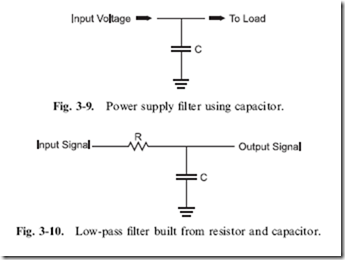

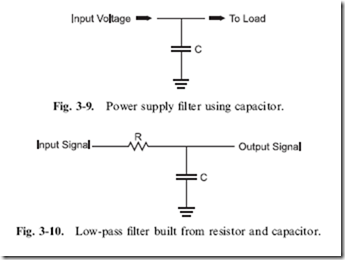

Capacitors have two primary purposes in digital electronic circuits. The first is as a voltage ‘‘filter’’ (Fig. 3-9), reducing ‘‘spikes’’ and other problems on a wire carrying current. This use is similar to the use of a water tower in a city; the water tower is filled by the pressure of the water being pumped into the community. Water is continually pumped to both houses and the water tower, but in times of high usage (like during the day when people are watering their lawns and washing their cars), water from the tower supplements the pumped water to keep the pressure constant. During the night, when few people are using water, the pumped water is stored in the water tower, in preparation for the next day’s requirements.

When you look at digital electronic circuits, you will see two types of capacitors used for power filtering. At the connectors to the power supply, you will see a high-value capacitor (10 µF or more) filtering out any ‘‘ripples’’ or ‘‘spikes’’ from the incoming power. ‘‘Decoupling’’ capacitors of 0.047 µF to 0.1 µF are placed close to the digital electronic chips to eliminate small spikes caused when the gates within the chips change state.

Large capacitors will filter out low-frequency (long-duration) problems on the power line while the small capacitors will filter out high-frequency (short-duration) spikes on the power line. The combination of the two will keep the power line ‘‘clean’’ and constant, regardless of the changes in current demand from the chips in the circuit.

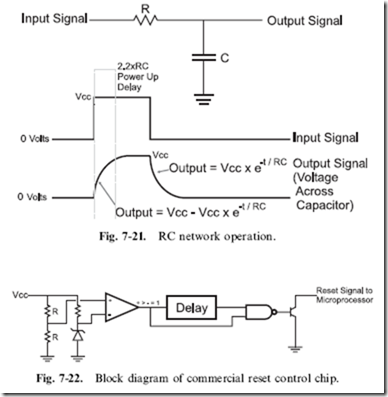

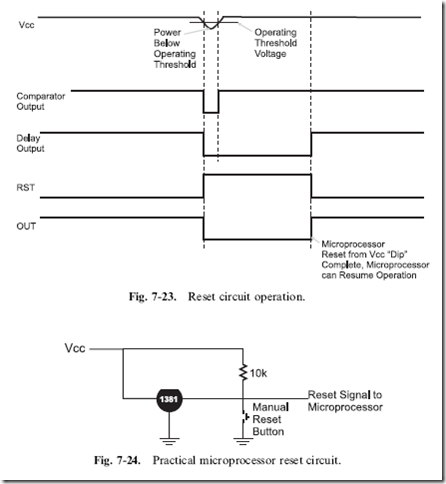

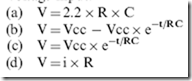

The capacitor’s ability to filter signals is based on its ability to accept or lose charge when the voltage across it changes. This capability allows voltage signals to be transformed using nothing more than a resistor and a capacitor, as in the ‘‘low-pass filter’’ shown in Fig. 3-10. This circuit is known as a low-pass filter because it will pass low-frequency alternating current signals more readily than high-frequency alternating current signals.

In digital electronics, we are not so much concerned with how a capacitor affects an alternating current as how it affects a changing direct current.

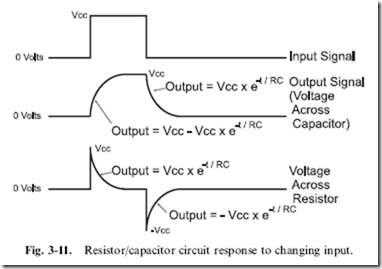

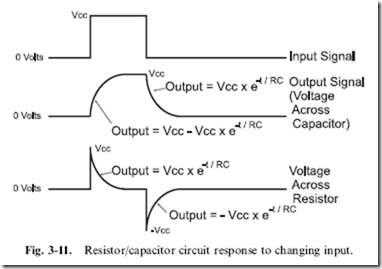

Figure 3-11 shows the response, across the capacitor and resistor of Fig. 3-10’s low-pass filter, to a digital signal that starts at a low voltage, ‘‘steps’’ up to ‘‘V’’ and then has a falling step back to 0 V.

In Fig. 3-11, I have listed formulas defining the voltage response across the resistor and capacitor to the rising and falling step inputs. These formulas are derived in introductory college electricity courses from the fact that the voltage across the capacitor can be defined using the formula:

$$V_C(t) = \frac{Q(t)}{C}$$

which simply states that the voltage across a capacitor at some point in time is a function of the charge within the capacitor at that point in time. The charge within the capacitor is supplied by the current passing through the resistor, and the resistor limits the amount of current that can pass through it. As the voltage across the capacitor increases, the voltage across the resistor falls, and as the voltage across the resistor falls, the amount of current that is available to charge the capacitor falls. It is a good exercise in calculus to derive these formulas, but understanding how this derivation works is not necessary for working with digital electronics.

There are two things I want to bring out from the discussion of low-pass filters. The first is that the response of the low-pass filter is a function of the product of the resistance and capacitance in the circuit. This product is known as the ‘‘RC time constant’’ and is given the Greek letter ‘‘tau’’ (τ) as its symbol. Looking at the formulas, you should see that by increasing the value of τ (either by using a larger value resistor or capacitor), the response time of the low-pass filter is increased.
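To see how τ sets the response time, this sketch evaluates the standard first-order RC step responses: after a rising step the capacitor voltage is V(1 − e^(−t/τ)), and after a falling step it is V·e^(−t/τ). I am assuming these match the formulas in Fig. 3-11, which are not reproduced here. The component values (10 kohms and 0.1 µF, giving τ = 1 ms) and the function names are hypothetical.

```python
import math


def rc_rising(v_step, tau, t):
    """(capacitor, resistor) voltages t seconds after the input steps 0 -> V.

    Assumes the capacitor starts fully discharged.
    """
    v_c = v_step * (1 - math.exp(-t / tau))  # capacitor charges toward V
    v_r = v_step - v_c                       # the rest of the input appears across R
    return v_c, v_r


def rc_falling(v_step, tau, t):
    """(capacitor, resistor) voltages t seconds after the input steps V -> 0.

    Assumes the capacitor had fully charged to V before the falling step.
    """
    v_c = v_step * math.exp(-t / tau)  # capacitor discharges toward 0 V
    v_r = -v_c                         # input is now 0 V, so v_r + v_c = 0
    return v_c, v_r


# Hypothetical component values: R = 10 kohms, C = 0.1 µF, so tau = 1 ms.
tau = 10e3 * 0.1e-6
for n in range(1, 6):
    v_c, _ = rc_rising(1.0, tau, n * tau)
    print(f"after {n} tau: capacitor at {v_c:.3f} of V")
```

The capacitor reaches about 63% of V after one time constant and over 99% after five, so doubling R or C doubles the time needed to reach any given level.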

This has two ramifications for digital electronics. The first should be obvious: to minimize the time signals take to pass between gates, the resistance and capacitance of the connection should be minimized. The second is more subtle: the resistor–capacitor response can be used to delay a signal in a circuit. This second issue with resistor–capacitor circuits is actually very useful in digital electronics for a number of different applications that I will discuss later in the book.
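The delay an RC network introduces can be estimated by solving the rising-step expression for time: t = −τ ln(1 − V_th/V), where V_th is the voltage at which the receiving gate switches. This is a minimal sketch assuming the gate switches at a fixed fraction of V; the 50% threshold and the component values below are hypothetical, and real switching thresholds come from the chip’s datasheet.

```python
import math


def rc_delay(r_ohms, c_farads, threshold_fraction):
    """Time for the capacitor to charge from 0 V to threshold_fraction of V
    after a rising step, i.e. solve V * (1 - exp(-t / tau)) = fraction * V."""
    if not 0 < threshold_fraction < 1:
        raise ValueError("threshold_fraction must be between 0 and 1")
    tau = r_ohms * c_farads
    return -tau * math.log(1 - threshold_fraction)


# Hypothetical values: 10 kohms, 0.1 µF (tau = 1 ms), gate switching at half of V.
print(rc_delay(10e3, 0.1e-6, 0.5))  # about 0.000693 s, i.e. tau x ln 2
```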

This is a very short introduction to capacitors and their operation in (digital) electronic circuits. Before going on, I would like to reinforce what I’ve said about their importance and recommend that you follow up this section’s material by working through a book devoted to analog electronics.

Semiconductor Operation

Over the past 100 years, we have refined our ability to control the electrical properties of materials in ways that have made radios, TVs and, of course, digital electronic circuits possible. These materials have the ability to change their conductance, allowing current to pass through them under varying conditions. This ability to change from being an insulator to a conductor has resulted in these materials being called ‘‘semiconductors’’, and without them many of the basic devices we take for granted would be impossible.

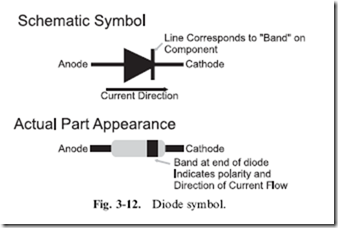

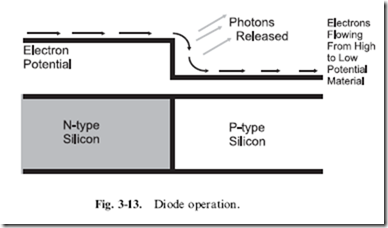

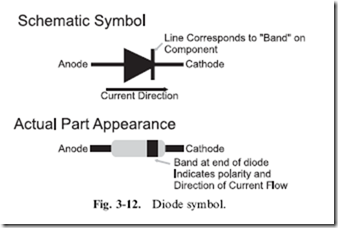

The most basic electronic semiconductor device is the ‘‘diode’’. The electrical symbol and a sketch of the actual part are shown in Fig. 3-12. A diode is a ‘‘one-way’’ switch for electricity: current passes easily in one direction but not in the other. If you were to cut a silicon diode in half and look at its operation at a molecular level, you would see that one half of the silicon was ‘‘doped’’ (infused with atoms of an element that can easily give up electrons), which is known as an ‘‘N-type’’ semiconductor. On the other side of the diode, the silicon has been doped with an element that can easily accept electrons, creating a ‘‘P-type’’ semiconductor.

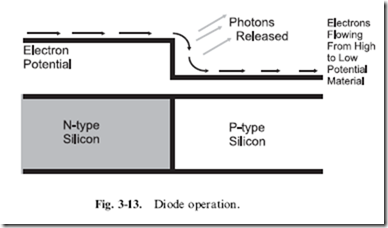

When a voltage is applied to the diode that causes electrons to travel from the atoms of the N-type semiconductor to the atoms of the P-type semiconductor, the electrons ‘‘fall’’ in energy from their orbits in the N-type to the accepting orbit spaces in the P-type, as shown in Fig. 3-13. This drop in energy is accompanied by a release of energy in the form of photons. The ‘‘quantum’’ of photon energy released is specific to the materials used in the diode; for silicon diodes, the photons are in the infrared.

The voltage polarity applied to the diode is known as its ‘‘bias’’. When the voltage is applied in the direction in which the diode conducts, the diode is ‘‘forward biased’’. As you might expect, when the voltage is applied in the direction in which the diode blocks current flow, it is ‘‘reverse biased’’. This is an important point to remember, both for communicating with others about your designs and for understanding the operation of transistors, as explained below.

To keep the thermodynamic books balanced, the release of energy as photons is accompanied by a corresponding voltage drop across the diode. For silicon diodes, this drop is normally 0.7 volts. The power equation I gave earlier (P = V × i) applies to diodes: when a large current passes through the diode, multiplying it by the 0.7 V drop shows that quite a bit of power can be dissipated within the diode.

If voltage is applied in the opposite direction (i.e. injecting electrons into the P-type side of the diode), the electrons normally do not have enough energy to rise up the energy slope, leave the orbits of the P-type atoms and enter the electron-filled orbits of the N-type atoms. If enough voltage is applied, the diode will ‘‘break down’’ and electrons will jump up the energy slope. The breakdown voltage for a typical silicon diode is 100 V or more, which is quite substantial.
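The three operating conditions described above, and the power equation applied to a conducting diode, can be summarized in a small idealized model. This is a sketch under stated assumptions: the 0.7 V drop comes from the text, the 100 V breakdown figure is a hypothetical rating, and the model ignores the gradual knee of a real diode by treating anything below 0.7 V forward as not conducting.

```python
SILICON_DROP_V = 0.7   # forward drop quoted in the text
BREAKDOWN_V = 100.0    # hypothetical reverse breakdown rating

def diode_state(v_anode_minus_cathode: float) -> str:
    """Classify an idealized silicon diode by the voltage across it."""
    if v_anode_minus_cathode >= SILICON_DROP_V:
        return "forward biased, conducting"
    if v_anode_minus_cathode > -BREAKDOWN_V:
        return "not conducting"
    return "reverse breakdown"

def diode_power(forward_current_a: float, v_drop: float = SILICON_DROP_V) -> float:
    """Power dissipated in a conducting diode: P = V x i."""
    return v_drop * forward_current_a

print(diode_state(1.0), "|", diode_state(-12.0), "|", diode_state(-150.0))
print(f"{diode_power(2.0):.1f} W dissipated at 2 A")
```

At 2 A, for example, the diode itself must get rid of 1.4 W as heat, which is why power diodes are physically large or mounted on heat sinks.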

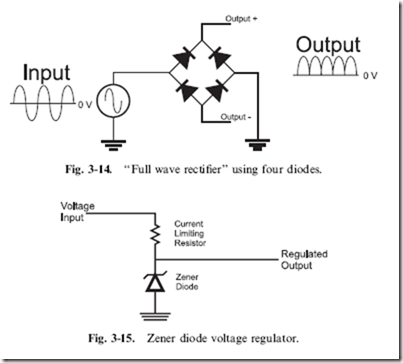

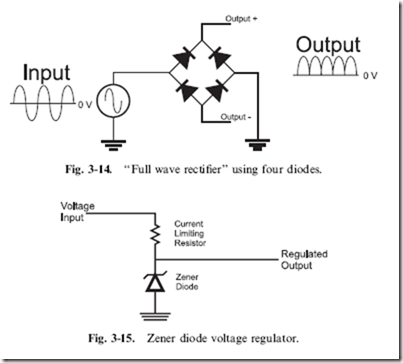

A typical use for diodes is to ‘‘rectify’’ AC to DC, as shown in Fig. 3-14, in which four diodes convert an alternating current, swinging both positive and negative, into a ‘‘lobed’’ positive voltage signal that can be filtered using capacitors, as discussed in the previous section.
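The four-diode arrangement is commonly called a bridge rectifier. In it, two diodes conduct on each half-cycle, so the output follows the magnitude of the input minus two silicon drops. The sketch below samples one cycle of a hypothetical 12 V peak, 60 Hz input to show the ‘‘lobed’’ output; the input values are assumptions, and the model ignores any filter capacitor.

```python
import math

DIODE_DROP_V = 0.7  # silicon forward drop from the text

def bridge_output(v_in: float) -> float:
    """Idealized four-diode bridge: two diodes conduct on each half-cycle,
    so the output is |v_in| minus two drops, and never below 0 V."""
    return max(abs(v_in) - 2 * DIODE_DROP_V, 0.0)

# Hypothetical 12 V peak, 60 Hz input sampled every 2 ms over one cycle
peak_v, freq_hz = 12.0, 60.0
for ms in range(0, 17, 2):
    v_in = peak_v * math.sin(2 * math.pi * freq_hz * ms / 1000)
    print(f"{ms:2d} ms  in {v_in:6.2f} V  out {bridge_output(v_in):5.2f} V")
```

Both the positive and negative halves of the input appear as positive lobes at the output, each about 1.4 V lower than the input peak.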

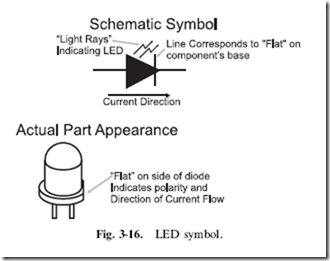

Along with the simple silicon diode discussed above, there are two other types of diodes that you should be aware of. The first is the ‘‘Zener’’ diode, which breaks down at a low, predetermined voltage. Zener diodes are typically used as accurate voltage references (they are typically built with 1% tolerances) or in low-current power supplies like the one shown in Fig. 3-15. The symbol for the Zener diode, shown in Fig. 3-15, is the diode symbol with a bent current bar.

Building a power supply using this circuit is actually quite simple: the Zener diode’s breakdown voltage rating will be the ‘‘regulated output’’ ($V_{reg}$) and the ‘‘voltage input’’ ($V_{in}$) should be something greater than it. The value of the current-limiting resistor is specified by the formula

$$R = \frac{V_{in} - V_{reg}}{i_{app}}$$

where $i_{app}$ is the current the application is expected to draw (plus a margin of a few tens of percent). The power rating of the Zener diode should take into account the power dissipated if all of $i_{app}$ were to pass through it, as happens when the application draws no current.
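Here is a minimal sketch of that calculation, using the 9 V input, 5.1 V Zener and 20 mA draw that the text uses as a typical application. The 25% default margin is an assumption standing in for ‘‘a few tens of percent’’, and the function names are hypothetical.

```python
def zener_resistor(v_in: float, v_zener: float, i_app: float, margin: float = 0.25):
    """Current-limiting resistor for a Zener regulator, R = (V_in - V_reg) / i,
    where i is i_app padded by a safety margin (25% here is an assumption).
    Returns (resistance_ohms, design_current_a)."""
    if v_in <= v_zener:
        raise ValueError("input voltage must exceed the Zener voltage")
    i_design = i_app * (1.0 + margin)
    return (v_in - v_zener) / i_design, i_design

def zener_power_rating(v_zener: float, i_design: float) -> float:
    """Worst case: the load draws nothing, so all the current flows through the Zener."""
    return v_zener * i_design

r, i_design = zener_resistor(9.0, 5.1, 0.020)
print(f"R = {r:.0f} ohms")
print(f"Zener must dissipate up to {zener_power_rating(5.1, i_design) * 1e3:.0f} mW")
```

In practice you would round the result to the nearest standard resistor value below it, so that slightly more current than planned is available.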

As I will discuss later in this chapter, there are many inexpensive power regulators that are far more efficient than the Zener diode regulator shown in Fig. 3-15. If you do the math for a typical application (say 9 volts in, a 5.1 volt Zener diode and a 20 mA current draw), you will find that at best it is only about 57% efficient (5.1 V divided by 9 V), which is to say that only about 57% of the power drawn by the Zener regulator circuit and the application reaches the application; in practice, the efficiency can often be as low as 25%. The reasons for using the Zener diode regulator are its low cost, very small form factor and extreme robustness. Most practical applications will use a linear regulator chip.
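The efficiency figures above follow from comparing the power delivered to the application with the power drawn from the input. In a Zener regulator, the resistor fixes the supply current no matter what the load draws, so any current the application does not use is burned in the Zener. The sketch below assumes the 25% design margin and a 10 mA idle current purely for illustration.

```python
def zener_efficiency(v_in: float, v_zener: float, i_load: float, i_supply: float) -> float:
    """Fraction of input power that reaches the application.
    The supply current is set by the series resistor, so it does not
    drop when the application draws less current."""
    return (v_zener * i_load) / (v_in * i_supply)

best = zener_efficiency(9.0, 5.1, 0.020, 0.020)    # resistor sized with no margin
padded = zener_efficiency(9.0, 5.1, 0.020, 0.025)  # assumed 25% margin
idle = zener_efficiency(9.0, 5.1, 0.010, 0.025)    # assumed 10 mA idle draw
print(f"best {best:.0%}, with margin {padded:.0%}, idling {idle:.0%}")
```

Even the best case is limited to V_reg / V_in, and any margin or reduction in load current lowers the efficiency further.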

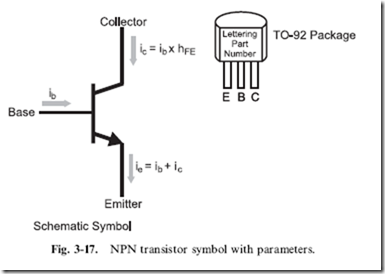

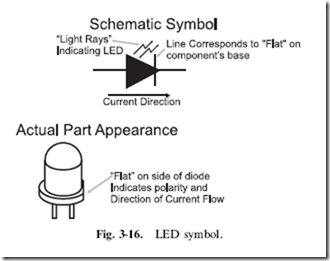

The other type of diode that I want to mention in this section is one that you are already very familiar with: the light-emitting diode, or LED. As its name implies, this diode emits light (like a light bulb) when a current passes through it. In Fig. 3-16, note that the LED symbol is the same as the diode’s symbol, but with light rays coming from it. The most common LED package is also shown in Fig. 3-16: a rounded cylinder (somewhat like ‘‘R2-D2’’ from Star Wars) with a raised rim at its base that has one side flattened to indicate the LED’s cathode (negative voltage connection).

There are a few points that you should be aware of with regard to LEDs. In the past few years, LEDs producing virtually every color of the rainbow (as well as white) have become available. I must point out, however, that a single LED can only produce one color, because its color is set by the chemistry of the semiconductors used to build it. You may see advertisements for two- or three-color LEDs, but these devices consist of two or three LEDs placed in the same plastic package and wired so that, depending on which pins current passes through and in which direction, a specific LED turns on.

The brightness of an LED cannot be controlled reliably by varying the current passing through it, as you would with a light bulb. LEDs are designed to provide a set amount of light with a current usually in the range of 5 to 10 mA. Reducing the current below 5 mA may dim the output or may turn the LED off completely. A much better way to dim an LED is to use ‘‘pulse width modulation’’ (PWM), in which the current to the LED is turned on and off faster than the human eye can perceive, with the ratio of on time to off time setting the LED’s brightness. I will discuss PWM later in the book.
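A minimal sketch of the timing behind PWM dimming: the LED always receives its full rated current while it is on, and the duty cycle (the fraction of each period spent on) sets the average current the eye perceives. The 1 kHz frequency and 10 mA on-current are hypothetical values chosen for illustration.

```python
def pwm_timing(freq_hz: float, duty_cycle: float, peak_current_a: float):
    """Return (on_time_s, off_time_s, average_current_a) for one PWM period."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty cycle must be between 0 and 1")
    period = 1.0 / freq_hz
    on_time = period * duty_cycle
    return on_time, period - on_time, peak_current_a * duty_cycle

# Hypothetical: 1 kHz PWM, LED driven at its rated 10 mA whenever it is on
for duty in (0.1, 0.5, 0.9):
    on, off, avg = pwm_timing(1000.0, duty, 0.010)
    print(f"duty {duty:.0%}: on {on * 1e6:.0f} us, off {off * 1e6:.0f} us, "
          f"average {avg * 1e3:.1f} mA")
```

Because the on-current never changes, the LED never falls into the unreliable low-current range described above.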

Finally, when I first introduced diodes, I noted that silicon diodes emit photons in the infrared and have a 0.7 volt drop when current passes through them. To produce visible light, LEDs are not made out of silicon; they are made from other semiconductor materials in which the energy drop from the N-type semiconductor to the P-type semiconductor produces light in the visible spectrum. This change in material means that LEDs do not have silicon’s 0.7 V drop; instead, they typically have a drop of about 2.0 V. This is an important point because it affects the value of the current-limiting resistor that you put in series with the LED: the resistor must keep the current within the LED’s rating without allowing more current than necessary to flow, which would cause an unnecessary current drain.
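Here is a sketch of the series resistor calculation, R = (V_supply − V_f) / I, using the typical 2.0 V LED drop from the text. The 5 V supply and 10 mA target are assumptions. The second calculation shows what happens if the silicon 0.7 V figure is used by mistake.

```python
def led_resistor(v_supply: float, v_forward: float, i_led: float) -> float:
    """Series resistor that sets the LED current: R = (V_supply - V_f) / I."""
    return (v_supply - v_forward) / i_led

def led_current(v_supply: float, v_forward: float, r_ohms: float) -> float:
    """Current that actually flows through the LED for a given resistor."""
    return max(v_supply - v_forward, 0.0) / r_ohms

# Assumed 5 V supply and 10 mA target current
r_correct = led_resistor(5.0, 2.0, 0.010)   # about 300 ohms
r_wrong = led_resistor(5.0, 0.7, 0.010)     # silicon drop used by mistake, about 430 ohms
print(f"correct R = {r_correct:.0f} ohms, mistaken R = {r_wrong:.0f} ohms")
print(f"current with mistaken R = {led_current(5.0, 2.0, r_wrong) * 1e3:.1f} mA")
```

Using the wrong drop gives only about 7 mA, so the LED is dimmer than intended; the error grows as the supply voltage approaches the LED’s forward drop.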

It is always a source of amazement to me how many people do not understand how transistors work. For the rest of this section, I will introduce you to the two most common types of transistors and explain how they work, as well as which applications they are best suited for. Understanding the characteristics of the two types of transistors is critical to understanding how digital logic is implemented and how you can interface it to different technologies.

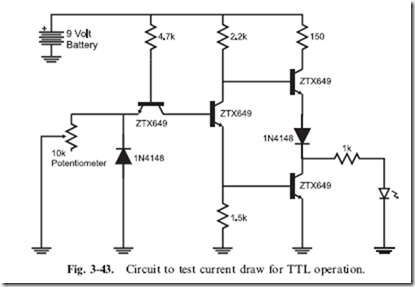

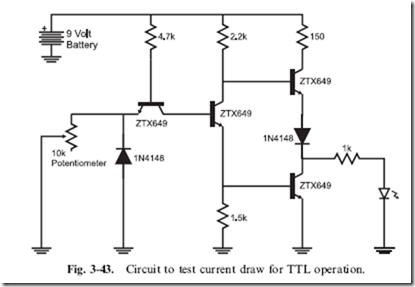

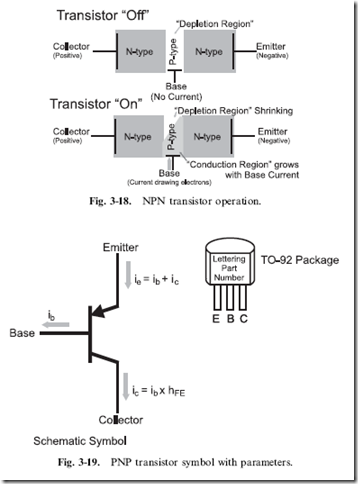

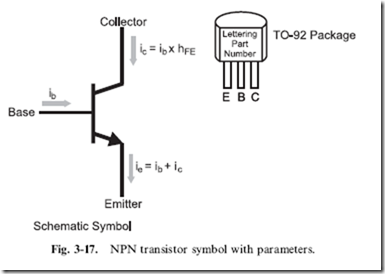

As I explain the operation of the ‘‘bipolar’’ transistor, I will endeavor to keep to a ‘‘high level’’ and avoid explaining transistor operation with tools like the ‘‘small signal model’’, which is intimidating and obscures the actual operation of the device. Instead, I will introduce the ‘‘NPN bipolar transistor’’ directly, using its symbol and the typical package and pinout for a small-scale (low-power) device shown in Fig. 3-17.

As you have probably heard, a bipolar transistor can be considered a simple switch or a voltage amplifier, but you may be mistaken about how it is controlled and how it actually works. The transistor is not voltage controlled (as you may have been led to expect); it is current controlled. The amount of current passing from the ‘‘base’’ to the ‘‘emitter’’ controls the amount of current that can pass from the ‘‘collector’’ to the emitter. The amount of current that can pass through the collector is a multiple (called ‘‘beta’’ and given the symbol ‘‘β’’ or hFE) of the current flowing through the base; the bipolar transistor is actually a current amplifier, in which a small amount of current allows a greater amount to flow. The simple formulas for the relationship between the base and collector currents are listed in Fig. 3-17.

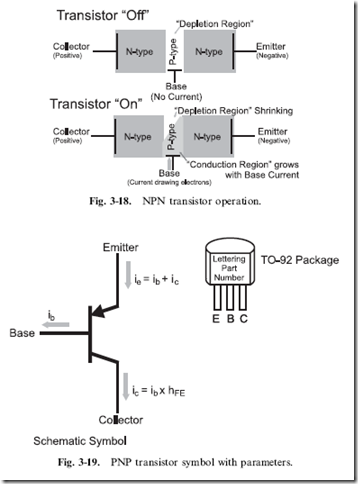

I must point out that these formulas apply only while the collector current is in the ‘‘small signal’’ or ‘‘linear’’ operating range. As a physical device, a transistor can only allow so much current to flow through it; as it reaches this limit, increases in the transistor’s base current will not result in a proportional increase in collector current. This operating region is known as the ‘‘non-linear’’ or ‘‘saturation’’ region, and what happens in it can be easily understood by looking at a cross section of a transistor (Fig. 3-18).
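The linear and saturation behavior can be sketched in a few lines, following the text’s simplified model in which the collector current is β times the base current until it hits a fixed ceiling. The β of 100 and the 100 mA ceiling are hypothetical values, and in a real circuit the saturation point is also set by the collector load. The emitter current is simply the sum of the base and collector currents, since all the current entering the transistor must leave through the emitter.

```python
def collector_current(i_base_a: float, beta: float, i_c_max_a: float) -> float:
    """Linear region: Ic = beta x Ib. Once that reaches the device limit
    (saturation), more base current no longer raises Ic."""
    return min(beta * i_base_a, i_c_max_a)

def emitter_current(i_base_a: float, beta: float, i_c_max_a: float) -> float:
    """Current leaving the emitter: Ie = Ib + Ic."""
    return i_base_a + collector_current(i_base_a, beta, i_c_max_a)

BETA = 100        # hypothetical hFE
IC_MAX = 0.100    # hypothetical 100 mA limit
for i_b_ua in (100, 500, 1000, 2000, 5000):
    i_c = collector_current(i_b_ua * 1e-6, BETA, IC_MAX)
    print(f"Ib = {i_b_ua:4d} uA -> Ic = {i_c * 1e3:5.1f} mA")
```

Below 1 mA of base current the collector current scales with it; beyond that point, extra base current produces no additional collector current.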

An NPN bipolar transistor consists of a P-type semiconductor sandwiched between two N-type semiconductors. This structure forms a reverse biased diode, so no current can flow through it. With no current being injected into the NPN bipolar transistor, the P-type semiconductor is known as the ‘‘depletion region’’ because it does not have any electrons. When current is passed to the device, electrons are drawn through the P-type semiconductor via the emitter N-type semiconductor. As electrons are drawn into the P-type semiconductor, it takes on the characteristics of the N-type semiconductors surrounding it and becomes known as the ‘‘conduction region’’. The more electrons that are drawn from the P-type semiconductor, the larger the conduction region bridging the two pieces of N-type semiconductor and the greater the amount of current that can pass from the collector to the emitter. As more electrons are drawn from the P-type semiconductor, the conduction region grows until the entire P-type semiconductor of the transistor becomes ‘‘saturated’’.

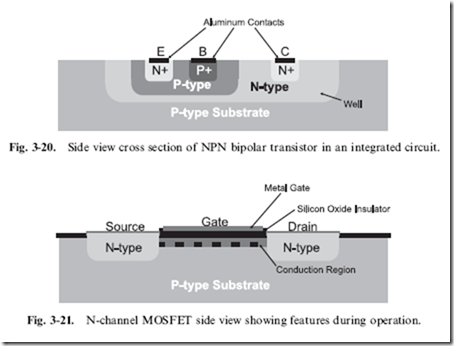

The PNP bipolar transistor (Fig. 3-19) operates in the opposite way to the NPN transistor. It is built from an N-type semiconductor between two P-type semiconductors, and to create a conduction region, electrons are injected into the base instead of being withdrawn, as they are in the NPN bipolar transistor. As in the NPN bipolar transistor, the collector current is a multiple of the base current (and that multiple is also called β or hFE).

Bipolar transistor hFE values can range anywhere from 50 to 500, and the amount of collector current they can handle ranges from a few tens of milliamps to tens of amps. As well as being inexpensive as discrete (single) devices, they respond to changes in their inputs extremely quickly. You may think they are perfect for use in digital electronics, but they have two faults that make them less than desirable. First, the base current is a source of power dissipation in the device; this is usually not an issue when single transistors are used, but it is of major concern when thousands or millions are used together in a highly complex digital electronic system.

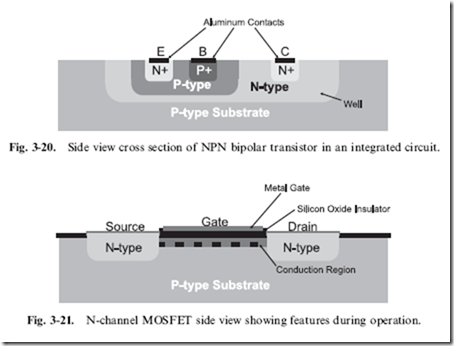

Second, they take up a lot of chip ‘‘real estate’’ and are very expensive to manufacture. Fig. 3-20 shows the side view of an NPN bipolar transistor built on a silicon chip. Instead of butting together different types of semiconductor, it is manufactured as a series of ‘‘wells’’, each doped in a separate operation with the chemicals that produce the desired type of semiconductor. As many as 35 process steps are required to produce a bipolar transistor.

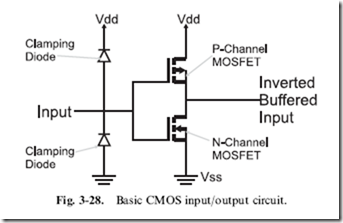

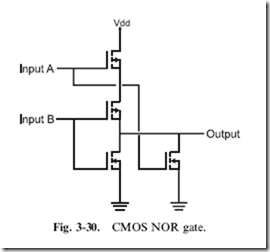

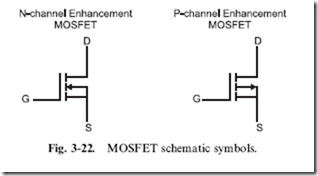

The N-channel enhancement ‘‘metal-oxide-semiconductor field-effect transistor’’ (MOSFET) does not have these faults. It is built using a much simpler process (the side view of the transistor is shown in Fig. 3-21) that requires only one doping of the base silicon, along with the same bonding of aluminum contacts as the bipolar transistor. N-channel MOSFETs (as these devices are most popularly known) require only nine manufacturing processes and take a fraction of the chip real estate used by bipolar transistors.

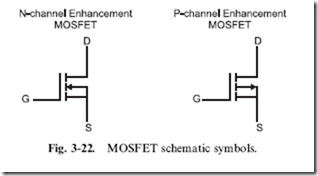

The N-channel MOSFET is not a current-controlled device like the bipolar transistor, but a voltage-controlled one. To ‘‘turn on’’ the MOSFET (allow current to flow between the ‘‘source’’ and ‘‘drain’’ pins), a voltage is applied to the ‘‘gate’’. The gate is a metal plate separated from the P-type silicon substrate by a layer of silicon dioxide (commonly known as ‘‘glass’’). When no voltage is applied to the gate, the P-type silicon substrate forms a reverse biased diode and does not allow current to flow from the source to the drain. When a positive voltage is applied to the gate of the N-channel MOSFET, electrons are drawn to the substrate immediately beneath it, forming a temporary N-type ‘‘conduction region’’ that provides a low-resistance path from the source to the drain. MOSFET transistors are normally characterized by the amount of current that can pass from the source to the drain, along with the resistance of the source/drain current path.
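Because a MOSFET is characterized by its current capacity and the resistance of its source/drain path, a simple switch model follows directly: below some gate voltage there is no conduction region and the switch is open; above it the device behaves like a small resistor that dissipates P = I² × R. The threshold voltage, on-resistance and load current below are hypothetical values, and the model deliberately ignores the small intermediate range between off and on.

```python
def mosfet_switch(v_gate: float, v_threshold: float, r_ds_on: float, i_load: float):
    """Idealized N-channel MOSFET used as a switch.
    Returns (conducting, power_dissipated_w). No gate current is modeled,
    reflecting the voltage-controlled operation described in the text."""
    if v_gate < v_threshold:
        return False, 0.0                 # no conduction region: switch is open
    return True, i_load ** 2 * r_ds_on    # conduction loss P = I^2 x R

# Hypothetical device: 2 V threshold, 0.05 ohm on-resistance, 2 A load
for v_g in (0.0, 1.5, 5.0):
    on, p = mosfet_switch(v_g, 2.0, 0.05, 2.0)
    print(f"Vg = {v_g:.1f} V: {'on' if on else 'off'}, {p:.2f} W")
```

A low on-resistance keeps the power wasted in the switch small even at high currents, which is why it is one of the key figures used to characterize a MOSFET.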

The symbols for the N-channel MOSFET and its complementary device, the P-channel MOSFET, are shown in Fig. 3-22. The P-channel MOSFET creates a conduction region when a negative voltage is applied to its gate. MOSFET transistors come in a variety of packages, and some can handle tens of amps of current, but these tend to be very expensive.

MOSFETs do not have the issues of bipolar transistors: at the time of this writing, their gate widths (the measurement used to characterize the size of MOSFET devices) are as small as 57 nm in high-performance microprocessors and memory chips. The voltage-controlled operation of MOSFETs eliminates the wasted current and power of the bipolar transistor’s base. But while MOSFETs do not have the disadvantages of bipolar transistors, they do not have their advantages either.

MOSFET transistors do not have a small signal/linear operating region; they tend to change from completely off to completely on (conducting) with a very small intermediate range. MOSFETs also tend to operate at slower speeds than bipolar devices because their gates act as capacitors and ‘‘slow down’’ the signals, as I showed in the previous section. This point has become somewhat moot as modern MOSFET designs continually increase in speed, providing us with extremely high-speed PCs and other electronic devices. Finally, it is difficult to manufacture MOSFETs with high current capabilities; while high-current MOSFETs are available, they are surprisingly expensive.

The characteristics of the two types of transistors lead to the conclusion that bipolar transistors are best suited to situations where a few high-current devices are required, while MOSFET transistors are best suited for applications where large numbers of transistors are placed on a single chip. Today, for the most part, digital electronic designs follow these guidelines, but we are left with an interesting legacy. Despite being structurally much simpler and cheaper to manufacture, MOSFET transistors were only perfected in the late 1960s, whereas bipolar technology had already been around for 20 years and had become entrenched as the basis for many digital electronic devices and chips. For this reason, you must be aware of the operating characteristics of bipolar transistors as well as those of MOSFET transistors. In the next section, many of these differences will become apparent.