19.6.4 TCP over Ad Hoc Networks

TCP is the prevalent transport protocol in the Internet today, and its use over ad hoc networks is a certainty. This has motivated a great deal of research aimed not only at evaluating TCP performance over ad hoc networks, but also at proposing TCP schemes suited to this kind of network. TCP was originally designed for wired networks, based on the following assumptions, typical of such an environment: packet losses are mainly caused by congestion, links are reliable (very low bit error rate), round-trip times are stable, and bandwidth is constant (Postel, 1981; Huston, 2001). Based on these assumptions, TCP flow control employs a window-based technique, in which the key idea is to probe the network to determine the available resources. The window is adjusted according to an additive-increase/multiplicative-decrease strategy. When packet loss is detected, the TCP sender retransmits the lost packets and invokes the congestion control mechanisms, which include exponential backoff of the retransmission timers and reduction of the transmission rate by shrinking the window size. Packet losses are therefore interpreted by TCP as a symptom of congestion (Chandran, 2001).

Previous studies on the use of TCP over cellular wireless networks have shown that this protocol performs poorly, mainly because the principal cause of packet loss in wireless networks is no longer congestion, but the error-prone wireless medium (Xylomenos, 2001; Balakrishnan, 1997). In addition, multiple users in a wireless network may share the same medium, rendering the transmission delay time-variant. Therefore, a packet lost to transmission errors, or merely delayed, can be interpreted by TCP as a sign of congestion.

When TCP is used over ad hoc networks, additional problems arise. Unlike cellular networks, where only the last hop is wireless, in ad hoc networks the entire path between the TCP sender and the TCP destination may be made up of wireless hops (multihop). Therefore, as discussed earlier in this chapter, appropriate routing protocols and medium access control mechanisms (at the link control layer) are required to establish a path connecting the sender and the destination. The interaction between TCP and the protocols at the physical, link, and network layers can cause serious performance degradation, as discussed in the following.
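The additive-increase/multiplicative-decrease rule mentioned above can be illustrated with a minimal sketch. The function names, the per-round-trip granularity, and the default parameters (increase of one segment, halving on loss) are assumptions chosen for illustration; the sketch ignores slow start and timers.

```python
def aimd_step(cwnd: float, loss_detected: bool,
              increase: float = 1.0, decrease: float = 0.5,
              min_cwnd: float = 1.0) -> float:
    """Return the next window size (in segments) after one round trip.

    Illustrative parameters: add `increase` per loss-free round trip,
    multiply by `decrease` when a loss is detected.
    """
    if loss_detected:
        return max(min_cwnd, cwnd * decrease)
    return cwnd + increase


def simulate(loss_rounds: set, rounds: int, cwnd: float = 1.0) -> list:
    """Trace the window over `rounds` round trips; losses occur in `loss_rounds`."""
    trace = []
    for r in range(rounds):
        cwnd = aimd_step(cwnd, r in loss_rounds)
        trace.append(cwnd)
    return trace


if __name__ == "__main__":
    # A single loss in round 3 halves the window, regardless of its cause.
    print(simulate({3}, 5))
```

Note that the rule reacts identically to a loss caused by congestion and to one caused by a corrupted frame, which is the root of the problems discussed in the rest of this section.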

Physical Layer Impact

Interference and propagation channel effects are the main causes of high bit error rates in wireless networks. Channel-induced errors can corrupt TCP data packets or acknowledgement (ACK) packets, resulting in packet losses. If an ACK is not received within the retransmission timeout (RTO) interval, the loss may be mistakenly interpreted as a symptom of congestion, causing the invocation of the TCP congestion control mechanisms. As a consequence, the TCP transmission rate is drastically reduced, degrading the overall performance. The reaction of TCP to packet losses due to channel errors is therefore clearly inappropriate. One approach to avoid this behavior is to make the wireless channel more reliable by employing appropriate forward error correction (FEC) coding, at the expense of a reduction of the effective bandwidth (due to the added redundancy) and an increase in the transmission delay (Shakkottai, 2003). In addition to FEC, link layer automatic repeat request (ARQ) schemes can be used to provide faster retransmission than that provided at upper layers. However, ARQ schemes may increase the transmission delay, leading TCP to assume a large round-trip time or to trigger its own retransmission procedure at the same time (Huston, 2001).
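To give a feeling for the trade-off described above, the following sketch relates the bit error rate to the packet loss probability and shows the bandwidth cost of FEC redundancy. It assumes independent bit errors and a packet that is lost if any bit is in error (no correction); the function names and the numbers in the example are illustrative only.

```python
def packet_error_rate(ber: float, packet_bits: int) -> float:
    """Probability that at least one bit of the packet is corrupted,
    assuming independent bit errors with probability `ber`."""
    return 1.0 - (1.0 - ber) ** packet_bits


def effective_bandwidth(raw_bps: float, data_bits: int, parity_bits: int) -> float:
    """Bandwidth left for data when `parity_bits` of FEC redundancy
    are added to every `data_bits` of payload."""
    return raw_bps * data_bits / (data_bits + parity_bits)


if __name__ == "__main__":
    # A 1500-byte packet (12000 bits) on a channel with BER = 1e-5.
    print(packet_error_rate(1e-5, 12000))
    # A rate-3/4 code on a 2 Mb/s channel leaves 1.5 Mb/s for data.
    print(effective_bandwidth(2e6, 3, 1))
```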

MAC Layer Impact

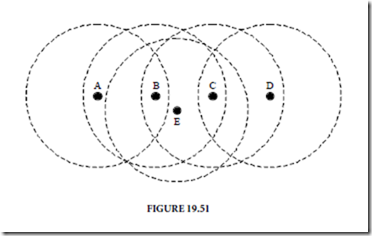

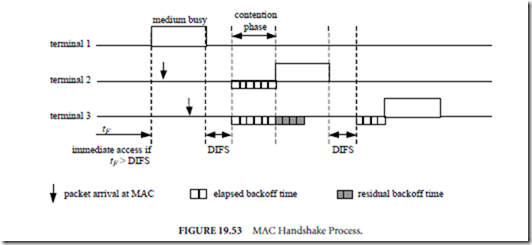

It is well known that the hidden and exposed terminal problems strongly degrade the overall performance of ad hoc networks. Several techniques for avoiding such problems have been proposed, including the RTS/CTS control packet exchange employed in the IEEE 802.11 MAC protocol. However, despite the use of such techniques, hidden and exposed terminal problems can still occur, causing anomalous TCP behavior. The inappropriate interaction between TCP and link control layer mechanisms in multihop scenarios may cause the so-called TCP instability (Xu, 2001). TCP adaptively controls its transmission rate by adjusting its congestion window size. The window size determines the number of packets in flight in the network (i.e., the number of packets that can be transmitted before an ACK is received by the TCP sender). Large window sizes increase the contention level at the link layer, as more packets will be trying to make their way to the destination terminal. This increased contention level leads to packet collisions and causes the exposed terminal problem, preventing intermediate nodes from reaching their adjacent terminals (Xu, 2001). When a terminal cannot deliver its packets to its neighbor, it reports a route failure to the source terminal, which reacts by invoking the route reestablishment mechanisms of the routing protocol. If the route reestablishment takes longer than the RTO, the TCP congestion control mechanisms are triggered, shrinking the window size and retransmitting the lost packets. The invocation of congestion control mechanisms results in a momentary reduction of TCP throughput, causing the mentioned TCP instability.

It has been experimentally verified that reducing the TCP congestion window size minimizes TCP instability (Xu, 2001). However, a reduced window size inhibits spatial channel reuse in multihop scenarios. For the case of the IEEE 802.11 MAC, which uses a four-way handshake (RTS-CTS-Data-ACK), it can be shown that, in an H-hop chain configuration, a maximum of H/4 terminals can transmit simultaneously (Fu, 2003), assuming ideal scheduling and identical packet sizes. Therefore, a window size smaller than this upper limit degrades the channel utilization efficiency.

Another important issue related to the interaction between TCP and the link layer protocols is the unfairness problem that arises when multiple TCP sessions are active. The unfairness problem (Xu, 2001; Tang, 2001) is also rooted in the hidden terminal (collisions) and exposed terminal problems, and can completely shut down one of the TCP sessions. When a terminal is not allowed to send its data packet to its neighbor due to collisions or the exposed terminal problem, its backoff scheme is invoked at the link layer, increasing (though randomly) its backoff time. If the backoff scheme is repeatedly triggered, the terminal will hardly ever win a contention, and the winning terminal will eventually capture the medium, shutting down the TCP sessions at the losing terminals.
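The two quantities discussed above, the spatial reuse limit of a chain and the growth of the link layer backoff, can be sketched as follows. The function names are hypothetical, and the contention window bounds of 31 and 1023 slots are assumed values typical of 802.11 DSSS configurations rather than figures taken from this chapter.

```python
import random


def max_simultaneous_senders(hops: int) -> int:
    """Upper limit of H/4 concurrent transmitters in an H-hop chain
    (ideal scheduling, identical packet sizes); at least one sender."""
    return max(1, hops // 4)


def contention_window(failed_attempts: int, cw_min: int = 31, cw_max: int = 1023) -> int:
    """Binary exponential backoff: the window roughly doubles after
    every failed attempt until it saturates at cw_max (assumed bounds)."""
    return min(cw_max, (cw_min + 1) * 2 ** failed_attempts - 1)


def draw_backoff(failed_attempts: int, rng: random.Random) -> int:
    """Random backoff, in slots, drawn uniformly from [0, CW]."""
    return rng.randint(0, contention_window(failed_attempts))


if __name__ == "__main__":
    rng = random.Random(1)
    print("8-hop chain, concurrent senders:", max_simultaneous_senders(8))
    for failures in range(6):
        print(failures, contention_window(failures), draw_backoff(failures, rng))
```

A terminal that has failed several times draws from a much larger window than a terminal that has just succeeded, which is why the loser of a contention tends to keep losing.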

Mobility Impact

Due to terminal mobility, route failures can occur frequently during the lifetime of a TCP session. As discussed above, when a route failure is detected, the routing protocol invokes its route reestablishment mechanisms, and if the discovery of a new route takes longer than the RTO, the TCP sender will interpret the route failure as congestion. Consequently, TCP congestion control is invoked and the lost packets are retransmitted. This reaction is clearly inappropriate for several reasons (Chandran, 2001). Firstly, lost packets should not be retransmitted until the route is reestablished. Secondly, when the route is eventually restored, the TCP slow start strategy will force the throughput to be unnecessarily low immediately after the route reestablishment. In addition, if route failures are frequent, TCP throughput will never reach high rates.

Main TCP Schemes Proposals for Ad Hoc Networks

TCP-Feedback

This TCP scheme is based on explicitly informing the TCP sender of a route failure, so that it does not mistakenly invoke congestion control (Chandran, 2001). When an intermediate terminal detects a route failure, it sends a route failure notification (RFN) to the TCP sender terminal and records this event. Upon receiving an RFN, the TCP sender transitions to a “snooze” state and (i) stops sending packets, (ii) freezes its flow control window size, as well as all its timers, and (iii) starts a route failure timer, among other actions. When an intermediate terminal that forwarded the RFN discovers a new route, it sends a route reestablishment notification (RRN) to the TCP sender, which in turn leaves the snooze state and resumes its normal operation.
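The sender-side behavior described above amounts to a two-state machine, sketched below under simplifying assumptions. The class and attribute names are hypothetical, timers are reduced to boolean flags, and the expiry of the route failure timer is not modeled because the text does not describe it.

```python
from enum import Enum


class SenderState(Enum):
    ACTIVE = "active"
    SNOOZE = "snooze"


class FeedbackSender:
    """Toy model of a TCP sender reacting to RFN and RRN messages."""

    def __init__(self, window: int = 8):
        self.state = SenderState.ACTIVE
        self.window = window                 # flow control window, in packets
        self.timers_frozen = False
        self.route_failure_timer_running = False

    def on_rfn(self) -> None:
        """Route failure notification: enter the snooze state."""
        self.state = SenderState.SNOOZE      # (i) stop sending packets
        self.timers_frozen = True            # (ii) freeze timers; window is left untouched
        self.route_failure_timer_running = True  # (iii) start route failure timer

    def on_rrn(self) -> None:
        """Route reestablishment notification: resume normal operation."""
        self.state = SenderState.ACTIVE
        self.timers_frozen = False
        self.route_failure_timer_running = False

    def can_send(self) -> bool:
        return self.state is SenderState.ACTIVE


if __name__ == "__main__":
    sender = FeedbackSender(window=8)
    sender.on_rfn()
    print(sender.can_send(), sender.window)
    sender.on_rrn()
    print(sender.can_send(), sender.window)
```

The point of the design is visible in the last line: after the route is restored the window is the same as before the failure, so the sender does not have to climb back from a collapsed window.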

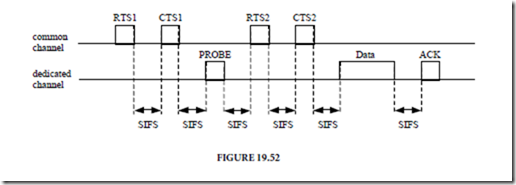

TCP with Explicit Link Failure Notification

The explicit link failure notification (ELFN) technique is based on providing the TCP sender with information about link or route failures, preventing TCP from reacting to such failures as if congestion had occurred (Holland, 2002). In this approach, the ELFN message is generated by the routing protocol, and the notice to the TCP sender about the link failure is piggybacked on it. When the TCP sender receives this notice, it disables its retransmission timers and periodically probes the network (by sending packets) to check whether the route has been reestablished. When an ACK is received, the TCP sender assumes that a new route has been established and resumes its normal operation.
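The probing behavior of the ELFN sender can be sketched as a simple loop. The callable `probe_acked` is a hypothetical stand-in for "send a probe packet and report whether an ACK came back"; real probing is timer driven, which is abstracted away here.

```python
from typing import Callable, Optional


def probe_until_restored(probe_acked: Callable[[int], bool],
                         max_probes: int) -> Optional[int]:
    """Send up to `max_probes` probes while retransmission timers are disabled.

    Returns the index (starting at 1) of the first probe that was
    acknowledged, meaning the route is assumed to be reestablished,
    or None if no probe was acknowledged.
    """
    for probe in range(1, max_probes + 1):
        if probe_acked(probe):
            return probe   # ACK received: resume normal TCP operation
    return None            # route still down after max_probes attempts


if __name__ == "__main__":
    # Hypothetical scenario: the route comes back before the third probe.
    print(probe_until_restored(lambda k: k >= 3, max_probes=10))
```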

Ad Hoc TCP

A key feature of this approach is that standard TCP is not modified; instead, an intermediate layer, called ad hoc TCP (ATCP), is inserted between the IP and TCP (transport) layers. Therefore, ATCP is invisible to TCP, and terminals with and without ATCP installed can interoperate. ATCP operates based on the network status information provided by the Internet control message protocol (ICMP) and the explicit congestion notification (ECN) mechanism (Floyd, 1994). The ECN mechanism is used to inform the TCP destination of the congestion situation in the network. An ECN bit is included in the packet header and is set to zero by the TCP sender. Whenever an intermediate router detects congestion, it sets the ECN bit to one. When the TCP destination receives a packet with the ECN bit set to one, it informs the TCP sender about the congestion situation, and the sender in turn reduces its transmission rate.

ATCP has four possible states: normal, congested, loss, and disconnected. In the normal state ATCP does nothing and is invisible to TCP. In the congested, loss, and disconnected states, ATCP deals with a congested network, a lossy channel, and a partitioned network, respectively. When ATCP sees three duplicate ACKs (likely caused by channel-induced errors), it transitions to the loss state and puts TCP into persist mode, ensuring that TCP does not invoke its congestion control mechanisms. In the loss state, ATCP retransmits the unacknowledged segments. When a new ACK arrives, ATCP returns to the normal state and removes TCP from persist mode, restoring normal TCP operation. When network congestion occurs, ATCP sees the ECN bit set to one and transitions to the congested state. In this state, ATCP does not interfere with the TCP congestion control mechanisms. Finally, when a route failure occurs, a destination unreachable message is issued by ICMP. Upon receiving this message, ATCP puts TCP into persist mode and transitions to the disconnected state. While in persist mode, TCP periodically sends probe packets. When the route is eventually reestablished, TCP is removed from persist mode and ATCP transitions back to the normal state.
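The four ATCP states and the transitions described above can be summarized in a small state machine. This is a sketch only: the class and method names are hypothetical, segment retransmission is omitted, and the return from the congested state on the next new ACK is an assumption, since the text does not say how that state is left.

```python
from enum import Enum


class AtcpState(Enum):
    NORMAL = "normal"
    CONGESTED = "congested"
    LOSS = "loss"
    DISCONNECTED = "disconnected"


class Atcp:
    """Toy ATCP layer tracking its own state and whether TCP is in persist mode."""

    def __init__(self):
        self.state = AtcpState.NORMAL
        self.tcp_persist = False
        self.dup_acks = 0

    def on_duplicate_ack(self) -> None:
        self.dup_acks += 1
        if self.dup_acks >= 3 and self.state is AtcpState.NORMAL:
            self.state = AtcpState.LOSS      # losses attributed to channel errors
            self.tcp_persist = True          # keep TCP congestion control quiet

    def on_new_ack(self) -> None:
        self.dup_acks = 0
        if self.state in (AtcpState.LOSS, AtcpState.CONGESTED):
            # LOSS -> NORMAL follows the text; CONGESTED -> NORMAL is an assumption.
            self.state = AtcpState.NORMAL
            self.tcp_persist = False

    def on_ecn_marked(self) -> None:
        self.state = AtcpState.CONGESTED     # TCP congestion control is left alone

    def on_icmp_destination_unreachable(self) -> None:
        self.state = AtcpState.DISCONNECTED
        self.tcp_persist = True              # TCP sends periodic probes

    def on_route_reestablished(self) -> None:
        self.state = AtcpState.NORMAL
        self.tcp_persist = False


if __name__ == "__main__":
    atcp = Atcp()
    for _ in range(3):
        atcp.on_duplicate_ack()
    print(atcp.state, atcp.tcp_persist)
    atcp.on_new_ack()
    print(atcp.state, atcp.tcp_persist)
```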

19.6.5 Capacity of Ad Hoc Networks

The classical information theory introduced by Shannon (Shannon, 1948) presents theoretical results on channel capacity, that is, on how much information can be transmitted over a noisy and band-limited communication channel. In ad hoc networks this problem becomes considerably more difficult, because the capacity must now be investigated in terms of several transmitters and several receivers. The analysis of the capacity of wireless networks has an objective similar to that of classical information theory: to estimate the limit on how much information can be transmitted and to determine the optimal operation mode, so that this limit can be achieved.

A first attempt to calculate these bounds is made by Gupta and Kumar (Gupta, 2000). In this work, the authors propose a model for studying the capacity of a static ad hoc network (i.e., one in which nodes do not move), based on the following scenario. Suppose that n nodes are located in a region of area 1. Each node can transmit at W bits per second over a common wireless channel. Packets are sent from node to node in a multihop fashion until their final destination is reached, and they can be buffered at intermediate nodes while waiting for transmission. Two types of network configurations are considered: arbitrary networks, where the node locations, traffic destinations, rates, and power levels are all arbitrary; and random networks, where the node locations and destinations are random, but all nodes have the same transmit power and data rate. Two models of successful reception over one hop are also proposed:

• Protocol Model: a transmission from node $i$ to node $j$, separated by a distance $d_{ij}$, is successful if $d_{kj} \geq (1 + \Upsilon)\, d_{ij}$ for every other node $k$ transmitting simultaneously over the same channel; that is, every interfering transmitter must be farther from the receiver $j$ than the intended sender $i$ by at least the factor $(1 + \Upsilon)$. The quantity $\Upsilon > 0$ models the guard zone specified by the protocol to prevent a neighboring node from transmitting simultaneously.

• Physical Model: for a subset $T$ of simultaneously transmitting nodes, the transmission from a node $i \in T$ is successfully received by node $j$ if

$$\frac{P_i / d_{ij}^{\alpha}}{N + \sum_{k \in T,\, k \neq i} P_k / d_{kj}^{\alpha}} \geq \beta$$

where $\beta$ is the minimum signal-to-interference ratio (SIR) for successful reception, $N$ is the noise power level, $P_i$ is the transmission power level of node $i$, and $\alpha$ is the signal power decay exponent.
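Both reception models can be checked numerically for a given set of node positions. The sketch below assumes two-dimensional coordinates and uses hypothetical function and parameter names (`guard` for the guard zone parameter, `beta` for the SIR threshold, `alpha` for the decay exponent, `noise` for N). The physical model test is the usual ratio of received signal power to noise plus interference, with received power decaying as distance to the power α.

```python
import math


def protocol_model_ok(pos, i, j, transmitters, guard):
    """True if every other simultaneous transmitter k satisfies
    d_kj >= (1 + guard) * d_ij."""
    d_ij = math.dist(pos[i], pos[j])
    return all(math.dist(pos[k], pos[j]) >= (1.0 + guard) * d_ij
               for k in transmitters if k != i)


def physical_model_ok(pos, power, i, j, transmitters, alpha, beta, noise):
    """True if the signal from i, relative to noise plus interference
    from the other transmitters, reaches the threshold beta at j."""
    signal = power[i] / math.dist(pos[i], pos[j]) ** alpha
    interference = sum(power[k] / math.dist(pos[k], pos[j]) ** alpha
                       for k in transmitters if k != i)
    return signal / (noise + interference) >= beta


if __name__ == "__main__":
    # Illustrative layout: sender i, receiver j, interferer k on a line.
    pos = {"i": (0.0, 0.0), "j": (1.0, 0.0), "k": (3.0, 0.0)}
    power = {"i": 1.0, "k": 1.0}
    print(protocol_model_ok(pos, "i", "j", ["i", "k"], guard=0.5))
    print(physical_model_ok(pos, power, "i", "j", ["i", "k"],
                            alpha=2.0, beta=2.0, noise=0.1))
```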

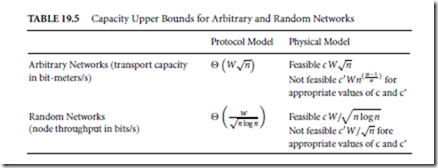

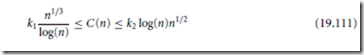

The transport capacity is defined as the number of bits transported multiplied by the distance over which they are carried, measured in bit-meters. One bit-meter signifies that one bit has been transported over a distance of one meter toward its destination. With the reception models described previously, the upper bounds for the transport capacity in arbitrary networks and the throughput per node in random networks were calculated. They are summarized in Table 19.5, where Knuth’s notation has been used, that is, $f(n) = \Theta(g(n))$ denotes that $f(n) = O(g(n))$ as well as $g(n) = O(f(n))$. In Table 19.5, $c$ and $c'$ are constants which are functions of $\alpha$ and $\beta$. These results show that, for arbitrary networks, if the transport capacity is divided into equal parts among all nodes, the throughput per node will be $\Theta(W/\sqrt{n})$ bits per second. That is, as the number of nodes increases, the throughput available to each node diminishes in proportion to the square root of the number of nodes. The same type of result holds for random networks. These results assume a perfect scheduling algorithm which knows the locations of all nodes and all traffic demands, and which coordinates wireless transmissions temporally and spatially to avoid collisions. Without these assumptions the capacity can be even smaller.

Toumpis and Goldsmith (Toumpis, 2000) extend the analysis of upper limits of (Gupta, 2000) to a three-dimensional topology, and incorporate the channel capacity into the link model. In this work, the nodes are assumed to be uniformly distributed within a cube of volume 1 m³. The capacity $C(n)$ follows the inequality

with probability approaching unity as n → ∞, where k1 and k2 are positive constants. Equation (19.111) also suggests that, although the capacity increases with the number of users, the available rate per user decreases.
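The square-root scaling of the per-node throughput in arbitrary networks can be made concrete with a short calculation. The sketch below drops the constant factor hidden by the order notation, so only ratios between network sizes are meaningful; the function name is hypothetical.

```python
import math


def per_node_throughput_scale(w_bps: float, n: int) -> float:
    """W / sqrt(n): per-node throughput up to a constant factor,
    obtained by sharing the transport capacity equally among n nodes."""
    return w_bps / math.sqrt(n)


if __name__ == "__main__":
    w = 1e6  # illustrative channel rate of 1 Mb/s
    for n in (100, 400, 1600):
        print(n, per_node_throughput_scale(w, n))
```

Each fourfold increase in the number of nodes halves the throughput available to each node.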

Case Studies on Capacity of Ad Hoc Networks

IEEE 802.11

Li et al. (Li, 2001) study the capacity of ad hoc networks through simulations and field tests. Again, the static ad hoc network is the basic scenario, which is justified by the fact that in most mobility scenarios nodes do not move significant distances during packet transmissions. The 802.11 MAC protocol is used to analyze the capacity of different network configurations.

For the chain of nodes, the ideal capacity is 1/4 of the raw channel bandwidth obtainable from the radio (single-hop throughput). The simulated 802.11-based ad hoc network achieves a capacity of 1/7 of the single-hop throughput, because the 802.11 protocol fails to discover the optimum schedule of transmission and its backoff procedure performs poorly with ad hoc forwarding. The field experiment does not present different results from those obtained in the simulation. The same results are found for the lattice topology. For random networks with random traffic patterns, the 802.11 protocol is less efficient, but the theoretical maximum capacity of O(1/√n) per node can be achieved.

It is also shown that the scalability of ad hoc networks is a function of the traffic pattern. In order for the total capacity to scale up with the network size, the average distance between source and destination nodes must remain small as the network grows. Therefore, the key factor deciding whether large networks are feasible is the traffic pattern. For networks with localized traffic the expansion is feasible, whereas for networks in which the traffic must traverse the whole network the expansion is questionable.

Wireless Mesh Networks

A particular case of ad hoc network that is drawing significant attention is the wireless mesh network (WMN). The main characteristic that differentiates a WMN from other ad hoc networks is the traffic pattern: practically all traffic is either to or from a node (gateway) that is connected to other networks (e.g., the Internet). Consequently, the gateway plays a decisive role in the WMN: the greater the number of gateways, the greater the capacity of the network, as well as its reliability. Jun and Sichitiu (Jun, October 2003) analyze the capacity of WMNs with stationary nodes. Their work shows that the capacity of WMNs is extremely dependent on the following aspects:

• Relayed traffic and fairness: Each node in a WMN must transmit relayed traffic as well as its own. Thus, there is an inevitable contention between its own traffic and relayed traffic. In practice, as the offered load at each node increases, the nodes closest to the gateway tend to consume a larger share of the bandwidth, even with a fair MAC layer protocol. Absolute fairness must therefore be enforced according to the offered load.

• Nominal capacity of the MAC layer (B): It is defined as the maximum achievable throughput at the MAC layer in a one-hop network. It can be calculated as presented in (Jun, April 2003).

• Link constraints and collision domains: In essence, all MAC protocols are designed to avoid collisions while ensuring that only one node transmits at a time in a given region. The collision domain of a link is the set of links (including the transmitting one) that must be inactive for that link to transmit successfully.

The chain topology is analyzed first. It is observed that the node closest to the gateway has to forward more traffic than nodes farther away. For an n-node network and a per-node generated load G, the link between the gateway and the node closest to it has to be able to forward traffic equal to nG. The link between this node and the next node has to be able to forward traffic equal to (n − 1)G, and so on. The collision domains are identified and the bottleneck collision domain, which has to transfer the most traffic in the network, is determined. The throughput available for each node is bounded by the nominal capacity B divided by the total traffic of the bottleneck collision domain. The chain topology analysis can be extended to a two-dimensional topology (arbitrary network). The values obtained for the throughput per node are validated with simulation results.
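The chain analysis can be reproduced with a few lines of code. The sketch assumes, for illustration only, that the collision domain of a link consists of that link plus the links within two hops on either side (the `reach` parameter); the actual extent depends on the interference range. Link loads are expressed in units of G, so the bound on G is B divided by the load of the bottleneck collision domain.

```python
def link_loads(n: int) -> list:
    """Load on each link of an n-node chain, in units of G.
    Index 0 is the link next to the gateway (load n), the last link has load 1."""
    return [n - k for k in range(n)]


def bottleneck_load(n: int, reach: int = 2) -> int:
    """Largest total load over all collision domains, assuming a domain
    covers a link and the links within `reach` hops on either side."""
    loads = link_loads(n)
    return max(sum(loads[max(0, k - reach):k + reach + 1]) for k in range(n))


def per_node_throughput_bound(nominal_capacity: float, n: int, reach: int = 2) -> float:
    """Upper bound on the per-node load G: B divided by the traffic
    (in units of G) of the bottleneck collision domain."""
    return nominal_capacity / bottleneck_load(n, reach)


if __name__ == "__main__":
    for n in (4, 8, 16):
        print(n, bottleneck_load(n), per_node_throughput_bound(1.0, n))
```

Under the assumed two-hop reach, the bottleneck load grows linearly with n for long chains, so the bound on G shrinks roughly as 1/n.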

These results lead to an asymptotic throughput per node of O(1/n). This is significantly worse than the results shown in Table 19.5, mainly because of the presence of gateways, which are the network bottlenecks.

Clearly, the available throughput improves with the increase of the number of gateways in the network.

Increasing the Capacity of Ad Hoc Networks

The expressions presented in Table 19.5 indicate the best performance achievable considering optimal scheduling, routing, and relaying of packets in static networks. This is a bad result as far as scalability is concerned and encourages researchers to pursue techniques that increase the average throughput. One approach to increase capacity is to add relay-only nodes to the network. The major disadvantage of this scheme is that it requires a large number of pure relay nodes. For random networks under the protocol model with m additional relay nodes, the throughput available per node becomes $\Theta\!\left(\frac{W(n+m)}{n\sqrt{(n+m)\log(n+m)}}\right)$ (Gupta, 2000). For example, in a network with 100 senders, at least 4476 relay nodes are needed to quintuple the capacity (Gupta, 2000).

Another strategy is to introduce mobility into the model. Grossglauser and Tse (Grossglauser, 2001) show that it is possible for each sender-receiver pair to obtain a constant fraction of the total available bandwidth, which is independent of the number of pairs, at the expense of an increasing delay in the transmission of packets and an increase in the size of the buffers needed at the intermediate relay nodes. The same results are presented by Bansal and Liu (Bansal, 2003), but with low delay constraints and a particular mobility model similar to the random waypoint model (Bettstetter, 2002). However, mobility introduces new problems such as maintaining connectivity within the ad hoc network, distributing routing information, and establishing access control. (An analysis of the connectivity of ad hoc networks can be found in (Bettstetter, 2002).)

The nodes can also be grouped into small clusters, where in each cluster a specific node (clusterhead) is designated to carry all the relayed packets (Lin, 1997). This can increase the capacity and reduce the impact of the transmission overhead due to routing and MAC protocols. On the other hand, the mechanisms to update the information in clusterheads generate additional transmissions, which reduce the effective node throughput.
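The relay-node example can be checked numerically. The sketch below assumes that the per-node throughput with n senders and m relay-only nodes scales as W(n + m) / (n √((n + m) log(n + m))), with the natural logarithm and with the constant factor and W dropped; the function names are hypothetical. It searches for the smallest m that multiplies the throughput of the relay-free network by a given factor.

```python
import math


def relay_throughput_scale(n: int, m: int) -> float:
    """Per-node throughput scale (constants and W omitted) for n senders
    and m relay-only nodes, under the assumed scaling law."""
    total = n + m
    return total / (n * math.sqrt(total * math.log(total)))


def relays_needed(n: int, gain: float) -> int:
    """Smallest number of relay nodes giving at least `gain` times the
    per-node throughput of the network without relays."""
    target = gain * relay_throughput_scale(n, 0)
    m = 0
    while relay_throughput_scale(n, m) < target:
        m += 1
    return m


if __name__ == "__main__":
    print(relays_needed(100, 5.0))
```

For 100 senders and a fivefold gain the search returns 4476, in agreement with the figure quoted from (Gupta, 2000).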


References

Aggelou, G., Tafazolli, R., RDMAR: A Bandwidth-efficient Routing Protocol for Mobile Ad Hoc Networks, ACM International Workshop on Wireless Mobile Multimedia (WoWMoM), 1999, pp. 26–33.

Balakrishnan, H., Padmanabhan, V.N., Seshan, S., Katz, R.H., A Comparison of Mechanisms for Improving TCP Performance over Wireless Links, IEEE/ACM Trans. on Networking, vol. 5, no. 6, pp. 756–769, December 1997.

Bansal, N. and Liu, Z., Capacity, Delay and Mobility in Wireless Ad-Hoc Networks, Proc. IEEE INFOCOM ’03, April 2003.

Basagni, S. et al., A Distance Routing Effect Algorithm for Mobility (DREAM), ACM/IEEE Int’l. Conf. Mobile Comp. Net., pp. 76–84, 1998.

Bellur, B. and Ogier, R.G., A Reliable, Efficient Topology Broadcast Protocol for Dynamic Networks, Proc. IEEE INFOCOM ’99, New York, March 1999.

Bettstetter, C., On the Minimum Node Degree and Connectivity of a Wireless Multihop Network, Proc. ACM Intern. Symp. on Mobile Ad Hoc Networking and Computing (MobiHoc), June 2002.

Bluetooth SIG, Specification of the Bluetooth System, vol. 1.0, 1999, available at: http://www.bluetooth.

Chandra, A., Gummalla, V., Limb, J.O., Wireless Medium Access Control Protocols, IEEE Communications Surveys and Tutorials [online], vol. 3, no. 2, 2000, available at: http://www.comsoc.org/pubs/surveys/.

Chandran, K., Raghunathan, S., Venkatesan, S., Prakash, R., A Feedback-Based Scheme for Improving TCP Performance in Ad Hoc Wireless Networks, IEEE Personal Communications, pp. 34–39, February 2001.

Chao, C.M., Sheu, J.P., Chou, I-C., A load awareness medium access control protocol for wireless ad hoc network, IEEE International Conference on Communications, ICC’03, vol. 1, 11–15, pp. 438–442, May 2003.

Chatschik, B., An overview of the Bluetooth wireless technology, IEEE Communications Magazine, vol. 39, no. 12, pp. 86–94, Dec. 2001.

Chen, T.-W., Gerla, M., Global State Routing: a New Routing Scheme for Ad-hoc Wireless Networks, Proceedings of the IEEE ICC, 1998.

Chiang, C.-C. and Gerla, M., Routing and Multicast in Multihop, Mobile Wireless Networks, Proc. IEEE ICUPC ’97, San Diego, CA, Oct. 1997.

Corson, M.S. and Ephremides, A., A Distributed Routing Algorithm for Mobile Wireless Networks, ACM/Baltzer Wireless Networks 1, vol 1, pp. 61-81, 1995.

Das, S., Perkins, C., and Royer, E., Ad Hoc on Demand Distance Vector (AODV) Routing, Internet Draft, draft-ietf-manet-aodv-11.txt, 2002.

Dube, R., Rais, C., Wang, K., and Tripathi, S., Signal Stability Based Adaptive Routing (SSA) for Ad Hoc Mobile Networks, IEEE Personal Communication 4, vol 1, pp. 36–45, 1997.

Fang, J.C. and Kondylis, G.D., A synchronous, reservation based medium access control protocol for multihop wireless networks, 2003 IEEE Wireless Communications and Networking, WCNC 2003, vol. 2, pp. 994–998, 16–20 March 2003.

Fitzek, F.H.P., Angelini, D., Mazzini, G., and Zorzi, M., Design and performance of an enhanced IEEE 802.11 MAC protocol for multihop coverage extension, IEEE Wireless Communications, vol. 10, no. 6, pp. 30–39, Dec. 2003.

Floyd, S., TCP and Explicit Congestion Notification, ACM Computer Communication Review, vol. 24, pp. 10–23, October 1994.

Fu, Z., Zerfos, P., Luo, H., Lu, S., Zhang, L., and Gerla, M., The Impact of Multihop Wireless Channel on TCP Throughput and Loss, IEEE INFOCOM, pp. 1744–1753, 2003.

Garcia-Luna-Aceves, J.J. and Marcelo Spohn, C., Source-tree Routing in Wireless Networks, Proceedings of the Seventh Annual International Conference on Network Protocols, Toronto, Canada, p. 273, October 1999.

Garcia-Luna-Aceves, J.J. and Tzamaloukas, A., Reversing the Collision-Avoidance Handshake in Wireless Networks, ACM/IEEE MobiCom’99, pp. 15–20, August 1999.

Goodman, D.J., Valenzuela, R.A., Gayliard, K.T., Ramamurthi, B., Packet reservation multiple access for local wireless communications, IEEE Transactions on Communications, vol. 37, no. 8, pp. 885–890, Aug. 1989.

Grossglauser, M. and Tse, D., Mobility Increases the Capacity of Ad Hoc Wireless Networks, Proc. IEEE INFOCOM ’01, April 2001.

Günes, M., Sorges, U., and Bouazizi, I., ARA–The Ant-colony Based Routing Algorithm for Manets, ICPP Workshop on Ad Hoc Networks (IWAHN 2002), pp. 79–85, August 2002.

Gupta, P. and Kumar, P.R., The Capacity of Wireless Networks, IEEE Trans. Info. Theory, vol. 46, March 2000.

Haas, Z.J. and Deng, J., Dual busy tone multiple access (DBTMA)—a multiple access control scheme for ad hoc networks, IEEE Transactions on Communications, vol. 50, no. 6, pp. 975–985, June 2002.

Haas, Z.J. and Pearlman, R., Zone Routing Protocol for Ad-hoc Networks, Internet Draft, draft-ietf-manet-zrp-02.txt, 1999.

Holland, G. and Vaidya, N., Analysis of TCP Performance over Mobile Ad Hoc Networks, Wireless Networks, Kluwer Academic Publishers, vol. 8, pp. 275–288, 2002.

Huston, G., TCP in a Wireless World, IEEE Internet Computing, pp. 82–84, March–April, 2001.

IEEE 802.11 WG, Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications, IEEE/ANSI Std. 802-11, 1999 edn.

IEEE 802.11 WG, Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications: high-speed physical layer in the 5 GHz band, IEEE Std. 802-11a.

IEEE 802.11 WG, Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications: higher-speed physical layer extension in the 2.4 GHz band, IEEE Std. 802-11b.

IEEE 802.11 WG, Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications: Medium Access Control (MAC) Enhancements for Quality of Service (QoS), IEEE Draft Std. 802.11e/D5.0, August 2003.

Iwata, A. et al., Scalable Routing Strategies for Ad-hoc Wireless Networks, IEEE JSAC, pp. 1369–1379, August 1999.

Jacquet, P., Muhlethaler, P., Clausen, T., Laouiti, A., Qayyum, A., and Viennot, L., Optimized Link State Routing Protocol for Ad Hoc Networks, IEEE INMIC, Pakistan, 2001.

Jiang, M., Ji, J., and Tay, Y.C., Cluster based routing protocol, Internet Draft, draft-ietf-manet-cbrp-spec-01.txt, 1999.

Jiang, S., Rao, J., He, D., Ling, X., and Ko, C.C., A simple distributed PRMA for MANETs, IEEE Transactions on Vehicular Technology, vol. 51, no. 2, pp. 293–305, March 2002.

Joa-Ng, M. and Lu, I.-T., A Peer-to-peer Zone-based Two-level Link State Routing for Mobile Ad Hoc Networks, IEEE Journal on Selected Areas in Communications 17, vol. 8, pp. 1415–1425, 1999.

Johnson, D., Maltz, D., and Jetcheva, J., The Dynamic Source Routing Protocol for Mobile Ad Hoc Networks, Internet Draft, draft-ietf-manet-dsr-07.txt, 2002.

Jun, J. and Sichitiu, M.L., The Nominal Capacity of Wireless Mesh Networks, IEEE Wireless Communications, October 2003.

Jun, J., Peddabachagari, P., and Sichitiu, M.L., Theoretical Maximum Throughput of IEEE 802.11 and its Applications, Proc. 2nd IEEE Int’l Symp. Net. Comp. and Applications, pp. 212–25, April 2003.

Jurdak, R., Lopes, C.V., and Baldi, P., A Survey, Classification and Comparative Analysis of Medium Access Control Protocols for Ad Hoc Networks, IEEE Communications Surveys and Tutorials [online], vol. 6, no. 1, 2004. available at: http://www.comsoc.org/pubs/surveys/.

Kasera, K.K. and Ramanathan, R., A Location Management Protocol for Hierarchically Organized Multihop Mobile Wireless Networks, Proceedings of the IEEE ICUPC’97, San Diego, CA, pp. 158–162, October 1997.

Ko, Y.-B., Vaidya, N.H., Location-aided Routing (LAR) in Mobile Ad Hoc Networks, Proceedings of the Fourth Annual ACM/IEEE International Conference on Mobile Computing and Networking (Mobicom’98), Dallas, TX, 1998.

Li, J., Blake, C., De Couto, D.S.J., Lee, H.I., and Morris, R., Capacity of Ad Hoc Wireless Networks, Proc. 7th ACM Int’l Conf. Mobile Comp. and Net., pp. 61–69, July 2001.

Lin, C.R. and Gerla, M., Adaptive Clustering for Mobile Wireless Networks, IEEE Journal on Selected Areas in Communications, vol. 15, pp. 1265–1275, September 1997.

Mangold, S., Sunghyun Choi, Hiertz, G.R., Klein, O., and Walke, B., Analysis of IEEE 802.11e for QoS support in wireless LANs, IEEE Wireless Communications, vol. 10, no. 6, pp. 40–50, December 2003.

Muqattash, A. and Krunz, M., Power controlled dual channel (PCDC) medium access protocol for wireless ad hoc networks, 22nd. Annual Joint Conference of the IEEE Computer and Communications Societies, INFOCOM 2003, vol. 1, pp. 470–480, 30 March–3 April 2003.

Murthy, S. and Garcia-Luna-Aceves, J.J., An Efficient Routing Protocol for Wireless Networks, ACM Mobile Networks and App. J., Special Issue on Routing in Mobile Communication Networks, pp. 183–197, Oct. 1996.

Nikaein, N., Labiod, H., and Bonnet, C., Distributed Dynamic Routing Algorithm (DDR) for Mobile Ad Hoc Networks, Proceedings of the MobiHOC 2000: First Annual Workshop on Mobile Ad Hoc Networking and Computing, 2000.

Ogier, R.G. et al., Topology Broadcast based on Reverse-Path Forwarding (TBRPF), draft-ietf-manet-tbrpf-05.txt, INTERNET-DRAFT, MANET Working Group, March 2002.

Park, V.D. and Corson, M.S., A Highly Adaptive Distributed Routing Algorithm for Mobile Wireless Networks, Proceedings of INFOCOM, April 1997.

Pei, G., Gerla, M., and Chen, T.-W., Fisheye State Routing: A Routing Scheme for Ad Hoc Wireless Networks, Proc. ICC 2000, New Orleans, LA, June 2000.

Pei, G., Gerla, M., Hong, X., and Chiang, C., A Wireless Hierarchical Routing Protocol with Group Mobility, Proceedings of Wireless Communications and Networking, New Orleans, 1999.

Perkins, C.E. and Bhagwat, P., Highly Dynamic Destination-Sequenced Distance-Vector Routing (DSDV) for Mobile Computers, Comp. Commun. Rev., pp. 234–244, Oct. 1994.

Postel, J., Transmission Control Protocol, IETF RFC 793, September 1981.

Radhakrishnan, S., Rao, N.S.V., Racherla, G., Sekharan, C.N., and Batsell, S.G., DST–A Routing Protocol for Ad Hoc Networks Using Distributed Spanning Trees, IEEE Wireless Communications and Networking Conference, New Orleans, 1999.

Raju, J. and Garcia-Luna-Aceves, J., A New Approach to On-demand Loop-free Multipath Routing, Proceedings of the 8th Annual IEEE International Conference on Computer Communications and Networks (ICCCN), pp. 522–527, Boston, MA, October 1999.

Santivanez, C., Ramanathan, R., and Stavrakakis, I., Making Link-State Routing Scale for Ad Hoc Networks, Proc. 2001 ACM Int’l. Symp. Mobile Ad Hoc Net. Comp., Long Beach, CA, October 2001.

Schiller, J., Mobile Communications, Addison-Wesley, Reading, MA, 2000.

Shakkottai, S., Rappaport, T.S., and Karlsson, P.C, Cross-Layer Design for Wireless Networks, IEEE Communications Magazine, pp. 74–80, October 2003.

Shannon, C.E., A mathematical theory of communication, Bell System Technical Journal, vol. 27, pp. 379–423, July 1948.

Su, W. and Gerla, M., IPv6 Flow Handoff in Ad-hoc Wireless Networks Using Mobility Prediction, IEEE Global Communications Conference, Rio de Janeiro, Brazil, pp. 271–275, December 1999.

Talucci, F., Gerla, M., and Fratta, L., MACA-BI (MACA By Invitation)-a receiver oriented access protocol for wireless multihop networks, 8th IEEE International Symposium on Personal, Indoor and Mobile Radio Communications, PIMRC’97, Vol. 2, pp. 435–439, 1-4 Sept. 1997.

Tang, K., Gerla, M., and Correa, M., Effects of Ad Hoc MAC Layer Medium Access Mechanisms under TCP, Mobile Networks and Applications, Kluwer Academic Publishers, Vol. 6, pp. 317–329, 2001.

Toh, C., A Novel Distributed Routing Protocol to Support Ad-hoc Mobile Computing, IEEE 15th Annual International Phoenix Conf., pp. 480–486, 1996.

Toumpis, S. and Goldsmith, A., Ad Hoc Network Capacity, Conference Record of Thirty-fourth Asilomar Conference on Signals Systems and Computers, Vol 2, pp. 1265–1269, 2000.

Tseng, Y.C. and Hsieh, T.Y., Fully power-aware and location-aware protocols for wireless multi-hop ad hoc networks, 11th. International Conference on Computer Communications and Networks, pp. 608–613, 14–16 Oct. 2002.

Woo, S.-C. and Singh, S., Scalable Routing Protocol for Ad Hoc Networks, Wireless Networks 7, Vol. 5, 513–529, 2001.

Xu, S. and Saadawi, T., Does the IEEE 802.11 MAC Protocol Work Well in Multihop Wireless Ad Hoc Networks? IEEE Communications Magazine, pp. 130–137, June 2001.

Xylomenos, G., Polyzos, G. C., Mahonen, P., and Saaranen, M., TCP Performance Issues over Wireless Links, IEEE Communications Magazine, Vol. 39, No. 4, pp. 53–58, April 2001.