COMPUTER DATA FORMATS

Successful programming requires a precise understanding of data formats. In this section, many common computer data formats are described as they are used with the Intel family of microprocessors. Commonly, data appear as ASCII, Unicode, BCD, signed and unsigned integers, and floating-point numbers (real numbers). Other forms are available, but are not presented here because they are not commonly found.

ASCII and Unicode Data

ASCII (American Standard Code for Information Interchange) data represent alphanumeric characters in the memory of a computer system (see Table 1–8). The standard ASCII code is a 7-bit code, with the eighth and most significant bit used to hold parity in some antiquated systems. If ASCII data are used with a printer, the most significant bit is a 0 for alphanumeric printing and a 1 for graphics printing. In the personal computer, an extended ASCII character set is selected by placing a 1 in the leftmost bit. Table 1–9 shows the extended ASCII character set, which uses codes 80H–FFH. The extended ASCII characters store some foreign letters and punctuation, Greek characters, mathematical characters, box-drawing characters, and other special characters. Note that extended characters can vary from one printer to another. The list provided is designed to be used with the IBM ProPrinter, which also matches the special character set found with most word processors.

The ASCII control characters, also listed in Table 1–8, perform control functions in a computer system, including clear screen, backspace, line feed, and so on. To enter a control code through the computer keyboard, hold down the Control key while typing a letter. The control code 01H is obtained by typing Control-A, 02H by Control-B, and so on. Note that, from the DOS prompt, the control codes appear on the screen as ^A for Control-A, ^B for Control-B, and so forth. Also note that the carriage return code (CR) is generated by the Enter key on most modern keyboards. The purpose of CR is to return the cursor or print head to the left margin. Another code that appears in many programs is the line feed code (LF), which moves the cursor down one line.
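The Control-key rule above can be sketched in standard C++. This is a minimal illustration, assuming plain 7-bit ASCII letters; the helper names `control_code` and `caret_notation` are hypothetical. Because uppercase "A" is 41H, keeping only the low five bits of a letter's code (the same as subtracting 40H) gives the control code, and adding 40H back gives the letter shown after the caret.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Hypothetical helper: the code produced by holding Control and typing a letter.
// Uppercase 'A' is 41H; keeping only the low five bits (same as subtracting 40H)
// gives 01H for Control-A, 02H for Control-B, and so on.
unsigned char control_code(char letter) {
    unsigned char upper = static_cast<unsigned char>(
        std::toupper(static_cast<unsigned char>(letter)));
    assert(upper >= 'A' && upper <= 'Z');  // letters only in this sketch
    return static_cast<unsigned char>(upper & 0x1F);
}

// Hypothetical helper: caret notation as displayed at the DOS prompt,
// e.g. 01H is shown as ^A. Valid for the codes 01H-1AH produced by letters.
std::string caret_notation(unsigned char code) {
    assert(code >= 0x01 && code <= 0x1A);
    return std::string{'^', static_cast<char>(code + 0x40)};
}
```

With this rule, Control-M yields 0DH (CR) and Control-J yields 0AH (LF).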

To use Table 1–8 or 1–9 to convert alphanumeric or control characters into ASCII codes, first locate the character to be converted. Next, find the first digit of its hexadecimal ASCII code, and then find the second digit. For example, the capital letter “A” is ASCII code 41H, and the lowercase letter “a” is ASCII code 61H.

Since Windows 95, many Windows-based applications use the Unicode system to store alphanumeric data. This system stores each character as 16-bit data. The codes 0000H–007FH are the same as standard ASCII code, and the codes 0080H–00FFH hold the Latin-1 Supplement (including accented Western European letters), which differs from the extended ASCII set of Table 1–9. The remaining codes, 0100H–FFFFH, store characters from many worldwide character sets. This allows software written for the Windows environment to be used in many countries around the world. For complete information on Unicode, visit http://www.unicode.org.
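The table lookup and the ASCII/Unicode overlap can be illustrated with a short C++ sketch. It is not taken from the text's examples; the helper names `ascii_hex_digits` and `to_utf16` are hypothetical, and `char16_t` is used here as a stand-in for a 16-bit Unicode code unit. The first hexadecimal digit is the high-order four bits of the code, the second is the low-order four bits, and a standard ASCII character keeps the same value in 16-bit form with 00H in the high-order byte.

```cpp
#include <cassert>
#include <cstdint>

// The two hexadecimal digits read from the ASCII table:
// the high-order digit (first) and the low-order digit (second).
struct HexDigits {
    unsigned first;
    unsigned second;
};

HexDigits ascii_hex_digits(char c) {
    unsigned code = static_cast<unsigned char>(c);
    assert(code <= 0x7F);  // standard 7-bit ASCII only
    return { code >> 4, code & 0x0F };
}

// A standard ASCII character has the same value as a 16-bit Unicode code,
// with 00H in the high-order byte.
char16_t to_utf16(char c) {
    assert(static_cast<unsigned char>(c) <= 0x7F);
    return static_cast<char16_t>(static_cast<unsigned char>(c));
}
```

For “A” the digits are 4 and 1 (41H); for “a” they are 6 and 1 (61H), so upper- and lowercase letters differ by exactly 20H.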

ASCII data are most often stored in memory by using a special directive to the assembler program called define byte(s), or DB. (The assembler is a program that is used to program a computer in its native binary machine language.) An alternative to DB is the BYTE directive. The DB and BYTE directives, and several examples of their usage with ASCII-coded character strings, are listed in Example 1–18. Notice how each character string is surrounded by apostrophes ('); never use the quotation mark ("). Also notice that the assembler lists the ASCII-coded value for each character to the left of the character string. To the far left is the hexadecimal memory location where the character string is first stored in the memory system. For example, the character string labeled WHAT is stored beginning at memory address 001D, and its first letter is stored as 57 (W), followed by 68 (h), and so forth. Example 1–19 shows the same three strings defined as String^ character strings for use with Visual C++ Express 2005 and 2008. Note that Visual C++ uses quotation marks to surround strings. If an earlier version of C++ is used, the string is defined with a CString for Microsoft Visual C++ instead of a String^. The ^ symbol indicates that the String is a handle to an object on the garbage-collected (managed) heap. The garbage collector frees the memory used by an object once the program can no longer reach it (for example, after it falls out of scope), which prevents memory leaks.
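To see the same byte values the assembler listing shows, the following standard C++ sketch (not C++/CLI, so it uses `std::string` rather than `String^`) prints the bytes a string occupies in consecutive memory locations. The function name `listing_bytes` is hypothetical.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Produce an assembler-style list of the bytes a string occupies,
// e.g. "57 68 61 74" for "What". Each character is stored in its own
// byte, at consecutive addresses, in the order it appears in the string.
std::string listing_bytes(const std::string& text) {
    std::string out;
    char buf[4];  // two hex digits plus the terminating null
    for (unsigned char ch : text) {
        std::snprintf(buf, sizeof buf, "%02X", static_cast<unsigned>(ch));
        if (!out.empty()) out += ' ';
        out += buf;
    }
    return out;
}
```

One difference from DB: a C/C++ string literal such as "What" also carries a terminating 00H byte, so it occupies five bytes, whereas DB stores only the characters written.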

BCD (Binary-Coded Decimal) Data

Binary-coded decimal (BCD) information is stored in either packed or unpacked forms. Packed BCD data are stored as two digits per byte, and unpacked BCD data are stored as one digit per byte. The range of a BCD digit extends from 0000₂ to 1001₂, or 0–9 decimal. Unpacked BCD data are returned from a keypad or keyboard. Packed BCD data are used for some of the instructions included for BCD addition and subtraction in the instruction set of the microprocessor.
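The relationship between the two forms can be sketched in C++: packing places the tens digit in the upper four bits and the units digit in the lower four bits of one byte, and unpacking splits the byte back into two one-digit bytes. The names `pack_bcd` and `unpack_bcd` are hypothetical, chosen for this illustration.

```cpp
#include <cassert>
#include <cstdint>

// Packed BCD: two decimal digits per byte, tens digit in the upper four bits.
std::uint8_t pack_bcd(unsigned tens, unsigned ones) {
    assert(tens <= 9 && ones <= 9);  // each BCD digit is 0000-1001 binary
    return static_cast<std::uint8_t>((tens << 4) | ones);
}

// Split one packed BCD byte into two unpacked digits (one per byte).
void unpack_bcd(std::uint8_t packed, std::uint8_t& tens, std::uint8_t& ones) {
    tens = static_cast<std::uint8_t>(packed >> 4);
    ones = static_cast<std::uint8_t>(packed & 0x0F);
    assert(tens <= 9 && ones <= 9);  // rejects bytes that are not valid BCD
}
```

Packing the digits 8 and 3 gives the byte 83H, which reads as the decimal digits when viewed in hexadecimal.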

Table 1–10 shows some decimal numbers converted to both the packed and unpacked BCD forms. Applications that require BCD data include point-of-sale terminals and almost any device that performs a minimal amount of simple arithmetic. If a system requires complex arithmetic, BCD data are seldom used because there is no simple and efficient method of performing complex BCD arithmetic.

Example 1–20 shows how to use the assembler to define both packed and unpacked BCD data. Example 1–21 shows how to do this in Visual C++ using char (byte) variables. In all cases, the convention of storing the least-significant data first is followed. This means that to store 83 in memory as unpacked BCD, the 3 is stored first, followed by the 8. Also note that with packed BCD data, the letter H (hexadecimal) follows the number to ensure that the assembler stores the BCD value rather than a decimal value. For example, packed 83 must be written as 83H; written as the decimal number 83, it would be stored as 53H. Notice how the numbers are stored in memory as unpacked, one digit per byte, or packed, as two digits per byte.
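The least-significant-first convention can be sketched in C++ as two conversions from an unsigned decimal value into a byte sequence, lowest address first. This is an illustration only, not the code of Example 1–21; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Unpacked BCD: one digit per byte, least-significant digit first,
// so 83 becomes {3, 8}.
std::vector<std::uint8_t> to_unpacked_bcd(unsigned value) {
    std::vector<std::uint8_t> digits;
    do {
        digits.push_back(static_cast<std::uint8_t>(value % 10));
        value /= 10;
    } while (value != 0);
    return digits;
}

// Packed BCD: two digits per byte, least-significant byte first,
// so 1234 becomes {34H, 12H}.
std::vector<std::uint8_t> to_packed_bcd(unsigned value) {
    std::vector<std::uint8_t> bytes;
    do {
        unsigned ones = value % 10;
        unsigned tens = (value / 10) % 10;
        bytes.push_back(static_cast<std::uint8_t>((tens << 4) | ones));
        value /= 100;
    } while (value != 0);
    return bytes;
}
```

Element 0 of each vector corresponds to the lowest memory address.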

Byte-Sized Data

Byte-sized data are stored as unsigned and signed integers. Figure 1–14 illustrates both the unsigned and signed forms of the byte-sized integer. The difference in these forms is the weight of the leftmost bit position. Its value is 128 for the unsigned integer and minus 128 for the signed integer. In the signed integer format, the leftmost bit represents the sign bit of the number, as well as a weight of minus 128. For example, 80H represents a value of 128 as an unsigned number; as a signed number, it represents a value of minus 128. Unsigned integers range in value from 00H to FFH (0–255). Signed integers range in value from -128 through 0 to +127.

Although negative signed numbers are represented in this way, they are stored in the two’s complement form. The method of evaluating a signed number by using the weights of each bit position is much easier than the act of two’s complementing a number to find its value. This is especially true in the world of calculators designed for programmers.
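The bit-weight method can be written out directly. In this C++ sketch (the name `byte_value` is hypothetical), bits 0 through 6 contribute their usual weights, and bit 7 contributes +128 for an unsigned byte or -128 for a signed byte.

```cpp
#include <cassert>
#include <cstdint>

// Evaluate a byte by summing its bit weights. The only difference between
// the two forms is bit 7: +128 when unsigned, -128 when signed.
int byte_value(std::uint8_t bits, bool is_signed) {
    int value = 0;
    for (int bit = 0; bit < 7; ++bit)
        if (bits & (1u << bit)) value += 1 << bit;
    if (bits & 0x80) value += is_signed ? -128 : 128;
    return value;
}
```

For example, FFH evaluates to 127 - 128 = -1 as a signed byte and to 127 + 128 = 255 as an unsigned byte.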

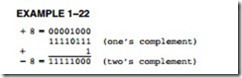

Whenever a number is two’s complemented, its sign changes from negative to positive or positive to negative. For example, the number 00001000 is a +8. Its negative value (-8) is found by two’s complementing the +8. To form a two’s complement, first one’s complement the number. To one’s complement a number, invert each bit of a number from zero to one or from one to zero. Once the one’s complement is formed, the two’s complement is found by adding a one to the one’s complement. Example 1–22 shows how numbers are two’s complemented using this technique.
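The invert-then-add-one procedure maps directly onto C++ operators. In this sketch (the name `twos_complement` is hypothetical), `~` forms the one's complement, and the result is truncated to 8 bits after adding one.

```cpp
#include <cassert>
#include <cstdint>

// Two's complement by inverting every bit (one's complement), then adding one.
// The result is truncated to 8 bits.
std::uint8_t twos_complement(std::uint8_t x) {
    std::uint8_t ones = static_cast<std::uint8_t>(~x);  // one's complement
    return static_cast<std::uint8_t>(ones + 1);          // add one
}
```

Applied to 00001000 (+8), this gives 11111000 (F8H, or -8). Two byte values keep their sign: 00H maps to itself, and so does 80H (-128), because +128 does not fit in a signed byte.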

Another, and probably simpler, technique for two’s complementing a number starts with the rightmost digit. Working from right to left, copy the number exactly as it appears up to and including the first 1, and then invert all bits to the left of that 1. Example 1–23 shows this technique with the same number as in Example 1–22.
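The right-to-left technique can also be sketched bit by bit in C++ (the name `twos_complement_scan` is hypothetical). It scans from bit 0 upward, copies bits through the first 1, and inverts every bit after that; for any byte it gives the same result as inverting and adding one.

```cpp
#include <cassert>
#include <cstdint>

// Right-to-left technique: copy bits from the right up to and including
// the first 1, then invert every bit to the left of that 1.
std::uint8_t twos_complement_scan(std::uint8_t x) {
    std::uint8_t result = 0;
    bool seen_one = false;
    for (int bit = 0; bit < 8; ++bit) {
        std::uint8_t b = (x >> bit) & 1u;
        if (seen_one) b ^= 1u;             // invert bits left of the first 1
        else if (b == 1) seen_one = true;  // keep the first 1 itself
        result |= static_cast<std::uint8_t>(b << bit);
    }
    return result;
}
```

For 00001000, the three rightmost 0s and the first 1 are copied unchanged, and the four leading 0s become 1s, giving 11111000.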

To store 8-bit data in memory using the assembler program, use the DB directive as in prior examples or char as in Visual C++ examples. Example 1–24 lists many forms of 8-bit numbers stored in memory using the assembler program. Notice in the example that a hexadecimal number is defined with the letter H following the number, and that a decimal number is written as is, without anything special. Example 1–25 shows the same byte data defined for use with a Visual C++ program. In C/C++ the hexadecimal value is preceded by a 0x to indicate a hexadecimal value.
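As a rough C++ counterpart to DB byte definitions, the sketch below defines a decimal byte, a hexadecimal byte (0x prefix, where the assembler would use an H suffix), and a negative signed byte. The constant values and names (`kDecimal`, `kHex`, `kNegative`, `stored_byte`) are illustrative assumptions, not the contents of Example 1–25. The helper copies a signed byte's raw bits to show the two's complement pattern actually stored.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Illustrative byte definitions comparable to DB.
const std::uint8_t kDecimal = 10;   // decimal, stored as 0AH
const std::uint8_t kHex = 0x2F;     // hexadecimal; assembler form is 2FH
const std::int8_t kNegative = -34;  // stored in two's complement form

// Return the raw bit pattern that a signed byte occupies in memory.
std::uint8_t stored_byte(std::int8_t value) {
    std::uint8_t raw;
    std::memcpy(&raw, &value, 1);
    return raw;
}
```

Here -34 is stored as DEH (256 - 34 = 222), and -1 is stored as FFH.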

Word-Sized Data

A word (16 bits) is formed with two bytes of data. The least significant byte is always stored in the lowest-numbered memory location, and the most significant byte is stored in the highest. This method of storing a number is called the little endian format. An alternate method, not used with the Intel family of microprocessors, is called the big endian format. In the big endian format, numbers are stored with the lowest location containing the most significant data. The big endian format is used with the Motorola family of microprocessors. Figure 1–15 (a) shows the weights of each bit position in a word of data, and Figure 1–15 (b) shows how the number 1234H appears when stored in the memory locations 3000H and 3001H. The only difference between a signed and an unsigned word is the weight of the leftmost bit position. In the unsigned form, the leftmost bit has a weight of 32,768; in the signed form, its weight is -32,768. As with byte-sized signed data, the signed word is in two’s complement form when representing a negative number. Also, notice that the low-order byte is stored in the lowest-numbered memory location (3000H) and the high-order byte is stored in the highest-numbered location (3001H).
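The two byte orders can be compared with a small C++ sketch. The functions (hypothetical names) return the bytes of a 16-bit word in memory order, lowest address first. They use shifts rather than inspecting the host's memory, so the result does not depend on the byte order of the machine running the code.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Bytes of a 16-bit word in memory order (lowest address first).
// Little endian (Intel): low-order byte first.
std::array<std::uint8_t, 2> little_endian_word(std::uint16_t w) {
    return { static_cast<std::uint8_t>(w & 0xFF),
             static_cast<std::uint8_t>(w >> 8) };
}

// Big endian: high-order byte first.
std::array<std::uint8_t, 2> big_endian_word(std::uint16_t w) {
    return { static_cast<std::uint8_t>(w >> 8),
             static_cast<std::uint8_t>(w & 0xFF) };
}
```

For 1234H, the little endian result places 34H at 3000H and 12H at 3001H; the big endian result reverses them.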

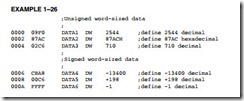

Example 1–26 shows several signed and unsigned word-sized data stored in memory using the assembler program. Example 1–27 shows how to store the same numbers in a Visual C++ program (assuming version 5.0 or newer), which uses the short data type to store a 16-bit integer. Notice that the define word(s) directive, or DW, causes the assembler to store words in the memory instead of bytes, as in prior examples. The WORD directive can also be used to define a word. Notice that the word data is displayed by the assembler in the same form as entered. For example, a 1000H is displayed by the assembler as 1000. This is for our convenience, because the number is actually stored in the memory as 00 10 in two consecutive memory bytes.

Doubleword-Sized Data

Doubleword-sized data requires four bytes of memory because it is a 32-bit number. Doubleword data appear as a product after a multiplication and also as a dividend before a division. In the 80386 through the Core2, memory and registers are also 32 bits in width. Figure 1–16 shows the form used to store doublewords in the memory and the binary weights of each bit position.

When a doubleword is stored in memory, its least significant byte is stored in the lowest-numbered memory location, and its most significant byte is stored in the highest-numbered memory location, using the little endian format. Recall that this is also true for word-sized data. For example, 12345678H stored in memory locations 00100H–00103H is stored with the 78H in memory location 00100H, the 56H in location 00101H, the 34H in location 00102H, and the 12H in location 00103H.
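The doubleword layout can be modeled with a plain byte array standing in for memory. In this sketch, the buffer and the names `store_dword` and `load_dword` are hypothetical. Storing places byte i of the value at address + i, and loading reassembles the bytes with their weights.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Write a doubleword into a byte buffer in little endian order:
// least-significant byte at the lowest address.
void store_dword(std::uint8_t* mem, std::size_t addr, std::uint32_t value) {
    for (int i = 0; i < 4; ++i)
        mem[addr + i] = static_cast<std::uint8_t>(value >> (8 * i));
}

// Read it back by reassembling the bytes with their weights.
std::uint32_t load_dword(const std::uint8_t* mem, std::size_t addr) {
    std::uint32_t value = 0;
    for (int i = 0; i < 4; ++i)
        value |= static_cast<std::uint32_t>(mem[addr + i]) << (8 * i);
    return value;
}
```

Storing 12345678H at offset 100H of such a buffer leaves 78H, 56H, 34H, and 12H at offsets 100H through 103H.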

To define doubleword-sized data, use the assembler directive define doubleword(s), or DD. (You can also use the DWORD directive in place of DD.) Example 1–28 shows both signed and unsigned numbers stored in memory using the DD directive. Example 1–29 shows how to define the same doublewords in Visual C++ using the int data type.

Integers may also be stored in memory in any width. The forms listed here are standard forms, but that doesn’t mean that a 256-byte-wide integer can’t be stored in the memory. The microprocessor is flexible enough to allow any size of data in assembly language. When nonstandard-width numbers are stored in memory, the DB directive is normally used to store them. For example, the 24-bit number 123456H is stored using a DB 56H, 34H, 12H directive. Note that this conforms to the little endian format. This could also be done in Visual C++ using an array of char.
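A nonstandard-width number can be held in C++ as a byte array in the same little endian order as the DB line above. In this sketch, the array name `kValue24` and the helper `read_le` are hypothetical. The helper reassembles any width from 1 to 8 bytes by starting with the most significant (highest-addressed) byte.

```cpp
#include <cassert>
#include <cstdint>

// A 24-bit value kept in three bytes, little endian, mirroring
// DB 56H, 34H, 12H for the number 123456H.
const unsigned char kValue24[3] = { 0x56, 0x34, 0x12 };

// Reassemble little endian bytes of any width (1-8 bytes) into a value.
std::uint64_t read_le(const unsigned char* bytes, int count) {
    assert(count >= 1 && count <= 8);  // must fit in 64 bits
    std::uint64_t value = 0;
    for (int i = count - 1; i >= 0; --i)
        value = (value << 8) | bytes[i];  // highest address is most significant
    return value;
}
```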

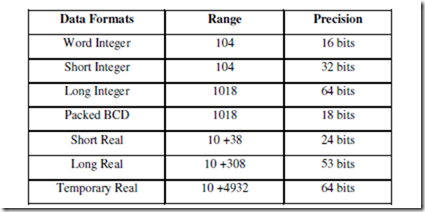

Real Numbers

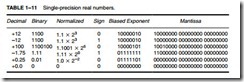

Because many high-level languages run on the Intel family of microprocessors, real numbers are often encountered. A real number, or a floating-point number, as it is often called, contains two parts: a mantissa, significand, or fraction; and an exponent. Figure 1–17 depicts both the 4- and 8-byte forms of real numbers as they are stored in any Intel system. Note that the 4-byte number is called single-precision and the 8-byte form is called double-precision. The form presented here is the same form specified by the IEEE standard, IEEE-754. The standard has been adopted as the standard form of real numbers with virtually all programming languages and many applications packages. The standard also applies to the data manipulated by the numeric coprocessor in the personal computer. Figure 1–17 (a) shows the single-precision form that contains a sign-bit, an 8-bit exponent, and a 24-bit fraction (mantissa). Because applications often require double-precision floating-point numbers [see Figure 1–17 (b)], note that the Pentium–Core2, with their 64-bit data bus, perform memory transfers at twice the speed of the 80386/80486 microprocessors.

Simple arithmetic suggests that it should take 33 bits (1 sign bit + 8 exponent bits + 24 mantissa bits) to store all three pieces of data. That is not the case: the 24-bit mantissa contains an implied (hidden) one-bit that allows the mantissa to represent 24 bits while being stored in only 23 bits. The hidden bit is the first bit of the normalized real number. When a number is normalized, it is adjusted so that its value is at least 1 but less than 2.

For example, if 12 is converted to binary (1100₂), it is normalized and the result is 1.1₂ × 2³. The whole number 1 is not stored in the 23-bit mantissa portion of the number; the 1 is the hidden one-bit. Table 1–11 shows the single-precision form of this number and others.
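Normalization can be sketched with `std::frexp` from `<cmath>`, which splits a value into a fraction in [0.5, 1) and a power of two. Doubling that fraction and lowering the exponent by one gives the 1 ≤ m < 2 form used here. The function name `normalize` is hypothetical, and the sketch handles positive values only.

```cpp
#include <cassert>
#include <cmath>

// Normalize a positive number to mantissa * 2^exponent with 1 <= mantissa < 2.
// std::frexp returns a fraction in [0.5, 1), so it is doubled and the
// exponent is reduced by one to match the form described in the text.
void normalize(double x, double& mantissa, int& exponent) {
    assert(x > 0.0);
    int e = 0;
    double f = std::frexp(x, &e);  // x == f * 2^e, with 0.5 <= f < 1
    mantissa = f * 2.0;
    exponent = e - 1;
}
```

For 12, this yields a mantissa of 1.5 (1.1 in binary) and an exponent of 3.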

The exponent is stored as a biased exponent. With the single-precision form of the real number, the bias is 127 (7FH), and with the double-precision form, it is 1023 (3FFH). The bias and exponent are added before being stored in the exponent portion of the floating-point number. In the previous example, the exponent is 3 (from 2³), represented as a biased exponent of 127 + 3, or 130 (82H), in the single-precision form, or as 1026 (402H) in the double-precision form.
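The three fields can be pulled out of a real number in C++ by copying its bytes into an integer with `std::memcpy` and masking. This sketch assumes `float` and `double` are the IEEE-754 32- and 64-bit formats, as on Intel systems; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

struct SingleFields {
    std::uint32_t sign;             // 1 bit
    std::uint32_t biased_exponent;  // 8 bits: exponent + 127
    std::uint32_t fraction;         // 23 stored bits (hidden 1 not stored)
};

// Split a single-precision value into its sign, biased exponent, and fraction.
SingleFields decode_single(float f) {
    static_assert(sizeof(float) == 4, "float must be 32 bits");
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);  // reinterpret the 4 bytes
    return { bits >> 31, (bits >> 23) & 0xFFu, bits & 0x7FFFFFu };
}

// The 11-bit biased exponent (exponent + 1023) of a double-precision value.
unsigned double_biased_exponent(double d) {
    static_assert(sizeof(double) == 8, "double must be 64 bits");
    std::uint64_t bits;
    std::memcpy(&bits, &d, sizeof bits);
    return static_cast<unsigned>((bits >> 52) & 0x7FFu);
}
```

For 12.0, the single-precision fields are sign 0, biased exponent 130 (82H), and fraction 400000H (the .1 after the hidden 1); the double-precision biased exponent is 1026 (402H).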

There are two exceptions to the rules for floating-point numbers. The number 0.0 is stored as all zeros. The number infinity is stored as all ones in the exponent and all zeros in the mantissa. The sign-bit indicates either a positive or a negative infinity.
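The two exceptions can be checked against a real `float` in C++, assuming IEEE-754 single precision. `std::numeric_limits<float>::infinity()` supplies infinity, and the helper names are hypothetical. The zero tested here is positive 0.0; a negative zero would have its sign bit set.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <limits>

// Raw 32-bit pattern of a single-precision value.
std::uint32_t single_bits(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    return bits;
}

// True when the exponent is all ones and the fraction is all zeros,
// which encodes positive or negative infinity (the sign bit picks which).
bool is_infinity_pattern(std::uint32_t bits) {
    return ((bits >> 23) & 0xFFu) == 0xFFu && (bits & 0x7FFFFFu) == 0;
}
```

Positive infinity is 7F800000H and negative infinity is FF800000H.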

As with other data types, the assembler can be used to define real numbers in both single- and double-precision forms. Because single-precision numbers are 32-bit numbers, use the DD directive to define them, and use the define quadword(s), or DQ, directive to define 64-bit double-precision real numbers. Optional directives for real numbers are REAL4, REAL8, and REAL10 for defining single-, double-, and extended-precision real numbers. Example 1–30 shows numbers defined in real number format for the assembler. If using the inline assembler in Visual C++, single-precision numbers are defined as float and double-precision numbers are defined as double, as shown in Example 1–31. There is no way to define the extended-precision floating-point number for use in Visual C++.

![clip_image001[4] clip_image001[4]](http://lh3.googleusercontent.com/-pTBie5YOirs/VcY2CbKhSvI/AAAAAAAANfc/C1OZBItOHas/clip_image001%25255B4%25255D_thumb.gif?imgmax=800)

![clip_image002[4] clip_image002[4]](http://lh3.googleusercontent.com/-8yVu08s7TfE/VcY2DoZrptI/AAAAAAAANfs/J4FSvX8VDLE/clip_image002%25255B4%25255D_thumb.gif?imgmax=800)

![clip_image003[4] clip_image003[4]](http://lh3.googleusercontent.com/-yDXp7dkBHxk/VcY2E9sE63I/AAAAAAAANf8/jxi_3jTOjwk/clip_image003%25255B4%25255D_thumb.gif?imgmax=800)

![clip_image008[3] clip_image008[3]](http://lh3.googleusercontent.com/--KjTQrOBjPY/VcY2KrTgSxI/AAAAAAAANhM/lgSbRgwlsnc/clip_image008%25255B3%25255D_thumb.gif?imgmax=800)

![clip_image010[3] clip_image010[3]](http://lh3.googleusercontent.com/-Su50LiOA3v0/VcY2MzYprGI/AAAAAAAANhs/2Tf1INDulqA/clip_image010%25255B3%25255D_thumb.gif?imgmax=800)

![clip_image012[3] clip_image012[3]](http://lh3.googleusercontent.com/-zGGqKILSGwc/VcY2PU0ivqI/AAAAAAAANh8/2JvZ1c2q-KM/clip_image012%25255B3%25255D_thumb.jpg?imgmax=800)

![clip_image014[3] clip_image014[3]](http://lh3.googleusercontent.com/-k1EItvtOp0k/VcY2SKFBxaI/AAAAAAAANiM/yVfpdNMgtIs/clip_image014%25255B3%25255D_thumb.jpg?imgmax=800)

![clip_image020[3] clip_image020[3]](http://lh3.googleusercontent.com/-YXLqSevvHYQ/VcY2Zy65jvI/AAAAAAAANj4/gKr04gm8VQw/clip_image020%25255B3%25255D_thumb.gif?imgmax=800)

![clip_image002[6] clip_image002[6]](http://lh3.googleusercontent.com/-SMiVFo-ZP3k/VcYzZyQN57I/AAAAAAAANc0/dbKC5NmqpWM/clip_image002%25255B6%25255D_thumb.gif?imgmax=800)

![clip_image003[4] clip_image003[4]](http://lh3.googleusercontent.com/-iiy6fOfBOok/VcYzaxy4eBI/AAAAAAAANdI/zN720_6tMyU/clip_image003%25255B4%25255D_thumb.gif?imgmax=800)

![clip_image005[8] clip_image005[8]](http://lh3.googleusercontent.com/-VAw_DtKED_0/VcYzccBRwbI/AAAAAAAANdU/WY6TZtAewPo/clip_image005%25255B8%25255D_thumb.gif?imgmax=800)

![clip_image009[4] clip_image009[4]](http://lh3.googleusercontent.com/-wiyKPHOd-a0/VcYzid-7feI/AAAAAAAANek/s_RQryLxbpQ/clip_image009%25255B4%25255D_thumb.gif?imgmax=800)

![clip_image010[4] clip_image010[4]](http://lh3.googleusercontent.com/-dnh_OI4EcDc/VcYzja-w08I/AAAAAAAANe0/kg0EcLFAICY/clip_image010%25255B4%25255D_thumb.gif?imgmax=800)