Overlapping Register Windows

One modern architectural feature that has not been as widely adopted as other features (such as pipelining) is overlapping register windows, which to date has only been adopted by the SPARC family. This feature is based upon studies that show typical programs spend much of their time dealing with procedure call-and-return overhead, which involves passing parameters on a stack located in main memory in traditional architectures. The SPARC architecture reduces much of this overhead by employing multiple register sets that overlap. These registers are used for passing parameters between procedures, instead of using a stack in main memory.

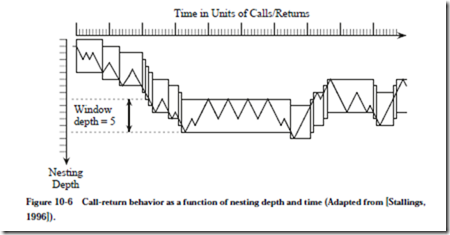

Procedure calls may be deeply nested in an ordinary program, but for a given window of time, the nesting depth fluctuates within a narrow band. Figure 10-6

illustrates this behavior. For a nesting depth window size of five, the window moves only 18 times for 100 procedure calls. Results produced by a group at UC Berkeley (Tamir and Sequin, 1983) show that a window size of eight will shift on less than 1% of the calls or returns.

The small window size for nested calls is important for improving performance. For each procedure call, a stack frame is normally constructed in which parameters, a return address, and local variables are placed. There is thus a great deal of stack manipulation that takes place for procedure calls, but the complexity of the manipulation is not all that great. That is, stack references are highly localized within a small area.

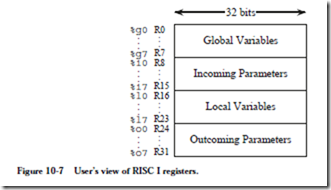

The RISC I architecture exploits this locality by keeping the active portion of the stack in registers. Figure 10-7 shows the user’s view of register usage for the RISC

I. The user sees 32 registers in which each register is 32 bits wide. Registers R0-R7 are used for global variables. Registers R8-R15 are used for incoming parameters. Registers R16-R23 are used for local variables, and registers R24-R31 are used for outgoing parameters. The eight registers within each group are enough to satisfy the bulk of call/return activity, as evidenced by the frequency distribution in Figure 10-6.

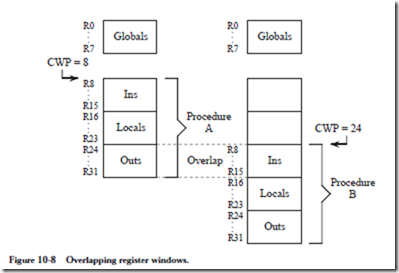

Although the user sees 32 registers, there may be several hundred registers that overlap. Figure 10-8 illustrates the concept of overlapping register windows. The global registers are detached from the others, and are continuously available as R0-R7. Registers R8-R31 make up the remaining 24 registers that the user sees, but this group of registers slides deeper into the register file (the entire set of registers) on each procedure call. Since the outgoing parameters for one procedure are the incoming parameters to another, these sets of registers can overlap. Registers R8-R31 are referred to as a window. A current window pointer (CWP) points to the current window, and increases or decreases for calls and returns, respectively.

In the statistically rare event when there are not enough registers for the level of nesting, then main memory is used. However, main memory is used for the lowest numbered window, so that the new current window still uses registers. The

highest register location then wraps around to the lowest, forming a circular buffer. As returns are made, registers that were written to memory are restored to the register file. Thus, execution always takes place with registers and never directly with main memory.

EXAMPLE: COMPILED CODE FOR OVERLAPPING REGISTER WINDOWS AND DELAYED BRANCHES

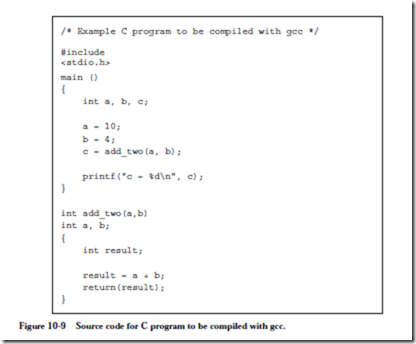

In this section, we analyze a C compiler-produced SPARC assembly program that exploits features of the RISC concept. We start with the C program shown in Figure 10-9, in which the main routine passes two integers to a subroutine, which returns the sum of the integers to the main routine. The code produced by a Solaris Unix C compiler using the command line:

An explanation of the more significant aspects of the assembled code is given in Figure 10-10, which includes a number of features found only in RISC code. There are a number of new instructions and pseudo-ops introduced in this code:

.seg/.section Unix executable programs have three segments for data, text (the instructions), and the stack. The.seg pseudo-op instructs the assembler to place the code that follows into one of these three segments. Some of the segments have different protections, which is why there is a data segment and also a data1 segment. The data1 segment contains constants, and should be protected from writing. The data segment is both readable and writable and is therefore not protected against reading or writing (but it is protected from being executed, as is data). Newer versions of Unix allow more text and data areas to be used for different read, write, and execute protections.

%hi Same as ARC pseudo-op .high22.

%lo Same as ARC pseudo-op .low10.

add Same as addcc except that the condition codes are unaffected.

save Advances current window pointer and increments stack pointer to create space for local variables.

mov Same as:

or %g0,register_or_immediate,destination_register. This differs from st because the destination is a register.

nop No-operation (the processor waits for one instruction cycle, while the branch finishes).

.asci i/.asciz Reserves space for an ASCII string.

set Sets a register to a value. This is a macro that expands to the sethi, %hi, and %lo constructs shown in #PROLOGUE# 1.

ret Return. Same as: jmpl %i7+8, %g0. Notice that it seems like the return should only go 4 bytes past the calling instruction, not 8 bytes as indicated above. This is because the instruction that follows the call is a nop, inserted by the compiler to protect the integrity of the pipeline.

-

restore Decrements current window pointer.

-

b Same as ba.

-

.file Identifies the source file.

-

.align Forces the code that follows onto a boundary evenly divisible by its argument.

-

.type Associates a label with its type.

-

.size Computes the size of a segment.

-

.ident Identifies the compiler version.

We can see the positions of the delay slots, marked with nop instructions. (The optimizing feature of the compiler has not been applied yet.) Despite the avail- ability of overlapping register windows in the SPARC architecture, the unoptimized code makes no use of this feature: parameters to be passed to a routine are copied to the stack, and the called routine then retrieves these parameters from the stack. Again, the optimizing feature of the compiler has not yet been invoked. (But read on).

Notice that the compiler does not seem to be consistent with its choice of registers for parameter passing. Prior to the call to add_two, the compiler uses %o0 and %o1 (%r0 and %r1) for parameters passed to add_two. Then, %r1 is used for the parameters passed to printf. Why did the compiler not start with %r0 again, or choose the next available register (%o2)? This is the register assignment problem, which has been the object of a great deal of study. We will not go into details here1, as this is more appropriate for a course in compiler design, but suffice it to say that any logically correct assignment of variables to registers will work, but that some assignments are better than others in terms of the number of registers used and the overall program execution time.

Why are the stack frames so large? We only need three words on the stack frame for local variables a, b, and c in main. We might also need a word to store the return address, although the compiler does not seem to generate code for that. There are no parameters passed to main by the operating system, and so the stack frame that main sees should only be four words (16 bytes) in size. Thus, the line at the beginning of routine main:

save %sp, -128, %sp

should only be:

save %sp, -16, %sp.

What is all of the extra space for? There are a number of runtime situations that may need stack space. For instance, if the nesting depth is greater than the number of windows, then the stack must be used for overflow. (See Figure D-2 in [SPARC, 1992])

If a scalar is passed from one routine to another, then everything is fine. But if a callee refers to the address of a passed scalar (or aggregate), then the scalar (or aggregate) must be copied to the stack and be referenced from there for the life- time of the pointer (or for the lifetime of the procedure, if the pointer lifetime is not known).

Why does the return statement ret cause a return to the code that is 8 bytes past the call, instead of 4 as we have been doing it? As mentioned above, this is because there is a nop that follows call (the so-called “delay-slot instruction”).

Notice that routine labels that appear in the source code are prepended with an underscore in the assembly code, so that main, add_two, and printf in C become _main, _add_two, and _printf in gcc generated SPARC code. This means that if we want to write a C program that is linked to a gcc generated SPARC program, that the C calls should be made to routines that begin with underscores. For example, if add_two is compiled into SPARC code, and we invoke it from a C main program in another file, then the C program should make a call to _add_two, and not add_two, even though the routine started out as add_two. Further, the C program needs to declare _add_two as external.

If the compilation for add_two is continued down to an executable file, then there is no need to treat the labels differently. The add_two routine will still be labeled _add_two, but routine main will be compiled into code that expects to see _add_two and so everything will work OK. This is not the case, however, if a gcc program makes calls to a Fortran library.

Fortran is a commonly used language in the scientific community, and there are a number of significant Fortran libraries that are used for linear algebra, modeling and simulation, and parallel scientific applications. As programmers of language XYZ (whatever language that may be), we sometimes find ourselves wanting to write XYZ programs that make calls to Fortran routines. This is easy to do once we understand what is happening.

There are two significant issues that need to be addressed:

(1) differences in routine labels;

(2) differences in subroutine linkage.

In Fortran, the source code labels are prepended with two underscores in the assembly code. A C program (if C is language XYZ) that makes a call to Fortran routine add_two would then make a call to _ _add_two, which also must be declared as external in the C source code (and declared as global in the Fortran program).

If all of the parameters that are passed to the Fortran routines are pointers, then everything will work OK. If there are any scalars passed, then there will be trouble because C (like Java) uses call-by-value for scalars whereas Fortran uses call-by-reference. We need to “trick” the C compiler into using call-by-reference by making it explicit. Wherever a Fortran routine expects a scalar in its argument list, we use a pointer to the scalar in the C code.

As a practical consideration, the gcc compiler will compile Fortran programs. It knows what to do by observing the extension of the source file, which should be .f for Fortran.

Now, let us take a look at how an optimizing compiler improves the code. Figure 10-11 shows the optimized code using the compiler’s -O flag. Notice there is not a single nop, ld, or st instruction. Wasted cycles devoted to nop instructions have been reclaimed, and memory references devoted to stack manipulation have been eliminated.