10.2 Pipelining the Data path

The flow of instructions through a pipeline follows the steps normally taken when an instruction is executed. In the discussion below we consider how three classes of instructions: arithmetic, branch, and load-store, are executed, and then we relate this to how the instructions are pipelined.

10.3.1 ARITHMETIC, BRANCH, AND LOAD-STORE INSTRUCTIONS

Consider the “normal” sequence of events when an arithmetic instruction is executed in a load-store machine:

1) Fetch the instruction from memory;

2) Decode the instruction (it is an arithmetic instruction, but the CPU has to find that out through a decode operation);

3) Fetch the operands from the register file;

4) Apply the operands to the ALU;

5) Write the result back to the register file.

There are similar patterns for other instruction classes. For branch instructions the sequence is:

1) Fetch the instruction from memory;

2) Decode the instruction (it is a branch instruction);

3) Fetch the components of the address from the instruction or register file;

4) Apply the components of the address to the ALU (address arithmetic);

5) Copy the resulting effective address into the PC, thus accomplishing the branch.

The sequence for load and store instructions is:

1) Fetch the instruction from memory;

2) Decode the instruction (it is a load or store instruction);

3) Fetch the components of the address from the instruction or register file;

4) Apply the components of the address to the ALU (address arithmetic);

5) Apply the resulting effective address to memory along with a read (load) or write (store) signal. If it is a write signal, the data item to be written must also be retrieved from the register file.

The three sequences above show a high degree of similarity in what is done at each stage: (1) fetch, (2) decode, (3) operand fetch, (4) ALU operation, (5) result writeback.

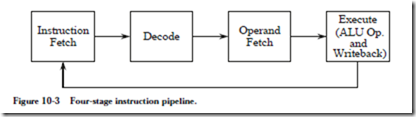

These five phases are similar to the four phases discussed in chapters 4 and 6 except that we have refined the fourth phase, “execute,” into two subphases: ALU operation and writeback, as illustrated in Figure 10-3. A result writeback is not

always needed, and one way to deal with this is to have two separate subphases (ALU and writeback) with a bypass path for situations when a writeback is not needed. For this discussion, we take a simpler approach, and force all instructions to go entirely through each phase, whether or not that is actually needed.

10.3.2 PIPELINING INSTRUCTIONS

In practice, each CPU designer approaches the design of the pipeline from a dif- ferent perspective, depending upon the particular design goals and instruction set. For example the original SPARC implementation had only four pipeline stages, while some floating point pipelines may have a dozen or more stages.

Each of the execution units performs a different operation in the fetch-execute cycle. After the Instruction Fetch unit finishes its task, the fetched instruction is handed off to the Decode unit. At this point, the Instruction Fetch unit can begin fetching the next instruction, which overlaps with the decoding of the previous instruction. When the Instruction Fetch and Decode units complete their tasks, they hand off the remaining tasks to the next units (Operand Fetch is the next unit for Decode). The flow of control continues until all units are filled.

10.3.3 KEEPING THE PIPELINE FILLED

Notice an important point: although it takes multiple steps to execute an instruction in this model, on average, one instruction can be executed per cycle as long as the pipeline stays filled. The pipeline does not stay filled, however, unless we are careful as to how instructions are ordered. We know from Figure 10-1 that approximately one in every four instructions is a branch. We cannot fetch the instruction that follows a branch until the branch completes execution. Thus, as soon as the pipeline fills, a branch is encountered, and then the pipeline has to be

flushed by filling it with no-operations (NOPs). These NOPs are sometimes referred to as pipeline bubbles. A similar situation arises with the LOAD and STORE instructions. They generally require an additional clock cycle in which to access memory, which has the effect of expanding the Execute phase from one cycle to two cycles at times. The “wait” cycles are filled with NOPs.

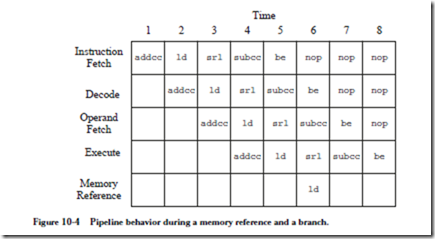

Figure 10-4 illustrates the pipeline behavior during a memory reference and also

during a branch for the ARC instruction set. The addcc instruction enters the pipeline on time step (cycle) 1. On cycle 2, the ld instruction, which references memory, enters the pipeline and addcc moves to the Decode stage. The pipeline continues filling with the srl and subcc instructions on cycles 3 and 4, respectively. On cycle 4, the addcc instruction is executed and leaves the pipeline. On cycle 5, the ld instruction reaches the Execute level, but does not finish execution because an additional cycle is needed for memory references. The ld instruction finishes execution during cycle 6.

Branch and Load Delay Slots

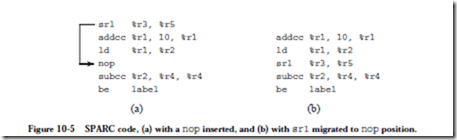

The ld and st instructions both require five cycles, but the remaining instructions require only four. Thus, an instruction that follows an ld or st should not use the register that is being loaded or stored. A safe approach is to insert a NOP after an ld or an st as shown in Figure 10-5a. The extra cycle (or cycles, depending on the architecture) for a load is known as a delayed load, since the data from the load is not immediately available on the next cycle. A delayed

branch is similar, as shown for the be instruction in cycles 5 through 8 of Figure 10-4. The position occupied by this NOP instruction is known as a load delay slot or branch delay slot respectively.

It is often possible for the compiler to find a nearby instruction to fill the delay slot. In Figure 10-5a, the srl instruction can be moved to the position of the nop since its register usage does not conflict with the surrounding code and reordering instructions this way does not impact the result. After replacing the nop line with the srl line, the code shown in Figure 10-5b is obtained. This is the code that is traced through the pipeline in Figure 10-4.

Speculative Execution of Instructions

An alternative approach to dealing with branch behavior in pipelines is to simply guess which way the branch will go, and then undo any damage if the wrong path is taken. Statistically, loops are executed more often than not, and so it is usually a good guess to assume that a branch that exits a loop will not be taken. Thus, a processor can start processing the next instruction in anticipation of the direction of the branch. If the branch goes the wrong way, then the execution phase for the next instruction, and any subsequent instructions that enter the pipeline, can be stopped so that the pipeline can be flushed. This approach works well for a number of architectures, particularly those with slow cycle speeds or deep pipelines. For RISCs, however, the overhead of determining when a branch goes the wrong way and then cleaning up any side effects caused by wrong instructions entering the pipeline is generally too great. The nop instruction is normally used in RISC pipelines when something useful cannot be used to replace it.

EXAMPLE: ANALYSIS OF PIPELINE EFFICIENCY

In this example, we analyze the efficiency of a pipeline.

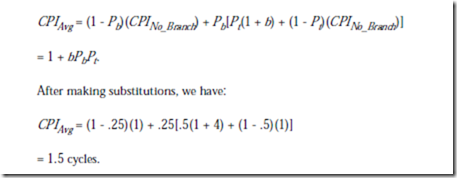

A processor has a five stage pipeline. If a branch is taken, then four cycles are needed to flush the pipeline. The branch penalty b is thus 4. The probability Pb that a particular instruction is a branch is .25. The probability Pt that the branch is taken is .5. We would like to compute the average number of cycles needed to execute an instruction, and the execution efficiency.

When the pipeline is filled and there are no branches, then the average number of cycles per instruction (CPINo_Branch) is 1. The average number of cycles per instruction when there are branches is then:

The execution efficiency is the ratio of the cycles per instruction when there are no branches to the cycles per instruction when there are branches. Thus we have:

The processor runs at 67% of its potential speed as a result of branches, but this is still much better than the five cycles per instruction that might be needed without pipelining.

There are techniques for improving the efficiency. As stated above, we know that loops are usually executed more than once, so we can guess that a branch out of a loop will not be taken and be right most of the time. We can also run simulations on the non-loop branches, and get a statistical sampling of which branches are likely to be taken, and then guess the branches accordingly. As explained above, this approach works best when the pipeline is deep or the clock rate is slow.