■ SUMMARY

In the RISC approach, the most frequently occurring instructions are optimized by eliminating or reducing the complexity of other instructions and addressing modes commonly found in CISC architectures. The performance of RISC architectures is further enhanced by pipelining and increasing the number of registers available to the CPU. Superscalar and VLIW architectures are examples of newer performance enhancements that extend, rather than replace, the RISC approach.

Parallel architectures can be classified as MISD, SIMD, or MIMD. The MISD approach is used for systolic array processing, and is the least general architecture of the three. In a SIMD architecture, all PEs carry out the same operations on different data sets, in an “army of ants” approach to parallel processing. The MIMD approach can be characterized as “herd of elephants,” because there are a small number of powerful processors, each with their own data and instruction streams.

The current trend is moving away from the fine grain parallelism that is exemplified by the MISD and SIMD approaches, and toward the MIMD approach. This trend is due to the high time cost of communicating among PEs, and the economy of using networks of workstations over tightly coupled parallel processors. The goal of the MIMD approach is to better balance the time spent in computation with the time spent in communication.

■ FURTHER READING

Three primary characteristics of RISC architectures enumerated in Section 10.2 originated at IBM’s T. J. Watson Research Center, as summarized in (Ralston and Reilly, 1993, pp. 1165 – 1167). (Hennessy and Patterson, 1995) is a classic reference on much of the work that led to the RISC concept, although the word “RISC” does not appear in the title of their textbook. (Stallings, 1991) is a thorough reference on RISCs. (Tamir and Sequin, 1983) show that a window size of eight will shift on less than 1% of the calls or returns. (Tanenbaum, 1999) pro- vides a readable introduction to the RISC concept. (Dulong, 1998) describes the IA-64. The PowerPC 601 architecture is described in (Motorola).

(Quinn, 1987) and (Hwang, 1993) overview the field of parallel processing in terms of architectures and algorithms. (Flynn, 1972) covers the Flynn taxonomy of architectures. (Yang and Gerasoulis, 1991) argue for maintaining a ratio of communication time to computation time of less than 1. (Hillis, 1985) and (Hillis, 1993) describe the architectures of the CM-1 and CM-5, respectively. (Hui, 1990) covers interconnection networks, and (Leighton, 1992) covers routing

algorithms for a few types of interconnection networks. (Wu and Feng, 1981) cover routing on a shuffle-exchange network.

Additional information can be found on programming the Sega Genesis at http://hiwaay.net/~jfrohwei/sega/genesis.html.

Dulong, C., “The IA-64 Architecture at Work,” IEEE Computer, vol. 31, pp. 24-32, (July 1998).

Flynn, M. J., “Some Computer Organizations and Their Effectiveness,” IEEE Transactions on Computers, vol. 30, no. 7, pp. 948-960, (1972).

Hennessy, J. L. and D. A. Patterson, Computer Architecture: A Quantitative Approach, 2/e, Morgan Kaufmann Publishers, San Mateo, (1995).

Hillis, W. D., The Connection Machine, The MIT Press, (1985).

Hillis, W. D. and L. W. Tucker, “The CM-5 Connection Machine: A Scalable Supercomputer,” Communications of the ACM, vol. 36, no. 11, pp. 31-40, (Nov., 1993).

Hui, J. Y., Switching and Traffic Theory for Integrated Broadband Networks, Kluwer Academic Publishers, (1990).

Hwang, K., Advanced Computer Architecture: Parallelism, Scalability, Programmability, McGraw-Hill, (1993).

Knuth, D. E., An Empirical Study of FORTRAN Programs, Software—Practice and Experience, 1, 105-133, 1971.

Leighton, F. T., Introduction to Parallel Algorithms and Architectures: Arrays, Trees, Hypercubes, Morgan Kaufmann, (1992).

Motorola Inc., PowerPC 601 RISC Microprocessor User’s Manual, Motorola Literature Distribution, P. O. Box 20912, Phoenix, AZ, 85036.

Quinn, M. J., Designing Efficient Algorithms for Parallel Computers, McGraw-Hill, (1987).

Ralston, A. and E. D. Reilly, eds., Encyclopedia of Computer Science, 3/e, van Nostrand Reinhold, (1993).

SPARC International, Inc., The SPARC Architecture Manual: Version 8, Prentice Hall, Englewood Cliffs, New Jersey, (1992).

Stone, H. S. and J. Cocke, “Computer Architecture in the 1990s,” IEEE Computer, vol. 24, no. 9, pp. 30-38, (Sept., 1991).

Stallings, W., Computer Organization and Architecture: Designing for Performance, 4/e, Prentice Hall, Upper Saddle River, (1996).

Stallings, W., Reduced Instruction Set Computers, 3/e, IEEE Computer Society Press, Washington, D.C., (1991).

Tamir, Y., and C. Sequin, “Strategies for Managing the Register File in RISC,”

IEEE Trans. Comp., (Nov. 1983).

Tanenbaum, A., Structured Computer Organization, 4/e, Prentice Hall, Upper Saddle River, New Jersey, (1999).

Yang, T. and A. Gerasoulis, “A Fast Static Scheduling Algorithm for DAGs on an Unbounded Number of Processors,” Proceedings of Supercomputing ’91, Albu- querque, New Mexico, (Nov. 1991).

Wu, C.-L. and T.-Y. Feng, “The Universality of the Shuffle-Exchange Network,”

IEEE Transactions on Computers, vol. C-30, no. 5, pp. 324-, (1981).

■ PROBLEMS

10.1 Increasing the number of cycles per instruction can sometimes improve the execution efficiency of a pipeline. If the time per cycle for the pipeline described in Section 10.3 is 20 ns, then CPIAvg is 1.5 ´ 20 ns = 30 ns. Compute the execution efficiency for the same pipeline in which the pipeline depth increases from 5 to 6 and the cycle time decreases from 20 ns to 10 ns.

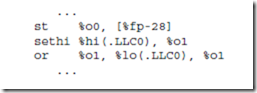

10.2 The SPARC code below is taken from the gcc generated code in Figure 10-10. Can %r0 be used in all three lines, instead of “wasting” %r1 in the second line?

10.3 Calculate the speedup that can be expected if a 200 MHz Pentium chip is replaced with a 300 MHz Pentium chip, if all other parameters remain unchanged.

10.4 What is the speedup that can be expected if the instruction set of a certain machine is changed so that the branch instruction takes 1 clock cycle instead of 3 clock cycles, if branch instructions account for 20% of all instructions executed by a certain program? Assume that other instructions average 3 clock cycles per instruction, and that nothing else is altered by the change.

10.5 Create a dependency graph for the following expression:

10.6 Given 100 processors for a computation with 5% of the code that cannot be parallelized, compute speedup and efficiency.

10.7 What is the diameter of a 16-space hypercube?

10.8 For the EXAMPLE at the end of Section 10.9.2, compute the total cross- point complexity over all three stages.