Business and Markets

In this chapter…

Worldwide Production of Semiconductors

Worldwide Consumption of Semiconductors

Military Electronics

The Business of Making Semiconductors

Processed silicon is more valuable than gold, ounce for ounce.

The gross national product of the United States was $9.2 trillion in 2000; semiconductors accounted for $204 billion, or about 2.2 percent of that total. Semiconductor manufacturing is a bigger part of the U.S. economy than mining, communications, utilities, or agriculture, forestry, and fishing. The U.S. Bureau of Labor Statistics says 284,000 Americans are directly employed in the semiconductor industry; 52,000 of those are semiconductor processors, or people in the so-called “bunny suits.”

The top 10 U.S. airlines put together made only half as much money as semiconductor makers. Intel and Texas Instruments sold more than Coca-Cola and Pepsi. Every one of the 15 corporations receiving the most patents in 2000 was in the semiconductor or computer business. San Jose, California—the capital of Silicon Valley—led the nation in highest average annual salary in 2000, outdistancing the second-ranked city (San Francisco) by a hefty 28 percent margin. Most important, the average Nintendo game has more computer power than NASA had for its moon landings.

Tech Talk

In 2001, there were about 60 million transistors built for every man, woman, and child on Earth. By 2010, the number should be close to 1 billion transistors per person.

Worldwide Production of Semiconductors

Excepting the annus horribilis of 2001, the worldwide market for semiconductors recently passed $200 billion per year. That’s about the same as the national output of Saudi Arabia or Switzerland. Figure 5.1 shows month-by-month sales of semiconductors for 312 consecutive months (26 years), from the beginning of 1976 through the end of 2001. Note that these are monthly, not yearly, figures, so sales have been in the range of $10 billion to $15 billion per month—or $400 million per day—for several years.

Figure 5.2 shows the same data graphed yearly, so the vertical scale is 10 times higher. Although some years have been volatile, the overall growth in semiconductors is evident, and has been averaging about 15 percent compounded growth per year.
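To get a feel for what 15 percent compounded growth means, here is a minimal sketch of the arithmetic. It assumes a perfectly steady 15 percent rate every year, which the real market never delivers, and the function names are illustrative only.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

def growth_multiple(annual_growth: float, years: int) -> float:
    """How many times larger a quantity is after `years` of compounding."""
    return (1 + annual_growth) ** years

RATE = 0.15  # the "about 15 percent" average quoted in the text

print(f"Doubling time: {doubling_time_years(RATE):.1f} years")
print(f"Multiple after 25 years: {growth_multiple(RATE, 25):.0f}x")
```

At that idealized rate the market doubles roughly every five years, which is why even a few bad years barely dent the long-term curve.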

Sales by Revenue

The year 2001 saw the worst downturn in the comparatively brief history of the industry, with total revenue falling by an astounding 32 percent from the previous year’s level of over $204 billion. Memory chips led the decline, with sales falling by half, but all sectors suffered.

In good years and in bad, the proportion of revenue contributed by each type of component remains about the same. Microprocessors, microcontrollers, digital signal processors (DSPs), and peripheral chips account for about one-third of the total revenue (but not of the total unit volume). The next largest contributor to vendors’ coffers is memory. DRAMs, SRAMs, and ROMs of all types account for 20 percent to 25 percent of the revenue share. Analog components and miscellaneous digital-logic devices each contribute 15 percent to 17 percent to the total. Discrete components, such as resistors and capacitors, and optoelectronic components, such as LEDs and optical sensors, both make single-digit contributions. These segments are summarized in Figure 5.3. Bear in mind that, barring the first few years of the 21st century, every percentage point represents about $2 billion in revenue. That’s not small potatoes.

Sales by Unit

Using data from 2001 as our yardstick, the huge majority of unit sales are in discrete components. Like the insects of the semiconductor realm, these tiny devices are almost invisible, even to industry insiders. At an astounding 200 billion units per year, it’s clear that diodes, transistors, rectifiers, and the like are ubiquitous. (See Figure 5.4.)

Next up, but almost an order of magnitude lower in unit sales, are the analog components. Analog amplifiers, D/A and A/D converters, voltage regulators, and numerous other nondigital components make up this category.

Optoelectronics are not far behind their analog cousins, including LEDs, laser diodes, CCD image sensors, and other things that light up. The majority of optoelectronic components are used in consumer electronics, making up the LED displays of alarm clocks, the laser diodes of CD players, and the “film” of digital cameras.

Miscellaneous digital logic components take fourth place. This category includes low-end logic chips such as AND gates and OR gates, but also much higher value devices such as programmable logic (CPLDs and FPGAs) and custom ASIC chips. These latter chips tend to be expensive so they give the logic segment a bigger slice of the revenue pie than they do of the unit-volume pie.

It’s interesting that the number of microprocessors and microcontrollers sold is not much lower than the number of memory chips. Because every microprocessor typically requires several memory chips as “support” components, you’d expect this ratio to be more lopsided. Yet the number of chips in the CPU and DSP category is only about 20 percent smaller than the number of memory chips. In part, this is because the microprocessor category also includes some peripheral controllers, which aren’t really microprocessors and don’t require external memory chips. Many low-end microprocessors and microcontrollers have some memory of their own built in, so they often don’t need extra memory chips, either. The high volume of these one-chip microcontrollers balances out the relatively few high-end microprocessors that need lots of extra memory.

Taking another big jump down the volume chart, we see the sensors. This category includes silicon acceleration sensors for automobile airbags, temperature sensors used in cars and industrial applications, magnetic sensors, and other specialized chips.

Bringing up the rear are the so-called bipolar components. Bipolar components aren’t really a separate category from sensors, microprocessors, and so forth, but they are manufactured using a different process than all the other devices we’ve covered. Market research usually classifies them separately for that reason. As you can see, there are very few bipolar components made or sold, and their numbers are dwindling slowly.

Average Selling Prices

The average selling price (ASP) of a class of components can be both useful and misleading. It is useful because it gives us an idea of what the high-value components are and where the most value is being added. It is misleading because the statistics group many different types of components together, and not all chips within each category have similar selling prices. The mix of components can significantly skew the perception of the value of an entire segment.

Having said all that, the discrete components clearly have the lowest ASP, at about $0.06 per device. Some components, such as microwave or radio frequency (RF) transistors, raise this average while low-value components, such as diodes and rectifiers, lower it. Still, when discrete components account for nearly half of the unit volume but only about 8 percent of the revenue, you know you’re dealing with inexpensive parts.
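The figures quoted so far can be cross-checked with a few lines of arithmetic. This is a rough sketch, not an official calculation: it takes the 200 billion discrete units and $0.06 ASP from this chapter, and it assumes 2001 total revenue was exactly 32 percent below $204 billion (the text says "over $204 billion," so the base is approximate).

```python
# Inputs quoted in this chapter (all approximate).
DISCRETE_UNITS = 200e9      # discrete components sold per year
DISCRETE_ASP = 0.06         # dollars per device
REVENUE_2000 = 204e9        # dollars, worldwide
DECLINE_2001 = 0.32         # fractional drop from 2000 to 2001

def segment_revenue(units: float, asp: float) -> float:
    """Revenue is simply unit volume times average selling price."""
    return units * asp

discrete_revenue = segment_revenue(DISCRETE_UNITS, DISCRETE_ASP)
total_2001 = REVENUE_2000 * (1 - DECLINE_2001)
discrete_share = discrete_revenue / total_2001

print(f"Discrete revenue: ${discrete_revenue / 1e9:.1f} billion")
print(f"Estimated 2001 total: ${total_2001 / 1e9:.1f} billion")
print(f"Discrete share of revenue: {discrete_share:.1%}")
```

Under those assumptions the discrete segment works out to a high single-digit share of revenue, in line with the "about 8 percent" figure.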

Optoelectronics are the only other major category of components with ASPs under half a dollar, at $0.37. Bipolar components and sensors both hover at about $0.50 each. Then there’s a modest $0.75 for analog components, as Figure 5.5 shows.

Large-scale digital chips clearly command a price premium. Part of this is perception and part is actual component cost. Some large-scale digital chips, such as FPGAs and ASICs, have huge development costs that must be amortized across comparatively few components. Other logic devices in this same category, such as individual logic gates, are commodities, sold almost by the pound. Overall, this category commands an ASP of $1.50.

Although memory chips are sometimes characterized as the semiconductor commodity, their prices suggest otherwise. The ASP for all memory chips in 2001 was $3.25, a fair number, but one that is very volatile. Because so many memory chips are interchangeable and demand is so cyclical, price competition is ferocious. Memory production might be unconscionably profitable one year and diabolically costly the next. Each downturn tends to shake out one or two memory vendors, slowly reducing their ranks.

At the top of the pricing chain are, of course, the microprocessors. Intel didn’t get to its exalted position by making commodity components. Intel’s well-known PC processors account for an increasingly small proportion of total microprocessor unit sales, but its success nonetheless pumps up the average for the whole category. The only thing keeping the category’s $6.12 ASP from being skewed even further by this anomaly is the enormous unit volume of the other components.

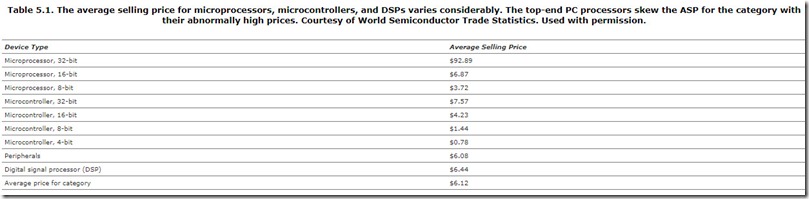

Average Microprocessor Prices

The ASP for microprocessors and related components is so lopsided that it warrants further examination. The data in Table 5.1 are once again taken from the World Semiconductor Trade Statistics (WSTS) organization for the calendar year 2001. Although overall semiconductor sales were badly depressed in that year, the ratio of ASPs and the relationship among components is the same in any year.

In Table 5.1, the top line (32-bit microprocessors) represents only a few vendors’ components. Specifically, it is overwhelmingly Intel’s latest generation Pentium processors used in PCs. This segment also includes AMD’s PC-compatible processors and the PowerPC processors made by Motorola and IBM, primarily for Apple’s Macintosh computers. Macintosh commands about a 4 percent market share of desktop computers compared to 95 percent for the “Wintel” PC, so the unit volume (and hence, the ASP) is comparatively unaffected by Macs.

Other desktop computers, such as engineering workstations from Sun Microsystems, Silicon Graphics (SGI), Hewlett-Packard, and others merely represent rounding errors in this segment. Their unit volumes are “in the noise margin,” as electrical engineers might say. As proud as these computer companies are of their high-end microprocessor technology, those processors have next to no effect on the economy of semiconductors.

With an ASP fully 13 times higher than the average for the rest of the category—which is itself the highest in the semiconductor industry—it’s no wonder that Intel attracts so many imitators. Intel’s processors are difficult to clone because of their aging, baroque design. Indeed, it’s very likely Intel itself would have abandoned the product line years ago had not IBM serendipitously chosen it for its first IBM PC back in 1981. Intel’s Pentium family has succeeded completely in spite of its technology, not because of it.

Geographic Breakdown of Production

For geographic and political purposes, semiconductor manufacturing is generally divided into four major regions: North America, Europe, Japan, and Asia and the Pacific region, as shown in Figure 5.6. Roughly speaking, production is evenly divided among these four areas, with North America (mostly the United States) maintaining a slight lead. Throughout the late 1980s and 1990s and into the following decade, the United States produced a little more than 30 percent of the world’s semiconductors (measured by revenue), with the other three regions each accounting for about 20 percent to 24 percent of the total. During those two decades, Japan’s share of production eroded gradually, losing ground mostly to vendors in Taiwan, Korea, and Singapore.

Because these statistics measure revenue and not units, they’re skewed toward higher priced parts. Thus, the United States is somewhat overrepresented because of its preponderance of microprocessor companies. Texas Instruments, Motorola, Intel, AMD, and the dozens of other microprocessor, DSP, and microcontroller companies based in the United States contribute revenue out of proportion to their impact in simple unit volume.

The United States consumes most of its own chips, swallowing three-quarters of its domestic production. Table 5.2 shows that Europe and the Far East show somewhat less provincialism, but Japan keeps a generous 82 percent of its chips within its borders.

The European and Far Eastern regions both ship about half of their semiconductors to the United States. The exception again is Japan, which gets only about 20 percent of its revenue from North American customers; the rest stays in the country.

If we examine the data from the demand side, shown in Figure 5.7, North America buys about half of the world’s production of semiconductors. Japanese consumption dropped in the late 1990s because of the country’s economic recession, whereas other Far Eastern and European countries increased consumption slightly. The rise of the Asia and Pacific region vendors’ percentage of consumption was not merely a statistical side effect of the Japanese reduction. These countries really did purchase more chips on an absolute revenue basis, not simply in relation to Japan’s shrinking share.

Worldwide Consumption of Semiconductors

Where do all these chips, laser diodes, and sensors go? They end up in quite a few places, as you might imagine, and some that you probably can’t. Semiconductors are used in many different kinds of systems, from the obvious ones like computers and televisions, to the obscure ones like dog collars, telephone wiring, and greeting cards. Here we’ll take a look at some of the major categories, as shown in Figure 5.8.

Computers and PCs

First and foremost among consumers of semiconductors are computers. Computers are almost all semiconductors, with a little metal or plastic wrapped around the outside. A 30-pound bag of electronics would not be an inappropriate description of the average PC. Because we’re measuring consumption by revenue, not units, PCs are even more heavily represented in the total because of their expensive main microprocessor. PCs also have a lot of memory chips, the second-most expensive class of semiconductors. All this makes PC sales a good proxy for semiconductor sales, at least in that segment.

Tech Talk

Processor Pricing Battles

For years, AMD and Intel have battled over the PC-processor business. Intel has always commanded the lion’s share of the market, yet AMD’s chips are sometimes considered technically superior and are almost always cheaper. How can AMD build its chips so inexpensively, and why don’t customers flock to the less expensive processors?

To answer the first question, it’s important to understand that price and cost are not the same thing. They’re only loosely related. Both Intel and AMD sell their PC processors for far more than they cost to make. Like perfume and luxury cars, the cost of the materials and labor is almost irrelevant. The price is set by market conditions, not by cost overhead.

The second question hinges on the small part the processor plays in the total PC price structure. Mainstream PCs generally sell for around $800 and laptop computers can easily be priced at double that amount. The cost of the microprocessor is only a small part of the cost of building a whole PC. A suicidal competitor could give its chips away for free, but the complete PC would only be about $35 less expensive, not enough to sway most customers.

Enter brand-name marketing. It’s fair to say that most people buying a PC don’t have the faintest idea of how it works or what all the specifications mean. This is the perfect environment for marketing tactics to flower. Years of “Intel Inside” ad campaigns never mentioned anything about the chip’s technical features. They were pure brand-awareness ploys, imprinting a particular brand name on a largely naive public. Microprocessors are now sold like perfume.

It also explains why PCs are so price competitive. Virtually no PC makers also make chips. (IBM is one of the exceptions.) That means PC makers have to buy their chips from the same semiconductor vendors that are also supplying their competitors. Volume discounts are about the only concession PC makers can wring out of chip makers. With little value to add other than the color of the plastic box, PC makers fight for every penny, which depends on keeping their volumes up. The advantage to consumers is that prices keep going down as chips get cheaper and PC makers struggle to remain competitive.

Communications and Networking

After PCs, communications equipment is the biggest consumer of electronics. For our purposes, communications means telephones and telephone equipment, computer networking, cellular phones and their infrastructure, and anything to do with satellites, television, and radio transmissions (although not the TVs and radios themselves). This segment has been growing rapidly for a number of years. The actual percentage you hear depends on whom you ask and when you ask them. It dips and rises with market conditions, of course, as telephone companies and network companies first invest, then retrench, depending on regulatory and market conditions.

Networking equipment consumes high-end microprocessors and DSP chips, lots and lots of memory, and special-purpose communications chips developed especially for one or two customers. Network and telephone companies also buy lots of laser diodes and optical sensors for their fiber-optic networks. Cellular telephone makers consume vast quantities of DSP and microcontroller chips—there’s typically one of each in every cell phone—as well as mountains of tiny RF components such as resistors, capacitors, and inductors.

Consumer Electronics

Consumer electronics gives communications systems a good run for the money, consuming about one-fifth of all the world’s semiconductor value. Consumer items can be televisions, DVD players, electronic toys, and also “white goods” such as refrigerators, washers, and dryers, all of which now include microcontrollers to manage power consumption and add exotic features.

Tech Talk

Running Rings Around Sega Saturn

In the late 1990s one of the most popular home video game consoles was Sega’s Saturn. The Saturn was an extraordinary system in many ways. The system was so advanced and high-end that, ironically, it led to Saturn’s collapse and Sega’s eventual withdrawal from the hardware market.

Saturn had no fewer than four 32-bit microprocessors, three from Hitachi and one from Motorola. These were partnered with six custom-designed ASIC chips and several megabytes of memory. The entire system was considerably more complex than the average PC of its day. It was so complex, in fact, that most game programmers couldn’t exploit its features well. Under pressure to meet deadlines, most game programmers took shortcuts and used only one or two of Saturn’s four processors. Although many Saturn games were good, few flexed its considerable hardware muscle. After it cratered, Saturn left a vacancy for Microsoft’s Xbox and Sony’s PlayStation 2.

Home video games are a big consumer of electronics in the home. Nintendo, Sony, and Microsoft (and Sega, Atari, and Commodore before them) have all created very high-end computers that sell for very little money. In fact, these companies sell their game consoles at a loss. A new PlayStation 2, for example, might cost Sony $350 or more to manufacture, yet sells for $200 to $300 when new. Sony makes up the money on game (software) sales. Unlike PC software, PlayStation software must be officially licensed and “approved” by Sony, and royalties apply. (The same is true for other game consoles.) In this razor-and-blade business model, the game console is merely an enticement for consumers to buy games. Each game brings in a royalty of a few dollars to the maker of the console, in this case, Sony. Over the life of the console, Sony will make more than enough money from software royalties to offset the cost of effectively taping a $100 bill to every PlayStation.

The problems with this business model are obvious, yet the concept itself is a very old one. If game players don’t buy enough games, the game maker loses money. The break-even point for most video game systems comes after consumers buy three to five titles. That makes it a safe bet, as statistics show that most video game owners buy more than a dozen games over the useful life of the system.
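The break-even arithmetic can be sketched in a few lines. The $100 loss per console comes from the PlayStation example above; the per-title contribution is not given in the text, so the values below are purely hypothetical and stand for everything the console maker earns on each game sold.

```python
import math

def break_even_titles(loss_per_console: float, contribution_per_title: float) -> int:
    """Number of game sales needed to recover the loss on one console."""
    return math.ceil(loss_per_console / contribution_per_title)

def implied_contribution(loss_per_console: float, titles: int) -> float:
    """Per-title contribution implied by a given break-even point."""
    return loss_per_console / titles

LOSS = 100.0  # dollars lost per console, per the example in the text

# Hypothetical per-title contributions (assumptions, not figures from the text).
for contribution in (20.0, 25.0):
    titles = break_even_titles(LOSS, contribution)
    print(f"${contribution:.0f} per title -> break even after {titles} titles")

# Working backward from the stated three-to-five-title break-even point.
for titles in (3, 5):
    print(f"{titles} titles -> about ${implied_contribution(LOSS, titles):.0f} per title")
```

Read this way, a break-even point of three to five titles on a $100 subsidy implies that each title contributes somewhere around $20 to $33 to the console maker.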

The second problem is that of deferred revenue versus instant gratification. The game maker must spend the money up front to manufacture and market millions of game consoles, generating a huge financial loss. Only after several months have passed will software royalties begin to make up these losses. Companies must have deep pockets, or very patient investors, to enter the video game market.

Finally, the entire scheme hinges on licensed software. There must be no “shareware” game titles, no pirated or copied games, and no independent or unlicensed game developers. In short, it needs to be the exact opposite of the PC software industry. To keep unlicensed software off their systems, game consoles include obscure and undocumented hardware features that independent programmers are unlikely to figure out. Officially approved and licensed programmers, however, are taught the secrets of the system in return for a licensing fee and a promise to pay royalties on every game they sell. In some cases (e.g., Sony’s original PlayStation), the game CDs themselves are mastered and duplicated by the game manufacturer, which brings in additional revenue and helps control inventory. As a last resort, video game manufacturers can exert legal pressure on unlicensed programmers producing “rogue” software that doesn’t generate royalties.

Industrial Electronics

The industrial uses of semiconductors are many and varied. Chips show up in robots, vision-inspection systems, alarms and security systems, and power generators, to name but a few. Large, expensive, high-powered semiconductors are used in dams, nuclear plants, and oil plants to regulate and control the electricity these plants generate.

Robots are full of electronics, of course. Heavy industrial robots have a half-dozen motors to move their joints, and each motor is usually controlled by its own miniature computer. Then there’s one main computer (the robot’s “head”) that controls all of these. Heavy robots are amazing for their ability to pick up and move heavy loads, then set them down accurately to within fractions of an inch. That kind of accuracy calls for some exotic mathematics, called kinematics, to predict how and when the robot arm needs to speed up and slow down. This is all handled by low-cost microprocessor chips, along with dozens of memory chips, communications chips (for talking to other robots on the assembly line), and high-voltage chips to power the whole thing.

Robots with vision systems combine CCD image sensors with more miniature computers to analyze what they see. Some robots are nothing but vision systems, with no moving arms at all. Either way, these electronic eyeballs can look at parts moving by on a conveyor belt and instantly recognize any flawed or damaged ones. Other robots can then throw the bad pieces into the trash. Robots can also sort and straighten scattered parts so they’re all turned the same way in nice, neat rows, making the job easier for the next robot down the assembly line. Robots with vision can assemble anything from vacuum cleaners to chocolates. A popular brand of sandwich cookie is made by robots that deliberately assemble the two halves slightly off-center, to make the cookies look handmade.

Automotive Electronics

The semiconductor content of automobiles has been growing steadily for years and shows little sign of abating. The average new automobile now carries about $200 worth of electronics, including almost a dozen microprocessors or microcontrollers. Large, late-model luxury cars can have well over 60 microprocessors. Some electronics are added for safety (collision-avoidance detectors, airbags, night vision), some for comfort or entertainment (in-dash CD players, electrically adjustable seats, air conditioning), and some to just run the car, replacing older mechanical designs (electronic ignition, antilock brakes, or automatic transmission). That doesn’t even include the bits that actually look like computers, such as satellite-guided navigation systems and rear-seat movie players.

The electronic systems in cars are starting to communicate and interact in unusual ways. For example, in some cars the electronics controlling the position of the side-view mirrors communicates with the electronics in the automatic transmission. Why? So the mirrors will automatically tilt down and inward whenever you put the car into reverse, the better for you to see the rear of your car while backing up.

Other cars connect the in-dash radio or CD player to the antilock brakes. This seemingly bizarre combination allows the radio to adjust its volume automatically to compensate for road speed. (The antilock brake system has the best gauge of current road speed.) Cars fitted with satellite navigation systems and cellular telephones often connect these two systems together with the airbag controller. The purpose is to detect whether the car is involved in an accident serious enough to deploy the airbags. If so, the car automatically phones for emergency services and, using the satellite navigation system, transmits the exact location of the accident. Often this system is tied to a fourth system, unlocking the car doors automatically.

Military Electronics

Military spending on semiconductors is relatively small, given the popular view about overspending on development and procurement. On the other hand, military procurement departments tend to be conservative in their use of new technology, often waiting several years before replacing mechanical devices with electronic ones or adopting new components over older ones. Ostensibly, this avoids teething problems with raw, untried technologies. In fact, it’s sometimes counterproductive because older parts might be nearly obsolete or have undesirable features that are improved in newer chips. Since the 1980s, the U.S. military has made a concerted effort to avoid custom, one-off, and expensive “military specials” and stick to commercial, off-the-shelf (COTS) components wherever possible.

What semiconductors the military does buy can be excruciatingly expensive. Depending on the intended application, chips might have to undergo rigorous testing to prove their immunity to extremely high and low temperatures, dampness, harsh vibration, radio interference, and even nuclear radiation. So-called “rad hard” chips must be able to operate in space (for satellites) or in battlefield conditions (for weapons). Military-grade chips must be housed in special ceramic packages rather than the traditional, low-cost plastic. Semiconductor vendors supplying the military must often guarantee that the same exact parts, with no updates, modifications, or changes whatsoever, will remain in production for many years. In this fast-moving industry, that requirement alone drives up costs considerably.

The Business of Making Semiconductors

Semiconductor manufacturing is a multibillion-dollar business with hundreds of suppliers, large and small, playing their part. From raw silicon in one end to finished product out the other, every chip passes through a dozen different corporate hands. Nothing is small scale and even the smallest niches in the supply chain are multimillion-dollar markets employing thousands of people.

Some segments of the industry are labor intensive and have been gradually moving from country to country as taxes, wage rates, and educational levels change and shift. Others are capital intensive and tend to stay centered in industrialized countries. Copyrights, patents, and intellectual property laws also affect what business is best carried out where.

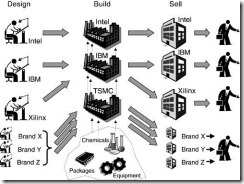

Semiconductor Food Chain

Like any big industry, the business of designing, making, and selling semiconductors has several steps and a lot of middlemen. The big all-under-one-roof manufacturers like Intel are fairly rare. Most of the world’s big chip makers use outside contractors for some of their business, whereas some small (and not-so-small) chip companies don’t manufacture anything at all.

Figure 5.9 provides a simplified illustration of how the entire semiconductor “food chain” works for a number of representative companies. The diagram flows from left to right and includes some of the interrelated players. It doesn’t include the chip-design phase, which was covered in Chapter 3, “How Chips Are Designed.” At this point, we’re assuming that the chip design is finished and ready to go.

Integrated Device Manufacturer

The simplest example is also the best known. Intel is an example of an integrated device manufacturer (IDM). Nearly everything about Intel’s chips is done in-house. Its processors are designed by Intel engineering employees, they’re manufactured in Intel’s own fabs, and the chips are sold, obviously, under Intel’s name. The only part that Intel outsources is the actual stocking, warehousing, and distribution of its chips. A worldwide network of independent third-party companies handles this final link in the value chain.

Fabless Chip Company

In our second example, IBM operates just like Intel does. IBM employees design the chips and IBM employees manufacture them using IBM’s own factories. The chips are sold with IBM’s name and logo on them. Where IBM differs from Intel is that IBM also makes chips for other companies. IBM rents out its factories to a select group of companies that do not own a fab. These “fabless” customers rely on IBM and companies like it to build their chips for them.

Xilinx is an example of a fabless chip company. Xilinx designs and sells its own FPGAs but doesn’t actually build them. For years, Xilinx has used IBM (and a few other companies) to build its FPGA chips. The chips are labeled with Xilinx’s name and logo and appear to be Xilinx-made chips. IBM only provides a service; the company can’t sell Xilinx’s chips, nor can it make any extra ones for its own use.

Pure-Play Foundries

Our third example is simply Brand X, a fabless chip company that uses a foundry instead of another chip company to manufacture its chips. Foundries are simply independently owned fabs, and they are covered in more detail near the end of this chapter. Unlike the Intel and IBM examples, foundries don’t make any chips of their own; they simply provide manufacturing services to their clients. Part of a foundry’s business is to keep all of its clients separate and anonymous. It’s also a delicate juggling act to balance the production needs of several clients that are all demanding parts at once. TSMC is an example of a foundry, the largest one in Taiwan.

Materials In, Distributors Out

All chip companies share some things in common, regardless of which of these three business models they follow. Fabs and foundries need to buy their manufacturing tools and equipment from outside vendors. Whether it’s Intel, IBM, or TSMC, they all buy their heavy equipment from a variety of American, European, and Asian tool suppliers. Likewise, all the manufacturers buy their chemicals and other consumable items from an assortment of competitive vendors.

Finally, few of the major chip companies actually sell their own chips. Instead, they hand the task to a components distributor or a manufacturers’ representative firm, or both. Reps and disties, as they’re often called, are the front-line sales force for almost all chip makers around the world. Like automobile dealers, they can carry more than one product line as long as those lines aren’t directly competitive (they wouldn’t carry both Intel and AMD microprocessors, for example). Distributors tend to be bigger companies that deal in a greater volume of parts, and they specialize in quick delivery time and razor-thin margins. Rep firms, on the other hand, tend to be component “boutiques,” representing smaller companies with esoteric or specialized chips. Manufacturers’ reps always represent more than one company’s products, and often more than a dozen, so avoiding conflicts of interest is a lot tougher.

Facilities and Equipment

Semiconductor manufacturing is a hugely capital-intensive business, right up there with steel mills, space programs, and automobile manufacturing. Erecting a new chip factory costs more than $1 billion, and it’ll be obsolete in less than five years. The stakes are very high indeed, and national pride is often on the line. Labor costs add to the overhead, and shipping costs can be considerable if the plant is not located in the same country as its customers.

Even a back-of-the-napkin analysis shows that, with a startup cost of over $1 billion and a useful life of only a few years, you’d need to depreciate a mind-boggling $500,000 to $1 million per day. Let’s hope you’re manufacturing something the world wants!
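The napkin arithmetic can be sketched in a few lines. This is a minimal illustration that assumes simple straight-line depreciation; the $1 billion cost comes from the text, while the three-, four-, and five-year useful lives and the function name are assumptions chosen for illustration.

```python
# Straight-line depreciation of a fab, expressed per calendar day.
# The $1 billion cost comes from the text; the useful-life values
# below are illustrative assumptions.

def daily_depreciation(cost_dollars: float, useful_life_years: float) -> float:
    """Return the straight-line depreciation per calendar day."""
    return cost_dollars / (useful_life_years * 365)

FAB_COST = 1_000_000_000  # dollars

for years in (3, 4, 5):
    per_day = daily_depreciation(FAB_COST, years)
    print(f"{years}-year life: ${per_day:,.0f} per day")
```

A five-year life lands a little above $500,000 per day and a three-year life a little below $1 million, which brackets the range quoted above.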

Part of the cost of building a fab is, of course, the building itself (see Figure 5.10). Fabs might be as small as 50,000 square feet (4,500 square meters) or as large as 1 million square feet (90,000 square meters). They can be built almost anywhere because they don’t require much in the way of natural resources. Lots of open space, stable soil, and solid bedrock are the most important factors, making the preponderance of fabs in California’s Silicon Valley seem a little incongruous. A stable foundation is the most important thing because chip-making equipment is very delicate and sensitive to vibrations. Imagine a Swiss watchmaker trying to work on a wobbling Alpine train and you get the idea. These machines have to be kept very still.

Tradition keeps a lot of fabs in California. Crowded, expensive, and prone to earthquakes, the Santa Clara Valley would seem the worst place to build a fab, and geologically speaking it is. However, this area is the sentimental heart of the semiconductor industry, the location of most companies’ headquarters, and source of trained talent and graduate students. Most new fabs are now built elsewhere, but many of the industry’s early fabs are still running at full capacity in the heart of Silicon Valley.

Construction firms specialize in building fabs and clean rooms. A fab looks like a conventional office building, and much of it is. Most of the workers will have their offices on the ground floor. The clean rooms will be on the upper floor, mounted on rubber shock absorbers, literally suspended in the middle of the building. Rubber bumpers and springs isolate the sensitive equipment from earth tremors and even from the disruptive footsteps of the workers downstairs. (For more on how chips are made in a clean room, turn to Chapter 4, “How Chips Are Made.”)

It costs about $3,500 per square foot to build a clean room, versus $175 per square foot for the office space in the rest of the building. Annual maintenance costs per square foot are also higher for the clean room, because of the need to keep the air filters and other high-maintenance equipment in top condition.

Because fabs require lots of space but little in the way of natural resources (other than educated labor), they tend to be located near, but just outside of, major cities. Fabs employ thousands of well-trained and well-paid people who contribute to the local economy, making them desirable commercial neighbors. Finally, fabs are relatively clean industrial installations that require only a supply of fresh water and a way to contain the hazardous chemicals used in manufacturing.

Governments and municipalities quickly zeroed in on these positive aspects of the business and routinely offer compelling tax incentives for semiconductor companies to locate new fabs in their jurisdiction. Roads and water supplies are put in and even new universities are constructed to provide a ready supply of skilled labor. The government of Arizona was a particularly attentive suitor in the 1990s, which explains why so many fabs are located around the cities of Scottsdale and Phoenix. The governments of Ireland and Scotland were similarly generous, offering five- and 10-year tax waivers for companies that would build a fab on their shores and employ their citizens. Only now are some companies paying local, state, or national taxes on the business coming from facilities that were built in the early 1990s.

Manufacturing Equipment

Once the fab is built, it’s time to outfit it with equipment. In the early days of the semiconductor industry, all the major players used to build their own equipment. Fairchild, Texas Instruments, Motorola, and others all designed their own manufacturing equipment on one floor, then dragged it upstairs to make chips. Nowadays, semiconductor-manufacturing equipment is a multibillion-dollar commercial industry in its own right.

The necessary equipment can be purchased from a number of different companies based in Japan, Europe, and the United States. Chief among these are Applied Materials, Tokyo Electron Limited (TEL), Nikon, KLA-Tencor, ASM Lithography, Teradyne, Lam Research, Canon, and a dozen others. Applied Materials of Santa Clara, California, has been the market leader in recent years, as Figure 5.11 shows. Before the industry downturn in 2001, the top 10 semiconductor-equipment manufacturers collected a total of $29.3 billion. That’s about 14 percent of the size of the semiconductor market itself in that year ($204 billion), or more than all the DRAM makers combined. It’s sometimes wiser to be the one supplying the picks and shovels than to be standing in the creek panning for gold.

It’s common for companies to build a new fab and then outfit only half of the building or less with manufacturing equipment. That’s pretty normal for heavy manufacturing businesses; the first shovel of dirt is the most expensive part. After that, it’s just capital expenditure.

There are currently about 1,000 working fabs around the world in a dozen different countries. Most of these are different from one another, although large companies such as Intel prefer to build identical facilities in separate locations. This strategy, which Intel calls “copy exact,” allows the company to increase, reduce, or shift production of its components based on market demand or in the event of a plant failure.

It takes about 18 months to build and outfit a new fab, so planning ahead is crucial. Given that semiconductor product cycles and trends can shift suddenly and abruptly in just a few weeks or months, companies take a big gamble when they commit to a new fab. One billion dollars and a year or so later, it might turn out to have been a bad bet.

Fab Utilization

The most expensive thing in the world is a chip-making plant running at less than full capacity. Fabs depreciate at an alarming rate. If that manufacturing capacity isn’t utilized while it’s still valuable, the money sunk into building the fab is lost.

Figure 5.12 shows a snapshot of IC fab utilization worldwide for 12 quarters (three years) from the end of 1999 through mid-2002. Not surprisingly, utilization fell off in 2001 as vendors cut back production to meet diminishing demand. Unfortunately for them, their rent didn’t decrease and the equipment didn’t stop depreciating during that time. In effect, they had invested $1 billion in a fab that produced only two-thirds of the chips originally forecast.

Nowhere is the urgency to run at full production more apparent than for DRAMs. DRAMs are nearly commodities, produced in huge volumes, technically interchangeable, and very price competitive. Maintaining tolerable profit margins depends on economies of scale. With only a few pennies’ profit per chip (in normal times), every little bit of volume or percentage point of market share can make a big difference. The DRAM market is also very elastic: As prices go down, demand goes up. Furthermore, the efficiency of the DRAM manufacturing process depends largely on experience and volume. The more DRAMs you make, the better you get at it.

When you combine the financial risk of building and running a fab with the volatility and narrow profit margins of DRAMs, you conjure up the recipe for a seriously precarious business, and so it is with DRAMs. DRAM prices cycle up and down with such regularity that market researchers serenely predict downturns seven years in advance, and with some accuracy. Every boom-and-bust cycle shakes out a few DRAM manufacturers, and the survivors close ranks. If a DRAM company misjudges its manufacturing capacity by a few percentage points or is a few months late in bringing out the next new chip, its business might collapse in the next downturn. There are fewer DRAM makers today than there were five years ago, and fewer still than there were five years before that.

Microprocessor makers sometimes face the opposite problem. Microprocessor chips can be very profitable (sometimes more than $100 per chip), but there’s a limited demand for them at any given price. Rather than optimize their fabs to run at 100 percent of capacity, microprocessor makers want to ensure they have some excess capacity in case demand suddenly surges. The opportunity cost of misjudging demand (and abdicating market share to a competitor) encourages CPU makers to keep a little fab capacity in reserve.

Full capacity means different things at different fabs. Not all fabs are created equal and some can process more wafers than others. For a new, high-capacity fab, 50,000 wafers per month is a good production target. Using this as a yardstick, Figure 5.13 shows how reducing production increases the cost per wafer. This is because fabs are fixed-cost assets where amortization of the equipment and the building doesn’t change with volume. Although material costs might fall, and labor might be reduced a little bit, these are relatively minor expenses next to maintaining the fab itself. For a top-of-the-line fab processing 300-mm wafers, as much as 50 percent of the production cost is depreciation on the equipment.
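To see why cost per wafer climbs as utilization falls, consider a rough sketch. Only the 50,000-wafers-per-month capacity comes from the text; the fixed monthly cost and the variable cost per wafer are hypothetical round numbers picked to make the shape of the curve visible, not figures from any real fab.

```python
# Cost per wafer as a function of fab utilization.
# Only the 50,000 wafers-per-month capacity comes from the text;
# both dollar figures are hypothetical.

FULL_CAPACITY = 50_000             # wafers per month (from the text)
FIXED_COST_PER_MONTH = 50_000_000  # hypothetical: depreciation, building, upkeep
VARIABLE_COST_PER_WAFER = 500      # hypothetical: materials and direct labor

def cost_per_wafer(utilization: float) -> float:
    """Average cost of one wafer at the given utilization, a fraction in (0, 1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    wafers = FULL_CAPACITY * utilization
    # Fixed costs are spread over fewer wafers as utilization drops.
    return FIXED_COST_PER_MONTH / wafers + VARIABLE_COST_PER_WAFER

for u in (1.0, 0.8, 0.67, 0.5):
    print(f"{u:.0%} utilization: ${cost_per_wafer(u):,.0f} per wafer")
```

Under these assumed numbers, halving utilization raises the cost per wafer from $1,500 to $2,500, because the fixed portion doubles while the variable portion stays put.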

Because of this, a semiconductor vendor’s profit margins closely track utilization of its fabs. Graphing overall average utilization versus overall average profit margins for the world’s semiconductor makers over the period of 1978 to 1995 (Figure 5.14), we see a strong correlation between the two. The break-even point comes at about 50 percent utilization; below that, companies lose money as their investment in their fabs evaporates.

Silicon Cost Model

Part of the cost of producing chips is, of course, the silicon itself, although not as much as you might think. There are a great many chips in which the silicon is actually the cheapest part. The plastic package sometimes outweighs the silicon it houses in the financial balance.

Let’s look at an example of a medium-sized chip to see the factors that affect its cost. Figure 5.15 shows materials and processes that contribute to the $10.37 cost of manufacturing our chip. Our example chip measures 50 mm² (about 7 mm, or a quarter of an inch, on a side), which is about the size of a pencil eraser. The number of transistors in the chip isn’t relevant, but let’s say it has about 8 million. The chip is manufactured in the United States (which is relevant) on 200-mm (8-inch) wafers using a 0.25-micron, four-layer metal process.

Bear in mind that our cost model does not include any of the overhead costs for the fab previously mentioned (depreciation, electricity, water, maintenance, etc.), nor does it include the cost of labor, photomasks, or consumable chemicals. We’re only considering actual material costs for now.

Wafer Cost

For our example chip, the biggest material cost is the silicon wafer itself. The going rate for a 200-mm silicon wafer suitable for our purposes is about $3,100. (This is fully burdened and partially processed, factors that we’ll ignore from here on.) If we were to use a more modern or aggressive process, say, 0.13-micron or 0.09-micron, the wafer would be more expensive. If we needed more than four layers of metal wiring to connect our 8 million transistors together, the wafer would be more expensive still. Finally, if we wanted to use copper instead of aluminum on any of the metal layers, the cost of the wafer would probably double. Naturally, going the other way and using smaller wafers and coarser design geometry could cut the cost of the wafer to half, or even less.

Figure 5.16 breaks down the worldwide market for semiconductor materials, including silicon wafers. Again using our benchmark year of 2000, this was a $16 billion market in raw materials and various hazardous chemicals. This is about half as much revenue as the equipment makers collected in that year, or about 8 percent of what the semiconductor makers themselves raked in.

Because 47 percent of the raw materials market, or $7.5 billion, was for the magic stuff itself, let’s take a quick look at who supplies the silicon to Silicon Valley and the rest of the world. Figure 5.17 breaks down the processed-silicon market, including the top vendors’ market shares in 2000.

Looking at the top half-dozen suppliers of silicon—the very stuff of modern technology and the basis of so much world industry—are any of the names familiar to you? Not even one? Don’t feel bad, because these are companies that only a chemical engineer could love. Although they fulfill a vital role in the modern economy, they’re largely unknown and unsung.

Gross Die per Wafer

Chip cost is basically the cost of processing the silicon wafer divided by the number of chips on the wafer that actually work, and there are always chips that don’t work. Naturally, you want to get as many chips as possible from each wafer, but chips are square (or rectangular) and wafers are round, so fitting them together isn’t easy. Here we need to call on some obscure mathematics called packing theory. For any shape and size of chip, there is an ideal way to arrange them on a circular wafer to get the greatest number of chips.

You’ll notice that around the edges of the wafer in Figure 5.18 there are some chips that go off the edge. Obviously these chips are no good, but it’s easier for the equipment to put them there anyway. Sometimes engineers probe or measure these partial chips just to see how well the manufacturing equipment is working at the edge of the wafer.

Our example chip is square (as opposed to rectangular) so, without getting into the mathematics, we discover we can fit 575 whole chips onto a 200-mm wafer. Dozens more will overlap the edges but we won’t count those because they’ll be cut off and discarded.
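For readers who do want a taste of the mathematics, here is a rough sketch using a widely quoted rule-of-thumb approximation: wafer area divided by die area, minus an edge-loss term. This is not necessarily the method behind the 575 figure, and the function name is ours. It lands within about 2 percent of that number.

```python
import math

def gross_die_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Estimate whole dies per wafer: area ratio less an edge-loss term."""
    # Dies that would fit if the wafer could be tiled perfectly.
    area_term = math.pi * (wafer_diameter_mm / 2) ** 2 / die_area_mm2
    # Approximate number of dies lost to partial chips around the circumference.
    edge_term = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(area_term - edge_term)

# Example chip from the text: 50 mm² on a 200-mm wafer.
print(gross_die_per_wafer(200, 50))  # prints 565
```

The small gap between this estimate and 575 is the kind of difference that careful placement of the die grid on the wafer can make.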

Defects and Yield

Not every one of our 575 chips is likely to work after all is said and done. There will inevitably be some slight manufacturing defects here and there. Tiny particles of dust in the cleanest of clean rooms can settle on a wafer during processing and ruin some of the chips. Some particles stick and some can be dusted off, although at this microscopic scale, just the “impact” of a dust particle landing on the wafer usually craters the chip in question. Imperfections in the processing chemicals also cause defects, with contaminated water being a major culprit. Contaminated in this case means ultrapure water that has a few stray molecules of something other than hydrogen and oxygen. Every step in the complicated manufacturing process adds to the risk of contamination as stray particles are stirred up while the chip passes through each piece of equipment.

The ratio of good chips to total chips on the wafer is called the yield and it’s never 100 percent. Chip companies very closely guard their actual yield numbers the way poker players guard their cards and for the same reason: They don’t want to give competitors any inside information. Anything less than 100 percent yield is embarrassing, but every company knows that goal is impossible. Yields of 85 percent to about 97 percent are typical for midrange and high-end ICs. Simple chips will enjoy higher yields, whereas new or complex chips will have embarrassingly low yields.

The yield depends on two things: the defect density and the die area. The defect density is the average number of contaminating particles that land on every square centimeter of silicon. The fewer and farther apart these particles are, the better. Defect density is like the scatter pattern of a shotgun; die area is the size of the target.

Defect densities for good, clean, mature processes can range from 0.05/cm² to 0.20/cm². In the early stages of production, these figures will be much higher. As the equipment “settles in” and the production staff gains experience, the defect density goes down over time. Changing air filters in the fab can also make a difference.

Big chips make bigger targets, so their yield rate is lower. Also, some chips are more resistant to defects than others. For example, almost any defect on a microprocessor, DSP, or logic chip will ruin it. Memory chips, on the other hand, are designed with redundant memory cells for exactly this reason. If a few cells get damaged during manufacturing, alternate memory cells are used in their place. So although dust particles don’t deliberately seek out logic chips and avoid memory chips, memory chips seem more immune to contamination because of their redundant nature.

Big chips are a big financial risk. Assuming dust and contaminants scatter themselves randomly over the wafer, and assuming there are more than a few particles to go around, it’s likely that nearly every big chip will get hit with some contaminant or another. Intuitively, this makes sense. If the entire wafer were nothing but one big chip, it would definitely be ruined. If the wafer were covered with zillions of tiny chips, most of them could dodge the barrage. Figure 5.19 shows how this could happen. The bigger the chip, the worse the yield is going to be, making large chips disproportionately expensive to manufacture.

Yields generally improve dramatically over time, if you define dramatically as a 5 percent improvement. Experience counts for a lot in this business and process technicians spend agonizing hours and sacks of their employers’ money to improve yields by 1 percent. For the first few days after a new fab comes online, virtually all of the chips are a total loss. It takes time for the heavy equipment to settle in, and for the environment to cleanse itself. Little details here and there can affect yield, and nothing escapes the process engineers’ scrutiny. Four to six months into production, yields should be nearly optimal.

Getting back to our example chip, our 0.25-micron fab processes have a defect density of 0.50 defects per cm² (pretty average) and the effective area of the chip is 85 percent, meaning 15 percent of the chip is not completely covered with transistors and therefore relatively insensitive to flaws. According to something called Dingwall’s equation, we can expect about 81 percent of our chips to work. Another 19 percent, alas, won’t make it out the door as working chips.
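The text does not spell out Dingwall’s equation, so the sketch below assumes the form in which it is commonly stated, Y = (1 + A·D/3)⁻³, where A is the defect-sensitive die area and D is the defect density. The function and parameter names are ours. With the example chip’s numbers, this form reproduces the 81 percent figure.

```python
def dingwall_yield(defect_density_per_cm2: float,
                   die_area_cm2: float,
                   effective_fraction: float = 1.0) -> float:
    """Estimated yield using the commonly stated Dingwall form (1 + A*D/3)**-3."""
    # Only the portion of the die densely covered with transistors is
    # treated as sensitive to defects.
    sensitive_area = die_area_cm2 * effective_fraction
    return (1 + defect_density_per_cm2 * sensitive_area / 3) ** -3

# Example chip: 50 mm² (0.50 cm²), 85 percent effective area, 0.50 defects per cm².
estimated = dingwall_yield(0.50, 0.50, 0.85)
print(f"Estimated yield: {estimated:.1%}")  # about 81 percent
```

Doubling the die area to 1.0 cm² in this sketch drops the estimated yield to roughly two-thirds, which is the shotgun-and-target effect in numbers.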

An 81 percent yield leaves us with 465 good chips out of 575 total chips on the wafer. The other 110 chips can be made into tie tacks or ground into sparkly scrap. Dividing the $3,100 cost of the wafer by the 465 good chips, we come up with a cost of $6.67 per chip. However, we’re not done.

Packaging and Testing

At this point, our example chip is still just a bare square of silicon. We know it works but it’s not ready to sell. We’ll put this chip into a plastic housing that costs about $2.00 in volume. The labor to attach the bare chip to a lead frame (a collection of wires) and encapsulate it in plastic adds another $0.50 of cost. There are a number of package types we can choose from, and our customers might be very picky about the package they prefer. Small-outline, surface-mount packages have become very popular, as the statistics in Figure 5.20 show. Fortunately, these small-outline packages are among the cheapest. Packages made out of ceramic, such as the pin-grid array, are expensive in comparison to plastic, sometimes eclipsing the cost of the silicon itself.

Now our chip must be tested one last time before we ship it to a customer or a distributor. After all the handling and packaging, a few chips will fail their final test. Some might have been zapped by static electricity because a technician walked across a carpet or combed his or her hair. Some chips will be overly sensitive to heat and fail during the plastic encapsulation process. Others might work fine at slow speeds but do funny things at full speed. In this last case, we have the option of marking the chips down and selling them at a discount or scrapping them completely. Testing itself adds $1.00 to our cost (mostly labor), and the fallout from testing effectively adds $0.20 to each remaining part. At the end of the day, we’ve spent $10.37 to produce one chip.
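The whole cost model can be tallied in a few lines. Every dollar figure below comes from the running example (wafer cost, 575 gross die, 81 percent yield, package, assembly, test, and test fallout). The only assumption is that the count of good chips is rounded down to a whole number, which matches the 465 used earlier.

```python
# Per-chip cost roll-up for the example chip, using the figures in the text.

WAFER_COST = 3100.00   # dollars per processed 200-mm wafer
GROSS_DIE = 575        # whole chips per wafer
YIELD = 0.81           # fraction of chips that work

# Per-chip costs added after the wafer is diced, in dollars.
BACK_END_COSTS = {
    "plastic package": 2.00,
    "assembly labor": 0.50,
    "final test": 1.00,
    "test fallout": 0.20,
}

good_die = int(GROSS_DIE * YIELD)            # round down to whole chips
silicon_cost = WAFER_COST / good_die         # wafer cost spread over good chips
total_cost = silicon_cost + sum(BACK_END_COSTS.values())

print(f"Good chips per wafer: {good_die}")
print(f"Silicon cost per chip: ${silicon_cost:.2f}")
print(f"Total cost per chip: ${total_cost:.2f}")
```

Note that the silicon accounts for roughly two-thirds of the total here; for a smaller or simpler chip, the package and test costs would stay about the same and could easily dominate.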

Economics of Updating Fabs

Semiconductor fabs depreciate rapidly and the state of the art is never so for long. Top-end chip makers have to commit to getting on, and staying on, an astonishingly expensive treadmill. Leading-edge production equipment is hideously expensive and needs to be upgraded or replaced every year or so. For major segments of the semiconductor market, there is no other way to stay in business. It’s said that driving racecars for a living is like standing in a wind tunnel tearing up $100 bills. Making chips for a living is like buying a new Boeing 747 every week.

For some high-end chips like microprocessors, nothing but the very best will do. Nobody wants to buy a slow PC. These fabs need to be kept at the cutting edge of fabrication technology all the time. Falling behind your competitor, even for a few months, might doom all the chips your fab produces to the scrap bin, or they might become key chains, given away to bored attendees at the next trade show.

It isn’t always so stressful, however. There are plenty of other types of chips that don’t have to be particularly fast or especially advanced. In fact, most of the world’s chips are built in relatively pedestrian semiconductor technologies that are one or two steps behind the current state of the art (e.g., making 150-nanometer transistors when 90-nanometer technology is the latest thing). Plenty of chips are built on fab lines that are a few generations behind the curve. Old fabs don’t spontaneously explode or fall into the soil when they get out of date, but they don’t necessarily get cheaper, either.

There are good reasons why companies want to leave older fabs in place, and good reasons why they wouldn’t. It all depends on the segments of the market they are trying to serve. On the one hand, keeping an older fab in production makes perfect sense. It gives a company more time to amortize the crushing cost of building it. Maybe they won’t be able to build leading-edge chips anymore, but the world buys lots of cheap and slow chips, too. Instead of making ultrafast microprocessors, the company could shift production to standard logic parts, and then to diodes and LEDs, and finally, to resistors. With this strategy, they could keep a fab running at full capacity for many years. As the depreciation overhead decreased, so would the need to wring profit from each component, which is good, because it’s the leading-edge components that generate the most profits.

Although this strategy works well for a while, it is inherently self-defeating. By neglecting (or refusing) to upgrade a fab, a company is doomed to ride it into the ground. Eventually even the best process technology becomes so old that nothing it produces is saleable. Buggy whips, no matter how cheaply produced, just aren’t in demand.

It is better to upgrade the fab at regular intervals, despite the expense. Paradoxically, this can be cheaper than letting it go. Newer process technologies inevitably shrink chip features, which reduces die size. A smaller die means more chips per wafer. More chips per wafer means more product to sell from the same amount of silicon. It is thus better to process 1,000 tiny chips than 350 larger ones. At the same time, each chip will get faster (because it’s smaller) and more power-efficient (ditto). Whether these characteristics are valuable to your end market is irrelevant; you still get more chips per wafer than before. If you can charge extra for the smaller, faster, low-power chips, so much the better.

Economics of Larger Wafers

Semiconductor wafers have grown steadily in size over time, from the little 2-inch wafers of a few decades ago to the comparatively monstrous pizza-sized 300-mm (12-inch) wafers used in some high-end fabs today. By 2002, there were a few dozen fabs processing 300-mm wafers.

Apart from being more expensive in and of themselves, large wafers are more expensive to process. Like developing photographs into poster-sized prints, processing large wafers requires plenty of large-sized equipment. In a fab, nearly every piece of equipment at every stage of manufacturing has to be completely replaced to handle the new platters. What possible economic incentive is there to go through this trouble?

It’s almost always more profitable to use the biggest wafers possible; that’s why. There are direct and indirect effects that make bigger wafers appealing. First, the number of chips you can fit on a wafer increases with the square of its radius. In other words, 300-mm wafers don’t just hold more chips; they hold a lot more chips. All other things being equal, a 200-mm wafer can produce 266 chips at 100 mm² apiece, but a 300-mm wafer can hold 630 chips, well over twice as many chips for the same amount of work.
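A quick check of the geometry shows where the extra chips come from. The die counts are the ones quoted above; the helper function is ours, and the comparison is only a sketch.

```python
import math

def wafer_area_mm2(diameter_mm: float) -> float:
    """Surface area of a circular wafer."""
    return math.pi * (diameter_mm / 2) ** 2

area_ratio = wafer_area_mm2(300) / wafer_area_mm2(200)
die_ratio = 630 / 266  # die counts quoted in the text for 100 mm² chips

print(f"Area ratio: {area_ratio:.2f}")
print(f"Die-count ratio: {die_ratio:.2f}")
```

The larger wafer has 2.25 times the area but holds about 2.37 times as many chips. The bonus comes from the edge: partial chips around the rim waste a smaller share of a big wafer than of a small one.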

Second, the manufacturing equipment for 300-mm wafers is all brand new so it includes all the latest gadgets and gizmos for improving production efficiency. Transistor geometry gets smaller on the newer equipment, so more transistors fit on a chip, making each chip smaller. Much of the same effect could be had by replacing or refurbishing existing equipment, but if you’re going to spend the money anyway, why not spend it on equipment that handles larger wafers, too? In effect, the ability to handle finer geometries on 300-mm equipment comes almost for free. Finer geometry means smaller chips, multiplying the beneficial effect of the larger wafer.

The cumulative effect of all this will increase the total overhead burden, but will actually reduce the overhead burden per chip. Amortizing all the new fab equipment will still be a crushing burden, but you’ll have a lot more chips, and more competitive chips, with which to do this.

Economic Summary

Semiconductor manufacturing suffers from an odd economic condition that other industries don’t share. Almost all the cost of producing a chip comes from fixed overhead, specifically in the depreciation of the fab and its equipment. Unlike boats or telephones, the incremental cost of producing each chip is almost nothing. The very first chip out the door is extraordinarily expensive. After that, the next million chips are practically free.

The only other industry that compares to this is, coincidentally, software. Most of the cost is in development; the cost of delivering software is almost zero. Music distribution and movie making come close, but there are some material costs (and enormous marketing costs) associated with those businesses. The strange economics of chip production puts direct pressure on manufacturers to increase volume. Depreciation directly burdens every chip; the more chips they make, the smaller the burden per chip. Producing twice as many chips means you can sell them for half the price.

The grimmer side of this equation means that slow-selling chips carry an enormous cost burden. Chip makers have to think long and hard before committing to produce a new chip. They can’t afford to take too long, though, or the market might pass them by.

The ever-increasing cost to build a fab might mean that fewer and fewer companies each year will be able to afford this luxury. The alternative is not to fold the tent and leave the semiconductor industry; it’s to outsource production to an independent foundry. Foundries are becoming an increasingly important part of the semiconductor production chain. The number of companies building their own fabs is steadily shrinking; with each new technology generation fewer and fewer companies are able to invest the capital required.

Separating the fabrication business from the design business leads to a horizontal segmentation of the industry. Chip companies become design-and-marketing companies, outsourcing their actual production to foundries. Further down the road, some firms might become just marketing companies. Already there are firms that do only chip design, with no fabrication or marketing.

The rising cost of fabs and the shrinking number of companies operating them will consolidate the number of “recipes” for making advanced silicon. Chip manufacturing will become more alike, almost generic. Companies will no longer be able to compete on the same basis they used to. Silicon speed and performance will be the same, if they all use the same third-party foundry. That will move the emphasis to marketing, where it already is for more mature products like cars and microwave ovens.

For 30 years the semiconductor market grew an average of 14.8 percent per year, from about $800 million in 1970 to $200 billion in 2000. Naturally, there were some good years and some bad years, but the overall trend was remarkably constant. During those same 30 years the cost of semiconductor capital equipment rose by 16.3 percent per year, a bit faster than the growth in sales. This means that spending on capital equipment grew from being 17 percent of a company’s sales back in 1980 to a heftier 22 percent of sales in 2000. This ratio also fluctuates from year to year, going as high as 34 percent in 1996 to as low as 16 percent just three years later.

Yet paradoxically the cost of the chips themselves keeps falling. Each year sees higher productivity and better technology that more than make up for the spiraling cost of producing new chips. Chips are getting cheaper faster than they’re getting more expensive.

If you’re in the business of selling chips, though, you don’t want them to get cheaper. To keep up profit margins, chip makers continually design more elaborate and expensive chips. By constantly moving up-market, chip makers neatly offset the inexorable trends moving them down-market. The result is that consumers keep getting more and more features for the same amount of money. There are few products in the world you can say that about.

If you focus on a few arbitrary units of semiconductor production—one transistor, 1 million bits of memory, one MIPS of microprocessor performance, or whatever—and measure it over time, you’ll see that the cost to make that unit has been falling at an exponential rate. As Figure 5.21 shows, the cost per unit function of semiconductor falls faster than the cost of semiconductor capital equipment rises.

How do semiconductor makers reduce their costs so rapidly? There’s no one silver bullet; it’s a number of related technologies. Figure 5.22 shows the cumulative effect of larger wafers, finer process geometry, improvements in yield (lower defect density), and various other factors that drive down the cost to make a chip. Wafer sizes aren’t increasing as often as they used to, so this effect is slowing down. On the other hand, process geometries shrink more frequently than they used to, neatly making up the difference.

This overall trend is popularly known as Moore’s Law, the observation that we seem to be able to double the number of transistors packed into a given amount of silicon every 18 months. A two times return in 18 months works out to nearly 59 percent annual percentage rate: not a bad investment at all.
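The conversion from “double every 18 months” to an annual rate is ordinary compound-growth arithmetic. A minimal sketch, with a function name of our own choosing:

```python
def annualized_rate(multiple: float, months: float) -> float:
    """Convert growth by a given multiple over a span of months to a yearly rate."""
    # Compound growth: (1 + r) ** (months / 12) == multiple, solved for r.
    return multiple ** (12 / months) - 1

rate = annualized_rate(2, 18)
print(f"Doubling every 18 months is {rate:.1%} per year")
```

The result is about 58.7 percent, which is where the “nearly 59 percent” figure comes from. The same function shows that doubling every 24 months would work out to roughly 41 percent per year.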

This is good news for manufacturers but even better news for consumers. The price (as opposed to the cost) for a unit function of semiconductor falls an average of 35 percent per year. Producing the same chip year after year will produce a steadily diminishing return on investment, so chip makers relentlessly improve their products. They add features to shore up the steadily shrinking die size, and then raise prices to cover the new features. In the end, consumers get more silicon goodness for their dollar.

For all-electronic products such as PCs and VCRs, a new and improved version comes along to replace the old one several times per year, something that would be unimaginable for more mature products like cars, refrigerators, or washing machines. Last year’s top-of-the-line PC is this year’s budget PC. A few months later, that same PC isn’t worth selling at all. This constant upgrade treadmill means consumers always get the latest technology at the best price, but it also means rapid obsolescence. Electronic products have become disposable by their nature.

It’s been said, “Speed, not yield, differentiates the leaders.” Because prices spiral downward all the time, it’s important to be early to market and enjoy the higher chip prices while you can, even if you pay for it in lower yields. By the time you’ve got your yield up to where you want it, market prices have eroded so much that the chip is barely profitable. Prices erode faster than yield improves. This race is for the swift.