The term “user interface” refers to the methods and devices that are used to accommodate interaction between machines and the humans who use them. The user interface of a mechanical system, a vehicle, a ship, a robot, or an industrial process is often referred to as the human machine interface. In any industrial control system, the human machine interface conveys information between the machine and its operators, allowing them to control, monitor, record, and diagnose the machine system through means such as images, keyboards, mice, screens, video, radio, and software. Although many techniques and methods are used in industry, the human machine interface always accomplishes two fundamental tasks: communicating information from the machine to the user, and communicating information from the user to the machine.

Two types of user interface are currently the most common. Graphical user interfaces (GUIs) accept input via input devices and provide articulated graphical output on output devices such as screens. Web user interfaces (WUIs) accept input and provide output by generating web pages, which are transmitted over the Internet and viewed by the user in a web browser. Both types have become essential components of modern industrial systems, for example, human–robot communication interfaces, SCADA human machine interfaces, road vehicle driver displays, aircraft and spacecraft control panels, and medical instrument monitors.

HUMAN–MACHINE INTERACTIONS

Automated equipment has penetrated virtually every area of our lives and our work environments. Human machine interaction already plays a vital role across the entire production process, from planning individual links in the production chain right through to designing the finished product. Innovative technology is made for humans, used by humans, and monitored by humans. The products should therefore be reliable in operation, safe, accepted by personnel, and, last but not least, cost-effective. This interplay between technology and user, known as human machine interaction, is therefore at the very heart of industrial automation, automated control, and industrial production.

13.1.1 Models for human–machine interactions

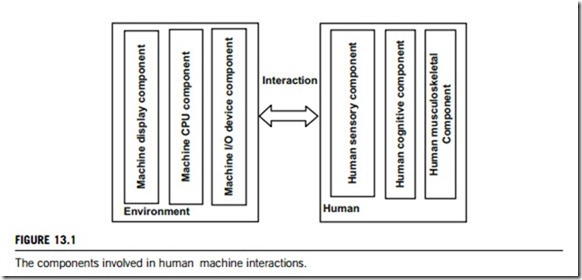

Modelling the human machine interaction depicts how human and machine interact with each other in a system. The model illustrates a typical information flow (or process context) between the “human” and “machine” components of a system. Figure 13.1 shows the components involved on each side of the human machine interaction. The machine side has three components: display, CPU, and I/O devices. The human side also has three components: sensory, cognitive, and musculoskeletal.

Human

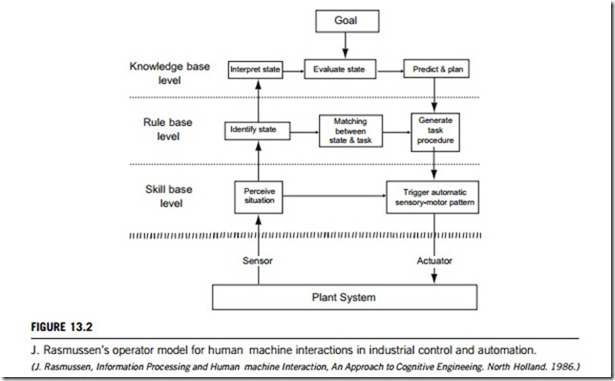

At this point, we need to consider the process of human thinking (the cognitive process) in the interaction. Figure 13.2 shows a model in which the operator’s cognitive behavior and mode of action differ according to how he or she perceives the information presented at the interface. Accordingly, the operator has three modes of action: skill-based, rule-based, and knowledge-based.

In the skill-based mode, the operator acts unconsciously, as smoothly as an automated control system. In the rule-based mode, by contrast, the operator acts consciously and rapidly performs associative actions by recalling the relationship between the perceived state of the situation and the corresponding action that is required. This relationship between the environmental situation, the state, and the action is a mental model that the operator represents internally as a schema. The skill-based and rule-based modes are automatic and therefore require minimal mental effort. Well-trained operators can act in both modes.

In contrast, the mental models used in the knowledge-based mode are not associative. Here, operators recognize the state of the plant by using their knowledge of the semiotic relationships in the plant’s configuration: its goals and functions, means and ends, parts and whole. They also recognize logical relationships, such as the cause-consequence relations between input and output variables, as well as qualitative relations between variables based on physical equations. Using these difficult, abstract models, operators can interpret sensor information, find the root cause of a failure, predict future trends, and then decide what to do. This type of action is intense, laborious, and time-consuming, especially for a poorly trained novice. Even well-trained operators, however, must fall back on the knowledge-based mode when they encounter unfamiliar situations.

(1) Definitions and constructs

In modern control systems, a model is a common architecture for grouping several machine configurations under one label. The set of models in a control system corresponds to a set of unique machine behaviors. The operator interacts with the machine either by switching among models manually or by monitoring the automatic switching triggered by the machine. However, our understanding of models and their potential to cause confusion and error is still far from complete. For example, user interface designers and researchers widely disagree about what models are and how they affect users. This lack of clarity impedes our ability to develop methods for representing and evaluating human interaction with control systems. The limitation is magnified in high-risk systems such as automated cockpits, where there is an urgent need for methods that allow designers to identify the potential for error early in the design phase. Errors arising from models in human machine interaction are thus an important issue that cannot be ignored.

The constructs of the human machine interaction model discussed below are measurable aspects of human interaction with machines. As such, they can be used to form the foundation of a systematic and quantitative analysis.

(a) Model behaviors

One of the first treatments of models came in the early 1960s from cybernetics, the comparative study of human control systems and complex machines. These early treatments set out the construct of a machine with different behaviors. Following them, we define a model as a machine configuration that corresponds to a unique behavior.

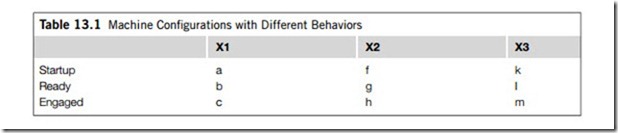

The following is a simplified description of the constructs of machine behavior. A given machine may have several components (e.g., X1, X2, X3), each having a finite set of states. On Startup, for example, the machine initializes itself so that the active state of component X1 is “a”, X2 is “f”, and X3 is “k” (see Table 13.1). The vector of states (a, f, k) thus defines the machine’s configuration on Startup. Once a built-in test is performed, the machine can move from Startup to Ready. The transition to Ready can be defined such that for component X1, state “a” undergoes a transition and becomes state “b”; for X2, state “f” transitions to “g”; and for X3, “k” changes to “l”. The new configuration (b, g, l) defines the Ready model of the machine. There might be a third set of transitions, for example, to Engaged (c, h, m), and so on.

The set of configurations labelled Startup, Ready, and Engaged, if embedded in the same physical unit, corresponds to a machine with three different ways of behaving. A real machine whose behavior can be represented in this way is defined as a machine with input: the input causes the machine to change its behavior. One source of input to the machine is manual; the user selects the model, for example, by turning a switch, and the corresponding transitions take place. But there can be another type of input: if some other machine selects the model, the input is “automatic”. More precisely, the output of the other machine becomes the input to our machine. For example, a separate machine performs a built-in test and outputs a signal that automatically causes the machine in Table 13.1 to transition from Startup to Ready.
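The configuration-vector view of Table 13.1 maps naturally onto a lookup table keyed by the current model and an input event. The following Python sketch is illustrative only: the event names (`built_in_test_passed`, `engage_switch`) and the `BuiltInTester` class are hypothetical, and the text does not say which input moves the machine from Ready to Engaged.

```python
from dataclasses import dataclass

# Table 13.1: each model label names a vector of component states (X1, X2, X3).
CONFIGURATIONS = {
    "Startup": ("a", "f", "k"),
    "Ready": ("b", "g", "l"),
    "Engaged": ("c", "h", "m"),
}

# Hypothetical input events: (current model, event) -> next model.
TRANSITIONS = {
    ("Startup", "built_in_test_passed"): "Ready",
    ("Ready", "engage_switch"): "Engaged",
}


@dataclass
class Machine:
    model: str = "Startup"

    @property
    def configuration(self) -> tuple:
        return CONFIGURATIONS[self.model]

    def input(self, event: str) -> None:
        """Apply an input; events with no defined transition are ignored."""
        self.model = TRANSITIONS.get((self.model, event), self.model)


class BuiltInTester:
    """Hypothetical second machine: its output becomes our machine's input."""

    def run_built_in_test(self, target: Machine) -> None:
        target.input("built_in_test_passed")  # automatic input


machine = Machine()
BuiltInTester().run_built_in_test(machine)  # automatic: Startup -> Ready
machine.input("engage_switch")              # manual (a switch): Ready -> Engaged
print(machine.model, machine.configuration)  # Engaged ('c', 'h', 'm')
```

The same `input` method serves both sources of input; only the caller differs, which mirrors the point that automatic input is simply another machine's output.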

(b) Model error and ambiguity

Model errors fall into a class of errors that involve forming and carrying out an intention (or goal): when a situation is falsely classified, the resulting action may be appropriate for the perceived or expected situation but inappropriate for the actual one. The types of situations that lead to model error are not well understood. Consider a word processor used in situations where the user’s input has different interpretations depending on the model. In one word processing application, for example, the keystroke “d” can be interpreted either as the literal text “d” or as the command “delete”; the interpretation depends on the word processor’s currently active model, Text or Command.

Model error can be linked to model ambiguity by introducing the notion of user expectations. In this view, model ambiguity results in model error only when the user has a false expectation about the result of his or her actions. There are thus two types of ambiguity: one that leads to model error, and one that does not. An example of ambiguity that does lead to model error occurs in timesharing operating systems in which keystrokes are buffered until the Return or Enter key is pressed. When the buffer is full, all subsequent keystrokes are ignored. This leads to two possible outcomes: either all or only a portion of the keystrokes will be processed, depending on whether the buffer is not full or full. Since the state of the buffer is unknown to the user, false expectations may arise. The user’s action (pressing Return and then seeing only part of what was typed appear on the screen) is therefore a model error: the buffer had already filled up, but the user did not know it.
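The buffered-keystroke example can be reproduced in a few lines. In this Python sketch the buffer capacities are arbitrary assumptions; the point is that the same user action (typing a line and pressing Return) yields two different outcomes depending on a hidden state, full or not full, that the user cannot see.

```python
class KeystrokeBuffer:
    """Buffers keystrokes until Return; keystrokes arriving while full are dropped."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._chars: list[str] = []

    def key(self, ch: str) -> None:
        if len(self._chars) < self.capacity:
            self._chars.append(ch)
        # Otherwise the keystroke is silently ignored: the hidden "full" state.

    def press_return(self) -> str:
        line, self._chars = "".join(self._chars), []
        return line


def type_line(text: str, capacity: int) -> str:
    buf = KeystrokeBuffer(capacity)
    for ch in text:
        buf.key(ch)
    return buf.press_return()


print(type_line("status", capacity=16))        # status    (buffer never fills)
print(type_line("status report", capacity=8))  # status r  (rest was dropped)
```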

An example of model ambiguity that does not lead to model error is the common end-of-line (word-wrap) algorithm of a word processor, which determines how many words fit on a line. Ambiguity arises because the criteria for keeping the last word on the current line or wrapping it to the next line are unknown to the user. Nevertheless, as long as the algorithm works reasonably well, the user will not complain: he or she has formed no expectation about which word will stay on the line and which will move to the next, and either outcome is usually acceptable. Model error therefore does not occur, even though model ambiguity does exist.

(c) User factors: task, knowledge, and ability

One important element that constrains user expectations is the task at hand. If discerning between two or more machine configurations is not part of the user’s task, model error will not occur. Consider, for example, the radiator fan of your car. Do you know which configuration (ON or OFF) it is in? Most likely not; most modern cars provide no such indication. The fan changes its model automatically depending on the output of the water temperature sensor. Model ambiguity exists because at any point in time the fan may either change its model or stay in the current one.

The configuration of the fan is completely unknown to the driver. But does this model ambiguity lead to model error? Not at all, because monitoring the fan configuration is not part of the driver’s task. The user’s task is therefore an important determinant of which machine configurations must be tracked and which need not be.

The second element is user knowledge about the machine’s behaviors. By this, we mean that the user constructs some mental model of the machine’s response map. This mental model allows the user to track the machine’s configuration, and, most importantly, to anticipate the next one. Specifically, our user must be able to predict what the new configuration will be following a manually or an automatically triggered event.

Reliably anticipating the next configuration of the machine becomes difficult when the number of transitions between configurations is large. Another factor in user knowledge is the number of conditions that must be TRUE before a transition from one model to another takes place. For example, the automated flight control systems of modern aircraft can execute a fully automatic (hands-off) landing. Several conditions (two engaged autopilots, two navigation receivers tuned to the correct frequency, an identical course set, and others) must be TRUE before the aircraft will execute an automatic landing. Therefore, to reliably anticipate the next configuration of the machine, the user must have an accurate model of the machine’s behavior, including its configurations, transitions, and associated conditions. This model, however, does not have to be complete in the sense of describing every configuration and transition of the machine. As discussed earlier, the user’s model need only be sufficient for the task, which is a much weaker requirement.

The third element in the assessment of expectations is the user’s ability to sense the conditions that trigger a transition. Specifically, the user must first be able to sense the events (e.g., a flight director is engaged; the aircraft is more than 400 feet above the ground) and then evaluate whether or not the transition to a model (say, vertical navigation) will take place. These events are usually made known to the user through the interface, but in several control systems the interface does not depict the necessary input events. Such interfaces are said to be incorrect.

In large and complex control systems, the user may have to integrate information from several displays in order to evaluate whether the transition will take place or not. For example, one of the conditions for a fully automated landing in a two-engine jetliner is that two separate electrical sources must be online, each one supplying its respective autopilot. This information is external to the automatic flight control system, in the sense that it involves another system of the aircraft. The user’s job of integrating events, some of which are located in different displays, is not trivial. One important requirement for an efficient design is for the interface to integrate these events and provide the user with a succinct cue.
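A succinct cue of the kind just described amounts to evaluating a conjunction of conditions drawn from several systems and reporting which ones are still unmet. This Python sketch covers only the conditions named in the text; the field names are hypothetical, and a real autoland check involves further conditions ("and others") that are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class AircraftState:
    # Hypothetical fields for a subset of the conditions named in the text.
    autopilots_engaged: int
    nav_receivers_tuned: int         # tuned to the correct frequency
    identical_course_set: bool
    electrical_sources_online: int   # reported by a different aircraft system


def autoland_cue(s: AircraftState) -> tuple[bool, list[str]]:
    """Integrate conditions from several systems into one cue.

    The transition is available only when every condition is TRUE; the list
    names the conditions that are still unmet.
    """
    checks = {
        "two autopilots engaged": s.autopilots_engaged >= 2,
        "two nav receivers tuned": s.nav_receivers_tuned >= 2,
        "identical course set": s.identical_course_set,
        "two electrical sources online": s.electrical_sources_online >= 2,
    }
    unmet = [name for name, ok in checks.items() if not ok]
    return not unmet, unmet


print(autoland_cue(AircraftState(2, 2, True, 1)))
# (False, ['two electrical sources online'])
```

Returning the unmet conditions, rather than a bare TRUE/FALSE, is one way to spare the user from hunting across displays for the missing event.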

In summary, we have discussed three elements that help determine whether a given model ambiguity will or will not lead to false expectations. First is the relationship between model ambiguity and the user’s task: if distinguishing between models (e.g., whether the radiator fan is ON or OFF) is not part of the user’s task, no meaningful errors will occur. Second, where model ambiguity is relevant to the user’s task, we assess the user’s knowledge: if the user has an inaccurate and/or incomplete model of the machine’s response map, he or she will not be able to anticipate the next configuration, and model confusion will occur. Third, we evaluate the user’s ability to sense the input events that trigger transitions. The interface must provide the user with all the necessary input events; if it does not, no accurate and complete model will help, because the user may know what to look for but will never find it. As a result, confusion and model error will occur.
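The three elements form an ordered screening procedure. The Python sketch below reduces each element to a yes/no judgment, which is a deliberate simplification; the function and parameter names are hypothetical, and it assumes that a model ambiguity has already been identified.

```python
def expect_model_error(relevant_to_task: bool,
                       user_model_sufficient: bool,
                       interface_shows_inputs: bool) -> bool:
    """Screen an existing model ambiguity with the three elements, in order."""
    # 1. Task: ambiguity the user need not resolve causes no meaningful error.
    if not relevant_to_task:
        return False
    # 2. Knowledge: an inaccurate or incomplete model of the response map
    #    prevents the user from anticipating the next configuration.
    if not user_model_sufficient:
        return True
    # 3. Ability: without the triggering events on the interface, even a
    #    sufficient model cannot be applied.
    return not interface_shows_inputs


print(expect_model_error(False, False, False))  # radiator fan: False
print(expect_model_error(True, True, False))    # hidden trigger event: True
```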

(2) Classifications and types

These constructs, then, provide the foundation for a systematic and quantitative analysis. Before such an analysis can be undertaken, however, some form of representation, such as a classification of model types, is needed.

A classification of the human machine interaction models is proposed here to encompass three types of models in automated control systems: (1) interface models that specify the behavior of the interface, (2) functional models that specify the behavior of the various functions of a machine, and (3) supervisory models that specify the level of user and machine involvement in supervising the process. Before we proceed to discuss this classification, we shall briefly describe a modeling language, “State Charts”, that will allow us to represent these models.

The finite state machine is a natural medium for describing the behavior of a model-based system. The basic fragment of such a description is a state transition, which captures the states, the conditions or events, and the transitions in a system. The State Chart language is a visual formalism for describing states and transitions in a modular fashion; it extends the traditional finite state machine with three features: hierarchy, concurrency, and broadcast. Hierarchy is represented by substates encapsulated within a superstate. Concurrency is shown by two or more independent processes working in parallel. The broadcast mechanism couples components, in the sense that an event at one end of the network can trigger transitions at another. These features of the State Chart are further explained in the following three examples.
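The three State Chart features can be mimicked by a small interpreter. This Python sketch is a simplification under stated assumptions: states are written as dotted paths to express hierarchy, each concurrent process is a `Region`, and events emitted by a transition are queued and broadcast to every region. It omits default-substate entry, history, and guards that a full State Chart tool provides, a cyclic chain of broadcasts would loop forever, and all state and event names are hypothetical.

```python
from collections import deque


class Region:
    """One concurrent process: states plus event-triggered transitions.

    States are dotted paths ("Auto.Speed" is a substate of superstate "Auto").
    transitions maps (state, event) -> (next_state, events_to_broadcast).
    """

    def __init__(self, name, initial, transitions):
        self.name = name
        self.state = initial
        self.transitions = transitions

    def handle(self, event):
        # Hierarchy: try the current substate first, then each enclosing
        # superstate, so one superstate arrow covers all of its substates.
        parts = self.state.split(".")
        for depth in range(len(parts), 0, -1):
            key = (".".join(parts[:depth]), event)
            if key in self.transitions:
                self.state, emitted = self.transitions[key]
                return list(emitted)
        return []


class Chart:
    """Concurrency: every region sees every event. Broadcast: events emitted
    by one region's transition are queued and delivered to all regions."""

    def __init__(self, *regions):
        self.regions = regions

    def dispatch(self, event):
        queue = deque([event])
        while queue:
            current = queue.popleft()
            for region in self.regions:
                queue.extend(region.handle(current))

    def configuration(self):
        return {r.name: r.state for r in self.regions}


# Hypothetical two-region chart: a model process and a display process.
models = Region("model", "Auto.Speed", {
    ("Auto.Speed", "path_captured"): ("Auto.Path", []),
    ("Auto", "disengage"): ("Manual", ["auto_off"]),  # defined on the superstate
})
display = Region("display", "closed", {("closed", "auto_off"): ("open", [])})

chart = Chart(models, display)
chart.dispatch("path_captured")
chart.dispatch("disengage")
print(chart.configuration())  # {'model': 'Manual', 'display': 'open'}
```

The "disengage" arrow is attached to the superstate `Auto`, so it fires from either substate, and its broadcast event `auto_off` changes the display region without the two regions referring to each other directly.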

(a) Interface models

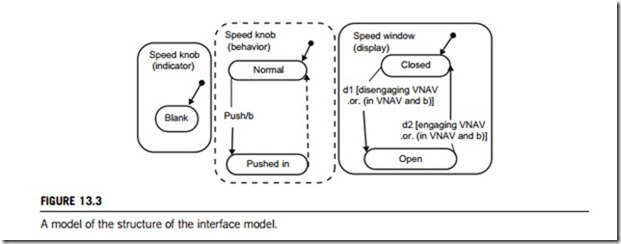

Figure 13.3 shows the modeling structure of an interface model. It has three concurrently active processes (separated by broken lines): speed knob indicator, speed knob behavior, and speed window display. The behavior of the speed knob (middle process) is either “normal” or “pushed-in”. (In the State Chart language, these two states are depicted by two rounded rectangles.) The initial state of the speed knob is normal (indicated by the small arrow above the state), but when momentarily pushed, the speed knob engages or disengages the Speed Intervene submodel of the vertical navigation (VNAV, hereafter) model. The transition between normal and pushed-in is depicted by a solid arrow, and the label “Push” describes the triggering event. The transition back to normal occurs immediately after the pilot lifts his or her finger (the knob is spring-loaded).

The left-most process shown in Figure 13.3 is the speed knob indicator. Unlike many such knobs that have indicators (for example, on the Boeing 757), the speed knob in this example has no indicator and is therefore depicted as a single (blank) state. The right-most process is the speed window display, which can be either closed or open. After VNAV is engaged, the speed window display is closed (implying that the speed comes from another component, the flight management computer). After VNAV is disengaged and a semiautomatic model such as vertical speed is active, the speed window display is open, and the pilot can observe the current speed value and enter a new one. This logic is depicted in the speed window display process in Figure 13.3: transition d1 from closed to open is conditioned by the event “disengaging VNAV”, and d2 takes place when the pilot is “engaging VNAV”.

When in VNAV, the pilot can engage the Speed Intervene submodel by pushing the speed knob. This event, “Push” (visible in the speed knob behavior process), triggers event b, which is then broadcast to the other processes. Being in VNAV and sensing event b (“in VNAV and b”) is another (OR) condition on transition d1 from closed to open. It is also the condition on transition d2, which takes the display back to closed. As a result, the behavior of the speed window is circular: the pilot can push the knob to open the window and push it again to close it, ad infinitum.

As explained above and illustrated in Figure 13.3, there are two sets of conditions on the transitions between closed and open. One of these conditions, “disengaging VNAV”, is not always directly within the pilot’s control; it sometimes takes place automatically (e.g., during a transition from VNAV to the altitude hold model). Manual re-engagement of VNAV will then cause the speed value in the speed window to be replaced by the economy speed computed by the flight management computer. If the speed value in the speed window was a restriction required by air traffic control (ATC), the aircraft will now accelerate or decelerate to the computed speed, and the ATC speed restriction will be ignored!
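The trap described above can be traced in a simplified Python model of the speed window process. This is a sketch, not avionics logic: the speed values (290 and 250 knots) are hypothetical, other vertical models are reduced to "VNAV not engaged", and it assumes that an opened window initially shows the flight management computer speed.

```python
class SpeedWindow:
    """Simplified sketch of the VNAV / speed-window logic of Figure 13.3."""

    def __init__(self, fmc_econ_speed: int) -> None:
        self.fmc_econ_speed = fmc_econ_speed  # computed by the flight management computer
        self.vnav_engaged = True
        self.window = "closed"                # speed comes from the FMC
        self.window_speed = None

    @property
    def target_speed(self) -> int:
        return self.window_speed if self.window == "open" else self.fmc_econ_speed

    def _open(self) -> None:
        if self.window == "closed":
            self.window_speed = self.fmc_econ_speed  # window shows the current speed
        self.window = "open"

    def _close(self) -> None:
        self.window = "closed"
        self.window_speed = None  # any speed the pilot entered is discarded

    def push_knob(self) -> None:
        # Spring-loaded knob: normal -> pushed-in -> normal, broadcasting event b.
        if self.vnav_engaged:  # "in VNAV and b" is a condition on both d1 and d2
            if self.window == "closed":
                self._open()
            else:
                self._close()

    def enter_speed(self, knots: int) -> None:
        if self.window == "open":
            self.window_speed = knots

    def disengage_vnav(self) -> None:  # may happen automatically
        self.vnav_engaged = False
        self._open()                    # d1

    def engage_vnav(self) -> None:
        self.vnav_engaged = True
        self._close()                   # d2


w = SpeedWindow(fmc_econ_speed=290)
w.push_knob()        # Speed Intervene: window opens
w.enter_speed(250)   # pilot enters the ATC speed restriction
w.disengage_vnav()   # e.g., automatic VNAV -> altitude hold; window stays open
w.engage_vnav()      # manual re-engagement closes the window
print(w.window, w.target_speed)  # closed 290  (the 250-knot restriction is lost)
```

Nothing in the final call signals that the entered value was discarded; the loss is only visible by comparing the target speed before and after re-engagement.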

(b) Functional models

When we survey the use of models in devices, an additional type emerges: the functional model, which refers to the active function of the machine that produces a distinct behavior. An automatic gearshift mechanism of a car is one example of a machine with different models, each one defining different behaviors.

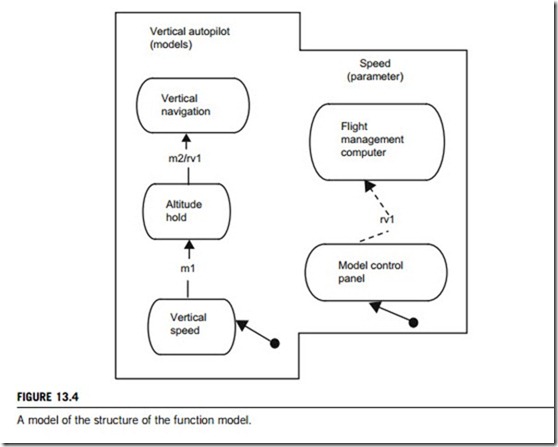

As we move on to functional models and their use in machines that control a timed process, we encounter the concept of dynamics. In dynamic control systems, the configuration and resulting behavior of the machine are a combination of a model and its associated parameter (e.g., speed, time, direction). Returning to our car example, the active model is the engaged gear, Drive, and the associated parameter is the speed that corresponds to the angle of the accelerator pedal (say, 65 mph). Together, the model (Drive) and the parameter (65 mph) define the configuration of the mechanism. Figure 13.4 depicts the structure of a functional model in the dynamic automated control system of a modern airliner. Two concurrent processes are depicted in this modeling structure: (1) models and (2) parameter sources.

Three models are depicted in the vertical model superstate in Figure 13.4: vertical navigation, altitude hold, and vertical speed (the default model). All are functional models related to the vertical aspect of flight. The speed parameter can be obtained from two different sources: the flight management computer or the mode control panel. The default source of the speed parameter, indicated by the small arrow in Figure 13.4, is the mode control panel. As mentioned in the discussion of interface models, engaging vertical navigation via the mode control panel causes a transition to the flight management computer as the source of speed. This can be seen in Figure 13.4, where transition m2 triggers event rv1, which in turn triggers an automatic transition (depicted as a broken line) from “mode control panel” to “flight management computer”. In many dynamic control mechanisms, some model transitions trigger a change of parameter source while others do not. This inconsistency appears to be a source of confusion for operators.
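The coupling between the two concurrent processes of Figure 13.4 can be sketched in Python as follows. The text specifies only that engaging vertical navigation (m2) broadcasts rv1 and switches the speed source; this sketch assumes, for illustration, that every other model transition leaves the source unchanged, which is exactly the kind of asymmetry an operator must remember.

```python
class VerticalModels:
    """Sketch of Figure 13.4: two concurrent processes, model and speed source."""

    def __init__(self) -> None:
        self.model = "vertical speed"             # default model
        self.speed_source = "mode control panel"  # default parameter source
        self.log = []

    def engage(self, model: str) -> None:
        self.model = model
        if model == "vertical navigation":
            self._broadcast("rv1")  # m2 emits rv1
        # Assumption: other transitions do not touch the speed source.
        self.log.append((model, self.speed_source))

    def _broadcast(self, event: str) -> None:
        if event == "rv1":
            # Automatic (broken-line) transition in the parameter-source process.
            self.speed_source = "flight management computer"


v = VerticalModels()
v.engage("altitude hold")        # no source change
v.engage("vertical navigation")  # m2 -> rv1 -> source becomes the FMC
print(v.log)
# [('altitude hold', 'mode control panel'), ('vertical navigation', 'flight management computer')]
```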

(c) Supervisory models

The third type of model we discuss here is the supervisory model, sometimes also referred to as a participatory or control model. Modern automated control mechanisms usually give the user flexibility in specifying the level of human and machine involvement in controlling the process. The operator may engage a manual model, in which he or she controls the process; a semiautomatic model, in which the operator specifies target values in real time and the machine attempts to maintain them; or a fully automatic model, in which the operator specifies in advance a sequence of target values and the machine attempts to achieve them automatically, one after the other.

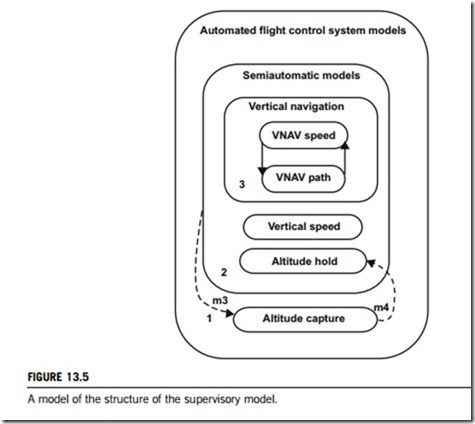

Figure 13.5 is an example of a supervisory model structure that can be found in many control mechanisms, such as automated flight control systems, cruise control of a car, and robots on assembly lines. The modeling structure consists of hierarchical layers of superstates, each with its own set of models. The supervisory models in the Automated Flight Control System are organized hierarchically.

Three main levels are described in Figure 13.5. The highest level of automation is the vertical navigation model (level 3), depicted as a superstate at the top of the pyramid of models. Two submodels are encapsulated in the vertical navigation model, VNAV Speed and VNAV Path, each exhibiting a somewhat different control behavior. One level below (level 2) are two semiautomatic models: vertical speed and altitude hold.

One model in the automated flight control system, altitude capture, can only be engaged automatically; no direct manual engagement is possible. It engages when the aircraft begins the level-off maneuver to capture the selected altitude: when the aircraft is several hundred feet from the selected altitude, an automatic transition (m3) takes place from either climb model, vertical navigation or vertical speed, to altitude capture. Finally, when the aircraft reaches the selected altitude, a transition from altitude capture to the altitude hold model takes place, also automatically (m4).
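The automatic-only character of altitude capture can be expressed as two separate entry points: a manual request that never offers altitude capture, and an automatic rule implementing m3 and m4. In this Python sketch the 500 ft capture distance and 20 ft level-off tolerance are hypothetical stand-ins for "several hundred feet" and "reaches the selected altitude".

```python
CAPTURE_DISTANCE_FT = 500   # hypothetical value for "several hundred feet"
LEVEL_TOLERANCE_FT = 20     # hypothetical "selected altitude reached" band
CLIMB_MODELS = {"vertical navigation", "vertical speed"}
MANUALLY_SELECTABLE = {"vertical navigation", "vertical speed", "altitude hold"}


def request_model(current: str, requested: str) -> str:
    """Manual engagement; altitude capture is not offered to the pilot."""
    return requested if requested in MANUALLY_SELECTABLE else current


def automatic_transition(model: str, altitude_ft: float, selected_ft: float) -> str:
    remaining = abs(selected_ft - altitude_ft)
    if model in CLIMB_MODELS and remaining <= CAPTURE_DISTANCE_FT:
        return "altitude capture"          # m3
    if model == "altitude capture" and remaining <= LEVEL_TOLERANCE_FT:
        return "altitude hold"             # m4
    return model


model = request_model("altitude hold", "vertical speed")
model = request_model(model, "altitude capture")  # refused: stays vertical speed
for altitude in (9000, 9600, 9900, 10000):
    model = automatic_transition(model, altitude, selected_ft=10000)
    print(altitude, model)
# 9000 vertical speed
# 9600 altitude capture
# 9900 altitude capture
# 10000 altitude hold
```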

In summary, we have illustrated a modeling language, State Charts, for representing human interaction with control systems, and proposed a classification of three types of models employed in computers, devices, and supervisory control systems. Interface models change the display format; functional models allow for different functions and associated parameters; and supervisory models specify the level of supervision (manual, semiautomatic, or fully automatic) in the human machine system. The three types of models are essentially similar in that they all define the manner in which a certain component of the machine behaves. The component may be the interface only, a function of the machine, or the level of supervision. This commonality brings us back to our general working definition of the term model: a machine configuration that corresponds to a unique behavior.

Systems of human–machine interactions

There are three architectures of human machine systems that are currently popular in industrial control: adaptive, supervisory, and distributed.

(1) Adaptive human machine interface

In a complex control system, the human machine interface attempts to give users the means to perceive and manipulate huge quantities of information under constraints such as time, cognitive workload, and available devices. Intelligence in the human machine interface makes the control system more flexible and adaptable. One subset of the intelligent user interface is the adaptive interface. An adaptive interface modifies its behavior according to a set of defined constraints in order to best satisfy all of them; adaptive interfaces differ in the ways and means they use to achieve adaptation.

In the human machine interface of an industrial control system, the operators, the system, and the context change continuously and are sometimes in conflict with one another. Such an interface can therefore be viewed as the result of a balance between these three components and their changing relative importance and priority. An adaptive interface aims to help the operator acquire the most salient information, in the most appropriate form, at the most opportune time. In any industrial control system, two main factors are considered: the system that generates the information stream and the operator to whom this stream is presented. The system and the operator share a common goal: to control the process and to solve any problems that may arise. This common objective makes them cooperate, although each may also have its own goals. The role of the interface is to integrate these different goals, with their different priorities, and the various constraints that come from the task, the environment, or the interface itself. Specifically, an industrial process control interface should react consistently and in a timely fashion, without needlessly disturbing the operator in his or her task, while still presenting the most salient pieces of information in the most appropriate way.

An adaptive model of this kind should meet a set of criteria: it must incorporate representations of operator states; represent the interface features that are adaptable; represent cognitive processing in a computational framework; provide measures of merit for matches between the input state variables and interface adaptation permutations; operate in real time; and, finally, incorporate self-evolving mechanisms.

The two main adaptation triggers used to modify the human machine interface are:

(a) The process: when the process moves from a normal to a disturbed state, the streams of information may become denser and more numerous. To avoid any cognitive overload, the interface acts as a filter that channels the streams of information. To this end, it adapts the presentation of the pieces of information in order to help the operator identify and solve the problem.

(b) The operator: adapting to the state of the operator is much more difficult. The interface has to infer from the operator’s actions whether he or she is reacting incorrectly and needs help. It may then decide to adapt itself to highlight the problem and suggest solutions to assist the operator.
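Both triggers can be sketched as simple rules. Everything quantitative in this Python example is an assumption made for illustration: the alarm-count threshold for a "disturbed" process, the priority scale, the three-item filter limit, and the heuristic that the same command issued repeatedly suggests the operator is not achieving the intended effect.

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    priority: int  # 1 = most important (hypothetical scale)


def process_state(active_alarms: int, threshold: int = 5) -> str:
    """Hypothetical rule: many simultaneous alarms indicate a disturbed process."""
    return "disturbed" if active_alarms >= threshold else "normal"


def filter_stream(messages: list[Message], state: str, max_items: int = 3) -> list[Message]:
    """Trigger (a): in a disturbed state, pass on only the most important messages."""
    if state == "normal":
        return messages
    return sorted(messages, key=lambda m: m.priority)[:max_items]


def operator_needs_help(recent_actions: list[str], window: int = 3) -> bool:
    """Trigger (b), hypothetical heuristic: the same command issued repeatedly
    suggests the operator's actions are not having the intended effect."""
    tail = recent_actions[-window:]
    return len(tail) == window and len(set(tail)) == 1


stream = [Message("filter dP high", 3), Message("pump P1 trip", 1),
          Message("tank T2 level low", 2), Message("lamp test due", 5)]
shown = filter_stream(stream, process_state(active_alarms=7))
print([m.text for m in shown])  # ['pump P1 trip', 'tank T2 level low', 'filter dP high']
print(operator_needs_help(["open V3", "open V3", "open V3"]))  # True
```

Inferring the operator's state from actions is far less reliable than detecting a process disturbance, which is why the second rule is framed only as a hint that help may be needed.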

The aim of the adaptation is to optimize the organization and presentation of the pieces of information according to the state of the process and the inferred state of the operator, and thereby to improve communication between the system and the user. An adaptive human machine interface offers the following means:

(i) Highlight relevant pieces of information. The importance of a piece of information depends on its relevance according to the particular goals and constraints of each of the entities that participates in the communication between the system and the operator.

(ii) Optimize space usage. According to the current usage of the resources, it may turn out that it is necessary to reorganize the display space to cope with new constraints and parameters.

(iii) Select the best representation. Depending on the piece of information, its importance, the resources available, and the media currently in use, the most appropriate medium for communicating with the operator should be used.

(iv) Timeliness of information. The display of a particular piece of information should be timely with respect to both the process and the operator. The adaptation should follow the evolution of the process over time, but it should also adapt the timing of the displayed information to the inferred needs of the operator.

(v) Perspectives. In traditional interfaces, the operator has to decide what information is presented, and where, when, and how. Transferring these decisions to an adaptive interface raises at least four questions: (1) From the client’s point of view, is the cost of developing an adaptive human machine interface justified? (2) From the human machine interface designer’s point of view, is this kind of interface usable, and can its usability be evaluated? (3) From the developer’s point of view, what are the best technical solutions for implementing an efficient system within the required time? (4) From the operators’ point of view, is such an adaptive interface a collaborator or a competitor?
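Means (i) to (iv) can be combined into a single planning step. This Python sketch is one possible reading, not a method given in the text: the relevance and urgency scores, the product used to rank items, the 0.5 highlight threshold, and the rule that only the top item also gets an audio cue are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class InfoItem:
    name: str
    relevance: float  # 0..1, relevance to the current goals (hypothetical)
    urgency: float    # 0..1, how soon the operator must act (hypothetical)


def plan_display(items: list[InfoItem], slots: int,
                 audio_free: bool = True) -> list[tuple[str, str]]:
    # (i) highlight relevant items: rank by relevance x urgency
    ranked = sorted(items, key=lambda i: i.relevance * i.urgency, reverse=True)
    plan = []
    # (ii) optimize space: keep only as many items as there are free display slots
    for rank, item in enumerate(ranked[:slots]):
        score = item.relevance * item.urgency
        # (iii) select the representation; (iv) urgency drives timeliness
        if rank == 0 and score >= 0.5 and audio_free:
            medium = "audio + highlight"
        elif score >= 0.5:
            medium = "highlight"
        else:
            medium = "normal"
        plan.append((item.name, medium))
    return plan


items = [InfoItem("ambient temperature", 0.2, 0.1),
         InfoItem("drum level", 0.9, 0.9),
         InfoItem("feed pump trip", 1.0, 0.7)]
print(plan_display(items, slots=2))
# [('drum level', 'audio + highlight'), ('feed pump trip', 'highlight')]
```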

(2) Supervisory human machine interface

A supervisory human machine interface can be used in systems where there is a considerable distance between the control room and the machines. At the machine site, controllers such as SCADA or PLC units control the field objects, for example, pumps, blowers, and purification monitors. For data communication, the supervisory software of the human machine interface is linked with the controllers over a network such as Ethernet or a controller area network (CAN). The supervisory software is designed so that only one person is needed at any one time to monitor the whole plant from a single master device. The generated graphics give a clear on-screen representation of the current status of any part of the system. Alarms are activated automatically whenever a parameter deviates from its tight tolerance band, which requires extremely rapid updating of the control room screen contents. All the calculations for the controllers are performed by the control software, using constant feedback from sensors throughout the production process.
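The tolerance-band alarm check described above reduces to comparing each reading's deviation from its setpoint against an allowed band. In this Python sketch the tag names, setpoints, and tolerances are hypothetical; a real SCADA package would add deadbands, alarm priorities, and acknowledgment handling, which are not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Band:
    setpoint: float
    tolerance: float  # permitted deviation on either side of the setpoint


def scan(readings: dict[str, float], bands: dict[str, Band]) -> list[str]:
    """Return an alarm for every reading outside its tolerance band."""
    alarms = []
    for tag, value in readings.items():
        band = bands[tag]
        deviation = value - band.setpoint
        if abs(deviation) > band.tolerance:
            direction = "HIGH" if deviation > 0 else "LOW"
            alarms.append(f"{tag} {direction}: {value}")
    return alarms


bands = {"P101.pressure_bar": Band(4.0, 0.2), "B201.flow_m3h": Band(120.0, 5.0)}
print(scan({"P101.pressure_bar": 4.35, "B201.flow_m3h": 118.0}, bands))
# ['P101.pressure_bar HIGH: 4.35']
```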

For applications such as the one above, a supervisory human machine interface is often an ideal software package. Because it is configured interactively and is easy to set up, the system can be brought up, run, and tested in a straightforward manner.

Such an interface follows an open architecture offering all the functions and options necessary for data collection and representation. The system provides comprehensive logging of all measured values in a database. By accessing this database and by using real-time measurements, a wide variety of reports and trend curves can be viewed on the screen or output to a printer.
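As a rough illustration of logging measured values in a database and deriving a trend curve from them, the sketch below uses Python's built-in `sqlite3` module. The table layout, the tag names, and the helper functions `open_log`, `log_value`, and `trend` are assumptions made for the example; a production system might instead use a dedicated historian or time-series database.

```python
import sqlite3


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a measurement log; ':memory:' keeps it in RAM."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS measurements ("
        " ts REAL NOT NULL,"    # timestamp in seconds
        " tag TEXT NOT NULL,"   # name of the measured quantity
        " value REAL NOT NULL)"
    )
    return conn


def log_value(conn: sqlite3.Connection, ts: float, tag: str, value: float) -> None:
    """Append one measured value to the log."""
    conn.execute("INSERT INTO measurements (ts, tag, value) VALUES (?, ?, ?)",
                 (ts, tag, value))
    conn.commit()


def trend(conn: sqlite3.Connection, tag: str, bucket_s: int) -> list[tuple[int, float]]:
    """Average value of `tag` per time bucket of `bucket_s` seconds, oldest first."""
    return conn.execute(
        "SELECT CAST(ts / ? AS INTEGER) * ? AS bucket, AVG(value)"
        " FROM measurements WHERE tag = ?"
        " GROUP BY bucket ORDER BY bucket",
        (bucket_s, bucket_s, tag),
    ).fetchall()


if __name__ == "__main__":
    log = open_log()
    for ts, flow in [(0.0, 1.0), (30.0, 3.0), (60.0, 5.0)]:
        log_value(log, ts, "pump1.flow", flow)  # hypothetical tag and values
    print(trend(log, "pump1.flow", 60))         # [(0, 2.0), (60, 5.0)]
```

The rows returned by `trend` are exactly what a trend curve or a periodic report would plot or tabulate.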

(3) Distributed human machine interface

The distributed human machine interface is a component-based approach. In a system using this approach, the interface can directly access any controller component, which also means that each controller can present its own human machine interface. Because all system components are location-transparent, the human machine interface can bind to a component wherever it resides, whether in-process, local, or remote. Remote binding is the most likely case, since the human machine interface and the controller usually reside on different platforms.
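Location transparency can be sketched as an interface that hides where a component lives. In the minimal Python sketch below, HMI code only calls `bind(name).read(tag)`; whether the object behind that name is in-process or a remote proxy is decided when it is registered. All class names are hypothetical, and the `transport` callable stands in for whatever network client (for example, an OPC UA or Modbus/TCP library) a real system would use.

```python
from typing import Callable, Protocol


class ControllerComponent(Protocol):
    """What the HMI needs from any controller component, wherever it runs."""
    def read(self, tag: str) -> float: ...


class InProcessComponent:
    """A component living in the same process as the HMI."""
    def __init__(self, values: dict[str, float]) -> None:
        self._values = values

    def read(self, tag: str) -> float:
        return self._values[tag]


class RemoteProxy:
    """A local stand-in for a component on another platform.

    `transport(address, tag)` is a hypothetical callable that sends the
    request over the network and returns the controller's reply.
    """
    def __init__(self, address: str, transport: Callable[[str, str], float]) -> None:
        self._address = address
        self._transport = transport

    def read(self, tag: str) -> float:
        return self._transport(self._address, tag)


class Registry:
    """Maps component names to components; callers never see the location."""
    def __init__(self) -> None:
        self._components: dict[str, ControllerComponent] = {}

    def register(self, name: str, component: ControllerComponent) -> None:
        self._components[name] = component

    def bind(self, name: str) -> ControllerComponent:
        return self._components[name]
```

HMI code written against `Registry.bind` stays the same when a component moves from in-process to a remote host; only the registration changes.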

In such a set-up, multiple servers are typically used to give the system the flexibility and power of a peer-to-peer architecture. Each controller can have its own human machine interface server, and this assigned server (or a proxy server) is sufficient for the controller to handle expansion, frequent system changes, maintenance, and replicated automation lines within or across plants.

The primary drawback of decentralized components is uncertainty in real-time controller performance, which generally results from poorly designed use of proxy agents, for example, when the human machine interface samples controller data at too high a frequency. Nevertheless, the distributed human machine interface is ideal for SCADA applications: its peer-to-peer architecture, reusable components, and remote deployment and maintenance capabilities make supporting these applications remarkably efficient. The network services of such software are optimized for use over slow and intermittent networks, which significantly eases application deployment and communications.
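One way to guard against the over-sampling problem mentioned above is to throttle reads in the proxy, so that repeated requests within a minimum interval are served from a cache instead of reaching the controller. The sketch below assumes a hypothetical `read_fn` that performs the actual controller read; the class name and the interval are arbitrary choices for illustration.

```python
import time
from typing import Callable


class ThrottledReader:
    """Caches controller reads so the HMI cannot poll faster than min_interval_s."""

    def __init__(self, read_fn: Callable[[str], float], min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._read_fn = read_fn                # performs the real controller read
        self._min_interval_s = min_interval_s
        self._clock = clock                    # injectable for testing
        self._cache: dict[str, tuple[float, float]] = {}  # tag -> (read time, value)

    def read(self, tag: str) -> float:
        now = self._clock()
        cached = self._cache.get(tag)
        if cached is not None and now - cached[0] < self._min_interval_s:
            return cached[1]                   # too soon: serve the cached value
        value = self._read_fn(tag)             # interval elapsed: query the controller
        self._cache[tag] = (now, value)
        return value
```

The trade-off is staleness: a value shown on screen may be up to `min_interval_s` old, so the interval has to be chosen against the update rate that the process and its alarms actually require.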

Design of human–machine interactions

The design of a human machine interface is important, since its effectiveness will often make or break the application. Although the functionality that an application provides to users is important, the way in which it is provided is equally important. An application that is difficult to use will not be used; hence, the value of human machine interface design should not be underestimated.

One of the simplest approaches to designing interactive systems is to describe the stages that the design goes through. Roughly five steps can be identified for a generic interactive system: setting up the design goals, specifying the design principles, executing the design procedures, testing the system performance, and evaluating the design results.

(1) Design principles

The following describes a collection of principles for improving the quality of human machine interface design.

(a) The structure principle. The design should organize the interface purposefully, in meaningful and useful ways based on clear, consistent models that are apparent and recognizable to users. Putting related things together and separating unrelated things, differentiating dissimilar things and making similar things resemble one another are all important. The structure principle is concerned with the overall interface architecture.

(b) The simplicity principle. The human machine interface design should make commonly performed tasks easy to do, communicating clearly and simply in the user’s own language, and providing good shortcuts that are meaningfully related to longer procedures.

(c) The visibility principle. The human machine interface design should keep all needed options and materials for a given task visible, without distracting the user with extraneous or redundant information. Good designs do not overwhelm users with too many alternatives or confuse them with unneeded information.

(d) The feedback principle. The human machine interface design should keep users informed of actions or interpretations, changes of state or condition, and errors or exceptions that are relevant to the user through clear, concise, familiar, and unambiguous language.

(e) The tolerance principle. The human machine interface design should be flexible and tolerant, reducing the cost of mistakes and misuse by allowing undoing and redoing, while also preventing errors wherever possible by tolerating varied inputs and sequences and by interpreting all reasonable actions.

(f) The reuse principle. The human machine interface design should reuse internal and external components and behaviors, maintaining consistency with purpose, thus reducing the need for users to rethink and remember.
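The tolerance principle can be illustrated with a small setpoint editor that keeps undo and redo histories and accepts slightly varied numeric input. This is a minimal Python sketch under assumed names (`SetpointEditor`, `parse_value`); accepting a comma as a decimal separator is just one example of tolerating varied inputs.

```python
def parse_value(text: str) -> float:
    """Accept '45.5', ' 45.5 ' or '45,5' (comma as decimal separator)."""
    return float(text.strip().replace(",", "."))


class SetpointEditor:
    """Setpoint changes that can always be undone and redone."""

    def __init__(self, setpoints: dict[str, float]) -> None:
        self.setpoints = dict(setpoints)
        self._undo: list[tuple[str, float, float]] = []  # (tag, old, new)
        self._redo: list[tuple[str, float, float]] = []

    def set(self, tag: str, text: str) -> None:
        new = parse_value(text)      # raises ValueError for non-numeric input
        old = self.setpoints[tag]    # raises KeyError for an unknown tag
        self.setpoints[tag] = new
        self._undo.append((tag, old, new))
        self._redo.clear()           # a new change invalidates the redo history

    def undo(self) -> bool:
        if not self._undo:
            return False             # nothing to undo
        tag, old, new = self._undo.pop()
        self.setpoints[tag] = old
        self._redo.append((tag, old, new))
        return True

    def redo(self) -> bool:
        if not self._redo:
            return False             # nothing to redo
        tag, old, new = self._redo.pop()
        self.setpoints[tag] = new
        self._undo.append((tag, old, new))
        return True
```

The comma rule is deliberately naive: it would misread a thousands separator such as "1,000" as 1.0, so a real interface would tie input parsing to the operator's locale.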

(2) Design process

The design of human machine interfaces should follow these principles and seek a human-centered and integrated approach to automation. The functionality of the interfaces is specified with respect to goals, means, tasks, dialogue, presentation, and error tolerance. The design process itself is treated as a problem-solving activity that draws on knowledge about the goals, the application domain, the human operators, the tasks, and human machine interface ergonomics (supported by graphical and dialogue editors).

(a) Phase one. The design process begins with a task analysis, in which we identify all the stakeholders, examine existing control or production systems and processes, whether paper-based or computerized, and identify ways and means to streamline and improve the process.

The tasks in this phase are to conduct background research, interview stakeholders, and observe people performing their tasks.

(b) Phase two. Once an objective and a set of functional requirements have been agreed upon, the next step is to generate a design that meets all of the requirements. The goal of the design process is to develop a coherent, easy-to-understand software front end that makes sense to the eventual users of the system. The design and review cycle should be iterated until a satisfactory design is obtained.

(c) Phase three. The next phase is implementation and testing, together with developing and implementing any performance support aids, for example, online help and paper manuals.

(d) Phase four. Once a functional system is complete, we move into the final phase. "Success" means that people using our system, whether it is an intelligent tutoring system or an online decision aid, are able to see solutions that they could not see before and/or better understand the constraints that are in place. We generally conduct formal experiments that compare performance with our system, possibly with different features turned on and off, in order to contribute to the literature on decision support and human machine interaction.
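For formal experiments of the kind described in phase four, one common choice (assumed here, not prescribed by the text) is to compare mean task times between two conditions, for example with a feature turned off and on, using Welch's t statistic. A minimal sketch with Python's `statistics` module:

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for the difference between the means of a and b.

    Each list needs at least two observations (sample variance uses n - 1).
    """
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))  # standard error
    return (mean(a) - mean(b)) / se


if __name__ == "__main__":
    # Illustrative task times in seconds, not measured data.
    feature_off = [10.0, 12.0, 14.0]
    feature_on = [7.0, 8.0, 9.0]
    print(round(welch_t(feature_off, feature_on), 3))  # 3.098
```

The statistic would then be compared against a t distribution (with Welch-Satterthwaite degrees of freedom) to judge significance; the sketch stops at the statistic itself.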

(3) Design evaluation

An important aspect of human machine interaction is the methodology for evaluating user interface techniques. Precision and recall measures have been widely used for comparing the rankings produced by non-interactive systems, but they are less appropriate for assessing interactive systems. Standard evaluations emphasize high recall levels, but in many interactive settings users require only a few relevant documents and do not care about high recall. To evaluate highly interactive information access systems, useful metrics beyond precision and recall include the time required to learn the system, the time required to achieve goals on benchmark tasks, error rates, and how well users retain the ability to use the interface over time.
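To make the contrast concrete, the sketch below computes precision and recall for a single query alongside two of the interaction metrics listed above, mean task time and error rate, from hypothetical session records. The `Session` fields are assumptions about what an evaluation log might contain; time to learn and retention would require comparing such records across repeated sessions.

```python
from dataclasses import dataclass
from statistics import mean


def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision = hits / retrieved, recall = hits / relevant."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


@dataclass
class Session:
    task_time_s: float  # time to complete one benchmark task
    actions: int        # number of user actions in the session
    errors: int         # how many of those actions were errors


def interaction_metrics(sessions: list[Session]) -> dict[str, float]:
    """Mean task time and overall error rate across sessions."""
    total_actions = sum(s.actions for s in sessions)
    total_errors = sum(s.errors for s in sessions)
    return {
        "mean_task_time_s": mean(s.task_time_s for s in sessions),
        "error_rate": total_errors / total_actions if total_actions else 0.0,
    }
```

A user who needs only one good document may be well served by a result list with low recall, which is why the time and error measures can say more about an interactive system than recall does.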

Empirical data involving human users are time-consuming to gather and difficult to draw conclusions from. This is due partly to variation in users' characteristics and motivations and partly to the broad scope of information access activities. Formal psychological studies usually uncover only narrow conclusions within restricted contexts. For example, quantities such as the time it takes a user to select an item from a fixed menu under various conditions have been characterized empirically, but variations in interaction behavior for complex tasks such as information access are difficult to account for accurately. A more informal evaluation approach is heuristic evaluation, in which user interface affordances are assessed in terms of more general properties and without concern for statistically significant results.