Mental Processes

The nerve impulses are processed in specific areas of the brain that appear to have evolved at different times to provide different types of information. The time-domain response works quickly, primarily aiding the direction-sensing mechanism, and is older in evolutionary terms. The frequency-domain response works more slowly, aiding the determination of pitch and timbre, and evolved later, presumably as speech evolved.

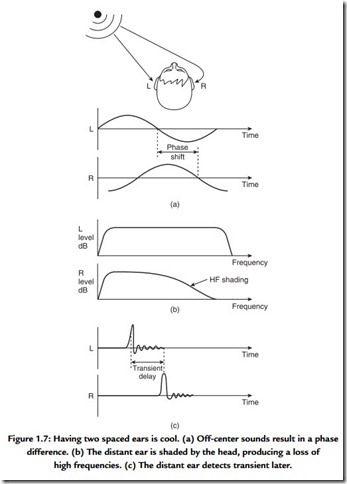

The earliest use of hearing was as a survival mechanism to augment vision. The most important aspect of the hearing mechanism was the ability to determine the location of the sound source. Figure 1.7 shows that the brain can examine several possible differences between the signals reaching the two ears. In Figure 1.7(a), a phase shift is apparent. In Figure 1.7(b), the distant ear is shaded by the head, resulting in a different frequency response compared to the nearer ear. In Figure 1.7(c), a transient sound arrives later at the more distant ear. The interaural phase, delay, and level mechanisms vary in their effectiveness depending on the nature of the sound to be located. At some point a fuzzy logic decision has to be made as to how the information from these different mechanisms will be weighted.

There will be considerable variation with frequency in the phase shift between the ears. At a low frequency such as 30 Hz, the wavelength is around 11.5 m, so the ear spacing is only a small fraction of a wavelength and this mechanism must be quite weak at low frequencies. At high frequencies the ear spacing is many wavelengths, producing a confusing and complex phase relationship. This suggests a frequency limit of around 1500 Hz, which has been confirmed experimentally.
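The arithmetic behind this argument can be sketched in a few lines. The following is a minimal illustration only: the speed of sound (343 m/s) and the ear spacing (0.2 m) are assumed values that do not come from the text, and the function names are hypothetical. The phase figure is the largest shift a straight-line path difference equal to the ear spacing could produce, ignoring diffraction around the head, so it does not attempt to reproduce the 1500 Hz limit exactly.

```python
# Assumed values, not taken from the text.
SPEED_OF_SOUND = 343.0  # m/s, air at roughly room temperature
EAR_SPACING = 0.2       # m, a rough straight-line ear-to-ear distance


def wavelength_m(frequency_hz, c=SPEED_OF_SOUND):
    """Wavelength in metres of a tone at the given frequency."""
    return c / frequency_hz


def max_interaural_phase_deg(frequency_hz, spacing=EAR_SPACING, c=SPEED_OF_SOUND):
    """Largest phase shift (degrees) between the ears for a source directly
    to one side, treating the path difference as the full ear spacing."""
    return 360.0 * spacing / wavelength_m(frequency_hz, c)


for f in (30, 300, 1500, 6000):
    print(f"{f:5d} Hz: wavelength {wavelength_m(f):7.3f} m, "
          f"max phase shift {max_interaural_phase_deg(f):7.1f} degrees")
```

Under these assumptions the phase shift at 30 Hz is only a few degrees, while at several kilohertz it exceeds a full cycle, so the same measured phase could correspond to more than one source direction.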

At low and middle frequencies, sound will diffract round the head sufficiently well that there will be no significant difference between the levels at the two ears. Only at high frequencies does sound become directional enough for the head to shade the distant ear, causing what is called interaural intensity difference.

Phase differences are only useful at low frequencies and shading only works at high frequencies. Fortunately, real-world noises and sounds are broadband and often contain transients. Timbral, broadband, and transient sounds differ from tones in that they contain many different frequencies. Pure tones are rare in nature.

A transient has a unique aperiodic waveform, which, as Figure 1.7(c) shows, suffers no ambiguity in the assessment of interaural delay (IAD) between two versions. Note that a one-degree change in sound location causes an IAD of around 10 μs. The smallest detectable IAD is a remarkable 6 μs. This should be the criterion for spatial reproduction accuracy.
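The order of magnitude of these figures can be checked with a simple path-difference model. This is a rough sketch, not a model of the head: it assumes the extra path to the far ear is the ear spacing times the sine of the source angle, with an assumed spacing of 0.2 m and speed of sound of 343 m/s. Both values and the function names are illustrative assumptions, not taken from the text.

```python
import math

# Assumed values, not taken from the text.
SPEED_OF_SOUND = 343.0  # m/s
EAR_SPACING = 0.2       # m


def interaural_delay_us(angle_deg, spacing=EAR_SPACING, c=SPEED_OF_SOUND):
    """Interaural delay in microseconds for a source at angle_deg from
    straight ahead, using path difference = spacing * sin(angle)."""
    return spacing * math.sin(math.radians(angle_deg)) / c * 1e6


def angle_for_delay_deg(delay_us, spacing=EAR_SPACING, c=SPEED_OF_SOUND):
    """Inverse of interaural_delay_us: source angle for a given delay."""
    return math.degrees(math.asin(delay_us * 1e-6 * c / spacing))


one_degree_iad = interaural_delay_us(1.0)  # roughly 10 microseconds
smallest_angle = angle_for_delay_deg(6.0)  # roughly 0.6 degrees
print(f"IAD for a 1 degree shift: {one_degree_iad:.1f} us")
print(f"Angle corresponding to a 6 us IAD: {smallest_angle:.2f} degrees")
```

With these assumed dimensions, a one-degree shift near the front gives an IAD close to the 10 μs quoted above, and a 6 μs delay corresponds to a little over half a degree.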

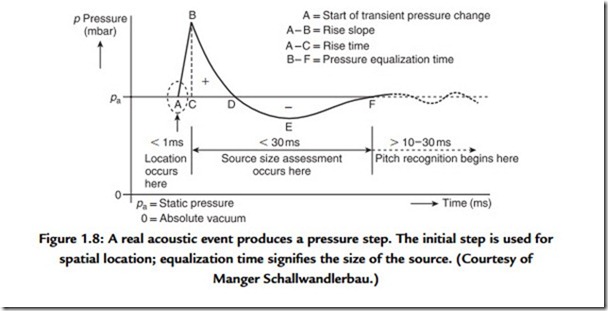

Transient noises produce a one-off pressure step whose source is accurately and instinctively located. Figure 1.8 shows an idealized transient pressure waveform following an acoustic event. Only the initial transient pressure change is required for location. The time of arrival of the transient at the two ears will be different and will locate the source laterally within a processing delay of around a millisecond.
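To illustrate why an aperiodic transient gives an unambiguous arrival-time difference, the sketch below estimates the delay between two copies of an idealized transient by cross-correlation. This is a conventional signal-processing technique used here for illustration only; it is not a claim about how the auditory system performs the comparison. The waveform shape, the 192 kHz sample rate, and all names are hypothetical choices.

```python
import math

SAMPLE_RATE = 192_000  # Hz, hypothetical; chosen so one sample is about 5.2 us


def transient(n, onset, decay=0.05):
    """Idealized transient: silence, then a pressure step that decays
    exponentially back toward ambient."""
    return [0.0 if i < onset else math.exp(-decay * (i - onset))
            for i in range(n)]


def best_lag(left, right, max_lag):
    """Lag in samples that maximizes the cross-correlation of the two
    signals; a positive lag means `right` arrives later than `left`."""
    n = len(left)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best, best_score = lag, score
    return best


left = transient(400, onset=100)
right = transient(400, onset=103)  # same event, three samples later
lag = best_lag(left, right, max_lag=20)
delay_us = lag / SAMPLE_RATE * 1e6
print(f"Estimated delay: {lag} samples = {delay_us:.3f} us")
```

Because the transient does not repeat, the correlation has a single peak. A steady tone would instead produce a peak at every whole period, which is the ambiguity that limits the phase mechanism at high frequencies.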

Following the event that generated the transient, the air pressure equalizes. The time taken for this equalization varies and allows the listener to establish the likely size of the sound source. The larger the source, the longer the pressure–equalization time. Only after this does the frequency analysis mechanism tell anything about the pitch and timbre of the sound.

The aforementioned results suggest that anything in a sound reproduction system that impairs the reproduction of a transient pressure change will damage localization and the assessment of the pressure–equalization time. Clearly, in an audio system that claims to offer any degree of precision, every component must be able to reproduce transients accurately and must have at least a minimum phase characteristic if it cannot be phase linear. In this respect, digital audio represents a distinct technical performance advantage, although much of this is later lost in poor transducer design, especially in loudspeakers.