DIGITAL AUDIO

The sounds we hear and the sounds we generate are analog signals. A microphone picks up voice and music and produces an analog signal to be amplified or stored. We have amplifiers and speakers that will accurately reproduce the sounds. But the real challenge with audio is capturing, storing, or recording the sounds for later reproduction. With today’s digital techniques, we can not only store voice and music more accurately than before, but also transmit them more easily by radio, TV, or the Internet.

Past Recording Media

Edison invented the phonograph in the late 1800s. This was the first device ever conceived to record voice. A microphone converted the sound into vibrations that moved a sharp needle in direct accordance with the sound. The needle cut a varying groove in the wax coating on a rotating cylinder. Later, a needle placed in the groove moved the diaphragm in a horn speaker that translated the movement into sound. The quality was poor but it worked, and the concept was soon translated into what we know as a phonograph record. The record disc is rotated while a needle cuts a spiral groove into the plastic that accurately captures the sound. To reproduce the sound, the disc is rotated at the same speed and a needle is placed in the groove. A transducer converts the movement of the needle in the groove into an analog signal that is amplified and sent to a speaker.

Early records spun at a speed of 78 rpm and could only hold a few minutes of sound. Later a smaller 45-rpm record was invented to play a few minutes of music at a lower cost. Then a larger long-playing record spinning at only 33⅓ rpm was produced that could hold an hour or so of sound. Stereo, or two-channel sound, was also effectively recorded into a V-shaped groove in the record.

Records were soon upstaged by magnetic tape. A plastic such as Mylar was coated with a powdered iron or ferrite magnetic material. The music or sound was then applied to a magnetic coil that put a magnetic pattern on the tape representing the sound. A coil passed near the tape would convert the magnetic pattern variations into a small voltage to recover the sound. Reel-to-reel tape was popular for a while, but magnetic tape became the recording medium of choice for years once the Philips cassette tape was invented. Other formats like the ever-popular 8-track unit were also used.

All of these formats worked well but had their limitations. Record grooves wore out with usage causing the high-frequency response to be eroded. Scratches and dirt on the record also produced noise. As for tape, it had good frequency response but had its own noise that could not be reduced below a certain level. The dynamic range of both was poor.

Digital technology has solved all these problems. Now the most popular medium is the compact disc (CD), which records sound in full digital format. It has very low, practically undetectable noise, wide frequency response, and a very long life. The cost is also low.

Digital sound is also routinely stored in computer memories like flash EEPROMs and transmitted over the Internet. With digital techniques, sound can also be processed with digital signal processing (DSP) operations such as compression, filtering, and equalization.

Digitizing Sound

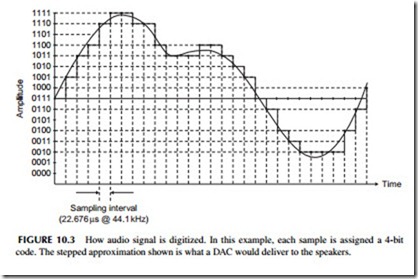

Most digital sound and music is digitized with a sampling rate of 44.1 kHz or 48 kHz. Recall the Nyquist requirement: to capture and retain the frequency content accurately, the analog signal has to be sampled at a rate of at least twice the highest frequency in the sound. Since most music and the human ear cut off at about 20 kHz, any sampling rate greater than 2 × 20 kHz = 40 kHz will satisfy that requirement. The rate of 44.1 kHz was standardized for CDs and 48 kHz for professional recordings. At 44.1 kHz, a sample is taken every 1/44,100 s = 22.676 microseconds.
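The Nyquist arithmetic above can be checked with a short Python sketch. The function names are illustrative only and are not part of any standard library.

```python
def min_sampling_rate_hz(max_freq_hz):
    """Nyquist requirement: sample at a rate of at least twice the highest frequency."""
    return 2 * max_freq_hz


def sample_period_us(rate_hz):
    """Time between successive samples, in microseconds."""
    return 1e6 / rate_hz


# Highest audio frequency assumed in the text: 20 kHz.
print(f"Minimum sampling rate: {min_sampling_rate_hz(20_000)} Hz")

# Sample spacing at the two standard rates named in the text.
for rate_hz in (44_100, 48_000):
    print(f"{rate_hz} Hz -> one sample every {sample_period_us(rate_hz):.3f} microseconds")
```

Both standard rates sit comfortably above the 40-kHz minimum, which leaves some room for the filter that removes frequencies above 20 kHz before sampling.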

Figure 10.3 shows the sampling of an analog signal. The smooth curve is the analog music or voice. At each vertical interval a sample is taken. In this example, a 4-bit ADC is used so there are 2^4 = 16 possible levels. Note the 4-bit code associated with each sample amplitude. The binary samples are stored for later playback. When sent to a DAC, the samples will reproduce the audio in a stepped approximation shown overlaid with the analog signal. Some low-pass filtering of that signal will produce a sound which almost duplicates the original.
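The sampling and stepped reconstruction described above can be imitated in a few lines of Python. This is only a sketch under stated assumptions: the input is a 1-kHz sine wave scaled to the range -1.0 to +1.0, the `adc` and `dac` functions are idealized stand-ins for real converter chips, and the DAC returns the midpoint of each step.

```python
import math

BITS = 4
SAMPLE_RATE_HZ = 44_100
TONE_HZ = 1_000  # assumed test tone, not taken from the figure


def adc(voltage, bits=BITS):
    """Idealized ADC: map a voltage in [-1.0, +1.0] to an unsigned integer code."""
    levels = 2 ** bits
    code = int((voltage + 1.0) / 2.0 * levels)
    return min(max(code, 0), levels - 1)  # clamp to the valid code range


def dac(code, bits=BITS):
    """Idealized DAC: map a code back to the midpoint of its voltage step."""
    levels = 2 ** bits
    return (code + 0.5) / levels * 2.0 - 1.0


# Take a handful of samples of the tone and show the stored 4-bit codes.
samples = [math.sin(2 * math.pi * TONE_HZ * n / SAMPLE_RATE_HZ) for n in range(8)]
for voltage in samples:
    code = adc(voltage)
    print(f"{voltage:+.3f} V -> {code:04b} -> {dac(code):+.3f} V")
```

With only 16 levels, each reconstructed value can differ from the original by up to half a step, which is the stepped error that the low-pass filter smooths out.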

Digitizing audio usually results in each sample producing a 16-bit word. With 16 bits you can represent 2^16 = 65,536 levels. That produces a dynamic range from the highest to lowest amplitude levels of 65,536 to 1. On the decibel (dB) scale, that is a range of 96 dB, far greater than the dynamic range of any previous analog recording method. And noise is virtually nonexistent. It is so low no one can hear it. The overall result is a highly accurate way to capture sound and music. The 16-bit words can then be conveniently stored in a memory, or captured on a compact disc.
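The 96-dB figure follows from expressing the ratio of amplitude levels in decibels, using 20 times the base-10 logarithm of the ratio. A minimal Python sketch, with illustrative function names:

```python
import math


def amplitude_levels(bits):
    """Number of distinct levels a word of the given size can represent."""
    return 2 ** bits


def dynamic_range_db(bits):
    """Ratio of the largest to the smallest level, expressed in decibels."""
    return 20 * math.log10(amplitude_levels(bits))


for bits in (4, 8, 16):
    print(f"{bits:2d} bits: {amplitude_levels(bits):6d} levels, "
          f"{dynamic_range_db(bits):.1f} dB")
```

Each added bit doubles the number of levels and so adds about 6 dB of dynamic range.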

Digital Compression

Digital compression is a mathematical technique that greatly reduces the size of a digital word or bitstream so that it may be transmitted faster or stored in a smaller memory. Digitizing sound creates a huge number of bits. Assume stereo music that is sampled at a rate of 44.1 kHz to create a 16-bit word for each sample. One second of stereo music, then, produces 44,100 × 16 × 2 = 1,411,200 bits. A 3-minute song is 60 × 3 = 180 seconds long. The result is 1,411,200 × 180 = 254,016,000 bits. Since there are 8 bits per byte, the result is 31,752,000 bytes, or nearly 32 megabytes (MB). That is an enormous amount of memory for just one song. With a recording medium like the CD, which has a storage capacity of about 700 MB, that is okay. But for computers or portable music devices, it is impractical, not to mention expensive. And to transmit that over the Internet would take about 4 minutes at a 1 Mb/s rate. That is pretty slow by today’s standards.
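The storage and transmission arithmetic for the uncompressed song can be reproduced with a short Python sketch. The variable and function names are illustrative.

```python
def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Bits produced per second by uncompressed digitized audio."""
    return sample_rate_hz * bits_per_sample * channels


bit_rate = pcm_bit_rate(44_100, 16, 2)  # CD-quality stereo
song_seconds = 3 * 60                    # a 3-minute song
song_bits = bit_rate * song_seconds
song_bytes = song_bits // 8              # 8 bits per byte

link_bps = 1_000_000                     # the 1 Mb/s link from the text
transfer_minutes = song_bits / link_bps / 60

print(f"Bit rate:      {bit_rate:,} bits per second")
print(f"Song size:     {song_bits:,} bits = {song_bytes:,} bytes")
print(f"Transfer time: {transfer_minutes:.1f} minutes at 1 Mb/s")
```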

The solution to this storage and transmission problem is to compress the bitstream into fewer bits. This is done by a variety of mathematical algorithms that greatly reduce the number of bits without materially affecting the quality of the sound. The process is called digital compression. The music is compressed before it is stored or transmitted. Then it has to be decompressed to hear the original sound.

The two most commonly used music compression algorithms are MP3 and AAC. MP3 is short for MPEG-1 Audio Layer 3, the algorithm developed by the Moving Picture Experts Group as part of a system that compressed video as well as audio. AAC means advanced audio coding. MP3 is by far the most widely used for storing music in MP3 music players and sending music over the Internet. AAC is used in the Apple iPod and iPhone and on the iTunes site to send music. It is also part of the later MPEG-2 and MPEG-4 video compression formats. Both methods reduce the number of bits to roughly a tenth of the original, greatly speeding up transmission and easing storage requirements. There are many more compression standards out there, but these are by far the most used and the ones you will most likely encounter.

To perform the compression process you actually need a special CPU or processor. It is typically a special DSP device programmed with the algorithm for either compressing or decompressing the audio.

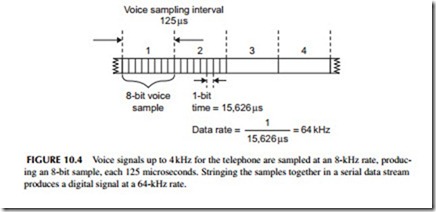

There are also a number of compression methods used just for voice. Voice compression was created to produce signals for telephony. Most phone systems assume a maximum voice frequency of 4 kHz. The most common digitizing rate is twice that, or 8 kHz, so a sample is taken every 1/8000 s = 125 μs. Eight-bit samples are typical. If you digitize voice, creating a stream of samples in serial format, the signal would look like that in Figure 10.4. Each sample produces an 8-bit word in which each bit is 125/8 = 15.625 μs long. That translates to a serial data rate of 1/15.625 μs = 64 kbps. This takes up too much bandwidth in a telephone system, so compression is used. The International Telecommunication Union (ITU), an international standards organization, has created a whole family of voice coding standards. These are designated G.711, G.723, G.729, and others. G.711 defines the basic 64-kbps format, while compression algorithms such as G.729 reduce the bit rate for transmission to about 8 kbps. You will see them used in VoIP (voice over Internet Protocol) digital phones, which are gradually replacing regular old-style analog phones.
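The telephone voice figures can be worked through in Python as a quick check. The names below are illustrative, and the 8-kbps target is the approximate compressed rate quoted in the text.

```python
SAMPLE_RATE_HZ = 8_000     # twice the assumed 4-kHz maximum voice frequency
BITS_PER_SAMPLE = 8

sample_period_us = 1e6 / SAMPLE_RATE_HZ               # time between samples
bit_time_us = sample_period_us / BITS_PER_SAMPLE      # duration of one serial bit
bit_rate_bps = SAMPLE_RATE_HZ * BITS_PER_SAMPLE       # uncompressed serial rate

compressed_bps = 8_000                                # approximate rate after compression
compression_ratio = bit_rate_bps / compressed_bps

print(f"Sample period:     {sample_period_us} microseconds")
print(f"Bit time:          {bit_time_us} microseconds")
print(f"Uncompressed rate: {bit_rate_bps // 1000} kbps")
print(f"Compression ratio: {compression_ratio:.0f} to 1")
```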

There are many other forms of audio compression. Another common one is Dolby Digital or AC-3 that is used in digital movie theater presentations and some DVD players.

How an MP3 Player Works

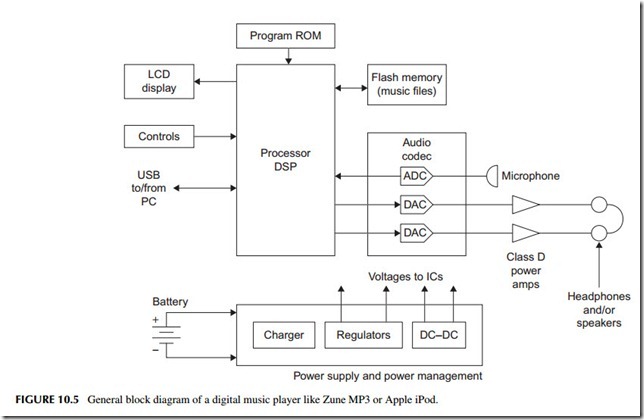

MP3 players such as the Microsoft Zune or Apple iPod are amazing electronic systems in a hand-held package. They all take the general form shown in Figure 10.5. At the heart of the player is a processor. It may be a general-purpose processor or a DSP. In some cases, two processors are used: one handles the player buttons, controls, display, and other general housekeeping functions, while a separate DSP handles the compression/decompression. A ROM holds the general control program for the device. A large flash EEPROM is used to store the music in compressed form. Some devices also provide for an external flash memory plug-in device to hold more music. A USB port is the main I/O interface to connect to a PC for the music downloads.

The codec in the device is the coder-decoder. This chip holds the ADC to digitize music from an external source such as a microphone (coder) and the DACs that decode or translate the decompressed audio back into the original analog music for amplification. Class-D switching power amplifiers are used for the headphones to reduce power consumption. One or more internal speakers may also be used. Other circuits include the LCD display and its drivers and LED back-lights if used. A power management chip is normally used to provide multiple DC voltages to the different circuits and to manage power to conserve it. This section also contains the battery charger.

The Compact Disc

The compact disc (CD) is by far the most popular digital audio medium in use today. It has been around since the 1980s and is still going strong. It has almost entirely replaced the Philips cassette magnetic tape cartridges and vinyl phonograph discs. About the only competition the CD has is from flash EEPROM devices such as those used to store MP3/AAC music for portable music players.

You have probably seen a CD. Let’s add a little background so you will appreciate the CD. It is a 4.72-inch (120-mm) diameter disc made of plastic. It is about 0.05 inch (1.2 mm) thick and has a hole in the center for mounting on the motor that spins the disc. It is actually a sandwich of clear plastic and a thin metal layer for reflecting light.

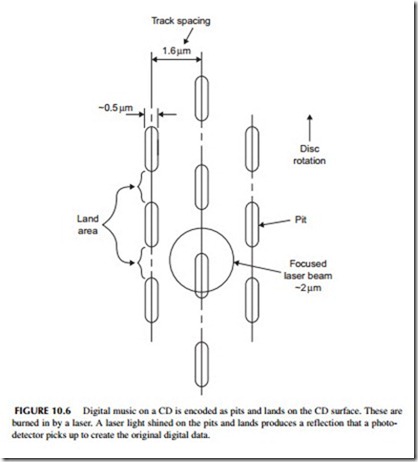

The music is recorded on the disc in digital format. A laser actually burns pits into the plastic representing the 1’s and 0’s of the digital data (see Figure 10.6). These pits are extremely small, about 0.5 μm wide (a micron, designated μm, is 1-millionth of a meter). The flat areas between the pits are called lands. A binary 1 occurs at a transition from a pit to a land or vice versa, while the stretch of pit or land between transitions represents a string of binary 0s. The binary data is recorded as a continuous spiral track, with adjacent turns spaced about 1.6 μm apart, starting at the center and working outward. That is a very efficient way to store the data, but it means that the motor speed must vary to ensure a steady bit rate when the music is played back. Because each turn of the spiral is shorter near the center of the disc than at the outer edge, the disc must spin faster when the pickup is near the center and slower when it is near the outer edge. A speed control mechanism makes this adjustment to keep the recovered data rate constant.
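The reason the motor speed must change can be illustrated with a rough Python calculation. The linear track speed and the inner and outer track radii below are assumptions chosen for illustration, not values given in the text.

```python
import math


def rpm_for_linear_velocity(linear_velocity_m_s, radius_m):
    """Rotation speed needed so the track passes the pickup at a fixed linear speed."""
    revolutions_per_second = linear_velocity_m_s / (2 * math.pi * radius_m)
    return revolutions_per_second * 60


LINEAR_VELOCITY_M_S = 1.3  # assumed track speed past the pickup
INNER_RADIUS_M = 0.025     # assumed radius where the recorded track starts
OUTER_RADIUS_M = 0.058     # assumed radius where the recorded track ends

inner_rpm = rpm_for_linear_velocity(LINEAR_VELOCITY_M_S, INNER_RADIUS_M)
outer_rpm = rpm_for_linear_velocity(LINEAR_VELOCITY_M_S, OUTER_RADIUS_M)

print(f"Near the center:     about {inner_rpm:.0f} rpm")
print(f"Near the outer edge: about {outer_rpm:.0f} rpm")
```

Under these assumptions the disc turns more than twice as fast at the start of the track as at the end, while the bits pass the pickup at the same rate throughout.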

The music on a CD is derived from two stereo channels of music with a frequency response from 20 Hz to 20 kHz. Digitization occurs at the standard 44.1-kHz rate. Each sample is 16 bits long. The 16-bit words from the left and right channels are alternated and formatted into a serial data stream that occurs at a rate of 44.1 kHz × 16 × 2 = 1.4112 Mbps. The 16-bit words are then encoded in a special way. First, they undergo an error detection and correction encoding scheme using what is called cross-interleaved Reed-Solomon code (CIRC). This coding helps detect errors in reading the disc caused by dirt, scratches, or other distortion. The CIRC adds extra bits that are used to find the errors and fix them prior to playback.

Next, the serial data string is processed using what is called eight-to-fourteen modulation (EFM). Each 8-bit piece of data is translated into a 14-bit word by a look-up table. EFM formats the data for the pit-and-land encoding scheme. Finally, the completed data is formatted into frames 588 bits long and occurring at a rate of 4.32 Mbps. That is the speed of the serial data coming from the pickup assembly in a CD player before processing.
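The jump from the 1.4112-Mbps audio rate to the 4.32-Mbps rate at the pickup can be traced with a Python sketch. The 588-bit frame length comes from the text. The frame layout constants are assumptions drawn from the CD audio standard and are not stated in the text: six stereo sample pairs per frame, 33 eight-bit symbols per frame (audio, error correction, and control), a 24-bit synchronization pattern, and 3 merging bits after the pattern and after each 14-bit EFM word.

```python
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2
FRAME_BITS_FROM_TEXT = 588

# Assumed frame layout (not given in the text).
STEREO_SAMPLES_PER_FRAME = 6
SYMBOLS_PER_FRAME = 33
EFM_WORD_BITS = 14
MERGING_BITS = 3
SYNC_BITS = 24

audio_bit_rate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS
frames_per_second = SAMPLE_RATE_HZ // STEREO_SAMPLES_PER_FRAME

# Bits on the disc for one frame: sync pattern plus every symbol after EFM.
frame_bits = (SYNC_BITS + MERGING_BITS
              + SYMBOLS_PER_FRAME * (EFM_WORD_BITS + MERGING_BITS))

channel_bit_rate = frames_per_second * frame_bits
overhead = channel_bit_rate / audio_bit_rate

print(f"Audio bit rate:   {audio_bit_rate:,} bits per second")
print(f"Frames:           {frames_per_second:,} per second, {frame_bits} bits each")
print(f"Pickup bit rate:  {channel_bit_rate:,} bits per second")
print(f"Bits on disc per audio bit: {overhead:.2f}")
```

Under these assumptions the frame length works out to the same 588 bits quoted in the text, and roughly three bits are read from the disc for every bit of audio delivered.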

To recover the audio from the CD, the disc is put into the player and a motor rotates it. Refer to Figure 10.7. A laser beam is shined on the disc as indicated in Figure 10.6. The reflections from the pits and lands produce an optical light pattern that is picked up by a photodetector that converts the light variations into the 4.32 Mbps bitstream. A motor control system varies the speed of the motor to keep the data rate steady. The data stream goes to a batch of processing circuits. First, the EFM is removed, and then a CIRC decoder identifies and repairs any errors and recovers the original 1.41-Mbps data stream. A demultiplexer separates the left- and right-channel 16-bit words and sends them to the DACs, where the original analog music is recovered and sent to the power amplifiers and speakers.
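The alternation of left and right words, and the demultiplexer that undoes it, can be sketched in Python. This is a simplified illustration that ignores the error correction and EFM stages. It assumes the left-channel word comes first in each pair, and the sample values are made up.

```python
def interleave(left_words, right_words):
    """Alternate left and right 16-bit words into one serial stream."""
    stream = []
    for left, right in zip(left_words, right_words):
        stream.extend((left, right))
    return stream


def demultiplex(stream):
    """Separate an alternating stream back into left and right channels."""
    return stream[0::2], stream[1::2]


# Made-up 16-bit sample values for each channel.
left_channel = [0x0001, 0x0102, 0x0203, 0x0304]
right_channel = [0xFFFF, 0xFEFD, 0xFDFC, 0xFCFB]

serial_stream = interleave(left_channel, right_channel)
recovered_left, recovered_right = demultiplex(serial_stream)

print("Serial stream:", [f"{word:04X}" for word in serial_stream])
print("Left to DAC:  ", [f"{word:04X}" for word in recovered_left])
print("Right to DAC: ", [f"{word:04X}" for word in recovered_right])
```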

The CD can store lots of data. Its capacity is in the 650- to 700-MB range. That translates into a maximum of about 74 minutes of audio. And this is not compressed.