Process

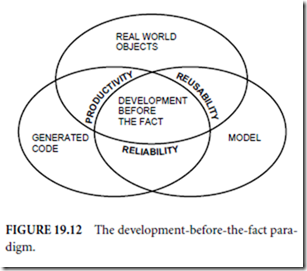

Derived from the combination of steps taken to solve the problems of traditional systems engineering and software development, each DBTF system is defined with built-in quality, built-in productivity and built-in control (like the biological superorganism). The process combines mathematical perfection with engineering precision. Its purpose is to facilitate the “doing things right in the first place” development style, avoiding the “fixing wrong things up” traditional approach. Its automation is developed with the following considerations: error prevention from the early stage of system definition, life cycle control of the system under development, and inherent reuse of highly reliable systems. The development life cycle is divided into a sequence of stages, including: requirements and design modeling by formal specification and analysis; automatic code generation based on consistent and logically complete models; test and execution; and simulation.

The first step is to define a model with the language. This step can occur in any phase of the developmental life cycle, including problem analysis, operational scenarios, and design. The model is automatically analyzed to ensure that it was defined properly. This includes static analysis for preventive properties and dynamic analysis for user intent properties. In the next stage, the generic source code generator automatically generates a fully production-ready and fully integrated software implementation for any kind or size of application, consistent with the model, for a selected target environment in the language and architecture of choice. If the selected environment has already been configured, the generator selects that environment directly; otherwise, the generator is first configured for a new language and architecture.
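The define, analyze, then generate sequence described above can be illustrated with a minimal sketch. Everything in it is hypothetical: the `Function` model type, the `analyze` check (every input must have a producer), and the `generate` stub emitter are stand-ins chosen for illustration, not the DBTF language, its axioms of control, or its generator. The only point it makes is that generation is gated on a model that passes analysis.

```python
from dataclasses import dataclass, field


@dataclass
class Function:
    """Hypothetical model node: a named function with inputs, outputs, and sub-functions."""
    name: str
    inputs: list[str]
    outputs: list[str]
    children: list["Function"] = field(default_factory=list)


def analyze(fn: Function) -> list[str]:
    """Stand-in static analysis: report data that is used or promised but never produced."""
    errors: list[str] = []
    if not fn.children:
        return errors  # a leaf has no internal structure to check
    available = set(fn.inputs)
    for child in fn.children:
        for name in child.inputs:
            if name not in available:
                errors.append(f"{fn.name}: {child.name} uses undefined input '{name}'")
        available.update(child.outputs)
        errors.extend(analyze(child))
    for name in fn.outputs:
        if name not in available:
            errors.append(f"{fn.name}: output '{name}' is never produced")
    return errors


def generate(fn: Function) -> str:
    """Emit a Python stub for the model, but only if analysis finds no errors."""
    errors = analyze(fn)
    if errors:
        raise ValueError("model rejected: " + "; ".join(errors))
    return f"def {fn.name}({', '.join(fn.inputs)}):\n    raise NotImplementedError\n"


# A well-formed model and one with an interface error (illustrative data only).
good = Function("make_toast", ["bread"], ["toast"], [
    Function("slice_bread", ["bread"], ["slices"]),
    Function("heat", ["slices"], ["toast"]),
])
bad = Function("make_toast", ["bread"], ["toast"], [
    Function("heat", ["slices"], ["toast"]),  # 'slices' is never produced
])
```

Calling `generate(good)` returns a stub, while `generate(bad)` raises before any code is emitted, which is the sense in which the error is caught at definition time rather than during testing.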

Because of its open architecture, the generator can be configured to reside on any new architecture (or interface to any outside environment), e.g., a language, a communications package, an internet interface, a database package, or an operating system of choice; or it can be configured to interface to the user’s own legacy code. Once configured for a new environment, an existing system can be automatically regenerated to reside on that new environment. This open architecture approach, which lends itself to true component-based development, provides more flexibility to the user when changing requirements or architectures, or when moving from an older technology to a newer one.
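As a rough illustration of configuring a generator for a new environment and then regenerating an unchanged model, consider the sketch below. The `Operation` model, the `Generator` class, and the two target functions are assumptions made for this example; they do not reflect the actual generator's interfaces.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Operation:
    """Hypothetical target-independent model of one operation."""
    name: str
    params: tuple[str, ...]


class Generator:
    """Toy generator: one model, many configurable target environments."""

    def __init__(self) -> None:
        self._targets: dict[str, Callable[[Operation], str]] = {}

    def configure(self, target: str, emit: Callable[[Operation], str]) -> None:
        """Register (or replace) the emitter for a target environment."""
        self._targets[target] = emit

    def generate(self, op: Operation, target: str) -> str:
        """Generate code for an already configured target."""
        if target not in self._targets:
            raise KeyError(f"generator not configured for {target!r}")
        return self._targets[target](op)


def python_target(op: Operation) -> str:
    return f"def {op.name}({', '.join(op.params)}): ..."


def c_target(op: Operation) -> str:
    # Assumes every parameter is an int, purely to keep the sketch short.
    params = ", ".join(f"int {p}" for p in op.params)
    return f"void {op.name}({params});"


model = Operation("open_valve", ("valve_id",))
gen = Generator()
gen.configure("python", python_target)
python_code = gen.generate(model, "python")

# Moving to a new environment changes the configuration, not the model.
gen.configure("c", c_target)
c_code = gen.generate(model, "c")
```

The model object is never edited when the target changes; only the generator's configuration grows.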

It then becomes possible to execute the resulting system. If it is software, the system can undergo testing for further user intent errors. It becomes operational after testing. Application changes are made to the requirements definition—not to the code. Target architecture changes are made to the configuration of the generator environment (which generates one of a possible set of implementations from the model)—not to the code. If the real system is hardware or peopleware, the software system serves as a simulation upon which the real system can be based. Once a system has been developed, the system and the process used to develop it are analyzed to understand how to improve the next round of system development.

Seamless integration is provided throughout from systems to software, requirements to design to code to tests to other requirements and back again; level to level, and layer to layer. The developer is able to trace from requirements to code and back again.
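Tracing from requirements to code and back amounts to keeping a bidirectional mapping between the two. The sketch below is one simple way to picture that; the `TraceMatrix` class and the requirement and artifact identifiers are invented for illustration and are not part of any DBTF tool.

```python
from collections import defaultdict


class TraceMatrix:
    """Toy bidirectional trace between requirement IDs and generated artifacts."""

    def __init__(self) -> None:
        self._forward: dict[str, set[str]] = defaultdict(set)
        self._backward: dict[str, set[str]] = defaultdict(set)

    def link(self, requirement: str, artifact: str) -> None:
        """Record that an artifact was derived from a requirement."""
        self._forward[requirement].add(artifact)
        self._backward[artifact].add(requirement)

    def artifacts_for(self, requirement: str) -> list[str]:
        """Trace forward: requirement to code."""
        return sorted(self._forward.get(requirement, ()))

    def requirements_for(self, artifact: str) -> list[str]:
        """Trace backward: code to requirement."""
        return sorted(self._backward.get(artifact, ()))


trace = TraceMatrix()
trace.link("REQ-1", "valve.c:open_valve")
trace.link("REQ-1", "valve_test.c:test_open_valve")
trace.link("REQ-2", "valve.c:open_valve")
```

In a setting where code is generated from the model, such links could be recorded by the generator itself rather than maintained by hand, which is what makes the trace dependable in both directions.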

Given an automation that has these capabilities, it should be no surprise that it has been defined with itself and that it continues to automatically generate itself as it evolves with changing architectures and changing technologies. Table 19.2 contains a summary of some of the differences between the more modern preventative paradigm and the traditional approach.

A relatively small set of things is needed to master the concepts behind DBTF. Everything else can be derived, leading to powerful reuse capabilities for building systems. It quickly becomes clear why it is no longer necessary to add features to the language or changes to a developed application in an ad hoc fashion, since each new aspect is ultimately and inherently derived from its mathematical foundations.

Although this approach addresses many of the challenges and solves many of the problems of traditional software environments, it could take time before this paradigm (or one with similar properties) is adopted by more mainstream users, since it requires a change to the corporate culture. The same has been true in related fields. When the computer was first invented and manual calculators were used for almost every computation, it has been said that hardware pioneers like Ken Olsen, founder of Digital Equipment Corporation, believed there would only be a need for four computers in the world. It took a while for the idea of computers and what they were capable of to catch on. Such could be the case for a more advanced software paradigm as well. That is, it could take a while for software to truly become something that is manufactured rather than handcrafted, as it is in today’s traditional development environments.

Select the Right Paradigm and then Automate

Where software engineering fails is in its inability to grasp that not only must the right paradigm (out of many paradigms) be selected, but the paradigm must also be part of an environment that provides an inherent and integrated automated means to solve the problem at hand. What this means is that the paradigm must be coupled with an integrated set of tools with which to implement the results of utilizing the paradigm to design and develop the system. Essentially, the paradigm generates the model and a toolset must be provided to generate the system.

Businesses that expected a big productivity payoff from investing in technology are, in many cases, still waiting to collect. A substantial part of the problem stems from the manner in which organizations are building their automated systems. Although hardware capabilities have increased dramatically, organizations are still mired in the same methodologies that saw the rise of the behemoth mainframes. Obsolete methodologies simply cannot build new systems.

There are other changes as well. Users demand much more functionality and flexibility in their systems. And given the nature of many of the problems to be solved by this new technology, these systems must also be error free.

Where the biological superorganism has built-in control mechanisms fostering quality and productivity, up until now, the silicon superorganism has had none. Hence, the productivity paradox. Often, the only way to solve major issues or to survive tough times is through nontraditional paths or innovation. One must create new methods or new environments for using new methods. Innovation for success often starts with a look at mistakes from traditional systems. The first step is to recognize the true root problems, then categorize them according to how they might be prevented. Derivation of practical solutions is a logical next step. Iterations of the process entail looking for new problem areas in terms of the new solution environment and repeating the scenario. That is how DBTF came into being.

With DBTF, all aspects of system design and development are integrated with one systems language and its associated automation. Reuse naturally takes place throughout the life cycle. Objects, no matter how complex, can be reused and integrated. Environment configurations for different kinds of architectures can be reused. A newly developed system can be safely reused to increase even further the productivity of the systems developed with it.

This preventative approach can support a user in addressing many of the challenges presented in today’s software development environments. There will, however, always be more to do to capitalize on this technology. That is part of what makes a technology like this so interesting to work with. Because it is based on a different premise or set of assumptions (axioms), a significant number of things can and will change because of it. There is the continuing opportunity for new research projects and new products. Some problems can be solved, because of the language, that could not be solved before. Software development, as we have known it, will never be the same. Many things will no longer need to exist; they will, in fact, be rendered extinct, just as happens through natural selection in a biological system. Techniques for bridging the gap from one phase of the life cycle to another become obsolete. Techniques for maintaining the source code as a separate process are no longer needed, since the source is automatically generated from the requirements specification. Verification, too, becomes obsolete. Techniques for managing paper documents give way to entering requirements and their changes directly into the requirements specification database. Testing procedures and tools for finding most errors are no longer needed because those errors no longer exist. Tools to support programming as a manual process are no longer needed.

Compared to development using traditional techniques, the productivity of DBTF-developed systems has been shown to be significantly greater (DOD, 1992; Krut, 1993; Ouyang, 1995; Keyes, 2000). Upon further analysis, it was discovered that the larger and more complex the system, the greater the productivity, which is the opposite of what one finds with traditional systems development. This is, in part, because of DBTF’s high degree of support for reuse. The larger a system, the more opportunity it has to capitalize on reuse. As more reuse is employed, productivity continues to increase. Measuring productivity becomes a process of relativity, that is, relative to the last system developed.

Capitalizing on reusables within a DBTF environment is an ongoing area of research interest (Hamilton, 2003–2004). An example is understanding the relationship between types of reusables and metrics. This takes into consideration that a reusable can be categorized in many ways. One is according to the manner in which its use saves time (which translates to how it impacts cost and schedules). More intelligent tradeoffs can then be made. The more we know about how some kinds of reusables are used, the more information we have to estimate costs for an overall system. Keep in mind, also, that the traditional methods for estimating time and costs for developing software are no longer valid for estimating systems developed with preventative techniques.

There are other reasons for this higher productivity as well, such as the savings realized and time saved due to tasks and processes that are no longer necessary with the use of this preventative approach. There is less to learn and less to do—less analysis, little or no implementation, less testing, less to manage, less to document, less to maintain, and less to integrate. This is because a major part of these areas has been automated or because of what inherently takes place because of the nature of the formal systems language. The paradigm shift occurs once a designer realizes that many of the old tools are no longer needed to design and develop a system. For example, with one formal semantic language to define and integrate all aspects of a system, diverse modeling languages (and methodologies for using them), each of which defines only part of a system, are no longer necessary. There is no longer a need to reconcile multiple techniques with semantics that interfere with each other.

Software is a relatively young technological field that is still in a constant state of change. Changing from a traditional software environment to a preventative one is like going from the typewriter to the word processor. Whenever there is any major change, there is always the initial overhead needed for learning the new way of doing things. But, as with the word processor, progress begets progress.

In the end, it is the combination of the methodology and the technology that executes it that forms the foundation of successful software. Software is so ingrained in our society that its success or failure will dramatically influence both the operation and the success of businesses and government. For that reason, today’s decisions about systems engineering and software development will have far reaching effects.

Collective experience strongly confirms that quality and productivity increase with the increased use of properties of preventative systems. In contrast to the “better late than never” after-the-fact philosophy, the preventative philosophy is to solve, or if possible prevent, a given problem as early as possible. This means finding a problem statically is better than finding it dynamically. Preventing it by the way a system is defined is even better. Better yet is not having to define (and build) it at all.

Reusing a reliable system is better than reusing one that is not reliable. Automated reuse is better than manual reuse. Inherent reuse is better than automated reuse. Reuse that can evolve is better than one that cannot evolve. Best of all is reuse that approaches wisdom itself. Then, have the wisdom to use it. One such wisdom is that of applying a common systems paradigm to systems and software, unifying their understanding with a commonly held set of system semantics.

DBTF embodies a preventative systems approach, the language supports its representation, and its automation supports its application and use. Each is evolutionary (in fact, recursively so), with experience feeding the theory, the theory feeding the language, which in turn feeds the automation. All are used, in concert, to design systems and build software. The answer continues to be in the results, just as in the biological system, and the goal is that the systems of tomorrow will inherit the best of the systems of today.

Defining Terms

Database management system (DBMS): The computer program that is used to control and provide rapid access to a database. A language is used with the DBMS to control the functions that a DBMS provides. For example, SQL is the language that is used to control all of the functions that a relational DBMS provides for its users, including data definition, data retrieval, data manipulation, access control, data sharing, and data integrity.

Graphical user interface (GUI): The ultimate user interface, by which the deployed system interfaces with the computer most productively, using visual means. Graphical user interfaces provide a series of intuitive, colorful, and graphical mechanisms which enable the end-user to view, update, and manipulate information.

Formal system: A system defined in terms of a known set of axioms (or assumptions); it is therefore mathematically based (for example, a DBTF system is based on a set of axioms of control). Some of its properties are that it is consistent and logically complete. A system is consistent if it can be shown that no assumption of the system contradicts any other assumption of that system. A system is logically complete if the assumptions of the method completely define a given set of properties. This assures that a model of the method has that set of properties. Other properties of the models defined with the method may not be provable from the method’s assumptions. A logically complete system has a semantic basis (i.e., a way of expressing the meaning of that system’s objects). In terms of the semantics of a DBTF system, this means it has no interface errors and is unambiguous, contains what is necessary and sufficient, and has a unique state identification.

Interface: A seam between objects, or programs, or systems. It is at this juncture that many errors surface. Software can interface with hardware, humans, and other software.

Methodology: A set of procedures, precepts, and constructs for the construction of software.

Metrics: A series of formulas which measure such things as quality and productivity.

Operating system: A program that manages the application programs that run under its control.

Software architecture: The structure and relationships among the components of software.

Acknowledgment

The author is indebted to Jessica Keyes for her helpful suggestions.

References

Boehm, B.W. 1981. Software Engineering Economics. Prentice-Hall, Englewood Cliffs, NJ.

Booch, G., Rumbaugh, J., and Jacobson, I. 1999. The Unified Modeling Language User Guide. Addison-Wesley, Reading, MA.

Department of Defense. 1992. Software engineering tools experiment-Final report. Vol. 1, Experiment Summary, Table 1, p. 9. Strategic Defense Initiative, Washington, DC.

Goldberg, A. and Robson, D. 1983. Smalltalk-80 the Language and its Implementation. Addison-Wesley, Reading, MA.

Gosling, J., Joy, B., and Steele, G. 1996. The Java Language Specification, Addison-Wesley, Reading, MA.

Hamilton, M. 1994. Development before the fact in action. Electronic Design, June 13. ES.

Hamilton, M. 1994. Inside development before the fact. Electronic Design, April 4. ES.

Hamilton, M. (In press). System Oriented Objects: Development Before the Fact.

Hamilton, M. and Hackler, W.R. 2000. Towards Cost Effective and Timely End-to-End Testing, HTI, prepared for Army Research Laboratory, Contract No. DAKF11-99-P-1236.

HTI Technical Report, Common Software Interface for Common Guidance Systems, U.S. Army DAAE30-02-D-1020 and DAAB07-98-D-H502/0180, Precision Guided Munitions, Under the direction of Cliff McLain, ARES, and Nigel Gray, COTR ARDEC, Picatinny Arsenal, NJ, 2003–2004.

Harbison, S.P. and Steele, G.L., Jr. 1997. C A Reference Manual. Prentice-Hall, Englewood Cliffs, NJ.

Jones, T.C. 1977. Program quality and programmer productivity. IBM Tech. Report TR02.764, Jan.: 80, Santa Teresa Labs., San Jose, CA.

Keyes, J. The Ultimate Internet Developers Sourcebook, Chapter 42, Developing Web Applications with 001, AMACOM.

Keyes, J. 2000. Internet Management, chapters 30–33, on 001-developed systems for the Internet, Auerbach.

Krut, B. Jr. 1993. Integrating 001 Tool Support in the Feature-Oriented Domain Analysis Methodology (CMU/SEI-93-TR-11, ESC-TR-93-188). Pittsburgh, PA: Software Engineering Institute, Carnegie-Mellon University.

Lickly, D. J. 1974. HAL/S Language Specification, Intermetrics, prepared for NASA Lyndon Johnson Space Center.

Lientz, B.P. and Swanson, E.B. 1980. Software Maintenance Management. Addison-Wesley, Reading, MA.

Martin, J. and Finkelstein, C.B. 1981. Information Engineering. Savant Inst., Carnforth, Lancashire, UK.

Meyer, B. 1992. Eiffel the Language. Prentice-Hall, New York.

NSQE Experiment: http://hometown.aol.com/ONeillDon/index.html, Director of Defense Research and Engineering (DOD Software Technology Strategy), 1992–2002.

Ouyang, M., Golay, M. W. 1995. An Integrated Formal Approach for Developing High Quality Software of Safety-Critical Systems, Massachusetts Institute of Technology, Cambridge, MA, Report No. MIT-ANP-TR-035.

Software Engineering Inst. 1991. Capability Maturity Model. Carnegie-Mellon University, Pittsburgh, PA.

Stefik, M. and Bobrow, D.G. 1985. Object-Oriented Programming: Themes and Variations. AI Magazine, Xerox Corporation, pp. 40–62.

Stroustrup, B. 1994. The Design and Evolution of C++. AT&T Bell Laboratories, Murray Hill, NJ.

Stroustrup, B. 1997. The C++ Programming Language, Addison-Wesley, Reading, MA.

Yourdon, E. and Constantine, L. 1978. Structured Design: Fundamentals of a Discipline of Computer Program and Systems Design, Yourdon Press, New York.

Further Information

Hamilton, M. and Hackler, R. 1990. 001: A rapid development approach for rapid prototyping based on a system that supports its own life cycle. IEEE Proceedings, First International Workshop on Rapid System Prototyping (Research Triangle Park, NC) pp. 46–62, June 4.

Hamilton, M. 1986. Zero-defect software: The elusive goal. IEEE Spectrum 23(3):48–53, March.

Hamilton, M. and Zeldin, S. 1976. Higher Order Software: A Methodology for Defining Software. IEEE Transactions on Software Engineering, vol. SE-2, no. 1.

McCauley, B. 1993. Software Development Tools in the 1990s. AIS Security Technology for Space Operations Conference, Houston, Texas.

Schindler, M. 1990. Computer Aided Software Design. John Wiley & Sons, New York.

The 001 Tool Suite Reference Manual, Version 3, Jan. 1993. Hamilton Technologies Inc., Cambridge, MA.