ARC, A RISC Computer

In the remainder of this chapter, we will study a model architecture that is based on the commercial Scalable Processor Architecture (SPARC) processor that was developed at Sun Microsystems in the mid-1980s. The SPARC has become a popular architecture since its introduction, which is partly due to its “open” nature: the full definition of the SPARC architecture is made readily available to the public (SPARC, 1992). In this chapter, we will look at just a subset of the SPARC, which we call “A RISC Computer” (ARC). “RISC” is yet another acronym, for reduced instruction set computer, which is discussed in Chapter 9. The ARC has most of the important features of the SPARC architecture, but without some of the more complex features that are present in a commercial processor.

4.2.1 ARC MEMORY

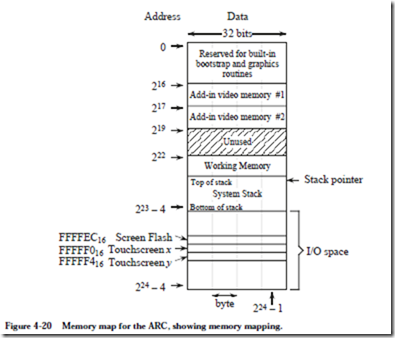

The ARC is a 32-bit machine with byte-addressable memory: it can manipulate 32-bit data types, but all data is stored in memory as bytes, and the address of a 32-bit word is the address of its byte that has the lowest address. As described earlier in the chapter in the context of Figure 4-4, the ARC has a 32-bit address space, which in our example architecture is divided into distinct regions for use by the operating system code, user program code, the system stack (used to store temporary data), and input and output (I/O). These memory regions are detailed as follows:

• The lowest $2^{11} = 2048$ addresses of the memory map are reserved for use by the operating system.

• The user space is where a user’s assembled program is loaded, and can grow during operation from location 2048 until it meets up with the system stack.

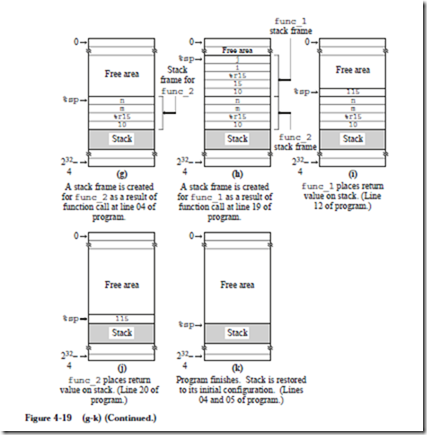

• The system stack starts at location $2^{31} - 4$ and grows toward lower addresses. The reason for this organization of programs growing upward in memory and the system stack growing downward can be seen in Figure 4-4: it accommodates both large programs with small stacks and small programs with large stacks.

• The portion of the address space between $2^{31}$ and $2^{32} - 1$ is reserved for I/O devices. Each device has a collection of memory addresses where its data is stored, which is referred to as “memory mapped I/O.”

The ARC has several data types (byte, halfword, integer, etc.), but for now we will consider only the 32-bit integer data type. Each integer is stored in memory as a collection of four bytes. ARC is a big-endian architecture, so the highest-order byte is stored at the lowest address. The largest possible byte address in the ARC is $2^{32} - 1$, so the address of the highest word in the memory map is three bytes lower than this, or $2^{32} - 4$.
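The memory map and the big-endian byte ordering described above can be sketched in a few lines of Python. This is only an illustrative model, not part of the ARC definition: the names `region`, `store_word`, and `load_word` are hypothetical, memory is modeled as a dictionary from byte address to byte value, and unwritten bytes are assumed to read as zero.

```python
# Memory-map boundaries as described in the text.
USER_BASE = 2**11          # first address above the operating system region
STACK_TOP = 2**31 - 4      # initial top of the system stack
IO_BASE = 2**31            # start of the memory-mapped I/O region
ADDR_LIMIT = 2**32         # one past the largest byte address


def region(addr):
    """Name the region of the memory map that contains a byte address."""
    if not 0 <= addr < ADDR_LIMIT:
        raise ValueError("address outside the 32-bit address space")
    if addr < USER_BASE:
        return "operating system"
    if addr < IO_BASE:
        return "user program / system stack"
    return "memory-mapped I/O"


def store_word(memory, addr, value):
    """Store a 32-bit word big-endian: highest-order byte at the lowest address."""
    data = (value & 0xFFFFFFFF).to_bytes(4, "big")
    for i, byte in enumerate(data):
        memory[addr + i] = byte


def load_word(memory, addr):
    """Reassemble a 32-bit word from the four bytes starting at addr."""
    data = bytes(memory.get(addr + i, 0) for i in range(4))  # assumption: unwritten bytes are 0
    return int.from_bytes(data, "big")
```

Storing 0x12345678 at address 2048 in this model places 0x12 at address 2048 and 0x78 at address 2051, and the word is addressed by its lowest byte address.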

4.2.2 ARC INSTRUCTION SET

As we get into the details of the ARC instruction set, let us start with an overview of the CPU:

• The ARC has 32 32-bit general-purpose registers, as well as a PC and an IR.

• There is a Processor Status Register (PSR) that contains information about the state of the processor, including information about the results of arithmetic operations. The “arithmetic flags” in the PSR are called the condition codes. They indicate whether an arithmetic operation resulted in a zero value (z), a negative value (n), a carry out from the 32-bit ALU (c), or an overflow (v). The v bit is set when the result of the arithmetic operation is too large to be handled by the ALU.

• All instructions are one word (32 bits) in size.

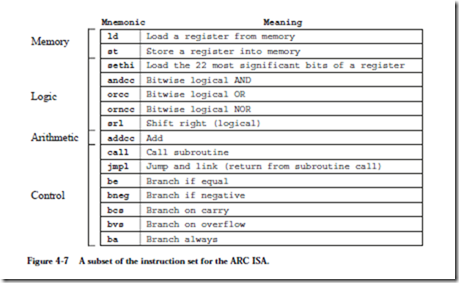

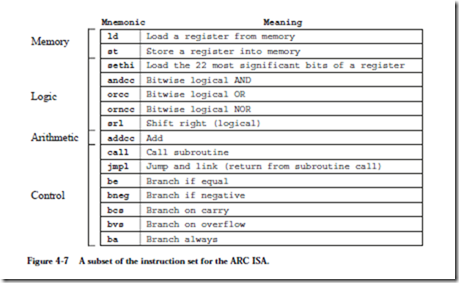

• The ARC is a load–store machine: the only allowable memory access operations load a value into one of the registers, or store a value contained in one of the registers into a memory location. All arithmetic operations operate on values that are contained in registers, and the results are placed in a register.

There are approximately 200 instructions in the SPARC instruction set, upon which the ARC instruction set is based. A subset of 15 instructions is shown in Figure 4-7. Each instruction is represented by a mnemonic, which is a name that represents the instruction.

Data Movement Instructions

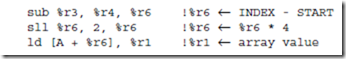

The first two instructions, ld (load) and st (store), transfer a word between the main memory and one of the ARC registers. These are the only instructions that can access memory in the ARC.

The sethi instruction sets the 22 most significant bits (MSBs) of a register with a 22-bit constant contained within the instruction. It is commonly used for constructing an arbitrary 32-bit constant in a register, in conjunction with another instruction that sets the low-order 10 bits of the register.
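As a rough sketch of how a 32-bit constant splits into the two pieces just described, the Python below separates a value into its high 22 bits and low 10 bits and models the effect of sethi on a register. The function names are hypothetical, and combining the low 10 bits with a bitwise OR is an assumption about which second instruction is used.

```python
def split_constant(value):
    """Split a 32-bit constant into its high 22 bits and low 10 bits."""
    value &= 0xFFFFFFFF
    hi22 = value >> 10      # operand for sethi
    lo10 = value & 0x3FF    # supplied by a second instruction
    return hi22, lo10


def sethi(imm22):
    """Model sethi: imm22 goes into the high 22 bits; the low 10 bits are zero."""
    return (imm22 & 0x3FFFFF) << 10


# Rebuild an arbitrary constant from the two pieces.
hi, lo = split_constant(0xDEADBEEF)
rebuilt = sethi(hi) | lo    # assumption: the low bits are merged with an OR
```

Because sethi leaves the low 10 bits of the register zero, merging in the low-order piece cannot disturb the high 22 bits.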

Arithmetic and Logic Instructions

The andcc, orcc, and orncc instructions perform a bit-by-bit AND, OR, and NOR operation, respectively, on their operands. One of the two source operands must be in a register. The other may either be in a register, or it may be a 13-bit two’s complement constant contained in the instruction, which is sign extended to 32 bits when it is used. The result is stored in a register.

For the andcc instruction, each bit of the result is set to 1 if the corresponding bits of both operands are 1, otherwise the result bit is set to 0. For the orcc instruction, each bit of the result is set to 1 if either or both of the corresponding source operand bits are 1, otherwise the result bit is set to 0. The orncc operation is the complement of orcc, so each bit of the result is 0 if either or both of the corresponding operand bits are 1, otherwise the result bit is set to 1. The “cc” suffixes specify that after performing the operation, the condition code bits in the PSR are updated to reflect the results of the operation. In particular, the z bit is set if the result register contains all zeros, the n bit is set if the most significant bit of the result register is a 1, and the c and v flags are cleared for these particular instructions. (Why?)
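The behavior of the three logic instructions and their effect on the condition codes can be modeled as follows. This is a sketch only: `logic_cc` is a hypothetical helper, registers are modeled as Python integers masked to 32 bits, and orncc is treated as NOR, following the definition used in this chapter.

```python
MASK32 = 0xFFFFFFFF


def logic_cc(op, a, b):
    """Return (result, flags) for andcc, orcc, or orncc on 32-bit operands."""
    if op == "andcc":
        result = a & b
    elif op == "orcc":
        result = a | b
    elif op == "orncc":
        result = ~(a | b)   # NOR, as this chapter defines orncc
    else:
        raise ValueError("unknown logic instruction: " + op)
    result &= MASK32
    flags = {
        "z": int(result == 0),   # result is all zeros
        "n": result >> 31,       # most significant bit of the result
        "c": 0,                  # cleared by the logic instructions
        "v": 0,                  # cleared by the logic instructions
    }
    return result, flags
```

For example, `logic_cc("orncc", 5, 0)` complements the value 5, so the most significant bit of the result is 1 and the n flag is set.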

The shift instructions shift the contents of one register into another. The srl (shift right logical) instruction shifts a register to the right, and copies zeros into the leftmost bit(s). The sra (shift right arithmetic) instruction (not shown) shifts the original register contents to the right, placing a copy of the MSB of the original register into the newly created vacant bit(s) in the left side of the register. This results in sign-extending the number, thus preserving its arithmetic sign.
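The difference between the logical and arithmetic right shifts can be seen in a short Python model. The helper names mirror the mnemonics but are otherwise hypothetical, and rejecting shift counts outside 0 to 31 is an assumption of this sketch rather than documented ARC behavior.

```python
MASK32 = 0xFFFFFFFF


def srl(value, count):
    """Shift right logical: zeros enter from the left."""
    if not 0 <= count <= 31:
        raise ValueError("shift count must be in the range 0 to 31")
    return (value & MASK32) >> count


def sra(value, count):
    """Shift right arithmetic: copies of the original MSB enter from the left."""
    if not 0 <= count <= 31:
        raise ValueError("shift count must be in the range 0 to 31")
    value &= MASK32
    if value & 0x80000000:
        value -= 1 << 32            # reinterpret the bit pattern as a negative integer
    return (value >> count) & MASK32
```

Shifting 0x80000000 right by three bits gives 0x10000000 with srl, but 0xF0000000 with sra, since the sign bit is replicated.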

The addcc instruction performs a 32-bit two’s complement addition on its operands.

Control Instructions

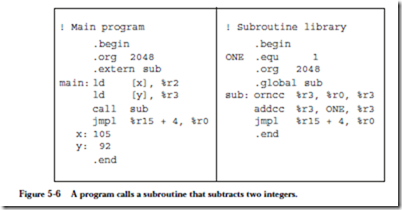

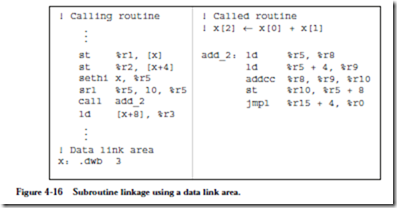

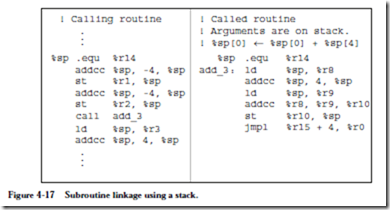

The call and jmpl instructions form a pair that is used in calling and returning from a subroutine, respectively. jmpl is also used to transfer control to another part of the program.

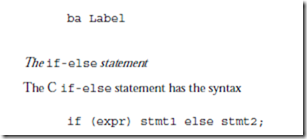

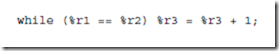

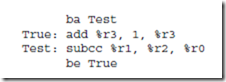

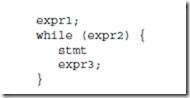

The lower five instructions are called conditional branch instructions. The be, bneg, bcs, bvs, and ba instructions cause a branch in the execution of a program. They are called conditional because they test one or more of the condition code bits in the PSR, and branch if the bits indicate the condition is met. They are used in implementing high level constructs such as goto, if-then-else, and do-while. Detailed descriptions of these instructions and examples of their usages are given in the sections that follow.

4.2.3 ARC ASSEMBLY LANGUAGE FORMAT

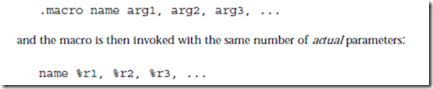

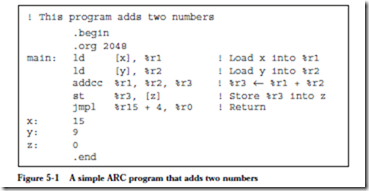

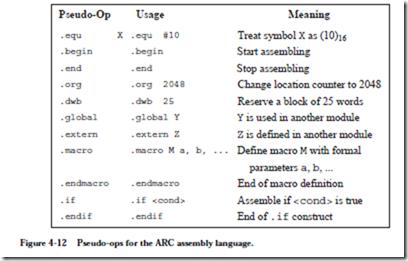

Each assembly language has its own syntax. We will follow the SPARC assembly language syntax, as shown in Figure 4-8. The format consists of four fields: an optional label field, an opcode field, one or more fields specifying the source and destination operands (if there are operands), and an optional comment field. A label consists of any combination of alphabetic or numeric characters, underscores (_), dollar signs ($), or periods (.), as long as the first character is not a digit. A label must be followed by a colon. The language is sensitive to case, and so a distinction is made between upper and lower case letters. The language is “free format” in the sense that any field can begin in any column, but the relative left-to-right ordering must be maintained.

The ARC architecture contains 32 registers labeled %r0 – %r31, each of which holds a 32-bit word. There is also a 32-bit Processor Status Register (PSR) that describes the current state of the processor, and a 32-bit program counter (PC) that keeps track of the instruction being executed, as illustrated in Figure 4-9. The PSR is labeled %psr and the PC register is labeled %pc. Register %r0 always contains the value 0, which cannot be changed. Registers %r14 and %r15 have additional uses as a stack pointer (%sp) and a link register, respectively, as described later.

Operands in an assembly language statement are separated by commas, and the destination operand always appears in the rightmost position in the operand field. Thus, the example shown in Figure 4-8 specifies adding registers %r1 and %r2, with the result placed in %r3. If %r0 appears in the destination operand field instead of %r3, the result is discarded. The default base for a numeric operand is 10, so the assembly language statement:

addcc %r1, 12, %r3

shows an operand of $(12)_{10}$ that will be added to %r1, with the result placed in %r3. Numbers are interpreted in base 10 unless preceded by “0x” or ending in “H”, either of which denotes a hexadecimal number. The comment field follows the operand field, and begins with an exclamation mark ‘!’ and terminates at the end of the line.
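The field layout and the numeric conventions just described can be illustrated with a small Python sketch that splits a statement into its four fields and interprets a numeric operand. It is not an assembler: `parse_number` and `parse_statement` are hypothetical helpers, and the label `lab_1` in the usage note is made up for the example.

```python
def parse_number(text):
    """Interpret a numeric operand: base 10 unless prefixed 0x or suffixed H."""
    if text.startswith("0x"):
        return int(text[2:], 16)
    if text.endswith("H"):
        return int(text[:-1], 16)
    return int(text, 10)


def parse_statement(line):
    """Split one statement into (label, opcode, operands, comment)."""
    code, bang, comment = line.partition("!")    # comment runs to the end of the line
    label = None
    head, colon, rest = code.partition(":")      # a label must be followed by a colon
    if colon:
        label, code = head.strip(), rest
    fields = code.split(None, 1)                 # free format: any amount of whitespace
    opcode = fields[0] if fields else None
    operands = []
    if len(fields) > 1:
        operands = [item.strip() for item in fields[1].split(",")]
    return label, opcode, operands, (comment.strip() if bang else None)
```

With these definitions, `parse_statement("lab_1: addcc %r1, 12, %r3 ! add twelve")` yields the label `lab_1`, the opcode `addcc`, the three operands with the destination last, and the comment text; `parse_number("12")` is twelve, while `parse_number("0x1F")` and `parse_number("1FH")` both denote the same hexadecimal value.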

4.2.4 ARC INSTRUCTION FORMATS

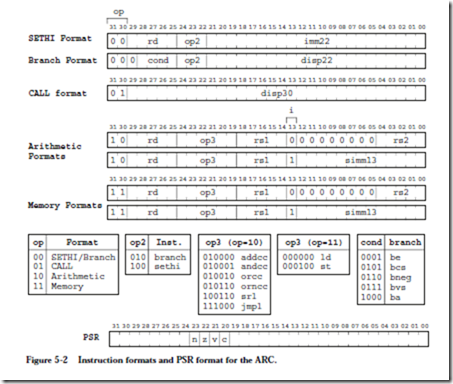

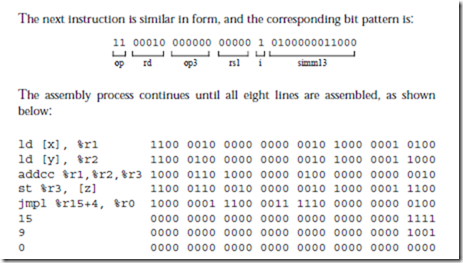

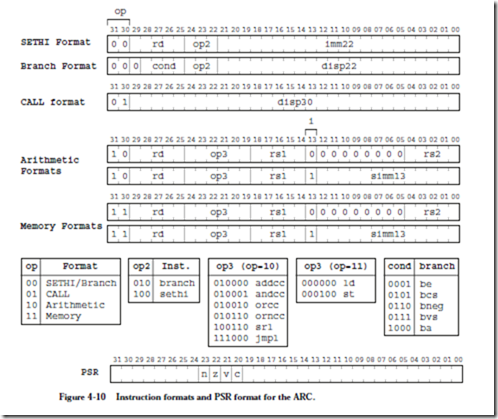

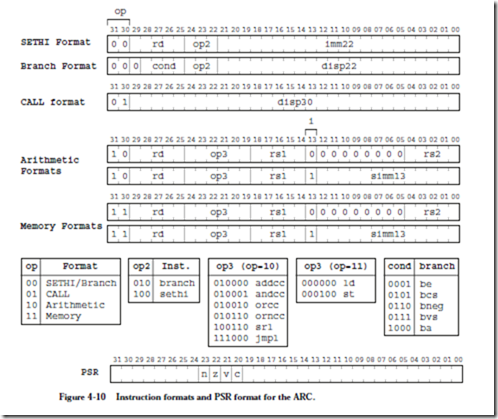

The instruction format defines how the various bit fields of an instruction are laid out by the assembler, and how they are interpreted by the ARC control unit. The ARC architecture has just a few instruction formats. The five formats are: SETHI, Branch, Call, Arithmetic, and Memory, as shown in Figure 4-10. Each instruction has a mnemonic form such as “ld,” and an opcode. A particular instruction format may have more than one opcode field, which collectively identify an instruction in one of its various forms. (Note that these five instruction formats do not directly correspond to the four instruction classifications shown in Figure 4-7.)

The leftmost two bits of each instruction form the op (opcode) field, which identifies the format. The SETHI and Branch formats both contain 00 in the op field, and so they can be considered together as the SETHI/Branch format. The actual SETHI or Branch format is determined by the bit pattern in the op2 opcode field (010 = Branch; 100 = SETHI). Bit 29 in the Branch format always contains a zero. The five-bit rd field identifies the target register for the SETHI operation.

The cond field identifies the type of branch, based on the condition code bits (n, z, v, and c) in the PSR, as indicated at the bottom of Figure 4-10. The result of executing an instruction in which the mnemonic ends with “cc” sets the condition code bits such that n=1 if the result of the operation is negative; z=1 if the result is zero; v=1 if the operation causes an overflow; and c=1 if the operation produces a carry. The instructions that do not end in “cc” do not affect the condition codes. The imm22 and disp22 fields each hold a 22-bit constant that is used as the operand for the SETHI format (for imm22) or for calculating a displacement for a branch address (for disp22).

The CALL format contains only two fields: the op field, which contains the bit pattern 01, and the disp30 field, which contains a 30-bit displacement that is used in calculating the address of the called routine.

The Arithmetic (op = 10) and Memory (op = 11) formats both make use of rd fields to identify either a source register for st, or a destination register for the remaining instructions. The rs1 field identifies the first source register, and the rs2 field identifies the second source register. The op3 opcode field identifies the instruction according to the op3 tables shown in Figure 4-10.
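The way the op and op2 fields select a format can be sketched as a small decoder. The function name is hypothetical, and the position of op2 (bits 24 through 22, immediately after the five-bit rd field) is an assumption that is consistent with the object code listed for sethi and the branch instructions later in this section.

```python
def instruction_format(word):
    """Classify a 32-bit instruction word by its op (and, for op = 00, op2) field."""
    op = (word >> 30) & 0b11            # leftmost two bits
    if op == 0b00:
        op2 = (word >> 22) & 0b111      # assumed position: bits 24..22
        if op2 == 0b010:
            return "Branch"
        if op2 == 0b100:
            return "SETHI"
        return "unknown"
    if op == 0b01:
        return "Call"
    if op == 0b10:
        return "Arithmetic"
    return "Memory"                     # op == 11
```

Applied to the object code given later for `sethi 0x304F15, %r1`, `be label`, `call sub_r`, and `addcc %r1, 5, %r1`, this decoder reports SETHI, Branch, Call, and Arithmetic, respectively.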

The simm13 field is a 13-bit immediate value that is sign extended to 32 bits for the second source when the i (immediate) field is 1. The meaning of “sign extended” is that the leftmost bit of the simm13 field (the sign bit) is copied to the left into the remaining bits that make up a 32-bit integer, before adding it to rs1 in this case. This ensures that a two’s complement negative number remains negative (and a two’s complement positive number remains positive). For instance, $(-13)_{10} = (1111111110011)_2$, and after sign extension to a 32-bit integer, we have $(11111111111111111111111111110011)_2$ which is still equivalent to $(-13)_{10}$.
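Sign extension is easy to check mechanically. The following Python sketch, with hypothetical helper names, copies the sign bit of a field into the upper bits of a 32-bit word and then reads the result back as a signed integer.

```python
MASK32 = 0xFFFFFFFF


def sign_extend(value, bits=13):
    """Copy the leftmost bit of a bits-wide field into the upper bits of a 32-bit word."""
    field_mask = (1 << bits) - 1
    value &= field_mask
    if value & (1 << (bits - 1)):        # the sign bit is 1
        value |= MASK32 & ~field_mask    # fill the upper bits with 1s
    return value


def to_signed(word):
    """Interpret a 32-bit pattern as a two's complement integer."""
    return word - (1 << 32) if word & 0x80000000 else word


extended = sign_extend(0b1111111110011)  # the 13-bit pattern for -13 from the text
```

Here `extended` is the 32-bit pattern shown in the text, and `to_signed(extended)` recovers the value -13.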

The Arithmetic instructions need two source operands and a destination operand, for a total of three operands. The Memory instructions only need two operands: one for the address and one for the data. The remaining source operand is also used for the address, however. The operands in the rs1 and rs2 fields are added to obtain the address when i = 0. When i = 1, then the rs1 field and the simm13 field are added to obtain the address. For the first few examples we will encounter, %r0 will be used for rs2 and so only the remaining source operand will be specified.
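The two ways of forming a memory address can be summarized in a short Python sketch. The function `effective_address` is hypothetical, the register file is modeled as a list of 32 integers with element 0 fixed at zero, and the result is wrapped to 32 bits as an assumption of this model.

```python
MASK32 = 0xFFFFFFFF


def effective_address(regs, rs1, i, rs2=0, simm13=0):
    """Compute the address used by a Memory format instruction."""
    if i == 0:
        offset = regs[rs2]               # register plus register
    else:
        simm13 &= 0x1FFF                 # keep the 13-bit field
        if simm13 & 0x1000:              # sign bit set: value is negative
            simm13 -= 0x2000
        offset = simm13                  # register plus sign-extended immediate
    return (regs[rs1] + offset) & MASK32


regs = [0] * 32
regs[1] = 2048
regs[2] = 16
```

With these register contents, `effective_address(regs, 1, 0, rs2=2)` and `effective_address(regs, 0, 1, simm13=2064)` both refer to address 2064. The second call uses %r0 as rs1, so only the immediate contributes.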

4.2.5 ARC DATA FORMATS

The ARC supports 12 different data formats as illustrated in Figure 4-11. The data formats are grouped into three types: signed integer, unsigned integer, and floating point. Within these types, allowable format widths are byte (8 bits), halfword (16 bits), word/singleword (32 bits), tagged word (32 bits, in which the two least significant bits form a tag and the most significant 30 bits form the value), doubleword (64 bits), and quadword (128 bits).

In reality, the ARC does not differentiate between unsigned and signed integers. Both are stored and manipulated as two’s complement integers. It is their interpretation that varies. In particular one subset of the branch instructions assumes that the value(s) being compared are signed integers, while the other subset assumes they are unsigned. Likewise, the c bit indicates unsigned integer overflow, and the v bit, signed overflow.
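The distinction between the c and v bits can be made concrete with a Python model of a 32-bit addition that sets all four condition codes. This is a sketch with a hypothetical function name: the bit patterns produced are the same for signed and unsigned operands, and only the flags distinguish the two interpretations.

```python
MASK32 = 0xFFFFFFFF


def addcc(a, b):
    """Add two 32-bit values and return (result, flags)."""
    a &= MASK32
    b &= MASK32
    total = a + b
    result = total & MASK32
    # Signed overflow: the operands have the same sign and the result's sign differs.
    overflow = ((~(a ^ b)) & (a ^ result) & 0x80000000) >> 31
    flags = {
        "n": result >> 31,       # result is negative when read as signed
        "z": int(result == 0),   # result is zero
        "v": overflow,           # signed overflow
        "c": total >> 32,        # carry out of bit 31: unsigned overflow
    }
    return result, flags
```

Adding 1 to 0x7FFFFFFF sets v but not c, because the largest positive signed value wraps to a negative one. Adding 1 to 0xFFFFFFFF sets c and z but not v, because as signed values this is simply -1 + 1 = 0.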

The tagged word uses the two least significant bits to indicate overflow, in which an attempt is made to store a value that is larger than 30 bits into the allocated 30 bits of the 32-bit word. Tagged arithmetic operations are used in languages with dynamically typed data, such as Lisp and Smalltalk. In its generic form, a 1 in either bit of the tag field indicates an overflow situation for that word. The tags can be used to ensure proper alignment conditions (that words begin on four-byte boundaries, quadwords begin on eight-byte boundaries, etc.), particularly for pointers.

The floating point formats conform to the IEEE 754-1985 standard (see Chapter 2). The special instructions that operate on the floating point formats are not described here, but can be found in (SPARC, 1992).

4.2.6 ARC INSTRUCTION DESCRIPTIONS

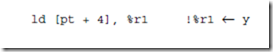

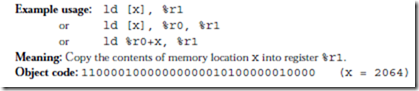

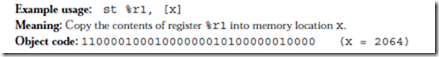

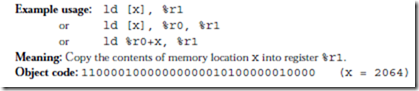

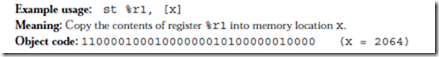

Now that we know the instruction formats, we can create detailed descriptions of the 15 instructions listed in Figure 4-7, which are given below. The translation to object code is provided only as a reference, and is described in detail in the next chapter. In the descriptions below, a reference to the contents of a memory location (for ld and st) is indicated by square brackets, as in “ld [x], %r1” which copies the contents of location x into %r1. A reference to the address of a memory location is specified directly, without brackets, as in “call sub_r,” which makes a call to subroutine sub_r. Only ld and st can access memory, therefore only ld and st use brackets. Registers are always referred to in terms of their contents, and never in terms of an address, and so there is no need to enclose references to registers in brackets.

Instruction: ld

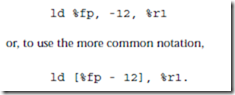

Description: Load a register from main memory. The memory address must be aligned on a word boundary (that is, the address must be evenly divisible by 4). The address is computed by adding the contents of the register in the rs1 field to either the contents of the register in the rs2 field or the value in the simm13 field, as appropriate for the context.

Instruction: st

Description: Store a register into main memory. The memory address must be aligned on a word boundary. The address is computed by adding the contents of the register in the rs1 field to either the contents of the register in the rs2 field or the value in the simm13 field, as appropriate for the context. The rd field of this instruction is actually used for the source register.

Instruction: sethi

Description: Set the high 22 bits and zero the low 10 bits of a register. If the operand is 0 and the register is %r0, then the instruction behaves as a no-op (NOP), which means that no operation takes place.

Example usage: sethi 0x304F15, %r1

Meaning: Set the high 22 bits of %r1 to $(304F15)_{16}$, and set the low 10 bits to zero.

Object code: 00000011001100000100111100010101

Instruction: andcc

Description: Bitwise AND the source operands into the destination operand. The condition codes are set according to the result.

Example usage: andcc %r1, %r2, %r3

Meaning: Logically AND %r1 and %r2 and place the result in %r3.

Object code: 10000110100010000100000000000010

Instruction: orcc

Description: Bitwise OR the source operands into the destination operand. The condition codes are set according to the result.

Example usage: orcc %r1, 1, %r1

Meaning: Set the least significant bit of %r1 to 1.

Object code: 10000010100100000110000000000001

Instruction: orncc

Description: Bitwise NOR the source operands into the destination operand. The condition codes are set according to the result.

Example usage: orncc %r1, %r0, %r1

Meaning: Complement %r1.

Object code: 10000010101100000100000000000000

Instruction: srl

Description: Shift a register to the right by 0 – 31 bits. The vacant bit positions in the left side of the shifted register are filled with 0’s.

Example usage: srl %r1, 3, %r2

Meaning: Shift %r1 right by three bits and store in %r2. Zeros are copied into the three most significant bits of %r2.

Object code: 10000101001100000110000000000011

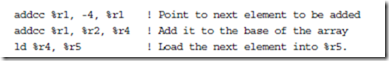

Instruction: addcc

Description: Add the source operands into the destination operand using two’s complement arithmetic. The condition codes are set according to the result.

Example usage: addcc %r1, 5, %r1

Meaning: Add 5 to %r1.

Object code: 10000010100000000110000000000101
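The object code for the Arithmetic format instructions described so far can be reproduced with a short encoder. This is a sketch under stated assumptions: `encode_arithmetic` is a hypothetical name, and the op3 values and field positions (rd in bits 29 to 25, op3 in bits 24 to 19, rs1 in bits 18 to 14, i in bit 13, and simm13 or rs2 in the low-order bits) are read off the object code listed above rather than taken from Figure 4-10.

```python
# op3 values inferred from the object code of the examples above.
OP3 = {
    "addcc": 0b010000,
    "andcc": 0b010001,
    "orcc": 0b010010,
    "orncc": 0b010110,
    "srl": 0b100110,
}


def encode_arithmetic(mnemonic, rd, rs1, rs2=None, simm13=None):
    """Assemble an Arithmetic format (op = 10) instruction into a 32-bit word."""
    if (rs2 is None) == (simm13 is None):
        raise ValueError("give exactly one of rs2 or simm13")
    word = (0b10 << 30) | (rd << 25) | (OP3[mnemonic] << 19) | (rs1 << 14)
    if simm13 is not None:
        word |= (1 << 13) | (simm13 & 0x1FFF)   # i = 1: immediate second operand
    else:
        word |= rs2                             # i = 0: register second operand
    return word


def bits(word):
    """Format a word as a 32-character binary string."""
    return format(word, "032b")
```

For instance, `bits(encode_arithmetic("addcc", rd=1, rs1=1, simm13=5))` reproduces the object code given for `addcc %r1, 5, %r1`, and the same function matches the listings for the andcc, orcc, orncc, and srl examples.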

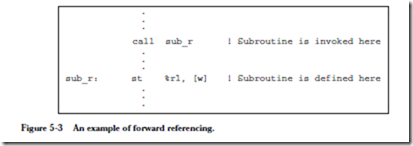

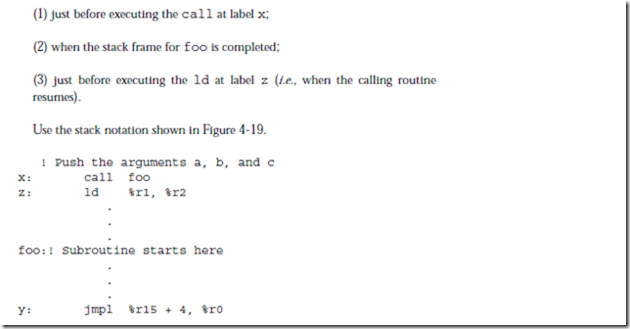

Instruction: call

Description: Call a subroutine and store the address of the current instruction (where the call itself is stored) in %r15, which effects a “call and link” operation. In the assembled code, the disp30 field in the CALL format will contain a 30-bit displacement from the address of the call instruction. The address of the next instruction to be executed is computed by adding 4 × disp30 (which shifts disp30 to the high 30 bits of the 32-bit address) to the address of the current instruction. Note that disp30 can be negative.

Example usage: call sub_r

Meaning: Call a subroutine that begins at location sub_r. For the object code shown below, sub_r is 25 words (100 bytes) farther in memory than the call instruction.

Object code: 01000000000000000000000000011001

Instruction: jmpl

Description: Jump and link (return from subroutine). Jump to a new address and store the address of the current instruction (where the jmpl instruction is located) in the destination register.

Example usage: jmpl %r15 + 4, %r0

Meaning: Return from subroutine. The value of the PC for the call instruction was previously saved in %r15, and so the return address should be computed for the instruction that follows the call, at %r15 + 4. The current address is discarded in %r0.

Object code: 10000001110000111110000000000100
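The call and return linkage can be traced with a small Python model. The function names follow the mnemonics but are hypothetical, the register file is a list of 32 integers, and the addresses used in the usage note (a call stored at location 2048, and a return instruction eight bytes into the subroutine) are made up for the example.

```python
MASK32 = 0xFFFFFFFF


def sign_extend(value, bits):
    """Interpret a bits-wide field as a signed two's complement integer."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def call(pc, disp30, regs):
    """Save the address of the call in %r15 and return the new PC."""
    regs[15] = pc
    return (pc + 4 * sign_extend(disp30, 30)) & MASK32


def jmpl(pc, regs, rs1, simm13, rd):
    """Jump to regs[rs1] + simm13 and save the address of the jmpl in rd."""
    target = (regs[rs1] + sign_extend(simm13, 13)) & MASK32
    if rd != 0:                 # %r0 always contains 0, so a write to it is discarded
        regs[rd] = pc
    return target


regs = [0] * 32
entry = call(2048, 25, regs)               # sub_r is 25 words past the call
back = jmpl(entry + 8, regs, 15, 4, 0)     # jmpl %r15 + 4, %r0
```

After these two steps, `entry` is 2148 (the call address plus 100 bytes) and `back` is 2052, the address of the instruction that follows the call.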

Instruction: be

Description: If the z condition code is 1, then branch to the address computed by adding 4 × disp22 in the Branch instruction format to the address of the current instruction. If the z condition code is 0, then control is transferred to the instruction that follows be.

Example usage: be label

Meaning: Branch to label if the z condition code is 1. For the object code shown below, label is five words (20 bytes) farther in memory than the be instruction.

Object code: 00000010100000000000000000000101

Instruction: bneg

Description: If the n condition code is 1, then branch to the address computed by adding 4 × disp22 in the Branch instruction format to the address of the current instruction. If the n condition code is 0, then control is transferred to the instruction that follows bneg.

Example usage: bneg label

Meaning: Branch to label if the n condition code is 1. For the object code shown below, label is five words farther in memory than the bneg instruction.

Object code: 00001100100000000000000000000101

Instruction: bcs

Description: If the c condition code is 1, then branch to the address computed by adding 4 × disp22 in the Branch instruction format to the address of the current instruction. If the c condition code is 0, then control is transferred to the instruction that follows bcs.

Example usage: bcs label

Meaning: Branch to label if the c condition code is 1. For the object code shown below, label is five words farther in memory than the bcs instruction.

Object code: 00001010100000000000000000000101

Instruction: bvs

Description: If the v condition code is 1, then branch to the address computed by adding 4 × disp22 in the Branch instruction format to the address of the current instruction. If the v condition code is 0, then control is transferred to the instruction that follows bvs.

Example usage: bvs label

Meaning: Branch to label if the v condition code is 1. For the object code shown below, label is five words farther in memory than the bvs instruction.

Object code: 00001110100000000000000000000101

Instruction: ba

Description: Branch to the address computed by adding 4 × disp22 in the Branch instruction format to the address of the current instruction.

Example usage: ba label

Meaning: Branch to label regardless of the settings of the condition codes. For the object code shown below, label is five words earlier in memory than the ba instruction.

Object code: 00010000101111111111111111111011
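The five branch instructions differ only in the condition they test, which the following Python sketch makes explicit. The function name and the example addresses are hypothetical, and the cond encodings and field positions (cond in bits 28 to 25, disp22 in the low 22 bits) are inferred from the object code listed above rather than taken from Figure 4-10.

```python
MASK32 = 0xFFFFFFFF

# cond encodings inferred from the object code of the examples above.
CONDITIONS = {
    0b0001: lambda f: f["z"] == 1,   # be
    0b0101: lambda f: f["c"] == 1,   # bcs
    0b0110: lambda f: f["n"] == 1,   # bneg
    0b0111: lambda f: f["v"] == 1,   # bvs
    0b1000: lambda f: True,          # ba
}


def next_pc(pc, word, flags):
    """Return the address of the next instruction after a Branch format word at pc."""
    cond = (word >> 25) & 0b1111
    disp22 = word & 0x3FFFFF
    if disp22 & (1 << 21):           # sign bit set: backward branch
        disp22 -= 1 << 22
    if CONDITIONS[cond](flags):
        return (pc + 4 * disp22) & MASK32
    return pc + 4                    # fall through to the following instruction


BE = 0b00000010100000000000000000000101   # be label, five words ahead
BA = 0b00010000101111111111111111111011   # ba label, five words back
```

With `BE` stored at address 2048, `next_pc` gives 2068 when z is 1 and 2052 when z is 0. With `BA` stored at 2068, it gives 2048 regardless of the flags.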
