TIMING

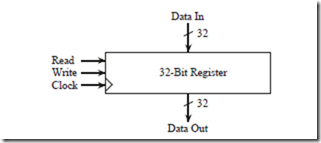

The microarchitecture operates on a two-phase clock cycle, in which the master sections of all of the registers change on the rising edge of the clock and the slave sections change on the falling edge of the clock, as shown in Figure 6-14. All of the registers use falling edge-triggered master/slave D flip-flops except for %r0, which does not need flip-flops. On the falling edge of the clock, data stored in the master sections of the registers are clocked into the slave sections. This makes the data available for operations involving the ALU. While the clock is low, the ALU, CBL, and MUX functions are performed, which settle in time for the rising edge of the clock. On the rising edge of the clock, the new values of the registers are written into the master sections. The registers settle while the clock is high, and the process then repeats.
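The two-phase behavior can be illustrated with a small software model. This is only a sketch: the class and function names are invented for illustration, and the "ALU" is reduced to an arbitrary Python function applied while the clock is low.

```python
class MasterSlaveRegister:
    """Toy model of one master/slave register (illustrative names only)."""

    def __init__(self, value=0):
        self.master = value  # written on the rising edge of the clock
        self.slave = value   # read by the ALU; updated on the falling edge

    def falling_edge(self):
        # Data in the master section is clocked into the slave section.
        self.slave = self.master

    def rising_edge(self, new_value):
        # The settled result is written into the master section.
        self.master = new_value


def run_cycle(reg, alu):
    """One clock cycle: falling edge, ALU work while low, then rising edge."""
    reg.falling_edge()
    result = alu(reg.slave)  # computed while the clock is low
    reg.rising_edge(result)


counter = MasterSlaveRegister(0)
for _ in range(3):
    run_cycle(counter, lambda x: x + 1)
# After three cycles the master holds 3, while the slave still holds the
# value that was visible to the ALU during the third cycle (2).
```

The split between `master` and `slave` is what lets a register serve as both a source and a destination in the same cycle without the new value racing back into the ALU.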

DEVELOPING THE MICROPROGRAM

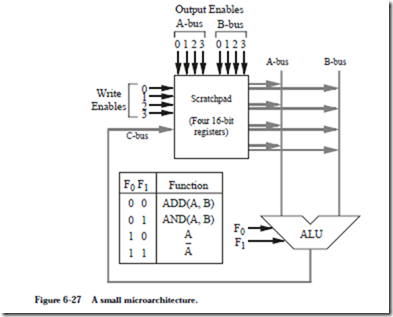

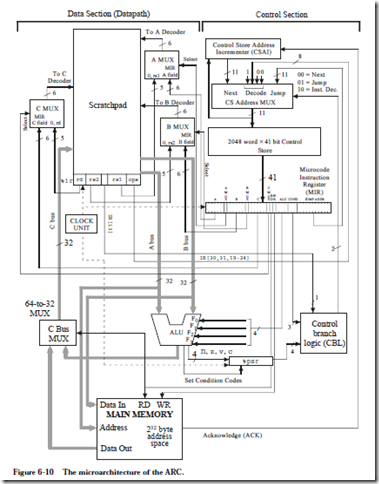

In a microprogrammed architecture, instructions are interpreted by the microprogram in the control store. The microprogram is often referred to as firmware because it bridges the gap between the hardware and the software. The microarchitecture shown in Figure 6-10 needs firmware in order to execute ARC instructions, and one possible coding is described in this section.

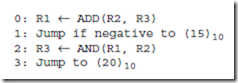

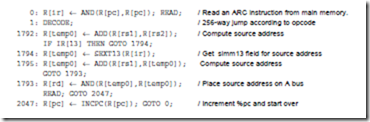

A portion of a microprogram that implements the fetch-execute cycle for the ARC is shown in Figure 6-15. In the control store, each microstatement is stored in coded form (1’s and 0’s) in a single microword. For simplicity, the micro-assembly language shown in Figure 6-15 is loosely defined here, and we will leave out labels, pseudo-ops, etc., that we would normally associate with a full-featured assembly language. Translation to the 41-bit format used in the microstore is not difficult to perform by hand for a small microprogram, and is frequently performed manually in practice (as we will do here) rather than creating a suite of software tools for such a small program.

Although our micro-assembly language is indeed an assembly language, it is not the same kind of assembly language as the ARC that we studied in Chapter 4.

The ARC assembly language is visible to the user, and is used for coding general purpose programs. Our micro-assembly language is used for coding firmware, and is not visible to the user. The sole purpose of the firmware is to interpret a user-visible instruction set. A change to the instruction set involves changes to the firmware, whereas a change in user-level software has no influence on the firmware.

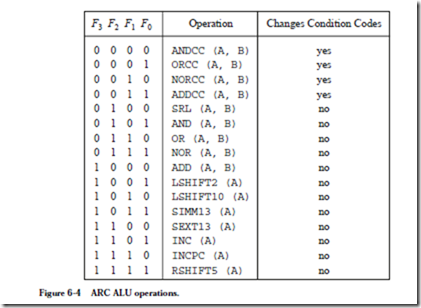

Each statement in the microprogram shown in Figure 6-15 is preceded by a decimal number that indicates the address of the corresponding microword in the 2048-word control store. The address is followed by a colon. The operation statements follow the address, and are terminated by semicolons. An optional comment follows the operation field and begins with a slash ‘/.’ The comment terminates at the end of the line. More than one operation is allowed per line, as long as all of the operations can be performed in a single instruction cycle. The ALU operations come from Figure 6-4, and there are a few others as we will see. Note that the 65 statements are shown in logical sequence, rather than in numerical sequence.
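The statement layout just described (address, colon, semicolon-terminated operations, optional slash comment) is simple enough to split mechanically. The sketch below assumes that neither ':' nor '/' appears inside an operation; the sample line is hypothetical and is not copied from Figure 6-15.

```python
def parse_microstatement(line):
    """Split one microassembly line into (address, operations, comment)."""
    address_text, _, rest = line.partition(":")   # decimal address before ':'
    code, _, comment = rest.partition("/")        # comment starts at '/'
    operations = [op.strip() for op in code.split(";") if op.strip()]
    return int(address_text), operations, comment.strip()


# Hypothetical statement in the style described above.
example = "0: AND(R[pc],R[pc]); READ; / Fetch the next instruction"
address, operations, comment = parse_microstatement(example)
# address    -> 0
# operations -> ['AND(R[pc],R[pc])', 'READ']
# comment    -> 'Fetch the next instruction'
```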

Before the microprogram begins execution, the PC is set up with the starting address of a program that has been loaded into the main memory. This may happen as the result of an initialization sequence when the computer is powered on, or by the operating system during the normal course of operation.

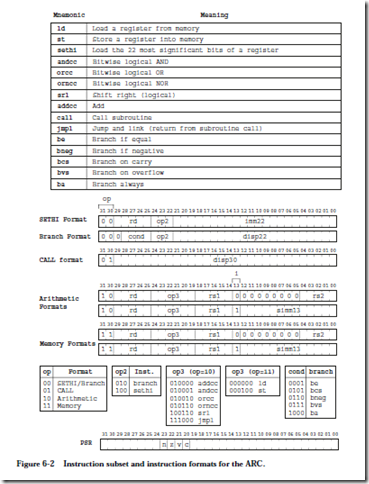

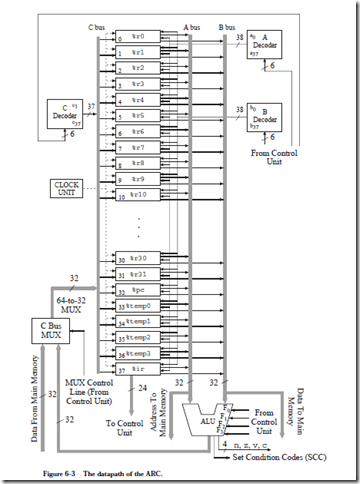

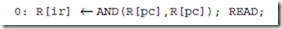

The first task in the execution of a user-level program is to bring the instruction pointed to by the PC from the main memory into the IR. Recall from Figure 6-10 that the address lines to main memory are taken from the A bus. In line 0, the PC is loaded onto the A bus, and a Read operation is initiated to memory. The notation “R[x]” means “register x,” in which x is replaced with one of the registers in the datapath, and so “R[1]” means “register %r1,” “R[ir]” means “register %ir,” and “R[rs1]” means the register that appears in the 5-bit rs1 field of an instruction (refer to Figure 6-2.)

The expression “AND(R[pc],R[pc])” simply performs a logical AND of %pc with itself in a literal interpretation. This operation is not very useful in a logical sense, but what we are interested in are the side effects. In order to place %pc onto the A bus, we have to choose an ALU operation that uses the A bus but does not affect the condition codes. There is a host of alternative choices that can be used, and the AND approach is arbitrarily chosen here. Note that the result of the AND operation is discarded because the C bus MUX in Figure 6-10 only allows the data output from main memory onto the C bus during a read operation.

A read operation normally takes more time to complete than the time required for one microinstruction to execute. The access time of main memory can vary depending on the memory organization, as we will see in Chapter 7. In order to account for variations in the access times of memory, the control store address incrementer (CSAI) does not increment the address until an acknowledge (ACK) signal is sent which indicates the memory has completed its operation.

Flow of control within the microprogram defaults to the next higher numbered statement unless a GOTO operation or a DECODE operation is encountered, and so microword 1 (line 1) is read into the MIR on the next cycle. Notice that some of the microcode statements in Figure 6-15 take up more than one line on the page, but are part of a single microinstruction. See, for example, lines 1283 and 1601.

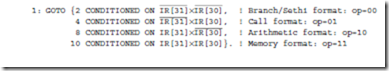

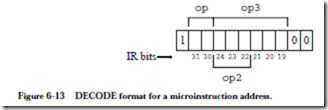

Now that the instruction is in the IR as a result of the read operation in line 0, the next step is to decode the opcode fields. This is performed by taking a 256-way branch into the microcode as indicated by the DECODE keyword in line 1 of the microprogram. The 11-bit pattern for the branch is constructed by appending a 1 to the left of bits 30 and 31 of the IR, followed by bits 19-24 of the IR, followed by the pattern 00. After the opcode fields are decoded, execution of the microcode continues according to which of the 15 ARC instructions is being interpreted.

As an example of how the decode operation works, consider the addcc instruction. According to the Arithmetic instruction format in Figure 6-2, the op field is 10 and the op3 field is 010000. If we append a 1 to the left of the op bit pattern, followed by the op3 bit pattern, followed by 00, the DECODE address is (11001000000)₂ = (1600)₁₀. This means that the microinstructions that interpret the addcc instruction begin at control store location 1600.
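The construction of the DECODE address can be checked with a few lines of code. This is a minimal sketch: the helper names are made up, and the instruction word built here sets only the op and op3 fields, leaving every other bit zero.

```python
def decode_address(ir):
    """Build the 11-bit DECODE address: 1, IR[31:30], IR[24:19], 00."""
    op = (ir >> 30) & 0b11         # bits 31 and 30
    op3 = (ir >> 19) & 0b111111    # bits 24 through 19
    return (1 << 10) | (op << 8) | (op3 << 2)


def word_with_opcode(op, op3):
    """Hypothetical helper: an instruction word with only op and op3 set."""
    return (op << 30) | (op3 << 19)


addcc = word_with_opcode(0b10, 0b010000)
address = decode_address(addcc)
# address -> 1600, that is, 0b11001000000
```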

A number of DECODE addresses should never arise in practice. There is no Arithmetic instruction that corresponds to the invalid op3 field 111111, but if this situation does arise, possibly due to an errant program, then a microstore routine should be placed at the corresponding DECODE address (11011111100)₂ = (1788)₁₀ in order to deal with the illegal instruction. These locations are left blank in the microprogram shown in Figure 6-15.

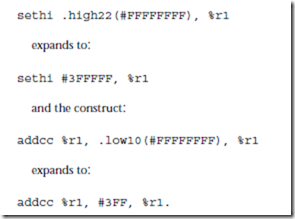

Instructions in the SETHI/Branch and Call formats do not have op3 fields. The SETHI/Branch formats have op and op2 fields, and the Call format has only the op field. In order to maintain a simple decoding mechanism, we can create duplicate entries in the control store. Consider the SETHI format. If we follow the rule for constructing the DECODE address, then the DECODE address will have a 1 in the leftmost position, followed by 00 for the op field, followed by 100 which identifies SETHI in bit positions 19 – 21, followed by the bits in positions 22 – 24 of the IR, followed by 00, resulting in the bit pattern 100100xxx00 where xxx can take on any value, depending on the imm22 field. There are eight possible bit patterns for the xxx bits, and so we need to have duplicate SETHI codes at locations 10010000000, 10010000100, 10010001000, 10010001100, 10010010000, 10010010100, 10010011000, and 10010011100. DECODE addresses for the Branch and CALL formats are constructed in duplicate locations in a similar manner. Only the lowest addressed version of each set of duplicate codes is shown in Figure 6-15.
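The eight duplicate SETHI locations follow directly from letting the xxx bits range over all values. The short sketch below enumerates them; the function name is illustrative only.

```python
def sethi_decode_addresses():
    """All DECODE addresses of the form 1 00 100 xxx 00."""
    base = (1 << 10) | (0b00 << 8) | (0b100 << 5)  # 1, op = 00, op2 = 100
    return [base | (xxx << 2) for xxx in range(8)]


addresses = sethi_decode_addresses()
patterns = [format(a, "011b") for a in addresses]
# patterns[0] -> '10010000000', patterns[-1] -> '10010011100'
```

Because the low two bits are always 00, the duplicates are spaced four microwords apart, which matches the four-word allocation per DECODE entry.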

Although this method of decoding is fast and simple, a large amount of control store memory is wasted. An alternative approach that wastes much less space is to modify the decoder for the control store so that all possible branch patterns for SETHI point to the same location, and the same for the Branch and Call format instructions. For our microarchitecture, we will stay with the simpler approach and pay the price of having a large control store.

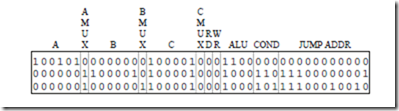

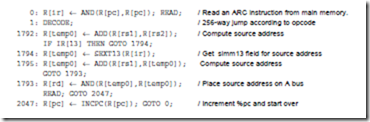

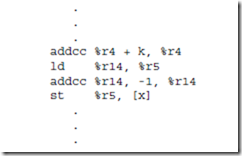

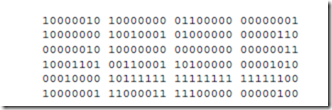

Consider now how the ld instruction is interpreted. The microprogram begins at location 0, and at this point does not know that ld is the instruction that the PC points to in main memory. Line 0 of the microprogram begins the Read operation as indicated by the READ keyword, which brings an instruction into the IR from the main memory address pointed to by the PC. For this case, let us assume that the IR now contains the 32-bit pattern:

which is a translation of the ARC assembly code: ld %r5 + 80, %r2. Line 1 then performs a branch to control store address (11100000000)₂ = (1792)₁₀.
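The instruction word and its DECODE address can be reproduced with a short sketch. The positions of op (bits 31 and 30) and op3 (bits 24 through 19) come from the description of DECODE; the positions assumed here for rd (bits 29 through 25), rs1 (bits 18 through 14), the i bit (bit 13), and simm13 (bits 12 through 0) are assumptions based on the usual Memory format layout and should be checked against Figure 6-2. The op and op3 values for ld are inferred from the DECODE address 1792.

```python
def encode_memory_format(op, rd, op3, rs1, simm13):
    """Pack an immediate-form instruction (field positions are assumed)."""
    return ((op << 30) | (rd << 25) | (op3 << 19) |
            (rs1 << 14) | (1 << 13) | (simm13 & 0x1FFF))


def decode_address(ir):
    """1, followed by IR[31:30], IR[24:19], and 00."""
    return (1 << 10) | (((ir >> 30) & 0b11) << 8) | (((ir >> 19) & 0b111111) << 2)


# ld %r5 + 80, %r2  with op = 11 and op3 = 000000
ld_word = encode_memory_format(op=0b11, rd=2, op3=0b000000, rs1=5, simm13=80)
ld_entry = decode_address(ld_word)
# ld_entry -> 1792
```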

At line 1792, execution of the ld instruction begins. In line 1792, the immediate bit i is tested. For this example, i = 1, and so control is transferred to microword 1794. If instead we had i = 0, then control would pass to the next higher numbered microword, which is 1793 for this case. Line 1792 adds the registers in the rs1 and rs2 fields of the instruction, in anticipation of a non-immediate form of ld, but this only makes sense if i = 0, which it is not for this example. The result that is stored in %temp0 is thus discarded when control is transferred to microword 1794, but this introduces no time penalty and does not produce any unwanted side effects (ADD does not change the condition codes).

In microword 1794, the simm13 field is extracted (using sign extension, as indicated by the SEXT13 operation), which is added with the register in the rs1 field in microword 1795. Control is then passed to microword 1793 which is where the READ operation takes place. Control passes to line 2047 where the PC is incremented in anticipation of reading the next instruction from main memory. Since instructions are four bytes long and must be aligned on word boundaries in memory, the PC is incremented by four. Control then returns to line 0 where the process repeats. A total of seven microinstructions are thus executed in interpreting the ld instruction. These microinstructions are repeated below:

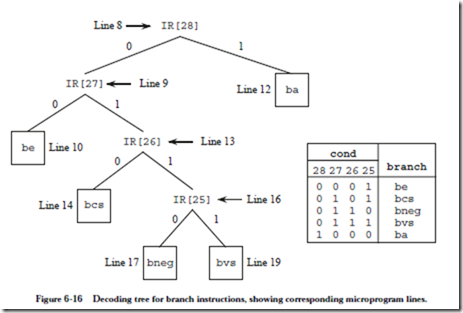

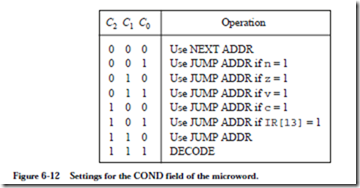

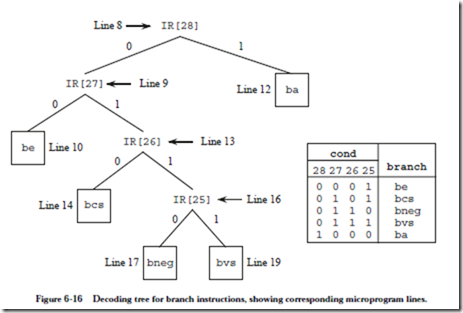
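The order in which control store locations are visited for ld can be summarized as a small function of the i bit. This sketch only records the sequence of addresses described in the text; it does not model the datapath, and the function name is illustrative.

```python
def ld_microword_trace(i_bit):
    """Control store addresses visited while interpreting one ld."""
    trace = [0, 1, 1792]        # fetch, DECODE, first ld microword
    if i_bit == 1:
        trace += [1794, 1795]   # extract simm13, add it to R[rs1]
    trace += [1793, 2047]       # READ from memory, then increment the PC
    return trace


immediate_form = ld_microword_trace(1)
register_form = ld_microword_trace(0)
# len(immediate_form) -> 7, matching the count given in the text
```

Under this reading, the register form of ld takes two fewer microinstructions because it falls through from 1792 directly to 1793.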

The remaining instructions, except for branches, are interpreted in a manner similar to the way ld is interpreted. Additional decoding is needed for the branch instructions because the type of branch is determined by the COND field of the branch format (bits 25 – 28), which is not used during a DECODE operation. The approach used here is to shift the COND bits into IR[13] one bit at a time, and then jump to different locations in the microcode depending on the COND bit pattern.

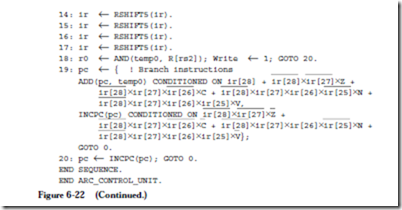

For branch instructions, the DECODE operation on line 1 of the microprogram transfers control to location 1088. We need more space for the branch instructions than the four-word per instruction allocation, so line 1088 transfers control to line 2, which is the starting address of a large section of available control store memory.

Lines 2 – 4 extract the 22-bit displacement for the branch by zeroing the high order 10 bits and storing the result in %temp0. This is accomplished by shifting %ir to the left by 10 bits and storing it in %temp0, and then shifting the result back to the right by 10 bits. (Notice that sign extension is performed on the displacement, which may be negative. RSHIFT5 implements sign extension.) Lines 5 – 7 shift %ir to the right by 15 bits so that the most significant COND bit (IR[28]) lines up in position IR[13], which allows the Jump on IR[13]=1 operation to test each bit. Alternatively, we could shift the COND field to IR[31] one bit at a time, and use the Jump on n condition to test each bit. (Note that there is a subtle error in how the PC is updated in line 12. See Problem 6.21 for an explanation.)
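The shift-left, shift-right trick for isolating the displacement can be modeled on 32-bit words as follows. This is a sketch under the assumption that the right shift by 10 is carried out as two RSHIFT5 operations; the helper names are illustrative.

```python
MASK32 = 0xFFFFFFFF


def lshift10(word):
    """Shift left by 10 bits, discarding the bits that fall off the top."""
    return (word << 10) & MASK32


def rshift5(word):
    """Arithmetic (sign-extending) right shift by 5 bits on a 32-bit word."""
    signed = word - (1 << 32) if word & 0x80000000 else word
    return (signed >> 5) & MASK32


def branch_displacement(ir):
    """Isolate and sign-extend the low 22 bits of the instruction word."""
    temp0 = lshift10(ir)           # the high-order 10 bits are pushed out
    return rshift5(rshift5(temp0)) # bit 21 is replicated into the top 10 bits


def as_signed(word):
    """Interpret a 32-bit pattern as a two's complement integer."""
    return word - (1 << 32) if word & 0x80000000 else word


# A branch word with an arbitrary upper field and a displacement of -2.
ir = (0b1000 << 25) | (0b010 << 22) | (-2 & 0x3FFFFF)
displacement = as_signed(branch_displacement(ir))
# displacement -> -2
```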

Line 8 starts the branch decoding process, which is summarized in Figure 6-16. If IR[28], which is now in IR[13], is set to 1, then the instruction is ba, which is executed in line 12. Notice that control returns to line 0, rather than to line 2047, so that the PC does not get changed twice for the same instruction.

If IR[28] is zero, then %ir is shifted to the left by one bit by adding it to itself, so that IR[27] lines up in position IR[13]. Bit IR[27] is tested in line 9. If IR[27] is zero, then the be instruction is executed in line 10, otherwise %ir is shifted to the left and IR[26] is then tested in line 13. The remaining branch instructions are interpreted in a similar manner.
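The bit-at-a-time test of the COND field can be sketched in software. The code below shifts the instruction right by 15 so that IR[28] sits in position 13, then repeatedly doubles the word so that IR[27], IR[26], and IR[25] arrive there in turn. It only shows which bit is visible at each step; the jump targets of Figure 6-16 are not modeled, and the function names are illustrative.

```python
MASK32 = 0xFFFFFFFF


def bit(word, position):
    """Return the bit of word at the given position (0 = least significant)."""
    return (word >> position) & 1


def cond_bits_as_tested(ir):
    """COND bits in the order they appear at position 13."""
    shifted = (ir >> 15) & MASK32      # IR[28] now sits in position 13
    seen = []
    for _ in range(4):
        seen.append(bit(shifted, 13))
        shifted = (shifted + shifted) & MASK32  # adding a word to itself shifts it left by one
    return seen


# Branch word with COND = 0101 in bits 28..25 (other fields arbitrary).
ir = (0b0101 << 25) | (0b010 << 22) | 4
tested = cond_bits_as_tested(ir)
# tested -> [0, 1, 0, 1], that is, IR[28], IR[27], IR[26], IR[25]
```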

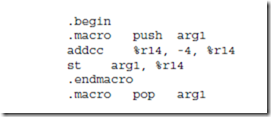

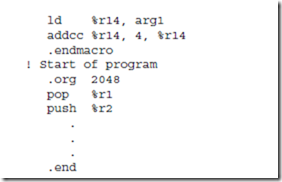

Microassembly Language Translation

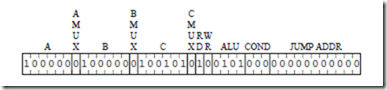

A microassembly language microprogram must be translated into binary object code before it is stored in the control store, just as an assembly language program must be translated into a binary object form before it is stored in main memory. Each line in the ARC microprogram corresponds to exactly one word in the control store, and there are no unnumbered forward references in the microprogram, so we can assemble the ARC microprogram one line at a time in a single pass. Consider assembling line 0 of the microprogram shown in Figure 6-15:

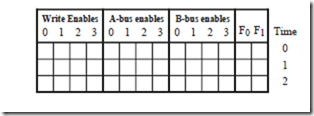

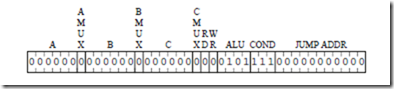

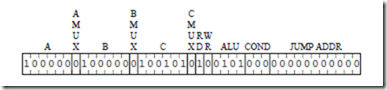

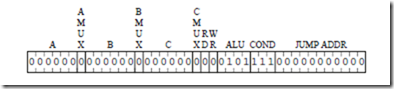

We can fill in the fields of the 41-bit microword as shown below:

The PC is enabled onto both the A and B busses for the AND operation, which transfers a word through the ALU without changing it. The A and B fields have the bit pattern for the PC ((32)₁₀ = (100000)₂). The AMUX and BMUX fields both contain 0’s, since the inputs to these MUXes are taken from the MIR. The target of the Read operation is the IR, which has a corresponding bit pattern of (37)₁₀ = (100101)₂ for the C field. The CMUX field contains a 0 because the input to the CMUX is taken from the MIR. A read operation to memory takes place, and so the RD field contains a 1 and the WR field contains a 0. The ALU field contains 0101, which corresponds to the AND operation. Note that the condition codes are not affected, which would happen if ANDCC were used instead. The COND field contains 000 since control passes to the next microword, and so the bit pattern in the JUMP ADDR field does not matter. Zeros are arbitrarily placed in the JUMP ADDR field.
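The field-by-field translation lends itself to a tiny assembler routine. In the sketch below, the field order and widths (A, AMUX, B, BMUX, C, CMUX, RD, WR, ALU, COND, JUMP ADDR with widths 6, 1, 6, 1, 6, 1, 1, 1, 4, 3, 11) are an assumption inferred from the bit patterns quoted in the text; they sum to 41 bits, but should be checked against the microword format figure.

```python
# Assumed field order and widths; the widths sum to 41.
FIELDS = [("A", 6), ("AMUX", 1), ("B", 6), ("BMUX", 1), ("C", 6), ("CMUX", 1),
          ("RD", 1), ("WR", 1), ("ALU", 4), ("COND", 3), ("JUMP_ADDR", 11)]


def assemble_microword(**values):
    """Return the microword as a string of 41 bits; unset fields are 0."""
    bits = ""
    for name, width in FIELDS:
        value = values.get(name, 0)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        bits += format(value, f"0{width}b")
    return bits


# Line 0: PC (32) on the A and B busses, IR (37) as the target, RD = 1, ALU = AND.
line0 = assemble_microword(A=32, B=32, C=37, RD=1, ALU=0b0101)
# len(line0) -> 41
```

Representing the word as a bit string rather than an integer keeps leading zeros visible, which makes it easier to compare the result against a hand translation.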

The second microword implements the 256-way branch. For this case, all that matters is that the bit pattern 111 appears in the COND field for the DECODE operation, and that no registers, memory, or condition codes are disturbed. The corresponding bit pattern is then:

A number of different bit patterns would also work for line 1. For example, any bit patterns can appear in the A, B, or JUMP ADDR fields when a DECODE operation takes place. The use of the zero bit patterns is an arbitrary choice. The ALU field is 0101 which is for AND, which does not affect the condition codes. Any other ALU operation that does not affect the condition codes can also be used.

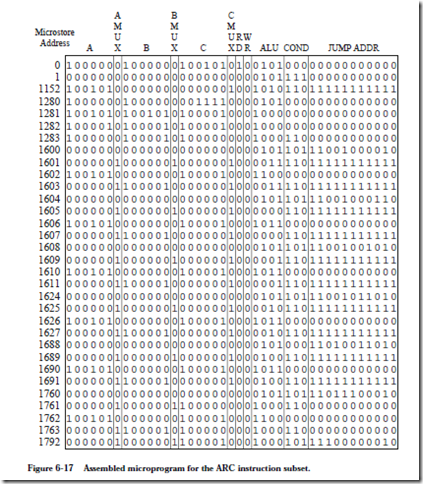

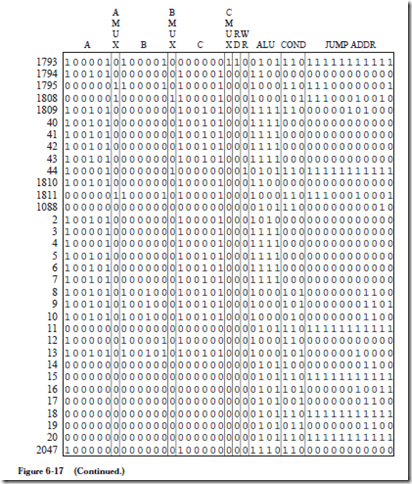

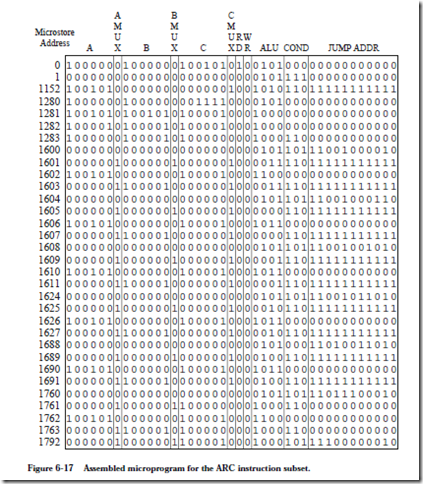

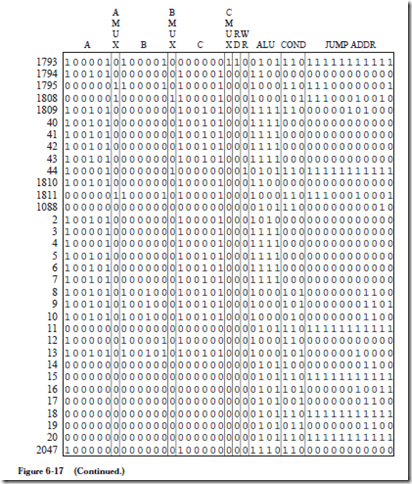

The remainder of the microprogram is translated in a similar manner. The translated microprogram is shown in Figure 6-17, except for gaps where duplicate branch code would appear, or where “illegal instruction” code would appear.

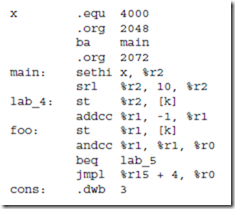

EXAMPLE

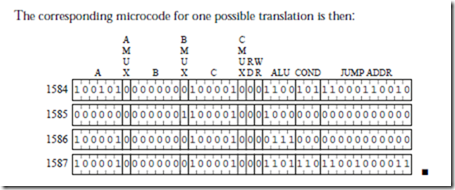

Consider adding an instruction called subcc to the microcoded implementation of the ARC instruction set, which subtracts its second source operand from the first, using two’s complement arithmetic. The new instruction uses the Arithmetic format and an op3 field of 001100.

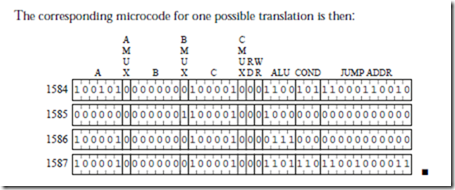

We need to modify the microprogram to add this new instruction. We start by computing the starting location of subcc in the control store, by appending a ‘1’ to the left of the op field, which is 10, followed by the op3 field which is 001100, followed by 00. This results in the bit pattern 11000110000 which corresponds to control store location (1584)10. We can then create microassembly code that is similar to the addcc microassembly code at location 1600, except that the two’s complement negative of the subtrahend (the second source operand) is formed before performing the addition. The subtrahend is complemented by making use of the NOR operation, and 1 is added to it by using the INC operation. The subtraction is then completed by using the code for addcc. A microassembly coding for subcc is shown below:
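The arithmetic behind this approach can be checked in software. The sketch below models NOR, INC, and ADD on 32-bit words and confirms both the control store address and the subtraction identity a − b = a + (NOR(b, b) + 1). It is not the microassembly code itself, it does not model the condition codes, and the function names are illustrative.

```python
MASK32 = 0xFFFFFFFF


def nor(a, b):
    """Bitwise NOR on 32-bit words; NOR(b, b) is the complement of b."""
    return ~(a | b) & MASK32


def inc(a):
    """Add 1, wrapping around at 32 bits."""
    return (a + 1) & MASK32


def add(a, b):
    """32-bit addition with wraparound."""
    return (a + b) & MASK32


def subtract(a, b):
    """a - b formed as a + (two's complement negative of b)."""
    negated = inc(nor(b, b))
    return add(a, negated)


def decode_address(op, op3):
    """1, followed by op, op3, and 00."""
    return (1 << 10) | (op << 8) | (op3 << 2)


subcc_entry = decode_address(0b10, 0b001100)
# subcc_entry -> 1584
```

Note that the distance from 1584 to the addcc routine at 1600 is 16 microwords, so a routine that needs more than four words would have to jump elsewhere, as the branch code does.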

TRAPS AND INTERRUPTS

A trap is an automatic procedure call initiated by the hardware after an exceptional condition caused by an executing program, such as an illegal instruction, overflow, underflow, dividing by zero, etc. When a trap occurs, control is transferred to a “trap handler” which is a routine that is part of the operating system. The handler might do something like print a message and terminate the offending program.

One way to handle traps is to modify the microcode, possibly to check the status bits. For instance, we can check the v bit to see if an overflow has occurred. The microcode can then load an address into the PC (if a trap occurs) for the starting location of the trap handler.

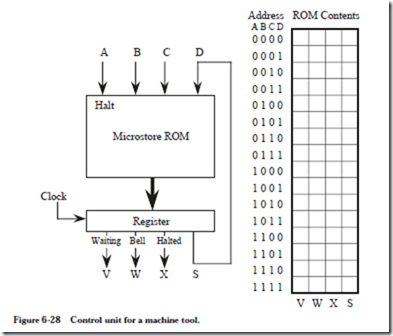

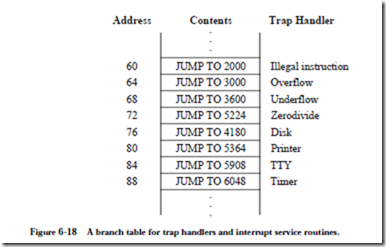

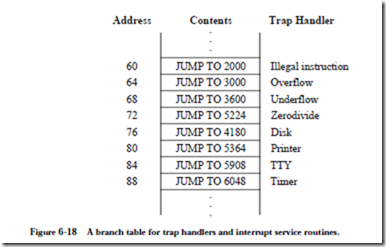

Normally, there is a fixed section of memory for trap handler starting addresses where only a single word is allocated for each handler. This section of memory forms a branch table that transfers control to the handlers, as illustrated in Figure 6-18. The reason for using a branch table is that the absolute addresses for each type of trap can be embedded in the microcode this way, while the targets of the jumps can be changed at the user level to handle traps differently.
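The role of the branch table can be illustrated with a toy model. Everything specific here is hypothetical: the base address, the trap numbers, and the handler addresses are invented for illustration, and a four-byte word per entry is assumed.

```python
TRAP_TABLE_BASE = 0x100   # hypothetical fixed location known to the microcode
WORD_SIZE = 4             # one word per table entry (assumed four bytes)

# Hypothetical trap numbers.
ILLEGAL_INSTRUCTION, OVERFLOW = 0, 1


def trap_entry_address(trap_number):
    """Absolute address that the microcode would load into the PC."""
    return TRAP_TABLE_BASE + WORD_SIZE * trap_number


# Each table entry holds a jump to a handler; this part can be changed
# without touching the microcode.
branch_table = {
    trap_entry_address(ILLEGAL_INSTRUCTION): 0x2000,
    trap_entry_address(OVERFLOW): 0x2400,
}


def dispatch(trap_number):
    """Follow the table entry to the handler's starting address."""
    return branch_table[trap_entry_address(trap_number)]


before = dispatch(OVERFLOW)
branch_table[trap_entry_address(OVERFLOW)] = 0x3000  # install a new handler
after = dispatch(OVERFLOW)
```

The entry address stays fixed while the handler it points to changes, which is the property the text attributes to the branch table.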

A historically common trap is for floating point instructions, which may be emulated by the operating system if they are not implemented directly in hardware. Floating point instructions have their own opcodes, but if they are not implemented by the hardware (that is, the microcode does not know about them) then they will generate an illegal instruction trap when an attempt is made to execute them. When an illegal instruction occurs, control is passed to the illegal instruction handler which checks to see if the trap is caused by a floating point instruction, and then passes control to a floating point emulation routine as appropriate for the cause of the trap. Although floating point units are normally integrated into CPU chips these days, this method is still used when extending the instruction set for other instructions, such as graphics extensions to the ISA.

Interrupts are similar to traps, but are initiated after a hardware exception such as a user hitting a key on a keyboard, an incoming telephone call for a modem, a power fluctuation, an unsafe operating temperature, etc. Traps are synchronous with a running program, whereas interrupts are asynchronous. Thus, a trap will always happen at the same place in the same program running with the same data set, whereas the timing of interrupts is largely unpredictable.

When a key is pressed on an interrupt-based keyboard, the keyboard asserts an interrupt line on the bus, and the CPU then asserts an acknowledge line as soon as it is ready (this is where bus arbitration comes in, which is covered in Chapter 8, if more than one device wants to interrupt at the same time). The keyboard then places an interrupt vector onto the data bus which identifies itself to the CPU. The CPU then pushes the program counter and processor status register (where the flags are stored) onto the stack. The interrupt vector is used to index into the branch table, which lists the starting addresses of the interrupt service routines.

When a trap handler or an interrupt service routine begins execution, it saves the registers that it plans to modify on the stack, performs its task, restores the registers, and then returns from the interrupt. The process of returning from a trap is different from returning from a subroutine, since the process of entering a trap is different from a subroutine call (because the %psr register is also saved and restored). For the ARC, the rett instruction (see Chapter 8) is used for returning from a trap or interrupt. Interrupts can interrupt other interrupts, and so the first thing that an interrupt service routine might do is to raise its priority (using a special supervisor mode instruction) so that no interrupts of lower priority are accepted.

NANOPROGRAMMING

If the microstore is wide, and has lots of the same words, then we can save microstore memory by placing one copy of each unique microword in a nanostore, and then use the microstore to index into the nanostore. For instance, in the microprogram shown in Figure 6-15, lines 1281 and 1282 are the same. Lines 3, 4, and 40-44 are the same, and there are a number of other microinstructions that recur, especially for the duplicated branch microcode and the duplicated illegal instruction microcode.

Figure 6-19a illustrates the space requirement for the original microstore ROM. There are n=2048 words that are each 41 bits wide, giving an area complexity of 2048 × 41 = 83,968 bits. Suppose now that there are 100 unique microwords in the ROM (the microprogram in Figure 6-15 is only partially complete so we cannot measure the number of unique microwords directly). Figure 6-19b illustrates a configuration that uses a nanostore, in which an area savings can be realized if there are a number of bit patterns that recur in the original microcode sequence. The unique microwords (100 for this case) form a nanoprogram, which is stored in a ROM that is 100 words deep by 41 bits wide.

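The microprogram now indexes into the nanostore. The microprogram has the same number of microwords regardless of whether or not a nanostore is used, but when a nanostore is used, pointers into the nanostore are stored in the microstore rather than the wider 41-bit words. For this case, the microstore is now 2048 words deep by ⌈log₂ 100⌉ = 7 bits wide, since 7 bits are enough to select any one of the 100 nanowords. The total area is then 100 × 41 + 2048 × 7 = 18,436 bits, compared with 83,968 bits for the original microstore.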

For small m and large n, where m is the length of the nanoprogram, we can realize a large savings in memory. This frees up area that can be applied in some other way, possibly to improve performance. However, instead of accessing only the microstore, we must now access the microstore first, followed by an access to the nanostore. The machine will thus run more slowly, but will fit into a smaller area.
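The trade-off can be quantified with a short calculation. The sketch below uses the figures from the text (n = 2048 microwords, 41 bits per word, m = 100 unique microwords) and assumes that each microstore entry shrinks to the smallest pointer that can select any of the m nanowords; the function names are illustrative.

```python
def microstore_only_bits(n, width):
    """Area of a conventional microstore: n words of the full width."""
    return n * width


def with_nanostore_bits(n, width, m):
    """Area of an m-word nanostore plus n pointers into it."""
    pointer_width = max(1, (m - 1).bit_length())  # ceil(log2(m)) for m > 1
    return m * width + n * pointer_width


original = microstore_only_bits(2048, 41)      # 83,968 bits
reduced = with_nanostore_bits(2048, 41, 100)   # 100*41 + 2048*7 = 18,436 bits
savings = 1 - reduced / original               # roughly 78 percent
```

The saving shrinks as m grows, both because the nanostore itself gets larger and because the pointers get wider, so the technique pays off only when many microwords repeat.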