How do we link more than one processor?

We will now begin to consider some of the challenges that face developers who wish to design multiprocessor applications. We begin with a fundamental problem:

● How do we keep the clocks on the various nodes synchronized?

We then go on to address two further problems that can arise with many such systems:

● How do we transfer data between the various nodes?

● How does one node check for errors on the other nodes?

As we will see, by using a shared-clock (S-C) scheduler, we can address all three problems. Moreover, the time division multiple access (TDMA) protocol we employ to achieve this is a ‘natural extension’ (Burns and Wellings, 1997, p. 484) to the time-triggered architectures for single-processor systems which we have described in earlier parts of this book.

Synchronizing the clocks

Why do we need to synchronize the tasks running on different parts of a multiprocessor system?

Consider a simple example. Suppose we are developing a portable traffic-light system designed to control the flow of traffic on a narrow road while repairs are carried out. The system is to be used at both ends of the area of road works and will allow traffic to move in only one direction at a time (Figure 25.5).

The conventional ‘red’, ‘amber’ and ‘green’ bulbs will be used on each node, with the usual sequencing (Figure 25.6).

We will assume that there will be a microcontroller at each end of the traffic-light application to control the two sets of lights. We will also assume that each microcontroller is running a scheduler and that each is driven by an independent crystal oscillator circuit.

The problem with this arrangement is that the schedulers on the two microcontrollers are likely to get quickly ‘out of sync’. This will happen primarily because the two boards will never run at exactly the same temperature and, therefore, the crystal oscillators will operate at different rates.

This can cause real practical difficulties. In this case, for example, we run the risk that both sets of traffic lights will show ‘green’ at the same time, a fact likely to result, quickly, in an accident.

The S-C scheduler tackles this problem by sharing a single clock between the various processor boards, as illustrated schematically in Figure 25.7.

Here we have one, accurate, clock on the Master node in the network. This clock is used to drive the scheduler in the Master node in exactly the manner discussed in Part C.

The Slave nodes also have schedulers: however, the interrupts used to drive these schedulers are derived from ‘tick messages’ generated by the Master (Figure 25.8). Thus, in a CAN-based network (for example), the Slave node will have an S-C scheduler driven by the ‘receive’ interrupts generated through the receipt of a byte of data sent by the Master.

In the case of the traffic lights, changes in temperature will, at worst, cause the lights to cycle more quickly or more slowly: the two sets of lights will not, however, get out of sync.
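The arrangement can be sketched in C as a small desktop simulation. This is an illustrative model only, not the scheduler code presented in this book: the names `node_t`, `master_timer_isr`, `slave_rx_isr` and `run_network` are hypothetical, and the one-byte tick payload is an assumption. The point it shows is that the Slave has no timer of its own, so its tick count can only advance when a tick message arrives from the Master.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model: each node's scheduler keeps a tick counter. */
typedef struct {
    uint32_t tick_count;
} node_t;

/* Master: stands in for the timer interrupt driven by the single,
   accurate clock. Returns the tick message to be broadcast. */
uint8_t master_timer_isr(node_t *master)
{
    assert(master != NULL);
    master->tick_count++;
    return (uint8_t)(master->tick_count & 0xFFu); /* assumed payload */
}

/* Slave: stands in for the 'receive' interrupt. The Slave scheduler
   advances only here, never from a local oscillator. */
void slave_rx_isr(node_t *slave, uint8_t tick_msg)
{
    assert(slave != NULL);
    (void)tick_msg; /* payload unused in this sketch */
    slave->tick_count++;
}

/* Run the two-node network for n Master ticks. */
void run_network(node_t *master, node_t *slave, uint32_t n)
{
    for (uint32_t i = 0; i < n; i++) {
        uint8_t msg = master_timer_isr(master);
        slave_rx_isr(slave, msg);
    }
}
```

However fast or slow the Master clock runs, the two counters in this model stay equal, which is the property the traffic-light example relies on.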

Transferring data

So far we have focused on synchronizing the schedulers in individual nodes. In many applications, we will also need to transfer data between the tasks running on different processor nodes.

To illustrate this, consider again the traffic-light controller. Suppose that a bulb blows in one of the light units. When a bulb is missing, the traffic control signals are ambiguous: we therefore need to detect bulb failures on each node and, having detected a failure, notify the other node that a failure has occurred. This will allow us, for example, to extinguish all the (available) bulbs on both nodes or to flash all the bulbs on both nodes: in either case, this will inform the road user that something is amiss and that the road must be negotiated with caution.

If the light failure is detected on the Master node, then this is straightforward. As we discussed earlier, the Master sends regular tick messages to the Slave, typically once per millisecond. These tick messages can – in most S-C schedulers – include data transfers: it is therefore straightforward to send an appropriate tick message to the Slave to alert it to the bulb failure.

To support the transfer of data from the Slave to the Master, we need an additional mechanism: this is provided through the use of ‘acknowledgement’ messages (Figure 25.9). The end result is a simple and predictable ‘time division multiple access’ (TDMA) protocol (e.g. see Burns and Wellings, 1997), in which acknowledgement messages are interleaved with the tick messages. For example, Figure 25.10 shows the mix of tick and acknowledgement messages that will be transferred in a typical two-Slave (CAN) network.

Note that, in a shared-clock scheduler, all data transfers are carried out using the interleaved tick and acknowledgement messages: no additional messages are permitted on the bus. As a result, we are able to pre-determine the network bandwidth required to ensure that all messages are delivered precisely on time.
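Because only one tick message and one acknowledgement message share each tick interval, the bus loading reduces to simple arithmetic. The sketch below is a hedged illustration: the function names are hypothetical, and the frame lengths are supplied by the caller. In the usage note that follows, 135 bits per frame is an assumed worst-case figure for a standard CAN frame with eight data bytes (including stuff bits and inter-frame space), not a value taken from this chapter.

```c
#include <assert.h>
#include <stdint.h>

/* Number of bits the bus can carry in one tick interval. */
uint32_t bits_per_tick(uint32_t bit_rate_bps, uint32_t tick_interval_us)
{
    return (uint32_t)(((uint64_t)bit_rate_bps * tick_interval_us) / 1000000u);
}

/* Bus load in tenths of a percent, assuming exactly one tick message
   and one acknowledgement message per tick interval. */
uint32_t bus_load_permille(uint32_t bit_rate_bps,
                           uint32_t tick_interval_us,
                           uint32_t tick_frame_bits,
                           uint32_t ack_frame_bits)
{
    uint32_t capacity = bits_per_tick(bit_rate_bps, tick_interval_us);
    assert(capacity > 0u);
    return ((tick_frame_bits + ack_frame_bits) * 1000u) / capacity;
}
```

Under these assumptions, a 1 Mbit/s bus with 1 ms ticks carries 1000 bits per tick interval, so `bus_load_permille(1000000u, 1000u, 135u, 135u)` gives 270, that is, a 27.0% load.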

Detecting network and node errors

Consider the traffic-light control system one final time. We have already discussed the synchronization of the two nodes and the mechanisms that can be used to transfer data. What we have not yet discussed are the problems caused by the failure of the network hardware (cabling, transceivers, connectors and so on) or the failure of one of the network nodes.

For example, a simple problem that might arise is that the cable connecting the two sets of lights becomes damaged or is severed completely. This is likely to mean that the ‘tick messages’ from the Master are not received by the Slave, causing the Slave to ‘freeze’. If the Master is unaware that the Slave is not receiving messages, then again we run the risk that the two sets of lights will both, simultaneously, show green, with the potential risk of a serious accident (see Figure 25.11).

The S-C scheduler deals with this potential problem using the error detection and recovery mechanisms which we discuss in the next section.

Detecting errors in the Slave(s)

The use of a shared-clock scheduler makes it straightforward for the Slave to detect errors very rapidly. Specifically, because we know from the design specification that the Slave should receive ticks at (say) 1 ms intervals, we simply need to measure the time interval between ticks; if a period greater than 1 ms elapses between ticks, we conclude that an error has occurred.

In many circumstances an effective way of achieving this is to set a watchdog timer in the Slave to overflow at a period slightly longer than the tick interval. Under normal circumstances, the ‘update’ function in the Slave will be invoked by the arrival of each tick and this update function will, in turn, refresh the watchdog timer. If a tick is not received, the timer will overflow and we can invoke an appropriate error-handling routine.
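The watchdog behaviour can be modelled in a few lines of C. This is a software simulation under stated assumptions rather than code for a particular hardware watchdog: the `watchdog_t` type and its functions are hypothetical, and the 1100 µs timeout in the usage note is simply an example of ‘slightly longer than’ a 1 ms tick interval.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical software model of a watchdog timer. */
typedef struct {
    uint32_t timeout_us; /* overflow period                  */
    uint32_t elapsed_us; /* time since the last refresh      */
    bool     overflowed; /* set once the timeout is exceeded */
} watchdog_t;

void watchdog_init(watchdog_t *w, uint32_t timeout_us)
{
    assert(timeout_us > 0u);
    w->timeout_us = timeout_us;
    w->elapsed_us = 0u;
    w->overflowed = false;
}

void watchdog_refresh(watchdog_t *w)
{
    w->elapsed_us = 0u;
}

/* Model the passage of time; on real hardware this happens by itself. */
void watchdog_advance(watchdog_t *w, uint32_t dt_us)
{
    w->elapsed_us += dt_us;
    if (w->elapsed_us >= w->timeout_us) {
        w->overflowed = true; /* real hardware would reset the node */
    }
}

/* Slave 'update' function: invoked by the arrival of each tick. */
void slave_update(watchdog_t *w)
{
    watchdog_refresh(w);
    /* ... the scheduler would run its tasks here ... */
}
```

With `watchdog_init(&w, 1100u)`, calling `watchdog_advance(&w, 1000u)` followed by `slave_update(&w)` on every tick never sets the overflow flag; a single missed tick lets the elapsed time reach 2000 µs, and the flag is set.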

We discuss the required error-handling functions further next.

Detecting errors in the Master

Detecting errors in the Master node requires that each Slave sends appropriate acknowledgement messages to the Master at regular intervals (see Figure 25.10). A simple way of achieving this may be illustrated by considering the operation of a particular one-Master, ten-Slave network:

● The Master node sends tick messages to all nodes, simultaneously, every millisecond; these messages are used to invoke the update function in all Slaves every millisecond.

● Each tick message will, in most schedulers, be accompanied by data for a particular node. In this case, we will assume that the Master sends tick messages to each of the Slaves in turn; thus, each Slave receives data in every tenth tick message (every 10 milliseconds in this case).

● Each Slave sends an acknowledgement message to the Master only when it receives a tick message with its ID; it does not send an acknowledgement to any other tick messages.

As mentioned previously, this arrangement provides the predictable bus loading that we require and a means of communicating with each Slave individually. It also means that the Master is able to detect whether or not a particular Slave has responded to its tick message.
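The one-Master, ten-Slave scheme described above can be sketched as follows. This is a minimal model, assuming Slave IDs numbered 1 to 10 and a Master that flags a Slave as faulty on a single missed acknowledgement; the type and function names are hypothetical and the actual message formats are not modelled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NUM_SLAVES 10u

typedef struct {
    uint32_t tick_count;
    uint8_t  next_slave;              /* index 0..NUM_SLAVES-1 */
    bool     slave_error[NUM_SLAVES]; /* set on a missed ack   */
} master_t;

/* Master: broadcast a tick; returns the ID (1..NUM_SLAVES) of the
   Slave addressed by the data in this tick message. */
uint8_t master_send_tick(master_t *m)
{
    uint8_t id = (uint8_t)(m->next_slave + 1u);
    m->tick_count++;
    m->next_slave = (uint8_t)((m->next_slave + 1u) % NUM_SLAVES);
    return id;
}

/* Slave: acknowledge only a tick message that carries its own ID. */
bool slave_should_ack(uint8_t my_id, uint8_t tick_id)
{
    return my_id == tick_id;
}

/* Master: record whether the addressed Slave replied in time. */
void master_check_ack(master_t *m, uint8_t id, bool ack_received)
{
    assert(id >= 1u && id <= NUM_SLAVES);
    if (!ack_received) {
        m->slave_error[id - 1u] = true;
    }
}
```

Each Slave is addressed once every ten ticks, so in this model a silent Slave is noticed by the Master within ten tick intervals.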

Handling errors detected by the Slave

We will assume that errors in the Slave are detected with a watchdog timer. To deal with such errors, the shared-clock schedulers presented in this book all operate as follows:

● Whenever the Slave node is reset (either having been powered up or reset as a result of a watchdog overflow), the node enters a ‘safe state’.

● The node remains in this state until it receives an appropriate series of ‘start’ commands from the Master.
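The two rules above amount to a small state machine, sketched here in C. The names are hypothetical, and the requirement of three consecutive ‘start’ commands is purely an assumption made for illustration: the text says only that an ‘appropriate series’ is needed.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed length of the 'start' sequence (illustrative only). */
#define START_COMMANDS_REQUIRED 3u

typedef enum { SLAVE_SAFE, SLAVE_RUNNING } slave_state_t;

typedef struct {
    slave_state_t state;
    uint8_t       start_seen; /* consecutive 'start' commands so far */
} slave_t;

/* Power-up or watchdog reset: always enter the safe state. */
void slave_reset(slave_t *s)
{
    assert(s != NULL);
    s->state = SLAVE_SAFE;
    s->start_seen = 0u;
}

/* Message from the Master while waiting in the safe state. */
void slave_on_message(slave_t *s, bool is_start_command)
{
    assert(s != NULL);
    if (s->state != SLAVE_SAFE) {
        return; /* already running: nothing to do in this sketch */
    }
    if (is_start_command) {
        s->start_seen++;
        if (s->start_seen >= START_COMMANDS_REQUIRED) {
            s->state = SLAVE_RUNNING;
        }
    } else {
        s->start_seen = 0u; /* sequence broken: start counting again */
    }
}

/* A watchdog overflow behaves like a reset. */
void slave_on_watchdog_overflow(slave_t *s)
{
    slave_reset(s);
}
```

In the traffic-light example, the safe state might correspond to extinguishing or flashing all the bulbs until the Master restarts the node.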

This form of error handling is easily produced and is effective in most circumstances.

One important alternative form of behaviour involves converting a Slave into a Master node in the event that failure of the Master is detected. This behaviour can be very effective, particularly on networks (such as CAN networks) which allow the transmission of messages with a range of priority levels. We will not consider this possibility in detail in the present edition of this book.

Handling errors detected by the Master

Handling errors detected by the Slave node(s) is straightforward in a shared-clock network. Handling errors detected by the Master is more complicated. We consider and illustrate three main options in this book:

● The ‘Enter safe state then shut down’ option

● The ‘Restart the network’ option

● The ‘Engage backup Slave’ option

We consider each of these options now.

Enter a safe state and shut down the network

Shutting down the network following the detection of errors by the Master node is easily achieved. We simply stop the transmission of tick messages by the Master. By stopping the tick messages, we cause the Slave(s) to be reset too; the Slaves will then wait (in a safe state). The whole network will therefore stop, until the Master is reset.

This behaviour is the most appropriate behaviour in many systems in the event of a network error, if a ‘safe state’ can be identified. This will, of course, be highly application dependent.

For example, we have already mentioned the A310 Airbus’ slat and flap control computers which, on detecting an error during landing, restore the wing system to a safe state and then shut down. In this situation, a ‘safe state’ involves having both wings with the same settings; only asymmetric settings are hazardous during landing (Burns and Wellings, 1997, p. 102).

The strengths and weaknesses of this approach are as follows:

It is very easy to implement.

It is effective in many systems.

It can often be a ‘last line of defence’ if more advanced recovery schemes have failed.

It does not attempt to recover normal network operation or to engage backup nodes.

This approach may be used with any of the networks we discuss in this book (interrupt based, UART based or CAN based). We illustrate the approach in detail in Chapter 26.

Reset the network

Another simple way of dealing with errors is to reset the Master and, hence, the whole network. When it is reset, the Master will attempt to re-establish communication with each Slave in turn; if it fails to establish contact with a particular Slave, it will attempt to connect to the backup device for that Slave.

This approach is easy to implement and can be effective. For example, many designs use ‘N-version’ programming to create backup versions of key components. By performing a reset, we keep all the nodes in the network synchronized and we engage a backup Slave (if one is available).

The strengths and weaknesses of this approach are as follows:

It allows full use to be made of backup nodes.

It may take time (possibly half a second or more) to restart the network; even if the network becomes fully operational, the delay involved may be too long (for example, in automotive braking or aerospace flight-control applications).

With poor design or implementation, errors can cause the network to be continually reset. This may be rather less safe than the simple ‘enter safe state and shut down’ option.

This approach may be used with any of the UART- or CAN-based networks we discuss in this book. We illustrate the approach in detail in Chapter 27.

Engage a backup Slave

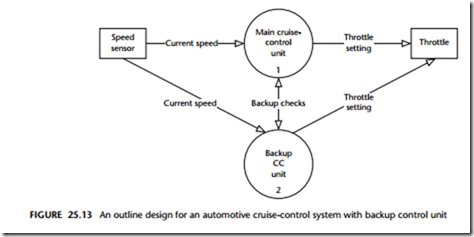

The third and final recovery technique we discuss in the present edition of this book is as follows. If a Slave fails, then – rather than restarting the whole network – we start the corresponding backup unit.
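One way the Master might keep track of backup units is sketched below. This is an assumption-laden illustration, not the implementation given later in this book: the `slave_slot_t` table entry, the `NO_BACKUP` value and the function name are all hypothetical, and each Slave is assumed to have at most one backup.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define NO_BACKUP 0u /* assumed marker: no backup unit available */

/* Hypothetical Master-side record for one Slave position. */
typedef struct {
    uint8_t active_id; /* node currently addressed by tick messages */
    uint8_t backup_id; /* spare node, or NO_BACKUP                  */
    bool    failed;    /* true once no working node remains         */
} slave_slot_t;

/* Called when the active node misses its acknowledgement.
   Returns true if the slot is now served by a backup unit. */
bool master_handle_missed_ack(slave_slot_t *slot)
{
    assert(slot != NULL);
    if (slot->backup_id != NO_BACKUP) {
        slot->active_id = slot->backup_id; /* address the backup now */
        slot->backup_id = NO_BACKUP;       /* the spare is used up   */
        return true;
    }
    slot->failed = true; /* fall back to another recovery option */
    return false;
}
```

Only the failed Slave's table entry changes in this model; the remaining nodes continue to receive ticks, so the rest of the network is not restarted.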

The strengths and weaknesses of this approach are as follows:

It allows full use to be made of backup nodes.

In most circumstances it takes comparatively little time to engage the backup unit.

The underlying coding is more complicated than the other alternatives discussed in this book.

This approach may be used with any of the UART- or CAN-based networks we discuss in this book. We illustrate the approach in detail in Chapter 28.

However, the mere presence of redundant networks does not itself guarantee increased reliability. For example, in 1974, in a Turkish Airlines DC-10 aircraft, the cargo door opened at high altitude. This event caused the cargo hold to depressurize, which in