Co-operative and pre-emptive scheduling

We have discussed in very general terms the use of a scheduler to execute functions at particular times. Before we begin to consider the creation and use of a scheduler in detail in the next chapter, we need to appreciate that there are two broad classes of scheduler:

● The co-operative scheduler

● The pre-emptive scheduler

The features of the two types of scheduler are compared in Figures 13.5 and 13.6.

The co-operative scheduler

● A co-operative scheduler provides a single-tasking system architecture

Operation:

● Tasks are scheduled to run at specific times (either on a periodic or one-shot basis)

● When a task is scheduled to run it is added to the waiting list

● When the CPU is free, the next waiting task (if any) is executed

● The task runs to completion, then returns control to the scheduler

Implementation:

● The scheduler is simple and can be implemented in a small amount of code

● The scheduler must allocate memory for only a single task at a time

● The scheduler will generally be written entirely in a high-level language (such as ‘C’)

● The scheduler is not a separate application; it becomes part of the developer’s code

Performance:

● Obtaining rapid responses to external events requires care at the design stage

Reliability and safety:

● Co-operate scheduling is simple, predictable, reliable and safe

The pre-emptive scheduler

● A pre-emptive scheduler provides a multitasking system architecture

Operation:

● Tasks are scheduled to run at specific times (either on a periodic or one-shot basis)

● When a task is scheduled to run it is added to the waiting list

● Waiting tasks (if any) are run for a fixed period then, if not completed, are paused and placed back in the waiting list. The next waiting task is then run for a fixed period, and so on

Implementation:

● The scheduler is comparatively complicated, not least because features such as semaphores must be implemented to avoid conflicts when ‘concurrent’ tasks attempt to access shared resources

● The scheduler must allocate memory to hold all the intermediate states of pre-empted tasks

● The scheduler will generally be written (at least in part) in Assembly language

● The scheduler is generally created as a separate application

Performance:

● Rapid responses to external events can be obtained

Reliability and safety:

● Generally considered to be less predictable, and less reliable, than co-operative approaches

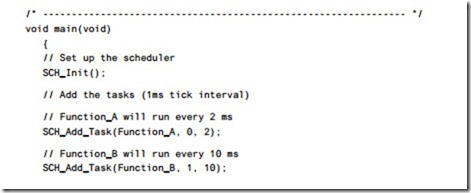

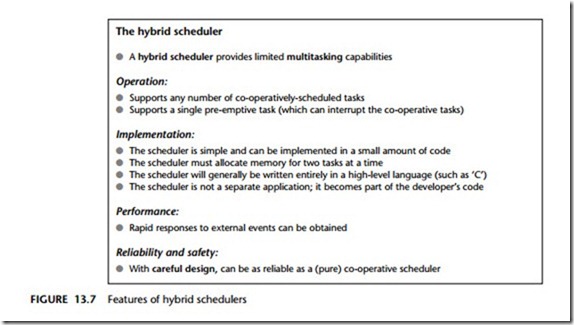

In this book, we use mainly co-operative schedulers and will make limited use of hybrid schedulers (Figure 13.7). Together, these two forms of scheduler will provide the facilities we require (the ability to share a timer between multiple tasks, the ability to run both ‘periodic’ and ‘one-shot’ tasks): they do this while avoiding the complexi- ties inherent in (fully) pre-emptive environments.

The key reason why the co-operative schedulers are both reliable and predictable is that only one task is active at any point in time: this task runs to completion, and then returns control to the scheduler. Contrast this with the situation in a fully pre- emptive system with more than one active task. Suppose one task in such a system which is reading from a port and the scheduler performs a ‘context switch’, causing a different task to access the same port: under these circumstances, unless we take action to prevent it, data may be lost or corrupted.

This problem arises frequently in multitasking environments where we have what are known as ‘critical sections’ of code. Such critical section are code areas that – once started – must be allowed to run to completion without interruption. Examples of critical sections include:

● Code which modifies or reads variables, particularly global variables used for inter- task communication. In general, this is the most common form of critical section, since inter-task communication is often a key requirement.

The hybrid scheduler

● A hybrid scheduler provides limited multitasking capabilities

Operation:

● Supports any number of co-operatively-scheduled tasks

● Supports a single pre-emptive task (which can interrupt the co-operative tasks)

Implementation:

● The scheduler is simple and can be implemented in a small amount of code

● The scheduler must allocate memory for two tasks at a time

● The scheduler will generally be written entirely in a high-level language (such as ‘C’)

● The scheduler is not a separate application; it becomes part of the developer’s code

Performance:

● Rapid responses to external events can be obtained

Reliability and safety:

● With careful design, can be as reliable as a (pure) co-operative scheduler

● Code which interfaces to hardware, such as ports, analog-to-digital converters (ADCs) and so on. What happens, for example, if the same ADC is used simultane- ously by more than one task?

● Code which calls common functions. What happens, for example, if the same function is called simultaneously by more than one task?

In a co-operative system, these problems do not arise, since only one task is ever active at the same time. To deal with such critical sections of code in a pre-emptive system, we have two main possibilities:

● ‘Pause’ the scheduling by disabling the scheduler interrupt before beginning the critical section; re-enable the scheduler interrupt when we leave the critical section.

● Or use a ‘lock’ (or some other form of ‘semaphore mechanism’) to achieve a similar result.

The first solution is that, when we start accessing the shared resource (say Port X), we disable the scheduler. This solves the immediate problem since (say) Task A will be allowed to run without interruption until it has finished with Port X. However, this ‘solution’ is less than perfect. For one thing, by disabling the scheduler, we will no longer be keeping track of the elapsed time and all timing functions will begin to drift

– in this case by a period up to the duration of Task A every time we access Port X. This simply is not acceptable.

The use of locks is a better solution and appears, at first inspection, easy to imple- ment. Before entering the critical section of code, we ‘lock’ the associated resource;

when we have finished with the resource we ‘unlock’ it. While locked, no other process may enter the critical section.1

This is one way we might try to achieve this:

1 Task A checks the ‘lock’ for Port X it wishes to access.

2 If the section is locked, Task A waits.

3 When the port is unlocked, Task A sets the lock and then uses the port.

4 When Task A has finished with the port, it leaves the critical section and unlocks the port.

Implementing this algorithm in code also seems straightforward, as illustrated in Listing 13.7.

1. Of course, this is only a partial solution to the problems caused by multitasking. If the purpose of Task A is to read from an ADC, and Task B has locked the ADC when the Task A is involved, then Task A cannot carry out its required activity. Use of locks, or any other mechanisms, will not solve this problem; however, they may prevent the system from crashing. Of course, by using a co- operative scheduler, these problems do not arise.

Consider the part of the code labelled ‘A’ in Listing 13.7. If our system is fully pre- emptive, then our task can reach this point at the same time as the scheduler performs a context switch and allows (say) Task B access to the CPU. If Task Y also wants to access the Port X

We can then have a situation as follows:

● Task A has checked the lock for Port X and found that the port is not locked; Task A has, however, not yet changed the lock flag.

● Task B is then ‘switched in’. Task B checks the lock flag and it is still clear. Task B sets the lock flag and begins to use Port X.

● Task A is ‘switched in’ again. As far as Task A is concerned, the port is not locked; this task therefore sets the flag and starts to use the port, unaware that Task B is already doing so.

● …

As we can see, this simple lock code violates the principal of mutual exclusion: that is, it allows more than one task to access a critical code section. The problem arises because it is possible for the context switch to occur after a task has checked the lock flag but before the task changes the lock flag. In other words, the lock ‘check and set code’ (designed to control access to a critical section of code), is itself a critical section.

This problem can be solved. For example, because it takes little time to ‘check and set’ the lock code, we can disable interrupts for this period. However, this is not in itself a complete solution: because there is a chance that an interrupt may have occurred even in the short period of ‘check and set’, we may then need to check the relevant interrupt flag(s) and, if necessary, call the relevant ISR(s). This can be done, but it adds to the complexity of the operating environment.