8.2 Bridge-Based Bus Architectures

From a logical viewpoint, all of the system components in the previous section are connected directly to the system bus. From an operational viewpoint, this approach is overly burdensome on the system bus because simultaneous transfers cannot take place among the various components. While not every device needs the bus at the same time, several independent transfers may need to take place at any time. For example, a graphics component may be repainting a video screen at the same time that a cache line is being retrieved from main memory, while an I/O transfer is taking place over a network.

These different transfers are typically segregated onto separate busses through the use of bridges. Figure 8-7 illustrates bridging with Intel’s Pentium II Xeon processors. At the top of the diagram are two Pentium II processors, arranged in a symmetric multiprocessor (SMP) configuration. The operating system performs load balancing by selecting one processor over another when assigning tasks. (This is different from parallel processing, discussed in Chapter 10, in which multiple processors work on a common problem.) Each Pentium II processor has a 3200 MB/sec “backside bus” (8 bytes wide × 400 MHz) to its own cache, thus segregating cache traffic from other bus traffic.

Working down from the top of the diagram, the two Pentium II processors converge on the System Bus (sometimes called the “frontside bus”). The System Bus is 32 bits wide and makes transfers on both the rising and falling edges of the 100 MHz bus clock, giving a total available bandwidth of 4 bytes × 2 edges × 100 MHz = 800 MB/sec that is shared between the processors.
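The bandwidth figures quoted for the backside bus and the System Bus both follow from the same product of width, transfers per clock, and clock rate. The sketch below simply restates that arithmetic; the function name `bus_bandwidth_mb_per_sec` is hypothetical and introduced only for illustration.

```python
def bus_bandwidth_mb_per_sec(width_bytes, clock_mhz, transfers_per_clock=1):
    """Peak bandwidth in MB/sec: bytes per transfer x transfers per clock x MHz."""
    return width_bytes * transfers_per_clock * clock_mhz


# Backside bus: 8 bytes wide, 400 MHz, one transfer per clock.
backside = bus_bandwidth_mb_per_sec(8, 400)

# System Bus: 4 bytes wide, 100 MHz, transfers on both clock edges.
system_bus = bus_bandwidth_mb_per_sec(4, 100, transfers_per_clock=2)

print(backside, system_bus)  # 3200 800
```

These are peak figures; sustained throughput on a shared bus would be lower once arbitration and contention are taken into account.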

At the center of the diagram is the Intel 440GX AGPset “Host Bridge” which connects the System Bus to the remaining busses. The Host Bridge acts as a go-between among the System Bus, the main memory, the graphics processor, and a hierarchy of other busses. To the right of the Host Bridge is the main memory (synchronous DRAM), connected to the Host Bridge by an 800 MB/sec bus.

In this particular example, a separate 533 MB/sec bus known as the Accelerated Graphics Port (AGP) runs from the Host Bridge to the graphics processor. Graphics rendering (that is, filling an object with color) commonly needs texture information that is too large to economically place on a graphics card. The AGP allows for a high speed path between the graphics processor and the main memory, where texture maps can now be effectively stored.

Below the Host Bridge is the 33 MHz Peripheral Component Interconnect (PCI) bus, which connects the remaining busses to the Host Bridge. The PCI bus has a number of components connected to it, such as the Small Computer System Interface (SCSI) controller which is yet another bus, that in this illustration accepts an Ethernet network interface card. Prior to the introduction of the AGP, graphics cards were placed on the PCI bus, which created a bottleneck for all of the other bus traffic.

Attached to the PCI bus is a PCI-to-ISA bridge, which provides bridging for two 1.5 MB/sec Universal Serial Bus (USB) busses, two 33 MB/sec Integrated Drive Electronics (IDE) busses, and a 16.7 MB/sec Industry Standard Architecture (ISA) bus. The IDE busses are generally used for disk drives, the ISA bus is generally used for moderate rate devices like printers and voice-band modems, and the USB busses are used for low bit rate devices like mice and snapshot digital cameras.
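One way to picture the hierarchy described above is as a tree of busses, in which the slowest bus on the path between a device and the processors limits the peak transfer rate. The following is a simplified sketch, not a model of the actual chipset: the dictionary names are hypothetical, the SCSI bus is omitted, and the PCI bandwidth is an assumption (4 bytes × 33 MHz) because the text gives only the PCI clock rate.

```python
# Peak bandwidths in MB/sec, taken from the text except where noted.
BUS_BANDWIDTH = {
    "system": 800,
    "memory": 800,
    "agp": 533,
    "pci": 132,   # ASSUMPTION: 4 bytes x 33 MHz; the text states only 33 MHz
    "ide": 33,
    "isa": 16.7,
    "usb": 1.5,
}

# For each bus, the next bus on the way up to the processors.
# The Host Bridge and the PCI-to-ISA bridge are implied by the links.
PARENT = {
    "system": None,
    "memory": "system",
    "agp": "system",
    "pci": "system",
    "ide": "pci",
    "isa": "pci",
    "usb": "pci",
}


def path_to_cpu(bus):
    """List the busses crossed from the given bus up to the System Bus."""
    path = []
    while bus is not None:
        path.append(bus)
        bus = PARENT[bus]
    return path


def bottleneck(bus):
    """Peak rate of the slowest bus between the given bus and the processors."""
    return min(BUS_BANDWIDTH[b] for b in path_to_cpu(bus))


print(path_to_cpu("usb"))   # ['usb', 'pci', 'system']
print(bottleneck("ide"))    # 33
```

The sketch also makes it easy to see why moving graphics traffic off the PCI bus helps: the AGP path reaches the Host Bridge without sharing the PCI bus with the disk and peripheral traffic.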

8.3 Communication Methodologies

Computer systems have a wide range of communication tasks. The CPU must communicate with memory and with a wide range of I/O devices, from extremely slow devices such as keyboards, to high-speed devices like disk drives and network interfaces. There may be multiple CPUs that communicate with one another either directly or through a shared memory, as described in the previous section for the dual Pentium II Xeon configuration.

Three methods for managing input and output are programmed I/O (also known as polling), interrupt-driven I/O, and direct memory access (DMA).

8.3.1 PROGRAMMED I/O

Consider reading a block of data from a disk. In programmed I/O, the CPU polls each device to see if it needs servicing. In a restaurant analogy, the host would approach the patron and ask if the patron is ready.

The operations that take place for programmed I/O are shown in the flowchart in Figure 8-8. The CPU first checks the status of the disk by reading a special register that can be accessed in the memory space, or by issuing a special I/O instruction if this is how the architecture implements I/O. If the disk is not ready to be read or written, then the process loops back and checks the status continuously until the disk is ready. This is referred to as a busy-wait. When the disk is finally ready, then a transfer of data is made between the disk and the CPU.

After the transfer is completed, the CPU checks to see if there is another communication request for the disk. If there is, then the process repeats; otherwise the CPU continues with another task.
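The polling loop described above can be sketched with a simulated device. The class `SimulatedDisk`, its methods, and the number of polls before the device becomes ready are all hypothetical; a real system would read a memory-mapped status register or issue an I/O instruction instead.

```python
class SimulatedDisk:
    """Hypothetical disk that reports 'ready' only after a few status polls."""

    def __init__(self, words, polls_until_ready=3):
        self.words = list(words)
        self.polls_until_ready = polls_until_ready
        self._countdown = polls_until_ready

    def read_status(self):
        """Model a read of the status register: True when a word is ready."""
        if self._countdown > 0:
            self._countdown -= 1
            return False
        return True

    def read_data(self):
        """Transfer one word to the CPU; the device then becomes busy again."""
        self._countdown = self.polls_until_ready
        return self.words.pop(0)

    def has_more(self):
        """True while another transfer is still requested."""
        return bool(self.words)


def programmed_io_read(disk):
    """Read every word using programmed I/O, counting the wasted polls."""
    buffer, wasted_polls = [], 0
    while disk.has_more():               # another communication request?
        while not disk.read_status():    # busy-wait until the disk is ready
            wasted_polls += 1
        buffer.append(disk.read_data())  # transfer between disk and CPU
    return buffer, wasted_polls


data, wasted = programmed_io_read(SimulatedDisk([10, 20, 30]))
print(data, wasted)  # [10, 20, 30] 9
```

In this sketch nine status reads are spent waiting in order to move three words, which illustrates the cost of the busy-wait.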

In programmed I/O the CPU wastes time polling devices. Another problem is that high priority devices are not checked until the CPU is finished with its current I/O task, which may have a low priority. Programmed I/O is simple to implement, however, and so it has advantages in some applications.

8.3.2 INTERRUPT-DRIVEN I/O

With interrupt-driven I/O, the CPU does not access a device until it needs servicing, and so it does not get caught up in busy-waits. In interrupt-driven I/O, the device requests service through a special interrupt request line that goes directly to the CPU. The restaurant analogy would have the patron politely tapping silverware on a water glass, thus interrupting the waiter when service is required.

A flowchart for interrupt-driven I/O is shown in Figure 8-9. The CPU issues a request to the disk for reading or for writing, and then immediately resumes execution of another process. At some later time, when the disk is ready, it interrupts the CPU. The CPU then invokes an interrupt service routine (ISR) for the disk, and returns to normal execution when the interrupt service routine completes its task. The ISR is similar in structure to the procedure presented in Chapter 4, except that interrupts occur asynchronously with respect to the process being executed by the CPU: an interrupt can occur at any time during program execution.
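The sequence of events in interrupt-driven I/O can be sketched as a small simulation. Everything here is an illustrative assumption: the class `InterruptingDisk`, the `irq` attribute standing in for the interrupt request line, the fixed latency, and the convention that the request line is sampled after each instruction.

```python
class InterruptingDisk:
    """Hypothetical disk that raises an interrupt when its data is ready."""

    def __init__(self, latency):
        self.latency = latency    # time steps between request and completion
        self.remaining = None     # None means no request is in progress
        self.pending_value = None
        self.data = None
        self.irq = False          # models the interrupt request line

    def start_read(self, value):
        """The CPU issues a request and returns immediately."""
        self.remaining = self.latency
        self.pending_value = value

    def tick(self):
        """Advance the device by one time step."""
        if self.remaining is not None:
            self.remaining -= 1
            if self.remaining == 0:
                self.data = self.pending_value
                self.irq = True   # the disk interrupts the CPU
                self.remaining = None


def run_cpu(disk, steps):
    """Run another process for some steps, servicing the disk when it interrupts."""
    log, received = [], []

    def disk_isr():
        received.append(disk.data)   # the ISR makes the actual transfer
        disk.irq = False             # acknowledge the interrupt
        log.append("isr")

    disk.start_read(42)
    for _ in range(steps):
        log.append("work")           # one instruction of the other process
        disk.tick()
        if disk.irq:                 # request line sampled between instructions
            disk_isr()
    return log, received


log, received = run_cpu(InterruptingDisk(latency=3), steps=5)
print(log)       # ['work', 'work', 'work', 'isr', 'work', 'work']
print(received)  # [42]
```

Unlike the busy-wait, every step before the interrupt is spent on useful work, and the ISR runs only once the disk signals that it is ready.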

There are times when a process being executed by the CPU should not be interrupted because some critical operation is taking place. For this reason, instruction sets include instructions to disable and enable interrupts under programmed control. (The waiter can ignore the patron at times.) Whether or not interrupts are accepted is generally determined by the state of the Interrupt Flag (IF), which is part of the Processor Status Register. Furthermore, in most systems priorities are assigned to the interrupts, enforced either by the processor or by a peripheral interrupt controller (PIC). (The waiter may attend to the head table first.) At the top priority level in many systems, there is a nonmaskable interrupt (NMI) which, as the name implies, cannot be disabled. (The waiter will in all cases pay attention to the fire alarm!) The NMI is used for handling potentially catastrophic events such as power failures, and more ordinary but crucially uninterruptible operations such as file system updates.
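The interplay of the interrupt flag, priorities, and the nonmaskable interrupt can be sketched as follows. This is a minimal model under stated assumptions: the class `InterruptController`, its method names, and the convention that a larger number means a higher priority are hypothetical and do not describe any particular processor or controller.

```python
NMI = "nmi"   # hypothetical name for the nonmaskable interrupt source


class InterruptController:
    """Toy model of interrupt masking and priority selection."""

    def __init__(self):
        self.interrupt_flag = True   # IF set: maskable interrupts are accepted
        self.pending = {}            # source name -> priority (larger is higher)

    def disable_interrupts(self):
        self.interrupt_flag = False

    def enable_interrupts(self):
        self.interrupt_flag = True

    def request(self, source, priority=0):
        """A device asserts its interrupt request."""
        self.pending[source] = priority

    def next_interrupt(self):
        """Return the source to service next, or None if nothing is accepted."""
        if NMI in self.pending:          # the NMI bypasses the interrupt flag
            del self.pending[NMI]
            return NMI
        if not self.interrupt_flag or not self.pending:
            return None
        source = max(self.pending, key=self.pending.get)
        del self.pending[source]
        return source


pic = InterruptController()
pic.request("keyboard", priority=1)
pic.request("disk", priority=5)

pic.disable_interrupts()
print(pic.next_interrupt())   # None: maskable requests wait while IF is clear

pic.request(NMI)
print(pic.next_interrupt())   # nmi: accepted even though interrupts are disabled

pic.enable_interrupts()
print(pic.next_interrupt())   # disk: the highest pending priority goes first
```

Requests that arrive while interrupts are disabled are not lost in this sketch; they remain pending and are serviced in priority order once interrupts are enabled again.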

At the time when an interrupt occurs (which is sometimes loosely referred to as a trap, even though traps usually have a different meaning, as explained in Chapter 6), the Processor Status Register and the Program Counter (%psr and %pc for the ARC) are automatically pushed onto the stack, and the Program Counter is loaded with the address of the appropriate interrupt service routine. The processor status register is pushed onto the stack because it contains the interrupt flag (IF), and the processor must disable interrupts for at least the duration of the first instruction of the ISR. (See problem 8.2.) Execution of the interrupt routine then begins. When the interrupt service routine finishes, execution of the interrupted program then resumes.

The ARC jmpl instruction (see Chapter 4) will not work properly for resuming execution of the interrupted routine, because in addition to restoring the program counter contents, the processor status register must be restored. Instead, the rett (return from trap) instruction is invoked, which reverses the interrupt process and restores the %psr and %pc registers to their values prior to the interrupt. In the ARC architecture, rett is an arithmetic format instruction with op3 = 111001, and an unused rd field (all zeros).
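The save and restore of processor state around an interrupt can be sketched with a toy CPU model. The class `Cpu`, the representation of %psr as a dictionary holding only the interrupt flag, and the example addresses are assumptions made for illustration; they are not the ARC register layout.

```python
class Cpu:
    """Toy model of the state saved and restored around an interrupt."""

    def __init__(self):
        self.pc = 0
        self.psr = {"IF": True}   # only the interrupt flag is modeled here
        self.stack = []

    def take_interrupt(self, isr_address):
        """Hardware response to an accepted interrupt."""
        self.stack.append(dict(self.psr))   # push a copy of %psr
        self.stack.append(self.pc)          # push %pc
        self.psr["IF"] = False              # disable further interrupts on entry
        self.pc = isr_address               # begin the interrupt service routine

    def rett(self):
        """Return from trap: restore both %pc and %psr."""
        self.pc = self.stack.pop()
        self.psr = self.stack.pop()


cpu = Cpu()
cpu.pc = 2064                    # hypothetical address in the interrupted program
cpu.take_interrupt(4096)         # hypothetical ISR address
print(cpu.pc, cpu.psr["IF"])     # 4096 False
cpu.rett()
print(cpu.pc, cpu.psr["IF"])     # 2064 True
```

A plain jump back to the saved address would restore only `pc` in this model and leave the interrupt flag cleared, which is the reason a dedicated return instruction is needed.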

8.3.3 DIRECT MEMORY ACCESS (DMA)

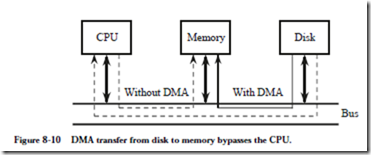

Although interrupt-driven I/O frees the CPU until the device requires service, the CPU is still responsible for making the actual data transfer. Figure 8-10 highlights the problem. In order to transfer a block of data between the memory and the disk using either programmed I/O or interrupt-driven I/O, every word travels over the system bus (or equivalently, through the Host Bridge) twice: first to the CPU, then again over the system bus to its destination.

A DMA device can transfer data directly to and from memory, rather than using the CPU as an intermediary, and can thus relieve congestion on the system bus. In keeping with the restaurant analogy, the host serves everyone at one table before serving anyone at another table. DMA services are usually provided by a DMA controller, which is itself a specialized processor whose specialty is transferring data directly to or from I/O devices and memory. Most DMA controllers can also be programmed to make memory-to-memory block moves. A DMA device thus takes over the job of the CPU during a transfer. In setting up the transfer, the CPU programs the DMA device with the starting address in main memory, the starting address in the device, and the length of the block to be transferred.
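The difference in bus traffic between a CPU-mediated transfer and a DMA transfer can be sketched by counting bus crossings. The names `cpu_mediated_copy` and `DmaController` are hypothetical, the device and memory are modeled as plain lists, and the three values passed to `program` correspond to the three setup parameters named above.

```python
def cpu_mediated_copy(device, memory, mem_start, dev_start, length):
    """Move a block through the CPU; each word crosses the bus twice."""
    bus_transfers = 0
    for i in range(length):
        word = device[dev_start + i]    # device -> CPU
        bus_transfers += 1
        memory[mem_start + i] = word    # CPU -> memory
        bus_transfers += 1
    return bus_transfers


class DmaController:
    """Toy DMA controller that moves words directly from device to memory."""

    def __init__(self, device, memory):
        self.device = device
        self.memory = memory
        self.mem_start = self.dev_start = self.length = 0

    def program(self, mem_start, dev_start, length):
        """Setup performed by the CPU before the transfer begins."""
        self.mem_start = mem_start
        self.dev_start = dev_start
        self.length = length

    def run(self):
        """Perform the transfer; each word crosses the bus once."""
        for i in range(self.length):
            self.memory[self.mem_start + i] = self.device[self.dev_start + i]
        return self.length


device = list(range(100, 108))
memory_a = [0] * 16
memory_b = [0] * 16

via_cpu = cpu_mediated_copy(device, memory_a, mem_start=4, dev_start=2, length=3)

dma = DmaController(device, memory_b)
dma.program(mem_start=4, dev_start=2, length=3)
via_dma = dma.run()

print(via_cpu, via_dma)   # 6 3
print(memory_b[4:7])      # [102, 103, 104]
```

Both approaches leave the same data in memory, but the DMA version uses half as many bus transfers and does not occupy the CPU while the block is being moved.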

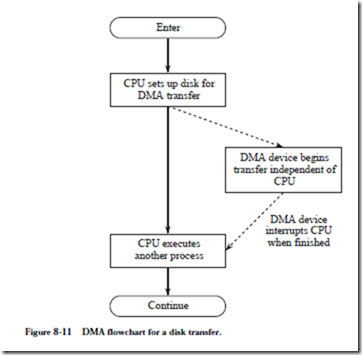

Figure 8-11 illustrates the DMA process for a disk transfer. The CPU sets up the DMA device and then signals the device to start the transfer. While the transfer is taking place, the CPU continues execution of another process. When the DMA transfer is completed, the device informs the CPU through an interrupt. A system that implements DMA thus also implements interrupts.

If the DMA device transfers a large block of data without relinquishing the bus, the CPU may become starved for instructions or data, and thus its work is halted until the DMA transfer has completed. In order to alleviate this problem, DMA controllers usually have a “cycle-stealing” mode. In cycle-stealing DMA the controller acquires the bus, transfers a single byte or word, and then relinquishes the bus. This allows other devices, and in particular the CPU, to share the bus during DMA transfers. In the restaurant analogy, a patron can request a check while the host is serving another table.
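The effect of cycle stealing on bus sharing can be sketched by comparing two bus schedules. This is a deliberately simple model with assumptions stated up front: one bus cycle moves one word, the CPU wants the bus whenever it can get it, and the function names `bus_schedule` and `longest_cpu_stall` are hypothetical.

```python
def bus_schedule(dma_words, cpu_accesses, cycle_stealing):
    """Return the sequence of bus owners, one entry per bus cycle."""
    schedule = []
    while dma_words > 0 or cpu_accesses > 0:
        if dma_words > 0:
            # Cycle stealing releases the bus after one word;
            # otherwise the controller holds it for the whole block.
            burst = 1 if cycle_stealing else dma_words
            schedule.extend(["DMA"] * burst)
            dma_words -= burst
        if cpu_accesses > 0:
            schedule.append("CPU")
            cpu_accesses -= 1
    return schedule


def longest_cpu_stall(schedule):
    """Longest run of consecutive cycles in which the CPU is kept off the bus."""
    longest = current = 0
    for owner in schedule:
        current = current + 1 if owner == "DMA" else 0
        longest = max(longest, current)
    return longest


block = bus_schedule(dma_words=4, cpu_accesses=4, cycle_stealing=False)
stolen = bus_schedule(dma_words=4, cpu_accesses=4, cycle_stealing=True)

print(block)    # ['DMA', 'DMA', 'DMA', 'DMA', 'CPU', 'CPU', 'CPU', 'CPU']
print(stolen)   # ['DMA', 'CPU', 'DMA', 'CPU', 'DMA', 'CPU', 'DMA', 'CPU']
print(longest_cpu_stall(block), longest_cpu_stall(stolen))   # 4 1
```

The total number of bus cycles is the same in both schedules; what changes is that the CPU never waits more than one cycle when the controller steals cycles instead of holding the bus for the whole block.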