Chapter 11: Network Organization and Architecture

11.1 Introduction

—Sun Microsystems, Inc.

Sun Microsystems launched a major advertising campaign in the 1980s with the catchy slogan that opens this chapter. A couple of decades ago, its pitch was surely more sizzle than steak, but it was like a voice in the wilderness, heralding today’s wired world with the Web at the heart of global commerce. Standalone business computers are now obsolete and irrelevant.

This chapter will introduce you to the vast and complex arena of data communications with a particular focus on the Internet. We will look at architectural models (network protocols) from a historical point of view, a theoretical point of view, and a practical point of view. Once you have an understanding of how a network operates, you will learn about many of the components that constitute network organization. Our intention is to give you a broad view of the technologies and terminology that every computer professional will encounter at some time during his or her career. To understand the computer is to also understand the network.

11.2 Early Business Computer Networks

Today’s computer networks evolved along two different paths. One path was directed toward enabling fast and accurate business transactions, whereas the other was aimed at facilitating collaboration and knowledge sharing in the academic and scientific communities.

Digital networks of all varieties aspire to share computer resources in the simplest, fastest, and most cost-effective manner possible. The more costly the computer, the stronger the motivation to share it among as many users as possible. In the 1950s, when most computers cost millions of dollars, only the wealthiest companies could afford more than one system. Of course, employees in remote locations had as much need for computer resources as their central office counterparts, so some method of getting them connected had to be devised. And virtually every vendor had a different connectivity solution. The most dominant of these vendors was IBM with its Systems Network Architecture (SNA). This communications architecture, with modifications, has persisted for over three decades.

IBM’s SNA is a specification for end-to-end communication between physical devices (called physical units, or PUs) over which logical sessions (known as logical units, or LUs) take place. In the original architecture, the physical components of this system consisted of terminals, printers, communications controllers, multiplexers, and front-end processors. Front-end processors sat between the host (mainframe) system and the communications lines. They managed all of the communications overhead including polling each of the communications controllers, which in turn polled each of their attached terminals. This architecture is shown in Figure 11.1.

IBM’s SNA was geared toward high-speed transaction entry and customer service inquiries. Even at the modest line speed of 9600bps (bits per second), access to data on the host was nearly instantaneous when all network components were functioning properly under normal loads. The speed of this architecture, however, came at the expense of flexibility and interoperability. The human overhead in managing and supporting these networks was enormous, and connections to other vendors’ equipment and networks were often laudable feats of software and hardware engineering. Over the past 30 years, SNA has adapted to changing business needs and networking environments, but the underlying concepts are essentially what they were decades ago. In fact, this architecture was so well designed that aspects of it formed the foundation for the definitive international communications architecture, OSI, which we discuss in Section 11.4. Although SNA contributed much to the young science of data communications, the technology has just about run its course. In most installations, it has been replaced by “open” Internet protocols.

11.3 Early Academic and Scientific Networks — The Roots and Architecture of the Internet

Amid the angst of the Cold War, American scientists at far-flung research institutions toiled under government contracts, seeking to preserve the military ascendancy of the United States. At a time when we had fallen behind in the technology race, the United States government created an organization called the Advanced Research Projects Agency (ARPA). The sophisticated computers this organization needed to carry out its work, however, were scarce and extremely costly—even by Pentagon standards. Before long, it occurred to someone that by establishing communication links into the few supercomputers that were scattered all over the United States, computational resources could be shared by innumerable like-minded researchers. Moreover, this network would be designed with sufficient redundancy to provide for continuous communication, even if thermonuclear war knocked out a large number of nodes or communication lines. To this end, in December 1968, a Cambridge, Massachusetts, consulting firm called BBN (Bolt, Beranek and Newman, now Genuity Corporation) was awarded the contract to construct such a network. In December 1969, four nodes, the University of Utah, the University of California at Los Angeles, the University of California at Santa Barbara, and the Stanford Research Institute, went online. ARPAnet gradually expanded to include more government and research institutions. When ARPA was renamed the Defense Advanced Research Projects Agency (DARPA) in 1972, ARPAnet became DARPAnet. Through the early 1980s, nodes were added at a rate of a little more than one per month. However, military researchers eventually abandoned DARPAnet in favor of more secure channels.

In 1985, the National Science Foundation established its own network, NSFnet, to support its scientific and academic research. NSFnet and DARPAnet served a similar purpose and a similar user community, but the capabilities of NSFnet outstripped those of DARPAnet. Consequently, when the military abandoned DARPAnet, NSFnet absorbed it, and became what we now know as the Internet. By the early 1990s, the NSF had outgrown NSFnet, so it began building a faster, more reliable NSFnet. Administration of the public Internet then fell to private national and regional corporations, such as Sprint, MCI, and PacBell, to name a few. These companies bought the NSFnet trunk lines, called backbones, and made money by selling backbone capacity to various Internet service providers (ISPs).

The original DARPAnet (and now the Internet) would have survived thermonuclear war because, unlike all other networks in existence in the 1970s, it had no dedicated connections between systems. Information was instead routed along whatever pathways were available. Parts of the data stream belonging to a single dialogue could take different routes to their destinations. The key to this robustness is the idea of datagram message packets, which carry data in chunks instead of the streams used by the SNA model. Each datagram contains addressing information so that every datagram can be routed as a single, discrete unit.

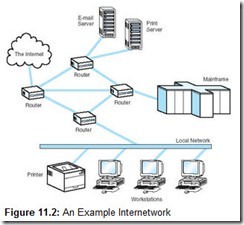

A second revolutionary aspect of DARPAnet was that it created a uniform protocol for communications between dissimilar hosts along networks of differing speeds. Because it connected many different kinds of networks, DARPAnet was said to be an internetwork. As originally specified, each host computer connected to DARPAnet by way of an Interface Message Processor (IMP). IMPs took care of protocol translation from the language of DARPAnet to the communications language native to the host system, so any communications protocol could be used between the IMP and the host. Today, routers (discussed in Section 11.6.7) have replaced IMPs, and the communications protocols are less heterogeneous than they were in the 1970s. However, the underlying principles have remained the same, and the generic concept of internetworking has become practically synonymous with the Internet. A modern internetwork configuration is shown in Figure 11.2. The diagram shows how four routers form the heart of the network. They connect many different types of equipment, making decisions on their own as to how datagrams should get to their destinations in the most efficient way possible.

The Internet is much more than a set of good data communication specifications. It is, perhaps, a philosophy. The foremost principle of this philosophy is the idea of a free and open world of information sharing, with the destiny of this world being shaped collaboratively by the people and ideas in it. The epitome of this openness is the manner in which Internet standards are created. Internet standards are formulated through a democratic process that takes place under the auspices of the Internet Architecture Board (IAB), which itself operates under the oversight of the not-for-profit Internet Society (ISOC). The Internet Engineering Task Force (IETF), operating within the IAB, is a loose alliance of industry experts that develops detailed specifications for Internet protocols. The IETF publishes all proposed standards in the form of Requests for Comments (RFCs), which are open to anyone’s scrutiny and comment. The two most important RFCs—RFC 791 (Internet Protocol Version 4) and RFC 793 (Transmission Control Protocol)—form the foundation of today’s global Internet.

The organization of all the ISOC’s committees under more committees could have resulted in a tangle of bureaucracy producing inscrutable and convoluted specifications. But owing to the openness of the entire process, as well as the talents of the reviewers, RFCs are among the clearest and most readable documents in the entire body of networking literature. It is little wonder that manufacturers were so quick to adopt Internet protocols. Internet protocols are now running on all sizes of networks, both publicly and privately owned. Formerly, networking standards were handed down by a centralized committee or through an equipment vendor. One such approach resulted in the ISO/OSI protocol model, which we discuss next.

11.5 Network Protocols II — TCP/IP Network Architecture

While the ISO and the CCITT were haggling over the finer points of the perfect protocol stack, TCP/IP was rapidly spreading across the globe. By the sheer weight of its popularity within the academic and scientific communications communities, TCP/IP quietly became the de facto global data communication standard.

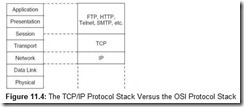

Although it didn’t start out that way, TCP/IP is now a lean and effective protocol stack. It has three layers that can be mapped to five of the seven layers in the OSI model. These layers are shown in Figure 11.4. Because the IP layer is loosely coupled with OSI’s Data Link and Physical layers, TCP/IP can be used with any type of network, even different types of networks within a single session. The singular requirement is that all of the participating networks must be running—at minimum—Version 4 of the Internet Protocol (IPv4).

There are two versions of the Internet Protocol in use today, Version 4 and Version 6. IPv6 addresses many of the limitations of IPv4. Despite the many advantages of IPv6, the huge installed base of IPv4 ensures that it will be supported for many years to come. Some of the major differences between IPv4 and IPv6 are outlined in Section 11.5.5. But first, we take a detailed look at IPv4.

11.5.1 The IP Layer for Version 4

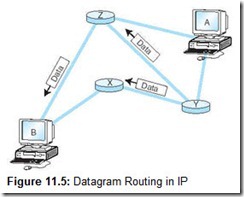

The IP layer of the TCP/IP protocol stack provides essentially the same services as the Network and Data Link layers of the OSI Reference Model: It divides TCP packets into protocol data units called datagrams, and then attaches the routing information required to get the datagrams to their destinations. The concept of the datagram was fundamental to the robustness of ARPAnet, and now, the Internet. Datagrams can take any route available to them without intervention by a human network manager. Take, for example, the network shown in Figure 11.5. If intermediate node X becomes congested or fails, intermediate node Y can route datagrams through node Z until X is back up to full speed. Routers are the Internet’s most critical components, and researchers are continually seeking ways to improve their effectiveness and performance. We look at routers in detail in Section 11.6.7.

The bytes that constitute any of the TCP/IP protocol data units are called octets. This is because at the time the ARPAnet protocols were being designed, the word byte was thought to be a proprietary term for the 8-bit groups used by IBM mainframes. Most TCP/IP literature uses the word octet, but we use byte for the sake of clarity.


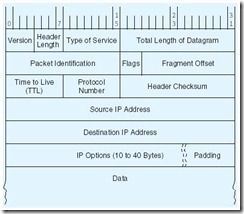

The IP Version 4 Datagram Header

Each IPv4 datagram must contain at least 40 bytes, which include a 24-byte header as shown above. The horizontal rows represent 32-bit words. Upon inspection of the figure, you can see, for example, that the Type of Service field occupies bits 8 through 15, while the Packet Identification field occupies bits 32 through 47 of the header. The Padding field shown as the last field of the header assures that the data that follows the header starts on an even 32-bit boundary. The Padding always contains zeroes. The other fields in the IPv4 header are:

- Version—Specifies the IP protocol version being used. The version number tells all of the hardware along the way the length of the datagram and what content to expect in its header fields. For IPv4, this field is always 0100 (because $0100_2 = 4_{10}$).

- Header Length—Gives the length of the header in 32-bit words. The size of the IP header is variable, depending on the value of the IP Options fields, but the minimum value for a correct header is 5.

- Type of Service—Controls the priority that the datagram is given by intermediate nodes. Values can range from “routine” (000) to “critical” (101). Network control datagrams are indicated with 110 and 111.

- Total Length—Gives the length of the entire IP datagram in bytes. As you can see by the layout above, 2 bytes are reserved for this purpose. Hence, the largest allowable IP datagram is $2^{16} - 1$, or 65,535, bytes.

- Packet ID—Each datagram is assigned a serial number as it is placed on the network. The combination of Host ID and Packet ID uniquely identifies each IP datagram in existence at any time in the world.

- Flags—Specify whether the datagram may be fragmented (broken into smaller datagrams) by intermediate nodes. IP networks must be able to handle datagrams of at least 576 bytes. Most IP networks can deal with packets that are about 8KB long. With the “Don’t Fragment” bit set, an 8KB datagram will not be routed over a network that says it can handle only 2KB packets, for example.

- Fragment Offset—Indicates the location of a fragment within a certain datagram. That is, it tells which part of the datagram the fragment came from.

- Time to Live (TTL)—TTL was originally intended to measure the number of seconds for which the datagram would remain valid. Should a datagram get caught in a routing loop, the TTL would (theoretically) expire before the datagram could contribute to a congestion problem. In practice, the TTL field is decremented each time the datagram passes through an intermediate network node, so this field does not really measure the number of seconds that a packet lives, but the number of hops it is allowed before it reaches its destination.

- Protocol Number—Indicates which higher-layer protocol is sending the data that follows the header. Some of the important values for this field are:

  - 0 = Reserved
  - 1 = Internet Control Message Protocol (ICMP)
  - 6 = Transmission Control Protocol (TCP)
  - 17 = User Datagram Protocol (UDP)

  TCP is described in Section 11.5.3.

- Header Checksum—This field is calculated by first setting the checksum field itself to all zeroes, then calculating the one’s complement sum of all 16-bit words in the header, and finally taking the one’s complement of this sum. The one’s complement sum is the arithmetic sum of two of the words with the (seventeenth) carry bit added to the lowest bit position of the sum. (See Section 2.4.2.) For example, using 8-bit words for brevity, 11110011 + 10011010 = 110001101, which becomes 10001110 once the carry bit is added back in using one’s complement arithmetic. What this means is that if we have an IP datagram of the form shown to the right, each $w_i$ is a 16-bit word in the IP datagram header. The complete checksum would be computed over two 16-bit words at a time: $w_1 + w_2 = S_1$; $S_1 + w_3 = S_2$; $\ldots$; $S_k + w_{k+2} = S_{k+1}$.

- Source and Destination Addresses—Tell where the datagram came from and where it is going. We have much more to say about these 32-bit fields in Section 11.5.2.

- IP Options—Provide diagnostic information and routing controls. IP Options are, well, optional.
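The header layout and checksum rule described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a 20-byte header with no IP Options; the function names (`ones_complement_add`, `ipv4_checksum`, `build_header`, `parse_header`) are hypothetical and chosen only for this example.

```python
import struct

HEADER_FORMAT = "!BBHHHBBH4s4s"  # 20-byte IPv4 header without options


def ones_complement_add(a: int, b: int, bits: int = 16) -> int:
    """Add two words and fold the carry bit back into the lowest bit position."""
    mask = (1 << bits) - 1
    total = a + b
    return (total & mask) + (total >> bits)


def ones_complement_sum16(data: bytes) -> int:
    """One's complement sum of all 16-bit big-endian words in data."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total = ones_complement_add(total, word)
    return total


def ipv4_checksum(header: bytes) -> int:
    """Checksum of a header whose checksum field is currently all zeroes."""
    return ~ones_complement_sum16(header) & 0xFFFF


def build_header(src: bytes, dst: bytes, payload_len: int,
                 proto: int = 6, ttl: int = 64, ident: int = 1) -> bytes:
    """Pack a minimal header (version 4, header length 5) and fill in its checksum."""
    version_ihl = (4 << 4) | 5
    header = struct.pack(HEADER_FORMAT, version_ihl, 0, 20 + payload_len,
                         ident, 0, ttl, proto, 0, src, dst)
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


def parse_header(header: bytes) -> dict:
    """Unpack the fixed fields and verify the checksum."""
    (ver_ihl, tos, total_len, ident, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack(HEADER_FORMAT, header[:20])
    header_words = ver_ihl & 0x0F
    return {
        "version": ver_ihl >> 4,
        "header_words": header_words,
        "total_length": total_len,
        "ttl": ttl,
        "protocol": proto,
        # A valid header sums to all ones when the checksum field is included.
        "checksum_ok": ones_complement_sum16(header[:header_words * 4]) == 0xFFFF,
        "source": ".".join(str(b) for b in src),
        "destination": ".".join(str(b) for b in dst),
    }
```

Calling `ones_complement_add(0b11110011, 0b10011010, bits=8)` reproduces the 8-bit example from the Header Checksum entry. A receiver can validate a header without zeroing the checksum field first, because summing every word, checksum included, yields all ones when nothing has been damaged.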


11.5.2 The Trouble with IP Version 4

The number of bytes allocated for each field in the IP header reflects the technological era in which IP was designed. Back in the ARPAnet years, no one could have imagined how the network would grow, or even that there would ever be a civilian use for it.

With the slowest networks of today being faster than the fastest networks of the 1960s, IP’s packet length limit of 65,535 bytes has become a problem. The packets simply move too fast for certain network equipment to be sure that the packet hasn’t been damaged between intermediate nodes. (At gigabit speeds, a 65,535-byte IP datagram passes over a given point in less than one millisecond.)
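As a quick check of the parenthetical figure, assume a line rate of exactly 1 Gbps ($10^9$ bits per second) and ignore any framing overhead added by lower layers. Under those assumptions, a maximum-size datagram passes a given point in roughly half a millisecond:

$$
t = \frac{65{,}535 \text{ bytes} \times 8 \text{ bits/byte}}{10^{9} \text{ bits/s}} \approx 5.2 \times 10^{-4} \text{ s} \approx 0.52 \text{ ms}
$$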

By far the most serious problem with IPv4 headers concerns addressing. Every host and router must have an address that is unique over the entire Internet. To assure that no Internet node duplicates the address of another Internet node, host IDs are administered by a central authority, the Internet Corporation for Assigned Names and Numbers (ICANN). ICANN keeps track of groups of IP addresses, which are subsequently allocated or assigned by regional authorities. (The ICANN also coordinates the assignment of parameter values used in protocols so that everyone knows which values evoke which behaviors over the Internet.)

As you can see by looking at the IP header shown in the sidebar, there are $2^{32}$, or about 4.3 billion, host IDs. It would be reasonable to think that there would be plenty of addresses to go around, but this is not the case. The problem lies in the fact that these addresses are not like serial numbers sequentially assigned to the next person who asks for one. It’s much more complicated than that.

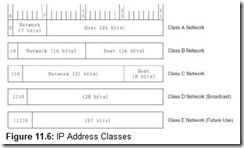

IP allows for three types, or classes, of networks, designated A, B, and C. They are distinguished from each other by the number of nodes (called hosts) that each can directly support. Class A networks can support the largest number of hosts; Class C, the least.

The leading bits of an IP address (at most the first three for Classes A through C) indicate the network class. Addresses for Class A networks always begin with 0, Class B with 10, and Class C with 110. The remaining bits in the address are devoted to the network number and the host ID within that network number, as shown in Figure 11.6.

IP addresses are 32-bit numbers expressed in dotted decimal notation, for example 18.7.21.69 or 146.186.157.6. Each of these decimal numbers represents 8 bits of binary information and can therefore have a decimal value between 0 and 255. 127.x.x.x is a Class A network but is reserved for loopback testing, which checks the TCP/IP protocol processes running on the host. During the loopback test no datagrams enter the network. The 0.0.0.0 network is typically reserved for use as the default route in the network.

Allowing for the reserved networks 0 and 127, only 126 Class A networks can be defined using a 7-bit network field. Class A networks are the largest networks of all, each able to support about 16.7 million nodes. Although it is unlikely that a Class A network would need all 16 million possible addresses, the Class A addresses, 1.0.0.0 through 126.255.255.255, were long ago assigned to early Internet adopters such as MIT and the Xerox Corporation. Furthermore, all of the 16,382 Class B network IDs (128.0.0.0 to 191.255.255.255) have also been assigned. Each Class B network can contain 65,534 unique node addresses. Because very few organizations need more than 100,000 addresses, their next choice is to identify themselves as Class C network owners, giving them only 256 addresses within the Class C space of 192.0.0.0 through 223.255.255.255. This is far fewer than would meet the needs of even a moderately sized company or institution. Thus, many networks have been unable to obtain a contiguous block of IP addresses so that each node on the network can have its own address on the Internet. A number of clever workarounds have been devised to deal with this problem, but the ultimate solution lies in reworking the entire IP address structure. We look at this new address structure in Section 11.5.6. (Classes D and E do exist, but they aren’t networks at all. Instead, they’re groups of reserved addresses. The Class D addresses, whose first byte is 224 through 239, are used for multicasting by groups of hosts that share a common characteristic. The Class E addresses, whose first byte is 240 through 255, are reserved for future use.)
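Because the class is encoded in the leading bits of the first byte, it can be determined with a few bit masks. The following is a minimal sketch of classful addressing only; the function names are hypothetical, and the host counts assume the usual convention that the all-zeroes and all-ones host IDs are reserved.

```python
def address_class(dotted: str) -> str:
    """Return the class (A through E) of a dotted decimal IPv4 address."""
    first = int(dotted.split(".")[0])
    if not 0 <= first <= 255:
        raise ValueError(f"first byte out of range: {first}")
    if first & 0b10000000 == 0b00000000:
        return "A"  # leading bit 0
    if first & 0b11000000 == 0b10000000:
        return "B"  # leading bits 10
    if first & 0b11100000 == 0b11000000:
        return "C"  # leading bits 110
    if first & 0b11110000 == 0b11100000:
        return "D"  # leading bits 1110 (multicast)
    return "E"      # leading bits 1111 (reserved)


def hosts_per_network(network_class: str) -> int:
    """Usable host IDs in one Class A, B, or C network."""
    host_bits = {"A": 24, "B": 16, "C": 8}[network_class]
    # The all-zeroes and all-ones host IDs are assumed to be reserved.
    return 2 ** host_bits - 2
```

For the two sample addresses given earlier, `address_class("18.7.21.69")` returns `"A"` and `address_class("146.186.157.6")` returns `"B"`. Note that `hosts_per_network("C")` returns 254 rather than 256, because the two reserved host IDs are excluded.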

In addition to the eventual depletion of address space, there are other problems with IPv4. Its original designers did not anticipate the growth of the Internet and the routing problems that would result from the address class scheme. There are typically 70,000-plus routes in the routing table of an Internet backbone router. The current routing infrastructure of IPv4 needs to be modified to reduce the number of routes that routers must store. As with cache memory, larger router memories result in slower routing information retrieval. There is also a definite need for security at the IP level. A protocol called IPSec (Internet Protocol Security) is currently defined for the IP level. However, it is optional and hasn’t been standardized or universally adopted.

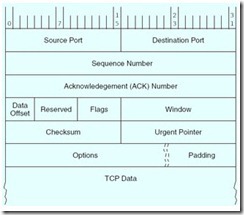

The TCP Segment Format

The TCP segment format is shown above. The numbers at the top of the figure are the bit positions spanned by each field. The horizontal rows represent 32-bit words. The fields are defined as follows:

- Source and Destination Ports—Specify interfaces to applications running above TCP. These applications are known to TCP by their port number.

- Sequence Number—Indicates the sequence number of the first byte of data in the payload. TCP assigns each transmitted byte a sequence number. If 100 data bytes will be sent 10 bytes at a time, the sequence number in the first segment might be 0, the second 10, the third 20, and so forth. The starting sequence number is not necessarily 0, so long as the number is unique between the sender and receiver.

- Acknowledgement Number—Contains the next data sequence number that the receiver is expecting. TCP uses this value to determine whether any datagrams have gotten lost along the way.

- Data Offset—Contains the number of 32-bit words in the header, or equivalently, the relative location of the word where the data starts within the segment. Also known as the header length.

- Reserved—These six bits must be zero until someone comes up with a good use for them.

- Flags—Contains six bits that are used mostly for protocol management. They are set to “true” when their values are nonzero. The TCP flags and their meanings are:

  - URG: Indicates that urgent data exists in this segment. The Urgent Pointer field (see below) points to the location of the first byte that follows the urgent information.
  - ACK: Indicates whether the Acknowledgement Number field (see above) contains significant information.
  - PSH: Tells all TCP processes involved in the connection to clear their buffers, that is, “push” the data to the receiver. This flag should also be set when urgent data exists in the payload.
  - RST: Resets the connection. Usually, it forces validation of all packets received and places the receiver back into the “listen for more data” state.
  - SYN: Indicates that the purpose of the segment is to synchronize sequence numbers. If the sender transmits [SYN, SEQ# = x], it should subsequently receive [ACK, ACK# = x + 1] from the receiver. At the time that two nodes establish a connection, both exchange their respective initial sequence numbers.
  - FIN: This is the “finished” flag. It lets the receiver know that the sender has completed transmission, having the effect of starting closedown procedures for the connection.

- Window—Allows both nodes to define the size of their respective data windows by stating the number of bytes that each is willing to accept within any single segment. For example, if the sender transmits bytes numbered 0 to 1023 and the receiver acknowledges with 1024 in the ACK# field and a window value of 512, the sender should reply by sending data bytes 1024 through 1535. (This may happen when the receiver’s buffer is starting to fill up so it requests that the sender slow down until the receiver catches up.) Notice that if the receiver’s application is running very slowly, say it’s pulling data 1 or 2 bytes at a time from its buffer, the TCP process running at the receiver should wait until the application buffer is empty enough to justify sending another segment. If the receiver sends a window size of 0, the effect is acknowledgement of all bytes up to the acknowledgement number, and to stop further data transmission until the same acknowledgement number is sent again with a nonzero window size.

- Checksum—This field contains the checksum over the fields in the TCP segment (except the data padding and the checksum itself), along with an IP pseudoheader consisting of the source and destination IP addresses, a zero byte, the protocol number, and the TCP segment length. As with the IP checksum explained earlier, the TCP checksum is the 16-bit one’s complement of the sum of all 16-bit words in the header and text of the TCP segment.

- Urgent Pointer—Points to the first byte that follows the urgent data. This field is meaningful only when the URG flag is set.

- Options—Concerns, among other things, negotiation of window sizes and whether selective acknowledgement (SACK) can be used. SACK permits retransmission of particular segments within a window as opposed to requiring the entire window to be retransmitted if a segment from somewhere in the middle gets lost. This concept will be clearer to you after our discussion of TCP flow control.
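To make the field layout concrete, the fixed 20-byte portion of a TCP header can be unpacked as sketched below. This is an illustration only, assuming the six-flag layout described above; the function name `parse_tcp_header` and the dictionary keys are hypothetical.

```python
import struct

# Flag names in order from the least significant bit of the flags field.
FLAG_NAMES = ["FIN", "SYN", "RST", "PSH", "ACK", "URG"]


def parse_tcp_header(segment: bytes) -> dict:
    """Unpack the fixed fields of a TCP segment header."""
    (src_port, dst_port, seq, ack, offset_flags,
     window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    data_offset = offset_flags >> 12   # header length in 32-bit words
    flag_bits = offset_flags & 0x3F    # low six bits hold the flags
    flags = [name for bit, name in enumerate(FLAG_NAMES) if flag_bits & (1 << bit)]
    return {
        "source_port": src_port,
        "destination_port": dst_port,
        "sequence_number": seq,
        "acknowledgement_number": ack,
        "data_offset": data_offset,
        "flags": flags,
        "window": window,
        "checksum": checksum,
        "urgent_pointer": urgent,
        "payload": segment[data_offset * 4:],  # data starts after the header
    }
```

The Data Offset value is what lets the parser skip any Options: the payload begins `data_offset * 4` bytes into the segment, regardless of how many option words are present.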


11.5.3 Transmission Control Protocol

The sole purpose of IP is to correctly route datagrams across the network. You can think of IP as a courier who delivers packages with no concern as to their contents or the order in which they are delivered. Transmission Control Protocol (TCP) is the consumer of IP services, and it does indeed care about these things as well as many others.

The protocol connection between two TCP processes is much more sophisticated than the one at the IP layer. Where IP simply accepts or rejects datagrams based only on header information, TCP opens a conversation, called a connection, with a TCP process running on a remote system. A TCP connection is very much analogous to a telephone conversation, with its own protocol “etiquette.” As part of initiating this conversation, TCP also opens a service access point, SAP, in the application running above it. In TCP, this SAP is a numerical value called a port. The combination of the port number, the host ID, and the protocol designation becomes a socket, which is logically equivalent to a file name (or handle) to the application running above TCP. Instead of accessing data by using its disk file name, the application using TCP reads data through the socket. Port numbers 0 through 1023 are called “well-known” port numbers because they are reserved for particular TCP applications. For example, the TCP/IP File Transfer Protocol (FTP) application uses ports 20 and 21. The Telnet terminal protocol uses port 23. Port numbers 1024 through 65,535 are available for user-defined implementations.
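The file-handle analogy can be seen directly in code. The sketch below, which assumes a host with a working loopback interface, opens a listening socket and a client socket on the same machine and passes a few bytes between them; the function name `loopback_exchange` is hypothetical.

```python
import socket


def loopback_exchange(message: bytes):
    """Send message over a TCP connection on the loopback address and return what arrives."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0 asks the OS to pick a free port
        listener.listen(1)
        server_addr = listener.getsockname()  # (host ID, port number)
        with socket.create_connection(server_addr) as client:
            conn, client_addr = listener.accept()
            with conn:
                client.sendall(message)          # write through the socket
                data = conn.recv(len(message))   # read through the socket
    return data, server_addr, client_addr
```

Each end of the connection is identified by a host ID and port number pair, and the application reads and writes through the socket object much as it would through an open file. The example is kept to short messages, since a single `recv` call is not guaranteed to return a long message in one piece.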

TCP makes sure that the stream of data it provides to the application is complete, in its proper sequence, and with no duplicated data. TCP also compensates for irregularities in the underlying network by making sure that its segments (data packets with headers) aren’t sent so fast that they overwhelm intermediate nodes or the receiver. A TCP segment requires at least 20 bytes for its header. The data payload is optional. A segment can be at most 65,515 bytes long, including the header, so that the entire segment fits into an IP payload. If need be, IP can fragment a TCP segment if requested to do so by an intermediate node.

TCP provides a reliable, connection-oriented service. Connection-oriented means simply that the connection must be set up before the hosts can exchange any information (much like a telephone call). The reliability is provided by a sequence number assigned to each segment. Acknowledgements are used to verify that segments are received, and must be sent and received within a specific period of time. If no acknowledgement is forthcoming, the data is retransmitted. We provide a brief introduction to how this protocol works in the next section.

11.5.4 The TCP Protocol at Work

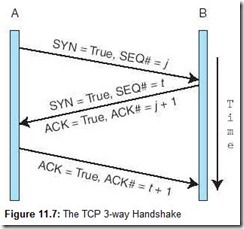

So how does all of this fit together to make a solid, sequenced, error-free connection between two (or more) TCP processes running on separate systems? Successful communication takes place in three phases: one to initiate the connection, a second to exchange the data, and a third to tear down the connection. First, the initiator, which we’ll call A, transmits an “open” primitive to a TCP process running on the remote system, B. B is assumed to be listening for an “open” request. This “open” primitive has the form:

If B is ready to accept a TCP connection from the sender, it replies with:

To which A responds:

A and B have now acknowledged each other and synchronized starting sequence numbers. A’s next sequence number will be t + 2; B’s will be j + 2. Protocol exchanges like these are often referred to as three-way handshakes. Most networking literature displays these sorts of exchanges schematically, as shown in Figure 11.7.
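The three messages of the handshake can be modeled as plain data. The sketch below follows the usual RFC 793 convention, in which each side acknowledges the other's initial sequence number plus one; the `Segment` class and `three_way_handshake` function are hypothetical names, and the model covers only the three opening messages, not the data transfer that follows.

```python
from dataclasses import dataclass
from typing import Optional

SEQ_MODULUS = 2 ** 32  # sequence numbers are 32-bit values and wrap around


@dataclass(frozen=True)
class Segment:
    flags: frozenset
    seq: int
    ack: Optional[int] = None


def three_way_handshake(t: int, j: int) -> list:
    """Return the SYN, SYN+ACK, and ACK segments for initial sequence numbers t and j."""
    # A opens the connection with its initial sequence number t.
    syn = Segment(frozenset({"SYN"}), seq=t)
    # B answers with its own initial sequence number j and acknowledges t.
    syn_ack = Segment(frozenset({"SYN", "ACK"}), seq=j,
                      ack=(syn.seq + 1) % SEQ_MODULUS)
    # A acknowledges j, completing the handshake.
    ack = Segment(frozenset({"ACK"}), seq=(syn.seq + 1) % SEQ_MODULUS,
                  ack=(syn_ack.seq + 1) % SEQ_MODULUS)
    return [syn, syn_ack, ack]
```

For example, `three_way_handshake(100, 300)` yields a SYN with sequence number 100, a SYN+ACK with sequence number 300 acknowledging 101, and a final ACK acknowledging 301.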

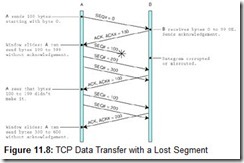

After the connection between A and B is established, they may proceed to negotiate the window size and set other options for their connection. The window tells the sender how much data to send between acknowledgments. For example, suppose A and B negotiate a window size of 500 bytes with a data payload size of 100 bytes, both agreeing not to use selective acknowledgement (discussed below). Figure 11.8 shows how TCP manages the flow of data between the two hosts. Notice what happens when a segment gets lost: The entire window is retransmitted, despite the fact that subsequent segments were delivered without error.
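The whole-window retransmission behavior can be simulated with a short, deliberately simplified model. It assumes that the sender transmits one full window, waits, and resends the entire window if any segment in it was lost; real TCP implementations are considerably more refined. The function name and the `lost` parameter are hypothetical.

```python
def send_with_window(total_bytes: int, window: int = 500, payload: int = 100,
                     lost=frozenset()) -> list:
    """Return the starting sequence number of every segment transmitted, in order.

    `lost` holds the starting sequence numbers of segments that are dropped
    the first time they are sent.
    """
    log = []
    pending_losses = set(lost)
    base = 0  # first byte of the current window
    while base < total_bytes:
        end = min(base + window, total_bytes)
        window_delivered = True
        for seq in range(base, end, payload):
            log.append(seq)
            if seq in pending_losses:
                pending_losses.discard(seq)  # lost once, delivered on the retry
                window_delivered = False
        if window_delivered:
            base = end  # the receiver acknowledges the whole window
        # Otherwise the timeout expires and the same window is sent again.
    return log
```

With the 500-byte window and 100-byte payload from the example, `send_with_window(1000, lost={200})` transmits 15 segments instead of 10: the first window (bytes 0 through 499) is sent twice because one of its five segments was lost.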

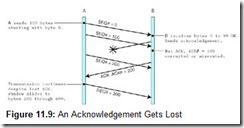

If an acknowledgment gets lost, however, a subsequent acknowledgement can prevent retransmission of the one that got lost, as shown in Figure 11.9. Of course, the acknowledgement must be sent in time to prevent a “timeout” retransmission.

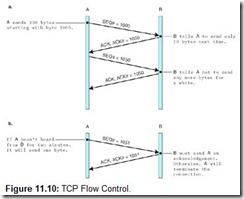

Using acknowledgement numbers, the receiver can also ask the sender to slow down or halt transmission. It is necessary to do so when the receiver’s buffer gets too full. Figure 11.10a illustrates how this is done. Figure 11.10b shows how B keeps the connection alive while it cannot receive any more data.

a. B tells A to slow down.

b. B keeps the connection alive while unable to receive more data.

Upon completion of the data exchange, one or both of the TCP processes gracefully terminates the connection. One side of the connection, say A, may indicate to the other side, B, that it is finished by sending a segment with its FIN flag set to true. This effectively closes down the connection from A to B. B, however, could continue its side of the conversation until it no longer has data to send. Once B is finished, it also transmits a segment with the FIN flag set. If A acknowledges B’s FIN, the connection is terminated on both ends. If B receives no acknowledgement for the duration of its timeout interval, it automatically terminates the connection.

As opposed to having hard and fast rules, TCP allows the sender and receiver to negotiate a timeout period. The timeout should be set to a greater value if the connection is slower than when it is faster. The sender and receiver can also agree to use selective acknowledgement. When selective acknowledgement (SACK) is enabled, the receiver must acknowledge each segment. In other words, no sliding window is used. SACK can save some bandwidth when an error occurs, because only the segment that has not been acknowledged (instead of the entire window) will be retransmitted. But if the exchange is error-free, bandwidth is wasted by sending acknowledgement segments. For this reason, SACK is chosen only when there is little TCP buffer space on the receiver. The larger the receiver’s buffer, the more “wiggle room” it has for receiving segments out of sequence. TCP does whatever it can to provide the applications running above it with an error-free, sequenced stream of data.

11.5.5 IP Version 6

By 1994, it appeared that IP’s Class B address problem was a crisis in the making, having the potential to bring the explosive growth of the Internet to an abrupt halt. Spurred by this sense of approaching doom, the IETF began concerted work on a successor to IPv4, now called IPv6. IETF participants released a number of experimental protocols that, over time, became known as IPv5. The corrected and enhanced versions of these protocols became known as IPv6. Experts predict that IPv6 won’t be widely implemented until late in the first decade of the twenty-first century. (Every day more Internet applications are being modified to work with IPv6.) In fact, some opponents argue that IPv6 will “never” be completely deployed because so much costly hardware will need to be replaced and because workarounds have been found for the most vexing problems inherent in IPv4. But, contrary to what its detractors would have you believe, IPv6 is much more than a patch for the Class B address shortage problem. It fixes many things that most people don’t realize are broken, as we will explain.

The IETF’s primary motivation in designing a successor to IPv4 was, of course, to extend IP’s address space beyond its current 32-bit limit to 128 bits for both the source and destination host addresses. This is an incredibly large address space, giving 2^128 possible host addresses. In concrete terms, if each of these addresses were assigned to a network card weighing 28 grams (1 oz), 2^128 network cards would have a mass roughly 1.6 trillion times that of the entire Earth! So it would seem that the supply of IPv6 addresses is inexhaustible.
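The arithmetic behind this comparison is easy to check. The short sketch below assumes a card mass of one ounce (about 28.35 grams) and an approximate Earth mass of 5.972 × 10^24 kg; both constants are assumptions supplied for the calculation, not values taken from the text.

```python
ADDRESS_BITS = 128
CARD_MASS_KG = 0.02835       # one ounce, roughly 28 grams (assumed)
EARTH_MASS_KG = 5.972e24     # approximate mass of Earth (assumed)

addresses = 2 ** ADDRESS_BITS                 # size of the IPv6 address space
total_mass_kg = addresses * CARD_MASS_KG      # one network card per address
ratio = total_mass_kg / EARTH_MASS_KG         # expressed in Earth masses

print(f"{addresses:,} possible addresses")
print(f"about {ratio:.2e} times the mass of Earth")
```

Under these assumptions the ratio comes out on the order of 10^12.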

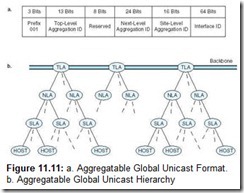

The down side of having such a large address space is that address management becomes critical. If addresses are assigned haphazardly with no organization in mind, effective packet routing would become impossible. Every router on the Internet would eventually require the storage and speed of a supercomputer to deal with the ensuing routing table explosion. To head off this problem, the IETF came up with a hierarchical address organization that it calls the Aggregatable Global Unicast Address Format shown in Figure 11.11a. The first 3 bits of the IPv6 address constitute a flag indicating that the address is a Global Unicast Address. The next 13 bits form the Top-Level Aggregation Identifier (TLA ID), which is followed by 8 reserved bits that allow either the TLA ID or the 24-bit Next-Level Aggregation Identifier (NLA ID) to expand, if needed. A TLA entity may be a country or perhaps a major global telecommunications carrier. An NLA entity could be a large corporation, a government, an academic institution, an ISP, or a small telecommunications carrier. The 16 bits following the NLA ID are the Site-Level Aggregation Identifier (SLA ID). NLA entities can use this field to create their own hierarchy, allowing each NLA entity to have 65,536 subnetworks, each of which can have 2^64 hosts. This hierarchy is shown graphically in Figure 11.11b.

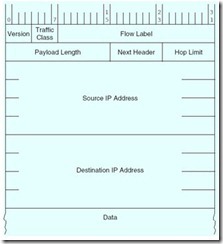
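To make the field boundaries concrete, the following sketch splits a 128-bit address into the fields just described. The field names and the helper `split_fields` are hypothetical; the widths (3, 13, 8, 24, 16, and 64 bits) come from the text, with the final 64 bits taken to be the host portion.

```python
import ipaddress

# Field widths in bits, most significant first, as described in the text.
FIELDS = [("format_prefix", 3), ("tla_id", 13), ("reserved", 8),
          ("nla_id", 24), ("sla_id", 16), ("interface_id", 64)]


def split_fields(address):
    """Return a dict mapping each field name to its integer value."""
    value = int(ipaddress.IPv6Address(address))
    remaining = 128
    result = {}
    for name, width in FIELDS:
        remaining -= width                           # bits still to the right
        result[name] = (value >> remaining) & ((1 << width) - 1)
    return result


fields = split_fields("30FA:505A:B210:224C:1114:0327:0904:0225")
for name, value in fields.items():
    print(f"{name:14s} {value:#x}")
```

Because the field widths sum to 128, every bit of the address lands in exactly one field.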

The IP Version 6 Header

The obvious problem with IPv4, of course, is its 32-bit address fields. IPv6 corrects this shortcoming by expanding the address fields to 128 bits. In order to keep the IPv6 header as small as possible (which speeds routing), many of the rarely used IPv4 header fields are not included in the main header of IPv6. If these fields are needed, a Next Header pointer has been provided. With the Next Header field, IPv6 could conceivably support a large number of header fields. Thus, future enhancements to IP would be much less disruptive than the switch from Version 4 to Version 6 portends to be. The IPv6 header fields are explained below.

- Traffic Class: IPv6 will eventually be able to tell the difference between real-time transmissions (e.g., voice and video) and less time-sensitive data transport traffic. This field will be used to distinguish between these two traffic types.

- Flow Label: This is another field for which specifications are still in progress. A “flow” is a conversation, either broadcast to all nodes or initiated between two particular nodes. The Flow Label field identifies a particular flow stream, and intermediate routers will route the packets in a manner consistent with the code in the flow field.

- Payload Length: Indicates the length of the payload in bytes, which includes the size of additional headers.

- Next Header: Indicates the type of header, if any, that follows the main header. If an IPv6 protocol exchange requires more protocol information than can be carried in a single header, the Next Header field provides for an extension header. These extension headers are placed in the payload of the packet. If there is no IP extension header, then this field will contain the value for “TCP,” meaning that the first header data in the payload belongs to TCP, not IP. In general, only the destination node will examine the contents of the extension headers. Intermediate nodes pass them on as if they were common payload data.

- Hop Limit: With 8 bits, this field is the same size as the Time to Live field in Version 4, allowing up to 255 hops. As in Version 4, this field is decremented by each intermediate router. If it ever becomes zero, the packet is discarded and the sender is notified through an ICMP (for IPv6) message.

- Source and Destination Addresses: Much larger, but with the same meaning as in Version 4. See text for a discussion of the format for this address.
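The fields above can be assembled into the fixed 40-byte IPv6 header with Python's `struct` module. This is a minimal sketch rather than a full implementation: the function name `build_ipv6_header` and its default values are assumptions, a 4-bit Version field (value 6) is assumed to lead the header, and Hop Limit occupies a single byte in this layout. The Next Header value 6 is the protocol number for TCP.

```python
import ipaddress
import struct


def build_ipv6_header(src, dst, payload_length, next_header=6,
                      hop_limit=64, traffic_class=0, flow_label=0):
    """Pack the fixed IPv6 header fields into 40 bytes (network byte order)."""
    if not 0 <= traffic_class < 2 ** 8:
        raise ValueError("traffic class must fit in 8 bits")
    if not 0 <= flow_label < 2 ** 20:
        raise ValueError("flow label must fit in 20 bits")
    version = 6
    # Version (4 bits), Traffic Class (8 bits), Flow Label (20 bits)
    first_word = (version << 28) | (traffic_class << 20) | flow_label
    return struct.pack(
        "!IHBB16s16s",
        first_word,
        payload_length,                        # 16 bits
        next_header,                           # 8 bits
        hop_limit,                             # 8 bits
        ipaddress.IPv6Address(src).packed,     # 128-bit source address
        ipaddress.IPv6Address(dst).packed,     # 128-bit destination address
    )


header = build_ipv6_header("::1", "::2", payload_length=20)
print(len(header), "bytes:", header.hex())
```

Of the 40 bytes, 32 are the two addresses, which shows how few fields remain in the main header.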


At first glance, the notion of making allowances for 2^64 hosts on each subnet seems as wasteful of address space as the IPv4 network class system. However, such a large field is necessary to support stateless address autoconfiguration, a new feature in IPv6. In stateless address autoconfiguration, a host uses the 48-bit address burned into its network interface card (its MAC address, explained in Section 11.6.2), along with the network address information that it retrieves from a nearby router to form its entire IP address. If no problems occur during this process, each host on the network configures its own address information with no intervention by the network administrator. This feature will be a blessing to network administrators if an entity changes its ISP or telecommunications carrier. Network administrators will have to change only the IP addresses of their routers. Stateless address autoconfiguration will automatically update the TLA or SLA fields in every node on the network.
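The text does not say how a 48-bit MAC address fills a 64-bit host field. The sketch below assumes the modified EUI-64 method, in which the bytes FF FE are inserted in the middle of the MAC address and the universal/local bit of the first byte is inverted. The function name `slaac_address`, the example prefix, and the example MAC address are all hypothetical.

```python
import ipaddress


def slaac_address(prefix, mac):
    """Combine a 64-bit network prefix with an interface ID built from a MAC."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("this sketch expects a 64-bit prefix")
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("a MAC address is 6 bytes long")
    octets[0] ^= 0x02                                  # invert the universal/local bit
    interface_id = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    host_bits = int.from_bytes(interface_id, "big")
    return ipaddress.IPv6Address(int(network.network_address) | host_bits)


print(slaac_address("2001:db8:1:2::/64", "00:1a:2b:3c:4d:5e"))
```

If the router later advertises a different prefix, only the first argument changes; the host portion is derived from the card and stays the same.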

The written syntax of IPv6 addresses also differs from that of IPv4 addresses. Recall that IPv4 addresses are expressed using a dotted decimal notation, as in 146.186.157.6. IPv6 addresses are instead given in hexadecimal, separated by colons, as follows:

30FA:505A:B210:224C:1114:0327:0904:0225

making it much easier to recognize the binary equivalent of an IP address.

IPv6 addresses can be abbreviated, omitting zeroes where possible. If a 16-bit group is 0000, it can be written as 0, or omitted altogether. If more than two consecutive colons result from this omission, they can be reduced to two colons (provided there is only one group of more than two consecutive colons). For example, the IPv6 address:

30FA:0000:0000:0000:0000:0010:0002:0300

can be written:

30FA:0:0:0:0:10:2:300

or even

30FA::10:2:300

However, an address such as 30FA::24D6::12CB is invalid.
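These abbreviation rules can be checked with Python's standard `ipaddress` module. The sketch below uses an eight-group address that abbreviates to 30FA::10:2:300; the helper name `is_valid_ipv6` is hypothetical. Note that the module prints hexadecimal digits in lowercase.

```python
import ipaddress

full = ipaddress.IPv6Address("30FA:0000:0000:0000:0000:0010:0002:0300")
print(full.exploded)      # all eight groups, four hex digits each
print(full.compressed)    # shortest legal form, using "::" once


def is_valid_ipv6(text):
    """Return True if `text` parses as an IPv6 address."""
    try:
        ipaddress.IPv6Address(text)
        return True
    except ValueError:
        return False


print(is_valid_ipv6("30FA::10:2:300"))       # a single "::" is allowed
print(is_valid_ipv6("30FA::24D6::12CB"))     # two "::" groups are ambiguous
```

The second address is rejected because, with two double colons, there is no way to tell how many zero groups each one stands for.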

The IETF is also proposing two other routing improvements: implementation of multicasting (where one message is placed on the network and read by multiple nodes) and anycasting (where any one of a logical group of nodes can be the recipient of a message, but no particular receiver is specified by the packet). These features, along with stateless address autoconfiguration, facilitate support for mobile devices, an increasingly important sector of Internet users, particularly in countries where most telecommunications take place over wireless networks.

As previously mentioned, security is another major area in which IPv6 differs from IPv4. All of IPv4’s security features (IPSec) are “optional,” meaning that no one is forced to implement any type of security, and most installations don’t. In IPv6, IPSec is mandatory. Among the security improvements in IPv6 is a mechanism that prevents address spoofing, where a host can engage in communications with another host using a falsified IP address. (IP spoofing is often used to subvert filtering routers and firewalls intended to keep outsiders from accessing private intranets, among other things.) IPSec also supports encryption and other measures that make it more difficult for miscreants to sniff out unauthorized information.

Perhaps the best feature of IPv6 is that it provides a transition plan that allows networks to gradually move to the new format. Support for IPv4 is built into IPv6. Devices that use both protocols are called dual stack devices, because they support protocol stacks for both IPv4 and IPv6. Most routers on the market today are dual stack devices, with the expectation that IPv6 will become a reality in the not too distant future.

The benefits of IPv6 over IPv4 are clear: a greater address space, better and built-in quality of service, and better and more efficient routing. It is not a question of if but of when we will move to IPv6. The transition will be driven by the business need for IPv6 and development of the necessary applications. Although hardware replacement cost is a significant barrier, technician training and replacement of minor IP devices (such as network fax machines and printers) will also contribute to the overall cost of conversion. With the advent of IP-ready automobiles, as well as many other Internet devices, IPv4 no longer meets the needs of many current applications.

11.6 Network Organization

Computer networks are often classified according to their geographic service areas. The smallest networks are local area networks (LANs). Although they can encompass thousands of nodes, LANs typically are used in a single building, or a group of buildings that are near each other. When a LAN covers more than one building, it is sometimes called a campus network. Usually, the region (the property) covered by a LAN is under the same ownership (or control) as the LAN itself. Metropolitan area networks (MANs) are networks that cover a city and its environs. They often span areas that are not under the ownership of the people who also own the network. Wide area networks (WANs) can cover multiple cities, or span the entire world.

At one time, the protocols employed by LANs, MANs, and WANs differed vastly from one another. MANs and WANs were usually designed for high-speed throughput because they served as backbone systems for multiple slower LANs, or they offered access to large host computers in data centers far away from end users. As network technologies have evolved, however, these networks are now distinguished from each other not so much by their speed or by their protocols, but by their ownership. One person’s campus LAN might be another person’s MAN. In fact, as LANs are becoming faster and more easily integrated with WAN technology, it is conceivable that eventually the concept of a MAN may disappear entirely.

This section discusses the physical network components common to LANs, MANs, and WANs. We start at the lowest level of network organization, the physical medium level, Layer 1.

11.6.1 Physical Transmission Media

Virtually any medium with the ability to carry a signal can support data communication. There are two general types of communications media: Guided transmission media and unguided transmission media. Unguided media broadcast data over the airwaves using infrared, microwave, satellite, or broadcast radio carrier signals. Guided media are physical connectors such as copper wire or fiber optic cable that directly connect to each network node.

The physical and electrical properties of guided media determine their ability to accurately convey signals of given frequencies over various distances. In Chapter 7, we mentioned that signals attenuate (get weaker) over long distances. The longer the distance and the higher the signal frequency, the greater the attenuation. Attenuation in copper wire results from the interactions of several electrical phenomena. Chief among these are the internal resistance of copper conductors and the electrical interference (inductance and capacitance) that occurs when signal-carrying wires are in close proximity to each other. External electrical fields such as those surrounding fluorescent lights and electric motors can also attenuate, or even garble, signals as they are transmitted over copper wire. Collectively, the electrical phenomena that work against the accurate transmission of signals are called noise. Signal and noise strengths are both measured in decibels (dB). Cables are rated according to how well they convey signals at different frequencies in the presence of noise. The resulting quantity is the signal-to-noise rating for the communications channel, and it is also measured in decibels:

$$\text{SNR}_{\text{dB}} = 10 \log_{10}\left(\frac{P_{\text{signal}}}{P_{\text{noise}}}\right)$$

where $P_{\text{signal}}$ and $P_{\text{noise}}$ are the signal and noise powers.

The bandwidth of a medium is technically the range of frequencies that it can carry, measured in hertz. The wider the medium’s bandwidth, the more information it can carry. In digital communications, bandwidth is the general term for the information-carrying capacity of a medium, measured in bits per second (bps). Another important measure is bit error rate (BER), which is the ratio of the number of bits received in error to the total number of bits received. If signal frequencies exceed the signal-carrying capacity of the line, the BER may become so extreme that the attached devices will spend more of their time doing error recovery than in doing useful work.
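Both measures reduce to one-line calculations. The sketch below assumes the usual definition of a signal-to-noise ratio in decibels (ten times the base-10 logarithm of the ratio of signal power to noise power) and uses the definition of bit error rate given above. The function names and sample figures are hypothetical.

```python
import math


def snr_db(signal_power, noise_power):
    """Signal-to-noise ratio in decibels, from two powers in the same unit."""
    return 10 * math.log10(signal_power / noise_power)


def bit_error_rate(bits_in_error, bits_received):
    """Ratio of bits received in error to total bits received."""
    return bits_in_error / bits_received


# A signal 1,000 times stronger than the noise, and 3 bad bits per million.
print(f"SNR = {snr_db(1000, 1):.1f} dB")
print(f"BER = {bit_error_rate(3, 1_000_000):.1e}")
```

Because the decibel scale is logarithmic, each tenfold increase in the power ratio adds 10 dB.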

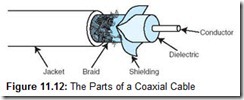

Coaxial Cable

Coaxial cable was once the medium of choice for data communications. It can carry signals up to trillions of cycles per second with low attenuation. Today, it is used mostly for broadcast and closed circuit television applications. Coaxial cable also carries signals for residential Internet services that piggyback on cable television lines.

The heart of a coaxial cable is a thick (12 to 16 gauge) inner conductor surrounded by an insulating layer called a dielectric. The dielectric is surrounded by a foil shield to protect it from transient electromagnetic fields. The foil shield is itself wrapped in a steel or copper braid to provide an electrical ground for the cable. The entire cable is then encased in a durable plastic coating (see Figure 11.12).

The coaxial cable employed by cable television services is called broadband cable because it has a capacity of at least 2Mbit/second. Broadband communication provides multiple channels of data, using a form of multiplexing. Computer networks often use narrowband cable, which is optimized for a typical bandwidth of 64kbit/second, consisting of a single channel.

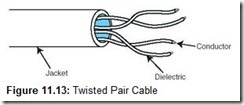

Twisted Pair

The easiest way to connect two computers is simply to run a pair of copper wires between them. One of the wires is used for sending data, the other for receiving. Of course, the further apart the two systems are from each other, the stronger the signal has to be to prevent it from attenuating into oblivion over long distances. The distance between the two systems also affects the speed at which data can be transferred. The further apart they are, the slower the line speed must be to avoid excessive errors. Using thicker conductors (smaller wire gauge numbers) can reduce attenuation. Of course, thick wire is more costly than thin wire.

In addition to attenuation, cable makers are also challenged by an electrical phenomenon known as inductance. When two wires lie perfectly flat and adjacent to each other, strong high-frequency signals in the wires create magnetic (inductive) fields around the copper conductors, which interfere with the signals in both lines.

The easiest way to reduce the electrical inductance between conductors is to twist them together. To a point, the more twists that are introduced in a pair of wires per linear foot, the less attenuation that is caused by the wires interfering with each other. Twisted wire is more costly to manufacture than untwisted wire because more wire is consumed per linear foot and the twisting must be carefully controlled. Twisted pair cabling, with two twisted wire pairs, is used in most local area network installations today (see Figure 11.13). It comes in two varieties: shielded and unshielded. Unshielded twisted pair is the most popular.

Shielded twisted pair cable is suitable for environments having a great deal of electrical interference. Today’s business environments are teeming with sources of electromagnetic radiation that can interfere with network signals. These sources can be as seemingly benign as fluorescent lights or as obviously hostile as large, humming power transformers. Any device that produces a magnetic field has the potential for scrambling network communication links. Interference can limit the speed of a network because higher signal frequencies are more sensitive to any kind of signal distortion. As a safeguard against environmental interference (called electromagnetic interference [EMI] or radio-frequency interference [RFI]), shielded twisted pair wire can be installed to help maintain the integrity of network communications in hostile environments.

Experts disagree as to whether this shielding is worth the higher material and installation costs. They point out that if the shielding is not properly grounded, it can actually cause more problems than it solves. Specifically, it can act as an antenna that actually attracts radio signals to the conductors!

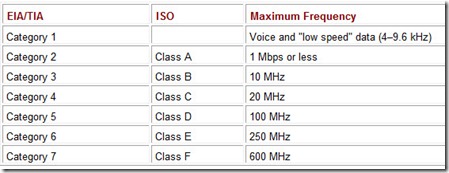

Whether shielded or unshielded, network conductors must have signal-carrying capacity appropriate to the network technology being used. The Electronic Industries Alliance (EIA), along with the Telecommunications Industry Association (TIA), established a rating system for network cabling in 1991. The latest revision of this rating system is EIA/TIA-568B. The EIA/TIA category ratings specify the maximum frequency that the cable can support without excessive attenuation. The ISO rating system, which is not as often used as the EIA/TIA category system, refers to these wire grades as classes. These ratings are shown in Table 11.1. Most local area networks installed today are equipped with Category 5 or better cabling. Many installations are abandoning copper entirely and installing fiber optic cable instead (see the next section).

Table 11.1: EIA/TIA-568B and ISO Cable Specifications

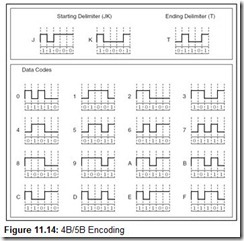

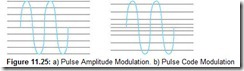

Note that the signal-carrying capacity of the cable grades shown in Table 11.1 is given in terms of megahertz. This is not the same as megabits. As we saw in Section 2.7, the number of bits carried at any given frequency is a function of the encoding method used in the network. Networks running below 100Mbps could easily afford to use Manchester coding, which requires two signal transitions for every bit transmitted. Networks running at 100Mbps and above use different encoding schemes, one of the most popular being the 4B/5B, 4 bits in 5 baud using NRZI signaling, as shown in Figure 11.14.

Baud is the unit of measure for the number of signal transitions supported by a transmission medium or transmission method over a medium. For networks other than the voice telephone network, the line speed is rated in hertz, but hertz and baud are equivalent with regard to digital signals. As you can see by Figure 11.14, if a network uses 4B/5B encoding, a signal-carrying capacity of 125MHz is required for the line to have a bit rate of 100Mbps.
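The relationship between bit rate and signaling rate can be seen by encoding a few bytes. The sketch below uses the standard 4B/5B data code table followed by NRZI signaling, in which a 1 bit toggles the line level and a 0 bit leaves it unchanged. The function names, the high-nibble-first ordering, and the initial line level of 0 are assumptions made for illustration.

```python
# Standard 4B/5B data symbols: each 4-bit value maps to a 5-bit code.
FOUR_B_FIVE_B = {
    0x0: "11110", 0x1: "01001", 0x2: "10100", 0x3: "10101",
    0x4: "01010", 0x5: "01011", 0x6: "01110", 0x7: "01111",
    0x8: "10010", 0x9: "10011", 0xA: "10110", 0xB: "10111",
    0xC: "11010", 0xD: "11011", 0xE: "11100", 0xF: "11101",
}


def encode_4b5b(data):
    """Return the 5-bit code groups for each nibble of `data` as a bit string."""
    groups = []
    for byte in data:
        groups.append(FOUR_B_FIVE_B[byte >> 4])      # high nibble first (assumed)
        groups.append(FOUR_B_FIVE_B[byte & 0x0F])
    return "".join(groups)


def nrzi(code_bits, level=0):
    """Convert code bits to line levels: a 1 toggles the level, a 0 holds it."""
    levels = []
    for bit in code_bits:
        if bit == "1":
            level ^= 1
        levels.append(level)
    return levels


def required_baud(bit_rate):
    """Signaling rate needed when every 4 data bits become 5 code bits."""
    return bit_rate * 5 / 4


code = encode_4b5b(b"\x0f")
print(code, nrzi(code))
print(required_baud(100e6) / 1e6, "MHz for 100 Mbps")
```

Every code group contains at least two 1 bits, so the NRZI signal changes level often enough for the receiver to stay synchronized even when the data is all zeros.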

Fiber Optic Cable

Optical fiber network media can carry signals faster and farther than either twisted pair or coaxial cable. Fiber optic cable is theoretically able to support frequencies in the terahertz range, but transmission speeds are more commonly in the range of about 2 gigahertz, carried over runs of 10 to 100 km (without repeaters). Optical cable consists of bundles of thin (1.5 to 125µm) glass or plastic strands surrounded by a protective plastic sheath. Although the underlying physics is quite different, you can think of a fiber optic strand as a conductor of light just as copper is a conductor of electricity. The cable is a type of “light guide” that routes the light from one end of the cable to the other. At the sending end, a light emitting diode or laser diode emits pulses of light that travel through the glass strand, much as water goes through a pipe. On the receiving end, photodetectors convert the light pulses into electrical signals for processing by electronic devices.

Optical fiber supports three different transmission modes depending on the type of fiber used. The types of fiber are shown in Figure 11.15. The narrowest fiber, single-mode fiber, conveys light at only one wavelength, typically 850, 1300, or 1500 nanometers. It allows the fastest data rates over the longest distances.

Multimode fiber can carry several different light wavelengths simultaneously through a larger fiber core. In multimode fiber, the laser light waves bounce off of the sides of the fiber core, causing greater attenuation than single-mode fiber. Not only do the light waves scatter, but they also collide with one another to some degree, causing further attenuation.

Multimode graded index fiber also supports multiple wavelengths concurrently, but it does so in a more controlled manner than regular multimode fiber. Multimode graded index fiber consists of concentric layers of plastic or glass, each with refractive properties that are optimized for carrying specific light wavelengths. Like regular multimode fiber, light travels in waves through multimode graded index optical fiber. But unlike multimode fiber, the waves are confined to the area of the optical fiber that is suited to propagating their particular wavelength. Thus, the different wavelengths concurrently transmitted through the fiber do not interfere with each other.

The fiber optic medium offers many advantages over copper, the most obvious being its enormous signal-carrying capacity. It is also immune to EMI and RFI, making it ideal for deployment in industrial facilities. Fiber optic is small and lightweight, one fiber being capable of replacing hundreds of pairs of copper wires.

But optical cable is fragile and costly to purchase and install. Because of this, fiber is most often used as network backbone cable, which bears the traffic of hundreds or thousands of users. Backbone cable is like an interstate highway. Access to it is limited to specific entrance and exit points, but a large volume of traffic is carried at high speed. For a vehicle to get to its final destination, it has to exit the highway and perhaps drive through a residential street. The network equivalent of a residential street most often takes the form of twisted pair copper wire. This “residential street” copper wire is sometimes called horizontal cable, to differentiate it from backbone (vertical) cable. Undoubtedly, “fiber to the desktop” will eventually become a reality as costs decrease. At the same time, demand is steadily increasing for the integration of data, voice, and video over the same cable. With the deployment of these new technologies, network media probably will be stretched to their limits before the next generation of high-speed cabling is introduced.

11.6.2 Interface Cards

Transmission media are connected to clients, hosts, and other network devices through network interfaces. Because these interfaces are often implemented on removable circuit boards, they are commonly called network interface cards, or simply NICs. (Please don’t say “NIC card”!) A NIC usually embodies the lowest three layers of the OSI protocol stack. It forms the bridge between the physical components of the network and your system. NICs attach directly to a system’s main bus or dedicated I/O bus. They convert the parallel data passed on the system bus to the serial signals broadcast on a communications medium. NICs change the encoding of the data from binary to the Manchester or 4B/5B of the network (and vice versa). NICs also provide physical connections and negotiate permission to place signals on the network medium.

Every network card has a unique physical address burned into its circuits. This is called a Media Access Control (MAC) address, and it is 6 bytes long. The first 3 bytes are the manufacturer’s identification number, which is designated by the IEEE. The last 3 bytes are a unique identifier assigned to the NIC by the manufacturer. No two cards anywhere in the world should ever have the same MAC address. Network protocol layers map this physical MAC address to at least one logical address. The logical address is the name or address by which the node is known to other nodes on the network. It is possible for one computer (logical address) to have two or more NICs, but each NIC will have a distinct MAC address.
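The two halves of a MAC address are easy to separate in code. The sketch below splits an address into the 3-byte manufacturer identifier and the 3-byte identifier assigned by the manufacturer; the function name `parse_mac` and the sample address are hypothetical.

```python
def parse_mac(mac):
    """Split a MAC address into (manufacturer_id, device_id) hex strings."""
    raw = bytes(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(raw) != 6:
        raise ValueError("a MAC address is exactly 6 bytes long")
    return raw[:3].hex(), raw[3:].hex()


manufacturer, device = parse_mac("00:1A:2B:3C:4D:5E")
print("manufacturer ID:", manufacturer)
print("device ID:      ", device)
```

With 3 bytes for each half, a manufacturer can number 2^24 (about 16.7 million) cards under a single identifier.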

11.6.3 Repeaters

A small office LAN installation will have many NICs within a few feet of each other. In an office complex, however, NICs may be separated by hundreds of feet of cable. The longer the cable, the greater the signal attenuation. The effects of attenuation can be mitigated either by reducing transmission speed (usually an unacceptable option) or by adding repeaters to the network. Repeaters counteract attenuation by amplifying signals as they are passed through the physical cabling of the network. The number of repeaters required for any network depends on the distance over which the signal is transmitted, the medium used, and the signaling speed of the line. For example, high-frequency copper wire needs more repeaters per kilometer than optical cable operating at a comparable frequency.

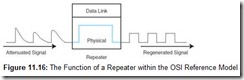

Repeaters are part of the network medium. In theory, they are dumb devices functioning entirely without human intervention. As such, they would contain no network-addressable components. However, some repeaters now offer higher-level services to assist with network management and troubleshooting. Figure 11.16 is a representation of how a repeater regenerates an attenuated digital signal.

11.6.4 Hubs

Repeaters are Physical layer devices having one input and one output port. Hubs are also Physical layer devices, but they can have many ports for input and output. They receive incoming packets from one or more locations and broadcast the packets to one or more devices on the network. Hubs allow computers to be joined to form network segments. The simplest hubs are nothing more than repeaters that connect various branches of a network. Hubs that join physical network branches do not partition the network in any way; they are strictly Layer 1 devices and are not aware of a packet’s source or its destination. Every station on the network continues to compete for bandwidth with every other station on the network, regardless of the presence or absence of intervening hubs. Because hubs are Layer 1 devices, the physical medium must be the same on all ports of the hub. You can think of simple hubs as being nothing more than repeaters that provide multiple station access to the physical network. Figure 11.17 shows a network equipped with three hubs.

As hub architectures have evolved, many now have the ability to connect dissimilar physical media. Although such media interconnection is a Layer 2 function, manufacturers continue to call these devices “hubs.” Switching hubs and intelligent hubs are still further removed from the notion of a hub being a “Layer 1 device.” These sophisticated components not only connect dissimilar media, but also perform rudimentary routing and protocol conversion, which are all Layer 3 functions.

11.6.5 Switches

A switch is a Layer 2 device that creates a point-to-point connection between one of its input ports and one of its output ports. Although hubs and switches perform the same function, they differ in how they handle the data internally. Hubs broadcast the packets to all computers on the network and handle only one packet at a time. Switches, on the other hand, can handle multiple communications between the computers attached to them. If there were only two computers on the network, a hub and a switch would behave in exactly the same way. If more than two computers were trying to communicate on a network, a switch gives better performance because the full bandwidth of the network is available at both sides of the switch. Therefore, switches are preferred to hubs in most network installations. In Chapter 9 we introduced switches that connect processors to memories or processors to processors. Those switches are the same kind of switches we discuss here. Switches contain some number of buffered input ports, an equal number of output ports, a switching fabric (a combination of the switching units, the integrated circuits that they contain, and the programming that allows switching paths to be controlled) and digital hardware that interprets address information encoded on network frames as they arrive in the input buffers.

As with most of the network components we have been discussing, switches have been improved by adding addressability and management features. Most switches today can report on the amount and type of traffic that they are handling and can even filter out certain network packets based on user-supplied parameters. Because all switching functions are carried out in hardware, switches are the preferred devices for interconnecting high-performance network components.

11.6.6 Bridges and Gateways

The purpose of both bridges and gateways is to provide a link between two dissimilar network segments. Both can support different media (and network speeds), and they are both “store and forward” devices, holding an entire frame before sending it on. But that’s where their similarities end.

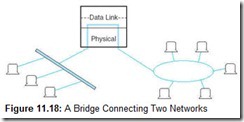

Bridges join two similar types of networks so they look like one network. With bridges, all computers on the network belong to the same subnet (the network consisting of all devices whose IP addresses have the same prefix). Bridges are relatively simple devices with functionality primarily at Layer 2. This means that they know nothing about protocols, but simply forward data depending on the destination address. Bridges can connect different media having different media access control protocols, but the protocol from the MAC layer through all higher layers in the OSI stack must be identical in both segments. This relationship is shown in Figure 11.18.

Each node connected to any particular bridge must have a unique address. (The MAC address is most often used.) The network administrator must program simple bridges with the addresses and segment numbers of each valid node on the network. The only data that is allowed to cross the bridge is data that is being sent to a valid address on the other side of the bridge. For large networks that change frequently (most networks), this continual reprogramming is tedious, time-consuming, and error-prone. Transparent bridges were invented to alleviate this problem. They are sophisticated devices that have the ability to learn the address of every device on each segment. Transparent bridges can also supply management information such as throughput reports. Such functionality implies that a bridge is not entirely a Layer 2 device. However, bridges still require identical Network layer protocols and identical interfaces to those protocols on both interconnected segments.
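The learning behavior of a transparent bridge can be modeled with a simple lookup table. The sketch below is an illustration under stated assumptions, not a description of any particular product: the class name `TransparentBridge`, the method `handle_frame`, and the policy of flooding frames whose destination has not yet been learned are all assumptions.

```python
class TransparentBridge:
    """Toy model of a bridge that learns which segment each address is on."""

    def __init__(self):
        self.table = {}      # MAC address -> segment where it was last seen

    def handle_frame(self, src, dst, arrival_segment, segments):
        """Return the list of segments the frame should be forwarded to."""
        self.table[src] = arrival_segment            # learn the sender's location
        known = self.table.get(dst)
        if known is None:
            # Destination not learned yet: send to every other segment.
            return [s for s in segments if s != arrival_segment]
        if known == arrival_segment:
            return []                                # local traffic stays local
        return [known]                               # forward across the bridge


bridge = TransparentBridge()
segments = ["A", "B"]
print(bridge.handle_frame("m1", "m2", "A", segments))   # m2 unknown: flood
print(bridge.handle_frame("m2", "m1", "B", segments))   # m1 was seen on A
print(bridge.handle_frame("m3", "m1", "A", segments))   # both on A: filtered
```

No administrator programs the table; it fills in as stations transmit, which is the advantage the text attributes to transparent bridges.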

Figure 11.18 shows two different kinds of local area networks connected to each other through a bridge. This is typically how bridges are used. If, however, users on these LANs needed to connect to a system that uses a radically different protocol, for example a public switched telephone network or a host computer that uses a nonstandard proprietary protocol, then a gateway is required. A gateway is a point of entrance to another network. Gateways are full-featured computers that supply communications services spanning all seven OSI layers. Gateway system software converts protocols and character codes, and can provide encryption and decryption services. Because they do so much work in their software, gateways cannot provide the throughput of hardware-based bridges, but they make up for it by providing enormously more functionality. Gateways are often connected directly to switches and routers.

11.6.7 Routers and Routing

After gateways, routers are the next most complicated components in a network. They are, in fact, small special-purpose computers. A router is a device (or a piece of software) connected to at least two networks that determines the destination to which a packet should be forwarded. Routers are normally located at gateways. Operating correctly, routers make the network fast and responsive. Operating incorrectly, one faulty router can bring down the whole system. In this section, we reveal the inner workings of routers, and discuss the thorny problems that routers are called upon to solve.

Despite their complexity, routers are usually referred to as Layer 3 devices, because most of their work is done at the Network layer of the OSI Reference Model. However, most routers also provide some network monitoring, management, and troubleshooting services. Because routers are by definition Layer 3 devices, they can bridge different network media types (fiber to copper, for example) and connect different network protocols running at Layer 3 and below. Owing to their abilities, routers are sometimes referred to as “intermediate systems” or “gateways” in Internet standards literature. (When the first Internet standards were written, the word router hadn’t yet been coined.)

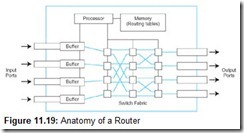

Routers are designed specifically to connect two networks together, typically a LAN to a WAN. They are complex devices because not only do they contain buffers and switching logic, but they also have enough memory and processing power to calculate the best way to send a packet to its destination. A conceptual model of the internals of a router is shown in Figure 11.19.

In large networks, routers find an approximate solution to a problem that is fundamentally NP-complete. An NP-complete problem is one for which no known algorithm finds an optimal solution in time that grows only polynomially with the size of the problem, so for large instances an exact answer cannot be computed quickly enough to be useful.

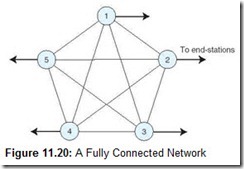

Consider the network shown in Figure 11.20. You may recognize this figure as a complete graph (K5). There are n(n – 1) / 2 edges in a complete graph containing n nodes. In our illustration, we have 5 nodes and 10 edges. The edges represent routes—or hops—between each of the nodes.

If Node 1 (Router 1) needs to send a packet to Node 2, it has the following choices of routes:

one route of one hop:

1 → 2

three routes of two hops:

1 → 3 → 2, 1 → 4 → 2, 1 → 5 → 2

six routes of three hops:

1 → 3 → 4 → 2, 1 → 3 → 5 → 2, 1 → 5 → 4 → 2, 1 → 4 → 3 → 2, 1 → 5 → 3 → 2, 1 → 4 → 5 → 2

six routes of four hops:

1 → 3 → 4 → 5 → 2, 1 → 4 → 3 → 5 → 2, 1 → 5 → 4 → 3 → 2, 1 → 3 → 5 → 4 → 2, 1 → 4 → 5 → 3 → 2, 1 → 5 → 3 → 4 → 2

When Node 1 and Node 2 are not directly connected, the traffic between them must pass through at least one intermediate node. Considering all options, the number of possible routes is on the algorithmic order of N!. This problem is further complicated when costs or weights are applied to the routing paths. Worse yet, the weights can change depending on traffic flow. For example, if the connection between Nodes 1 and 2 were a tariffed high-latency (slow) line, we might be a whole lot better off using the 1 → 4 → 5 → 3 → 2 route. Clearly, in a real-world network, with hundreds of routers, the problem becomes enormous. If each router had to come up with the perfect outbound route for each incoming packet by considering all of the possibilities, the packets would never get where they were going quickly enough to make anyone happy.
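The route counts for the five-node network can be checked with a short enumeration. The sketch below assumes a complete graph (every pair of nodes directly connected) and counts only loop-free routes; the function name `simple_routes` is made up for this illustration.

```python
from itertools import permutations


def simple_routes(nodes, src, dst):
    """List every loop-free route from src to dst in a complete graph."""
    others = [n for n in nodes if n not in (src, dst)]
    routes = []
    for k in range(len(others) + 1):            # k = number of intermediate nodes
        for middle in permutations(others, k):
            routes.append((src, *middle, dst))
    return routes


routes = simple_routes([1, 2, 3, 4, 5], src=1, dst=2)
by_hops = {}
for r in routes:
    hops = len(r) - 1
    by_hops[hops] = by_hops.get(hops, 0) + 1

print(by_hops)        # {1: 1, 2: 3, 3: 6, 4: 6}
print(len(routes))    # 16
```

Raising the node count from 5 to 10 raises the number of routes between one pair of nodes from 16 to 109,601, which shows why exhaustive evaluation does not scale.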

Of course, in a very stable network with only a few nodes, it is possible to program each router so that it always uses the optimal route. This is called static routing, and it is feasible in networks where a large number of users in one location use a centralized host, or gateway, in another location. In the short term, this is an effective way to interconnect systems, but if a problem arises in one of the interconnecting links or routers, users are disconnected from the host. A human being must quickly respond to restore service. Static routing just isn’t a reasonable option for networks that change frequently. That is to say, static routing isn’t an option for most networks. On the other hand, static routes are predictable, because the path (and thus the number of hops) a packet will take is always known and can be controlled. Static routing is also very stable, and it creates no routing protocol exchange traffic.

Dynamic routers automatically set up routes and respond to the changes in the network. These routers can also select an optimal route as well as a backup route should something happen to the route of choice. They do not change routing instructions, but instead allow for dynamic altering of routing tables.

Dynamic routers automatically explore their networks through information exchanges with other routers on the network. The information packets exchanged by the routers reveal their addresses and costs of getting from one point to another. Using this information, each router assembles a table of values in memory. This routing table is, in truth, a reachability list for every node on the network, plus some default values. Typically, each destination node is listed along with the neighboring, or next-hop, router to which it is connected.
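A routing table of this kind can be pictured as a simple lookup structure. The following is only a sketch under assumed names: the destinations, router names, and costs are hypothetical and do not come from any figure in this chapter.

```python
# Hypothetical routing table: destination node -> (next-hop router, cost).
routing_table = {
    "A": ("Router 2", 1),
    "B": ("Router 4", 2),
}
DEFAULT_NEXT_HOP = "Router 1"   # assumed default for destinations not listed


def next_hop(destination, table=routing_table, default=DEFAULT_NEXT_HOP):
    """Return the neighboring router to which a packet should be handed."""
    entry = table.get(destination)
    return entry[0] if entry is not None else default
```

The table records only the neighbor that is next in line, not the full path. Each router along the way repeats the same lookup with its own table.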

When creating their tables, dynamic routers consider one of two metrics. They can use either the distance to travel between two nodes, or the condition of the network in terms of measured latency. The algorithms using the first metric are distance vector routing algorithms. Link state routing algorithms use the second metric.

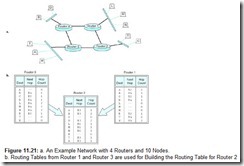

Distance vector routing is derived from a pair of similar algorithms invented in 1957 and 1962 known respectively as the Bellman-Ford and Ford-Fulkerson algorithms. The distance in distance vector routing is usually a measure of the number of nodes (hops) through which a packet must pass before reaching its destination, but any metric can be used. For example, suppose we have the network shown in Figure 11.21a. There are 4 routers and 10 nodes connected as indicated. If node B wants to send a packet to node L, there are two choices: One is B → Router 4 → Router 1 → L, with one hop between Router 4 and Router 1. The other routing choice has three hops between the routers: B → Router 4 → Router 3 → Router 2 → Router 1 → L. With distance vector routing, the objective is to always use the shortest route, so our B → Router 4 → Router 1 → L route is the obvious choice.

In distance vector routing, every router needs to know the identities of each node connected to each router as well as the hop counts between them. To do this efficiently, routers exchange node and hop count information with their adjacent neighbors. For example, using the network shown in Figure 11.21a, Router 1 and Router 3 would have routing tables as shown in Figure 11.21b. These routing tables are then sent to Router 2. As shown in the figure, Router 2 selects the shortest path to any of the nodes considering all of the routes that are reported in the routing tables. The final routing table contains the addresses of nodes directly connected to Router 2 along with a list of destination nodes that are reachable through other routers and a hop count to those nodes. Notice that the hop counts in the final table for Router 2 are increased by 1 to account for the one hop between Router 2 and Router 1, and Router 2 and Router 3. A real routing table would also contain a default router address that would be used for nodes that are not directly connected to the network, such as stations on a remote LAN or Internet destinations, for example.
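The table-merging step described above can be sketched as follows. The function name, the table layout, and the sample destinations ("X", "P", "Q", "R") are assumptions made for illustration; they are not the values shown in Figure 11.21b.

```python
def merge_distance_vectors(own_table, neighbor_tables, link_cost=1):
    """Build a router's table from its own entries and its neighbors' tables.

    own_table:       {destination: (next_hop, hops)} for directly attached nodes
    neighbor_tables: {neighbor_name: {destination: hops}}
    """
    table = dict(own_table)
    for neighbor, advertised in neighbor_tables.items():
        for dest, hops in advertised.items():
            candidate = hops + link_cost          # one extra hop to reach the neighbor
            if dest not in table or candidate < table[dest][1]:
                table[dest] = (neighbor, candidate)
    return table


# Hypothetical tables received by Router 2 from its two neighbors.
router2 = merge_distance_vectors(
    own_table={"X": ("direct", 1)},
    neighbor_tables={
        "Router 1": {"P": 1, "Q": 2},
        "Router 3": {"Q": 1, "R": 1},
    },
)
```

Destination "Q" is advertised by both neighbors. The merge keeps the Router 3 entry because 1 + 1 hops beats 2 + 1.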

Distance vector routing is easy to implement, but it does have a few problems. First, it can take a long time for the routing tables to stabilize (or converge) in a large network. Second, a considerable amount of traffic is placed on the network as the routing tables are updated. Third, obsolete routes can persist in the routing tables, causing misrouted or lost packets. This last problem is called the count-to-infinity problem.

You can understand the count-to-infinity problem by studying the network shown in Figure 11.22a. Notice that there are redundant paths through the network. For example, if Router 3 goes offline, clients can still get to the mainframe and the Internet, but they won’t be able to print anything until Router 3 is again operational.

The paths from all of the routers to the Internet are shown in Figure 11.22b. We call the time that this snapshot was taken time t = 0. As you can see, Router 1 and Router 2 use Router 3 to get to the Internet. Sometime between t = 0 and t = 1, the link between Router 3 and Router 4 goes down (say, someone unplugs the cable that connects these routers). At t = 1, Router 3 discovers this break, but has just received the routing table update from its neighbors, both of which advertise themselves as being able to get to the Internet in three hops. Router 3 then assumes that it can get to the Internet using one of these two routers and updates its table accordingly. It picks Router 1 as its next hop to the Internet, then sends its routing table to Router 1 and Router 2 at t = 2. At t = 3, Router 1 and Router 2 receive Router 3’s updated hop count for getting to the Internet, so they add 1 to Router 3’s value (because they know that Router 3 is one hop away), and subsequently broadcast their tables. This cycle continues until all of the routers end up with a hop count of infinity, meaning that the registers that hold the hop count eventually overflow, crashing the whole network.
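The escalation can be traced with a small simulation. This sketch simplifies the scenario to Router 3 and a single neighbor, and it caps the hop count at an assumed small "infinity" of 16 rather than letting a register overflow; the function name is hypothetical.

```python
INFINITY = 16   # a deliberately small "infinity" (an assumed value for this sketch)


def count_to_infinity(r3_hops=2, infinity=INFINITY):
    """Trace hop counts to a lost destination for Router 3 and one neighbor.

    Before the failure, Router 3 reaches the destination in r3_hops and the
    neighbor reaches it through Router 3 in r3_hops + 1.
    """
    neighbor = r3_hops + 1
    history = []
    while True:
        r3 = min(neighbor + 1, infinity)        # Router 3 trusts the neighbor's stale route
        neighbor = min(r3 + 1, infinity)        # the neighbor routes via Router 3, so adds 1
        history.append((r3, neighbor))
        if r3 >= infinity and neighbor >= infinity:
            return history


trace = count_to_infinity()
```

With these starting values, the pair needs seven rounds of exchanges, climbing from (4, 5) to (16, 16), before both routers finally treat the destination as unreachable.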

Two methods are commonly used to prevent this situation. One is to use a small value for infinity, facilitating early problem detection (before a register overflows), and the other is to somehow prevent short cycles like the one that happened in our example.

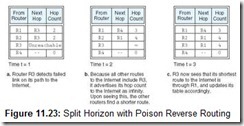

Sophisticated routers use a method called split horizon routing to keep short cycles out of the network. The idea is simple: No router will use a route given by its neighbor that includes itself in the route. (Alternatively, the router could go ahead and use the self-referential route, but set the path value to infinity. This is called split horizon with poison reverse. The route is “poisoned” because it is marked as unreachable.) The routing table exchange for our example path to the Internet using split horizon routing would converge as shown in Figure 11.23. Of course, we still have the problem of larger cycles occurring. Say Router 1 points to Router 2, which points to Router 3, which points to Router 1. To some extent, this problem can be remedied if the routers exchange their tables only when a link needs to be updated. (These are called triggered updates.) Updates done in this manner cause fewer cycles in the routing graph and also reduce traffic on the network.
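Split horizon is commonly realized on the sending side: a router leaves out of its advertisement to a neighbor (or poisons) any route that it learned through that same neighbor, so the neighbor never sees a route that leads back through itself. The sketch below assumes that approach; the function name, table layout, and the value 16 for infinity are illustrative choices.

```python
INFINITY = 16   # assumed small value standing in for "unreachable"


def advertisement(table, to_neighbor, poison_reverse=False, infinity=INFINITY):
    """Build the distance vector a router sends to one neighbor.

    table: {destination: (next_hop, hops)}
    """
    vector = {}
    for dest, (next_hop, hops) in table.items():
        if next_hop == to_neighbor:
            if poison_reverse:
                vector[dest] = infinity     # advertise the route as unreachable
            # plain split horizon: say nothing about this route
            continue
        vector[dest] = hops
    return vector


# Hypothetical table for Router 1, which reaches the Internet through Router 3.
router1 = {"Internet": ("Router 3", 3), "Printer": ("Router 2", 2)}
plain = advertisement(router1, "Router 3")
poisoned = advertisement(router1, "Router 3", poison_reverse=True)
```

In both variants, Router 3 never hears a usable Internet route from Router 1, so the two-router loop from the earlier example cannot form.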

In large internetworks, hop counts can be a misleading metric, particularly when the network includes a variety of equipment and line speeds. For example, suppose a packet has two ways of getting somewhere. One path traverses six routers on a 100Mbps LAN, and the other traverses two routers on a 64Kbps leased line. Although the 100Mbps LAN could provide more than a thousand times the throughput, the hop count metric would force traffic onto the slower leased line. If instead of counting hops we measure the actual line latency, we could prevent such anomalies. This is the idea behind link state routing.
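A rough calculation makes the anomaly concrete. The sketch assumes a 1,500-byte (12,000-bit) packet, treats each router traversed as one hop, and counts only the time needed to clock the packet onto the line at each hop, ignoring propagation and queuing delays. These are simplifying assumptions, not measurements.

```python
def path_transmission_time(hops, bits_per_second, packet_bits=12_000):
    """Seconds spent clocking one packet onto the line at every hop."""
    return hops * packet_bits / bits_per_second


lan = path_transmission_time(hops=6, bits_per_second=100_000_000)   # six hops at 100Mbps
leased = path_transmission_time(hops=2, bits_per_second=64_000)     # two hops at 64Kbps
```

Under these assumptions, the six-hop LAN path costs about 0.72 milliseconds, and the two-hop leased-line path costs 375 milliseconds, roughly 500 times longer despite having one-third as many hops.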

As with distance vector routing, link state routing is a self-managing system. Each router discovers the speed of the lines between itself and its neighboring routers by periodically sending out Hello packets. At the instant it releases the packet, the router starts a timer. Each router that subsequently receives the packet immediately dispatches a reply. Once the initiator gets a reply, it stops its timer and divides the result by 2, giving the one-way time estimate for the link to the router that replied to the packet. Once all of the replies are received, the router assembles the timings into a table of link state values. This table is then broadcast to all other routers, except its adjacent neighbors. Nonadjacent routers then use this information to update all routes that include the sending router. Eventually, all routers within the routing domain end up with identical routing tables. Simply stated, after convergence takes place, a single snapshot of the network exists in the tables of each router. The routers then use this image to calculate the optimal path to every destination in their routing tables.

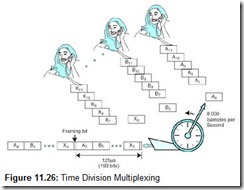

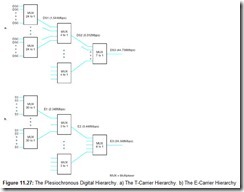

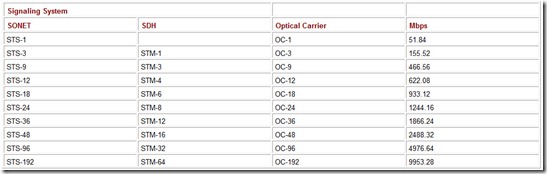

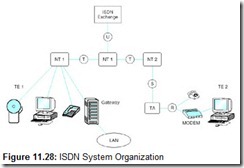

In calculating optimal routes, each router is programmed to think of itself as the root node of a tree with every destination being an internal or leaf node of the tree. Using this conceptualization, the router computes an optimal path to each destination using Dijkstra’s algorithm.[1] Once found, the router stores only the next hop along the path. It doesn’t store the entire path. The next (downstream) router should also have computed the same optimal path (or a better one by the time the packet gets there), so it would use the next link in the optimal path that was computed by its upstream predecessor. After Router 1 in Figure 11.22 has applied Dijkstra’s algorithm, it sees the network as shown in Figure 11.24.
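The computation can be sketched with a priority queue. In this illustration the function name `next_hops`, the router names, and the link costs (which could stand for measured latencies) are all hypothetical; the graph is not the one in Figure 11.22. As the text describes, only the first hop toward each destination is kept, not the entire path.

```python
import heapq


def next_hops(graph, source):
    """Dijkstra's algorithm, keeping only the first hop toward each destination.

    graph: {node: {neighbor: link_cost}}
    Returns {destination: (total_cost, next_hop)}.
    """
    best = {}
    heap = [(0, source, None)]            # (cost so far, node, first hop used)
    while heap:
        cost, node, first = heapq.heappop(heap)
        if node in best:
            continue                      # already settled with a lower cost
        best[node] = (cost, first)
        for neighbor, weight in graph[node].items():
            if neighbor not in best:
                # Leaving the source, the neighbor itself is the first hop.
                hop = neighbor if first is None else first
                heapq.heappush(heap, (cost + weight, neighbor, hop))
    del best[source]
    return best


# Hypothetical link costs between four routers.
graph = {
    "R1": {"R2": 4, "R3": 1},
    "R2": {"R1": 4, "R3": 2, "R4": 5},
    "R3": {"R1": 1, "R2": 2, "R4": 8},
    "R4": {"R2": 5, "R3": 8},
}
table = next_hops(graph, "R1")
```

R1 forwards traffic for R2 to R3, even though R1 and R2 are directly connected, because the two-link path costs 3 and the direct link costs 4. R3 is then expected to reach the same conclusion about its own next hop.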