SEMAPHORES

Semaphores are used to control access to shared resources by processes, and can be named or unnamed. Named semaphores provide access to a resource shared between multiple processes, while unnamed semaphores provide multiple accesses to a resource within a single process or shared between related processes. Some semaphore functions are specific to either named or unnamed semaphores.

A semaphore is an integer variable taking on values from 0 to a predefined maximum, each being associated with a queue for process suspension. The order of process activation from the queue must be fair. Two atomic operations that are indivisible and uninterruptible are defined for a semaphore:

(a) WAIT: decrease the counter by one; if it becomes negative, block the process and enter this process’s ID in the waiting processes queue.

(b) SIGNAL: increase the semaphore by one; if it is still negative, unblock the first process of the waiting processes queue, removing this process’s ID from the queue itself.

In its simplest form, a semaphore is a location in memory whose value can be tested and set by more than one process. The test and set operation is, so far as each process is concerned, atomic; once started nothing can stop it. The result of this operation is the addition of the current value of the semaphore to the set value, which can be positive or negative. Depending on the result of the operation, one process may have to sleep until the semaphore’s value is changed by another.

Say you had many cooperating processes reading records from and writing records to a single data file. You would want that file access to be strictly coordinated. A semaphore with an initial value of 1 could be used, and, around the file operating code, two semaphore operations could be implemented, the first to test and decrement the semaphore’s value and the second to test and increment it. The first process to access the file would try to decrement the semaphore’s value and it would succeed, the semaphore’s value now being 0. This process can now go ahead and use the data file. If another process wishing to use the file now tries to decrement the semaphore’s value, it would fail as the result would be -1. That process will be suspended until the first process has finished with the data file. When the first process has finished with the data file it will increment the semaphore’s value, making it 1 again. Now the waiting process can be woken and this time its attempt to increment the semaphore will succeed.

If all of the semaphore operations have succeeded and the current process does not need to be suspended, the system’s operating system goes ahead and applies the operations to the appropriate members of the semaphore array. Now it must check any waiting or suspended processes that may now apply for their semaphore operations, looking at each member of the operations pending queue in turn, testing to see whether the semaphore operations will succeed this time. If they will, then it removes the data structure representing this process from the operations pending list and applies the semaphore operations to the semaphore array. It wakes up the sleeping process, making it available to be restarted the next time the scheduler runs. The operating system keeps looking through the pending processes queue from the start until there is a pass where no semaphore operations can be applied and so no more processes can be woken.

Semaphore depth and priority

Semaphores are global entities and are not associated with any particular process. In this sense, semaphores have no owners, making it impossible to track ownership for any purpose, including error recovery. Semaphore protection works only if all the processes using the shared resource cooperate by waiting for it when it is unavailable and incrementing the semaphore value when relinquishing the resource. Since semaphores lack owners, there is no way to determine whether one of the cooperating processes has become uncooperative. Applications using semaphores must carefully detail cooperative tasks. All of the processes that share a resource must agree on which semaphore controls the resource.

There is a problem with semaphores, called deadlocks, which occur when one process has altered the semaphore’s value as it enters a critical region but then fails to leave this region because it crashed or was killed. Maintaining lists of adjustments to the semaphore arrays offers some protection. The idea is that when these adjustments are applied, the semaphores will be put back to the state that they were in before the process set of semaphore operations was applied.

There is another problem, known as process priority inversion, which is a form of indefinite postponement common in multitasking, preemptive executives with shared resources. It occurs when a high-priority task requests access to a shared resource which is currently allocated to a low-priority task. The high-priority task must block until the low-priority task releases the resource. This problem is exacerbated when the low-priority task is prevented from executing by one or more medium-priority tasks. Because the low-priority task is not executing, it cannot complete its interaction with the resource and release it. The high-priority task is effectively prevented from executing by lower-priority tasks.

Priority inheritance is an algorithm that calls for the lowest-priority task holding a resource to have its priority increased to that of the highest-priority task currently waiting for that resource. Each time a task blocks attempting to obtain the resource, the task holding the resource may have its priority increased. Some kernels support priority inheritance for local, binary semaphores that use the priority task wait queue blocking discipline. When a task is of higher priority than the task holding the semaphore blocks, the priority of the task holding the semaphore is increased to that of the blocking task. When the binary semaphore is released (i.e., not for a nested release), the holder’s priority is restored to its original value. The implementation of the priority inheritance algorithm takes into account the scenario in which a task holds more than one binary semaphore. The holding task will execute at the priority of the higher of the highest ceiling priority or at the priority of the highest- priority task blocked waiting for any of the semaphores the task holds. Only when the task releases all of the binary semaphores it holds will its priority be restored to the normal value.

Priority ceiling is an algorithm that calls for the lower-priority task holding a resource to have its priority increased to that of the highest-priority task which will ever block waiting for that resource. This algorithm addresses the problem of priority inversion, although it avoids changing the priority of the task holding the resource. The priority ceiling algorithm will only change the priority of the task holding the resource once. The ceiling priority is set at creation time and must be the priority of the highest-priority task which will ever attempt to acquire that semaphore. Some kernels support priority ceiling for local, binary semaphores that use the priority task wait queue blocking discipline. When a task of lower priority than the ceiling priority successfully obtains the semaphore, its priority is raised to the ceiling priority. When the task holding the task completely releases the binary semaphore (i.e., not for a nested release), the holder’s priority is restored to its previous value.

Identifying the highest-priority task that will attempt to obtain a particular semaphore can be a difficult task in a large, complicated system. Although the priority ceiling algorithm is more efficient than the priority inheritance algorithm with respect to the maximum number of task priority changes that may occur while a task holds a particular semaphore, the latter is more forgiving, in that it does not require this information. The implementation of the priority ceiling algorithm takes into account the scenario where a task holds more than one binary semaphore. The holding task will execute at the priority of the higher of the highest ceiling priority or at the priority of the highest-priority task blocked waiting for any of the semaphores the task holds. Only when the task releases all of the binary semaphores it holds will its priority be restored to its normal value.

Semaphore acquire, release and shutdown

A semaphore can be viewed as a protected variable whose value can be modified only by methods for creating, obtaining, and releasing a semaphore. Many kernels or operating systems support both binary and counting semaphores. A binary semaphore is restricted to values of zero or one, while a counting semaphore can assume any non-negative integer value.

A binary semaphore can be used to control access to a single resource, in particular, to enforce mutual exclusion for a critical section of code. To do this, the semaphore would be created with an initial value of one to indicate that no task is executing the critical section of code. On entry to the critical section, a task must issue the method for obtaining a semaphore to block other tasks from entering. On exit from the critical section, the task must issue the method for releasing the semaphore, to allow access for another task.

A counting semaphore can be used to control access to a pool of two or more resources. For example, access to three printers could be administered by a semaphore that is created with an initial count of three. When a task requires access to one of the printers, it issues the method for obtaining a semaphore. If a printer is not currently available, the task can wait for a printer to become available or return immediately. When the task has completed printing, it should issue the method for releasing a semaphore to allow other tasks access to the printer.

Task synchronization may be achieved by creating a semaphore with an initial count of zero. One task waits for the arrival of another task by issuing a method that obtains a semaphore when it reaches a synchronization point. The other task performs a corresponding semaphore release operation when it reaches its synchronization point, thus unblocking the pending task.

(1) Creating a semaphore

This method creates a binary or counting semaphore with a user-specified name as well as an initial count. If a binary semaphore is created with a count of zero (0) to indicate that it has been allocated, then the task that creates the semaphore is considered to be its the current holder. At create time, the method for ordering tasks waiting in the semaphore’s task wait queue (by FIFO or task priority) is specified. In addition, the priority inheritance or priority ceiling algorithm may be selected for local, binary semaphores that use the priority task wait queue blocking discipline. If the priority ceiling algorithm is selected, then the highest priority of any task that will attempt to obtain this semaphore must be specified.

(2) Obtaining semaphore IDs

When a semaphore is created, the kernel generates a unique semaphore ID and assigns it to the created semaphore by either of the two methods. First, after the semaphore is invoked by means of the semaphore creation method, the semaphore ID is stored in a user-provided location. Second, the semaphore’s ID may be obtained later using the semaphore identify method. The semaphore’s ID is used by other semaphore manager methods to access this semaphore.

(3) Acquiring a semaphore

A simplified version of this method can be described as follows: if the semaphore’s count is greater than zero then decrement the semaphore’s count, or else wait for release of the semaphore and return “successful”.

When the semaphore cannot be immediately acquired, one of the following situations applies. By default, the calling task will wait forever to acquire the semaphore. If the task waits to acquire the semaphore, then it is placed in the semaphore’s task wait queue in either FIFO or task priority order. If the task blocked waiting for a binary semaphore is using priority inheritance and the task’s priority is greater than that of the task currently holding the semaphore, then the holding task will inherit the priority of the blocking task. All tasks waiting on a semaphore are returned an error code when the semaphore is deleted.

When a task successfully obtains a semaphore using priority ceiling and the priority ceiling for this semaphore is greater than that of the holder, then the holder’s priority will be elevated.

(4) Releasing a semaphore

A simplified version of the semaphore release method is as follows: if no tasks are waiting for this semaphore then increment the semaphore’s count, or else assign the semaphore to a waiting task and return “successful”. If this is the outermost release of a binary semaphore that uses priority inheritance or priority ceiling and the task does not currently hold any other binary semaphores, then the task performing the semaphore release will have its priority restored to its normal value.

(5) Deleting a semaphore

The semaphore delete method removes a semaphore from the system and frees its control block. A semaphore can be deleted by any local task that knows its ID. As a result of this directive, all tasks blocked waiting to acquire the semaphore will be readied and returned a status code that indi- cates that the semaphore was deleted. Any subsequent references to the semaphore’s name and ID are invalid.

Condition and locker

The paragraphs below present a series of basic synchronization problems including serialization and mutual exclusion, and show some ways of using semaphores to solve them.

(1) Signaling

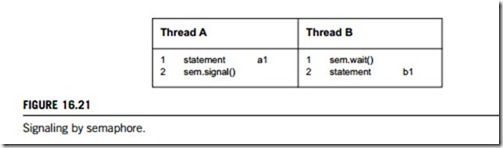

Possibly the simplest use for a semaphore is signaling, which means that one thread sends a signal to another to indicate that something has happened. Signaling makes it possible to guarantee that a section of code in one thread will run before a section of code in another; in other words, it solves the serialization problem.

Assume that we have a semaphore named “sem” with initial value 0, and the threads A and B have shared access to it as given in Figure 16.21. The word “statement” represents an arbitrary program statement. To make the example concrete, imagine that “a1” reads a line from a file, and “b1” displays the line on the screen. The semaphore in this program guarantees that thread A has completed a1 before thread B begins b1. Here is how it works: if thread B gets to the wait statement first, it will find the initial value, zero, and it will block. Then when thread A signals, thread B proceeds. Similarly, if thread A gets to the signal first then the value of the semaphore will be incremented, and when thread B gets to the wait, it will proceed immediately. Either way, the order of a1 and b1 is guaranteed.

This use of semaphores is the basis of the names signal and wait, and in this case the names are conveniently mnemonic. Unfortunately, we will see other cases where the names are less helpful. Speaking of meaningful names, “sem” is not one. When possible, it is a good idea to give a semaphore a name that indicates what it represents. In this case, a name such as a1 Done might be good, where a1 Done ¼ 0 means that a1 has not executed and a1 Done ¼ 1 means it has.

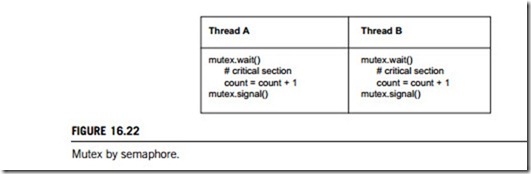

(2) Mutex

A second common use for semaphores is to enforce mutual exclusion. We have already seen one use for this, when controlling concurrent access to shared variables. The mutex guarantees that only one thread accesses the shared variable at a time.

A mutex is like a token that passes from one thread to another, allowing one thread at a time to proceed. For example, in “The Lord of the Flies” a group of children use a conch as a mutex. To speak, you have to hold the conch. As long as only one child holds the conch, only one can speak. Similarly, in order for a thread to access a shared variable, it has to “get” the mutex; when it is finished, it “releases” the mutex. Only one thread can hold the mutex at a time.

Create a semaphore named mutex that is initialized to 1. A value of “one” (1) means that a thread may

precede and may access the shared variable. A value of “zero” (0) means that it has to wait for another thread to release the mutex. A code segment for performing mutex by semaphore is given in Figure 16.22.

Since mutex is initially 1, whichever thread gets to the wait first will be able to proceed immediately. Of course, the act of waiting on the semaphore has the effect of decrementing it, so the second thread to arrive will have to wait until the first signals.

In this example, both threads are running the same code. This is sometimes called a symmetric solution. If the threads have to run different code, the solution is asymmetric. Symmetric solutions are often easier to generalize. In this case, the mutex solution can handle any number of concurrent threads without modification. As long as every thread waits before performing an update and signals

afterwards, then no two threads can access the count concurrently. Often the code that needs to be protected is called the critical section, to which it is critically important to prevent concurrent access.

In the tradition of computer science and mixed metaphors, there are several other ways people sometimes talk about mutexes. In the metaphor we have been using so far, the mutex is a token that is passed from one thread to another. In an alternative metaphor, we think of the critical section as a room, and only one thread is allowed to be in the room at a time. In this metaphor, mutexes are called locks, and a thread is said to lock the mutex before entering and unlock it while exiting. Occasionally, though, people mix the metaphors and talk about “getting” or “releasing” a lock, which does not make much sense. Both metaphors are both potentially useful and potentially misleading. As you work on the next problem, try to figure out both ways of thinking and see which one leads you to a solution.

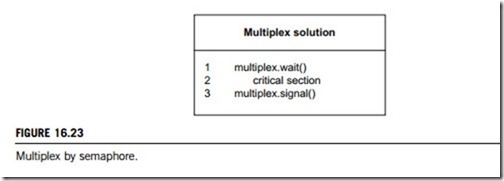

(3) Multiplex

Generalize the previous solution so that it allows multiple threads to run in the critical section at the same time, but it enforces an upper limit on the number of concurrent threads. In other words, no more than n threads can run in the critical section at the same time.

This pattern is called a multiplex. In real life, the multiplex problem occurs at busy nightclubs where there are a maximum number of people allowed in the building at a time, either to maintain fire safety or to create the illusion of exclusivity. At such places a bouncer usually enforces the synchronization constraint by keeping track of the number of people inside and barring arrivals when the room is at capacity. Then, whenever one person leaves another is allowed to enter. Enforcing this constraint with semaphores may sound difficult, but it is almost trivial (Figure 16.23).

To allow multiple threads to run in the critical section, just initialize the mutex to n, which is the maximum number of threads that should be allowed. At any time, the value of the semaphore

represents the number of additional threads that may enter. If the value is zero, then the next thread will block until one of the threads inside exits and signals. When all threads have exited the value of the semaphore is restored to n.

Since the solution is symmetric, it is conventional to show only one copy of the code, but you should imagine multiple copies of the code running concurrently in multiple threads.

What happens if the critical section is occupied and more than one thread arrives? Of course, what we want is for all the arrivals to wait. This solution does exactly that. Each time an arrival joins the queue, the semaphore is decremented, so that the value of the semaphore (negated) represents the number of threads in the queue. When a thread leaves, it signals the semaphore, incrementing its value and allowing one of the waiting threads to proceed.

Thinking again of metaphors, in this case it is useful to think of the semaphore as a set of tokens (rather than a lock). As each thread invokes wait, it picks up one of the tokens; when it invokes a signal it releases one. Only a thread that holds a token can enter the room, and if none are available when a thread arrives, it waits until another thread releases one.

In real life, ticket windows sometimes use a system like this. They hand out tokens (sometimes poker chips) to customers in line. Each token allows the holder to buy a ticket.

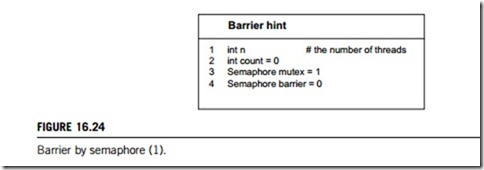

(4) Barrier

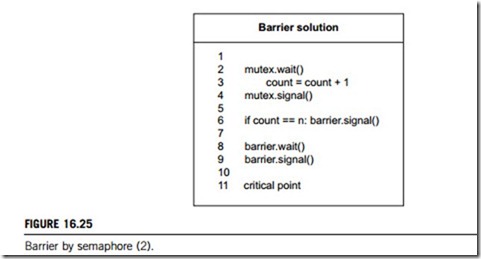

The barrier method is hinted at by presenting the variables used in the solution and examining their roles in Figure 16.24.

Finally, here in Figure 16.25 is a working barrier. The only change from signaling is the addition of another signal after waiting at the barrier. Now as each thread passes, it signals the semaphore so that the next thread can pass. This pattern, a wait and a signal in rapid succession, occurs often enough that it has a name; it is called a turnstile, because it allows one thread to pass at a time, and it can be locked to bar all threads.

In its initial state (zero), the turnstile is locked. The n-th thread unlocks it and then all n threads go through.

TIMER

Most computer control systems include electronic timers, which are usually just digital counters that are set to a number by software, and then count down to zero. When they reach zero, they interrupt the microprocessor or CPU, which triggers interrupt handling. Another common form of timer is a number

that is compared to a counter. This is somewhat harder to program, but can be used to measure events or to control motors (using a digital electronic amplifier to perform pulse-width modulation). Embedded systems often use a hardware timer to implement a list of software timers. Basically, the hardware timer is set to expire at the time the next software timer comes up. The hardware timer’s interrupt software handles the housekeeping of notifying the rest of the software, finding the next software timer to expire, and resetting the hardware timer to the next software timer’s expiration.

Kernel timers

Whenever you need to schedule an action to happen later, without blocking the current process until that time arrives, kernel timers are the tool for you. These timers are used to schedule execution of a function at a particular time in the future, based on the clock tick, and can be used for a variety of tasks; for example, polling a device by checking its state at regular intervals when the hardware cannot fire interrupts. Other typical uses of kernel timers are turning off the floppy motor or finishing another lengthy shut down operation. Finally, the kernel itself uses timers in several situations, including the implementation of schedule timeout.

A kernel timer is a data structure that instructs the kernel to execute a function with an argument at a given time, all user-defined. The functions almost certainly do not run while the process that registered them is executing. When a timer runs, however, the process that scheduled it could be asleep, executing on a different microprocessor, or quite possibly have exited altogether.

In fact, kernel timers are run as the result of a software interrupt. When running in this sort of atomic context, your code is subject to a number of constraints. Timer functions must be atomic in all ways, but there are some additional issues brought about by the lack of a process context. Repetition is called for because the rules for atomic contexts must be followed assiduously, or the system will find itself in deep trouble.

One other important feature of kernel timers is that a task can reregister itself to run again at a later time. This is possible because each timer list structure is unlinked from the list of active timers before being run and can, therefore, be immediately relinked elsewhere. Although rescheduling the same task over and over might appear to be a pointless operation, it is sometimes useful, for example, in the polling of devices. Therefore, a timer that reregisters itself always runs on the same CPU.

An important feature of timers is that they are a potential source of race conditions, even on single- processor systems because they are asynchronous with other code. Therefore, any data structures accessed by the timer function should be protected from concurrent access, either by being atomic types or by using spin locks.

The implementation of the kernel timers has been designed to meet the following requirements and assumptions: (1) timer management must be as lightweight as possible; (2) the design should scale well as the number of active timers increases; (3) most timers expire within a few seconds or minutes at most, while timers with long delays are pretty rare; (4) a timer should run on the same CPU that registered it.

The solution devised by kernel developers is based on a per-CPU data structure. The “Timer list” structure includes a pointer to that data structure in its base field. If base is null, the timer is not scheduled to run; otherwise, the pointer tells which data structure (and, therefore, which CPU) runs it. Whenever kernel code registers a timer, the operation is eventually performed, which, in turn, adds the new timer to a double-linked list within a cascading table associated with the current CPU.

The cascading table works like this: if the timer expires in the next 0 255 jiffies, it is added to one of the 256 lists devoted to short-range timers using the least significant bits of the expired field. If it expires farther in the future (but before 16,384 jiffies), it is added to one of 64 lists based on bits 9 14 of the expired fields. For timers expiring even farther, the same trick is used for bits 15 20, 21 26, and 27 31. Timers with an expire field pointing still farther into the future (something that can happen only on 64-bit platforms) are hashed with a delay value of 0xffffffff, and timers that expired in the past are scheduled to run at the next timer tick. (A timer that is already expired may sometimes be registered in high-load situations, especially if you run a preemptible kernel.)

Keep in mind, however, that a kernel timer is far from perfect, as it suffers from artifacts induced by hardware interrupts, as well as other timers and other asynchronous tasks. While a timer associated with simple digital I/O can be enough for simple tasks such as running a stepper motor or other amateur electronics, it is usually not suitable for production systems in industrial environments. For such tasks, you will most likely need to resort to a real-time kernel extension.

Watchdog timers

(1) Working mechanism

A watchdog timer is a piece of hardware, often built into a microcontroller or CPU chipset, that can cause a microprocessor reset when it judges that the system has hung, or is no longer executing the correct sequence of program code. The hardware component of a watchdog is a counter that is set to a certain value and then counts down towards zero. It is the responsibility of the software to set the count to its original value often enough to ensure that it never reaches zero. If it does reach zero, it is assumed that the software has failed in some manner and the CPU is reset.

It is also possible to design the hardware so that a kick which occurs too soon will cause a bite, but to use such a system, very precise knowledge of the timing characteristics of the main loop of the program is required. A properly designed watchdog mechanism should, at the very least, catch events that hang the system. In electrically noisy environments, a power glitch may corrupt the program counter, stack pointer, or data in RAM, and the software would crash almost immediately, even if the code is completely bug-free. This is exactly the sort of transient failure that watchdogs will catch.

Bugs in software can also cause the system to hang, if they lead to an infinite loop, an accidental jump out of the code area of memory, or a deadlock condition (in multitasking situations). Obviously, it is preferable to fix the root cause, rather than getting the watchdog to pick up the pieces, but in a complex embedded system it may not be possible to guarantee that there are no bugs. At least by using a watchdog you can guarantee that none of those bugs will hang the system indefinitely.

Once your watchdog has bitten, you have to decide what action to take. The hardware will usually assert the microprocessor’s reset line, but other actions are also possible. For example, when the watchdog bites it may directly disable a motor, engage an interlock, or sound an alarm until the software recovers. Such actions must leave the system in a safe state if, for some reason, the system’s software is unable to run at all (perhaps due to chip death) after the failure.

A microcontroller or CPU with an internal watchdog will almost always contain a status bit that gets set when a bite occurs. By examining this bit after emerging from a watchdog-induced reset, we can decide whether to continue running, switch to a fail-safe state, and/or display an error message. At the very least, you should count such events, so that a persistently errant application would not be restarted indefinitely. A reasonable approach might be to shut the system down if three watchdog bites occur in one day.

If we want the system to recover quickly, initialization after a watchdog reset should be much shorter than at power-on. On the other hand, in some systems it is better to do a full set of self- tests, since this might identify the root cause of the watchdog timeout. In terms of the outside world, the recovery may be instantaneous, and the user may not even know a reset occurred. The recovery time will be the length of the watchdog timeout plus the time it takes the system to reset and perform its initialization. How well the device recovers depends on how much persistent data it requires, and whether those data are stored regularly and read after the system resets.

(2) Sanity checks

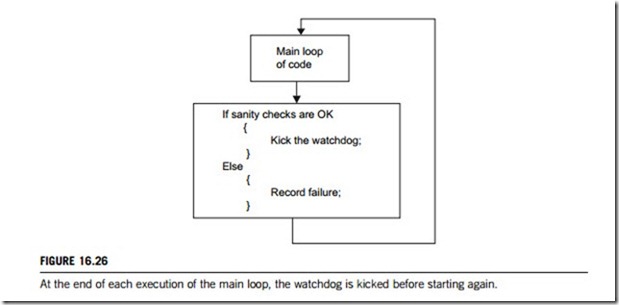

Kicking the watchdog at a regular interval proves that the software is running. It is often a good idea to kick the watchdog only if the system passes some sanity check, as shown in Figure 16.26. Stack depth, number of buffers allocated, or the status of some mechanical component may be checked before deciding to kick the watchdog. Good design of such checks will help the watchdog to detect more errors.

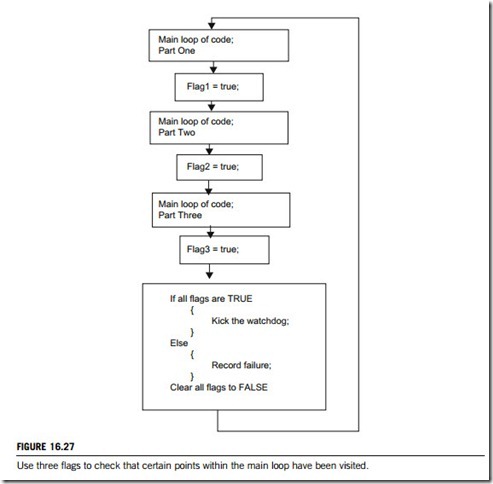

One approach is to clear a number of flags before each loop is started, as shown in Figure 16.27. Each flag is set at a certain point in the loop. At the bottom of the loop the watchdog is kicked, but first the flags are checked to see that all of the important points in the loop have been visited. The multitasking or the multithreads approach is based on a similar set of sanity flags.

For a specific failure, it is often a good idea to try to record the cause (possibly in non-volatile RAM), since this may be difficult to establish after the reset. If the watchdog bite is due to a bug, then any other information you can record about the state of the system or the currently active task will be valuable when trying to diagnose the problem.

(3) Timeout interval

Any safety chain is only as good as its weakest link, and if the software policy that is used to decide when to kick the watchdog is not good, watchdog hardware can make the system less reliable. One

approach is to pick an interval that is several seconds long. This is a robust approach; some systems require fast recovery, but others only need the system not to be left in a hung state indefinitely. For these more sluggish systems, there is no need to do precise measurements of the worst-case time of the program’s main loop to the nearest millisecond.

When picking the timeout one needs to consider the greatest amount of damage the device can do between the original failure and the watchdog biting. With a slowly responding system, such as a large thermal mass, it may be acceptable to wait 10s before resetting, since this will guarantee that there will be no false watchdog resets.

While on the subject of timeouts, it is worth pointing out that some watchdog circuits allow the very first timeout to be considerably longer than those following. This allows the microprocessor to initialize without having to worry about the watchdog biting.

Although the watchdog can often respond fast enough to halt mechanical systems, it offers little protection for damage that can be done by software alone. Consider an area of nonvolatile RAM that may be overwritten with rubbish data if some loop goes out of control. It is likely that overwrite would occur far faster than a watchdog could detect the fault, and for those situations some other protection, such as a checksum may be needed. The watchdog is really just one layer of protection, and should form part of a comprehensive safety network.

On some microcontrollers or CPUs, the built-in watchdog has a maximum timeout of the order of a few hundred milliseconds, obtained by multiplying the time interval in software. Say the hardware provides a 100ms timeout, but you only want to check the system for sanity every 300ms. You will have to kick the watchdog at an interval shorter than 100ms, but it will only do the sanity check every third time the kick function is called. This approach may not be suitable for a single-loop design if the main loop could take longer than 100ms to execute.

One possibility is to move the sanity check out to an interrupt. This would be called every 100ms, and would then kick the watchdog. On every third try, the interrupt function would check a flag that

indicates that the main loop is still spinning. This flag is set at the end of the main loop, and cleared by the interrupt as soon as it has read it. If kicking the watchdog from an interrupt, it is vital to have a check on the main loop. Otherwise it is possible to get into a situation where the main loop has hung, but the interrupt continues to kick the watchdog, which never gets a chance to reset the system.

(4) Self-test

Assume that the watchdog hardware fails in such a way that it never bites. The fault would only be discovered when some failure that normally leads to a reset leads instead to a hung system. If such a failure was acceptable, the watchdog was not needed in the first place.

Many systems contain a means to disable the watchdog, such as a jumper that connects its output to the reset line. This is necessary for some test modes, and for debugging with any tool that can halt the program. If the jumper falls out, or a service engineer who removed the jumper for a test forgets to replace it, the watchdog will be rendered toothless.

The simplest way for a device to do a start-up self-test is to allow the watchdog to timeout, causing a microprocessor to be reset. To avoid looping infinitely in this way, it is necessary to distinguish the power-on case from the watchdog reset case. If the reset was due to a power-on, then perform this test, but if due to a watchdog bite, then we may already be running the test. By writing a value in RAM that will be preserved through a reset, you can check whether the reset was due to a watchdog test or to a real failure. A counter should be incremented while waiting for the reset, after which it should be checked to see how long before the timeout, so you are sure that the watchdog bit after the correct interval. If counting the number of watchdog resets to decide whether the system should give up trying, then be sure that you do not inadvertently count the watchdog test reset as one of those.

Task timers

A task-timer strategy has four objectives in a multitasking or multithreading system: (1) to detect an operating system, (2) to detect an infinite loop in any of the tasks, (3) to detect deadlock involving two or more tasks, (4) to detect whether some lower-priority tasks are never running because higher- priority tasks are hogging the CPU.

Typically, not enough timing information is available on the possible paths of any given task to check for a minimum execution time, or to set the time limit on a task to be exactly the time taken for the longest path. Therefore, although all infinite loops are detected, an error that causes a loop to execute a number of extra iterations may go undetected by the designed task timer mechanism. A number of other considerations have to be taken into account to make any scheme feasible:

(a) the extra code added to the normal tasks (as distinct from a task created for monitoring tasks) must be small, to reduce the likelihood of becoming prone to errors itself;

(b) the amount of system resources used, especially CPU cycles, must be reasonable.

Most tasks have some minimum period during which they are required to run. A task may run in reaction to a timer that occurs at a regular interval. These tasks have a start point through which they pass in each execution loop. They are referred to as regular tasks. Other tasks respond to outside events, the frequency of which cannot be predicted, and are referred to as waiting tasks.

The watchdog can be used as a task timer. Its timeout can be chosen to be the maximum time during which all regular tasks have had a chance to run from their start point through one full loop back to their start point again. Each task has a flag that can have two values, ALIVE and UNKNOWN. The flag is later read and written by the monitor. The monitor’s job is to wake up before the watchdog timeout expires and check the status of each flag. If all flags contain the value ALIVE, every task had its turn to execute and the watchdog may be kicked. Some tasks may have executed several loops and set their flag several times to ALIVE, which is acceptable. After kicking the watchdog, the monitor sets all of the flags to UNKNOWN. By the time the monitor task executes again, all of the UNKNOWN flags should have been overwritten with ALIVE.

Timer creation and expiration

An active timer will perform its synchronization action when its expiration time is reached. A timer can be created using the Timer-Create system call. It is bound to a task and to a clock device that will measure the passage of time for it. This is useful for ownership and proper tear-down of timer resources. If a task is terminated, then the timer resources owned by it are also terminated as well. It is also possible to explicitly terminate a timer using the Timer-Terminate system call.

Timers allow synchronization primarily through two interfaces. Timer-Sleep is a synchronous call, in which the caller specifies a time to wake up and indicates whether that time is relative or absolute. Relative times are less useful for real-time software since program-wide accuracy may be skewed by preemption. For example, a thread which samples data and then sleeps for 5 seconds may be preempted between sampling and sleeping, causing a steadily increasing skew from the correct time base. Timers that are terminated or canceled while sleeping return an error to the user. Another interface that can be named “Timer-Arm” provides an asynchronous interface to timers. Through it the user specifies an expiration time, an optional period, and a port which will receive expiration notification messages.

When the expiration time is reached, the kernel sends an asynchronous message containing the current time to this port, which then carries on stopping the related task that owns this timer. If the timer is specified as periodic, then it will rearm itself with the specified period relative to the last expiration time.

Last, the Timer-Cancel call allows the user to cancel the expiration of a pending timer. For periodic timers, only the current expiration, or all forthcoming expirations can be cancelled. These last facilities, periodic timers and partial cancelation, are important because they allow an efficient and correct implementation of periodic computation. This permits a user-level implementation of real-time periodic threads.