MULTICOMPUTER OPERATING SYSTEMS

A multicomputer system may be either a network (loosely coupled computers) or a cluster (tightly coupled computers).

A computer network is an open system in which two or more computers are connected together to share resources such as hardware, data, and software. Most common are the local area network (LAN) and the wide area network (WAN). A LAN can range from a few computers in a small office to several thousand computers spread throughout dozens of buildings on a university campus or in an industrial park. Expand this latter scenario to encompass multiple geographic locations, possibly on different continents, and you have a WAN.

A computer cluster is a group of physically linked computers that work together so closely that in many respects they behave as a single computer. A cluster consists of multiple stand-alone computers acting in parallel across a local high-speed network; clustered computers are therefore usually much more tightly coupled than networked ones. The components are commonly, but not always, connected to each other through fast local area networks. One example is Columbia at NASA in the United States, a supercomputer built from 20 SGI Altix clusters with a total of 10,240 CPUs (Figure 17.4).

In Chapter 10, we discussed some industrial control networks including CAN, SCADA, Ethernet and LAN. CAN and Ethernet networks are tightly coupled networks similar to computer clusters, while SCADA and LAN networks are more loosely coupled, similar to computer networks.

This section will explain the operating systems used in these multicomputer systems, including cluster, network and parallel operating systems.

Cluster operating systems

In a distributed operating system, it is imperative to have a single system image so that users can interact with the system as if it were a single computer. It is also necessary to implement fault tolerance or error recovery features; that is, when a node fails, or is removed from the cluster, the rest of the processes running on the cluster can continue. The cluster operating system should be scalable, and should make the system highly available.

The single system image should implement a single entry point to the cluster, a single file hierarchy, a single control point, virtual networking, a single memory space, a single job management system, and a single user interface. Furthermore, for the high availability of the cluster, the operating system should feature a single I/O space, a single process space, and process migration.

Over the last three decades, researchers have constructed many prototype and production-quality cluster operating systems. We will now outline two of these cluster operating systems: Solaris MC and MOSIX.

(1) Solaris MC

Solaris MC is a prototype distributed operating system for clusters, built on top of the Solaris operating system. It provides a single system image for a cluster that runs the Solaris™ UNIX® operating system, so that the cluster appears to the user and to applications as a single computer. Thus, Solaris MC shows how an existing, widely used operating system can be extended to support clusters.

Most of Solaris MC consists of loadable modules that extend the Solaris operating system, which minimizes modifications to the existing kernel. It provides the same application binary and programming interfaces (ABI/API) as the Solaris operating system, which means that existing application and device driver binaries can run unmodified. To do this, Solaris MC has a global file system, extends process operations across all the nodes, allows transparent access to remote devices, and makes the cluster appear as a single machine on the network. It also supports remote process execution, and the external network is transparently accessible from any node in the cluster.

Solaris MC is designed for high availability: if a node fails, the cluster can continue to operate. It runs a separate kernel on each node. A failed node is detected automatically and system services are reconfigured to use the remaining nodes, so only the programs that were using the resources of the failed node are affected by its failure. Solaris MC does not introduce new failure modes into the UNIX operating system.

It has a distributed caching file system with UNIX consistency semantics, based on a virtual memory and file system architecture. The design takes advantage of an object model as the communication mechanism and uses C++ as the programming language.

It has a global file system, called the Proxy File System (PXFS), which makes file accesses location-transparent. A process can open a file located anywhere on the system, and each node uses the same pathname to the file. The file system also preserves the standard UNIX file access semantics, and files can be accessed simultaneously by different nodes. PXFS is built on top of the original Solaris file system at the vnode interface, and does not require any kernel modifications.

Solaris MC has a global process management system that makes the location of a process transparent to the user. The threads of a single process must run on the same node, but each process can run on any node. The system is designed so that the semantics used in Solaris for process operations are supported, as well as providing good performance, supplying high availability, and minimizing changes to the existing kernel.

It has an I/O subsystem that makes it possible to access any device from any node on the system, no matter which node the device is physically connected to. Applications can access all devices on the system as if they were local devices. Solaris MC carries over Solaris’s dynamically loadable and configurable device drivers, and configurations are shared through a distributed device server.

Solaris MC has a networking subsystem that creates a single image environment for networking applications. All network connectivity is consistent for each application, no matter which node it runs on. This is achieved by using a packet filter to route packets to the proper node and performing protocol processing on that node. Network services can be replicated on multiple nodes to provide higher throughput and lower response times. Multiple processes register themselves as servers for a particular service. The network subsystem then chooses a particular process when a service request is received. For example, rlogin, telnet, http and ftp servers are replicated on each node by default. Each new connection to these services is sent to a different node in the cluster based on a load-balancing policy, which allows the cluster to handle multiple http requests, for example, in parallel.
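The register-then-dispatch behaviour of replicated services can be illustrated with a small sketch. It is not taken from Solaris MC: the `ServiceRegistry` class and its method names are hypothetical, and round-robin is only an assumed stand-in for the load-balancing policy, which the text does not specify.

```python
from collections import defaultdict
from itertools import count


class ServiceRegistry:
    """Hypothetical registry mapping a service name to the nodes serving it."""

    def __init__(self):
        self._servers = defaultdict(list)   # service -> list of node names
        self._next = defaultdict(count)     # service -> round-robin counter

    def register(self, service, node):
        # A server process on `node` announces that it handles `service`.
        self._servers[service].append(node)

    def dispatch(self, service):
        # Choose the node for a new connection (assumed round-robin policy).
        nodes = self._servers[service]
        if not nodes:
            raise LookupError(f"no server registered for {service!r}")
        return nodes[next(self._next[service]) % len(nodes)]


registry = ServiceRegistry()
for node in ("node-a", "node-b", "node-c"):
    registry.register("http", node)

# Four incoming http connections are spread across the three replicas.
assignments = [registry.dispatch("http") for _ in range(4)]
```

Because each connection goes to a different replica, several requests can be served in parallel, which is the effect the paragraph above describes.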

In conclusion, Solaris MC provides high availability in many ways, including a failure detection and membership service, an object and communication framework, a system reconfiguration service and a user-level program reconfiguration service. Some nodes can be designated as backup nodes, for example to take over if a node attached to a disk drive fails. Any failures are transparent to the user.

(2) MOSIX

MOSIX is a software package that extends the Linux kernel with cluster computing capabilities. The enhanced Linux kernel allows a cluster of Intel-based computers of any size to work together as a single system with an SMP (symmetric multiprocessor) architecture.

MOSIX operations are transparent to user applications. Users run applications sequentially or in parallel just as they would on an SMP, without needing to know where their processes are running or to be concerned with what other users are doing at the time. After a new process is created, the system attempts to assign it to the best available node at that time. MOSIX continues to monitor all running processes (including the new one). In order to maximize overall cluster performance, it will automatically move processes among the cluster nodes when the load is unbalanced. This is all accomplished without changing the Linux interface.

As may be obvious already, existing applications do not need any modifications to run on a MOSIX cluster, nor do they need to be linked to any special libraries. In fact, it is not even necessary to specify the cluster nodes on which the application will run. MOSIX does all this automatically and transparently. In this respect, MOSIX acts like a “fork and forget” paradigm for SMP systems. Many user processes can be created at a home node, and MOSIX will assign the processes to other nodes if necessary. If the user were to list their processes (for example with the ps command), all processes owned by this user would be shown as if they were running on the node the user started them on, thus providing the user with a single server image. Since MOSIX is implemented in the operating system’s kernel, its operations are completely transparent to user-level applications.

At its core are three algorithms: load-balancing, memory ushering, and resource management. These respond to changes in the usage of cluster resources so as to improve the overall performance of all running processes. The algorithms use preemptive (online) process migration to assign and reassign running processes amongst the nodes, which ensures that the cluster takes full advantage of the available resources. The dynamic load-balancing algorithm ensures that the load across the cluster is evenly distributed. The memory ushering algorithm prevents excessive hard disk swapping by allocating or migrating processes to nodes that have sufficient memory.
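How load balancing and memory ushering interact when a node is chosen for a process can be sketched as follows. This is a simplified illustration, not the MOSIX algorithms themselves: the `Node` fields, the `choose_node` function, and the fallback rule when no node has enough memory are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    load: float        # assumed load metric, lower is better
    free_mem_mb: int   # memory currently available on the node


def choose_node(nodes, needed_mem_mb):
    """Pick the least-loaded node that can hold the process in memory."""
    # Memory ushering: exclude nodes where the process would force swapping.
    fits = [n for n in nodes if n.free_mem_mb >= needed_mem_mb]
    # Assumed fallback: if no node has enough memory, consider all of them.
    candidates = fits or nodes
    # Load balancing: among the candidates, prefer the lowest load.
    return min(candidates, key=lambda n: n.load)


cluster = [
    Node("n1", load=0.2, free_mem_mb=256),
    Node("n2", load=0.5, free_mem_mb=4096),
    Node("n3", load=0.9, free_mem_mb=8192),
]
small = choose_node(cluster, needed_mem_mb=128)    # fits anywhere
large = choose_node(cluster, needed_mem_mb=1024)   # does not fit on n1
```

The small process goes to the least-loaded node, while the large one is steered away from `n1` even though `n1` has the lowest load, because placing it there would cause swapping.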

MOSIX resource management algorithms are decentralized, so every node in the cluster is both a master for locally created processes, and a server for remote (migrated) processes. The benefit of this decentralization is that processes that are running on the cluster are minimally affected when nodes are added to or removed from it. This greatly adds to the scalability and the high availability of the system.

Another advantageous feature of MOSIX is its self-tuning and monitoring algorithms. These detect the speed of the nodes, and monitor their load and available memory, as well as the interprocess communication and I/O rates of each running process. Using these data, MOSIX can make optimized decisions about where to locate processes.

In the same manner that NFS (Network File System) provides transparent access to a single consistent file system, MOSIX enhancements provide a transparent and consistent view of processes running on the cluster. To provide consistent access to the file system, it uses a shared MOSIX File System. This makes all directories and regular files throughout a cluster available from all nodes, and provides absolute consistency to files that are viewed from different nodes. The Direct File System Access (DFSA) provision extends the capability of a migrated process to perform I/O operations locally, in the current (remote) node. This provision decreases communication between I/O-bound processes and their home nodes, allowing such processes to migrate with greater flexibility among the cluster’s nodes, e.g., for more efficient load-balancing, or to perform parallel file and I/O operations. Currently, the MOSIX File System meets the DFSA standards.

The single system image model of MOSIX is based on the home node model. In this model, all users’ processes seem to run on the users’ login node. Every new process is created on the same node (or nodes) as its parent. The execution environment of a user’s process is the same as the user’s login node. Migrated processes interact with the user’s environment through the user’s home node; however, wherever possible, the migrated process uses local resources. The system is set up such that as long as the load of the user’s login node remains below a threshold value, all their processes are confined to that node. When the load exceeds this value, the process migration mechanism will begin to migrate a process or processes to other nodes in the cluster. This migration is done transparently without any user intervention.
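The threshold rule of the home node model can be illustrated with a short sketch. It is a simplification under stated assumptions: load is approximated by a process count, and the `rebalance` function and its stopping rule are hypothetical rather than part of MOSIX.

```python
def rebalance(loads, home, threshold):
    """Migrate processes off `home` one at a time while it exceeds `threshold`.

    `loads` maps node name -> number of running processes (a stand-in for load).
    Returns (moves, new_loads), where `moves` lists the destination of each
    migrated process in order.
    """
    loads = dict(loads)                      # work on a copy
    others = [n for n in loads if n != home]
    moves = []
    while others and loads[home] > threshold:
        target = min(others, key=loads.get)  # least-loaded remote node
        if loads[target] + 1 >= loads[home]:
            break                            # migrating would not help
        loads[home] -= 1
        loads[target] += 1
        moves.append(target)
    return moves, loads


moves, final = rebalance({"home": 6, "a": 1, "b": 2}, home="home", threshold=3)
```

As long as the home node stays at or below the threshold nothing moves; once it is exceeded, processes are migrated until the home node is back at the threshold or no remote node is a better host.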

MOSIX is flexible and can be used to define different cluster types, even those on various kinds of machines or LAN speeds. Similarly to Solaris MC, MOSIX is built upon existing software so that it is easy to make the transition from a standalone to a clustered system.

It supports cluster configurations having a small or large number of computers with minimal scaling overheads. A small low-end configuration may consist of several PCs, connected by Ethernet. A larger configuration may consist of workstations connected by a higher-speed LAN such as Fast Ethernet. A high-end configuration may consist of a large number of SMP and non-SMP computers connected by a high-performance LAN, such as Gigabit Ethernet. For example, the scalable PC cluster at Hebrew University, where the MOSIX development is based, consists of Pentium servers connected by a Fast Ethernet LAN.

Network operating systems

Network operating systems are implementations of loosely coupled operating systems on top of loosely coupled hardware. They are software that supports the use of a network of machines. Users are aware that they are using a set of computers, and the operating system provides facilities that ease the use of remote resources located across the network. These resources are made available as services and may be digital controllers, printers, microprocessors, file systems or other devices. Some resources are connected to and managed by a particular machine and are made available to other machines in the network via a service or daemon; dedicated hardware devices such as printers and tape drives are classic examples. Other resources, such as disks and memory, can be organized into true distributed systems and used seamlessly by all machines. Examples of basic services available on a network operating system are starting a shell session on a remote computer (remote login), running a program on a remote machine (remote execution) and transferring files to and from remote machines (remote file transfer).

Generally speaking, network operating systems run on network routers and switches. Their development can be traced through three generations, each with distinctly different architectural and design goals.

(1) The first generation of network operating systems: monolithic architecture

Typically, first-generation network operating systems for routers and switches were proprietary images running in a flat memory space, often directly from flash memory or ROM. While supporting multiple processes for protocols, packet handling and management, they operated using a cooperative multitasking model in which each process would run to completion or until it voluntarily relinquished the CPU. All first-generation network operating systems shared one trait: they eliminated the risks of running full-size commercial operating systems on embedded hardware. Memory management, protection and context switching were either rudimentary or non-existent, with the primary goals being a small footprint and high speed of operation. Nevertheless, these operating systems made networking commercially viable and were deployed on a wide range of products.

(2) The second generation of network operating systems: control plane modularity

The mid-1990s were marked by a significant increase in the use of data networks worldwide, which quickly challenged the capacity of existing routers and switches. By this time, it had become evident that embedded platforms could run full-size commercial operating systems, at least on high-end hardware, but with one catch: they could not sustain packet forwarding at satisfactory data rates. A breakthrough solution was needed. It came in the concept of a hard separation between the control and forwarding planes, an approach that became widely accepted after the success of the industry’s first application-specific integrated circuit (ASIC)-driven routing platform, the Juniper Networks M40. The operating systems on such platforms are freed from packet switching and can therefore focus on control plane functions. Unlike its first-generation counterparts, a second-generation operating system can fully use the potential of multitasking, multithreading, memory management and context manipulation, all of which make system-wide failures less common. Most core and edge routers or switches installed in the late 1990s are running second-generation operating systems that are currently responsible for moving the bulk of traffic on the Internet and in corporate networks.

(3) The third generation of network operating systems: flexibility, scalability and continuous operation

Although second-generation network operating systems were very successful, they face new challenges. Increased competition led to the need for lower operating expenses, which made a coherent case for network software flexible enough to be redeployed in network devices across the larger part of the end-to-end packet path. From multiple-terabit routers to layer 2 (data-link) switches and security appliances, the “best-in-class” catchphrase can no longer justify a splintered operational experience. Thus, true “network” operating systems are clearly needed. Such systems must also operate continuously, so that software failures in the routing code, as well as system upgrades, do not affect the state of the network. Meeting this challenge requires availability and convergence characteristics that go far beyond the hardware redundancy that is available in second-generation routers and switches. Another key goal of third-generation operating systems is the capability to run with zero downtime (planned or unplanned).

Drawing on the lesson learned from previous designs with respect to the difficulty of moving from one operating system to another, third-generation operating systems should also make the migration path completely transparent to customers.

The following will discuss two of the most relevant topics in network operating systems.

(1) Generic kernel design

In the computer network world, both monolithic and microkernel designs can be used successfully, but the ever-growing requirements for an operating system kernel quickly turn any classic implementation into a compromise. Most notably, the capability to support a real-time forwarding plane along with stateful and stateless forwarding models and extensive state replication requires a mix of features not available from any existing implementation. This lack can be overcome in two ways.

Firstly, a system can be constrained to a limited class of products by design. For instance, if the operating system is not intended for mid- to low-level routing platforms, some requirements can be lifted. The same can be done for flow-based forwarding devices, such as security appliances. This artificial restriction allows the network operating system to stay closer to its general-purpose siblings at the cost of fracturing the product line-up. Different network element classes will now have to maintain their own operating systems, along with unique code bases and protocol stacks, which may negatively affect code maturity and customer experience.

Secondly, the network operating system can evolve into a specialized design that combines the architecture and advantages of multiple classic implementations.

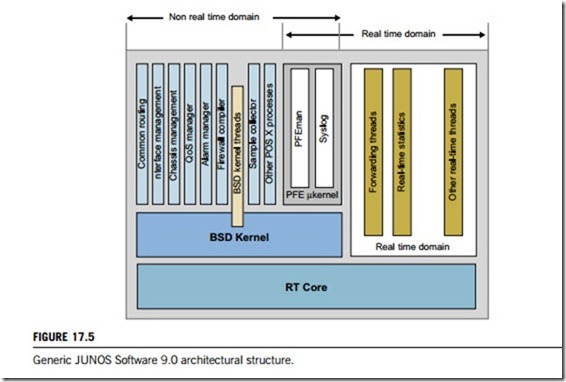

According to the formal criteria, the JUNOS (Juniper’s single network operating system) software kernel is fully customizable (Figure 17.5). At the very top is a portion of code that can be considered a microkernel, responsible for real-time packet operations and memory management, as well as interrupts and CPU resources. One level below this is a more conventional kernel that contains a scheduler, memory manager and device drivers in a package that looks more like a monolithic design. Finally, there are user-level POSIX (Portable Operating System Interface) processes that actually serve the kernel and implement functions which normally reside inside the kernels of classic monolithic router operating systems. Some of these processes can be compound or run on external CPUs (or packet-forwarding engines). In JUNOS software, examples include periodic hello management, kernel state replication, and protected system domains.

The entire structure is strictly hierarchical, with no underlying layers dependent on the operations of the top layers. This high degree of virtualization allows the JUNOS software kernel to be both fast and flexible. However, even the most advanced kernel structure is not a revenue-generating asset of the network element. Uptime is the only measurable metric of system stability and quality. This is why the fundamental difference between the JUNOS software kernel and competing designs lies in its focus on reliability.

Coupled with Juniper’s industry-leading nonstop active routing and system upgrade implementation, kernel state replication acts as the cornerstone for continuous operation. In fact, the JUNOS software redundancy scheme is designed to protect data plane stability and routing protocol adjacencies at the same time. With in-service software upgrade, networks powered by JUNOS software are becoming immune to downtime related to the introduction of new features or bug fixes, enabling them to approach true continuous operation. Continuous operation demands that the integrity of the control and forwarding planes remains intact in the event of failover or system upgrades, including minor and major release changes. Devices that run JUNOS software will not miss or delay any routing updates when either a failure or a planned upgrade event occurs.

This goal of continuous operation under all circumstances and during maintenance tasks is ambitious, and it reflects Juniper’s innovation and network expertise, which is unique among network vendors.

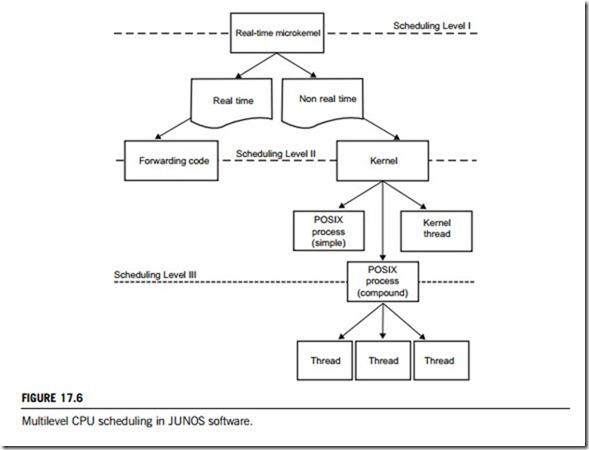

Innovation in JUNOS software does not stop at the kernel level; rather, it extends to all aspects of system operation. There are two tiers of schedulers in JUNOS software, the topmost becoming active in systems with a software data plane to ensure the real-time handling of incoming packets. It operates in real time and ensures that QoS (quality of service) requirements are met in the forwarding path. The second-tier (non-real-time) scheduler resides in the base JUNOS software kernel and is similar to its FreeBSD counterpart. It is responsible for scheduling system and user processes in a system to enable preemptive multitasking.

In addition, a third-tier scheduler exists within some multithreaded user-level processes, where threads operate in a cooperative, multitasking model. When a compound process gets its CPU share, it may treat it like a virtual CPU, with threads taking and leaving the processor according to their execution flow and the sequence of atomic operations. This approach allows closely coupled threads to run in a cooperatively multitasking environment and avoid being entangled in extensive interprocess communication and resource-locking activities (Figure 17.6).

Another interesting aspect of multi-tiered scheduling is resource separation. Unlike first-generation designs, JUNOS software systems with a software forwarding plane cannot freeze when overloaded with data packets, as the first-level scheduler will continue granting CPU cycles to the control plane.

(2) Network routing processes

The routing protocol process daemon (RPD) is the most complex process in a JUNOS software system. It not only contains much of the actual code for routing protocols, but also has its own scheduler and memory manager. The scheduler within RPD implements a cooperative multitasking model, in which each thread is responsible for releasing the CPU after an atomic operation has been completed. This design allows several closely related threads to coexist without the overhead of interprocess communication and to scale without risk of unwanted interactions and mutual locks.
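The cooperative model, in which a thread keeps the CPU until it gives it up after an atomic step, can be illustrated with Python generators. This is a generic sketch of cooperative multitasking, not RPD code: `run_cooperative`, `protocol_thread`, and the thread names are hypothetical.

```python
from collections import deque


def run_cooperative(tasks):
    """Round-robin scheduler: each task runs until it yields voluntarily."""
    ready = deque(tasks)
    trace = []
    while ready:
        task = ready.popleft()
        try:
            trace.append(next(task))   # run one atomic step of the task
            ready.append(task)         # the task yielded, so requeue it
        except StopIteration:
            pass                       # the task finished, so drop it
    return trace


def protocol_thread(name, steps):
    # Hypothetical stand-in for a routing protocol thread.
    for i in range(steps):
        # One atomic operation would be performed here.
        yield f"{name}:{i}"            # release the CPU to the scheduler


trace = run_cooperative([protocol_thread("ospf", 2), protocol_thread("bgp", 3)])
```

Because a task is never interrupted between two `yield` points, the tasks can share data without locks, which is the benefit the paragraph above attributes to this design. The cost is that a task that never yields would stall all the others.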

The threads within RPD are highly modular and may also run externally as standalone POSIX processes, which is how many periodic protocol operations are performed. In the early days of RPD, each protocol was responsible for its own adjacency management and control. Now, most keep-alive processing resides outside RPD, in the bidirectional forwarding detection protocol (BFD) daemon and periodic packet management process daemon (PPMD), which are, in turn, distributed between the routing engine and the line cards. The unique capability of RPD to combine preemptive and cooperative multitasking powers the most scalable routing stack in the market.

Compound processes similar to RPD are known to be very effective, but sometimes are criticized for the lack of protection between components. It has been said that a failing thread will cause the entire protocol stack to restart. Although this is a valid point, it is easy to compare the impact of this error against the performance of the alternative structure, in which every routing protocol runs in a dedicated memory space.

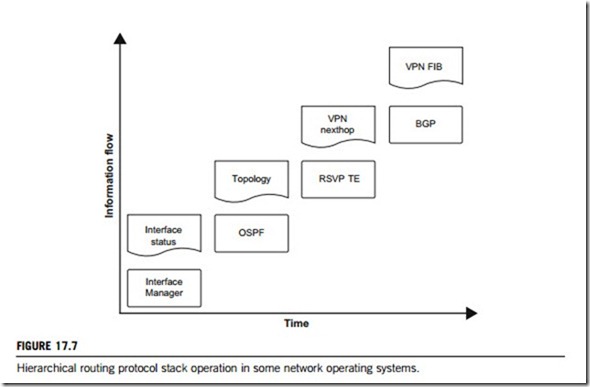

Assume that the router serves business VPN (virtual private network) customers, and the ultimate revenue-generating product is continuous reachability between remote sites. At the very top is a BGP (Border Gateway Protocol) process responsible for creating forwarding table entries. Those entries are ultimately programmed into a packet path ASIC for the actual header lookup and forwarding. If the BGP process hits a bug and restarts, forwarding table entries may become stale and would have to be flushed, thus disrupting customer traffic. But BGP relies on lower protocols in the stack for traffic engineering and topology information, and it will not be able to create the forwarding table without OSPF or RSVP. If any of these processes are restarted, BGP will also be affected (Figure 17.7). This case supports the benefits of running BGP, OSPF and RSVP in shared memory space, where the protocols can access common data without interprocess communication overhead.
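The dependency argument above can be made concrete with a small sketch that computes which protocols are disrupted when one of them restarts. The dependency table mirrors the example in the text (BGP relies on OSPF and RSVP); the function name `affected_by_restart` and the data layout are assumptions made for illustration.

```python
def affected_by_restart(depends_on, restarted):
    """Return every process that is disrupted when `restarted` restarts.

    `depends_on` maps a process to the processes it relies on.
    """
    affected = {restarted}
    changed = True
    while changed:                       # propagate until nothing new is added
        changed = False
        for proc, deps in depends_on.items():
            if proc not in affected and affected & set(deps):
                affected.add(proc)
                changed = True
    return affected


# BGP needs topology and traffic engineering data from OSPF and RSVP.
deps = {"bgp": ["ospf", "rsvp"], "ospf": [], "rsvp": []}
after_ospf = affected_by_restart(deps, "ospf")
after_bgp = affected_by_restart(deps, "bgp")
```

A restart of OSPF also disrupts BGP, so placing each protocol in its own memory space does not isolate the top of the stack from failures lower down.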

Routing threads may operate using a cooperative, preemptive or hybrid task model, but failure recovery still calls for state restoration using external checkpoint facilities. If vital routing information were duplicated elsewhere and could be recovered promptly, the failure would be transparent to user traffic and protocol peers alike. Transparency through prompt recovery is the principal concept underlying any design for network service routines, and the main idea behind the contemporary Juniper Networks RPD implementation.
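State restoration from an external checkpoint facility can be sketched as follows. This is a minimal illustration of the idea, not the JUNOS mechanism: the `CheckpointStore` and `RoutingProcess` classes, the in-memory store, and the choice to checkpoint after every update are all assumptions.

```python
import copy


class CheckpointStore:
    """Hypothetical external store that outlives the routing process."""

    def __init__(self):
        self._saved = None

    def save(self, state):
        self._saved = copy.deepcopy(state)

    def load(self):
        return copy.deepcopy(self._saved)


class RoutingProcess:
    def __init__(self, store):
        self.store = store
        # Recover replicated state if a previous instance saved any.
        self.routes = store.load() or {}

    def learn(self, prefix, next_hop):
        self.routes[prefix] = next_hop
        self.store.save(self.routes)     # checkpoint after each update


store = CheckpointStore()
first = RoutingProcess(store)
first.learn("10.0.0.0/8", "192.0.2.1")

# Simulate a crash: the process object is discarded and a new one starts.
restarted = RoutingProcess(store)
```

The restarted process comes up with the routes its predecessor had learned, so forwarding entries do not have to be flushed and rebuilt from scratch.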

Instead of focusing on one technology or structure, Juniper Networks engineers evolve the JUNOS software protocol stack according to a “survival of the fittest” principle, toward the goal of true nonstop operation, reliability and usability. State replication, checkpointing and interprocess communication are all used to reduce the impact of software and hardware failures. The JUNOS software control plane is designed to maintain speed, uptime and full state under the most unfavorable network situations.

17.2.3 Parallel operating systems

Parallelism, or concurrency, is an important requirement for large-scale numerical engineering computations, wide-area telephone networks, multi-agent database systems, and massively distributed control networks. In these target systems it must be addressed at both the application level and the kernel level. However, application and operating system parallelism are two distinct issues. For example, multiprocessor supercomputers are machines that can support parallel numerical computations, but whether a weather forecast model can be processed in parallel on such supercomputers depends upon whether parallelism is programmed into the model. This subsection concerns operating system parallelism, i.e. the parallel operating system.

Parallel operating systems are primarily used for managing the resources of parallel machines. In fact, the architecture of a parallel operating system is closely influenced by the hardware architecture of the machines it runs on. Thus, parallel operating systems can be divided into three groups: those for small-scale symmetric multiprocessors, those for large-scale distributed-memory machines, and those for scalable distributed shared-memory machines.

The first group includes the current versions of UNIX and Windows NT, where a single operating system manages all the resources centrally. The second group comprises both large-scale machines connected by special-purpose interconnections and networks of personal computers or workstations, where the operating system of each node locally manages its resources, and also collaborates with its peers via message passing to globally manage the machine. The third group is composed of non-uniform memory access machines where each node’s operating system manages local resources and interacts at a very fine-grain level with its peers to manage and share global resources.

Parallelism required by these operating systems involves the basic mechanisms that constitute their core: process management, file system, memory management and interior communications. These mechanisms include support for multithreading, internal parallelism of the operating system components, distributed process management, parallel file systems, interprocess communication, low-level resource sharing, and fault isolation.

(1) Parallel computer architectures

Parallel operating systems in particular enable user interaction with computers with parallel architectures. The physical architecture of a computer system is therefore an important starting point for understanding the operating system that controls it. There are two famous classifications of parallel computer architectures: Flynn’s and Johnson’s.

(a) Flynn’s classification of parallel architectures

Flynn divides computer architectures along two axes according to the number of data sources and the number of instruction sources that a computer can process simultaneously. This leads to four categories of computer architectures:

1. SISD (single instruction single data);

2. MISD (multiple instruction single data);

3. SIMD (single instruction multiple data);

4. MIMD (multiple instruction multiple data).

These four categories have been discussed in subsection 17.1.1 of this textbook.
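Flynn's two axes map directly onto a two-argument function. The sketch below is only an illustration of the naming scheme: the function name `flynn_class` and the use of integer stream counts as inputs are assumptions.

```python
def flynn_class(instruction_streams, data_streams):
    """Return the Flynn category for the given numbers of streams."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    i = "S" if instruction_streams == 1 else "M"   # single or multiple instruction
    d = "S" if data_streams == 1 else "M"          # single or multiple data
    return f"{i}I{d}D"


categories = [flynn_class(1, 1), flynn_class(4, 1),
              flynn_class(1, 8), flynn_class(4, 4)]
```

For example, a conventional uniprocessor has one instruction stream and one data stream (SISD), while a machine in which several processors each execute their own program on their own data is MIMD.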

(b) Johnson’s classification of parallel architectures

Johnson’s classification is oriented towards the different memory access methods. This is a much more practical approach since, as we saw above, all but the MIMD class of Flynn are either virtually extinct or never existed. We take the opportunity of presenting Johnson’s categories of parallel architectures to give some examples of MIMD machines in each of those categories. Johnson divides computer architectures into:

1. UMA (Uniform Memory Access);

2. NUMA (Non-Uniform Memory Access);

3. NORMA (No Remote Memory Access).

These three categories have also been discussed in subsection 17.1.1 of this textbook.

(2) Parallel operating facilities

There are several components in an operating system that can be parallelized. Most operating systems do not parallelize all of them and do not support parallel applications directly. Rather, parallelism is frequently exploited by an additional software layer, such as a distributed file system, distributed shared memory support, or libraries and services that support particular parallel programming languages, while the operating system manages concurrent task execution.

The convergence in parallel computer architectures has been accompanied by a reduction in the diversity of operating systems running on them. The current situation is that most commercially available machines run a flavor of the UNIX operating system. Others run a UNIX-based microkernel with reduced functionality to optimize the use of the CPU, such as Cray Research’s UNICOS. Finally, a number of shared-memory MIMD machines run Microsoft Windows NT (soon to be superseded by the high-end variant of Windows 2000).

There are a number of core aspects to the characterization of a parallel operating system, including general features such as the degrees of coordination, coupling and transparency; and more particular aspects such as the type of process management, interprocess communication, parallelism and synchronization and the programming model.

(a) Coordination

The type of coordination among microprocessors in parallel operating systems is a distinguishing characteristic which conditions how applications can exploit the available computational nodes. Furthermore, as mentioned before, application parallelism and operating system parallelism are two distinct issues: while application concurrency can be obtained through operating system mechanisms or by a higher layer of software, concurrency in the execution of the operating system is highly dependent on the type of processor coordination imposed by the operating system and the machine’s architecture. There are three basic approaches to coordinating microprocessors:

1. Separate supervisor. In a separate supervisor parallel machine, each node runs its own copy of the operating system. Parallel processing is achieved via a common process management mechanism allowing a processor to create processes and/or threads on remote machines. For example, in a multicomputer such as the Cray-T3E, each node runs its own independent copy of the operating system. Parallel processing is enabled by a concurrent programming infrastructure such as an MPI (message-passing interface) library, whereas I/O is performed by explicit requests to dedicated nodes. Having a front-end that manages I/O and that dispatches jobs to a back-end set of processors is the main motivation for a separate supervisor operating system.

2. Master-slave. A master-slave parallel operating system architecture assumes that the operating system will always be executed on the same processor, and that this processor will control all shared resources, in particular process management. An example of a machine with a master-slave operating system is the CM-5. This type of coordination is particularly suited to single-purpose machines running applications that can be broken into similar concurrent tasks. In these scenarios, central control may be maintained without any penalty to the other processors’ performance, since all processors tend to begin and end tasks at the same time.

3. Symmetric. Symmetric operating systems are currently the most common configuration. In this type of system, any processor can execute the operating system kernel. This leads to concurrent accesses to operating system components and requires careful synchronization.
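The need for synchronization in the symmetric approach can be illustrated with a small simulation. This is only a sketch under stated assumptions: Python threads stand in for processors, and the names `ReadyQueue`, `run_processor` and `simulate` are hypothetical. Every simulated processor runs the same scheduling code against one shared ready queue, so the queue must be guarded by a lock.

```python
import threading
from collections import deque


class ReadyQueue:
    """Shared kernel structure; any processor may access it, so it is locked."""

    def __init__(self, tasks):
        self._tasks = deque(tasks)
        self._lock = threading.Lock()

    def take(self):
        # Only one processor at a time may inspect and modify the queue.
        with self._lock:
            return self._tasks.popleft() if self._tasks else None


def run_processor(cpu_id, queue, log, log_lock):
    """Scheduling loop executed identically by every simulated processor."""
    while True:
        task = queue.take()
        if task is None:
            return
        with log_lock:
            log.append((cpu_id, task))


def simulate(num_cpus, num_tasks):
    queue = ReadyQueue(range(num_tasks))
    log, log_lock = [], threading.Lock()
    cpus = [
        threading.Thread(target=run_processor, args=(i, queue, log, log_lock))
        for i in range(num_cpus)
    ]
    for cpu in cpus:
        cpu.start()
    for cpu in cpus:
        cpu.join()
    return log
```

Calling `simulate(4, 100)` returns a log in which each of the 100 tasks appears exactly once, although which simulated processor ran which task varies from run to run. In a master-slave design, by contrast, only one designated processor would ever call `take`, and the lock would be unnecessary.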

(b) Coupling and transparency

Another important characteristic of parallel operating systems is the system’s degree of coupling. Just as in the case of loosely coupled hardware architectures (e.g. a network of workstation computers) and highly coupled hardware architectures (e.g. vector parallel multiprocessors), parallel operating systems can be either loosely or tightly coupled. Many current distributed operating systems have a highly modular architecture, so there is a wide spectrum of distribution and parallelism in different operating systems. To see how influential coupling is in forming the abstraction presented by a system, consider the following extreme examples: on the highly coupled end, a special-purpose vector computer dedicated to one parallel application, e.g. weather forecasting, with master-slave coordination and a parallel file system; and on the loosely coupled end, a network of workstations with shared resources (printer, file server), each running its own application and perhaps sharing some client-server applications. These scenarios show that truly distributed operating systems correspond to the concept of highly coupled software using loosely coupled hardware. Distributed operating systems aim at giving the user the possibility of transparently using a virtual single processor. This requires having an adequate distribution of all the layers of the operating system and providing a global unified view over process management, file system and interprocess communication, thereby allowing applications to perform transparent migration of data, computations and/or processes.

(c) Hardware vs. software

A parallel operating system provides users with an abstract computational model of the computer architecture, which can be achieved by the computer’s parallel hardware architecture or by a software layer that unifies a network of processors or computers. In fact, there are implementations of every computational model in both hardware and software systems:

1. The hardware of the shared memory model is represented by symmetric multiprocessors, whereas the software is achieved by unifying the memory of a set of machines by means of a distributed shared-memory layer.

2. In the case of the distributed-memory model there are multicomputer architectures where accesses to local and remote data are explicitly different and, as we saw, have different costs. The equivalent software abstractions are explicit message-passing inter-process communication mechanisms and programming languages.

3. Finally, the SIMD computation model of massively parallel computers is mimicked by software through data parallel programming. Data parallelism is a style of programming geared towards applying parallelism to large data sets, by distributing this data over the available processors in a “divide and conquer” mode. An example of a data parallel programming language is HPF (High Performance Fortran).
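The data parallel style described in the last item can be sketched in a few lines of Python. This is an illustration only, not HPF: the helper names `split` and `parallel_sum_of_squares` are hypothetical, and a thread pool stands in for the available processors. Because of CPython's global interpreter lock, the sketch shows the structure of the computation rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Divide data into `parts` nearly equal contiguous chunks."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum_of_squares(data, workers=4):
    """Apply the same operation to every chunk, then combine the partial results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda chunk: sum(x * x for x in chunk), chunks)
        return sum(partials)
```

Every worker executes the same operation on its own slice of the data, which is the software counterpart of the SIMD model: one instruction sequence, many data elements.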

(d) Protection

Parallel computers, being multiprocessing environments, require that the operating system provide protection among processes and between processes and the operating system so that erroneous or malicious programs are not able to access resources belonging to other processes. Protection is the access-control barrier that all programs must pass before accessing operating system resources. Dual-mode operation is the most common protection mechanism in operating systems. It requires that all operations that interfere with the computer’s resources and their management are performed under operating system control in what is called protected or kernel mode (as opposed to unprotected or user mode). The operations that must be performed in kernel mode are made available to applications as an operating system API (application program interface). A program wishing to enter kernel mode calls one of these system functions via an interrupt (trap) mechanism, whose hardware implementation varies among different processors. This allows the operating system to verify the validity and authorization of the request and to execute it safely and correctly using kernel functions, which are trusted and well-behaved.
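The control flow of dual-mode operation can be mimicked by a toy model. The sketch below is purely illustrative: real mode switches are performed by processor hardware, and the class `ToyKernel`, its single `read_file` call, and the file table are all hypothetical. It shows the three steps described above: entry through a fixed gate, validation of the request, and return to user mode.

```python
class ProtectionError(Exception):
    """Raised when a privileged operation is attempted illegally."""


class ToyKernel:
    def __init__(self):
        self.mode = "user"
        # Hypothetical resource table owned by the kernel.
        self._files = {"notes.txt": {"owner": "alice", "data": "hello"}}
        # The system call table is the only sanctioned way into kernel code.
        self._syscalls = {"read_file": self._read_file}

    def _read_file(self, caller, name):
        if self.mode != "kernel":
            raise ProtectionError("privileged operation outside kernel mode")
        entry = self._files.get(name)
        if entry is None:
            raise FileNotFoundError(name)
        if entry["owner"] != caller:
            raise PermissionError(f"{caller} may not read {name}")
        return entry["data"]

    def syscall(self, caller, name, *args):
        """Stand-in for the trap: validate, switch mode, run, switch back."""
        handler = self._syscalls.get(name)
        if handler is None:
            raise ProtectionError(f"unknown system call: {name}")
        self.mode = "kernel"
        try:
            return handler(caller, *args)
        finally:
            self.mode = "user"  # always drop back to user mode
```

Here `ToyKernel().syscall("alice", "read_file", "notes.txt")` returns the file contents, a request from another caller raises `PermissionError`, and invoking `_read_file` directly, bypassing the gate, raises `ProtectionError`.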
