Chapter 11. Ports

No computer has everything you need built in to it. The computer gains its power from what you connect to it—its peripherals. Somehow your computer must be able to send data to its peripherals. It needs to connect, and it makes its connections through its ports.

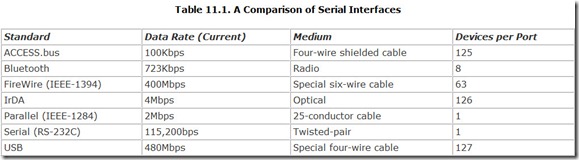

Today’s computers include two or three of these modern interfaces. Those that you’re likely to encounter include Universal Serial Bus (USB), today’s general-purpose choice; FireWire, most popular as a digital video interface; IrDA, a wireless connection most often used for beaming data between handheld computers; Bluetooth, a radio-based networking system most suited for voice equipment; legacy serial ports, used by a variety of slower devices such as external modems, drawing tablets, and even PC-to-PC connections; and legacy parallel ports, most often used by printers. Table 11.1 compares these port alternatives.

If you were only to buy new peripherals to plug into your computer, you could get along with only USB ports. Designed to be hassle-free for installing new gear, USB is fast enough (at least in its current version 2.0) that you need not consider any other connection. You can consider all the other ports special-purpose designs—the legacy pair (parallel and serial) for accommodating stuff that would otherwise be gathering dust in your attic; FireWire for plugging in your digital camcorder; IrDA for talking to your notebook computer; and Bluetooth for, well, you’ll think of something.

Universal Serial Bus

In 1995, Compaq, Digital, IBM, Intel, Microsoft, NEC, and Northern Telecom, determined to design a better interface, pooled their efforts and laid the groundwork for the Universal Serial Bus, better known as USB. Later that year, they started the Universal Serial Bus Implementers Forum and in 1996 unveiled the new interface to the world. The world yawned.

Aimed at replacing both legacy serial and parallel port designs, the USB design corrected all three of their shortcomings. To improve performance, they designed USB with a 12Mbps data rate (with an alternative low-speed signaling rate of 1.5Mbps). To eliminate wiring hassles and worries about connector gender, crossover cables, and device types, they developed a strict wiring system with exactly one type of cable to serve all interconnection needs. And to allow one jack on the back of a computer to handle as many peripherals as necessary, they designed the system to accommodate up to 127 devices per port. In addition, they built in Plug-and-Play support so that every connection could be self-configuring. You could even hot-plug new devices and use them immediately without reloading your operating system.

On April 27, 2000, a new group, led by Compaq, Hewlett-Packard, Intel, Lucent, Microsoft, NEC, and Philips, published a revised USB standard, version 2.0. The key change was an increase in performance, upping the speed from 12Mbps to 480Mbps. The new system incorporates all the protocols of the old and is fully backward compatible. Devices will negotiate the highest common speed and use it for their transfers. Connectors and cabling remained unchanged.

Background

Designed for those who would rather compute than worry about hardware, the premise underlying USB is the substitution of software intelligence for cabling confusion. USB handles all the issues involved in linking multiple devices with different capabilities and data rates with a layer-cake of software. Along the way, it introduces its own new technology and terminology.

USB divides serial hardware into two classes: hubs and functions. A USB hub provides jacks into which you can plug functions. A USB function is a device that actually does something. USB’s designers imagined that a function may be anything you can connect to your computer, including keyboards, mice, modems, printers, plotters, scanners, and more.

Rather than a simple point-to-point port, the USB acts as an actual bus that allows you to connect multiple peripherals to one jack on your computer with all the linked functions (devices) sharing exactly the same signals. Information passes across the bus in the form of packets, and all functions receive all packets. Your computer accesses individual functions by adding a specific address to the packets, and only the function with the correct address acts on the packets addressed to it.

The physical manifestation of USB is a port—a jack on the back of your computer or in a hub. Although your computer’s USB port can handle up to 127 devices, each physical USB port connects to a single device. To connect multiple devices, you need multiple jacks. Typically, a new computer comes equipped with two USB ports. When you need more, you add a hub, which offers multiple jacks to let you plug in several devices. You can plug one hub into another to provide several additional jacks and ports to connect more devices.

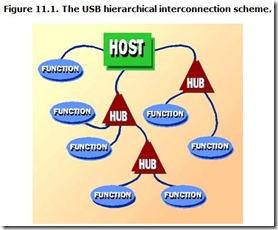

The USB design envisions a hierarchical system with hubs connected to hubs connected to hubs. In that each hub allows for multiple connections, the reach of the USB system branches out like a tree—or a tree’s roots. Figure 11.1 gives a conceptual view of the USB wiring system.

Your computer acts as the base hub for a USB system and is termed the host. The circuitry in your computer that controls this integral hub and the rest of the USB system is called the bus controller. Each USB system has one and only one bus controller.

Under USB 2.0, a device can operate at any of three speeds: low speed is 1.5Mbps, full speed is 12Mbps, and high speed is 480Mbps.

USB 2.0 is backward compatible with USB 1.1. All USB 1.1 devices will work with USB 2.0 devices, and vice versa, but USB 1.1 will impose its speed limit on USB 2.0 devices. The mixing of speeds makes matters complicated. If you plug both a USB 2.0 and a USB 1.1 device into a USB 2.0 hub, both devices will operate at their respective top speeds. But if a USB 1.1 hub appears in the chain between USB 2.0 devices, the slower hub will limit the speed of the overall system. Plug any USB 2.0 device (even a USB 2.0 hub) into a USB 1.1 hub, and it will degrade to USB 1.1 operation (and if it’s a hub, so will all the devices connected to that hub).
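The speed rule in the preceding paragraph amounts to taking the slowest link in the path. The following is a minimal sketch of that idea, not part of any USB software; the function name `effective_speed` and the list-of-hubs model are assumptions made for illustration, and the host is assumed to be a USB 2.0 port.

```python
# Top signaling rates named in the text, in Mbps.
USB_1_1_MAX = 12
USB_2_0_MAX = 480


def effective_speed(device_max, hub_chain):
    """Return the rate (Mbps) a device can use behind a chain of hubs.

    device_max -- the top speed of the device itself
    hub_chain  -- top speeds of every hub between the host and the device
    The slowest element in the path sets the limit.
    """
    return min([device_max, *hub_chain])


if __name__ == "__main__":
    # A USB 2.0 device behind a USB 2.0 hub runs at its own top speed.
    print(effective_speed(USB_2_0_MAX, [USB_2_0_MAX]))
    # A USB 1.1 hub anywhere in the chain drags the device down.
    print(effective_speed(USB_2_0_MAX, [USB_2_0_MAX, USB_1_1_MAX]))
```

The model ignores low-speed (1.5Mbps) devices, which would simply enter as a smaller `device_max`.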

Other than this speed issue, the USB system doesn’t care which device you plug into which hub or how many levels down the hub hierarchy you put a particular device. All the system requires is that you properly plug everything together following its simple rule: Each device must plug into a hub. The USB software then sorts everything out. This software, making up the USB protocol, is the most complex part of the design. In comparison, the actual hardware is simple—but the hardware won’t work without the protocol.

The wiring hardware imposes no limit on the number of devices/functions you can connect in a USB system. You can plug hubs into hubs into hubs, fanning out into as many ports as you like. You do face limits, however. The protocol constrains the number of functions on one bus to 127 because of addressing limits. Seven bits are allowed for encoding function addresses, which yields 128 potential addresses, and one of them (address 0, the default address a device answers to before the host assigns it one of its own) is reserved.
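The arithmetic behind the 127-function limit is short enough to check directly. This sketch only restates the numbers given above; the helper name `usable_addresses` is hypothetical.

```python
ADDRESS_BITS = 7        # bits available for a function address
RESERVED_ADDRESSES = 1  # one address is set aside by the protocol


def usable_addresses(bits=ADDRESS_BITS, reserved=RESERVED_ADDRESSES):
    """Number of functions that can be addressed on one bus."""
    return 2 ** bits - reserved


if __name__ == "__main__":
    print(usable_addresses())
```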

In addition, the wiring limits the distance at which you can place functions from hubs. The maximum length of a USB cable is five meters. Because hubs can regenerate signals, however, your USB system can stretch out for greater distances by making multiple hops through hubs.

As part of the Plug-and-Play process, the USB controller goes on a device hunt when you start up your computer. It interrogates each device to find out what it is. It then builds a map that locates each device by hub and port number. These become part of the packet address. When the USB driver sends data out the port, it routes that data to the proper device by this hub-and-port address.

USB requires specific software support. Any device with a USB connector will have the necessary firmware to handle USB built in. But your computer will also require software to make the USB system work. Your computer’s operating system must know how to send the appropriate signals to its USB ports. All Windows versions starting with Windows 98 have USB support. Windows 95 and Windows NT do not. In addition, each function must have a matching software driver. The function driver creates the commands or packages the data for its associated device. An overall USB driver acts as the delivery service, providing the channel (in USB terminology, a pipe) for routing the data to the various functions. Consequently, each USB device you add to your computer requires software installation along with plugging in the hardware.

Connectors

The USB system involves four different styles of connectors—two chassis-mounted jacks and two plugs at the ends of cables. Each jack and plug comes in two varieties: A and B.

Hubs have A jacks. These are the primary outward manifestation of the USB port—the wide, thin USB slots you’ll find on the back of your computer. The matching A plug attaches to the cable that leads to the USB device. In the purest form of USB, this cable is permanently affixed to the device, and you need not worry about any other plugs or jacks.

This configuration may someday become popular when manufacturers discover they can save the cost of a connector by integrating the cable. Unfortunately, too many manufacturers have discovered that by putting a jack on their USB devices they save the cost of the cable by not including it with the device.

To accommodate devices with removable cables (and manufacturers that don’t want to add the expense of a few feet of wire to their USB devices), the USB standard allows for a second, different style of plug and jack meant only to be used for inputs to USB devices. If a USB device (other than a hub) requires a connector so that, as a convenience, you can remove the cable, it uses a USB B jack, which is a small, nearly square hole into which you slide the mating B plug.

The motivation behind this multiplicity of connectors is to prevent rather than cause confusion. All USB cables will have an A plug at one end and a B plug at the other. One end must attach to a hub and the other to a device. You cannot inadvertently plug things together incorrectly.

Because all A jacks are outputs and all B jacks are inputs, only one form of detachable USB cable exists—one with an A plug at one end and a B plug at the other. No crossover cables or adapters are needed for any USB wiring scheme.

Cable

The USB system uses two kinds of cable—that meant for low-speed connections and that meant for full- and high-speed links. But you only have to worry about the higher-speed variety. Low-speed cables, those capable of supporting only 1.5Mbps signaling rates, must be permanently attached to the equipment using them. Higher-speed cables can be either permanently attached or removable.

Both speeds of physical USB wiring use a special four-wire cable. Two conductors in the cable transfer the data as a differential digital signal. That is, the voltage on the two conductors is of equal magnitude and opposite polarity so that when one is subtracted from the other (finding the difference), the result cancels out any noise that ordinarily would add equally to the signal on each line. In addition, the USB cable includes a power signal, nominally five volts DC, and a ground return. The power signal allows you to supply power for external serial devices through the USB cable. The two data wires are twisted together as a pair. The power wires may or may not be.
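The noise-canceling effect of differential signaling can be demonstrated with a few numbers. This is a simplified sketch that treats the signal as idealized voltages; the sample values and the function name `differential_receive` are made up for illustration.

```python
def differential_receive(d_plus, d_minus):
    """Recover the signal by subtracting one conductor from the other.

    Noise that lands equally on both wires drops out of the difference.
    Dividing by two restores the original amplitude.
    """
    return [(p - m) / 2 for p, m in zip(d_plus, d_minus)]


# Idealized signal and an arbitrary noise pattern (hypothetical values).
signal = [1.0, -1.0, 1.0, 1.0, -1.0]
noise = [0.3, -0.2, 0.5, 0.1, -0.4]

# One wire carries the signal, the other its mirror image.
# Both pick up the same noise.
d_plus = [s + n for s, n in zip(signal, noise)]
d_minus = [-s + n for s, n in zip(signal, noise)]

recovered = differential_receive(d_plus, d_minus)

if __name__ == "__main__":
    print(recovered)
```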

The difference between low- and higher-speed cables is that the capacitance of the low-speed cable is adjusted to support its signaling rate. In addition, the low speed does not need twisted-pair wires, and the standard doesn’t require them.

All removable cables must be able to handle both full-speed and high-speed connections. To achieve its high data rate, the USB specification requires that certain physical characteristics of the cable be carefully controlled. Even so, the maximum length permitted for any USB cable is five meters.

One limit on cable length is the inevitable voltage drop suffered by the power signal. All wires offer some resistance to electrical flow, and the resistance rises with the wire gauge number. Hence, lower wire gauges (thicker wires) have lower resistance. Longer cables require lower wire gauges. At maximum length, the USB specification requires 20-gauge wire, which is one step (two gauge numbers) thinner than ordinary lamp cord.

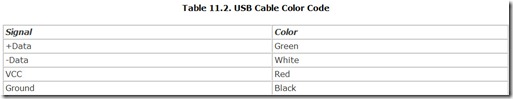

The individual wires in the USB cable are color-coded. The data signals form a green-white pair, with the +Data signal on green. The positive five-volt signal rides on the red wire. The ground wire is black. Table 11.2 sums up this color code.

Normally, you cannot connect one computer to another using USB. The standard calls for only one USB controller in the entire interconnection system. Physically the cabling system prevents you from making such a connection. The exception is that some cables have a bridge built in that allows two USB hosts to talk to each other. The bridge is active circuitry that converts the signals.

Protocol

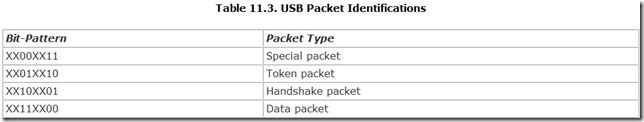

As with all more recent interface introductions, the USB design uses a packet-based protocol using Non-Return-to-Zero Inverted (NRZI) data coding. All message exchanges require the swapping of three packets. The exchange begins with the host sending out a token packet. The token packet bears the address of the device meant to participate in the exchange as well as control information that describes the nature of the exchange. A data packet holds the actual information that is to be exchanged. Depending on the type of transfer, either the host or the device will send out the data packet. Despite the name, the data packet may contain no information. Finally, the exchange ends with a handshake packet, which acknowledges the receipt of the data or other successful completion of the exchange. A fourth type of packet, called Special, handles additional functions.
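The text names NRZI coding without describing it. As a rough sketch, and assuming the convention used by USB (a 0 bit toggles the line level, a 1 bit leaves it unchanged), encoding and decoding might look like the following. The function names and the `idle_level` parameter are illustrative, and the sketch ignores details such as bit stuffing.

```python
def nrzi_encode(bits, idle_level=1):
    """Encode bits as line levels: a 0 toggles the level, a 1 holds it."""
    level = idle_level
    levels = []
    for bit in bits:
        if bit == 0:
            level ^= 1  # a zero is signaled by a transition
        levels.append(level)
    return levels


def nrzi_decode(levels, idle_level=1):
    """Recover bits: no change from the previous level means 1, a change means 0."""
    previous = idle_level
    bits = []
    for level in levels:
        bits.append(1 if level == previous else 0)
        previous = level
    return bits


if __name__ == "__main__":
    # Seven zeros and a one, in the order sent on the wire.
    pattern = [0, 0, 0, 0, 0, 0, 0, 1]
    print(nrzi_encode(pattern))
```

Run on seven zeros followed by a one, the encoder produces an alternating line pattern that ends with two periods at the same level.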

Each packet starts with two components—a Sync Field and a Packet Identifier—each one byte long. The Sync Field is a series of bits that serves as a consistent burst of clock pulses so that the devices connected to the USB bus can reset their timing and synchronize themselves to the host. The Sync Field appears as three on/off pulses followed by a marker two pulses wide. The Packet Identifier byte includes four bits to define the nature of the packet itself and another four bits as check-bits that confirm the accuracy of the first four. The four bits provide a code that allows for the definition of 16 different kinds of packets.

USB uses the 16 values in a two-step hierarchy. The two more significant bits specify one of the four types of packets. The two lesser significant bits subdivide the packet category. Table 11.3 lists the PIDs of the four basic USB packet types.
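The relationship between the four identifier bits and their four check bits can be sketched in a few lines. This assumes the check bits are the bitwise complement of the identifier bits and that the identifier occupies the low half of the byte, which is how the USB specification defines the field as far as this sketch is concerned; the function names are hypothetical.

```python
def make_pid_byte(pid):
    """Build a Packet Identifier byte from a 4-bit packet code.

    Low four bits: the code itself.
    High four bits: the complement of the code, used as check bits.
    """
    if not 0 <= pid <= 0xF:
        raise ValueError("packet code must fit in four bits")
    return pid | ((~pid & 0xF) << 4)


def check_pid_byte(byte):
    """Return True when the check bits confirm the identifier bits."""
    pid = byte & 0xF
    check = (byte >> 4) & 0xF
    return (pid ^ check) == 0xF


if __name__ == "__main__":
    for code in range(16):  # all 16 possible packet codes
        print(f"{code:04b} -> {make_pid_byte(code):#04x}")
```

A receiver that sees a byte failing `check_pid_byte` knows the identifier was corrupted in transit and can ignore the packet.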

Token Packets

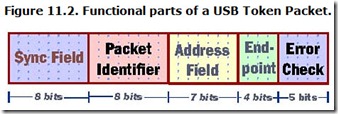

Only the USB host sends out token packets. Each token packet takes up four bytes, which are divided up into five functional parts. Figure 11.2 graphically shows the layout of a token packet.

The first two bytes take the standard form of all USB packets. The first byte is a Sync Field that marks the beginning of the token’s bit-stream. The second byte is the Packet Identifier.

The PID byte defines four types of token packets. These include an Out packet that carries data from the host to a device; an In packet that carries data from the device to the host; a Setup packet that targets a specific endpoint; and a Start of Frame packet that helps synchronize the system.

Data Packets

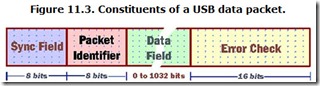

The actual information transferred through the USB system takes the form of data packets. As with all USB packets, a data packet begins with a one-byte Sync Field followed by the Packet Identifier. The actual data follows as a sequence of 0 to 1,023 bytes. A two-byte cyclic redundancy check verifies the accuracy of only the Data Field, as shown in Figure 11.3. The PID field relies on its own redundancy check mechanism.
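The data packet layout described above (Sync Field, Packet Identifier, 0 to 1,023 data bytes, two-byte check) can be sketched as follows. Several details here are assumptions not stated in the text: the CRC parameters (polynomial 0x8005 processed least significant bit first, initial value 0xFFFF, inverted result), the byte value 0x80 standing in for the Sync Field, and the low-byte-first order of the check. The function names are illustrative.

```python
SYNC_BYTE = 0x80       # assumed byte value representing the Sync Field
MAX_DATA_BYTES = 1023  # upper limit given in the text


def crc16_usb(data: bytes) -> int:
    """Compute a 16-bit CRC over the Data Field only (assumed parameters)."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # 0xA001 is the bit-reversed form of polynomial 0x8005.
            crc = (crc >> 1) ^ 0xA001 if crc & 1 else crc >> 1
    return crc ^ 0xFFFF


def build_data_packet(pid_byte: int, data: bytes) -> bytes:
    """Assemble Sync Field, Packet Identifier, data, and the two-byte check."""
    if len(data) > MAX_DATA_BYTES:
        raise ValueError("Data Field is limited to 1,023 bytes")
    check = crc16_usb(data)
    return bytes([SYNC_BYTE, pid_byte]) + data + check.to_bytes(2, "little")


if __name__ == "__main__":
    packet = build_data_packet(0xC3, b"hello")
    print(packet.hex())
```

Note that the check covers only the Data Field; the Packet Identifier is protected separately by its own check bits.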

Handshake Packets

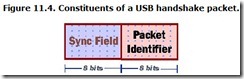

Handshake packets handle flow control in the USB system. All are two bytes long, consisting of nothing more than the Sync Field and a Packet Identifier byte that acknowledges proper receipt of a packet, as shown in Figure 11.4.

Standard

The USB standard is maintained by the USB Implementers Forum. You can download a complete copy of the current version of the specifications from the USB Web site.

USB Implementers Forum

5440 SW Westgate Dr., Suite 217

Portland, OR 97221

Phone: 503-296-9892

Fax: 503-297-1090

Web site: www.usb.org

FireWire

Also known as IEEE-1394, i.Link, and DV, FireWire is a serial interface that’s aimed at high-throughput devices such as hard disk and tape drives, as well as consumer-level multimedia devices such as digital camcorders, digital VCRs, and digital televisions. Originally it was conceived as a general-purpose interface suitable for replacing legacy serial ports, but with blazing speed. However, it has been most used in digital video, at least so far. Promised new performance and a choice of media may rekindle interest in FireWire as a general-purpose, high-performance interconnection system.

For the most part, FireWire is a hardware interface. It specifies speeds, timing, and a connection system. The software side is based on SCSI. In fact, FireWire is one of the several hardware interfaces included in the SCSI-3 standards.

As with other current port standards, FireWire continues to evolve. Development of the standard began in September 1986, when the Institute of Electrical and Electronics Engineers (IEEE) assigned a study group the task of clearing the thickening morass of serial standards. Hardly four months later (in January 1987), the group had already outlined basic concepts underlying FireWire, some of which still survive in today’s standard, including low cost, a simplified wiring scheme, and arbitrated signals supporting multiple devices. The IEEE approved the first FireWire standard (as IEEE 1394-1995) in 1995, based on a design with one connector style and two speeds (100 and 200Mbps).

In the year 2000, the institute approved the standard IEEE 1394a-2000, which boasts a new, miniaturized connector, a higher speed (400Mbps), and streamlined signaling that makes connections quicker (because of reduced overhead) and more reliable.

As this is written, the engineers at the institute are developing a successor standard, IEEE 1394b, that will quadruple the speed of connections, add both fiber-optic and twisted-pair wiring schemes, and add a new, more reliable transport protocol.

For now, FireWire is best known as a 400Mbps connection system for plugging digital camcorders into computers, letting you capture video images (live or tape), edit them, and publish them on CD or DVD.

Overview

FireWire differs from today’s other leading port standard, USB, in that it is a point-to-point connection system. That is, you plug a FireWire device directly into the port on your computer. To accommodate more than one FireWire device (the standard allows for a maximum of 63 interconnected devices), the computer host may have multiple jacks or the FireWire device may have its own input jack so that you can daisy-chain multiple devices to a single computer port.

Although FireWire does not use hubs in the network or USB sense, in its own nomenclature it does. In the FireWire scheme, a device with a single FireWire port is a leaf. A device with two ports is called a pass-through, and a device with three ports is called a branch or hub. Pass-through and branch nodes operate as repeaters, reconstituting the digital signal for the next hop. Each FireWire system also has a single root, which is the foundation around which the rest of the system organizes itself.

You can daisy-chain devices with up to 16 links to the chain. After that, the delays in relaying the signals from device to device go beyond those set in the standard. Accommodating larger numbers of devices requires using branches to create parallel data paths.

Under the current standard (1394a), FireWire allows a maximum cable length of 4.5 meters (about 15 feet). With 16 links to a daisy-chain, two FireWire devices could be separated by as much as 72 meters (about 236 feet).
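The maximum span follows directly from the two limits just given. This short sketch only multiplies them out; the constant and function names are illustrative.

```python
MAX_LINKS = 16         # links allowed in one daisy-chain
MAX_CABLE_METERS = 4.5  # longest cable permitted per link
FEET_PER_METER = 3.28084


def max_span_meters(links=MAX_LINKS, cable_m=MAX_CABLE_METERS):
    """Greatest distance between the two ends of a daisy-chain."""
    return links * cable_m


if __name__ == "__main__":
    span = max_span_meters()
    print(f"{span} meters, or {span * FEET_PER_METER:.0f} feet")
```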

Each FireWire cable contains two active connections for a full-duplex design (signals travel both ways simultaneously in the cable on different wire pairs). Connectors at each end of the cable are the same, so wiring is easy—you just plug things together. Software takes care of all the details of the connection. The exception is that the 1394a standard also allows for a miniaturized connector to fit in tight places (such as a camcorder).

FireWire also allows for engineers to use the same signaling system for backplane designs. That is, FireWire could be used as an expansion bus inside a computer as well as the port linking to external peripherals. Currently, however, FireWire is not used as a backplane inside personal computers.

The protocol used by FireWire uses 64-bit addressing. The 63-device limitation per chain results from only six bits being used for node identification. The rest of the addressing bits provide for large networks and the use of direct memory addressing: 10 bits for network identifications and 48 bits for memory addresses (the same as the latest Intel microprocessor, enough for uniquely identifying 281TB of memory per device). A single device may use multiple identifications.
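The division of the 64-bit address into three fields can be illustrated with simple bit operations. The field widths come from the text; the ordering (network bits highest, then node bits, then the memory offset) and the function names are assumptions made for this sketch.

```python
NETWORK_BITS = 10  # network (bus) identification
NODE_BITS = 6      # node identification
OFFSET_BITS = 48   # memory address within the device


def pack_address(network, node, offset):
    """Combine the three fields into one 64-bit address (assumed ordering)."""
    if not 0 <= network < 2 ** NETWORK_BITS:
        raise ValueError("network ID out of range")
    if not 0 <= node < 2 ** NODE_BITS:
        raise ValueError("node ID out of range")
    if not 0 <= offset < 2 ** OFFSET_BITS:
        raise ValueError("offset out of range")
    return (network << (NODE_BITS + OFFSET_BITS)) | (node << OFFSET_BITS) | offset


def unpack_address(address):
    """Split a 64-bit address back into (network, node, offset)."""
    offset = address & (2 ** OFFSET_BITS - 1)
    node = (address >> OFFSET_BITS) & (2 ** NODE_BITS - 1)
    network = address >> (NODE_BITS + OFFSET_BITS)
    return network, node, offset


if __name__ == "__main__":
    addr = pack_address(network=3, node=5, offset=0x1000)
    print(hex(addr), unpack_address(addr))
    print(2 ** OFFSET_BITS, "bytes addressable per device")
```

Six node bits give 64 possible values; with one held back, 63 remain for devices, which matches the limit quoted above.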

Signaling

To minimize noise, data connections in FireWire use differential signals, which means it uses two wires that carry the same signal but of opposite polarity. Receiving equipment subtracts the signal on one wire from that on the other to find the data as the difference between the two signals. The benefit of this scheme is that any noise gets picked up by the wires equally. When the receiving equipment subtracts the signals on the two wires, the noise gets eliminated—the equal noise signals subtracted from each other equals zero.

The original FireWire standard used a patented form of signal coding called data strobe coding, using two differential wire pairs to carry a single data stream. One pair carried the actual data; the second pair, called the strobe lines, complemented the state of the data pair so that one and only one of the pairs changed polarity every clock cycle. For example, if the data line carried two sequential bits of the same value, the strobe line reversed polarity to mark the transition between them. If a sequence of two bits changed the polarity of the data lines (a one followed by a zero, or zero followed by a one), the strobe line did not change polarity. Combining the data and strobe lines (with an exclusive-OR operation) exactly reconstructed the clock signal of the sending system, allowing the sending and receiving devices to precisely lock up.
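The data strobe rule lends itself to a small simulation. In this sketch the strobe toggles whenever the data bit repeats, and the clock is rebuilt by exclusive-ORing data with strobe, which is an assumption about how the two lines are combined. The function names and starting levels are illustrative.

```python
def strobe_for(data_bits, initial_data=0, initial_strobe=0):
    """Generate the strobe line so exactly one line changes per bit period."""
    strobe = []
    previous_data = initial_data
    level = initial_strobe
    for bit in data_bits:
        if bit == previous_data:
            level ^= 1  # data did not change, so the strobe must
        strobe.append(level)
        previous_data = bit
    return strobe


def recover_clock(data_bits, strobe_bits):
    """Combine data and strobe with exclusive-OR to rebuild the clock."""
    return [d ^ s for d, s in zip(data_bits, strobe_bits)]


if __name__ == "__main__":
    data = [1, 1, 0, 1, 0, 0, 1, 0]
    strobe = strobe_for(data)
    print("data  ", data)
    print("strobe", strobe)
    print("clock ", recover_clock(data, strobe))
```

Because exactly one of the two lines changes in every bit period, the recovered clock alternates on every bit regardless of the data pattern.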

FireWire operates as a two-way channel, with different pairs of wire used for sending and receiving. When one pair is sending data, the other operates as its strobe signal. In receiving data, the pair used for strobe in sending contains the data, and the other (data in sending) carries the receive strobe. In other words, as a device shifts between sending and receiving, it shifts which wire pairs it uses for data and strobe.

The 1394b specification alters the data coding to use a system termed 8B/10B coding, developed by IBM. The scheme encodes eight-bit bytes in 10-bit symbols that guarantee a sequence of more than five identical bits never occurs and that the number of ones and zeros in the code balance—a characteristic important to engineers because it results in no shift of the direct current voltage in the system.

Configuration

FireWire allows you to connect multiple devices together and uses an addressing system so that the signals sent through a common channel are recognized only by the proper target device. The linked devices can independently communicate among themselves without the intervention of your computer. Each device can communicate at its own speed—a single FireWire connection shifts between speeds to accommodate each device. Of course, a low-speed device may not be able to pass through higher-speed signals, so some forethought is required to put together a system in which all devices operate at their optimum speeds.

FireWire eliminates any concern about setting device identifications with its own automated configuration process. Whenever a new device gets plugged into a FireWire system (or when the whole system gets turned on), the automatic configuration process begins. By signaling through the various connections, each device determines how it fits into the system, either as a root node, a branch, a pass-through, or a leaf. The node also sends out a special clock signal. Once the connection hierarchy is set up, the FireWire devices determine their own ID numbers from their location in the hierarchy and send identifying information (ID and device type) to their host.

You can hot-plug devices into a FireWire tree. That is, you can plug in a new device to a group of FireWire devices without switching off the power. When you plug in a new device, the change triggers a bus reset that erases the system’s stored memory of the previous set of devices. Then the entire chain of devices goes through the configuration process again, and each device is assigned an address. The devices then identify themselves to one another and wait for data transfers to begin.

Arbitration

FireWire transfers data in packets, each a block of data preceded by a header that specifies where the data goes and its priority. In the basic cable-based FireWire system, each device sharing a connection gets a chance to send one packet in an arbitration period that’s called a fairness interval. The various devices take turns until all have had a chance to use the bus. After each packet gets sent, a brief time called the sub-action gap elapses, after which another device can send its packet. If no devices start to transmit when the sub-action gap ends, all devices wait a bit longer, stretching the time to an arbitration reset gap. After that time elapses, a new fairness interval begins, and all devices get to send one more packet. The cycle continues.
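The one-packet-per-device rule of the fairness interval can be modeled with simple queues. This is a toy sketch, not the actual arbitration logic: it leaves out gap timing entirely, and it visits devices in alphabetical order as a stand-in for whatever order real arbitration would produce. The function name and device names are hypothetical.

```python
def fairness_interval(queues):
    """Run one fairness interval: each device with data sends one packet.

    queues maps a device name to its list of waiting packets.
    Returns the (device, packet) pairs sent during this interval.
    """
    sent = []
    for device in sorted(queues):  # stand-in ordering, an assumption
        if queues[device]:
            sent.append((device, queues[device].pop(0)))
    return sent


if __name__ == "__main__":
    waiting = {"disk": ["d1", "d2"], "camera": ["c1"]}
    interval = 1
    while any(waiting.values()):
        print(interval, fairness_interval(waiting))
        interval += 1  # an arbitration reset gap would separate intervals
```

Even though the disk has more data queued, it cannot send its second packet until the next interval, after every other device has had its turn.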

To handle devices that need a constant stream of data for real-time display, such as video or audio signals, FireWire uses a special isochronous mode. Every 125 microseconds, one device in the FireWire system that needs isochronous data sends out a special timing packet that signals that isochronous devices can transmit. Each takes a turn in order of its priority, leaving a brief isochronous gap delay between their packets. When the isochronous gap delay stretches out to the sub-action gap length, the devices using ordinary asynchronous transfers take over until the end of the 125-microsecond cycle when the next isochronous period begins.

The scheme guarantees that video and audio gear can move its data in real time with a minimum of buffer memory. (Audio devices require only a byte of buffer; video may need as many as six bytes.) The 125-microsecond period matches the sampling interval used by digital telephone systems (8,000 samples per second), which helps FireWire mesh with digital telephone services.
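The numbers behind the 125-microsecond cycle are easy to work out. This sketch computes how many cycles occur each second and the raw amount of data that fits in one cycle at a given signaling rate; it ignores packet overhead and the gaps between packets, so the per-cycle figure is an upper bound rather than usable throughput. The function names are illustrative.

```python
CYCLE_MICROSECONDS = 125


def cycles_per_second(cycle_us=CYCLE_MICROSECONDS):
    """How many isochronous cycles begin each second."""
    return 1_000_000 // cycle_us


def raw_bytes_per_cycle(rate_mbps, cycle_us=CYCLE_MICROSECONDS):
    """Upper bound on bytes moved in one cycle at the given rate.

    Megabits per second times microseconds yields bits.
    """
    bits = rate_mbps * cycle_us
    return bits // 8


if __name__ == "__main__":
    print(cycles_per_second(), "cycles per second")
    for rate in (100, 200, 400):
        print(rate, "Mbps:", raw_bytes_per_cycle(rate), "bytes per cycle")
```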

The new 1394b standard also brings a new arbitration system called Bus Owner/Supervisor/Selector (BOSS). Under this scheme, a device takes control of the bus as the BOSS by being the last device to acknowledge the receipt of a packet sent to it (rather than broadcast over the entire tree) or by receiving a specific grant of control. The BOSS takes full command of the tree, even selecting the next node to be the BOSS.

Connectors

In the original FireWire version only a single, small, six-pin connector was defined for all purposes. Each cable had an identical connector on each end, and all FireWire ports were the same. The contacts were arranged in two parallel rows on opposite sides inside the metal-and-plastic shield of the connector. The asymmetrical “D” shape of the active end of the connector ensured that you plugged it in properly. Figure 11.5 shows this connector.

The revised 1394a standard added a miniaturized connector. To keep it compact, the design omitted the two power contacts. This design is favored for personal electronic devices such as camcorders. Figure 11.6 shows this connector.

The 1394b standard will add two more connectors to the FireWire arsenal, each with eight contacts. The Beta connector is meant for systems that use only the new 1394b signaling system and do not understand the earlier versions of the standard. In addition, the new standard defines a bilingual connector, one that speaks both the old and new FireWire standards. The new designs are keyed so that while both beta and bilingual connectors will fit a bilingual port, only a beta connector will fit a beta-only port. Figure 11.7 shows this keying system.

Cabling

In current form, FireWire uses ordinary copper wires in a special cable design. Two variations are allowed: one with only four signal wires and one with the four signal wires and two power wires. In both implementations, data travels across two shielded twisted pairs of AWG 28 gauge wire, with a nominal impedance of 110 ohms. In the six-wire version, two AWG 22 gauge wires additionally carry power at 8 to 33 volts with up to 1.5 amperes to power a number of peripherals. An outer shield covers the entire collection of conductors.

The upcoming IEEE 1394b standard also allows for two forms of fiber optical connection—glass and plastic—as well as ordinary Category 5 twisted-pair network cable. The maximum length of plastic fiber optical connections is 50 meters (about 160 feet); for glass optical fiber, the maximum length is 100 meters (about 320 feet). Either style of optical connection can operate at speeds of 100 or 200Mbps. Category 5 wire allows connections of up to 100 meters but only at the lowest data rate sanctioned by the standard, which is 100Mbps.

All FireWire cables are crossover cables. That is, the signals that appear on pins 1 and 2 at one end of the cable “cross over” to pins 3 and 4 at the other end. This permits all FireWire ports to be wired the same and serve both as inputs and outputs. The same connector can be used at each end of the cable, and no keying to tell inputs from outputs is necessary, as it is with USB.

The FireWire wiring scheme depends on each of the devices that are connected together to relay signals to the others. Pulling the plug to one device could potentially knock down the entire connection system. To avoid such difficulties and dependencies, FireWire uses its power connections to keep in operation the interface circuitry in otherwise inactive devices. These power lines could also supply enough current to run entire devices. No device may draw more than three watts from the FireWire bus, although a single device may supply up to 40 watts. The FireWire circuitry itself in each interface requires only about two milliwatts.

The FireWire wiring standard allows for up to 16 hops of 4.5 meters (about 15 feet) each. As with current communications ports, the standard allows you to connect and disconnect peripherals without switching off power to them. You can daisy-chain FireWire devices or branch the cable between them. When you make changes, the network of connected devices will automatically reconfigure itself to reflect the alterations.

IrDA

The one thing you don’t want with a portable computer is a cable to tether you down; yet most of the time you have to plug into one thing or another. Even a simple and routine chore like downloading files from your notebook machine into your desktop computer gets tangled in cable trouble. Not only do you have to plug in both ends, but you’ve also got to tote that writhing cable along with you wherever you go. And reaching behind your desktop machine is only a little more elegant than fishing into a catch basin for a fallen quarter; more likely than not, you’ll unplug something else that you’ll need later, only to discover the dangling cord. There has to be a better way.

There is. You can link your computer to other systems and components with a light beam. On the rear panel of many notebook computers, you’ll find a clear LED or a dark red window through which your system can send and receive invisible infrared light beams. Although originally introduced to allow you to link portable computers to desktop machines, the same technology can tie in peripherals such as modems and printers, all without the hassle of plugging and unplugging cables.

History

On June 28, 1993, a group of about 120 representatives from 50 computer-related companies got together to take the first step in cutting the cord. Creating what has come to be known as the Infrared Data Association (IrDA), this group aimed at more than making your computer more convenient to carry. It also saw a new versatility and, hardly incidentally, a way to trim its own costs.

The idea behind the get-together was to create a standard for using infrared light to link your computer to peripherals and other systems. The technology had already been long established, not only in television remote controls but also in a number of notebook computers already on the market. Rather than build a new technology, the goal of the group was to find common ground, a standard so that the products of all manufacturers could communicate with the computer equivalent of sign language.

Hardly a year later, on June 30, 1994, the group approved its first standard. The original specification, now known as IrDA version 1.0, essentially gave the standard RS-232C port an optical counterpart, one with the same data structure and, alas, speed limit. In August 1995, IrDA took the next step and approved high-speed extensions that pushed the wireless data rate to 4Mbps.

Overview

More than a gimmicky cordless keyboard, IrDA holds an advantage that makes computer manufacturers—particularly those developing low-cost machines—eye it with interest. It can cut several dollars from the cost of a complex system by eliminating some expensive hardware, a connector or two, and a cable. Compared to the other wireless technology, radio, infrared requires less space because it needs only a tiny LED instead of a larger and more costly antenna. Moreover, infrared transmissions are not regulated by the FCC as are radio transmissions. Nor do they cause interference to radios, televisions, pacemakers, and airliners. The range of infrared is more limited than radio and restricted to the line of sight over a narrow angle. However, these weaknesses can become strengths for those who are security conscious.

The original design formulated by IrDA was a replacement for serial cables. To make the technology easy and inexpensive to implement with existing components, it was based on the standard RS-232C port and its constituent components. The original IrDA standard used asynchronous communication with the same data frame as legacy serial ports as well as their data rates, from 2400 to 115,200 bits per second.

To keep power needs low and prevent interference among multiple installations in a single room, IrDA kept the range of the system low, about one meter (three feet). Similarly, the IrDA system concentrates the infrared beam used to carry data because diffusing the beam would require more power for a given range and be prone to causing greater interference among competing units. The LEDs used in the IrDA system consequently focus their beams into a cone with a spread of about 30 degrees.

After the initial serial-port replacement design was in place, IrDA worked to make its interface suitable for replacing parallel ports as well. That goal led to the creation of the IrDA high-speed standards for transmissions at data rates of 0.576, 1.152, and 4.0Mbps. The two higher speeds use a packet-based synchronous system that requires a special hardware-based communication controller. This controller monitors and controls the flow of information between the host computer’s bus and communications buffers.

Consequently, a watershed of differences separates low-speed and high-speed IrDA systems. Although IrDA designed the high-speed standard to be backward compatible with old equipment, making the higher speeds work requires special hardware. In other words, although high-speed IrDA devices can successfully communicate with lower-speed units, such communications are constrained to the speeds of the lower-speed units. Low-speed units cannot operate at high speeds without their hardware being upgraded.

IrDA defines not only the hardware but also the data format used by its system. The group has published six standards to cover these aspects of IrDA communications. The hardware itself forms the physical layer. In addition, IrDA defines a link access protocol termed IrLAP and a link management protocol called IrLMP that describe the data formats used to negotiate and maintain communications. All IrDA ports must follow these standards. In addition, IrDA has defined an optional transport protocol and optional Plug-and-Play extensions to allow for the smooth integration of the system into modern computers. The group’s IrCOMM standard describes a standard way for infrared ports to emulate conventional computer serial and parallel ports.

Infrared Light

Infrared light is invisible electromagnetic radiation that has a wavelength longer than that of visible light. Whereas you can see light that ranges in wavelength from 400 nanometers (deep violet) to 700 nanometers (dark red), infrared stretches from 700 nanometers to 1000 or more. IrDA specifies that the infrared signal used by computers for communication has a wavelength between 850 and 900 nanometers.

Data Rates

All IrDA ports must be able to operate at one basic speed—9600 bits per second. All other speeds are optional.

The IrDA specification allows for all the usual speed increments used by conventional serial ports, from 2400bps to 115,200bps. All these speeds use the default modulation scheme, Return-to-Zero Inverted (RZI). High-speed IrDA version 1.1 adds three additional speeds, 576Kbps, 1.152Mbps, and 4.0Mbps, the fastest of which relies on a pulse-position modulation scheme.

Regardless of the speed range implemented by a system or used for communications, IrDA devices first establish communications at the mandatory 9600bps speed using the Link Access Protocol. Once the two devices establish a common speed for communicating, they switch to it and use it for the balance of their transmissions.
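The negotiation just described can be sketched in a few lines of Python. This is only an illustration, not the Link Access Protocol itself: the function name is hypothetical, and the policy of choosing the fastest speed both ports share is an assumption, because the text says only that the devices settle on a common speed.

```python
# A minimal sketch of speed negotiation, assuming both sides simply
# pick the fastest speed they have in common (an assumed policy).
MANDATORY_BPS = 9600  # every IrDA port must support this speed


def negotiate_speed(local_speeds, remote_speeds):
    """Return the speed, in bits per second, that two ports would share."""
    common = set(local_speeds) & set(remote_speeds)
    # Contact always starts at 9600bps, so it is always a valid fallback.
    common.add(MANDATORY_BPS)
    return max(common)


notebook = [9600, 19200, 115200, 4000000]
printer = [9600, 19200, 115200]
print(negotiate_speed(notebook, printer))
```

A high-speed notebook talking to a low-speed printer ends up at the printer's best speed, which matches the backward-compatibility behavior described earlier.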

Pulse Width

The infrared cell of an IrDA transmitter sends out its data in pulses, each lasting only a fraction of the basic clock period or bit-cell. The relatively wide spacing between pulses makes each pulse easier for the optical receiver to distinguish.

At speeds up to and including 115,200 bits per second, each infrared pulse must be at least 1.41 microseconds long. Each IrDA data pulse nominally lasts just 3/16th of the length of a bit-cell, although pulse widths a bit more than 10 percent greater remain acceptable. For example, each bit cell of a 9600bps signal would occupy 104.2 microseconds (that is, one second divided by 9600). A typical IrDA pulse at that data rate would last 3/16th that period, or 19.53 microseconds.
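The arithmetic in the example above is easy to reproduce. The following Python sketch, with hypothetical function names, computes the bit-cell length and the nominal 3/16 pulse width for any of the low speeds.

```python
# Bit-cell and nominal pulse timing for low-speed IrDA (up to 115,200bps).
MIN_PULSE_US = 1.41  # minimum pulse length given in the text


def bit_cell_us(bps):
    """Length of one bit cell in microseconds."""
    return 1_000_000 / bps


def nominal_pulse_us(bps):
    """Nominal pulse width: 3/16 of the bit cell, in microseconds."""
    return bit_cell_us(bps) * 3 / 16


for bps in (2400, 9600, 115200):
    print(bps, round(bit_cell_us(bps), 2), round(nominal_pulse_us(bps), 2))
```

Even at 115,200bps the nominal pulse works out to a little over 1.6 microseconds, comfortably above the 1.41-microsecond minimum.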

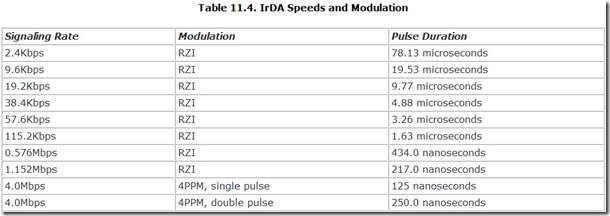

At higher speeds, the minimum pulse length is reduced to 295.2 nanoseconds at 576Kbps and to only 115 nanoseconds at 4.0Mbps. At these higher speeds, the nominal pulse width is one-quarter of the character cell. For example, at 4.0Mbps, each pulse is only 125 nanoseconds long. Again, pulses about 10 percent longer remain permissible. Table 11.4 summarizes the speeds and pulse lengths.
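The quarter-cell rule can be checked the same way. In this sketch the function name and the `bits_per_cell` parameter are hypothetical. It assumes that at 4.0Mbps one cell carries two bits, which follows from having four pulse positions per cell and is what makes the 125-nanosecond figure work out.

```python
# Nominal pulse width at the high IrDA speeds: one-quarter of a cell.
def quarter_cell_pulse_ns(bit_rate_bps, bits_per_cell=1):
    """Return the nominal pulse width in nanoseconds."""
    cell_ns = 1e9 * bits_per_cell / bit_rate_bps
    return cell_ns / 4


# At 4.0Mbps each cell is assumed to carry two bits (four pulse positions).
print(quarter_cell_pulse_ns(4_000_000, bits_per_cell=2))
print(round(quarter_cell_pulse_ns(1_152_000), 1))
print(round(quarter_cell_pulse_ns(576_000), 1))
```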

Modulation

Depending on the speed at which a link operates, it may use one of two forms of modulation. At speeds lower than 4.0Mbps, the system employs Return-to-Zero Inverted (RZI) modulation.

At the 4.0Mbps data rate, the IrDA system shifts to pulse position modulation. Because the IrDA system involves four discrete pulse positions, it is abbreviated 4PPM.
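To make the idea of four pulse positions concrete, here is a small Python sketch of a 4PPM encoder. Each pair of data bits selects which one of four time positions carries the single pulse. The particular assignment of bit pairs to positions used here is an assumption chosen for illustration; consult the IrDA physical layer specification for the official mapping.

```python
# A sketch of four-position pulse position modulation (4PPM).
# Assumption: the two-bit value 0..3 selects the pulse position directly.
def encode_4ppm(bits):
    """Turn a list of data bits into a list of chips (1 = pulse)."""
    if len(bits) % 2:
        raise ValueError("4PPM encodes bits two at a time")
    chips = []
    for i in range(0, len(bits), 2):
        position = bits[i] * 2 + bits[i + 1]  # value from 0 to 3
        symbol = [0, 0, 0, 0]
        symbol[position] = 1  # exactly one pulse per symbol
        chips.extend(symbol)
    return chips


print(encode_4ppm([0, 0, 1, 1]))
```

Whatever the data, every symbol contains exactly one pulse, so the receiver never faces a long stretch of darkness.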

IrDA requires data to be transmitted only in eight-bit format. In terms of conventional serial-port parameters, a data frame for IrDA comprises a start bit, eight data bits, no parity bits, and a stop bit, for a total of 10 bits per character. Note, however, that zero insertion may increase the length of a transmitted byte of data. Any inserted zeroes are removed automatically by the receiver and do not enter the data stream. No matter the form of modulation used by the IrDA system, all byte values are transmitted with the least significant bit first.
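The 10-bit character frame can be sketched as follows. The function name is hypothetical, and the bit values used for the start bit (zero) and stop bit (one) follow the usual asynchronous serial convention, which is an assumption here because the text does not spell them out.

```python
# Build the 10-bit frame for one byte: start bit, eight data bits sent
# least significant bit first, no parity, and a stop bit.
START_BIT = 0  # assumed, per the usual asynchronous serial convention
STOP_BIT = 1   # assumed, per the usual asynchronous serial convention


def frame_bits(byte):
    """Return the frame for one byte as a list of 10 bits."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("only eight-bit values are allowed")
    data = [(byte >> i) & 1 for i in range(8)]  # least significant first
    return [START_BIT] + data + [STOP_BIT]


print(frame_bits(0x41))
```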

Note that with RZI modulation, long sequences of logical ones will suppress pulses for the entire duration of the sequence. To prevent such a lengthy gap from appearing in the signal and causing a loss of sync, moderate-speed IrDA systems add extra pulses to the signal with bit-stuffing (as discussed in Chapter 8).
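Bit-stuffing is simple enough to sketch in Python. The version below inserts a zero after every run of five ones, the rule used by HDLC-style protocols. That run length is an assumption for illustration, as are the function names.

```python
# A sketch of zero insertion (bit-stuffing) and its removal.
# Assumption: a zero is inserted after five consecutive ones.
def stuff(bits, run=5):
    """Insert a zero after every run of `run` consecutive ones."""
    out, ones = [], 0
    for b in bits:
        out.append(b)
        ones = ones + 1 if b == 1 else 0
        if ones == run:
            out.append(0)  # the extra zero becomes an extra pulse
            ones = 0
    return out


def unstuff(bits, run=5):
    """Remove the zeros that stuff() inserted."""
    out, ones, skip = [], 0, False
    for b in bits:
        if skip:  # this bit is a stuffed zero, so drop it
            skip, ones = False, 0
            continue
        out.append(b)
        ones = ones + 1 if b == 1 else 0
        if ones == run:
            skip = True
    return out


print(stuff([1, 1, 1, 1, 1, 1]))
```

Because the receiver removes exactly the zeros the transmitter added, the stuffed bits never reach the data stream.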

Format

The IrDA system doesn’t deal with data at the bit or byte level but instead arranges the data transmitted through it in the form of packets, which the IrDA specification also terms frames. A single frame can stretch from 5 to 2050 bytes (and sometimes more) in length. As with other packetized systems, an IrDA frame includes address information, data, and error correction, the last of which is applied at the frame level. The format of the frame is rigidly defined by the IrDA Link Access Protocol standard, discussed later.

Aborted Frames

Whenever a receiver detects a string of seven or more consecutive logical ones—that is, an absence of optical pulses—it immediately terminates the frame in progress and disregards the data it received (which is classed as invalid because of the lack of error-correction data). The receiver then awaits the next valid frame, signified by a start-of-frame flag, address field, and control field. Any frame that ends in this summary manner is termed an aborted frame.
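A receiver's abort test amounts to counting consecutive ones. The following Python sketch, with a hypothetical function name, shows the check in its simplest form.

```python
# Detect an aborted frame: seven or more consecutive logical ones
# (that is, seven or more bit cells with no optical pulse).
ABORT_RUN = 7


def is_aborted(bits, limit=ABORT_RUN):
    """Return True if the bit stream contains an abort sequence."""
    run = 0
    for b in bits:
        run = run + 1 if b == 1 else 0
        if run >= limit:
            return True
    return False


print(is_aborted([0, 1, 1, 1, 1, 1, 1, 1]))
```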

A transmitter may intentionally abort a frame or a frame may be aborted because of an interruption in the infrared signal. Anything that blocks the light path will stop infrared pulses from reaching the receiver and, if long enough, abort the frame being transmitted.

Interference Suppression

High-speed systems automatically mute lower-speed systems that are operating in the same environment to prevent interference. To stop the lower-speed link from transmitting, the high-speed system sends out a special Serial Infrared Interaction Pulse (SIP) at intervals no longer than half a second. The SIP is a pulse 1.6 microseconds long, followed by 7.1 microseconds of darkness, parameters exactly equal to a packet start pulse. When the low-speed system sees what it thinks is a start pulse, it automatically starts looking for data at the lower rates, suppressing its own transmission for half a second. Before it has a chance to start sending its own data (if any), another SIP quiets the low-speed system for the next half second.

Bluetooth

Radio yields the most versatile connection system: no wires and no worries. Because radio waves can slip through most office walls, desktop ornaments, office supplies, and even employees, radio-based links eliminate the line-of-sight requirements of optical links such as IrDA. Linking devices with radio waves consequently yields the most convenient connection for workers to free their peripherals from the chains of interconnecting cables. A radio link can provide a reliable, cord-free connection that eliminates the snarl on the rear of every desktop PC. It also allows you to link your wireless devices—in particular, your cell phone—to your PC and keep everything wireless. Hardly a novel idea, of course, but one that has been a long time coming for practical connections.

Finally, a single standard may bring the wireless dream to life. To provide common ground and a standard for radio-based connections between PCs, their peripherals, and related communications equipment, several major corporations worked together to develop the Bluetooth specification. They designed the standard for the utmost in convenience, coupled with low cost but sacrificing range—Bluetooth is a short-range system suitable for linking devices in an office suite rather than across miles like a cell phone.

Originally conceived as a way to link cellular devices to PCs, the actual specification transcends its origins. Bluetooth makes possible not only cell phone connections but also could allow you to use your keyboard or mouse without a physical connection to your PC and without fretting about office debris blocking optical signals. But Bluetooth is more than a simple interface. It can become a small wireless network of intercommunicating devices, handling both voice and data with equal ease. Although not a rival to traditional networking systems—its speed limitations alone see to that—Bluetooth adds versatility that combines cell phone and PC technology.

The Bluetooth promoters refer to its multidevice links as a piconet (smaller than even a micronet), able to link up to eight devices. Bluetooth allows even greater assemblages of equipment by linking piconets together and accommodating temporarily inactive equipment within its reach.

On the other hand, the data speed of the Bluetooth system is modest. At most, Bluetooth can move bits at a claimed rate of about 723Kbps asymmetrically. That is, the high rate is in one direction; the return channel is far slower, about 57.6Kbps. Moreover, Bluetooth slows to accommodate bidirectional data and phone conversations. Despite the modest data rate, however, the Bluetooth bit-rate is high enough to handle three simultaneous telephone conversations or a combination of voice and data simultaneously.

Good as it sounds, Bluetooth currently has a number of handicaps to overcome. It is not supported by any version of Microsoft Windows in current release (including the initial release of Windows XP). Microsoft, however, promises to add its own native support for Bluetooth in subsequent releases of Windows.

History

Certainly Bluetooth was not the first attempt at creating radio-based data links for computers. Wireless schemes for exchanging data have been around longer than personal computers. But Bluetooth differs from any previous radio-based data-exchange system in that it was conceived as an open standard for the computer and communications industries to facilitate the design of compatible wireless hardware.

As with so many modern standards, Bluetooth represents the work of an industry consortium. In May 1998, representatives from five major corporations involved with PCs, office equipment, and cellular telephones jointly conceived the idea of Bluetooth and began working toward creating the standard. The five founders were Ericsson (Telefonaktiebolaget LM Ericsson), International Business Machines Corporation, Intel Corporation, Nokia Corporation, and Toshiba Corporation. Together they formed the Bluetooth Special Interest Group (SIG) and started work on the standard and the technologies needed to make it a reality. The SIG released the first version of the specification, Bluetooth 1.0, on July 24, 1999. A slightly revised version was released in December 1999.

Membership in the Bluetooth SIG grew to nine on December 1, 1999, when 3Com Technologies, Lucent Technologies, Microsoft Corporation, and Motorola, Inc., joined the group. In addition, over 1,200 individuals and companies have adopted the technology by entering an agreement with the SIG that allows them to use the standard and share the intellectual property required to implement it.

Although support for Bluetooth has been slow in coming, manufacturers have adapted the technology for low-speed computer peripherals (such as wireless keyboards and mice). Owing to the success of other wireless technologies, most successful applications of Bluetooth are in communications products.

Overview

Bluetooth is a wireless packetized communications system that allows multiple devices to share data in a small network. Heir to both cell phone and digital technologies, it nestles between several existing standards, embracing them. It can link to your PC using a USB connection, and it shares logical layers with IrDA. It not only handles data like a traditional serial port but also can carry more than 60 RS-232C connections.

In theory, a Bluetooth system operates entirely transparently. Devices link themselves together without you having to do anything. All you need to do is turn on your Bluetooth devices and bring them within range of one another. For example, available devices should automatically pop up on your Windows desktop—at least once Windows gains Bluetooth support. You can then drag files to and from the device as if it were a local Windows resource.

Behind the scenes, however, things aren’t quite so simple. The Bluetooth system must accommodate a variety of device and data types. It needs to keep in constant contact with each device. It must be able to detect when a new device appears and when other devices get switched off or venture out of range. It has to moderate the conversations between units, ensuring they don’t all try to talk at the same time and interfere with one another.

Software Side

As an advanced interface, Bluetooth heavily processes the raw data it transmits. It repackages serial data bits into packets with built-in error control. It then combines a series of packets of related serial data into links. It further processes the links through multiplexing so that several serial streams can simultaneously share a single Bluetooth connection.

Bluetooth packetizes data, breaking a serial input stream into small pieces, each containing address and optional error-correction information. A series of packets that starts as a single data stream and is later reconstructed into a replica of that stream is a link.

The Bluetooth standard supports two kinds of data links: synchronous and asynchronous. Synchronous data is typically voice information, such as audio from telephone conversations. Asynchronous data is typically computer data. The chief difference is that synchronous data is time dependent, so synchronous packets get transmitted once without regard to their reception. If a synchronous packet gets lost during transmission, it is forever lost.

Synchronous links between Bluetooth devices provide a full-duplex channel with an effective data rate of 64Kbps in each direction. In effect, a synchronous link is a standard digital telephone channel with eight-bit resolution and an 8-KHz sampling rate. The Bluetooth standard allows for two devices to simultaneously share three such synchronous links, the equivalent of three real-time telephone conversations. All links begin asynchronously because commands can only be sent in asynchronous packets. After the link is established, the master and slave can negotiate to switch over to a synchronous link for voice transfers or to move data asynchronously.
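The 64Kbps figure follows directly from the sampling parameters, as this short Python sketch shows. The constant and function names are hypothetical.

```python
# Data rate of Bluetooth synchronous (voice) links, per direction.
SAMPLE_RATE_HZ = 8000   # 8-KHz sampling rate
BITS_PER_SAMPLE = 8     # eight-bit resolution
MAX_VOICE_LINKS = 3     # links two devices may share at once


def voice_link_bps():
    """Bits per second carried by one synchronous link, one direction."""
    return SAMPLE_RATE_HZ * BITS_PER_SAMPLE


def total_voice_bps(links):
    """Bits per second, one direction, for several simultaneous links."""
    if not 0 <= links <= MAX_VOICE_LINKS:
        raise ValueError("at most three synchronous links are allowed")
    return links * voice_link_bps()


print(voice_link_bps(), total_voice_bps(3))
```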

Each piconet has a single master and, potentially, multiple slaves. Each piconet shares a single communications channel with all the devices (master and slave) locked together on a common frequency and using a common clock, as discussed later. The single channel is subdivided into one or more links—asynchronous and/or synchronous.

To handle contention between multiple links on its single channel, Bluetooth uses time-division multiplexing. That is, each separate packet of a link gets a time period for transmission. The standard divides the communications channel into time slots, each 625 microseconds long. The shortest single packet fits a single slot with room to spare, although Bluetooth allows packets to stretch out for up to five slots. Bluetooth allows a maximum length of single-slot packets of 366 microseconds. The system accommodates larger packets by letting them extend through up to five slots, filling the entire time of four of the slots and part of the fifth.

In the Bluetooth system, each packet also defines a hop. That is, after each packet is sent, the Bluetooth system switches to (or hops to) another carrier frequency. As noted later, frequency-hopping helps ensure the integrity of Bluetooth transmissions. The minimum hop length corresponds to a single slot, although a hop can last for up to five slots to accommodate a long packet.

The time division duplexing of the Bluetooth system works by assigning even-numbered slots to the master and odd-numbered slots to the slaves. Masters can begin their transmissions only in even-numbered slots. If a packet lasts for an even number of slots (two or four), no slave can begin until the next odd-numbered slot. In effect, then, packets use an odd number of slots even if they use only a shorter, even number of slots.
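The slot rules above can be captured in a few lines. This Python sketch, using hypothetical names, works out the first slot in which a slave may answer after a master packet of a given length.

```python
# Slot bookkeeping for Bluetooth time division duplexing.
SLOT_US = 625  # length of one time slot in microseconds
MAX_PACKET_SLOTS = 5


def next_slave_slot(master_start, packet_slots):
    """Return the first slot in which a slave may begin transmitting."""
    if master_start % 2:
        raise ValueError("masters begin only in even-numbered slots")
    if not 1 <= packet_slots <= MAX_PACKET_SLOTS:
        raise ValueError("packets occupy one to five slots")
    slot = master_start + packet_slots
    if slot % 2 == 0:
        slot += 1  # slaves must wait for the next odd-numbered slot
    return slot


for n in range(1, 6):
    print(n, next_slave_slot(0, n))
```

A two-slot packet ties up the channel just as long as a three-slot packet, which is why packets effectively consume an odd number of slots.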

Hardware Side

Bluetooth hardware provides the connection that carries the processed and packetized data. Because Bluetooth makes a wireless connection, its hardware is essentially invisible, but the system still requires a collection of circuits to transmit and receive the data properly.

As with all radio systems, Bluetooth starts with a carrier wave and modulates it with data. Unlike most common radio systems, however, Bluetooth does not use a single fixed carrier frequency but rather hops to different frequencies more than a thousand times each second. As a serial transmission system, time is important to Bluetooth to sort out data bits. Each Bluetooth device maintains a clock that helps it determine when each bit in its serial stream appears. Bluetooth cleverly combines these necessary elements to make a wireless communications network.

Clocks

Each Bluetooth device has its own internal clock that paces its communications. The clock of each device operates independently at approximately the necessary rate.

For the Bluetooth signals to be effectively demodulated, the clocks of the master and slaves must be synchronized. The master device sets the frequency for all the slaves with which it communicates. The slaves determine the exact frequency of the clock from the packet data. The preamble of each packet contains a predetermined pattern of several cycles, which the slaves can use to achieve synchrony. The Bluetooth system does not alter the operation of the clock of the slaves, however. Instead, it stores the difference between the master and slave clocks and uses this difference value to maintain its lock on the master.

When another master takes control during a communication session, each slave readjusts the stored difference value to maintain its precise frequency coordination.

Topology

Bluetooth is designed to handle a variety of connection types. The basic link is point-to-point, two devices communicating only with one another. In such a link, one device operates as the master and the other as the slave. In a piconet configuration, a single master can communicate with up to seven active slaves (a total of eight devices intercommunicating). In addition, other slaves may lock on to the master’s signal and be ready to communicate without sending out active signals. Such inactive slaves are said to be in a parked state.

The master in the piconet determines which devices can communicate (that is, which slaves are active or parked). In addition, several piconets can be linked together into a scatternet, with the master of one piconet communicating to a master or slave in another.

Frequencies

Bluetooth operates at radio frequencies assigned to industrial, scientific, and medical devices, a range termed the ISM band. This range of frequencies in the UHF (Ultra High Frequency) band has been set aside throughout most of the world for unlicensed, low-power electronic equipment. Near the top of the UHF range, the ISM band uses frequencies about three times that of the highest UHF television channel.

The exact frequencies available vary somewhat in North America, Europe, and Japan. In addition, France and Spain differ from the rest of Europe (although both countries are working on moving to the standards used throughout the rest of Europe).

Bluetooth uses channels one megahertz wide for its signals. Rather than operating on a single channel, a Bluetooth system uses them all. It uses the channels one at a time but switches between them to help minimize interference and fading. It can also help keep communications secure. Only the devices participating in a piconet know which channel they will hop to next.

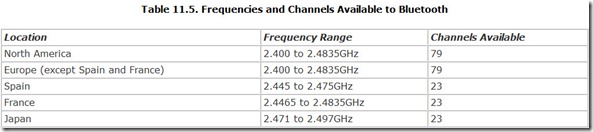

In Europe (except France and Spain) and North America, the Bluetooth system can hop between 79 different channels. Elsewhere, the choices are limited to 23 channels. The available frequencies and number of channels available are summarized in Table 11.5.

A given Bluetooth system does not operate on one frequency but rather uses them all, hopping from one channel to another, up to 1,600 times per second. If a given asynchronous packet does not get through on one frequency due to interference (and is therefore not acknowledged), the next hop will send out a duplicate packet at a different frequency.
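The essence of frequency hopping is that every device in the piconet derives the same channel sequence from shared information. The Python sketch below illustrates only that principle. It is not the hop-selection method of the Bluetooth specification: it substitutes Python's general-purpose random number generator, and both the shared seed and the assumed base frequency of 2402MHz for the 79-channel set are labeled stand-ins.

```python
import random

# Illustration only: a shared seed stands in for whatever information
# the real system uses to keep master and slaves on the same sequence.
CHANNELS = 79         # channels available in North America and most of Europe
BASE_MHZ = 2402       # assumed frequency of the lowest 1-MHz channel
HOPS_PER_SECOND = 1600


def hop_sequence(shared_seed, hops):
    """Return a list of carrier frequencies in MHz, one per hop."""
    rng = random.Random(shared_seed)
    return [BASE_MHZ + rng.randrange(CHANNELS) for _ in range(hops)]


master = hop_sequence(shared_seed=1234, hops=HOPS_PER_SECOND)
slave = hop_sequence(shared_seed=1234, hops=HOPS_PER_SECOND)
print(master[:5], master == slave)
```

A device that does not know the seed cannot predict the next channel, which is the source of the modest security benefit mentioned earlier.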

Unfortunately, Bluetooth does not have the entire 2.4GHz band to itself. The IEEE 802.11 wireless-networking standard currently uses the same frequencies, and interference between the two systems (where both are active) is inevitable. Although in the long term IEEE 802.11 will migrate to the 5GHz frequency range, at present the only way to entirely prevent interference between the two systems is to use one or the other, not both.

Power

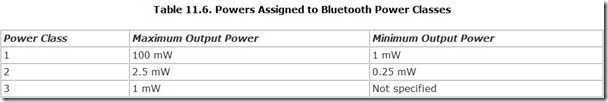

The Bluetooth specification defines three classes of equipment based on transmitter power. Class 1 devices are the most powerful and can transmit with up to 100 milliwatts of output power. Class 3 devices transmit with less than 1 milliwatt. Table 11.6 lists the maximum and minimum output powers for each power class.

As with any radio-based system, greater power increases the coverage area, so a Class 1 device will have greater range than a Class 3 device (about 10 times greater because radio propagation follows an inverse-square law). On the downside, greater output power means the need for greater input power, which directly translates into battery drain. That 100 mW of output power will require about 100 times the battery power as a 1-mW device. Fortunately, even Class 1 devices are modest power consumers compared to other facets of notebook computers. For example, the power needs of a Class 1 device are less than one-tenth the demand of a typical display screen.
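The factor of 10 comes from taking a square root. Under the inverse-square law, the power reaching a receiver falls with the square of the distance, so range grows only as the square root of transmitter power. A short Python sketch with a hypothetical function name makes the point, assuming free-space conditions and identical receivers.

```python
import math


# Ratio of ranges for two transmitter powers, assuming ideal
# inverse-square propagation and identical receivers.
def range_ratio(power_a_mw, power_b_mw):
    """Return how many times farther transmitter A reaches than B."""
    return math.sqrt(power_a_mw / power_b_mw)


print(range_ratio(100, 1))  # Class 1 maximum versus 1 milliwatt
```

Real rooms with walls and furniture will seldom deliver the full theoretical advantage.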

Modulation

Bluetooth uses Gaussian frequency shift keying (FSK)—that is, the presence of a data bit alters (or shifts) the frequency of the carrier wave. Bluetooth specifies the polarity of the FSK modulation. It represents a binary one with a positive deviation of the carrier wave and a binary zero with a negative deviation. The raw data rate is 1Mbps (one million symbols per second).

Because of how the digital code affects the frequency shift keying modulation, the information content of the modulation affects the deviation of the signal. The Bluetooth standard specifies that the minimum deviation should never be smaller than 115KHz. The maximum deviation will be between 140 and 175KHz.

Components

Bluetooth architecture builds a system from three parts: a radio unit, a link control unit, and a support unit that provides link management and the host terminal interface. These are functional divisions, and all will be integrated into most handheld Bluetooth devices. In your PC, all three will likely reside on a Bluetooth interface card that installs like any other expansion board in a standard PCI slot.

The radio unit implements the hardware aspects of Bluetooth described earlier. It determines the power and coverage of the Bluetooth device, and its circuitry creates the carrier wave (altering its frequency for each hop), modulates it, amplifies it, and radiates it through an antenna. The ultra-high frequencies used by the Bluetooth system have a short wavelength that allows the antenna to be integrated invisibly into the cases of many mobile devices.

The link control unit is the mastermind of the Bluetooth system. It implements the various control and management protocols for setting up and maintaining the wireless connection. It searches out and identifies new devices wanting to join the piconet, tracks the frequency hopping, and controls the operating state of the device.

The support unit provides the actual interface between the logic of the host device and the Bluetooth connection. It adapts the signals of the host to match the Bluetooth system, both electrically and logically. For example, in a PC-based Bluetooth interface card, the support unit adapts the parallel bus signals of the PCI connection into the packetized serial form used by Bluetooth. It also checks data coming in from the wireless connection for errors and requests retransmission when necessary.

Standards and Coordination

The Bluetooth Special Interest Group promulgates the Bluetooth specifications. It also facilitates the licensing of Bluetooth intellectual property. You can obtain the complete specification from the SIG at www.bluetooth.com.

RS-232C Serial Ports

The day you win the mega-lottery and instantly climb into wealth and social status, you may be tempted to leave your old friends to belch and scratch while drinking someone else’s beer. But it’s hard to leave old friends behind, particularly when you need someone to watch the house during your ’round-the-world cruise. So it is with the classic RS-232C port. It’s got so many bad habits it’s hard to talk about in polite company, but it’s just too dang useful to forget about.

The serial port is truly the old codger of computer interfaces, a true child of the ’60s. An industry trade group, the Electronic Industries Association (EIA), hammered out the official RS-232C specification in 1969, but the port had been in use for years at the time. It found ready acceptance on the first personal computers because no other electronic connection for data equipment was so widely used. The ports survive today because some folks still want to connect gear they bought in 1969 to their new computers.

Electrical Operation

RS-232C ports are asynchronous. They operate without a clock signal. But in order for two devices to communicate they need at least a general idea of what rate to expect data. Consequently, you must set the speed of each RS-232C port before you begin communicating, and the speeds of any two connected ports must match.

You have quite a wide variety to choose from. The serial ports in computers generally operate at any speed in the odd-looking sequence that runs 150, 300, 600, 1200, 2400, 4800, 9600, 19,200, 38,400, 57,600, and 115,200 bits per second.

The RS-232C port moves data one byte at a time. To suit its asynchronous nature, each byte requires its own packing into a serial frame. In this form the typical serial port takes about a dozen bits to move a byte: a frame comprises one start bit, eight data bits, one parity bit, and two stop bits to indicate the end of the frame. As a result, a serial port has overhead of about one-third of its potential peak data rate. A 9600-bit-per-second serial connection actually moves text at about 800 characters per second (6400bps).
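The overhead arithmetic is easy to verify. This Python sketch, with hypothetical names, assumes the dozen-bit frame described above and eight data bits per character.

```python
# Effective throughput of an asynchronous serial link.
DATA_BITS = 8
FRAME_BITS = 12  # the "about a dozen bits" per byte described in the text


def chars_per_second(line_bps, frame_bits=FRAME_BITS):
    """Characters moved each second at a given line rate."""
    return line_bps / frame_bits


def payload_bps(line_bps, frame_bits=FRAME_BITS):
    """Useful data bits moved each second."""
    return chars_per_second(line_bps, frame_bits) * DATA_BITS


def overhead_fraction(frame_bits=FRAME_BITS):
    """Share of the line rate spent on framing rather than data."""
    return (frame_bits - DATA_BITS) / frame_bits


print(chars_per_second(9600), payload_bps(9600), overhead_fraction())
```

Trimming the frame to 10 bits, as IrDA does, raises the same 9600bps line to 960 characters per second.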

A basic RS-232C connection needs only three connections: one for sending data, one for receiving data, and a common ground. Most serial links also use hardware flow-control signals. The most common serial port uses eight separate connections.

RS-232C ports use single-ended signaling. Although this design simplifies the circuitry to make the ports, it also limits the potential range of a connection. Long cable runs are apt to pick up noise and blur high-data-rate signals. You can probably extend a 9600bps connection to a hundred feet or more. At a quarter mile, you’ll probably be down to 1200 or 300bps (slower than even cheap printers can type).

Because the RS-232C port originated in the data communications rather than computer industry, some of its terminology is different from that used to describe other ports. For example, the low and high logic states are termed space and mark in the RS-232C scheme. Space is the absence of a bit, and mark is the presence of a bit. On the serial line, a space is a positive voltage; a mark is a negative voltage.

When you’re not sending data down a serial line, it idles in the mark state, so it has an overall negative voltage on it. Data will appear as a series of positive-going pulses. The original design of the serial port specification called for the voltage to shift from a positive 12 volts to negative 12 volts. Because 12 volts is an uncommon potential in many computers, the serial voltage often varies from positive 5 to negative 5 volts.
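To make the space and mark terminology concrete, here is a minimal Python sketch that translates the data bits of one byte into line states and voltages. It assumes the nominal 12-volt swing and the usual UART practice of sending the least significant bit first; the names are hypothetical and exist only for illustration.

```python
NOMINAL_VOLTS = 12  # assumption: the original 12-volt swing; many ports use 5


def line_state(bit):
    """A high logic state (1) is a mark; a low logic state (0) is a space."""
    return "mark" if bit else "space"


def line_voltage(bit, volts=NOMINAL_VOLTS):
    """A mark is a negative voltage; a space is a positive voltage."""
    return -volts if bit else volts


def byte_to_levels(value, volts=NOMINAL_VOLTS):
    """Return the eight data-bit voltages, least significant bit first."""
    bits = [(value >> i) & 1 for i in range(8)]
    return [line_voltage(bit, volts) for bit in bits]


if __name__ == "__main__":
    # The letter A is 0x41, or 01000001 in binary.
    print(byte_to_levels(ord("A")))
```

The sketch covers only the eight data bits; the start, parity, and stop bits that surround them in a real frame follow the same voltage rule.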

Connectors

The physical manifestation of a serial port is the connector that glowers on the rear panel of your computer. It is where you plug your serial peripheral into your computer. And it can be the root of all evil—or so it will seem after a number of long evenings during which you valiantly try to make your serial device work with your computer, only to have text disappear like phantoms at sunrise. Again, the principal problem with serial ports is the number of options they allow designers. Serial ports can use either of two styles of connectors, each of which has two options in signal assignment. Worse, some manufacturers venture bravely in their own directions with the all-important flow-control signals. Sorting out all these options is the most frustrating part of serial port configuration.

25-Pin

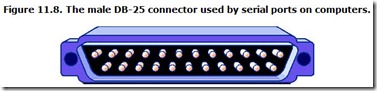

The basic serial port connector is called a 25-pin D-shell. It earns its name from having 25 connections arranged in two rows that are surrounded by a metal guide that roughly takes the form of a letter D. The male variety of this connector—the one that actually has pins inside it—is normally used on computers. Most, but hardly all, serial peripherals use the female connector (the one with holes instead of pins) for their serial ports. Although both serial and parallel ports use the same style 25-pin D-shell connectors, you can distinguish serial ports from parallel ports because on most computers the latter use female connectors. Figure 11.8 shows the typical male serial port DB-25 connector that you’ll find on the back of your computer.

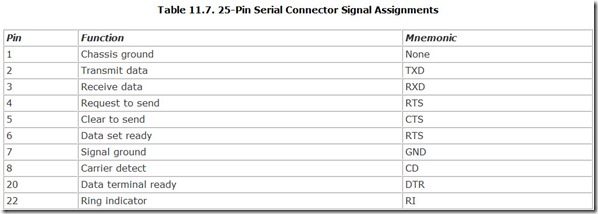

Although the serial connector allows for 25 discrete signals, only a few of them are ever actually used. Serial systems may involve as few as three connections. At most, computer serial ports use 10 different signals. Table 11.7 lists the names of these signals, their mnemonics, and the pins to which they are assigned in the standard 25-pin serial connector.

Note that in the standard serial cable, the signal ground (which is the return line for the data signals on pins 2 and 3) is separated from the chassis ground on pin 1. The chassis ground pin is connected directly to the metal chassis or case of the equipment, much like the extra prong of a three-wire AC power cable, and it provides the same protective function. It ensures that the cases of the two devices linked by the serial cable are at the same potential, which means you won’t get a shock if you touch both at the same time. As wonderful as this connection sounds, it is often omitted from serial cables. On the other hand, the signal ground is a necessary signal that the serial link cannot work without. You should never connect the chassis ground to the signal ground.

Nine-Pin

If nothing else, using a 25-pin D-shell connector for a serial port is a waste of at least 15 pins. Most serial connections use fewer than the complete 10; some as few as four with hardware handshaking, and three with software flow control. For the sake of standardization, the computer industry sacrificed the cost of the other unused pins for years until a larger—or smaller, depending on your point of view—problem arose: space. A serial port connector was too big to fit on the retaining brackets of expansion boards along with a parallel connector. In that all the pins in the parallel connector had an assigned function, the serial connector met its destiny and got miniaturized.

Moving to a nine-pin connector allowed engineers to put connectors for both a serial port and a parallel port on the retaining bracket of a single expansion board. This was an important concern because all ports in early computers were installed on expansion boards. Computer makers could save the cost of an entire expansion board by putting two ports on one card. Later, after most manufacturers moved to putting ports on computer motherboards, the smaller port design persisted.

As with the 25-pin variety of serial connector, the nine-pin serial jack on the back of computers uses a male connector. Figure 11.9 shows the nine-pin male connector that’s used on some computers for serial ports.

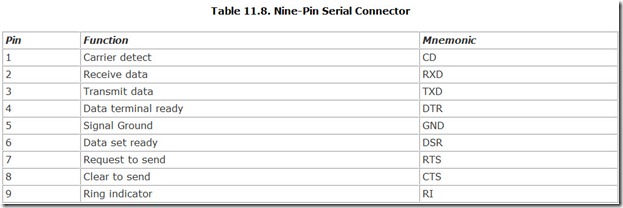

Nine-pin connectors necessarily have different pin assignments than 25-pin connectors. Table 11.8 lists the signal assignments on the most common nine-pin implementation of the RS-232C port.

Other than the rearrangement of signals, the nine-pin and 25-pin serial connectors are essentially the same. All the signals behave identically, no matter the size of the connector on which they appear.
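Because only the pin numbers change between the two connectors, an adapter from one to the other is nothing more than a lookup. The Python sketch below uses the commonly published pin assignments for the two connectors; treat them as an assumption to be checked against Tables 11.7 and 11.8. The dictionary and function names are illustrative.

```python
# Commonly published RS-232C pin assignments (assumption: verify against
# Tables 11.7 and 11.8 before wiring anything).
PINS_25 = {
    "TXD": 2, "RXD": 3, "RTS": 4, "CTS": 5, "DSR": 6,
    "GND": 7, "CD": 8, "DTR": 20, "RI": 22,
}
PINS_9 = {
    "CD": 1, "RXD": 2, "TXD": 3, "DTR": 4, "GND": 5,
    "DSR": 6, "RTS": 7, "CTS": 8, "RI": 9,
}


def adapter_wiring():
    """Map each nine-pin connector pin to the 25-pin pin with the same signal."""
    return {PINS_9[name]: PINS_25[name] for name in PINS_9}


if __name__ == "__main__":
    for nine, twenty_five in sorted(adapter_wiring().items()):
        print(f"9-pin {nine} -> 25-pin {twenty_five}")
```

The chassis ground on pin 1 of the 25-pin connector has no counterpart on the nine-pin connector, so it is left out of the mapping.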

Signals

Serial communications is an exchange of signals across the serial interface. These signals involve not just data but also the flow-control signals that help keep the data flowing as fast as possible—but not too fast.

First, we’ll look at the signals and their flow in the kind of communication system for which the serial port was designed—linking a computer to a modem. Then we’ll examine how attaching a serial peripheral to a serial port complicates matters and what you can do to make the connection work.

Definitions

As with space and mark, RS-232C ports use other odd terminology. Serial terminology assumes that each end of a connection has a different type of equipment attached to it. One end has a data terminal connected to it. In the old days when the serial port was developed, a terminal was exactly that—a keyboard and a screen that translated typing into serial signals. Today, a terminal is usually a computer. For reasons known but to those who revel in rolling their tongues across excess syllables, the term Data Terminal Equipment is often substituted. To make matters even more complex, many discussions talk about DTE devices, which means exactly the same thing as data terminals.

The other end of the connection has a data set, which corresponds to a modem. Often engineers substitute the more formal name Data Communication Equipment or talk about DCE devices.

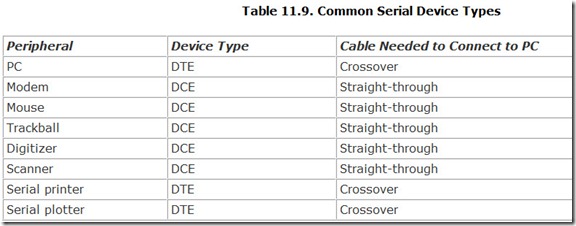

The distinction between data terminals and data sets (or DTE and DCE devices) is important. Serial communications were originally designed to take place between one DTE and one DCE, and the signals used by the system are defined in those terms. Moreover, the types of RS-232 serial devices you wish to connect determine the kind of cable you must use. First, however, let’s look at the signals; then we’ll consider what kind of cable you need to carry them.

Transmit Data

The serial data leaving the RS-232 port travels on what is called the Transmit Data line, which is usually abbreviated TXD. The signal on it comprises the long sequence of pulses generated by the UART in the serial port. The data terminal sends out this signal, and the data set listens to it.

Receive Data

The stream of bits going the other direction (that is, coming in from a distant serial port) goes through the Receive Data line (usually abbreviated RXD) to reach the input of the serial port’s UART. The data terminal listens on this line for the data signal coming from the data set.

Data Terminal Ready

When the data terminal is able to participate in communications—that is, it is turned on and in the proper operating mode—it signals its readiness to the data set by applying a positive voltage to the Data Terminal Ready line, which is abbreviated DTR.

Data Set Ready

When the data set is able to receive data—that is, it is turned on and in the proper operating mode—it signals its readiness by applying a positive voltage to the Data Set Ready line, which is abbreviated DSR. Because serial communications must be “two way,” the data terminal will not send out a data signal unless it sees the DSR signal coming from the data set.

Request to Send

When the data terminal is on and capable of receiving transmissions, it puts a positive voltage on its Request to Send line, usually abbreviated RTS. This signal tells the data set that it can send data to the data terminal. The absence of an RTS signal across the serial connection will prevent the data set from sending out serial data. This allows the data terminal to control the flow of the data set to it.

Clear to Send

The data set, too, needs to control the signal flow from the data terminal. The signal it uses is called Clear to Send, which is abbreviated CTS. The presence of the CTS signal in effect tells the data terminal that the coast is clear and the data terminal can blast data down the line. The absence of a CTS signal across the serial connection will prevent the data terminal from sending out serial data.
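The gating rules described so far for DSR, RTS, and CTS can be summarized in a few lines of Python. This is only a sketch of the logic as the text states it, with hypothetical names; a real port applies these rules in its hardware and driver.

```python
from dataclasses import dataclass


@dataclass
class ControlLines:
    """True means the line carries its positive (asserted) voltage."""
    dtr: bool = False  # Data Terminal Ready, driven by the data terminal
    dsr: bool = False  # Data Set Ready, driven by the data set
    rts: bool = False  # Request to Send, driven by the data terminal
    cts: bool = False  # Clear to Send, driven by the data set


def terminal_may_send(lines):
    """The data terminal holds its data unless it sees both DSR and CTS."""
    return lines.dsr and lines.cts


def data_set_may_send(lines):
    """The data set holds its data unless it sees RTS."""
    return lines.rts


if __name__ == "__main__":
    lines = ControlLines(dtr=True, dsr=True, rts=True, cts=False)
    print(terminal_may_send(lines), data_set_may_send(lines))
```

In this example the data set has dropped CTS, so the data terminal must wait even though the data set itself remains free to send.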

Carrier Detect

The serial interface standard shows its roots in the communication industry with the Carrier Detect signal, which is usually abbreviated CD. This signal gives a modem, the typical data set, a means of signaling to the data terminal that it has made a connection with a distant modem. The signal says that the modem or data set has detected the carrier wave of another modem on the telephone line. In effect, the carrier detect signal gets sent to the data terminal to tell it that communications are possible. In some systems, the data terminal must see the carrier detect signal before it will engage in data exchange. Other systems simply ignore this signal.

Ring Indicator

Sometimes a data terminal has to get ready to communicate even before the flow of information begins. For example, you might want to switch your communications program into answer mode so that it can deal with an incoming call. The designers of the serial port provided such an early warning in the Ring Indicator signal, which is usually abbreviated RI. When a modem serving as a data set detects ringing voltage—the low-frequency, high-voltage signal that makes telephone bells ring—on the telephone line to which it is connected, it activates the RI signal, which alerts the data terminal to what’s going on. Although useful in setting up modem communications, you can regard the ring indicator signal as optional because its absence usually will not prevent the flow of serial data.

Signal Ground

All the signals used in a serial port need a return path. The signal ground provides this return path. The single ground signal is the common return for all other signals on the serial interface. Its absence will prevent serial communications entirely.

Flow Control

Serial ports can use both hardware and software flow control. Hardware flow control involves the use of special control lines that can be (but don’t have to be) part of a serial connection. Your computer signals whether it is ready to accept more data by sending a signal down the appropriate wire. Software flow control involves the exchange of characters between computer and serial peripherals. One character tells the computer your peripheral is ready, and another warns that it can’t deal with more data. Both hardware and software flow control take more than one form. As a default, computer serial ports use hardware flow control (or hardware handshaking). Most serial peripherals do, too. In general, hardware flow control uses the Carrier Detect, Clear to Send, and Data Set Ready signals.

Software flow control requires your serial peripheral and computer to exchange characters or tokens to indicate whether they should transfer data. The serial peripheral normally sends out one character to indicate it can accept data and a different character to indicate that it is busy and cannot accommodate more. Two pairs of characters are often used: XON/XOFF and ETX/ACK.
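A small Python sketch shows how the sending side of an XON/XOFF exchange might behave. The character values are the standard ASCII control codes DC1 (0x11) for XON and DC3 (0x13) for XOFF; the class and its methods are hypothetical and stand in for whatever a real driver does.

```python
XON = 0x11   # ASCII DC1: the peripheral can accept data
XOFF = 0x13  # ASCII DC3: the peripheral is busy


class XonXoffSender:
    """Hold back queued bytes while the other end has sent XOFF."""

    def __init__(self, payload):
        self.pending = bytearray(payload)
        self.paused = False

    def on_control(self, byte):
        """Update the paused state from a received flow-control character."""
        if byte == XOFF:
            self.paused = True
        elif byte == XON:
            self.paused = False

    def send_next(self):
        """Return the next byte to transmit, or None if paused or empty."""
        if self.paused or not self.pending:
            return None
        return self.pending.pop(0)


if __name__ == "__main__":
    sender = XonXoffSender(b"HI")
    print(sender.send_next())   # first byte goes out
    sender.on_control(XOFF)
    print(sender.send_next())   # nothing is sent while paused
    sender.on_control(XON)
    print(sender.send_next())   # transmission resumes
```

Because the control characters travel in the data stream itself, this scheme needs only the three-wire connection, but it cannot be used as-is for binary data that might contain those two byte values.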

Cables

The design of the standard RS-232 serial interface anticipates that you will connect a data terminal to a data set. When you do, all the connections at one end of the cable that link them are carried through to the other end—pin for pin, connection for connection. The definitions of the signals at each end of the cable are the same, and the function and direction of travel (whether from data terminal to data set or the other way around) of each are well defined. Each signal goes straight through from one end to the other. Even the connectors are the same at either end. Consequently, a serial cable should be relatively easy to fabricate.

In the real world, nothing is so easy. Serial cables are usually much less complicated or much more complicated than this simple design. Unfortunately, if you plan to use a serial connection for a printer or plotter, you have to suffer through the more complex design.

Straight-Through Cables

Serial cables are often simpler than pin-for-pin connections from one end to the other because no serial link uses all 25 connector pins. Even with the complex handshaking schemes used by modems, only nine signals need to travel from the data terminal to the data set, computer to modem. (For signaling purposes, the two grounds are redundant; most serial cables do not connect the chassis ground.) Consequently, you need only make these nine connections to make virtually any data terminal–to–data set link work. Assuming you have a 25-pin D-shell connector at either end of your serial cable, the essential pins that must be connected are 2 through 8, 20, and 22. This is usually called an eight-wire serial cable because the connection to pin 7 uses the shield of the cable rather than a wire inside. With nine-pin connectors at either end of your serial cable, all nine connections are essential.

Not all systems use all the handshaking signals, so you can often get away with fewer connections in a serial cable. The minimal case is a system that uses software handshaking only. In that case, you need only three connections: Transmit Data, Receive Data, and the signal ground. In other words, you need only connect pins 2, 3, and 7 on a 25-pin connector or pins 2, 3, and 5 on a nine-pin serial connector (providing, of course, you have the same size connector at each end of the cable).
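The pin lists for straight-through cables can be generated rather than memorized. The following Python sketch assumes the commonly published pin assignments for the two connector sizes and uses hypothetical names throughout; it returns the pins that must be wired straight through for a given connector and style of flow control.

```python
# Commonly published pin assignments (assumption: confirm against the
# chapter's pin tables).
PINS = {
    25: {"TXD": 2, "RXD": 3, "RTS": 4, "CTS": 5, "DSR": 6,
         "GND": 7, "CD": 8, "DTR": 20, "RI": 22},
    9: {"CD": 1, "RXD": 2, "TXD": 3, "DTR": 4, "GND": 5,
        "DSR": 6, "RTS": 7, "CTS": 8, "RI": 9},
}

# Signals each style of cable must carry.
REQUIRED_SIGNALS = {
    "software": ["TXD", "RXD", "GND"],
    "hardware": ["TXD", "RXD", "RTS", "CTS", "DSR", "GND", "CD", "DTR", "RI"],
}


def straight_through_pins(connector_size, flow_control):
    """Return the sorted pins to connect pin for pin at both ends."""
    pins = PINS[connector_size]
    return sorted(pins[name] for name in REQUIRED_SIGNALS[flow_control])


if __name__ == "__main__":
    for size in (25, 9):
        for mode in ("software", "hardware"):
            print(size, mode, straight_through_pins(size, mode))
```

The function covers only cables with the same size connector at each end, which is the case the text describes.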