MPEG Systems

When the CD system was launched, following the commercial failure (in the United Kingdom, but not elsewhere) of the earlier laser-disc moving picture system, there was no form of compression of data used. The whole system was designed with a view to recording an hour of music on a disc of reasonable size, and the laser scanning system that was developed from the earlier “silver disc” was quite capable of achieving tight packing of data, sufficient for the needs of audio.

Data compression was, by that time, fairly well developed, but only for computer data, and by the start of the 1980s several systems were in use. Any form of compression for audio use had to be standardized so that it would be as universal as the compact cassette and the CD, and in 1987 the standardizing institutes started to work on a project known as EUREKA, with the aim of developing an algorithm (a procedure for manipulating data) for video and audio compression. The result became the standard known as ISO MPEG Audio Layer-3. The letters MPEG stand for Moving Picture Experts Group, because the main aim of the project was to find a way of tightly compressing digital data that could eventually allow a moving picture to be contained in a CD, even though the CD as used for audio was not of adequate capacity (see 21.9 DVD).

As far as audio signals are concerned, the standard CD system uses 16-bit samples that are recorded at a sampling rate of more than twice the actual audio bandwidth, typically 44.1 kHz. Without any compression, this requires about 10.6 Mbytes of data per minute of stereo playing time. The MPEG coding system for audio allows this to be compressed by a factor of 12, without losing perceptible sound quality. If a small reduction in quality is allowable, then factors of 24 or more can be used. Even with such high compression ratios the sound quality is still better than can be achieved by reducing either the sampling rate or the number of bits per sample. This is because MPEG operates by what are termed perceptual coding techniques, meaning that the system is based on how the human ear perceives sound.
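The arithmetic behind these figures can be checked with a few lines of Python. This is only an illustrative sketch: it assumes a two-channel (stereo) signal sampled at 44.1 kHz with 16 bits per sample, and the function names are invented for the example.

```python
# Uncompressed CD-style audio: assumed 44.1 kHz, 16-bit, two channels.
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2


def pcm_bit_rate_kbps(sample_rate_hz, bits_per_sample, channels):
    """Bit rate of uncompressed PCM audio in kbit/s."""
    return sample_rate_hz * bits_per_sample * channels / 1000


def pcm_bytes_per_minute(sample_rate_hz, bits_per_sample, channels):
    """Storage needed for one minute of uncompressed PCM audio, in bytes."""
    return sample_rate_hz * (bits_per_sample // 8) * channels * 60


raw_kbps = pcm_bit_rate_kbps(SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS)
raw_bytes = pcm_bytes_per_minute(SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS)
print(f"Uncompressed: {raw_kbps:.1f} kbit/s, {raw_bytes / 1e6:.2f} Mbytes per minute")

# Effect of the compression factors quoted in the text.
for factor in (12, 24):
    print(f"Factor {factor}: {raw_kbps / factor:.1f} kbit/s, "
          f"{raw_bytes / factor / 1e6:.2f} Mbytes per minute")
```

On these assumptions a compression factor of 12 corresponds to a bit rate of a little under 120 kbit/s, and a factor of 24 to roughly half of that.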

The MPEG-1 Layer III algorithm is based on removing data relating to sounds that the human ear cannot perceive. Taking away sounds that you cannot hear will greatly reduce the amount of data required, but the system is lossy, in the sense that the removed data cannot be reinstated. The compression systems used for computer programs, by contrast, cannot be lossy because every data bit is important; there is no unperceived data. Compressing other computer data, notably pictures, can be very lossy, so that the JPEG (Joint Photographic Experts Group) form of compression can achieve even higher compression ratios.

The two features of human hearing that MPEG exploits are its nonlinearity and the adaptive threshold of hearing. The threshold of hearing is defined as the level below which a sound is not heard. This is not a fixed level; it depends on the frequency of the sound and varies even more from one person to another. Maximum sensitivity occurs in the frequency range 2–5 kHz. Whether or not you hear a sound therefore depends on the frequency of the sound and the amplitude of the sound relative to the threshold level for that frequency.
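The way the threshold depends on frequency can be illustrated with a widely quoted empirical approximation (usually attributed to Terhardt) for the threshold of hearing in quiet. The formula is not taken from this chapter, and it describes an averaged listener with good hearing rather than any individual, so treat the following as a rough sketch; the function names are invented for the example.

```python
import math


def threshold_in_quiet_db_spl(freq_hz):
    """Approximate threshold of hearing in quiet (dB SPL) at a given frequency.

    Empirical curve fit for an averaged listener; individual thresholds vary.
    """
    f = freq_hz / 1000.0  # frequency in kHz
    return (3.64 * f ** -0.8
            - 6.5 * math.exp(-0.6 * (f - 3.3) ** 2)
            + 1e-3 * f ** 4)


def is_audible(freq_hz, level_db_spl):
    """True if a lone tone at this level lies above the quiet threshold."""
    return level_db_spl > threshold_in_quiet_db_spl(freq_hz)


# Locate the most sensitive frequency by scanning the audio band in 10 Hz steps.
most_sensitive_hz = min(range(20, 20_001, 10), key=threshold_in_quiet_db_spl)
print(f"Most sensitive near {most_sensitive_hz} Hz")

# The same 10 dB SPL tone is heard at 1 kHz but not at 100 Hz.
for freq in (100, 1000):
    print(freq, "Hz:", "audible" if is_audible(freq, 10.0) else "below threshold")
```

The minimum of this curve falls inside the 2–5 kHz range, and the example shows how one and the same sound level can be heard at one frequency but not at another.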

The threshold of hearing adapts to the sounds that are heard, so that the threshold increases greatly, for example, when loud noises accompany soft music. The louder sound masks the softer, and the term masking is used of this effect. Note that this is in direct contradiction of the “cocktail-party effect,” which postulates the ability of the ear to focus on a wanted sound in the presence of a louder unwanted sound.

The masking effect is particularly important in orchestral music recording. When a full orchestra plays fortissimo then the instruments that contribute least to the sound are, according to many sources, not heard. A CD recording will contain all of this information, even if a large part of it is redundant because it cannot be perceived. By recording only what can be perceived, the amount of music that can be recorded on a medium such as a CD is increased greatly, which can be done without any perceptible loss of audio quality.

Musicians will feel uneasy about this argument because they and many others feel that every instrument makes a contribution. Can you imagine what an orchestra would sound like if the softer instruments were not played in any fortissimo passage? Would it still be fortissimo? Would we end up with a brass band, without strings or woodwinds? My own view is that the masking theory is not applicable to live music, but it may well apply to sound that we hear through the restricted channels of loudspeakers. In addition, how will a compressed recording sound when compared to a version using HDCD technology?

MPEG coding starts with circuitry described as a perceptual subband audio encoder. The action of this section is to analyze the input audio signal continually and, from this information, prepare data (the masking curve) that define the threshold level below which nothing will be heard. The input is then divided into frequency bands, called subbands.

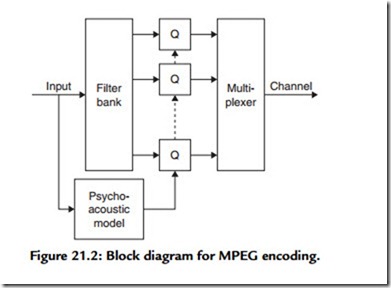

Each subband is quantized separately, controlling the quantization so that the quantization noise will be below the masking curve level for that subband. Data on the quantization used for a subband are held along with the coded audio for that subband so that the decoder can reverse the process. Figure 21.2 shows the block diagram for the encoding process.
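The idea of matching the quantization to the masking curve can be sketched as follows. This is a simplified illustration and not the allocation procedure laid down in the standard: it assumes a uniform quantizer whose noise falls by about 6 dB for each extra bit, the signal and masking levels are made-up figures, and the function names are hypothetical. A real encoder must also share out a fixed budget of bits among the subbands.

```python
import math

DB_PER_BIT = 6.02  # rule of thumb: each extra bit lowers quantization noise by ~6 dB


def bits_for_subband(signal_db, mask_db, max_bits=15):
    """Fewest bits that push quantization noise below the masking level.

    A subband whose signal is already at or below the mask needs no bits at all.
    """
    signal_to_mask_db = signal_db - mask_db
    if signal_to_mask_db <= 0:
        return 0
    return min(max_bits, math.ceil(signal_to_mask_db / DB_PER_BIT))


def allocate_bits(signal_levels_db, mask_levels_db):
    """Choose a word length for every subband independently."""
    return [bits_for_subband(s, m)
            for s, m in zip(signal_levels_db, mask_levels_db)]


# Hypothetical levels (dB) for four subbands.
signal_levels = [60.0, 55.0, 30.0, 20.0]
mask_levels = [20.0, 40.0, 35.0, 18.0]

allocation = allocate_bits(signal_levels, mask_levels)
print(allocation)  # [7, 3, 0, 1]
```

The third subband lies wholly below the masking curve and so is given no bits, which is where most of the saving comes from.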

Layers

MPEG-1, as applied to audio signals, can be used in three modes, called layers I, II, and III. An ascending layer number means more compression and more complex encoding.

Layer I is used in home recording systems and for solid-state audio (sound that has been recorded on chip memory, used for automated voices, etc.).

Layer II offers more compression than layer I and is used for digital audio broadcasting, television, telecommunications, and multimedia work. The bit rates that can be used range from 32 to 192 Kbit/s for mono and from 64 to 384 Kbit/s for stereo. The highest quality, approaching CD levels, is obtained using about 192–256 Kbit/s per stereo pair of channels. The precise figure depends on how complex an encoder is used. In general, the encoder is from two to four times more complex than the layer I encoder, but the decoder need be only about 25% more complex. MPEG layer II is used in applications such as CD-i full-motion video, video CD, solid-state audio, disc storage and editing, DAB, DVD, cable and satellite radio, cable and satellite TV, ISDN links, and film sound tracks.

Layer III offers even more compression and is used for the most demanding applications in narrow-band telecommunications and other specialized areas of professional audio work. It has found much more use as a compression system for MP3 files (see MP3).

MPEG-1 is intended to be flexible in use, so that a wide range of bit rates from 32 to 320 Kbit/s can be used, with a low sampling frequency (LSF) extension that allows bit rates down to 8 Kbit/s added later. Layer III allows the use of a variable bit rate, with the figure in the header taken as the average. Decoders for layers I and II need not support this feature, but most do.

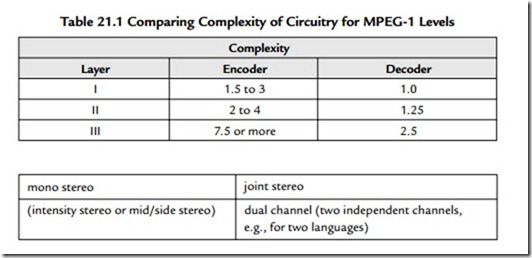

Table 21.1 shows the relative complexity of encoding and decoding for the three layers of MPEG-1. The encoding process is always more complex than the decoding process, and the complexity of the decoder increases less from layer to layer than that of the encoder.

MPEG-1 coding can be applied to mono or stereo signals, and the stereo system makes use of joint stereo coding, a system that achieves further compression by seeking out redundancy between the two channels of a stereo signal. The system supports four modes:

● Single channel (mono).

● Dual channel (two independent mono channels).

● Stereo.

● Joint stereo.

When the digital signal has been encoded, it is divided into blocks of 384 samples (layer I) or 1152 samples (layers II and III) to form the unit MPEG-1 frame. A complete MPEG-1 audio stream consists of a set of consecutive frames, with each frame consisting of a header and encoded sound data. The header of a frame contains general information such as the MPEG layer, the sampling frequency, the number of channels, whether the frame is CRC protected, whether the sound is an original, and so on. Each audio frame uses a separate header so as to simplify synchronization and bit stream editing, even if much of the information is repeated and hence redundant. A layer III frame can achieve further compression by distributing its encoded sound data over several other consecutive frames if those frames do not require all of their bits.
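Some of the header fields mentioned above can be picked out of the 4-byte frame header with a short routine. The following is a minimal sketch that assumes the usual published bit layout of the MPEG-1 audio header; it ignores the bit-rate index, padding, and several other fields, and the class and function names are invented for the example.

```python
from dataclasses import dataclass

# Field codes, assuming the usual MPEG-1 audio header layout.
LAYERS = {0b11: "I", 0b10: "II", 0b01: "III"}
SAMPLE_RATES_HZ = {0b00: 44_100, 0b01: 48_000, 0b10: 32_000}
MODES = {0b00: "stereo", 0b01: "joint stereo",
         0b10: "dual channel", 0b11: "single channel"}
SAMPLES_PER_FRAME = {"I": 384, "II": 1152, "III": 1152}


@dataclass
class FrameHeader:
    layer: str
    crc_protected: bool
    sample_rate_hz: int
    mode: str
    original: bool
    samples_per_frame: int

    @property
    def frame_duration_ms(self):
        """Playing time represented by one frame, in milliseconds."""
        return 1000.0 * self.samples_per_frame / self.sample_rate_hz


def parse_header(header_bytes):
    """Decode selected fields of a 4-byte MPEG-1 audio frame header."""
    if len(header_bytes) != 4:
        raise ValueError("a frame header is exactly 4 bytes long")
    word = int.from_bytes(header_bytes, "big")
    if word >> 21 != 0x7FF:                # 11 sync bits, all set
        raise ValueError("sync pattern not found")
    if (word >> 19) & 0b11 != 0b11:        # version field: MPEG-1 only
        raise ValueError("not an MPEG-1 header")
    layer_bits = (word >> 17) & 0b11
    rate_bits = (word >> 10) & 0b11
    if layer_bits not in LAYERS or rate_bits not in SAMPLE_RATES_HZ:
        raise ValueError("reserved field value")
    layer = LAYERS[layer_bits]
    return FrameHeader(
        layer=layer,
        crc_protected=((word >> 16) & 1) == 0,  # bit cleared means a CRC follows
        sample_rate_hz=SAMPLE_RATES_HZ[rate_bits],
        mode=MODES[(word >> 6) & 0b11],
        original=bool((word >> 2) & 1),
        samples_per_frame=SAMPLES_PER_FRAME[layer],
    )


# A header of the kind often seen at the start of an MP3 frame.
header = parse_header(bytes.fromhex("fffb9064"))
print(header)
print(f"{header.frame_duration_ms:.2f} ms per frame")
```

For a layer III frame at 44.1 kHz the 1152 samples amount to about 26 ms of sound, which is why a stream can be cut or resynchronized at frame boundaries with little audible disturbance.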

One important point about all digital audio systems is that the analogue concept of S/N ratio is no longer relevant, and so far no replacement has been found. If we try to measure S/N in any of the ways that work perfectly well for analogue signals, the results are widely variable and have no correspondence with the signal as heard by the listener.

● Note that the MUSICAM algorithm is no longer used; it was developed into MPEG-1 Audio Layers I and II. The name MUSICAM is a trademark used by several companies.

● MPEG-1 is one of several (seven at the last count) MPEG standards, and we seem to be in danger of being buried under the weight of standards at a time when development is so rapid that each standard becomes out of date almost as soon as it has been adopted. Think, for example, how soon NICAM has been upstaged by digital TV sound.