Implementation methods

In general, building a real-time control system involves two stages. The first stage is the design of the control laws or models. The second stage is the implementation of the system in hardware and software. Most modern control systems are implemented digitally, so this section focuses on the digital implementation of real-time control systems.

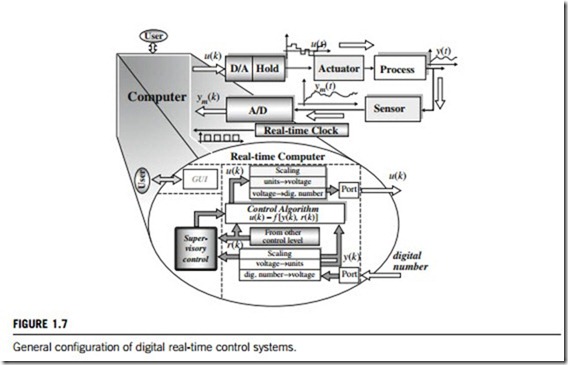

Figure 1.7 shows the general form of a digital real-time control system, in which the Computer block may also be a digital controller; u(k) and u(t) denote the digital and analog control signals, r(k) and r(t) the digital and analog reference signals, and y(k) and y(t) the digital and analog process outputs, respectively. As Figure 1.7 shows, the digital control system contains not only wired components but also algorithms that must be programmed into software. Most control laws applied in digital control systems can be represented by the general equation:

$$P(q^{-1})\,u(k) = T(q^{-1})\,r(k) - Q(q^{-1})\,y(k) \qquad (1.1)$$

where P, T, and Q are polynomials.
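Equation (1.1) can be evaluated once per sample by solving it for u(k), where q^{-1} is the backward-shift operator (q^{-1} y(k) = y(k-1)). The following Python sketch assumes that each polynomial is given as a coefficient list [c0, c1, c2, ...] in powers of q^{-1} and that the leading coefficient of P is non-zero; the class `PolynomialController` and its `step` method are hypothetical names used only for illustration.

```python
def _shift_in(buf, value):
    """Insert the newest sample at index 0 and drop the oldest one."""
    buf.insert(0, value)
    buf.pop()


class PolynomialController:
    """Evaluates P(q^-1) u(k) = T(q^-1) r(k) - Q(q^-1) y(k) sample by sample.

    Coefficient lists are [c0, c1, ...] for c0 + c1 q^-1 + c2 q^-2 + ...
    (a hypothetical interface, not taken from any particular library).
    """

    def __init__(self, p, t, q):
        if p[0] == 0:
            raise ValueError("p[0] must be non-zero to solve for u(k)")
        self.p, self.t, self.q = list(p), list(t), list(q)
        # Sample histories, most recent first: x(k), x(k-1), x(k-2), ...
        self.r = [0.0] * len(t)
        self.y = [0.0] * len(q)
        self.u = [0.0] * len(p)

    def step(self, r_k, y_k):
        """Return u(k) given the new reference r(k) and measurement y(k)."""
        _shift_in(self.r, r_k)
        _shift_in(self.y, y_k)
        _shift_in(self.u, 0.0)  # placeholder for u(k), filled in below
        acc = sum(c * v for c, v in zip(self.t, self.r))
        acc -= sum(c * v for c, v in zip(self.q, self.y))
        # Move the past control terms p1 u(k-1) + p2 u(k-2) + ... to the right side.
        acc -= sum(c * v for c, v in zip(self.p[1:], self.u[1:]))
        u_k = acc / self.p[0]
        self.u[0] = u_k
        return u_k
```

With P = 1 - q^{-1} and T = Q = 2.5 - 2q^{-1}, the sketch reduces to an incremental PI law with proportional gain 2 and integral gain 0.5 per sample; for a constant error of 1 it produces 2.5, 3.0, 3.5, and so on.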

In real-time implementation, timing is the most important issue. In practice, delays occur in both the signal conversion hardware and the microprocessor hardware. If the delay has a fixed duration, Equation (1.1) can often be modified to compensate for it. Unfortunately, the delays in a digital control system are not usually fixed. The following are two examples of this problem. First, some microprocessors, especially those with a complex instruction-set architecture such as the Pentium, can take a varying number of CPU cycles to execute the same code, which produces delays of non-fixed duration. Second, modern microprocessors serve interrupts according to the priority of each individual interrupt. If the CPU is serving a lower-priority interrupt and a higher-priority interrupt arrives, the CPU must stop and immediately switch to serving the new interrupt. This also results in a non-fixed delay.

At the implementation stage, all tasks should be scheduled to run on the available microprocessors and hardware resources. The sampling time must be chosen within the limited computation time provided by the system hardware. Thus, the computation time delay (control latency) τ is always in conflict with the sampling time Ts. Depending on the magnitude of τ relative to Ts, the conflict is either a delay problem (0 < τ < Ts) or a loss problem (τ ≥ Ts). Because the control latency varies over time, delay and loss can occur alternately in the same system at different times. Denote the control signal at time k by u(k). When the controller fails to update its output during a sampling interval, the control signal is lost and the previous value u(k-1) is applied again. Because this can occur randomly at any time, the failure to deliver a control signal can be treated as a correlated random disturbance Δu(k) at the input of the control system.
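The hold-last-value behavior described above can be simulated in a few lines. This Python sketch assumes a list of per-sample latencies τ(k), treats τ(k) ≥ Ts as a lost update, and models the input disturbance as Δu(k) = applied value minus intended value; that definition, the zero initial actuator value, and the function name `apply_with_loss` are illustrative assumptions. The sub-period delay in the 0 < τ < Ts case is not modeled.

```python
def apply_with_loss(computed, latencies, ts):
    """Return (applied, disturbance) under a hold-last-value policy.

    computed[k]  : control value the controller intended for sample k
    latencies[k] : computation latency tau(k) of sample k
    ts           : sampling period Ts
    If tau(k) >= Ts the update is lost and u(k-1) is applied again.
    """
    applied, disturbance = [], []
    last = 0.0  # assumed initial actuator value
    for u_k, tau in zip(computed, latencies):
        if tau < ts:
            last = u_k  # delay case: the new value arrives within the period
        # loss case: 'last' still holds u(k-1)
        applied.append(last)
        # Assumed disturbance model: difference between applied and intended input.
        disturbance.append(last - u_k)
    return applied, disturbance
```

For example, with intended values 1, 2, 3, 4, latencies 0.2, 1.5, 0.3, 1.0 and Ts = 1.0, the second and fourth updates are lost, so the actuator receives 1, 1, 3, 3.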

In real-time control systems, there is a close link between control performance and multitasking scheduling. Hence, the control system design has to be re-evaluated to introduce real-time considerations, which could modify the selected task periods, attributes, etc., based on the control performance.

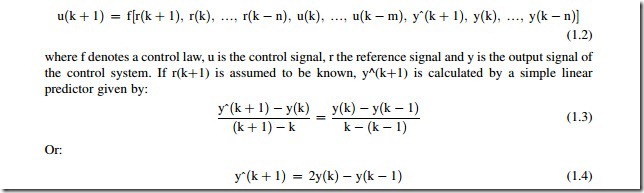

The following is an example of a one-step-ahead predictive controller. Suppose that, in order to deliver the control signal u(k) as soon as possible, a one-step-ahead predictive controller is used in the form:

done, the remaining operations are: reading y(k) from the analog-to-digital converter, calculating ŷ(k+1), and then calculating u(k+1). In this example, there is another approach, based on implementing two periodic tasks. The first task calculates the control signal directly after reading and conditioning y(k), and the second task updates the states after the control signal value is delivered.
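The two-task approach above is often realized by splitting the control algorithm into a "calculate output" part and an "update state" part. The Python sketch below assumes a first-order controller in state-space form, x(k+1) = a·x(k) + b·e(k) and u(k) = c·x(k) + d·e(k) with e(k) = r(k) - y(k); the coefficients, `SplitController`, and the `read_adc`/`write_dac` callables are hypothetical stand-ins for a real controller and real converter drivers.

```python
class SplitController:
    """First-order controller split into output and state-update phases.

    x(k+1) = a*x(k) + b*e(k),  u(k) = c*x(k) + d*e(k),  e(k) = r(k) - y(k).
    The structure and coefficients are illustrative assumptions.
    """

    def __init__(self, a, b, c, d):
        self.a, self.b, self.c, self.d = a, b, c, d
        self.x = 0.0   # controller state x(k)
        self._e = 0.0  # error of the current sample, kept for the update phase

    def calculate_output(self, r_k, y_k):
        """Minimal work between reading y(k) and delivering u(k)."""
        self._e = r_k - y_k
        return self.c * self.x + self.d * self._e

    def update_state(self):
        """Runs after u(k) has been written; prepares x(k+1)."""
        self.x = self.a * self.x + self.b * self._e


def control_step(ctrl, r_k, read_adc, write_dac):
    """One sampling period; read_adc/write_dac are hypothetical driver calls."""
    y_k = read_adc()
    write_dac(ctrl.calculate_output(r_k, y_k))  # deliver u(k) as soon as possible
    ctrl.update_state()                         # remaining work after the output
```

Only the output equation is computed between reading y(k) and delivering u(k); the state update runs afterwards, so in an RTOS it could be placed in a separate, less urgent periodic task.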

(1) Hardware devices

There are a variety of well-designed real-time hardware platforms in the industrial control market for implementing real-time control systems. The following are some generic examples.

(a) Microprocessor chipset

A microprocessor chipset consists of several specially designed integrated circuits that incorporate a digital signal processing function and a data acquisition function. There are two types of microprocessor chipsets available for developing real-time systems. The first is the generic microprocessor, which is either a multipurpose digital signal processing device or another general-purpose microprocessor. The second is the embedded microprocessor, which can be incorporated into products such as cars, washing machines, and industrial equipment to play a real-time digital signal processing role. A microprocessor chipset can include a CPU, memory, internal address and data bus circuits, registers, peripheral interfaces, a BIOS (basic input/output system), an instruction set, a timer clock, and so on.

Microprocessors are characterized by the following technical parameters: bandwidth (word length), the number of bits processed in a single instruction (16, 32, or 64 bits nowadays); clock speed, the number of clock cycles per second, given in megahertz (MHz); memory volume, the storage capacity of the microprocessor memory, given in megabytes (MB); and instruction set, the built-in machine-language instructions that define the basic operations of the microprocessor.
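Clock speed links a routine's cycle count to its execution time, t = N_cycles / f_clk, which is what the choice of sampling time ultimately depends on. A small Python sketch, assuming the worst-case cycle count of the control routine is already known (for example from measurement or the processor's timing documentation); the function names and the 50 % utilization margin are illustrative assumptions, not a standard rule.

```python
def execution_time_s(cycles, clock_hz):
    """Time needed for a given number of clock cycles at a clock frequency."""
    return cycles / clock_hz


def fits_in_period(worst_case_cycles, clock_hz, ts, margin=0.5):
    """True if the worst-case execution time uses at most `margin` of Ts.

    The default 50 % margin is an assumption chosen only for illustration.
    """
    return execution_time_s(worst_case_cycles, clock_hz) <= margin * ts
```

For example, 200,000 cycles at 100 MHz take 2 ms, which fits comfortably within half of a 10 ms sampling period but not within half of a 3 ms period.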

Several large corporations make such microprocessor chipsets: Intel, AMD, Motorola, and IBM are examples of companies that have produced microprocessors for different applications for many years.

(b) Single-board computers

Single-board computers are complete computers with all components built onto a single board. Most single-board computers perform all the functions of a standard PC, but all of their components (single or dual microprocessor, read-only memory (ROM), and input/output interfaces) must be configured on a single board.

Single-board computers are made in two distinct architectures: slot-supported and no-slot. Slot-supported single-board computers contain one to several slots, through which one or more system buses connect to a slot processor board or a multiple-slot backplane. There can be up to 20 slots on one backplane. This slot-supported architecture makes rapid system repair or upgrade by board substitution possible and gives considerable scope for system expansion. Most single-board computers are made in a rack-mount format with full-size plug-in cards and are intended to be used in a backplane. Selection of single-board computers should be application-oriented: they can be used either for industrial process control or in a plug-in system backplane as an I/O interface.

In addition to big manufacturers such as Intel and AMD, many companies around the world make different types of single-board computers. These companies have produced many products based on standards such as CompactPCI, PCI, PXI, VXI, and PICMG. These single-board computers are characterized by their components: CPU, chipset, timer, cache, BIOS, system memory, system buses, I/O data ports, interfaces, and slots, which are generally standard PCI card slots.

(c) Industrial computers

In addition to single-board computers, other types of industrial computers are used in implementing real-time control systems.

A desktop PC can be used for real-time control. However, because standard desktop operating systems do not use hard real-time semantics, such systems cannot be used for hard real-time control. Desktop PCs can instead serve as supervision and surveillance tools in real-time control systems. A typical example is SCADA systems, in which desktop PCs are used as supervisory and surveillance stations.

To work as real-time computers, all industrial computers need real-time embedded software and compatible hardware. Converting an industrial computer into a real-time computer is not always a simple project, so single-board computers are popular in real-time control systems because of their flexibility.

(d) Real-time controllers

Real-time digital controllers are special-purpose industrial devices used to implement real-time control systems. There are two types. The first, called real-time controllers, are specially made industrial computers adapted for industrial process and production control. The second are purpose-built digital controllers, such as PLC, CNC, PID, and fuzzy-logic controllers, which are embedded with an RTOS in their own microprocessor units. The first type has been covered in the discussion of hardware above. The second type will be discussed in Part 4 of this textbook.

(2) Real-time programming

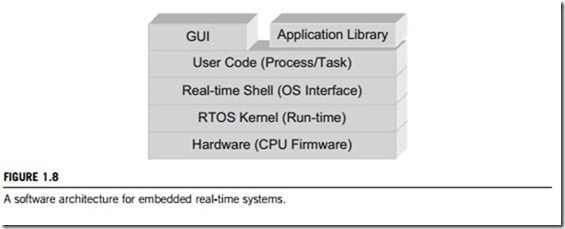

Embedded software architecture is a package of software modules for building control systems that are embedded within real-time devices. In this textbook, section 1.2.3 and Figure 1.4 discuss the software of embedded control systems in detail. In reality, most real-time control systems are embedded systems in which real-time control operations are required. It may seem that replacing the operating system with an RTOS would turn the software into real-time control software, but this is not the case in industrial control applications.

An RTOS only works in a limited number of control systems, because the interface between devices and control operations is specific to the given task, and this organization is not always compatible with an RTOS. RTOS kernels usually offer little portability: they may provide device drivers, but only within a fixed organization. Some parameterized RTOS kernels attempt to improve portability by dividing the kernel into separate scheduling and communication layers. The communication layer abstracts the architecture-specific I/O facilities, and the drivers for standard protocols are written once for each system and stored in a library. The scheduler is pre-emptive, priority-driven, and task-based. Whilst this is adequate for data-dominated applications with regular behavior, this setup cannot handle the complex timing constraints often found in control-dominated systems. Instead of tuning code and delays, designers tune the priority assignments, mostly by trial and error. To overcome the limitations of process-based scheduling, schedulers that focus on observable interrupts have been proposed. Real-time control can be enhanced by performing dependency analysis and applying code motion when the system is over-constrained by code-based constraints. Frame scheduling handles more general types of constraints by verifying that constraints are met in the presence of interrupts.

Figure 1.8 shows the software architecture of an embedded real-time control system. Compared with Figure 1.4, it adds one more layer, the Real-Time Shell, while the user interface layer, process handle layer, component control layer, and device driver/interface layer shown in Figure 1.4 are integrated into the user code layer of Figure 1.8.

Real-time systems should perform tasks in response to input interrupts. An interrupt can be a user request, an external event, or an internal event. Real-time systems detect an interrupt through an interrupt mechanism rather than by polling. In embedded systems at run-time, a given interrupt may trigger different reactions, depending on the internal state of the system. We use the term “mode” to describe this aspect of the software architecture. A mode is a function that maps a physical interrupt and its associated values onto a logical event; the system assigns each logical event a corresponding handler defined by the user. Modes thus allow a given physical interrupt to take on different logical meanings, triggering an entirely different response or even being ignored entirely.
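The mode concept above can be pictured as a lookup from physical interrupt to handler that differs from one mode to the next. In this Python sketch the class `Mode`, the interrupt names (`BUTTON`, `TIMER`), and the logical events are hypothetical; an interrupt missing from a mode's mapping is simply ignored in that mode.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[int], str]


class Mode:
    """Maps a physical interrupt (and its value) onto a logical event.

    Interrupts that are absent from the mapping are ignored in this mode.
    """

    def __init__(self, name: str, mapping: Dict[str, Handler]):
        self.name = name
        self.mapping = mapping

    def dispatch(self, interrupt: str, value: int) -> Optional[str]:
        handler = self.mapping.get(interrupt)
        if handler is None:
            return None  # this interrupt has no meaning in the current mode
        return handler(value)


# The same physical BUTTON interrupt means "start" in one mode and "stop" in
# another; TIMER is ignored while idle (all names are illustrative).
idle = Mode("idle", {"BUTTON": lambda v: "start_motor"})
running = Mode("running", {
    "BUTTON": lambda v: "stop_motor",
    "TIMER": lambda v: f"sample_at_{v}",
})
```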

Each handler may also instruct the system to change mode. For example, if the mode waits on a timer interrupt, a self-transition effectively reinitializes the delay, whereas if an interrupt is handled without a transition, the delay continues. Sequencing is a simple replacement of the current mapping with that of the target mode. This mapping can be combined with additional (trigger, response) entries.

A fork is a way of composing modes to form hierarchical modes, because it combines the mapping functions of the submodes. Each submode can make its own transitions independently of the other submodes. Different submodes may also transition to a joining mode, which does not become effective until all of its predecessors have completed their transitions to it. A join is the inverse of a fork. Finally, a disable also kills the other forked branches.
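The four transition operators (sequencing, fork, join, disable) can be sketched as operations on a set of concurrent branches, each holding an interrupt-to-handler mapping. This Python sketch is an assumption-laden illustration: the class `ModeSet`, the rule that a later branch wins on conflicting entries, and the choice that a branch waiting at a join responds to no interrupts are all hypothetical design decisions.

```python
class ModeSet:
    """Concurrent branches of a hierarchical mode (hypothetical sketch).

    Each branch holds a mapping {interrupt: handler_name}; the effective
    mapping of the composed mode is the union of all branch mappings.
    """

    def __init__(self, branches):
        self.branches = {name: dict(m) for name, m in branches.items()}
        self.join_waiting = {}  # join mode -> branches that have not arrived yet

    def mapping(self):
        combined = {}
        for m in self.branches.values():
            combined.update(m)  # assumption: on a conflict the later branch wins
        return combined

    def sequence(self, branch, target_mapping):
        """Sequencing: replace a branch's mapping with that of the target mode."""
        self.branches[branch] = dict(target_mapping)

    def fork(self, branch, submodes):
        """Fork: replace one branch by several independent submodes."""
        self.branches.pop(branch, None)
        for name, m in submodes.items():
            self.branches[name] = dict(m)

    def join(self, branch, target, predecessors, target_mapping):
        """Join: `target` becomes effective only after all predecessors arrive.

        Returns True once the join mode has become effective.
        """
        waiting = self.join_waiting.setdefault(target, set(predecessors))
        waiting.discard(branch)
        self.branches.pop(branch, None)  # the arrived branch waits, insensitive
        if waiting:
            return False
        del self.join_waiting[target]
        self.branches[target] = dict(target_mapping)
        return True

    def disable(self, keep, target_mapping):
        """Disable: kill all other forked branches; `keep` continues in the target."""
        self.branches = {keep: dict(target_mapping)}
        self.join_waiting.clear()
```

For example, forking a main mode into branches A and B makes both branches' interrupts effective at once; the join mode only takes over after both A and B have transitioned to it.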

The real-time shell layer in Figure 1.8 lies between the user code and the RTOS kernel. This additional layer separates the responsibilities of the upper and lower layers. The real-time shell is responsible for driving devices and I/O interfaces, handling interrupts, managing modes, and coordinating timing. This layer should therefore contain the relevant program modules.

(a) Mode manager

The mode manager performs two distinct functions: mapping physical interrupts to interrupt handlers for dispatch, and changing modes. An interrupt can be reported by either the upper or the lower layer. When the mode manager detects an interrupt, it first checks whether the interrupt is worth handling. If it is, the manager changes the current mode to the one specific to handling this interrupt and then maps the interrupt to the corresponding interrupt handler. The interrupt handler then triggers a task that handles the interrupt.

The interrupt handler also receives output requests for scheduling. It instructs the real-time task engine to disable the interrupts of the exiting mode and to enable the interrupts of the new mode. After the handler completes execution, if there is a mode transition to perform, the mode manager reconfigures the interrupt handler to reflect the current sensitivity list. To construct the new mapping, the mode manager applies one of the transition operators: sequencing, fork, join, or disable.
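The dispatch-then-transition cycle of the mode manager can be sketched as follows, assuming (hypothetically) that each mode is a dictionary from interrupt names to handler functions and that a handler returns the name of the next mode, or None to stay. The set `enabled` stands in for the sensitivity list; actually enabling and disabling hardware interrupts is outside the scope of this Python sketch, and the class name `ModeManager` is illustrative.

```python
class ModeManager:
    """Dispatches interrupts for the current mode and performs mode changes.

    modes: {mode_name: {interrupt: handler}}; a handler receives the
    interrupt's value and returns the next mode's name, or None to stay.
    """

    def __init__(self, modes, initial):
        self.modes = modes
        self.current = initial
        self.enabled = set(modes[initial])  # current sensitivity list

    def on_interrupt(self, interrupt, value=None):
        """Return True if the interrupt was handled, False if it was ignored."""
        if interrupt not in self.enabled:   # not worth handling in this mode
            return False
        next_mode = self.modes[self.current][interrupt](value)
        if next_mode is not None and next_mode != self.current:
            # Disable the exiting mode's interrupts and enable the new mode's.
            self.enabled = set(self.modes[next_mode])
            self.current = next_mode
        return True
```

A handler that returns None leaves the sensitivity list unchanged, so only handlers that request a transition cause the manager to rebuild it.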

(b) Interrupt handler

Interrupt handling is a key function in real-time software and comprises interrupts and their handlers. Only physical interrupts of sufficiently high priority can be entered into the system interrupt table. The software assigns each interrupt to a handler in the interrupt table. An interrupt handler is simply a routine containing a sequence of operations, each of which may request input and output while running.

The routine for handling a specific interrupt is known as the interrupt service routine (ISR) for that interrupt. The RTOS layer often stores the list of pairs of interrupts and their handlers, known as the interrupt table. All interrupt handlers run constantly within the background process. Most RTOS kernels provide tasks that can be triggered and terminated at run-time by context switching. In object-oriented programming languages (such as C++ and Java), tasks can be run as one or more threads. Thus, an interrupt can be handled either as a thread or as a sub-process within a task or process.
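A minimal Python sketch of an interrupt table as described above: it pairs interrupt identifiers with their service routines and only admits interrupts at or above a priority threshold. The class `InterruptTable`, the numeric interrupt IDs, and the convention that a larger number means higher priority are assumptions made for illustration.

```python
class InterruptTable:
    """Pairs of (interrupt, service routine), admitting only high-priority ones.

    Assumed convention: a larger number means a higher priority.
    """

    def __init__(self, min_priority):
        self.min_priority = min_priority
        self._table = {}  # interrupt id -> (priority, service routine)

    def register(self, irq, priority, isr):
        """Enter an interrupt into the table; return False if it is rejected."""
        if priority < self.min_priority:
            return False  # not important enough to enter the table
        self._table[irq] = (priority, isr)
        return True

    def service(self, irq, *args):
        """Run the service routine registered for `irq`."""
        entry = self._table.get(irq)
        if entry is None:
            raise KeyError(f"no service routine for interrupt {irq}")
        return entry[1](*args)
```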

(c) Real-time task engine

Interrupts from different device drivers are assigned different priorities.

There are two object queues held in the RTOS shell program: the interrupt calendar queue and the task queue. The interrupt calendar queue is ordered by interrupt priority and, within the same priority, on a first-come first-served basis. The task queue is a key object in the RTOS shell. It is held as a list or table structure and holds the following types of tasks: main task, background task, current task, and other tasks. In the task queue, tasks are likewise ordered by priority and then on a first-come first-served basis.

While a task is running, interrupts may arrive. The interrupt handler first checks whether an arriving interrupt is valid. If it is, the interrupt handler puts it into the interrupt calendar queue. The interrupt handler then takes the interrupt at the head of the interrupt calendar queue and looks up the corresponding service routine in the interrupt table. At this point, the dispatcher uses the RTOS kernel context switch to stop the current task and save all its data in memory (RAM). Control then transfers to the scheduler, which selects a task from the task queue to run the interrupt service routine. When a task finishes a complete run, the dispatcher takes over and transfers control to the scheduler to get the next task from the task queue.
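The queue discipline described above (priority first, then first-come first-served) can be sketched with Python's `heapq` module, using an arrival counter to break ties between equal priorities. In this simplified model, which is an assumption rather than the design of any particular RTOS, a lower number means a higher priority, context switching and pre-emption are not simulated, and each service routine runs to completion. `PriorityFifoQueue` and `TaskEngine` are hypothetical names.

```python
import heapq
import itertools


class PriorityFifoQueue:
    """Ordered by priority (lower number first), then by arrival order."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # arrival counter breaks priority ties

    def put(self, priority, item):
        heapq.heappush(self._heap, (priority, next(self._seq), item))

    def get(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


class TaskEngine:
    def __init__(self, interrupt_table):
        self.interrupt_table = interrupt_table  # irq -> service routine
        self.calendar = PriorityFifoQueue()     # interrupt calendar queue
        self.tasks = PriorityFifoQueue()        # task queue

    def on_interrupt(self, irq, priority):
        if irq not in self.interrupt_table:     # invalid interrupt: ignore it
            return
        self.calendar.put(priority, (priority, irq))

    def dispatch(self):
        """Turn pending interrupts into tasks, then run tasks in queue order."""
        while self.calendar:
            priority, irq = self.calendar.get()
            self.tasks.put(priority, self.interrupt_table[irq])
        results = []
        while self.tasks:
            results.append(self.tasks.get()())
        return results
```

For example, if UART (priority 2), ADC (priority 1), and KEY (priority 2) interrupts arrive in that order, the routines run in the order ADC, UART, KEY.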

There are several ways to approach software for real-time control systems. The hardware-layer code includes the machine instruction set hard-coded in the CPU firmware. A range of RTOS kernels, or nuclei, is available, often written in a combination of assembly language and C; the choice is usually based on the microprocessor used. User code then needs to be developed for the real-time control system. Object-oriented programming languages such as C++ and Java, as well as Ada, are the languages most often used for this purpose.

Code development for a given real-time control system is a complex job. The process usually iterates the following steps: program design, including class, sequence, and use case design; coding the program in accordance with the design; performing simulation tests; modifying the design; recoding the program according to the modified design; and repeating until a satisfactory result is obtained.

(3) Real-time verification and tests

Mistakes are often made when programming real-time systems. Many of these errors are seldom visible during the debugging phase and may only appear when the application is running, when it is no longer possible to correct them. Thorough debugging is therefore necessary before the software is delivered.

(a) Program bugs

A program bug is a flaw, fault, or error that prevents the program from executing as intended. Most are the results of mistakes and errors made by programmers in either source code or program design, and a few are caused by compilers producing incorrect code.

Control switching statements (if-then-else or case-switch statements) occur often in program code. These statements create execution paths of different lengths, so the code has varying execution times; the effect becomes more significant when one path is very long. Variable functions, state machines, and lookup tables should be used instead of these kinds of statements.
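The following Python sketch shows two of the suggested replacements: a uniform lookup table with linear interpolation, which performs the same operations for every input instead of walking an if-else chain of ranges, and a state machine driven by a transition table. The table values, states, and events are hypothetical. Python itself gives no timing guarantees; the point is the branch-free structure, which carries over to C on a real target.

```python
# Gain table sampled at uniform steps of a scheduling variable x
# (illustrative values at x = 0, 1, 2, 3, 4).
GAIN_TABLE = [0.0, 0.5, 1.0, 1.8, 2.0]
STEP = 1.0


def gain_lookup(x):
    """Linear interpolation in a uniform table: same work for every x."""
    x = min(max(x, 0.0), STEP * (len(GAIN_TABLE) - 1))  # clamp to table range
    i = min(int(x / STEP), len(GAIN_TABLE) - 2)         # segment index
    frac = x / STEP - i
    return GAIN_TABLE[i] + frac * (GAIN_TABLE[i + 1] - GAIN_TABLE[i])


# State machine as a transition table instead of nested if/else statements.
TRANSITIONS = {
    ("idle", "start"): "running",
    ("running", "stop"): "idle",
    ("running", "fault"): "error",
    ("error", "reset"): "idle",
}


def next_state(state, event):
    """Look up the next state; an unknown (state, event) pair keeps the state."""
    return TRANSITIONS.get((state, event), state)
```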

Some programs consist of a single big loop, such as a for or do-while loop, so the complete unit always executes at the same rate. To give each activity its own appropriate rate, concurrent techniques for a pre-emptive RTOS should be used.

Some code uses message passing as the primary intertask communication mechanism. In real-time programming, message passing reduces real-time schedulability (the maximum CPU utilization of a set of tasks up to which all tasks are guaranteed to meet their deadlines) and produces significant overheads, leading to many aperiodic instead of periodic tasks. Moreover, deadlocks can appear in closed-loop systems, which could cause execution to fail. Shared memory and proper synchronization mechanisms should be used to prevent deadlocks, and priority inheritance should be used to avoid priority inversion.
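The schedulable utilization mentioned above can be made concrete with the classic Liu and Layland bound for independent periodic tasks under rate-monotonic priorities: a task set is guaranteed schedulable if U = Σ C_i/T_i ≤ n(2^{1/n} - 1), a bound that tends to ln 2 ≈ 0.693 as n grows. The test is sufficient but not necessary. In the Python sketch below, the task sets and the extra 0.5 time units of per-task communication overhead are hypothetical numbers chosen only to show how overhead can push a task set past the bound.

```python
def utilization(tasks):
    """tasks: list of (execution_time C, period T) pairs."""
    return sum(c / t for c, t in tasks)


def rm_bound(n):
    """Liu-Layland utilization bound for n periodic rate-monotonic tasks."""
    return n * (2 ** (1 / n) - 1)


def rm_schedulable_by_bound(tasks):
    """Sufficient (not necessary) test: True guarantees all deadlines are met."""
    return utilization(tasks) <= rm_bound(len(tasks))


tasks = [(1.0, 4.0), (1.0, 5.0), (2.0, 10.0)]                 # U = 0.65
with_overhead = [(c + 0.5, t) for c, t in tasks]              # U = 0.925
```

With three tasks the bound is about 0.780, so the original set passes, while the set with added overhead fails the sufficient test and would need an exact analysis.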

(b) Incorrect timing

Many RTOS kernels provide a program delay, running, or waiting mechanism for hardware operations, such as I/O data transfer and CPU cleaning of memory partitions. These are often implemented as empty or dummy loops, which can lead to non-optimal delays. The RTOS timing mechanisms should be used instead to implement exact time delays.
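A common way to obtain exact periodic timing is to sleep until an absolute release time rather than for a fixed interval after the work finishes, so execution time does not accumulate as drift. POSIX systems, for example, offer `clock_nanosleep` with the `TIMER_ABSTIME` flag for this. The Python sketch below shows the same idea with `time.monotonic` and `time.sleep`; on a general-purpose OS `time.sleep` is not precise, and the injectable `clock`/`sleep` parameters are an assumption added so that the logic can be checked with a simulated clock.

```python
import time


def run_periodic(body, period_s, iterations, clock=time.monotonic, sleep=time.sleep):
    """Run body() every period_s seconds using absolute release times.

    Sleeping until next_release (instead of sleeping period_s after body()
    returns) keeps the release times on a fixed grid. Returns those times.
    """
    releases = []
    next_release = clock()
    for _ in range(iterations):
        releases.append(next_release)
        body()
        next_release += period_s
        remaining = next_release - clock()
        if remaining > 0:  # on an overrun, start the next cycle immediately
            sleep(remaining)
    return releases
```

With a simulated clock in which each call of `body` takes 3 ms and the period is 10 ms, the release times stay exactly 10 ms apart rather than 13 ms.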

Program design should include execution-time measurement. It is very common to assume that the program is short enough and the available time sufficient, but measuring execution time should be part of standard testing in order to avoid surprises. The system should be designed so that the execution time of the code can always be measured.
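A simple measurement harness of the kind recommended above might look like the following Python sketch, which uses `time.perf_counter` to record the observed worst-case and mean execution time of a routine. The function name and the default of 1000 runs are illustrative assumptions; on an embedded target a hardware timer or cycle counter would normally take the place of `perf_counter`, and the observed maximum is only a lower bound on the true worst-case execution time.

```python
import time


def measure_execution_time(func, runs=1000):
    """Call func() `runs` times; return (observed worst case, mean) in seconds.

    The observed maximum is only a lower bound on the true worst-case
    execution time (WCET); it depends on the inputs and conditions tested.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return max(samples), sum(samples) / len(samples)
```

Comparing the observed worst case with the sampling period, and repeating the measurement under realistic load, helps reveal routines that are not as short as assumed.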

(c) Misuse of interrupts

In a real-time system, interrupts cannot be scheduled by the scheduler, because they must be serviced whenever they occur. Because they can occur randomly, interrupts seriously affect the predictability of real-time multitasking. Programs based on interrupts are very difficult to debug and analyze. Moreover, interrupts operate in the task context handled by the RTOS kernel; a task corresponding to the response to a given event could therefore lack a real-time timing requirement.

There is a very common misunderstanding which says that interrupts save CPU time and they guarantee the start of execution of a task. This can be true in small systems, but it is not the case for complex real-time systems, where non-pre-emptive periodic tasks can provide similar latency with better predictability and CPU utilization. Therefore, interrupt service routines should be programmed in such a form that their only function is to signal an aperiodic server.
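The recommendation that an interrupt service routine should only signal an aperiodic server can be sketched in Python, with `queue.Queue` standing in for an RTOS message queue or semaphore and a `threading.Thread` standing in for the server task. The names `make_isr`, `aperiodic_server`, and the `STOP` sentinel are hypothetical, and the execution budget and replenishment rules of real aperiodic servers (such as polling, deferrable, or sporadic servers) are not modeled.

```python
import queue
import threading

STOP = object()  # sentinel used to shut the server down in this sketch


def make_isr(signal_queue):
    """Build an ISR whose only job is to record the event and signal the server."""
    def isr(event):
        signal_queue.put_nowait(event)  # no processing inside the ISR
    return isr


def aperiodic_server(signal_queue, handle):
    """Server task: performs the actual, possibly long, event processing."""
    while True:
        event = signal_queue.get()
        if event is STOP:
            break
        handle(event)


if __name__ == "__main__":
    q = queue.Queue()
    server = threading.Thread(target=aperiodic_server, args=(q, print))
    server.start()
    isr = make_isr(q)
    isr("overtemperature")  # the ISR returns immediately
    isr(STOP)
    server.join()
```

Keeping the ISR this short bounds the time spent with other interrupts blocked, while the processing itself runs as a schedulable task whose timing can be analyzed with the rest of the task set.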

(d) Poor analyses

Memory use is often ignored during program design. The amount of memory available in most real- time systems is limited. Frequently, programmers have no idea how much memory is used by a certain program or data structure. A memory analysis is quite simple in most real-time system development environments, but without it a program can easily crash at run-time.

Sometimes, hard-coded statements are used to specify register addresses, array limits, and configuration constants. Although this practice is common, it is undesirable because it prevents on-the-fly software patches in emergency situations and makes it more difficult to reuse the software in other applications. In fact, any change in configuration requires the entire application to be recompiled.
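One alternative to hard-coded constants is to read them from configuration data at start-up, so that a change of configuration does not require recompiling the application. The Python sketch below parses a JSON configuration text; the keys, the placeholder register address, and the function `load_config` are hypothetical, and on a microcontroller the same idea might take the form of a configuration table in non-volatile memory.

```python
import json

# Hypothetical configuration; in practice this would come from a file or
# non-volatile memory rather than being embedded in the source code.
CONFIG_TEXT = """
{
  "adc_base_address": "0x40012000",
  "buffer_length": 64,
  "sample_period_ms": 10
}
"""


def load_config(text):
    """Parse configuration data instead of compiling constants into the code."""
    raw = json.loads(text)
    return {
        # int(..., 0) accepts hexadecimal strings such as "0x40012000".
        "adc_base_address": int(raw["adc_base_address"], 0),
        "buffer_length": int(raw["buffer_length"]),
        "sample_period_ms": int(raw["sample_period_ms"]),
    }


config = load_config(CONFIG_TEXT)
buffer = [0.0] * config["buffer_length"]  # array limit taken from configuration
```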