Chapter 6. Chipsets

Buy a microprocessor, and it’s just an inert lump that sits in its static-free box, super-powered silicon with extreme potential but no motivation. It’s sort of like a Hollywood hunk proto-star before he takes acting classes. Even out of the box, the most powerful microprocessor is hardly impressive—or effective. It can’t do anything on its own. Although it serves as the centerpiece of a computer, it is not the computer itself. It needs help.

The electronics inside a personal computer that flesh out its circuitry and make it work are called support circuits. At one time, support circuits made up everything in a computer except for the microprocessor. Today, most of the support functions needed to make a computer work take the form of a few large integrated circuits called a chipset. Support circuits handle only a few low-level functions to take care of the needs of the chipset.

In current designs, the chipset is second in importance only to the microprocessor in making your computer work—and making your computer a computer. Your computer’s chipset is the foundation of its motherboard, and a computer-maker’s choice of chipsets determines the overall capabilities of your computer. The microprocessor embodies its potential. The chipset makes it a reality.

Although the chipset used by a computer is rarely mentioned in advertisements and specification sheets, its influence is pervasive. Among other issues, the chipset determines the type and number of microprocessors that can be installed in the system; the maximum memory a system can address; the memory technology used by the system (SDR, DDR, or Rambus); whether the system can detect memory errors; the speed of the memory bus; the size and kind of secondary memory cache; the type, speed, and operating mode of the expansion bus; whether you can plug a video board into an Accelerated Graphics Port in your computer; the mass-storage interfaces available on the motherboard and the speed at which they operate; the ports available on your motherboard; and whether your computer has a built-in network adapter. That’s a lot of power packed into a few chips—and a good reason for wondering what you have in your computer.

Background

The functions needed to make a microprocessor into a computer read like a laundry list for a three-ring circus: long and exotic, with a trace of the unexpected. A fully operational computer requires a clock or oscillator to generate the signals that lock the circuits together; a memory mastermind that ensures each byte goes to the proper place and stays there; a traffic cop for the expansion bus and other interconnecting circuitry to control the flow of data in and out of the chip and the rest of the system; office-assistant circuits for taking care of the daily routines and lifting the responsibility for minor chores from the microprocessor; and, in most modern computers, communications, video, and audio circuitry that let you and the computer deal with one another like rational beings.

Every computer manufactured since the first amphibians crawled from the primeval swamp has required these functions, although in the earliest computers they did not take the form of a chipset. The micro-miniaturization technology was nascent, and the need for combining so many functions in so small a package was negligible. Instead, computer engineers built the required functions from a variety of discrete circuits (small, general-purpose integrated circuits such as logic gates) and a few functional blocks, which are larger integrated circuits designed for a particular purpose but not necessarily one that had anything to do with computers. These garden-variety circuits, together termed support chips, were combined to build all the necessary computer functions into the first computer. The modern chipset not only combines these support functions onto a single silicon slice but also adds features beyond the dreams of the first computer’s designers, such as USB ports, surround-sound systems, and power-management circuits. This all-in-one design makes for simpler and far cheaper computers.

Trivial as it seems, encapsulating a piece of silicon in an epoxy plastic package is one of the more expensive parts of making chips. For chipmakers, it’s much more expensive than adding more functions to the silicon itself. The more functions the chipmaker puts on a single chip, the less each function costs. Those savings dribble down to computer-makers and, eventually, you. Moreover, designing a computer motherboard with discrete support circuitry was a true engineering challenge because it required a deep understanding of the electronic function of all the elements of a computer. Using a chipset, a computer engineer need only be concerned with the signals going in and out of a few components. The chipset might be a magical black box for all the designer cares. In fact, in many cases the only skill required to design a computer from a chipset is the ability to navigate from a roadmap. Most chipset manufacturers provide circuit designs for motherboards to aid in the evaluation of their products. Many motherboard manufacturers (all too many, perhaps) simply take the chipset-maker’s evaluation design and turn it into a commercial product.

You can trace the chipset genealogy all the way back to the first computer. This heritage results from the simple need for compatibility. Today’s computers mimic the function of the earliest computers, and IBM’s original Personal Computer of 1981 in particular, so that they can all run the same software. Although seemingly anachronistic in an age when those who can remember the first computer also harbor memories of DeSotos and dinosaurs, even the most current chipsets must precisely mimic the actions of early computers so that the oldest software will still operate properly in new computers (provided, of course, all the other required support is also present). After all, some Neanderthal will set switchboards glowing from I-95 to Silicon Valley with threats of lawsuits and aspersions about the parenthood of chipset designers when the DOS utilities he downloaded in 1982 won’t run on his new Pentium 4.

Functions

No matter how simple or elaborate the chipset in a modern computer, it has three chief functions. It must act as a system controller that holds together the entire computer, giving all the support the microprocessor needs to be a true computer system. As a memory controller, it links the microprocessor to the memory system, establishes the main memory and cache architectures, and ensures the reliability of the data stashed away in RAM chips. And to extend the reach of the microprocessor to other system components, it must act as a peripheral controller and operate input/output ports and disk interfaces.

How these functions get divided among silicon chips, and the terminology describing the chips, have both evolved.

When computers first took their current form, incorporating the PCI system for linking components together, engineers lumped the system and memory functions together as a unit they called the north bridge. Intel sometimes uses the term host bridge to indicate the same function. The peripheral control functions make up the south bridge, sometimes called the I/O bridge. The circuits that controlled the expansion bus often were grouped together with the north bridge or as a separate chip termed the PCI bridge.

Intel has reworked its design and nomenclature to make the main liaison with the microprocessor into the memory controller hub. This chip includes the essential support functions of the north bridge. It provides a wide, fast data pathway to memory and to the system’s video circuitry.

The I/O controller hub takes over all the other interface functions of the chipset, including control of the expansion bus, all disks, all ports, and sound and power management. The host controller provides a high-speed connection between the I/O controller hub and the microprocessor.

The memory controller hub links to the microprocessor through its system bus, sometimes termed the front side bus, which provides the highest speed linkup available to peripheral circuits. The logic inside the memory controller hub translates the microprocessor’s address requests, pumped down the system bus as address cycles, into the form used by the memory in the system. Because memory modules differ in their bus connections, the memory controller hub circuitry determines what kind of memory can connect to the system. Some memory controller hubs, for example, link only to Rambus memory, whereas others may use double data-rate (DDR) modules.

Although the connection with graphics circuitry naturally is a part of the input/output system of a computer, the modern chipset design puts the link in the memory controller hub. Video displays involve moving around large blocks of memory, and the memory controller hub provides the highest-speed connection with the microprocessor, speeding video access and screen updates. In addition, the design of the current video interconnection standard, accelerated graphics port (AGP), uses a memory aperture to transfer bytes between the microprocessor and the frame buffer (the memory that stores the image on the display screen). Accessing this aperture is easiest and most direct through the memory controller.

The I/O controller hub generates all the signals used for controlling the microprocessor and the operation of the computer. It doesn’t tell the microprocessor what instructions to execute. Rather, it sets the speed of the system by governing the various clocks in the computer, it manages the microprocessor’s power usage (as well as that of the rest of the computer), and it tells the microprocessor when to interrupt one task and switch to another or give a piece of hardware immediate attention. These are all system-control functions, once the province of a dedicated system controller chip.

In addition, the I/O controller hub provides both the internal and external links to the rest of the computer and its peripherals, its true input/output function. It controls the computer’s expansion bus (which not only generates the signals for the expansion slots for add-in cards but also provides the high-speed hard disk interface), all the ports (including USB), and network connections. Audio circuitry is sometimes built into the I/O controller hub, again linking through the expansion bus circuitry.

Memory Control

Memory speed is one of the most important factors in determining the speed of a computer. If a microprocessor cannot get the data and program code it needs, it has to wait. Using the right kind of memory and controlling it effectively are both issues governed by the chipset.

Handling memory for today’s chipsets is actually easier than a few years ago. When computer-makers first started using memory caches to improve system performance, they thrust the responsibility for managing the cache on the chipset. But with the current generation of microprocessors, cache management has been integrated with the microprocessor. The chipset only needs to provide the microprocessor access to main memory through its system bus.

Addressing

To a microprocessor, accessing memory couldn’t be simpler. It just needs to activate the proper combination of address lines to indicate a storage location and then read its contents or write a new value. The address is a simple binary code that uses the available address lines, essentially a 32-bit code with a Pentium-level microprocessor (36 bits on later designs such as the Pentium 4, enough to reach 64GB).

Unfortunately, the address code of the microprocessor is completely unintelligible to memory chips and modules. Semiconductor-makers design their memory chips so that they will fit any application, regardless of the addressing of the microprocessor or even whether they link to a microprocessor. Chips, with a few megabytes of storage at most, have no need for gigabyte addressability. They need only a sufficient number of addresses to put their entire contents, and nothing more, online.

Translating between the addresses and storage format used by a microprocessor and the format used by memory chips and modules is the job of the memory decoder. This chip (or, in the case of chipsets, function) determines not only the logical arrangement of memory, but also how much and what kind of memory a computer can use.
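As a rough sketch of what this translation involves, the following Python splits a flat physical address into the kinds of fields a memory module might expect. The field widths and names (BYTE_BITS, COLUMN_BITS, and so on) are hypothetical values chosen only for illustration; the layout a real chipset uses depends on the memory it supports.

```python
# Hypothetical field widths, chosen only for illustration.
BYTE_BITS = 3      # 8 bytes per 64-bit transfer
COLUMN_BITS = 10   # columns within a row
BANK_BITS = 2      # internal banks per chip
ROW_BITS = 12      # rows per bank


def take_bits(value, width):
    """Return (the low `width` bits of value, value shifted right by width)."""
    return value & ((1 << width) - 1), value >> width


def decode_address(address):
    """Split a flat physical address into memory-side fields."""
    byte, rest = take_bits(address, BYTE_BITS)
    column, rest = take_bits(rest, COLUMN_BITS)
    bank, rest = take_bits(rest, BANK_BITS)
    row, module = take_bits(rest, ROW_BITS)
    # Whatever address bits remain select the module.
    return {"module": module, "row": row, "bank": bank,
            "column": column, "byte": byte}


if __name__ == "__main__":
    print(decode_address(0x01234567))
```

The number of bits left over for the module field is what limits how much memory such a decoder could reach, which is why the decoder, not the microprocessor, sets the practical ceiling.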

The memory functions of modern chipsets determine the basic timing of the memory system, controlling which signals in the memory system are active at each instant for any given function. The ability to adjust these timing values determines the memory technology that a computer can use. For example, early chipsets timed their signals to match Synchronous Dynamic Random Access Memory (SDRAM) chips. High-performance chipsets need to address Rambus memory and double data-rate (DDR) memory, which use not only a different addressing system but also an entirely different access technology.

In addition, the chipset determines how much memory a computer can possibly handle. Although a current microprocessor such as the Pentium 4 can physically address up to 64GB of memory, modern chipsets do not support the full range of microprocessor addresses. Most current Intel chipsets, for example, address only up to 2GB of physical memory. Not that Intel’s engineers want to slight you—they have not purposely shortchanged you. The paltry memory capacity is a result of physical limits of the high-speed memory buses. The distance that the signals can travel at today’s memory speed is severely limited, which constrains how many memory packages can fit near enough to the chipset. Intel actually provides two channels on its Rambus chipsets, doubling the capacity that otherwise would be available through a Rambus connection.

Refreshing

Most memory chips that use current technologies require periodic refreshing of their storage. That is, the tiny charge they store tends to drain away. Refreshing recharges each memory cell. Although memory chips handle the process of recharging themselves, they need to coordinate the refresh operation with the rest of the computer. Your computer cannot access the bits in storage while they are being refreshed.

The chipset is in charge of signaling memory when to refresh. Typically the chipset sends a signal to your system’s memory at a preset interval that’s chosen by the computer’s designer. With SDRAM memory and current chipsets, the refresh interval is usually 15.6 microseconds.
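The 15.6-microsecond figure can be reproduced with simple arithmetic. The sketch below assumes a memory device with 4,096 rows, each of which must be refreshed once every 64 milliseconds; those two values are typical for SDRAM but are assumptions here, not figures taken from the text.

```python
def refresh_interval_us(retention_ms, rows):
    """Spacing between refresh commands if rows are refreshed evenly over the retention time."""
    return retention_ms * 1000.0 / rows


# Assumed values: 64ms retention time, 4,096 rows to refresh.
INTERVAL_US = refresh_interval_us(64, 4096)

if __name__ == "__main__":
    print(f"{INTERVAL_US} microseconds between refresh commands")
```

Spreading the refresh commands evenly keeps each interruption short, so memory is never unavailable for long at a stretch.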

Memory can also self-refresh, triggering its own refresh when necessary. Self-refresh becomes desirable when a system powers down to one of its sleep modes, during which memory access is suspended along with microprocessor operation, and even the major portions of the chipset switch off. Operating in this way, self-refresh preserves the contents of memory while reducing the power required for the chipset.

Error Handling

Computers for which data integrity is paramount use memory systems with error detection or error correction. The chipset manages the error-detection and error-correction processes.

Early personal computers relied on simple error detection, in which your computer warned when an error changed the contents of memory (unfortunately “warning” usually meant shutting down your computer). Current systems use an error-correction code (ECC), extra information added to that sent into memory through which the change of a stored bit can be precisely identified and repaired. The chipset adds the ECC to the data sent to memory, an extra eight bits for every eight bytes of data stored. When the chipset later reads back those eight bytes, it checks the ECC before passing the bytes on to your microprocessor. If a single bit is in error, the ECC identifies it, and the chipset restores the correct bit. If an error occurs in more than one bit, the offending bits cannot be repaired, but the chipset can warn that they have changed.
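The behavior described here (eight check bits per eight bytes, single-bit repair, multiple-bit warning) can be illustrated with an extended Hamming code. The Python below is a minimal sketch under that assumption; the text does not say which code any particular chipset uses, and the function names and bit layout are hypothetical.

```python
PARITY_POSITIONS = [1, 2, 4, 8, 16, 32, 64]   # seven Hamming check bits
CODE_LEN = 72                                  # position 0 holds overall parity
DATA_POSITIONS = [p for p in range(1, CODE_LEN) if p not in PARITY_POSITIONS]


def encode(data):
    """Turn a 64-bit integer into a list of 72 bits (64 data + 8 check)."""
    bits = [0] * CODE_LEN
    for i, pos in enumerate(DATA_POSITIONS):
        bits[pos] = (data >> i) & 1
    for p in PARITY_POSITIONS:
        # Each check bit makes the parity of its group of positions even.
        bits[p] = sum(bits[pos] for pos in range(1, CODE_LEN) if pos & p) % 2
    bits[0] = sum(bits[1:]) % 2   # overall parity over the other 71 bits
    return bits


def decode(received):
    """Return (data, status); status is 'ok', 'corrected', or 'uncorrectable'."""
    bits = list(received)
    syndrome = 0
    for pos in range(1, CODE_LEN):
        if bits[pos]:
            syndrome ^= pos        # XOR of set positions is 0 for a valid word
    overall_odd = sum(bits) % 2 == 1
    if syndrome == 0 and not overall_odd:
        status = "ok"
    elif overall_odd and syndrome < CODE_LEN:
        bits[syndrome] ^= 1        # the syndrome names the flipped position
        status = "corrected"
    else:
        status = "uncorrectable"   # an even number of flips: detect only
    data = 0
    for i, pos in enumerate(DATA_POSITIONS):
        data |= bits[pos] << i
    return data, status


if __name__ == "__main__":
    word = encode(0x0123456789ABCDEF)
    word[37] ^= 1                  # simulate one bit changing in storage
    print(decode(word)[1])
```

Seven check bits are enough to name any one of the 71 positions that hold them and the data; the eighth, an overall parity bit, is what lets the decoder tell a single flipped bit from a pair.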

ECC is a programmable feature of most chipsets. Depending on the design of your computer, it can be permanently set on or off at the factory, or you may be able to switch it on and off through your system’s advanced setup procedure. Simply switching ECC on doesn’t automatically make your computer’s memory more secure. An ECC system requires that your computer have special ECC memory modules installed.

System Control

The basic function of a chipset is to turn a chip into a computer, to add what a microprocessor needs to be an entire computer system. Not just any computer. You expect your computer to match the prevailing industry standards so that it can run as wide a variety of software as possible. You don’t want surprises, such as your screen freezing when you try to install an application recommended by a friend. No matter what program you buy, borrow, or steal, you want it to find all the features it needs in your computer.

To assure you this level of compatibility, nearly all the functions of the system controller in a computer chipset are well defined and for the most part standardized. In nearly all computers, the most basic of these functions use the same means of access and control: the ports and memory locations match those used by the very first computers. You might, after all, want to load an old DOS program to balance your checkbook. Some people actually do it, dredging up programs that put roughshod white characters on a black screen, flashing a white block cursor at them that demands they type a command. A few people even attempt to balance their checkbooks.

Some features of the modern chipset exactly mimic the handful of discrete logic circuits that were put together to make the very first personal computer, cobwebs and all. Although many of these features are ignored by modern hardware and software, they continue as a vestigial part of all systems, sort of like an appendix.

Timing Circuits

Although anarchy has much to recommend it should you believe in individual freedom or sell firearms, it’s anathema to computer circuits. Today’s data-processing designs depend on organization and controlled cooperation, factors that make timing critical. The meaning of each pulse passing through a computer depends to a large degree on time relationships. Signals must be passed between circuits at just the right moment for the entire system to work properly.

Timing is critical in computers because of their clocked logic. The circuit element that sends out timing pulses to keep everything synchronized is called a clock. Today’s computers don’t have just one clock, however. They have several, each for a specific purpose. Among these are the system clock, the bus clock, the video clock, and even a real-time clock.

The system clock is the conductor who beats the time that all the circuits follow, sending out special timing pulses at precisely controlled intervals. The clock, however, must get its cues from somewhere, either its own internal sense of timing or some kind of metronome. Most clocks in electronic circuits derive their beats from oscillators.

Oscillators

An electronic circuit that accurately and continuously beats time is termed an oscillator. Most oscillators work on a simple feedback principle. Like the microphone that picks up its own sounds from public address speakers too near or turned up too high, the oscillator, too, listens to what it says. As with the acoustic-feedback squeal that the public address system complains with, the oscillator, too, generates its own howl. Because the feedback circuit is much shorter, however, the signal need not travel as far and the resulting frequency is higher, perhaps by several thousand-fold.

The oscillator takes its output as its input, amplifies the signal, and sends it to its output, where it goes back to the input again in an endless and out-of-control loop. The oscillator is tamed by adding impediments to the feedback loop, through special electronic components added between the oscillator’s output and its input. The feedback and its frequency can thus be brought under control.

In nearly all computers a carefully crafted crystal of quartz is used as this frequency-control element. Quartz is one of many piezoelectric compounds. Piezoelectric materials have an interesting property—if you bend a piezoelectric crystal, it generates a tiny voltage. What’s more, if you apply a voltage to it in the right way, the piezoelectric material bends.

Quartz crystals do exactly that. But beyond this simple stimulus/response relationship, quartz crystals offer another important property. By stringently controlling the size and shape of a quartz crystal, a manufacturer can make it resonate at a specific frequency. The frequency of this resonance is extremely stable and very reliable, so much so that it can help an electric watch keep time to within seconds a month. Although computers don’t need the absolute precision of a quartz watch to operate their logic circuits properly, the fundamental stability of the quartz oscillator guarantees that the computer always operates at a clock frequency within its design limits.

The various clocks in a computer can operate separately, or they may be locked together. Most computers link these frequencies together, synchronizing them. They may all originate in a single oscillator and use special circuits such as frequency dividers, which reduce the oscillation rate by a selectable factor, or frequency multipliers, which increase it. For example, a computer may have a 133MHz oscillator that directly controls the memory system. A frequency divider may reduce that to one-quarter the rate to run the PCI bus, and another divider may reduce it by a factor of 16 to produce the clock for the ISA legacy bus, should the computer have one.

To go the other way, a frequency multiplier can boost the oscillator frequency to a higher value, doubling the 133MHz clock to 266MHz or raising it to some other multiple. Because all the frequencies created through frequency division and multiplication originate in a single clock signal, they are automatically synchronized. Even the most minute variations in the original clock are reflected in all those derived from it.
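The arithmetic of division and multiplication, and the way every derived clock tracks the source, can be sketched in a few lines of Python. The function name, the dictionary keys, and the 0.1 percent drift are hypothetical; only the 133MHz source, the one-quarter divider, and the doubling come from the text.

```python
def derive_clocks(oscillator_mhz):
    """Derive dependent clocks from a single oscillator frequency."""
    return {
        "memory": oscillator_mhz,        # used directly
        "pci": oscillator_mhz / 4,       # frequency divider, one-quarter rate
        "doubled": oscillator_mhz * 2,   # frequency multiplier
    }


NOMINAL = derive_clocks(133.0)
DRIFTED = derive_clocks(133.0 * 1.001)   # hypothetical 0.1 percent drift

if __name__ == "__main__":
    for name in NOMINAL:
        ratio = DRIFTED[name] / NOMINAL[name]
        print(f"{name}: {NOMINAL[name]}MHz, drift ratio {ratio:.3f}")
```

Every derived clock shows the same drift ratio as the source, which is the sense in which clocks derived from one oscillator stay synchronized.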

Some sections of computers operate asynchronously using their own clocks. For example, the scan rate used by your computer’s video system usually is derived from a separate oscillator on the video board. In fact, some video boards have multiple oscillators for different scan rates. Some computers may even have separate clocks for their basic system board functions and run their buses asynchronously from their memory and microprocessor. For keeping track of the date, most computers have dedicated real-time clocks that are essentially a digital Timex grafted into the chipset.

Although engineers can build the various oscillators needed in a computer into the chipset—only the crystal in its large metal can must reside outside—the current design trend is to generate the needed frequencies outside the chipset. Separate circuits provide the needed oscillators and send their signals to the chipset (and elsewhere in the computer) to keep everything operating at the right rates.

System Clocks

Current Intel chipsets use eight different clock frequencies in their everyday operation. They are used for everything from sampling sounds to passing bytes through your computer’s ports.

What Intel calls the oscillator clock operates at an odd-sounding 14.31818MHz rate. This strange frequency is a carryover from the first personal computer, locked to four times the 3.58MHz subcarrier frequency used to put color in images in analog television signals. The engineers who created the original computer thought compatibility with televisions would be an important design element of the computer—they thought you might use one instead of a dedicated monitor. Rather than anticipating multimedia, they were looking for a cheap way of putting computer images onscreen. When the computer was released, no inexpensive color computer monitors were available (or necessary because color graphic software was almost nonexistent). Making computers work with color televisions seemed an expedient way of creating computer graphics.

The TV-as-monitor never really caught on, but the 14.31818MHz frequency persists, though not for motherboard television circuits. Instead, the chipset locks onto this frequency and slices it down to 1.19MHz to serve as the timebase for the computer’s timer/counter circuit.
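The relationship among these frequencies is easy to check numerically. In the sketch below, the subcarrier value of 3,579,545Hz is the usual more precise figure behind the 3.58MHz in the text, and the divide-by-12 step is an assumption about how the 1.19MHz timebase is obtained; the text gives only the two endpoint frequencies.

```python
COLOR_SUBCARRIER_HZ = 3_579_545             # the "3.58MHz" television subcarrier
OSCILLATOR_HZ = 4 * COLOR_SUBCARRIER_HZ     # four times the subcarrier
TIMER_DIVISOR = 12                          # assumed divider, not stated in the text
TIMER_HZ = OSCILLATOR_HZ / TIMER_DIVISOR    # timebase for the timer/counter

if __name__ == "__main__":
    print(f"oscillator clock: {OSCILLATOR_HZ / 1e6:.5f}MHz")
    print(f"timer timebase:   {TIMER_HZ / 1e6:.2f}MHz")
```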

Because the chipset serves as the PCI bus controller, it also needs a precision reference from which to generate the timing signals used by the PCI bus. The PCI clock provides what’s needed: a 33MHz signal. Current Intel chipsets also need another clock for the PCI bus, CLK66, which operates at 66MHz. These two signals are usually derived from the same timebase, keeping them exactly synchronized. Typically a 66MHz signal is divided down to 33MHz.

New chipsets require a USB clock, an oscillator that provides the operating frequency for the Universal Serial Bus (USB) port circuitry in the chipset, to develop the signals that let you connect peripherals to your computer. This clock operates at 48MHz. It can be divided down to 12MHz for USB 1.1 signals or multiplied up to 480MHz for USB 2.0.

Intel’s current chipsets have built-in audio capabilities, designed to follow the AC97 audio standard. The chipsets use the AC97 Bit Clock as a timebase for digital audio sampling. The required clock operating frequency is 12.288MHz. It is usually supplied by the audio codec (coder/decoder), which performs the digital-to-analog and analog-to-digital conversion on the purely digital audio signals used by the chipset. A single source for this frequency ensures that the chipset and codec stay synchronized.

The Advanced Programmable Interrupt Controller (APIC) system used by the PCI bus sends messages on its own bus conductors. The APIC clock provides a clocking signal (its rising edge indicates when data on the APIC bus is valid). According to Intel’s specifications, its chipsets accept APIC clocks operating at up to 33.3MHz (for example, a signal derived from the PCI bus clock).

The SMBus clock is one of the standard signals of the System Management Bus. All SMBus interfaces use two signals for carrying data: a data signal and the clock signal. The chipset provides this standard clock signal to properly pace the data and indicate when it is valid.

Current Intel chipsets integrate a standard Ethernet local area network controller into their circuitry, designed to work with an external module called LAN Connect, which provides both the physical interface for the network as well as a clock signal for the chipset’s LAN circuitry. The LAN I/F clock operates at one-half the frequency of the network carrier, either 5 or 50MHz under current standards.

Real-Time Clock

The first computers tracked time only when they were awake and running, simply counting the ticks of their timers. Every time you booted up, your computer asked you the time to set its clock. To lift that heavy chore from your shoulders, all personal computers built since 1984 have a built-in real-time clock among their support circuits. It’s like your computer is wearing its own wristwatch (but, all too often, not as accurate).

All these built-in real-time clocks trace their heritage back to a specific clock circuit, the Motorola MC146818, essentially an arbitrary choice that IBM’s engineers made in designing the personal computer AT. No computer has used the MC146818 chip in over a decade, but chipsets usually include circuitry patterned after the MC146818 to achieve full compatibility with older computers.

The real-time clocks have their own timebases, typically an oscillator built into the chip, one that’s independent from the other clocks in a computer. The oscillator (and even the entire clock) may be external to the chipset. Intel’s current design puts all the real-time-clock circuitry, including the oscillator, inside the chipset. To be operational it requires only a handful of discrete components and a crystal tuned to a frequency of 32.768kHz. A battery supplies current to this clock circuitry (and only this clock circuitry) when your computer is switched off, because to keep accurate time this clock must run constantly.
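A likely reason for that particular crystal frequency, which the text does not spell out, is that 32,768 is a power of two, so a simple chain of divide-by-two stages brings it down to exactly one pulse per second. The sketch below counts the stages; the function name is hypothetical.

```python
CRYSTAL_HZ = 32_768   # the 32.768kHz crystal, expressed in hertz


def halvings_to_one_hertz(frequency_hz):
    """Count the divide-by-two stages needed to reach exactly 1Hz."""
    stages = 0
    while frequency_hz > 1:
        if frequency_hz % 2:
            raise ValueError("frequency is not a power of two")
        frequency_hz //= 2
        stages += 1
    return stages


if __name__ == "__main__":
    print(halvings_to_one_hertz(CRYSTAL_HZ), "divide-by-two stages")
```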

The battery often is not enough to ensure the accuracy of the real-time clock. Although the clock should be as accurate as a digital watch (its circuitry is nothing more than a digital watch in a different form), many real-time clocks tell time as imaginatively as a four-year-old child. One reason is that although a quartz oscillator is excellent at maintaining a constant frequency, that frequency may not be the one required to keep time correctly. Some computers have trimmers for adjusting the frequency of their real-time clocks, but their manufacturers sometimes don’t think to adjust them properly.

In many computers, a trimmer (an adjustable capacitor) in series with a quartz crystal allows the manufacturer, or anyone with a screwdriver, to alter the resonant frequency of the oscillator. Giving the trimmer a tweak can bring the real-time clock closer to reality or push it further into the twilight zone. (You can find the trimmer by looking for the short cylinder with a slotted shaft in the center near the clock crystal, which is usually the only one in a computer with a kilohertz rather than megahertz rating.) There are many other solutions to clock inaccuracy. The best is to regularly run a program that synchronizes your clock with that of the National Institute of Standards and Technology’s atomic clock, the official U.S. time standard. You can download this time-setting program from the NIST Web site at tf.nist.gov/timefreq/service/its.htm.

The real-time clocks in many computers are also sensitive to the battery voltage. When the CMOS backup battery is nearing failure, the real-time clock may go wildly awry. If your computer keeps good time for a while (months or years) and then suddenly wakes up hours or days off, try replacing your computer’s CMOS backup battery.

The real-time clock chip first installed in early computers also had a built-in alarm function, which has been carried into the real-time clocks of new computers. As with the rest of the real-time clock, the alarm function runs from the battery supply so it can operate while your computer is switched off. This alarm can thus generate an interrupt at the appropriate time to start or stop a program or process. In some systems, it can even cause your computer to switch on at a preset time. Throw a WAV file of the national anthem in your startup folder, and you’ll have a truly patriotic alarm clock.

The clock circuitry also includes 128 bytes of low-power CMOS memory that is used to store configuration information. This is discussed in the next chapter.

Timers

In addition to the clocks in your computer (both the oscillators and the real-time clock), you’ll find timers. Digitally speaking, computer timers are simply counters. They count pulses received from an oscillator and then perform some action when they reach a preset value. These timers serve two important functions. They maintain the real time for your computer separately from the dedicated real-time clock, and they signal the time to refresh memory. Also, they generate the sounds that come from the tiny speakers of computers that lack multimedia pretensions.

The original IBM personal computer used a simple timer chip for these functions, specifically chip type 8253. Although this chip would be as foreign in a modern computer as a smudge pot or buggy whip, modern chipsets emulate an updated version of that timer chip, the 8254. The system timer counts pulses from the 1.19MHz clock (that the chipset derives from the 14.31818MHz system clock) and divides them down to the actual frequencies it needs (for example, to make a beep of the right pitch).

The timer works simply. You load one of its registers with a number, and it counts to that number. When it reaches the number, it outputs a pulse and starts all over again. Load an 8254 register with 2, and it sends out a pulse at half the frequency of the input. Load it with 1000, and the output becomes 1/1000th the input. In this mode, the chip (real or emulated) can generate an interrupt at any of a wide range of user-defined intervals. Because the largest count its 16-bit register can represent is 2^16, or 65,536, the longest single interval it can count is about 0.055 seconds (that is, 65,536 cycles of the 1.19MHz input signal).
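The divider arithmetic described above can be checked with a short sketch. It assumes the nominal input frequency is the 14.31818MHz system clock divided by 12 (the text quotes only the rounded 1.19MHz figure), and the function names are illustrative, not part of any real programming interface.

```python
# Nominal timer input: the 14.31818MHz system clock divided by 12.
# This exact ratio is an assumption; the text gives the rounded 1.19MHz.
TIMER_INPUT_HZ = 14_318_180 / 12

MAX_COUNT = 2 ** 16  # largest count a 16-bit register can represent


def output_hz(count: int) -> float:
    """Pulse rate produced when the timer counts to `count` and restarts."""
    if not 1 <= count <= MAX_COUNT:
        raise ValueError("count must be between 1 and 65,536")
    return TIMER_INPUT_HZ / count


def interval_seconds(count: int) -> float:
    """Time between output pulses for a given count."""
    if not 1 <= count <= MAX_COUNT:
        raise ValueError("count must be between 1 and 65,536")
    return count / TIMER_INPUT_HZ


if __name__ == "__main__":
    # Show the three cases mentioned in the text.
    for count in (2, 1000, MAX_COUNT):
        print(count, round(output_hz(count), 3), round(interval_seconds(count), 6))
```

With the maximum count, the interval works out to roughly 0.055 seconds, which matches the figure given above.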

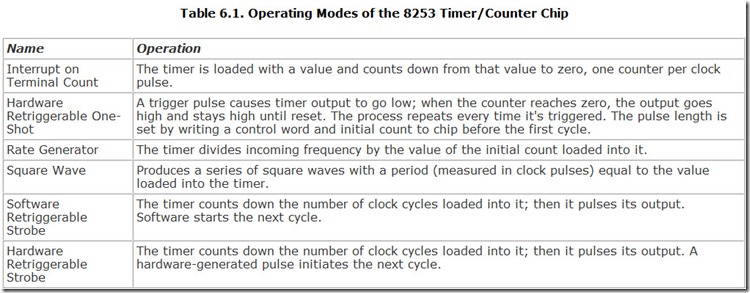

The timer circuitry actually had six operating modes, all of which are carried through on modern computers. Table 6.1 lists these modes.

The real-time signal of the timer counts out its longest possible increment, generating pulses at a rate of 18.2 per second. The pulses cause the real-time interrupt, which the computer counts to keep track of the time. These interrupts can also be used by programs that need to regularly investigate what the computer is doing; for instance, checking the hour to see whether it’s time to dial up a distant computer.
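As a rough illustration of how counting these interrupts yields the time of day, the sketch below converts a tick count since midnight into hours, minutes, and seconds. The input frequency (14.31818MHz divided by 12) and the function name are assumptions made for the example; real systems keep this count in their own firmware and operating system structures.

```python
# Assumed nominal timer input frequency (14.31818MHz / 12).
TIMER_INPUT_HZ = 14_318_180 / 12

# The timer counts out its longest interval, 65,536 input pulses,
# so the interrupt rate is about 18.2 per second.
TICKS_PER_SECOND = TIMER_INPUT_HZ / 2 ** 16


def ticks_to_clock(ticks: int) -> tuple:
    """Convert timer interrupts counted since midnight to (hours, minutes, seconds)."""
    seconds = int(ticks / TICKS_PER_SECOND)
    hours, remainder = divmod(seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return hours, minutes, secs


if __name__ == "__main__":
    print(round(TICKS_PER_SECOND, 4))
    print(ticks_to_clock(65544))
```

Because the tick rate is not a whole number, a program that counts ticks has to divide rather than assume a fixed number of ticks per second.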

The speaker section of the timer system works the same way, only it generates a square wave that is routed through an amplifier to the internal speaker of the computer to make primitive sounds. Programs can modify any of its settings to change the pitch of the tone, and with clever programming, its timbre.
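To make the pitch setting concrete, here is a minimal sketch of how a program might pick the count for a desired tone. It assumes the same nominal 14.31818MHz/12 input frequency, and the helper names are hypothetical.

```python
# Assumed nominal timer input frequency (14.31818MHz / 12).
TIMER_INPUT_HZ = 14_318_180 / 12


def count_for_tone(frequency_hz: float) -> int:
    """Return the count that brings the square wave closest to frequency_hz."""
    count = round(TIMER_INPUT_HZ / frequency_hz)
    if not 1 <= count <= 2 ** 16:
        raise ValueError("tone is outside the range a 16-bit count can produce")
    return count


def actual_tone_hz(count: int) -> float:
    """Frequency actually produced by a given count."""
    return TIMER_INPUT_HZ / count


if __name__ == "__main__":
    count = count_for_tone(440)
    print(count, round(actual_tone_hz(count), 2))
```

Because the count must be a whole number, the tone produced is only an approximation of the requested pitch, and tones below about 18.2Hz cannot be produced at all.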

In modern computers, the memory controller in the chipset rather than the system timer handles refresh operations. Otherwise, the timer functions are much the same as they were with the first computers, and the same signals are available to programmers.

Interrupt Control

Timers and strange oscillator frequencies aren’t the only place where computers show their primitive heritage. Modern machines also carry over the old interrupt and direct memory access systems of the first personal computers, and it’s the chipset’s job to emulate these old functions. Most are left over from the design of the original PC expansion bus that became enshrined as Industry Standard Architecture. Although the newest computers don’t have any ISA slots, they need to incorporate the ISA functions for full compatibility with old software.

The hardware interrupts that signal microprocessors to shift their attention originate outside the chip. In the first personal computers, a programmable interrupt controller funneled requests for interrupts from hardware devices to the microprocessor. The first personal computer design used a type 8259A interrupt controller chip that accepted eight hardware interrupt signals. The needs of even basic computers quickly swallowed up those eight interrupts, so the engineers who designed the next generation of computers added a second 8259A chip. The interrupts from the new chip cascade into one interrupt input of the old one. That is, the new chip consolidates the signals of eight interrupts and sends them to one of the interrupt inputs of the chip used in the original design. The eight new interrupts, combined with the seven remaining on the old chip, yield a total of 15 available hardware interrupts.

The PCI bus does not use this interrupt structure, substituting a newer design that uses a four-wire system with serialized interrupts (discussed in Chapter 9, “Expansion Buses”).

The PCI system uses four interrupt lines, and the function of each is left up to the designer of each individual expansion board. The PCI specification puts no limits on how these interrupt signals are used (the software driver that services the board determines that) but specifies level-sensitive interrupts so that the four signals can be reliably shared. However, all computers that maintain an ISA legacy bus, which currently includes nearly all computers, mimic the older two-controller design for compatibility reasons. Several legacy functions, such as the keyboard controller, legacy ports, real-time clock, and IDE disk drives, still use these legacy interrupts.

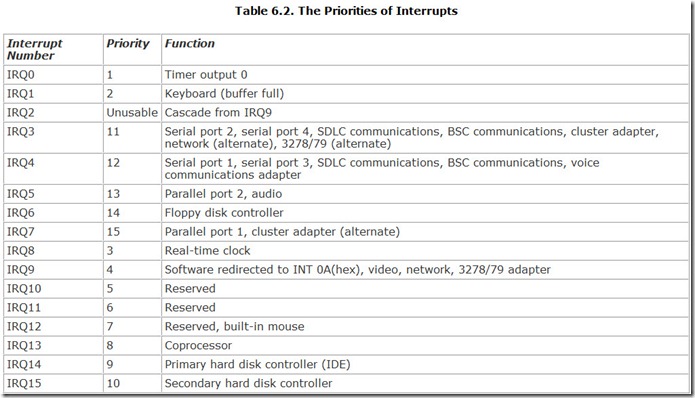

In this legacy scheme, each interrupt is assigned a priority, the more important one getting attention before those of lesser importance. In the original computer scheme of things with eight interrupts, the lower the number assigned to an interrupt, the higher the priority it got. The two-stage design in current systems, however, abridges the rule.

In the current cascade design, the second interrupt controller links to the input of the first controller that was assigned to interrupt 2 (IRQ2). Consequently, every interrupt on the second controller (that is, numbers 8 through 15) has a higher priority than those on the first controller that are numbered higher than 2. The hardware-based priorities of interrupts in current computers therefore range as listed in Table 6.2, along with the functions that usually use those interrupts.
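The ordering that results from the cascade can be derived mechanically. The following sketch simply splices the second controller's eight inputs into the slot occupied by IRQ2; the function name is illustrative.

```python
CASCADE_IRQ = 2  # input of the first controller that receives the second controller


def priority_order() -> list:
    """Return IRQ numbers from highest to lowest hardware priority."""
    first_controller = range(0, 8)
    second_controller = range(8, 16)
    order = []
    for irq in first_controller:
        if irq == CASCADE_IRQ:
            # All eight interrupts of the second controller arrive here,
            # so they inherit the priority slot of IRQ2.
            order.extend(second_controller)
        else:
            order.append(irq)
    return order


if __name__ == "__main__":
    print(priority_order())
```

The result lists 15 usable interrupts, with 8 through 15 sitting between IRQ1 and IRQ3.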

The original Plug-and-Play configuration system altered how computers assign interrupt numbers, making the assignments automatically without regard to the traditional values. In these systems, interrupt values and the resulting priorities get assigned dynamically when the computer configures itself. The values depend on the combination of hardware devices installed in such systems. Although the standardized step-by-step nature of Plug-and-Play and its Advanced Configuration and Power Interface (ACPI) successor usually assign the same interrupt value to the same devices in a given computer each time that computer boots up, they don’t have to. Interrupt values could (but usually do not) change day to day.

A few interrupt assignments are inviolable. In all systems, four interrupts can never be used for expansion devices. These include IRQ0, used by the timer/counter; IRQ1, used by the keyboard controller; IRQ2, the cascade point for the upper interrupts (which is redirected to IRQ9); and IRQ8, used by the real-time clock. In addition, all modern computers have microprocessors with integral floating-point units (FPUs) or accept external FPUs, both of which use IRQ13.

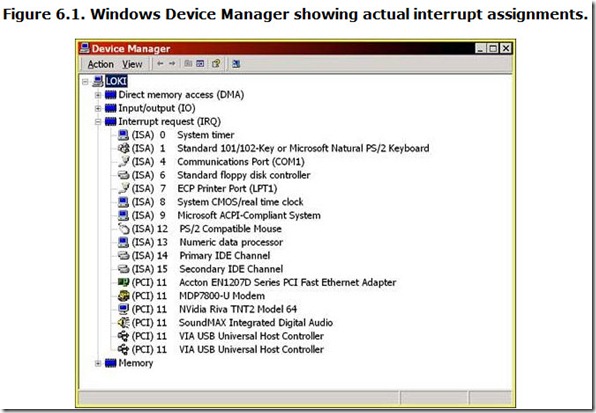

If you want to know what’s what, you can check interrupt assignment through Windows. To view the actual interrupt usage of your computer, you need to consult the Device Manager, available through the System icon in Control Panel. In Device Manager, select View, Resources from the drop-down menu or (under Windows 95, 98, and Me) the View Resources tab. Select your computer on the Device menu and click the Properties button. Click the Interrupt Request menu selection or radio button (depending on your version of Windows), and you’ll see a list of the actual interrupt assignments in use in your computer, akin to what’s shown in Figure 6.1.

Although 15 interrupts seem like a lot, this number is nowhere near enough to give every device in a modern computer its own interrupt. Fortunately, engineers found ways to let devices share interrupts. Although interrupt sharing is possible even with the older computer designs, interrupt sharing works best when the expansion bus and its support circuitry are designed from the start for sharing. The PCI system was; ISA was not.

When an interrupt is shared, each device that’s sharing a given interrupt uses the same hardware interrupt request line to signal to the microprocessor. The interrupt-handling software or firmware then directs the microprocessor to the action to take to service the device making the interrupt. The interrupt handler distinguishes between the various devices sharing the interrupt to ensure only the right routine gets carried out.
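One common way for a handler to tell sharing devices apart is to ask each one in turn whether it raised the request. The sketch below models that idea in software only; the `Device` class and its `pending` flag are hypothetical stand-ins for a real device's status register.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical device sharing one interrupt request line."""
    name: str
    pending: bool = False   # stands in for a status bit the handler can read
    serviced: int = 0       # how many times this device has been serviced

    def service(self) -> None:
        """Run this device's routine and clear its request."""
        self.serviced += 1
        self.pending = False


def handle_shared_interrupt(devices: list) -> list:
    """Poll every device on the line and service only those requesting attention."""
    handled = []
    for device in devices:
        if device.pending:
            device.service()
            handled.append(device.name)
    return handled


if __name__ == "__main__":
    sound = Device("sound")
    network = Device("network", pending=True)
    print(handle_shared_interrupt([sound, network]))
```

Only the device that actually made the request has its routine run; the others on the same line are left alone.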

Although software or firmware actually sorts out the sharing of interrupts, a computer expansion bus needs to be properly designed to make the sharing reliable. Old bus designs such as the ISA legacy bus use edge-triggered interrupts, a technique that is particularly prone to sharing problems. Modern systems use a different technology, level-sensitive interrupts, which avoids some of the pitfalls of the older technique.

An edge-triggered interrupt signals the interrupt condition as the transition of the voltage on the interrupt line from one state to another. Only the change—the edge of the wave form—is significant. The interrupt signal needs only to be a pulse, after which the interrupt line returns to its idle state. A problem arises if a second device sends another pulse down the interrupt line before the first has been fully processed. The system may lose track of the interrupts it is serving.

A level-sensitive interrupt signals the interrupt condition by shifting the voltage on the interrupt request line (for example, from low to high). It then maintains that shifted condition throughout the processing of the interrupt. It effectively ties up the interrupt line during the processing of the interrupt so that no other device can get attention through that interrupt line. Although it would seem that one device would hog the interrupt line and not share with anyone, the presence of the signal on the line effectively warns other devices to wait before sending out their own interrupt requests. This way, interrupts are less likely to be confused.
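The difference between the two schemes can be seen in a toy timeline model. This is a deliberately simplified sketch and not a description of any real interrupt controller: each request is a hypothetical `(time, device)` pair, and `service_time` is how long the line is tied up by one interrupt.

```python
def edge_triggered(arrivals: list, service_time: float) -> list:
    """Devices serviced when a pulse that arrives mid-service is simply lost."""
    serviced = []
    busy_until = float("-inf")
    for time, device in sorted(arrivals):
        if time < busy_until:
            # The pulse came and went while an earlier interrupt was
            # still being processed, so the system never notices it.
            continue
        serviced.append(device)
        busy_until = time + service_time
    return serviced


def level_sensitive(arrivals: list, service_time: float) -> list:
    """Devices serviced when a busy line tells later devices to wait."""
    serviced = []
    busy_until = float("-inf")
    for time, device in sorted(arrivals):
        # A device that finds the line held waits until it is released.
        start = max(time, busy_until)
        serviced.append(device)
        busy_until = start + service_time
    return serviced


if __name__ == "__main__":
    requests = [(0, "disk"), (1, "network")]
    print(edge_triggered(requests, service_time=5))
    print(level_sensitive(requests, service_time=5))
```

In this model the second request is dropped under edge triggering but merely delayed under level-sensitive signaling.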

Direct Memory Access

The best way to speed up system performance is to relieve the host microprocessor of all its housekeeping chores. Among the more time consuming is moving blocks of memory around inside the computer; for instance, shifting bytes from a hard disk (where they are stored) through its controller into main memory (where the microprocessor can use them). Today’s system designs allow computers to delegate this chore to support chips and expansion boards (for example, through bus mastering). Before such innovations, when the microprocessor was in total control of the computer, engineers developed Direct Memory Access (DMA) technology to relieve the load on the microprocessor by allowing a special device called the DMA controller to manage some device-to-memory transfers.

DMA was an integral part of the ISA bus design. Even though PCI lacks DMA features (and soon computers will entirely lack ISA slots), DMA technology survives in motherboard circuitry and the ATA disk interface. Consequently, the DMA function has been carried through into modern chipsets.

DMA functions through the DMA controller. This specialized chip only needs to know the base location of where bytes are to be moved from, the address to where they should go, and the number of bytes to move. Once it has received that information from the microprocessor, the DMA controller takes command and does all the dirty work itself. DMA operations can be used to move data between I/O devices and memory. Although DMA operations could in theory also expedite the transfer of data between memory locations, this mode of operation was not implemented in computers.
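In software terms, the three pieces of information the controller needs map onto a very small interface. The sketch below imitates a device-to-memory transfer using a `bytearray` as main memory; the function and parameter names are assumptions for illustration and do not correspond to real 8237A registers.

```python
def dma_transfer(memory: bytearray, device_data: bytes,
                 dest_address: int, count: int) -> int:
    """Copy `count` bytes from a device buffer into memory at `dest_address`.

    Models the three things the controller is told: where the bytes
    come from, where they go, and how many to move.
    """
    if count > len(device_data):
        raise ValueError("device cannot supply that many bytes")
    if dest_address < 0 or dest_address + count > len(memory):
        raise ValueError("transfer would run past the end of memory")
    for offset in range(count):
        memory[dest_address + offset] = device_data[offset]
    return count


if __name__ == "__main__":
    main_memory = bytearray(16)
    moved = dma_transfer(main_memory, b"\x01\x02\x03\x04", dest_address=4, count=3)
    print(moved, main_memory.hex())
```

The point of the hardware is that this loop runs in the controller, so the microprocessor issues one request instead of handling every byte itself.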

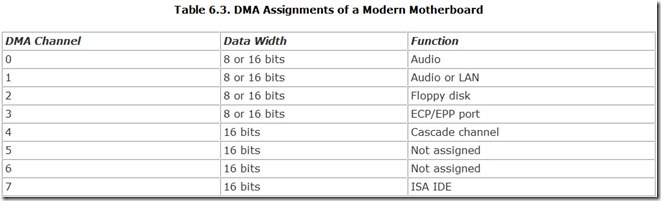

The current DMA system offers seven channels, based on the design of the IBM personal computer AT, which used a pair of type 8237A DMA controller chips. As with the interrupt system, the prototype DMA system emulated by modern chipsets used two four-channel DMA controllers, one cascaded into the other. Each DMA channel is 16 bits wide. Table 6.3 summarizes the functions commonly assigned to the seven DMA channels.

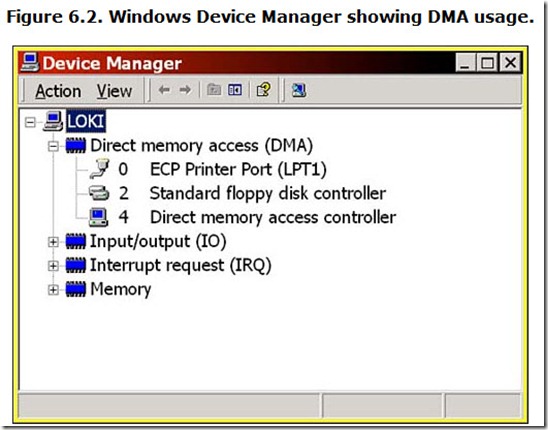

You can check the DMA assignment of your computer much as you do interrupts, through the Device Manager. Simply select DMA from the drop-down menu or radio button instead of Interrupts. You should see your computer’s current DMA assignments, listed much like Figure 6.2.

In general, individual tasks use only one DMA channel at a time. When you run multiple tasks at the same time, nothing changes under Windows 95, 98, or Me, because they serialize I/O. That means those operating systems handle only one I/O task at a time and switch between them. Only a single path for data is available. In rare instances, however, a path may have a DMA channel at both ends (which puts two channels into operation simultaneously). Windows NT and 2000 know no such limits and can run multiple I/O tasks and use multiple DMA channels.

Bus Interface

In early computers, the expansion bus was nothing more than an extension of the connections on the microprocessor. In modern designs, the bus is a free-standing entity with its own bus controller separate from the microprocessor. In fact, the microprocessor connects to the bus as if it were just another expansion device. All modern chipsets use this latter design to control the expansion bus. Not only does the chipset take on the management of the PCI bus, but it also uses this decentralized control system for the legacy ISA bus, should one be used in your computer. The chipset takes over the role of the microprocessor in the traditional ISA system. In fact, the entire ISA bus gets treated as if it were just another PCI device.

The PCI bridge in the chipset handles all the functions of the PCI bus. Circuitry built in to the PCI bridge, independent of the host microprocessor, operates as a DMA master. When a program or the operating system requests a DMA transfer, the DMA master intercepts the signal. It translates the commands bound for the 8237A DMA controller in older computers into signals compatible with the PCI design. These signals are routed through the PCI bus to a DMA slave, which may in turn be connected to the ISA compatibility bus, PCMCIA bus, disk drives, or I/O ports in the system. The DMA slave interprets the instructions from the DMA master and carries them out as if it were an ordinary DMA controller.

In other words, to your software the DMA master looks like a pair of 8237A DMA controller chips. Your hardware sees the DMA slave as an 8237A that’s in control. The signal translations to make this mimicry possible are built in to the PCI bridges in the chipset.

Power Management

To economize on power to meet Energy Star standards or prolong the period of operation on a single battery charge, most chipsets—especially those designed for notebook computers—incorporate power-management abilities. These include both manual power control, which lets you slow your computer or suspend its operation, and automatic control, which reduces power similarly when it detects system inactivity lasting for more than a predetermined period.

Typically, when a chipset switches to standby state, it will instruct the microprocessor to shift to its low-power mode, spin down the hard disk, and switch off the monitor. In standby mode, the chipset itself stays in operation to monitor for alarms, communications requests (answering the phone or receiving a fax), and network accesses. In suspend mode, the chipset itself shuts down until you reactivate it, and your computer goes into a vegetative state.

By design, most new chipsets conform to a variety of standards. They take advantage of the System Management Mode of recent Intel microprocessors and use interrupts to direct the power conservation.

Peripheral Control

If your computer didn’t have a keyboard, it might be less intimidating, but you probably wouldn’t find it very useful. You might make do, drawing pictures with your mouse or trying to teach it to recognize your handwriting, but some devices are so integral to using a computer that you naturally expect them to be a part of it. The list of these mandatory components includes the keyboard (naturally), a mouse, a hard disk, a CD drive, ports, and a display system. Each of these devices requires its own connection system or interface. In early computers, each interface used a separate expansion board that plugged into a slot in the computer. Worse than that, you often had to do the plugging.

You probably don’t like the sound of such a system, and computer manufacturers liked it even less. Individual boards were expensive and, with most people likely not as capable as you in plugging in boards, not very reliable. Clever engineers found a clever way to avoid both the problems and expenses. They built the required interfaces into the chipset. At one time, all these functions were built into a single chip called the peripheral controller, an apt description of its purpose. Intel’s current chipset design scheme puts all the peripheral control functions inside the I/O controller hub.

As with the system-control functions, most of the peripheral interface functions are well-defined standards that have been in use for years. The time-proven functions usually cause few problems in the design, selection, or operation of a chipset. Some interfaces have a more recent ancestry, and if anything will cause a problem with a chipset, they will. For example, the PCI bus and ATA-133 disk interface (described in Chapter 10, “Interfaces”) are newcomers when compared to the decade-old keyboard interface or even-older ISA bus. When a chipset shows teething pains, they usually arise in the circuits relating to these newer functions.

Hard Disk Interface

Most modern chipsets include all the circuitry needed to connect a hard disk to a computer, typically following an enhancement of the AT Attachment standard, such as UDMA. Although this interface is a straightforward extension of the classic ISA bus, it requires a host of control ports for its operation, all provided for in the chipset. Most chipsets now connect the ISA bus through their PCI bridges, which may or may not be an intrinsic part of the south bridge.

The chipset thus determines which modes of the AT Attachment interface your computer supports. Intel’s current chipsets, for example, support all ATA modes up to and including ATA/100. They do not support ATA/133, so computers based on Intel chipsets cannot take full advantage of the highest speeds of the latest ATA (IDE) hard disk drives.

Floppy Disk Controller

Chipsets often include a variety of other functions to make the computer designer’s life easier. These can include everything from controls for indicator lights for the front panel to floppy disk controllers. Nearly all chipsets incorporate circuitry that mimics the NEC 765 floppy disk controller used by nearly all adapter boards.

In that the basic floppy disk interface dates back to the dark days of the first computers, it is a technological dinosaur. Computer-makers desperately want to eliminate it in favor of more modern (and faster) interface alternatives. Some software, however, attempts to control the floppy disk by reaching deep into the hardware and manipulating the floppy controller directly through its ports. To maintain backward compatibility with new floppy interfaces, the chipset must still mimic this original controller and translate commands meant for it to the new interface.

Keyboard Controller

One additional support chip is necessary in every computer: a keyboard decoder. This special-purpose chip (an Intel 8042 in most computers, an equivalent chip, or part of a chipset that emulates an 8042) links the keyboard to the motherboard. The primary function of the keyboard decoder is to translate the serial data that the keyboard sends out into the parallel form that can be used by your computer. As it receives each character from the keyboard, the keyboard decoder generates an interrupt to make your computer aware you have typed a character. The keyboard decoder also verifies that the character was correctly received (by performing a parity check) and translates the scan code of each character. The keyboard decoder automatically requests the keyboard to retransmit characters that arrive with parity errors.
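The serial-to-parallel conversion and parity check can be sketched as follows. The frame layout here is an assumption not stated in the text: an 11-bit AT-style frame with a start bit of 0, eight data bits sent least significant bit first, an odd-parity bit, and a stop bit of 1. The function name is illustrative.

```python
def decode_frame(bits: list):
    """Turn one 11-bit serial keyboard frame into a byte.

    Returns the byte value, or None if the frame is malformed or fails
    the parity check (in which case the caller would ask the keyboard
    to retransmit).
    """
    if len(bits) != 11:
        raise ValueError("expected an 11-bit frame")
    start, data, parity, stop = bits[0], bits[1:9], bits[9], bits[10]
    if start != 0 or stop != 1:
        return None
    # Odd parity: data bits plus the parity bit must contain an odd number of 1s.
    if (sum(data) + parity) % 2 != 1:
        return None
    value = 0
    for position, bit in enumerate(data):
        value |= bit << position  # data arrives least significant bit first
    return value


if __name__ == "__main__":
    frame = [0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1]
    print(hex(decode_frame(frame)))
```

A frame with a single flipped data bit fails the parity test and comes back as `None`, which is the cue to request a retransmission.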

USB-based keyboards promise the elimination of the keyboard controller. These pass packets of predigested keyboard data through the USB port of your computer instead of through a dedicated keyboard controller. In modern system design, the keyboard controller is therefore a legacy device and may be eliminated from new systems over the next few years.

Input/Output Ports

The peripheral control circuitry of the chipset also powers most peripheral ports. Today’s computers usually have legacy serial and parallel ports in addition to two or more USB connections. As with much else of the chipset design, the legacy ports mimic old designs made from discrete logic components. For example, most chipsets emulate 16550 universal asynchronous receiver/transmitters (UARTs) to operate their serial ports.