In this chapter…

Old-Style Design Process

New-Style Design Process

Verifying the Design Works

Using Outside IP

Getting to Tape Out and Film

Current Problems and Future Trends

Chip design has come a long way since the first semiconductor chips were assembled, literally, by hand. Rarely does a profession reinvent itself so often and so fundamentally. New chips today are designed in a completely different manner from those of just 10 years ago; it’s almost certain that the job description will change again in another 10 years.

New chips are designed, naturally enough, with the help of computers. These computers (often called engineering workstations) and the chips inside them were themselves designed this way, providing a nice circular closure to the entire process. The computers that chip-design engineers use are not fundamentally different from a normal PC, and even the specialized software would not look too alien to a casual PC user.

That software is breathtakingly expensive, however, and supports a multibillion-dollar industry all by itself. Called electronic design automation (EDA), the business of creating and selling chip-design software employs thousands of computer programmers around the world. Chip-design engineers rely on their computers and their EDA software “tools” the way a carpenter relies on a collection of specialized tools. A handful of large EDA vendors, notably Synopsys (Mountain View, California), Mentor Graphics (Portland, Oregon), and Cadence Design Systems (San Jose, California), are the equivalent of Craftsman, Stanley, and Black & Decker. There are also numerous smaller “boutique” EDA vendors that supply special-purpose software tools to chip designers in niche markets.

Regardless of the tools a chip designer uses, the goal is always the same: to create a working blueprint for a new chip and get it ready for manufacturing. In the chip-design world, that’s called “getting to film,” as film is the ultimate goal of a chip designer. The film (which is really not film any more, as we shall see) is used by chip makers in their factories to actually manufacture the chip, a process that’s described in the next chapter.

Old-Style Design Process

Before personal computers, EDA, and automated tools, engineers originally used rubylith, a red plastic film sold by art-supply houses that is still used today by sign makers and graphic artists. Early chip designers would cut strips of rubylith with X-acto knives and tape them to large transparent sheets hanging on their wall. Each layer of silicon or aluminum in the final chip required its own separate sheet, covered with a criss-crossing pattern of taped-on stripes.

By laying two or more of these transparent sheets atop one another and lining them up carefully, you could check that the rubylith on one sheet touched the rubylith on another at exactly the right points. You’d also make sure the strips didn’t touch anywhere else, because an unintended contact would create an electrical short in the actual chip. This was painstaking work, to be sure, but such are the tribulations of the pioneers. The whole process was a bit like designing a tall building by drawing each floor on a separate sheet and stacking the sheets to be sure the walls, wiring, stairs, and plumbing all match up precisely.

This task was called taping out, for reasons that are fairly obvious. Tape out was (and still is) a big milestone in every chip-design project. Once you’d taped out, you were nearly done. All the preliminary design work was complete, all the calculations were checked and double-checked, and all the planning was finished. About the only thing left to do was to wait for the chip to be made.

One last step remained, however, before you could get excited about waiting for silicon. You had to make film from your oversized rubylith layers. This was a simple photographic reduction process. Each rubylith-covered layer was used as a mask, projecting criss-crossed shadows onto a small film negative. It worked just like a slide projector showing vacation snapshots on a big screen, except that instead of making the images bigger, the reduction process made them smaller. Each rubylith layer was projected onto its own film negative, exactly the size of the chip itself, less than one inch on a side. The result was a film set that you could send for fabrication.

New-Style Design Process

Today the process is radically different, although no less painstaking or complex. Modern chip-design tools have done away with the error-prone manual work of taping up individual layers, but they have shifted the workload rather than eliminated it. Modern multimillion-transistor chips supply more than enough new challenges to make up the difference.

EDA Design Tools

Modern chips are far too complex to design manually. No single engineer can personally understand everything that goes on inside a new chip. Even teams of engineers have no single member who truly understands all the details and nuances of the design. One person might manage the project and command the overall architecture of the chip, but individual engineers will be responsible for portions of the detailed design. They must work together as a team, and they must rely on and trust each other as well as their tools.

Those tools are all computer programs. Just as an experienced carpenter will accumulate a toolbox full of favored tools, chip designers develop a repertoire of computer programs that aid in various aspects of chip design. No single EDA tool can take a chip design from start to finish, just as no carpenter’s tool can do every job. The assortment is important, and using a mixture of EDA tools is part of an engineer’s craft.

Schematic Capture

Schematic diagrams are the time-honored way of representing electrical circuits. At least that’s true for simple electrical circuits. Schematics, or wiring diagrams, are like subway maps. They draw, more or less realistically, the actual arrangement of wires and components (lines and stations). The diagram might be stylized a bit for easier understanding, like the classic route map for the London Underground, but for the most part, schematics are accurate maps of circuit connections, as shown in Figure 3.1.

Drawing a schematic on paper doesn’t do an engineer any good if the goal is to ultimately transfer that design into film to have a chip made. Instead, schematics are drawn on a computer screen using schematic-capture software. With this, you can drag and drop symbols for various electrical components (adders, resistors, etc.) across your screen and then connect them by drawing wires between them. The schematic-capture software takes care of the simpler annotation chores like labeling each function and making sure no wires are left dangling in midair. Schematic-capture software is a bit like word-processing software in that it won’t provide inspiration or talent or create designs from nothing, but it will catch common mistakes and keep your workspace free from embarrassing erasure marks.

A number of companies supply schematic-capture software, but most vendors are small firms and their ranks are dwindling. Modern chips are too complex to be designed this way, not because the schematic-capture programs can’t handle it, but because the engineers can’t. Designing a multimillion-transistor chip using schematic-capture software would be like painting a bridge with a tiny artist’s brush and palette. There’s just far too much area to cover and not enough time.

Hardware Synthesis

To alleviate some of the tedium of designing a chip bit by bit, engineers have turned to a new method, called hardware synthesis. Synthesis is not quite as space-age as it might sound: Chips are not magically synthesized from thin air. Instead, engineers feed their computers instructions about the chip’s organization and the computer generates the detailed circuit designs. (The exact method for doing this is described in the next section.) The engineer still has to design the chip, just not at such a detailed level. It’s like the difference between describing a brick wall brick by brick and inch by inch, and telling an assistant, “Build me a brick wall that’s three feet high by 10 feet long.” If you have an assistant you trust who is skilled in bricklaying, you should get the same result either way.

Theoretically, that’s true of hardware synthesis as well, but the reality is somewhat different. Although today’s chip designers have overwhelmingly adopted hardware synthesis for their work, they still grumble about the trade-offs. For example, synthesized designs tend to run about 20 to 30 percent slower than “handcrafted” chip designs. Instead of running at 500 MHz, a synthesized chip might run at only 400 MHz. That’s fine if it’s a low-cost chip that really only needs to run at, say, 250 MHz. However, it’s a showstopper if you’re selling prestigious high-end microprocessors for personal computers. There are a few types of chips, therefore, that are still not designed using hardware synthesis because the vendor can’t afford the reduction in performance.

Another drawback of hardware synthesis is that the resulting chips are often about 30 to 50 percent larger in terms of silicon real estate. More silicon means more cost, both because each chip uses more raw material and because fewer chips fit on a round silicon wafer. Bigger chips also mean lower manufacturing yields, because a larger chip is more likely to contain at least one fatal manufacturing defect. Once again, there are certain chip makers that don’t use hardware synthesis because they’re shaving every penny of cost possible.
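To see why a larger die costs more than its extra area alone suggests, here is a rough Python sketch. It uses a common first-order dies-per-wafer approximation together with the simple Poisson yield model. The wafer size, defect density, and die areas are hypothetical numbers picked for illustration, not figures from any real process.

```python
import math

def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Common first-order estimate of whole dies on a round wafer:
    wafer area / die area, minus the partial dies lost around the edge."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)

def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Simple Poisson yield model: chance that a die catches zero defects."""
    return math.exp(-defects_per_mm2 * die_area_mm2)

def good_dies(wafer_diameter_mm: float, die_area_mm2: float, defects_per_mm2: float) -> float:
    """Expected number of working dies per wafer."""
    return dies_per_wafer(wafer_diameter_mm, die_area_mm2) * poisson_yield(die_area_mm2, defects_per_mm2)

# Hypothetical numbers: 200 mm wafer, 0.005 defects per mm^2, and a
# 100 mm^2 handcrafted die vs. the same design synthesized 40% larger.
for label, area in (("handcrafted", 100.0), ("synthesized", 140.0)):
    print(label, dies_per_wafer(200, area), round(good_dies(200, area, 0.005), 1))
```

With these assumed numbers, the 40 percent larger die gives roughly 186 candidate dies per wafer instead of 269, and about 92 working dies instead of 163. The cost per good chip rises faster than the area does, because the two penalties multiply.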

Third, chips made from synthesized designs tend to use more electricity than do manually designed chips. That’s a side effect of the larger silicon size previously mentioned. More silicon means more power drained, and the difference can be 20 percent or more. For extremely low-power chips, such as the ones used in cellular telephones, handcrafted chips are still popular.

Despite all these serious drawbacks, most new chips are created from synthesized designs simply because there’s no other way. Even big engineering teams need the help of hardware-synthesis programs to finish a large chip in a reasonable amount of time. It takes a long time to finish a cathedral if you’re laying every brick by hand.

Companies will often use both design styles, synthesis and handcrafting. The first generation of a new chip will usually be designed with hardware-description languages (described next) and hardware synthesis, to get the chip out the door and onto the market as quickly as possible. After the chip is released (assuming that it sells well), the company might order its engineers to revise or redesign the chip, this time using more labor-intensive methods to shrink the silicon size, reduce power consumption, and cut manufacturing costs. This “second spin” of the chip will often appear six to nine months after the first version. When microprocessor makers announce faster, upgraded versions of an existing chip, this is often how the new chip was created.

Hardware-Description Languages

Schematics are fine up to a point, but they’re too detail-oriented for large-scale designs, and modern chips have become very large-scale designs indeed. Instead, engineering teams need something that’s more high-level and more abstract; something that allows them to think big and avoid getting bogged down in so many of the electrical details of what they’re creating. What they’d like is some way to describe what they want, and have a tool magically produce it.

Enter hardware-description languages (HDLs), the next step up the evolutionary ladder from schematic-capture programs. HDLs also enable hardware synthesis. Using an HDL, engineering teams can design the behavior of a circuit without exactly designing the circuit itself in detail. The HDL tool will translate the engineers’ wishes (albeit very specific and carefully defined wishes) into a circuit design. Instead of using schematic-capture software to draw an adder and all of its attendant wiring, an HDL user can simply specify that he or she wants two numbers added together. The HDL tool handles the detail work of selecting an adder and wiring it up.
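To make the adder example concrete, here is a toy Python sketch of what an HDL tool does with a request like “add a and b”: it expands the request into a gate-level ripple-carry adder, then checks the generated circuit against ordinary addition. This is purely illustrative. The function and net names are hypothetical, and real synthesis tools pick cells from a foundry’s library and may choose faster adder architectures than the simple ripple-carry design shown here.

```python
from itertools import product

def synthesize_adder(width: int):
    """Toy 'synthesis': expand the request sum = a + b into a ripple-carry
    adder. Returns (output_net, gate_type, (input_net, input_net)) tuples,
    listed so that every gate appears after the gates that drive it."""
    gates = []
    carry = None
    for i in range(width):
        a, b = f"a{i}", f"b{i}"
        if carry is None:
            # Bit 0 has no carry in, so a half adder is enough.
            gates.append((f"s{i}", "XOR", (a, b)))
            gates.append((f"c{i}", "AND", (a, b)))
        else:
            # Full adder built from two XORs, two ANDs, and an OR.
            gates.append((f"p{i}", "XOR", (a, b)))
            gates.append((f"s{i}", "XOR", (f"p{i}", carry)))
            gates.append((f"g{i}", "AND", (a, b)))
            gates.append((f"t{i}", "AND", (f"p{i}", carry)))
            gates.append((f"c{i}", "OR", (f"g{i}", f"t{i}")))
        carry = f"c{i}"
    return gates

OPS = {"AND": lambda x, y: x & y, "OR": lambda x, y: x | y, "XOR": lambda x, y: x ^ y}

def evaluate(gates, width: int, a: int, b: int) -> int:
    """Drive the input nets, evaluate the gates in order, read back the result."""
    nets = {}
    for i in range(width):
        nets[f"a{i}"] = (a >> i) & 1
        nets[f"b{i}"] = (b >> i) & 1
    for out, op, (x, y) in gates:
        nets[out] = OPS[op](nets[x], nets[y])
    result = sum(nets[f"s{i}"] << i for i in range(width))
    return result | (nets[f"c{width - 1}"] << width)  # final carry is the top bit

WIDTH = 4
adder = synthesize_adder(WIDTH)
print(len(adder), "gates")
# Exhaustive check: the generated circuit must agree with plain addition.
assert all(evaluate(adder, WIDTH, a, b) == a + b
           for a, b in product(range(2 ** WIDTH), repeat=2))
```

The one-line request grows quickly: a 4-bit addition becomes 17 gates in this scheme, and a 32-bit one becomes 157, which is exactly the detail work the HDL user no longer draws by hand.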

HDLs are far from magical. Like all computer programs, they’re very literal minded and can misinterpret their user’s wishes. Engineers spend years learning to use HDLs effectively and have to be very methodical about defining what they want. The bigger the chip design, the greater the opportunity for failure, so the greater the pressure to make sure everything’s right.

HDLs look nothing like schematic capture. Instead of drawing figures on a screen, using an HDL is more like writing. It’s a hardware-description language, after all. Engineers use an HDL to tell their computer what kind of circuit they want, in the form of a step-by-step procedure. The computer then interprets the steps and produces an equivalent circuit diagram. If schematics are blueprints, HDLs are recipes.

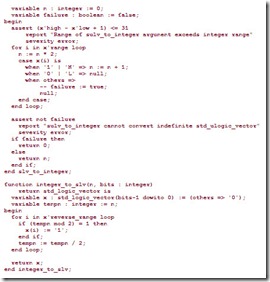

A sample of HDL code is shown in Figure 3.2. For a large chip, this procedural description can be hundreds of thousands of lines long, longer than most novels, because it has to describe absolutely everything the chip does under all circumstances.

If you’re familiar with computer programming and software, you’ve probably already recognized the concept of HDLs; they’re programming languages. Paradoxically, HDLs are a software approach to a hardware problem—they’re a way to “write” new hardware.

The HDL Leaders: VHDL and Verilog

The two most common HDLs are called VHDL and Verilog. Practically all new chips are designed using one of these HDLs. The two languages are quite similar, but both have devoted practitioners who’d argue the merits of their chosen HDL with an uncommon zeal.

Both of these languages were developed in the United States, although both are used worldwide. Interestingly, there is a definite geographic division between VHDL users and Verilog users. Verilog aficionados seem to be clustered around the western United States and Canada, whereas VHDL holds sway in Europe and New England. Asian users seem to be evenly split. (Regardless of the local tongue, the VHDL and Verilog languages always use English words and phrases to describe hardware.)

Tech Talk

Verilog is a few years older than VHDL. First developed in 1983, Verilog was for some time a proprietary HDL belonging to Cadence Design Systems. VHDL, on the other hand, was created as an open language and became an Institute of Electrical and Electronics Engineers (IEEE) standard in 1987. Sensing that VHDL’s standard status would jeopardize its investment in Verilog, Cadence put its HDL in the public domain in 1990 and applied for IEEE approval, which it gained in 1995. Since 2001, both sides have been working to unify the languages and add new features to address the challenges of next-generation chips.

Although neither VHDL nor Verilog belongs to anyone as such, several EDA vendors compete to sell the tools that convert engineers’ HDL descriptions into working circuit designs. These are essentially translation programs, converting from one language (VHDL or Verilog) to another (circuit schematics). As with any translation program or service, there is fierce competition over how accurate or efficient those translations are. Some engineers want the fastest translation because they plan to do several translations per day. Others care more about the efficiency of the resulting circuit. In HDL terms, this means translating the original HDL into the fewest transistors possible, something like translating German into dense, terse English. Still other customers might want the opposite: a translator that produces long, flowing passages with lots of footnotes and annotations, corresponding to a circuit that’s large and uses lots of transistors but is easy to understand and pick apart for future designs.

Tech Talk

Register-transfer level (RTL) is a generic term that covers both VHDL and Verilog. RTL is not a language; it’s a “zoom level” that’s not quite as detailed as individual transistors but not quite as high level as a complete chip. It’s an intermediate level of detail that works well for today’s electronics engineers. Looking at an RTL description (e.g., VHDL or Verilog), a good engineer can divine what a circuit is going to do, but not exactly how it will do it. Like courtroom shorthand, RTL conveys just enough information to get the message across.

Alternate HDLs

VHDL and Verilog are by no means the only HDLs available, although they are clearly the two top players in the HDL market. Because of their age and rapid increases in chip complexity, both languages have begun to crack a little bit under the strain of modern chip design. Some engineers argue that designing a 10-million-transistor chip using VHDL or Verilog is little better than the rubylith methods from the 1970s. These engineers turn to a number of alternative HDLs.

HDLs come and go, but a few that seem to have reached critical mass in the EDA market are Superlog, Handel-C, and SystemC. Superlog, as the name implies, is a pumped-up version of Verilog. It’s a superset of the language that adds some higher-level, more abstract features. Superlog allows designers experienced in Verilog to handle larger chip designs without going crazy with details.

Handel-C and SystemC are both examples of a more radical approach to HDLs. They take the heretical stance that, because popular HDLs like Verilog and VHDL are already programming languages of a sort, engineers might as well use an actual programming language in their place. In other words, they use the C programming language (or, in SystemC’s case, its descendant C++) to define hardware as well as software. This has the advantage that millions of programmers already know C, and thousands more students learn it every year. Ideally, it might also help close some of the traditional gap between hardware and software engineers.

The C-into-hardware approach has some philosophical appeal but has met with raucous resistance from precisely the hardware engineers it is meant to entice. Programming languages such as C, BASIC, Java, and the rest were never designed to create—or even adequately describe—hardware, the argument goes, so they would naturally do a terrible job at it. It’s like composing love sonnets in Klingon, you might say. Some of the resistance to this approach might just be the natural impulse to protect one’s livelihood, or it might really be a terrible idea. Time will tell.

Tech Talk

Even the strongest backers of the C-as-hardware-language movement realize that the original C programming language isn’t well suited to the job. They’ve all added various extensions to the language to help express parallelism, the ability of electronic circuits to perform multiple functions simultaneously, which programming languages like C can’t describe. Many have also added “libraries” of common hardware functions so that engineers don’t have to create them from scratch. The results clearly do work, but opinions vary widely as to the efficiency and performance of the resulting design.
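The parallelism problem is easy to see in a few lines of Python. In hardware, every register loads its new value at the same clock edge, computed from the values held just before that edge (Verilog expresses this with its nonblocking `<=` assignment). Ordinary sequential code instead lets each statement see the result of the one before it. The sketch below contrasts the two on a hypothetical three-stage shift register; it illustrates the semantics only and is not how any particular C-based HDL implements them.

```python
def clock_edge(state, transfers):
    """Hardware-style register transfer: every right-hand side is evaluated
    against the values held *before* the clock edge, then all registers
    load their new values at the same instant."""
    updates = {reg: compute(state) for reg, compute in transfers.items()}
    return {**state, **updates}

# A three-stage shift register: each stage copies the one before it.
SHIFT_REGISTER = {
    "q1": lambda s: s["din"],
    "q2": lambda s: s["q1"],
    "q3": lambda s: s["q2"],
}

def sequential_version(state):
    """The same three assignments as ordinary sequential code: each line
    sees the previous line's result, so din races through every stage at once."""
    s = dict(state)
    s["q1"] = s["din"]
    s["q2"] = s["q1"]
    s["q3"] = s["q2"]
    return s

start = {"din": 1, "q1": 0, "q2": 0, "q3": 0}
print(clock_edge(start, SHIFT_REGISTER))  # din has reached only q1
print(sequential_version(start))          # din has reached q3 in one step
```

The hardware version needs three clock edges to move the bit to `q3`; the sequential version collapses the whole pipeline into one step, which is precisely the behavior C-based HDLs must extend the language to avoid.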

Producing a Netlist

Whether engineers use schematic capture or an HDL, their input will eventually be translated into something called a netlist. A netlist is literally a list of nets, where each net is one electrical node: the set of component pins that are wired together. Taken together, the nets record which circuits are connected to which other circuits. A netlist is generally readable only by a computer; it’s too convoluted and condensed to be of any use to a person. The netlist is just an intermediate stop along the way to a new chip.
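A netlist is easy to picture as a data structure. The Python sketch below assumes a hypothetical four-gate design (a 2-to-1 multiplexer built from NAND gates), inverts its list of gate instances into a netlist that maps each net to the (instance, pin) pairs wired to it, and then answers the basic netlist question: which circuits connect to which? Real tools write netlists in standard formats such as EDIF or structural Verilog, with far more detail than this.

```python
from collections import defaultdict

# Hypothetical gate-level design: a 2-to-1 multiplexer built from four NAND gates.
# Each instance: name -> (library cell, {pin: net it is wired to}).
INSTANCES = {
    "U1": ("NAND2", {"A": "sel", "B": "sel", "Y": "nsel"}),
    "U2": ("NAND2", {"A": "a", "B": "nsel", "Y": "n1"}),
    "U3": ("NAND2", {"A": "b", "B": "sel", "Y": "n2"}),
    "U4": ("NAND2", {"A": "n1", "B": "n2", "Y": "out"}),
}

def build_netlist(instances):
    """Invert the instance view into a netlist: net name -> every (instance, pin) on it."""
    nets = defaultdict(list)
    for inst, (_cell, pins) in instances.items():
        for pin, net in pins.items():
            nets[net].append((inst, pin))
    return dict(nets)

def connected_to(netlist, inst):
    """Instances that share at least one net with `inst`."""
    found = set()
    for pins in netlist.values():
        members = {name for name, _pin in pins}
        if inst in members:
            found |= members - {inst}
    return sorted(found)

netlist = build_netlist(INSTANCES)
for net, pins in netlist.items():
    print(net, pins)
print(connected_to(netlist, "U2"))
```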

Floor Planning

After a chip’s circuit design is created, it’s time to start getting physical. Because the ultimate goal is to produce film, which will then be used to fabricate a chip, there comes a point when the design ceases being an abstract schematic or HDL description and starts to take on a real shape. It’s the point where architecture becomes floor planning. In fact, that’s what it’s called: chip floor planning.

Here a whole new software tool comes out of the engineer’s toolbox. A floor-planning program takes the netlist, counts the number of electronic functions and features that are in it, tallies up the wires needed to connect it all, and estimates how much silicon real estate the chip will cover. Naturally, the more complex the chip design, the bigger the chip will be. However, other factors influence the size of the chip, too, such as precisely what company will be manufacturing the chip and how fast it needs to run. (Slow-running chips can sometimes be packed more densely than high-performance chips, saving silicon area and cost.) Many factors affecting a chip’s size and shape are hard to predict, even for the engineers who designed it. If a certain portion of the chip needs to connect to all the other parts of the chip, all those wires, tiny as they are, will take up space and make the chip bigger.

The real job of the floor-planning program is to find the optimal arrangement for all the parts of the chip. Which circuits should be near which other circuits? What’s the best way to shorten the wires? How will electricity be distributed among all the portions of the chip? Are there any sensitive radio receivers or optical sensors on the chip that need to be isolated from other areas? Of course, the floor planner also needs to pack this all into a rectangular (and ideally square) chip design. Square chips fit best on a round silicon wafer, yielding more useful chips per wafer and lowering overall manufacturing costs.
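One question a floor planner must answer, how to keep the wires short, can be sketched with half-perimeter wirelength (HPWL), a widely used quick estimate of wire length. The Python below places four hypothetical blocks on a 2 x 2 grid by brute force. It is only a toy: real floor planners also weigh area, power distribution, and isolation, and they rely on heuristics rather than exhaustive search.

```python
from itertools import permutations

# Hypothetical chip with four major blocks and the (two-pin) nets joining them.
BLOCKS = ["cpu", "cache", "dram_ctl", "io"]
NETS = [("cpu", "cache"), ("cpu", "cache"), ("cache", "dram_ctl"), ("cpu", "io")]
# Four candidate locations on a 2 x 2 grid, (x, y) in arbitrary units.
SLOTS = [(0, 0), (1, 0), (0, 1), (1, 1)]

def hpwl(placement, nets):
    """Half-perimeter wirelength: for each net, half the perimeter of the
    smallest box enclosing its blocks, summed over all nets."""
    total = 0
    for net in nets:
        xs = [placement[block][0] for block in net]
        ys = [placement[block][1] for block in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total

def best_floorplan(blocks, nets, slots):
    """Brute force: try every block-to-slot assignment, keep the shortest wiring.
    Only feasible for toy sizes; the search grows factorially with block count."""
    best_cost, best_placement = None, None
    for perm in permutations(slots, len(blocks)):
        placement = dict(zip(blocks, perm))
        cost = hpwl(placement, nets)
        if best_cost is None or cost < best_cost:
            best_cost, best_placement = cost, placement
    return best_cost, best_placement

cost, placement = best_floorplan(BLOCKS, NETS, SLOTS)
print(cost, placement)
```

The winning arrangement puts the cache next to the CPU, which the two CPU-to-cache nets make worth twice as much as any other adjacency. Putting them on opposite corners instead costs 6 units rather than 4.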

Place and Route

After the chip has been floor-planned (a new verb coined by chip engineers), it’s time for the detail work: routing all the tiny wires that connect the chip’s various parts. Floor-planning blocks out the rough shape of the chip and organizes its major components. Place and route (sometimes shortened to P&R) works out the messy details of exactly where every transistor, capacitor, wire, and resistor actually goes. It is as detailed a blueprint of the chip as will ever exist. If all goes well, place and route is the last step in designing the chip before its creators hand it off to be manufactured.

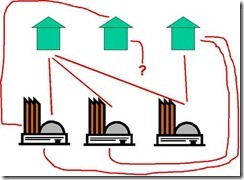

Unfortunately, things rarely go well when a chip is placed and routed for the first time. Place and route software is horribly expensive because its task is so difficult. These programs must manage millions of details, all interconnected with one another, and find the optimal physical arrangement of the pieces without disturbing any of the connections among them. Consider the child’s puzzle of the three houses and three utilities shown in Figure 3.3: connect each of the three houses to each of the three utilities (gas, water, and electricity, say) without letting any two lines cross.

If you haven’t solved it after the first few days, don’t feel too bad. The task has been mathematically proven to be impossible on a flat sheet of paper. To connect all the houses you’d need to change the rules and use two sheets of paper to build “overpasses” over some of your lines. Just as freeways use overpasses to cross the roads below them, leaving the flat page and working in three dimensions is the only way to solve the problem.
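The impossibility proof is short enough to sketch. Treat the houses and utilities as the six vertices of a graph and the nine connecting lines as its edges (mathematicians call this graph K3,3). If it could be drawn on a flat sheet without crossings, Euler’s formula for planar drawings, V - E + F = 2 (counting the outside region as one of the F faces), would apply. Because every line joins a house to a utility, any region’s boundary must alternate between houses and utilities, so each region is bounded by at least four lines, and each line borders at most two regions:

```latex
\begin{aligned}
F &= 2 - V + E = 2 - 6 + 9 = 5, \\
4F &\le 2E \;\Longrightarrow\; F \le \frac{2 \cdot 9}{4} = 4.5 .
\end{aligned}
```

The two results contradict each other, so no crossing-free drawing exists. A second sheet of paper (or a second metal layer) removes the contradiction by letting lines pass over one another.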

Now imagine the same puzzle with 1 million houses and 1 million utilities, and you begin to get an idea of the problem facing place and route programs. They will take hours, and often days, working on the problem of how to connect all the parts of a chip without dropping a single connection or making one wrong connection (crossed lines). They have to do all this while preserving the floor plan generated by the previous EDA tool.

Granted, place and route tools have more than two layers to work with, but the task is still not easy. Typical chips will have from four to seven “metal layers” used for routing wires. Adding more layers would obviously ease the routing congestion, but it also adds cost. Each additional metal layer adds about a day to the processing time for a chip, slowing down the production line. Each layer also adds hundreds of dollars to the cost of each silicon wafer, which must be divided among the chips that are on it. Throwing money (and time) at the problem will solve it, but not in an economically pleasing manner.

What typically happens after the first bout of placing and routing is that the EDA tool figuratively throws up its hands and says it cannot complete the task. There might be too many wire connections and not enough metal layers on which to route them. Or different portions of the chip might be too far apart to connect them efficiently, a sign that the floor-planning software has not done a good job. Furthermore, the engineering team might impose some constraints on the place and route program, prohibiting it from routing any wire that would exceed a certain length, for example. In the world of chip design, if the chip successfully passes place and route the first time, you weren’t trying hard enough.
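The classic textbook approach to detailed routing is Lee’s maze-routing algorithm, a breadth-first search across a grid of routing tracks. The Python sketch below is a simplified version under stated assumptions: a hypothetical grid, uniform move costs, and vias allowed only between adjacent layers. It shows both outcomes described above: with one blocked layer the router gives up, and adding a second metal layer lets the wire hop over the obstruction. Production routers use far more sophisticated methods.

```python
from collections import deque

def lee_route(layers, src, dst):
    """Breadth-first (Lee-style) maze routing on a stack of routing layers.
    Cells marked '#' are blocked by wires already routed. Moves go to the four
    in-plane neighbors or through a via to the layer directly above or below.
    Returns the shortest path as a list of (layer, row, col), or None."""
    n_layers, rows, cols = len(layers), len(layers[0]), len(layers[0][0])
    prev = {src: None}
    queue = deque([src])
    while queue:
        cell = queue.popleft()
        if cell == dst:
            path = []
            while cell is not None:  # walk the breadcrumbs back to the source
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        z, r, c = cell
        for dz, dr, dc in ((0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1), (1, 0, 0), (-1, 0, 0)):
            nz, nr, nc = z + dz, r + dr, c + dc
            nxt = (nz, nr, nc)
            if (0 <= nz < n_layers and 0 <= nr < rows and 0 <= nc < cols
                    and layers[nz][nr][nc] != "#" and nxt not in prev):
                prev[nxt] = cell
                queue.append(nxt)
    return None  # no path: the router "throws up its hands"

# Hypothetical 3 x 7 routing grid. On metal 1 an existing wire ('#') walls off column 3.
METAL1 = ["...#...",
          "...#...",
          "...#..."]
METAL2 = ["......."] * 3  # an empty second metal layer

src, dst = (0, 1, 0), (0, 1, 6)  # (layer, row, column), both on metal 1
print(lee_route([METAL1], src, dst))          # one layer: no route exists
print(lee_route([METAL1, METAL2], src, dst))  # two layers: hop over the wall
```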

The next step depends on the attitude of the engineering team, the company’s goals, and the time they feel they have to finish the job. They might resubmit the chip design to the floor-planning tool and hope (or specify) that it gives them different results. They might decide to loosen some of the restrictions they placed on the place and route program. They might decide to spend the extra money on an additional metal layer to ease overcrowding. Or they might go all the way back to the beginning and change some of their original design, eliminating features or connecting them in different ways. Any of these choices means repeating some or all of the steps leading up to place and route, and it usually means many sleepless nights bent over a flickering computer screen, waiting for an encouraging result.

Verifying the Design Works

Assuming the chip eventually makes it through place and route in one piece, it would normally be ready to send to the foundry for manufacturing. However, because the cost of tooling up a foundry to make a new chip is so high (in the neighborhood of $500,000), it’s vitally important that everyone involved in its design convince themselves that there are no remaining bugs. There are few things more expensive, or more damaging to one’s career, than a brand new chip that doesn’t work, so verifying the design is the last, nail-biting step on the road to silicon.

The enormous costs of chip manufacturing have spawned a subindustry of companies providing tools and tests to verify complex chips without actually building them. The business opportunity for these companies lies in charging only slightly less than the cost of a bad chip.

All these verification tools work by simulating the chip before it’s built. As with many things, the quality of the simulation depends on how much you’re willing to spend. There are roughly three levels of simulation and some engineering teams make use of all three. Others make do with just the simplest verification, not because they want to but because they can’t afford anything more complete.

C Modeling

The first and easiest type of simulation is called C modeling. As you might guess, this consists of writing a computer program in C that attempts to duplicate the features and functions of the chip’s hardware design. The holes in this strategy are fairly obvious: If the program isn’t really an accurate reflection of the chip design, then it won’t accurately reflect any problems, either. This strategy also requires essentially two parallel projects: the actual chip design, and the separate task of writing a program that’s as close to the chip as the programmer can make it. The major upside of this approach, and the reason so many engineering teams use it, is its speed. It’s quick to compile a C program (a few minutes at most) and it’s quick to run one. Within an hour or so, the engineers could have a good idea of whatever shortcomings their chip might have. Many chip-design teams create a new C model every day and run it overnight; the morning’s results determine the hardware team’s task for the rest of the day.
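As an illustration of the style, here is a behavioral model of a hypothetical chip: a 16-bit accumulator that adds one 8-bit sample per clock and saturates instead of wrapping around. It is written in Python rather than C; what matters is that the model is an ordinary program describing behavior, not gates or timing. The `overnight_run` routine is a hypothetical stand-in for the kind of nightly run described above.

```python
import random

class AccumulatorModel:
    """Behavioral model of a hypothetical chip: a 16-bit unsigned accumulator
    that adds one 8-bit sample per clock and saturates instead of wrapping."""
    MAX = 0xFFFF

    def __init__(self):
        self.reset()

    def reset(self):
        self.acc = 0
        self.saturated = False

    def clock(self, sample: int) -> int:
        if not 0 <= sample <= 0xFF:
            raise ValueError("the input bus is only 8 bits wide")
        total = self.acc + sample
        if total > self.MAX:
            total = self.MAX
            self.saturated = True
        self.acc = total
        return self.acc

def overnight_run(bursts: int, burst_length: int, seed: int = 1) -> float:
    """Feed many bursts of random samples through the model and report the
    fraction of bursts that saturated the accumulator. A high fraction would
    tell the hardware team the accumulator is too narrow for that burst size."""
    rng = random.Random(seed)
    chip = AccumulatorModel()
    saturated = 0
    for _ in range(bursts):
        chip.reset()
        for _ in range(burst_length):
            chip.clock(rng.randrange(256))
        saturated += chip.saturated
    return saturated / bursts

for length in (100, 300, 600):
    print(length, overnight_run(200, length))
```

Bursts of 100 samples can never saturate (100 x 255 is well under 65,535), while bursts of 600 random samples almost always do, the kind of finding that would send the hardware team back to widen the accumulator the next morning.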

Tech Talk

There aren’t really any commercial C verification tools. That would defeat the purpose. C verification models became popular because they don’t require special tools or skills; they’re simply standard computer programs that happen to model the behavior of a chip under development. They’re written, compiled, and run on entirely normal PCs or workstations. There’s nothing specific about the C language that makes it suitable for this technique, by the way. It’s simply the most commonly used programming language among engineers.

Going back to HDLs for just a moment, easy verification is one of the strengths of the new C-into-hardware design languages such as SystemC and Handel-C. Because the hardware design is created using C (or a derivative of it) in the first place, it’s trivial to use that same C program to verify its operation. This single-source approach also eliminates (or at least, reduces) the problems of matching the C verification model to the actual hardware design.

Hardware Simulation

A significant step up from software simulation of the chip is hardware simulation. Despite the name, hardware simulation doesn’t usually require any special hardware. Instead, a computer picks through the original hardware description of the chip (usually written in VHDL or Verilog) and attempts to simulate its behavior. This process is painfully slow but quite accurate. Because it uses the very HDL description that will be used to create the chip, there’s no quibbling over the fidelity of the model.
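One common way a simulator “picks through” a gate-level description is event-driven simulation: a gate is re-evaluated only when one of its inputs changes, and its new output is scheduled after the gate’s delay. The Python sketch below applies the idea to a hypothetical four-NAND multiplexer with a uniform, made-up gate delay, then checks the settled output against the intended behavior for every input combination. Commercial HDL simulators are vastly more elaborate.

```python
import heapq
from itertools import count, product

# Hypothetical gate-level netlist: a 2-to-1 multiplexer made of NAND gates.
# Each entry: output net -> (input net, input net). All gates share one delay.
NETLIST = {
    "nsel": ("sel", "sel"),  # NAND of a signal with itself acts as an inverter
    "n1": ("a", "nsel"),
    "n2": ("b", "sel"),
    "out": ("n1", "n2"),
}
GATE_DELAY = 1  # arbitrary time units

def nand(x: int, y: int) -> int:
    return 1 - (x & y)

def simulate(netlist, inputs):
    """Event-driven logic simulation: a gate is re-evaluated only when one of
    its inputs changes, and its new output is scheduled GATE_DELAY later.
    Returns the settled value of every net and the time of the last event."""
    fanout = {}
    for gate, ins in netlist.items():
        for net in set(ins):
            fanout.setdefault(net, []).append(gate)
    order = count()  # tie-breaker so same-time events run in scheduling order
    queue = [(0, next(order), net, val) for net, val in inputs.items()]
    heapq.heapify(queue)
    values, now = {}, 0
    while queue:
        now, _, net, val = heapq.heappop(queue)
        if values.get(net) == val:
            continue  # nothing changed, so nothing downstream needs work
        values[net] = val
        for gate in fanout.get(net, []):
            x, y = netlist[gate]
            new = nand(values.get(x, 0), values.get(y, 0))
            heapq.heappush(queue, (now + GATE_DELAY, next(order), gate, new))
    return values, now

# Compare the gate-level simulation with the intended behavior for all inputs.
for a, b, sel in product((0, 1), repeat=3):
    values, settle_time = simulate(NETLIST, {"a": a, "b": b, "sel": sel})
    assert values["out"] == (b if sel else a)
```

The skip on unchanged values is what keeps event-driven simulation tractable: most gates in a large chip are idle at any instant, so only the activity actually rippling through the netlist costs computer time.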

Unfortunately, hardware simulation is so slow that large chips are often simulated in chunks instead of all at once, and this lets some errors slip through. If there are problems when Part A of the chip communicates with Part B, and these two chunks are simulated separately, the hardware simulator won’t find them. The alternatives are to let the simulation run for many days or to buy a faster computer. Regrettably, the larger the chip, the greater the need for accurate simulation, but the longer that simulation will take.

Hardware Emulation Boxes

The ne plus ultra of chip verification is an emulator box. This is a relatively large box packed full of reprogrammable logic chips, row after row. (For a more complete description of what these chips are, see Chapter 8, “Essential Guide to Custom and Configurable Chips.”) To emulate a new chip under development, you first download the complete netlist of the chip into the emulator box. The box then acts like a (much) larger and (much) slower version of the chip. The advantages of this system are many. First, the emulator is real hardware, not a simulation, so it behaves more or less like the new chip really will. The major exception is speed: Emulator boxes run at less than 1 percent of the speed of a real chip, but that’s still much faster than a hardware simulator.

Second, emulator boxes generally emulate an entire chip, not just pieces of it. Naturally, emulating larger chips requires a larger box, and commensurately more expense, but at least it’s possible. Because the emulator box is real hardware, you can connect it to other devices in the “real” world outside the box. For example, you can connect an emulated video chip to a real video camera to see how it works. Finally, the emulator can be used and reused at different stages in the chip’s development, or even for different chips. In fact, the latter case is often true. Emulator boxes are so expensive that they generally are treated as company resources, shared among departments as the need arises.

Using Outside IP

One of the quickest ways to design a new chip is to not design it at all. Most big new chips include a fair amount of reused, borrowed, licensed, or recycled circuitry; not physically recycled silicon, of course, but recycled design ideas. Like a musician composing new variations on an old theme, it’s often better to borrow and adapt than to create from scratch.

In engineering circles this is called design reuse or intellectual property (IP) reuse. IP is a high-sounding name for intangible assets that can be sold, borrowed, or traded. Lawyers use IP to refer to trademarks, logos, musical compositions, software, or nearly anything else that’s valuable but insubstantial. Engineers use IP to refer to circuit designs.

Since the mid-1990s there has been a growing market for third-party IP: circuit designs created by an independent engineering company solely for the purpose of selling them to other chip designers. As chip designs have grown ever more complex, the demand for this IP has grown and encouraged the supply. There are even a few profitable “chip” companies that have neither their own factories nor their own chips. They license partial chip designs to others and collect royalties.

To makers of large chips, it’s an attractive alternative to buy parts of the design from outside IP suppliers. There are dozens of large IP vendors and hundreds of smaller ones. Some IP houses specialize in large (and valuable) types of circuit designs, such as entire 32-bit microprocessors, for which customers gladly pay more than $1 million in licensing fees, plus years of royalties down the road. Many smaller IP firms license their wares for $10,000 or less, sometimes without any royalties at all. There are also sources of free IP on the Internet, just as there are for free software.

Although outside IP is a great boon to productive chip design, it isn’t all smooth sailing. First, customers often balk at the prices. Designing a new chip is already an expensive undertaking without paying large additional sums to an outside firm. Then there are technical problems. Outside IP is designed to appeal to the largest possible audience, so it’s naturally somewhat generic. Potential customers might be looking for something more specific that outside IP doesn’t offer. It’s also possible that the IP won’t be delivered in a form that the customer can use. If the bulk of the chip is being designed using Verilog, but the IP is delivered in VHDL, it’s probably not usable. An IP firm that delivers its products in only one of the two major HDLs therefore has about a 50 percent chance of picking the wrong one for any given customer.

There’s also the usual finger pointing when something goes wrong. If the chip design fails its final verification, does the fault lie with the licensed IP or with the original work surrounding it? Neither side will be eager to admit liability, but the IP vendor’s entire business rides on delivering reliable circuitry. Usually these problems have their root in ambiguous or insufficient specifications or a misunderstanding between engineers on either side.

Hard and Soft IP Cores

Among IP vendors and customers, one of the first questions asked is, “Does this IP come in hard or soft form?” What the customer is asking is whether the circuit design will be delivered in a high-level HDL such as Verilog or VHDL, or as an already-synthesized “hard” design ready for manufacturing. There are pros and cons each way, and debate continually simmers over which way is better.

IP that’s delivered in “soft” form (i.e., as an HDL description) is generally easier for the customer (the chip-design team) to work with, but it carries the same drawbacks as all synthesized hardware: larger size, slower speed, and higher cost when it’s manufactured. That might be acceptable, but the customer will have to decide. On the plus side, the HDL is probably easier to integrate into the rest of the chip design, especially if the rest of the chip is also being designed with the same HDL.
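Because soft IP is just text, it is easy to tailor before synthesis. As a minimal sketch, the hypothetical Python generator below emits a Verilog description of a register whose width the customer chooses; the function name `generate_register`, the module name `soft_reg`, and the port names are all invented for this example.

```python
def generate_register(width: int, name: str = "soft_reg") -> str:
    """Return Verilog source for a `width`-bit register with synchronous reset.

    The generator, module name, and port names are hypothetical examples.
    """
    if width < 1:
        raise ValueError("width must be at least 1")
    msb = width - 1
    return f"""module {name} (
    input  wire             clk,
    input  wire             rst,
    input  wire [{msb}:0]   d,
    output reg  [{msb}:0]   q
);
    // Load d on every rising clock edge unless reset is asserted.
    always @(posedge clk) begin
        if (rst)
            q <= {width}'d0;
        else
            q <= d;
    end
endmodule
"""


print(generate_register(8))
```

Calling `generate_register(8)` yields a module with 8-bit (`[7:0]`) `d` and `q` ports. The customer’s synthesis tool, not the IP vendor, then decides how that description becomes gates, which is why the vendor can’t promise the final size or speed.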

IP vendors sometimes don’t like to provide their wares in HDL form for two reasons. First, the usual drawbacks of synthesized hardware might make their product look bad. The IP vendor can’t guarantee, for example, that its circuit will run at 500 MHz because it can’t control the synthesis process. Consequently, the vendor winds up being very conservative and cagey about promising hard performance numbers. Unlike chip companies, IP companies rarely advertise MHz numbers (or power usage) in their literature.

Another aspect of soft IP that gives the vendors headaches is the possibility of piracy and IP theft. Soft IP is like sheet music, in that it’s easy to duplicate and to pass copies around to others. As with sheet music, customers are supposed to pay royalties to the copyright holder in that event, but that’s a tough law to enforce. Many IP vendors simply do not offer synthesizable IP cores for exactly that reason.

The alternative to soft IP is, of course, hard IP. A “hard” IP product (often called a core) isn’t really hard. It’s just the same as a soft core after it’s been synthesized, placed, and routed. It’s film, or the electronic equivalent of film. A hard core is nearly ready for manufacturing: All it needs is to be dropped into the rest of the chip design at the last minute.

There are good and bad aspects to using hard cores. They’re generally faster and smaller than synthesized soft cores, and they use less power, because they’ve been extensively hand-tuned. For very high-performance or very low-power chips, hard cores are the only way to go. On the other hand, hard cores are much more difficult to incorporate into the rest of the chip, especially if that chip is being synthesized. Essentially, the chip’s designers have to leave a rectangular hole in their design that exactly fits the hard core. Then, during the late stages of preparation before manufacturing, the hard core is inserted.
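The “rectangular hole” can be pictured as a simple geometric check. This is a minimal sketch, assuming axis-aligned rectangles and ignoring rotation, pin alignment, and the routing blockages that real floorplanning tools must handle; the `Rect` class and the `hole_fits_core` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in floorplan units (for example, micrometers)."""
    x: float
    y: float
    width: float
    height: float

    def overlaps(self, other: Rect) -> bool:
        # Rectangles that merely share an edge do not count as overlapping.
        return (self.x < other.x + other.width and other.x < self.x + self.width
                and self.y < other.y + other.height and other.y < self.y + self.height)


def hole_fits_core(hole: Rect, core_width: float, core_height: float,
                   placed_blocks: list[Rect]) -> bool:
    """True if the reserved hole matches the hard core's footprint exactly
    and no other placed block intrudes into it (rotation is not considered)."""
    exact_size = (hole.width, hole.height) == (core_width, core_height)
    unobstructed = not any(hole.overlaps(block) for block in placed_blocks)
    return exact_size and unobstructed


# Hypothetical floorplan: a 1500 x 1200 hole with a neighbor touching its left edge.
hole = Rect(100.0, 200.0, 1500.0, 1200.0)
neighbors = [Rect(0.0, 0.0, 100.0, 1400.0)]
print(hole_fits_core(hole, 1500.0, 1200.0, neighbors))  # True
```

The neighbor shares the hole’s left edge without intruding, so the check passes; a core of any other size, or a block placed inside the hole, would make it fail.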

Hard cores prevent the customer’s engineers from altering or modifying the core in any way, which is partially the point. IP vendors like delivering hard IP cores because they know their customers won’t have access to the “recipe” for the IP. Hard cores also perform better than soft cores, as previously mentioned.

Early IP vendors in the mid-1990s almost always delivered hard IP cores. In recent years, however, the trend has moved toward soft IP. As more and more engineering teams use HDLs and hardware synthesis for their own chips, they demand the same from their outside IP vendors. IP vendors might cringe at the performance and security issues that soft IP raises, but the alternative is to lose business.

Various industry groups have looked at ways to protect soft IP by “watermarking” the circuit design or through public-key encryption of the data files, but so far these efforts have produced little. There might not be a need; the industry seems to be self-policing. The larger the customer, the more likely they are to regulate themselves. An infringement scandal in a public corporation would be a huge embarrassment, so they protect their suppliers’ products very carefully. Smaller startups are likely to be a bit less conscientious, but they also represent a smaller economic threat to the IP vendor. In the end, an open and thriving IP market shows every sign of taking care of itself.

Physical Libraries

Another form of IP, although a much less glamorous one (if that’s possible), is the physical library. These fit in toward the end of a chip’s design, as it’s being synthesized, placed, and routed. The physical libraries carry the arcane details of the production line that will be used to fabricate the part. As such, these libraries change from one semiconductor manufacturer to another, and often from one building or site to another within the same company. In the later stages of a chip’s design, the EDA tools need to know exactly how thick, how wide, and how high each silicon transistor will be, and these characteristics vary slightly from maker to maker.
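To see why the library matters, consider a sketch in which the same logical cells have different physical dimensions at two hypothetical fabs. The fab names, cell names, and dimensions are invented placeholders, not real process data; real libraries (in formats such as LEF and Liberty) carry far more detail, including timing and power.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CellLayout:
    """Physical footprint of one standard cell in a given process."""
    width_um: float
    height_um: float


# Hypothetical libraries: the same logical cells, different physical data per fab.
LIBRARIES = {
    "fab_a": {"NAND2": CellLayout(1.2, 3.6), "INV": CellLayout(0.8, 3.6)},
    "fab_b": {"NAND2": CellLayout(1.4, 4.0), "INV": CellLayout(0.9, 4.0)},
}


def block_area_um2(library: str, cell_counts: dict[str, int]) -> float:
    """Total cell area for a netlist summarized as {cell name: instance count}."""
    cells = LIBRARIES[library]
    return sum(cells[name].width_um * cells[name].height_um * count
               for name, count in cell_counts.items())


netlist = {"NAND2": 1000, "INV": 500}  # hypothetical netlist summary
for fab in LIBRARIES:
    print(f"{fab}: {block_area_um2(fab, netlist):,.0f} um^2")
```

With the same hypothetical netlist of 1,000 NAND2 and 500 INV cells, the area comes to 5,760 square micrometers with fab_a’s library and 7,400 with fab_b’s, which is why the tools must know exactly which production line the chip is headed for.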

There used to be a thriving business in supplying these libraries (which are really just big data files) to chip designers, but no more. The foundries and other semiconductor manufacturers wised up to this business opportunity and began supplying libraries of their own. By supplying chip designers with their libraries for free, manufacturers provide a small incentive for a designer to choose that manufacturer. Soon it became de rigueur for any semiconductor manufacturer to supply a free library or risk being excluded when it came time to bid on manufacturing.

Getting to Tape Out and Film

After the design, synthesis, testing, simulation, and verification are all done, the final steps are almost anticlimactic. Once the engineering team is satisfied that the chip design will work, it’s simply a matter of pressing a button to tape out the new chip. Far from the old days when each layer of silicon and metal was literally taped out by hand, tape out now consists of a few moments for a computer to produce a file and store it on a CD-ROM. Often engineers will print out these files and hang up the colorful poster-sized prints of the chip’s design, but this is done more out of tradition and a sense of camaraderie than for any sound technical reasons.

Film isn’t really film anymore. The file produced by the EDA software is called a GDS-II database, and it takes the place of actual film. Transporting a film box to the foundry is passé; uploading the GDS-II database is as simple as sending an e-mail.
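A GDS-II file is a binary stream of records, each starting with a 2-byte big-endian length (which includes the 4-byte header), a 1-byte record type, and a 1-byte data type. The Python sketch below builds a tiny, deliberately incomplete stream in memory and walks its records. Only four record-type codes are included, and the library name `MYCHIP` is hypothetical; a real GDS-II file also contains a UNITS record and the chip’s structures and geometry, which this sketch omits.

```python
import struct

# A few record-type codes from the GDSII stream format (not a complete table).
RECORD_NAMES = {0x00: "HEADER", 0x01: "BGNLIB", 0x02: "LIBNAME", 0x04: "ENDLIB"}
NO_DATA, INT16, ASCII = 0x00, 0x02, 0x06  # data-type codes used below


def make_record(rtype: int, dtype: int, payload: bytes = b"") -> bytes:
    """Encode one record: 2-byte length (header included), type, data type, payload."""
    if len(payload) % 2:
        payload += b"\x00"  # records always have an even length
    return struct.pack(">HBB", 4 + len(payload), rtype, dtype) + payload


def iter_records(data: bytes):
    """Yield (record name, data type, payload) for each record in a stream."""
    offset = 0
    while offset < len(data):
        length, rtype, dtype = struct.unpack_from(">HBB", data, offset)
        if length < 4 or offset + length > len(data):
            raise ValueError(f"malformed record at byte {offset}")
        name = RECORD_NAMES.get(rtype, f"0x{rtype:02X}")
        yield name, dtype, data[offset + 4:offset + length]
        offset += length


def decode(dtype: int, payload: bytes):
    """Turn a payload into Python values for the data types used here."""
    if dtype == INT16:
        return list(struct.unpack(f">{len(payload) // 2}h", payload))
    if dtype == ASCII:
        return payload.rstrip(b"\x00").decode("ascii")
    return payload


# A deliberately truncated stream: HEADER (version 600), BGNLIB (12 timestamp
# fields, zeroed here), LIBNAME, and ENDLIB.
stream = (make_record(0x00, INT16, struct.pack(">h", 600))
          + make_record(0x01, INT16, struct.pack(">12h", *[0] * 12))
          + make_record(0x02, ASCII, b"MYCHIP")
          + make_record(0x04, NO_DATA))

for name, dtype, payload in iter_records(stream):
    print(name, decode(dtype, payload))
```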

Current Problems and Future Trends

Imagine you’re a little kid again, playing with Tinkertoys (or Lego bricks, Lincoln Logs, or Meccano pieces; pick your favorite). You have an eccentric uncle who believes your birthday comes once every 18 months. On each birthday, he gives you a gift of another complete set equal to what you already have. Your store of building blocks therefore doubles every 18 months. This is great fun; you can build bigger, better, and taller things all the time. Before long, you’re making toy skyscrapers, and then entire cities.

Surprisingly soon, you run out of ideas for things to build. Or, if you’re very creative, you run out of time to build them before the next installment of parts is dumped in your lap. You simply can’t snap pieces together fast enough to finish a really big project before it’s time to start the next one. You have a few choices. You can ignore the new gift and steadfastly finish your current project, thus falling behind your more ambitious friends. Or you can start combining small pieces together into bigger pieces and use them as the building blocks.

So it is with modern chip design. The frequently heard (and often misquoted) Moore’s Law provides us with a rich 58 percent compound annual interest rate on our transistor budgets. That’s the same as doubling every 18 months. Given that it frequently takes more than 18 months to design a modern chip, chip designers are presented with a daunting challenge: Take the biggest chip the world has ever seen and design something that’s twice that big. Do it using today’s tools, skills, and personnel, knowing that nobody has ever done it before. When you’re finished, rest assured your boss will ask you to do it again. Sisyphus had it easy.
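The equivalence between a 58 percent annual rate and doubling every 18 months is easy to check with a few lines of Python. Doubling every 18 months compounds to 2^(12/18), or about 1.587 times per year; running the formula the other way shows how long 58 percent per year takes to double. The 24-month project length in the last line is a hypothetical example.

```python
import math


def annual_growth_from_doubling(months: float) -> float:
    """Compound annual growth rate implied by doubling every `months` months."""
    return 2 ** (12 / months) - 1


def doubling_months(annual_rate: float) -> float:
    """Months needed to double at compound annual growth `annual_rate` (0.58 means 58%)."""
    return 12 * math.log(2) / math.log(1 + annual_rate)


def budget_multiplier(project_months: float, doubling_period: float = 18) -> float:
    """How much the transistor budget grows while a project of `project_months` runs."""
    return 2 ** (project_months / doubling_period)


print(f"Doubling every 18 months = {annual_growth_from_doubling(18):.1%} per year")
print(f"58% per year doubles every {doubling_months(0.58):.1f} months")
print(f"A 24-month project ends with {budget_multiplier(24):.2f}x its starting budget")
```

The exact figure is about 58.7 percent per year, which the text gives as 58, and 58 percent per year doubles in about 18.2 months. A hypothetical 24-month project finishes with roughly 2.5 times the transistor budget it started with.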

The modern EDA process is running out of steam. Humans are not becoming noticeably more productive, but the tasks they are given (at least, those in the semiconductor engineering professions) get more difficult at a geometric rate. Either the tools have to change or the nature of the task has to be redefined. Otherwise, the Moore’s Law treadmill we’ve come to enjoy for the past 30 years will start slowing down dramatically.

First, let’s cover the easy stuff. Faster computers will help run the current EDA tools faster. Faster synthesis, simulation, modeling, and verification will all speed up chip design by a little bit. However, even if computers were to get 58 percent faster every year (they don’t), that would only keep pace with the increasing complexity of the chips they’re asked to design. At best, we’d be marking time. In reality, chip designers are falling behind.

A change to testing and verification would provide a small boost. The time devoted to this unloved portion of chip design has grown faster than the time spent on other tasks; testing now consumes more than half of some chips’ project schedules. To cut this down to size, some companies impose strict rules requiring that circuitry be designed in ways that simplify testing. Better still, these firms encourage their engineers to reuse as much as possible from previous designs, on the assumption that reused circuitry has already been validated. Unfortunately, reused circuitry has only been validated in a different chip; in new surroundings the same circuit might behave differently.

IP is another angle on the problem. Instead of helping designers design faster, it helps them design less. Reuse, renew, and recycle is the battle cry of many engineering managers. Using outside IP doesn’t so much speed up design as enable more ambitious designs. Engineers tend to design larger and more complex chips than they otherwise might if they know they don’t have to design every single piece themselves. Although that makes the resulting chips more powerful and feature-packed, it doesn’t shorten the design cycle at all. It just packs more features into the same amount of time.

The most attention is devoted to new design tools. How can we make each individual engineer more productive? The great hope seems to be to make the chip-design tools more abstract or high level, submerging detail and focusing on the overall architecture of a large and complex chip. Many ideas are being tried, from free-form drawing tools that allow an engineer to sketch a rough outline of the design (“from napkin to chip” is one firm’s advertising slogan) to procedural languages that an engineer can use to “write” a new chip.

There are two problems with these high-level approaches. For one, giving up detail-level control over a chip’s design will necessarily produce sloppier designs that are slower and bulkier, wasting silicon area and power. These are not trends anyone looks forward to. On the other hand, the same Moore’s Law that bedevils us also hides our sins: Silicon gets faster all the time, and that increased speed can hide the performance lost through the new tools. It might be a good bargain.
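Whether the bargain pays off depends on how fast silicon improves. As a rough sketch, the function below estimates how many months of process improvement it takes to win back the performance lost to a higher-level tool. Both inputs are assumptions the reader must supply, since the text gives no figure for either; the 20 percent loss and the 25 percent annual speed gain in the example are purely hypothetical.

```python
import math


def months_to_recover(performance_retained: float, annual_speed_gain: float) -> float:
    """Months of silicon speed improvement needed to offset a design that runs
    at `performance_retained` (0.8 means 80%) of a hand-tuned equivalent,
    assuming silicon speed compounds at `annual_speed_gain` (0.25 means 25%) per year."""
    if not 0 < performance_retained <= 1:
        raise ValueError("performance_retained must be in (0, 1]")
    if annual_speed_gain <= 0:
        raise ValueError("annual_speed_gain must be positive")
    needed_speedup = 1 / performance_retained
    return 12 * math.log(needed_speedup) / math.log(1 + annual_speed_gain)


# Hypothetical inputs: the high-level tool costs 20% of performance,
# and silicon gets 25% faster each year.
print(f"{months_to_recover(0.8, 0.25):.1f} months")
```

With those hypothetical inputs, a design that runs at 80 percent of hand-tuned speed needs a 1.25x speedup, which a 25 percent annual gain delivers in about 12 months.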

The second problem, and a thornier one to solve, is simple human inertia. People are loath to give up their familiar tools and abandon the professional skills they’ve developed. Ironically, in an industry that thrives on the breakneck pace of innovation, semiconductor engineers drag their feet when it comes to changing and adapting their own profession. Hardware engineers criticize and malign the new software-into-hardware languages partly because using those languages would require skills the hardware engineers don’t have and would marginalize the skills they do have. Nobody likes to anticipate the death of his or her own profession. Besides, solving a hardware problem by discarding all the hardware engineers seems a bit counterintuitive.

It’s not that today’s chip designers aren’t trying to help solve the problem. After all, it is they who stand to benefit from whatever breakthrough comes. It’s just that nobody knows quite which way to turn. On one hand, gradual evolutions of the current and familiar tools, such as Superlog, can leverage an engineer’s skills and experience, but they’re only a stopgap measure, buying a few years’ grace at most. On the other hand, radically new tools like chip-level abstraction diagrams aren’t familiar to anyone and take months or years to learn to use effectively. This would mean discarding years of hard-won experience doing it “the old way.”

Each engineer must make his or her own decision, or have it forced on him or her by a supervisor or new employer. Left to themselves, most people will choose the path of gradual evolution. It’s less threatening than a complete overhaul and still provides the excitement of learning something (a little bit) new.

With no clear winner and no clear direction to follow, the future of chip design will be determined largely by herd instinct. Engineers will choose what they feel most comfortable with, or what their friends and colleagues seem to be using. Most of all, they want to avoid being left out by choosing a path that leaves them stranded and isolated from the rest of the industry. Macintosh users seem to like this kind of iconoclasm; chip-design engineers dread it.