Chapter 5. Microprocessors

Microprocessors are the most complicated devices ever created by human beings. But don’t despair. A microprocessor is complicated the same way a castle you might build from Legos is complicated. You can easily understand each of the individual parts of a microprocessor and how they fit together, just as you might build a fort or castle from a legion of blocks of assorted shapes. The metaphor is more apt than you might think. Engineers, in fact, design microprocessors as a set of functional blocks: not physical blocks like Legos, but blocks of electronic circuits that perform specific functions. And they don’t fit them together on the floor of a giant playroom. Most of their work is mental, a lot of it performed on computers. Yes, you need a computer to design a computer these days. Using a computer is faster; it can give engineers insight by showing them ideas they could previously only imagine, and it doesn’t leave a mess of blocks on the floor that Mom makes them clean up.

In creating a microprocessor, a team of engineers may work on each one of the blocks that makes up the final chip, and the work of one team may be almost entirely unfathomable by another team. That’s okay, because all that teams need to know about the parts besides their own is what those other parts do and how they fit together, not how they are made. All the complex details—the logic gates from which each block is built—are irrelevant to anyone not designing that block. It’s like those Legos. You might know a Lego block is made from molecules of plastic, but you don’t need to know about the carbon backbones and the side-chains that make up each molecule to put the blocks together.

Legos come in different shapes. The functional blocks of a microprocessor have different functions. We’ll start our look at microprocessors by defining what those functions are. Then we’ll leave the playroom behind and look at how you make a microprocessor operate by examining the instructions that it uses. This detour is more important than you might think. Much of the design work in creating a series of microprocessors goes into deciding exactly what instructions it must carry out, that is, which instructions are most useful for solving problems and for solving them most quickly. From there, we’ll look at how engineers have used their imaginations to design ever-faster microprocessors and at the tricks they use to coax more speed from each new generation of chip.

Microprocessor designers can’t just play with theory in creating microprocessors. They have to deal with real-world issues. Somehow, machines must make the chips. Once they are made, they have to operate—and keep operating. Hard reality puts some tight constraints on microprocessor design. If engineers aren’t careful, microprocessors can become miniature incinerators, burning themselves up. We’ll take a look at some of these real-world issues that guide microprocessor design—including electricity, heat, and packaging, all of which work together (and at times, against the design engineer).

Next, we’ll look at real microprocessors, the chips you can actually buy. We’ll start with a brief history lesson to put today’s commercial offerings in perspective. And, finally, we’ll look at the chips in today’s (and tomorrow’s) computers to see which is meant for what purpose—and which is best for your own computer.

Background

Every modern microprocessor starts with the basics: clocked-logic digital circuitry. The chip has millions of separate gates combined into three basic function blocks: the input/output unit (or I/O unit), the control unit, and the arithmetic/logic unit (ALU). The last two are sometimes jointly called the central processing unit (CPU), although the same term often is used as a synonym for the entire microprocessor. Some chipmakers further subdivide these units, give them other names, or include more than one of each in a particular microprocessor. In any case, the functions of these three units are an inherent part of any chip. The differences are mostly a matter of nomenclature, because you can understand the entire operation of any microprocessor as a product of these three functions.

All three parts of the microprocessor interact. In all but the simplest microprocessor designs, the I/O unit is under the control of the control unit, and the operation of the control unit may be determined by the results of calculations of the arithmetic/logic unit. The combination of the three parts determines the power and performance of the microprocessor.

Each part of the microprocessor also has its own effect on the processing speed of the system. The control unit operates the microprocessor’s internal clock, which determines the rate at which the chip operates. The I/O unit determines the bus width of the microprocessor, which influences how quickly data and instructions can be moved in and out of the microprocessor. And the registers in the arithmetic/logic unit determine how much data the microprocessor can operate on at one time.

Input/Output Unit

The input/output unit links the microprocessor to the rest of the circuitry of the computer, passing along program instructions and data to the registers of the control unit and arithmetic/logic unit. The I/O unit matches the signal levels and timing of the microprocessor’s internal solid-state circuitry to the requirements of the other components inside the computer. The internal circuits of a microprocessor, for example, are designed to be stingy with electricity so that they can operate faster and cooler. These delicate internal circuits cannot handle the higher currents needed to link to external components. Consequently, each signal leaving the microprocessor goes through a signal buffer in the I/O unit that boosts its current capacity.

The input/output unit can be as simple as a few buffers, or it may involve many complex functions. In the latest Intel microprocessors used in some of the most powerful computers, the I/O unit includes cache memory and clock-doubling or -tripling logic to match the high operating speed of the microprocessor to slower external memory.

The microprocessors used in computers have two kinds of external connections to their input/output units: those connections that indicate the address of memory locations to or from which the microprocessor will send or receive data or instructions, and those connections that convey the meaning of the data or instructions. The former is called the address bus of the microprocessor; the latter, the data bus.

The number of bits in the data bus of a microprocessor directly influences how quickly it can move information. All else being equal, the more bits a chip can move at a time, the faster it transfers data. The first microprocessors had data buses only four bits wide. Pentium chips use a 64-bit data bus, as do the related Athlon, Celeron, and Duron chips. Itanium and Opteron chips have data buses at least as wide.

The number of bits available on the address bus influences how much memory a microprocessor can address. A microprocessor with 16 address lines, for example, can directly work with 2^16 addresses; that’s 65,536 (or 64K) different memory locations. The different microprocessors used in various computers span a range of address bus widths from 32 to 64 or more bits.
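The relationship between address lines and memory reach is simple enough to check for yourself. The following is a minimal sketch in Python; the function name `addressable_locations` is ours, chosen for illustration, and is not part of any real tool.

```python
def addressable_locations(address_lines: int) -> int:
    """Return how many distinct addresses a given number of address lines can select."""
    if address_lines < 0:
        raise ValueError("address_lines must be non-negative")
    # Each additional line doubles the number of distinct bit patterns.
    return 2 ** address_lines


if __name__ == "__main__":
    for lines in (16, 20, 32):
        print(f"{lines} address lines -> {addressable_locations(lines):,} locations")
```

With 16 lines the function returns 65,536, the 64K figure quoted above. Every extra line doubles the total.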

The range of bit addresses used by a microprocessor and the physical number of address lines of the chip no longer correspond. That’s because people and microprocessors look at memory differently. Although people tend to think of memory in terms of bytes, each comprising eight bits, microprocessors now deal in larger chunks of data, corresponding to the number of bits in their data buses. For example, a Pentium chip, with its 64-bit data bus, chews into data 64 bits at a time, so it doesn’t need to look to individual bytes. It swallows them eight at a time. Chipmakers consequently omit the address lines needed to distinguish chunks of memory smaller than their data buses. This bit of frugality reduces the number of connections the chip needs to make with the computer’s circuitry, an issue that becomes important once you see (as you will later) that the modern microprocessor requires several hundred external connections, each prone to failure.
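To see how many address lines this frugality saves, you can count the bits needed to pick one byte out of a bus-wide chunk. The sketch below assumes byte addressing and a data bus that is a power-of-two number of bytes wide; the function name `external_address_lines` is hypothetical.

```python
def external_address_lines(address_bits: int, data_bus_bits: int) -> int:
    """Address lines left after dropping those that only select a byte
    inside one bus-wide chunk (assumes byte-addressed memory)."""
    if data_bus_bits < 8 or data_bus_bits % 8:
        raise ValueError("data bus width must be a whole number of bytes")
    bus_bytes = data_bus_bits // 8
    if bus_bytes & (bus_bytes - 1):
        raise ValueError("data bus must be a power-of-two number of bytes wide")
    omitted = bus_bytes.bit_length() - 1  # log2 of the bytes moved per transfer
    return address_bits - omitted


if __name__ == "__main__":
    for bus in (8, 32, 64):
        print(f"32-bit addresses, {bus}-bit bus -> "
              f"{external_address_lines(32, bus)} address lines")
```

A chip with 32-bit byte addresses needs all 32 lines on an 8-bit bus, 30 lines on a 32-bit bus, and 29 lines on a 64-bit bus.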

Control Unit

The control unit of a microprocessor is a clocked logic circuit that, as its name implies, controls the operation of the entire chip. Unlike more common integrated circuits, whose function is fixed by hardware design, the control unit is more flexible. The control unit follows the instructions contained in an external program and tells the arithmetic/logic unit what to do. The control unit receives instructions from the I/O unit, translates them into a form that can be understood by the arithmetic/logic unit, and keeps track of which step of the program is being executed.

With the increasing complexity of microprocessors, the control unit has become more sophisticated. In the basic Pentium, for example, the control unit must decide how to route signals between what amounts to two separate processing units called pipelines. In other advanced microprocessors, the function of the control unit is split among other functional blocks, such as those that specialize in evaluating and handling branches in the stream of instructions.

Arithmetic/Logic Unit

The arithmetic/logic unit handles all the decision-making operations (the mathematical computations and logic functions) performed by the microprocessor. The unit takes the instructions decoded by the control unit and either carries them out directly or executes the appropriate microcode (see the section titled “Microcode” later in this chapter) to modify the data contained in its registers. The results are passed back out of the microprocessor through the I/O unit.

The first microprocessors had but one ALU. Modern chips may have several, which commonly are classed into two types. The basic form is the integer unit, one that carries out only the simplest mathematical operations. More powerful microprocessors also include one or more floating-point units, which handle advanced math operations (such as trigonometric and transcendental functions), typically at greater precision.

Floating-Point Unit

Although functionally a floating-point unit is part of the arithmetic/logic unit, engineers often discuss it separately because the floating-point unit is designed to process only floating-point numbers and not to take care of ordinary math or logic operations.

Floating-point describes a way of expressing values, not a mathematically defined type of number such as an integer, rational, or real number. The essence of a floating-point number is that its decimal point “floats” between a predefined number of significant digits rather than being fixed in place the way dollar values always have two decimal places.

Mathematically speaking, a floating-point number has three parts: a sign, which indicates whether the number is greater or less than zero; a significand (sometimes called a mantissa), which comprises all the digits that are mathematically meaningful; and an exponent, which determines the order of magnitude of the significand (essentially the location to which the decimal point floats). Think of a floating-point number as being like those represented by scientific notation. But whereas scientists are apt to deal in base-10 (the exponents in scientific notation are powers of 10), floating-point units think of numbers digitally in base-2 (all ones and zeros in powers of two).
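You can pull these three parts out of a real number with a few lines of code. The sketch below uses Python’s standard `struct` module to split a 64-bit IEEE double (1 sign bit, 11 exponent bits, 52 stored fraction bits). We use that format only because it is the one Python exposes, not because it matches the wider registers inside a floating-point unit. The function name `decompose` is ours.

```python
import struct


def decompose(value: float):
    """Split a 64-bit IEEE double into (sign, unbiased exponent, fraction bits)."""
    # Reinterpret the eight bytes of the double as one unsigned 64-bit integer.
    bits = struct.unpack(">Q", struct.pack(">d", value))[0]
    sign = bits >> 63                        # 1 means negative
    biased_exponent = (bits >> 52) & 0x7FF   # 11-bit field, stored with a bias of 1023
    fraction = bits & ((1 << 52) - 1)        # stored part of the significand (mantissa)
    return sign, biased_exponent - 1023, fraction


if __name__ == "__main__":
    sign, exponent, fraction = decompose(-6.5)
    # For ordinary (normalized) values the significand is 1 + fraction / 2**52.
    significand = 1 + fraction / 2 ** 52
    print(sign, exponent, significand)
```

For -6.5 the parts come out as a sign of 1, an exponent of 2, and a significand of 1.625, because 6.5 is 1.625 times two squared.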

As a practical matter, the form of floating-point numbers used in computer calculations follows standards laid down by the Institute of Electrical and Electronics Engineers (IEEE). The IEEE formats take values that can be represented in binary form using 80 bits. Although 80 bits seems somewhat arbitrary in a computer world that’s based on powers of two and a steady doubling of register size from 8 to 16 to 32 to 64 bits, it’s exactly the right size to accommodate 64 bits of the significand, with 15 bits left over to hold an exponent value and an extra bit for the sign of the number held in the register. Although the IEEE standard allows for 32-bit and 64-bit floating-point values, most floating-point units are designed to accommodate the full 80-bit values. The floating-point unit (FPU) carries out all its calculations using the full 80 bits of the chip’s registers, unlike the integer unit, which can independently manipulate its registers in byte-wide pieces.

The floating-point units of Intel-architecture processors have eight of these 80-bit registers in which to perform their calculations. Instructions in your programs tell the microprocessor whether to use its ordinary integer ALU or its floating-point unit to carry out a mathematical operation. The different instructions are important because the eight 80-bit registers in Intel floating-point units also differ from integer units in the way they are addressed. Commands for integer unit registers are directly routed to the appropriate register as if sent by a switchboard. Floating-point unit registers are arranged in a stack, sort of an elevator system. Values are pushed onto the stack, and with each new number the old one goes down one level. Stack machines are generally regarded as lean and mean computers. Their design is austere and streamlined, which helps them run more quickly. The same holds true for stack-oriented floating-point units.
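The elevator behavior of a register stack is easy to model. The following is a toy sketch, not a description of how any real floating-point unit is wired: the class name `RegisterStack`, its methods, and the choice to raise an error when a ninth value is pushed are all assumptions made for illustration.

```python
class RegisterStack:
    """Toy eight-level register stack; position 0 is always the top."""

    DEPTH = 8

    def __init__(self):
        self._slots = []

    def push(self, value: float) -> None:
        """Place a value on top; every older value moves down one level."""
        if len(self._slots) == self.DEPTH:
            raise OverflowError("register stack is full")
        self._slots.insert(0, value)

    def pop(self) -> float:
        """Remove and return the top value; older values move up one level."""
        if not self._slots:
            raise IndexError("register stack is empty")
        return self._slots.pop(0)

    def peek(self, depth: int = 0) -> float:
        """Read the value `depth` levels below the top without removing it."""
        return self._slots[depth]

    def add(self) -> None:
        """Replace the top two values with their sum."""
        top = self.pop()
        below = self.pop()
        self.push(below + top)


if __name__ == "__main__":
    stack = RegisterStack()
    stack.push(1.5)
    stack.push(2.0)       # 1.5 has now moved down one level
    stack.add()
    print(stack.peek())   # the sum sits on top
```

Notice that the `add` operation names no registers at all. It always works on whatever sits at the top of the stack, which is what keeps stack-oriented instructions short.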

Until the advent of the Pentium, a floating-point unit was not a guaranteed part of a microprocessor. Some 486 and all previous chips omitted floating-point circuitry. The floating-point circuitry simply added too much to the complexity of the chip, at least for the state of fabrication technology at that time. To cut costs, chipmakers simply left the floating-point unit as an option.

When it was necessary to accelerate numeric operations, the earliest microprocessors used in computers let you add an optional chip dedicated to calculating floating-point values. These external floating-point units were termed math coprocessors.

The floating-point units of modern microprocessors have evolved beyond mere number-crunching. They have been optimized to reflect the applications for which computers most often crunch floating-point numbers—graphics and multimedia (calculating dots, shapes, colors, depth, and action on your screen display).

Instruction Sets

Instructions are the basic units for telling a microprocessor what to do. Internally, the circuitry of the microprocessor has to carry out hundreds, thousands, or even millions of logic operations to carry out one instruction. The instruction, in effect, triggers a cascade of logical operations. How this cascade is controlled marks the great divide in microprocessor and computer design.

The first electronic computers used a hard-wired design. An instruction simply activated the circuits appropriate for carrying out all the steps required. This design has its advantages. It optimizes the speed of the system because the direct hard-wire connection adds nothing to slow down the system. Simplicity means speed, and the hard-wired approach is the simplest. Moreover, the hard-wired design was the practical and obvious choice. After all, computers were so new that no one had thought up any alternative.

However, the hard-wired computer design has a significant drawback. It ties the hardware and software together into a single unit. Any change in the hardware must be reflected in the software. A modification to the computer means that programs have to be modified. A new computer design may require that programs be entirely rewritten from the ground up.

Microcode

The inspiration for breaking away from the hard-wired approach was the need for flexibility in instruction sets. Throughout most of the history of computing, determining exactly what instructions should make up a machine’s instruction set was more an art than a science. IBM’s first commercial computers, the 701 and 702, were designed more from intuition than from any study of which instructions programmers would need to use. Each machine was tailored to a specific application. The 701 ran instructions thought to serve scientific users; the 702 had instructions aimed at business and commercial applications.

When IBM tried to unite its many application-specific computers into a single, more general-purpose line, these instruction sets were combined so that one machine could satisfy all needs. The result was, of course, a wide, varied, and complex set of instructions. The new machine, the IBM 360 (introduced in 1964), was unlike previous computers in that it was created not as hardware but as an architecture. IBM developed specifications and rules for how the machine would operate but enabled the actual machine to be created from any hardware implementation designers found most expedient. In other words, IBM defined the instructions that the 360 would use but not the circuitry that would carry them out. Previous computers used instructions that directly controlled the underlying hardware. To adapt the instructions defined by the architecture to the actual hardware that made up the machine, IBM adopted an idea called microcode, originally conceived by Maurice Wilkes at Cambridge University.

In the microcode design, an instruction causes a computer to execute a small program to carry out the logic instructions required by the instruction. The collection of small programs for all the instructions the computer understands is its microcode.
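A toy model makes the idea concrete. In the sketch below, each instruction name looks up a small list of micro-steps, and the machine runs them in order. The instruction names (`INC`, `NEG`), the micro-step functions, and the dictionary that holds the machine state are all hypothetical; real microcode is specific to each chip and far more elaborate.

```python
# Hypothetical micro-steps: each one makes a single small change to the machine state.
def fetch_operand(state, addr):
    state["temp"] = state["memory"][addr]


def increment_temp(state, addr):
    state["temp"] += 1


def negate_temp(state, addr):
    state["temp"] = -state["temp"]


def write_back(state, addr):
    state["memory"][addr] = state["temp"]


# The "microcode": one small program of micro-steps per instruction.
MICROCODE = {
    "INC": [fetch_operand, increment_temp, write_back],
    "NEG": [fetch_operand, negate_temp, write_back],
}


def execute(state, instruction, addr):
    """Run the micro-program for one instruction; return how many steps it took."""
    steps = MICROCODE[instruction]
    for step in steps:
        step(state, addr)
    return len(steps)


if __name__ == "__main__":
    machine = {"memory": [5, 7], "temp": 0}
    print(execute(machine, "INC", 0), machine["memory"])
```

Changing what `INC` does means editing one entry in the table, not rebuilding the machine. The price is visible in the return value: a single instruction costs several steps.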

Although the additional layer of microcode made machines more complex, it added a great deal of design flexibility. Engineers could incorporate whatever new technologies they wanted inside the computer, yet still run the same software with the same instructions originally written for older designs. In other words, microcode enabled new hardware designs and computer systems to have backward compatibility with earlier machines.

After the introduction of the IBM 360, nearly all mainframe computers used microcode. When the microprocessors came along, they followed the same design philosophy, using microcode to match instructions to hardware. Using this design, a microprocessor actually has a smaller microprocessor inside it, which is sometimes called a nanoprocessor, running the microcode.

This microcode-and-nanoprocessor approach makes creating a complex microprocessor easier. The powerful data-processing circuitry of the chip can be designed independently of the instructions it must carry out. The manner in which the chip handles its complex instructions can be fine-tuned even after the architecture of the main circuits is laid into place. Bugs in the design can be fixed relatively quickly by altering the microcode, which is an easy operation compared to the alternative of developing a new design for the whole chip (a task that’s not trivial when millions of transistors are involved). The rich instruction set fostered by microcode also makes writing software for the microprocessor (and computers built from it) easier, because fewer instructions are needed for each operation.

Microcode has a big disadvantage, however. It makes computers and microprocessors more complicated. In a microprocessor, the nanoprocessor must go through several of its own microcode instructions to carry out every instruction you send to the microprocessor. More steps means more processing time taken for each instruction. Extra processing time means slower operation. Engineers found that microcode had its own way to compensate for its performance penalty—complex instructions.

Using microcode, computer designers could easily give an architecture a rich repertoire of instructions that carry out elaborate functions. A single, complex instruction might do the job of half a dozen or more simpler instructions. Although each instruction would take longer to execute because of the microcode, programs would need fewer instructions overall. Moreover, adding more instructions could boost speed. One result of this microcode “more is merrier” instruction approach is that typical computer microprocessors have seven different subtraction commands.

RISC

Although long the mainstay of computer and microprocessor design, microcode is not necessary. While system architects were staying up nights concocting ever more powerful and obscure instructions, a counter force was gathering. Starting in the 1970s, the microcode approach came under attack by researchers who claimed it takes a greater toll on performance than its benefits justify.

By eliminating microcode, this design camp believed, simpler instructions could be executed at speeds so much higher that no degree of instruction complexity could compensate. By necessity, such hard-wired machines would offer only a few instructions because the complexity of their hard-wired circuitry would increase dramatically with every additional instruction added. Practical designs are best made with small instruction sets.

John Cocke at IBM’s Yorktown Research Laboratory analyzed the usage of instructions by computers and discovered that most of the work done by computers involves relatively few instructions. Given a computer with a set of 200 instructions, for example, two-thirds of its processing involves using as few as 10 of the total instructions. Cocke went on to design a computer that was based on a few instructions that could be executed quickly. He is credited with inventing the Reduced Instruction Set Computer (RISC) in 1974. The term RISC itself is credited to David Patterson, who used it in a course in microprocessor design at the University of California at Berkeley in 1980.

The first chip to bear the label and to take advantage of Cocke’s discoveries was RISC-I, a laboratory design that was completed in 1982. To distinguish this new design approach from traditional microprocessors, microcode-based systems with large instruction sets have come to be known as Complex Instruction Set Computers (CISC).

Cocke’s research showed that most of the computing was done by basic instructions, not by the more powerful, complex, and specialized instructions. Further research at Berkeley and Stanford Universities demonstrated that there were even instances in which a sequence of simple instructions could perform a complex task faster than a single complex instruction could. The result of this research is often summarized as the 80/20 Rule, meaning that about 20 percent of a computer’s instructions do about 80 percent of the work. The aim of the RISC design is to optimize a computer’s performance for that 20 percent of instructions, speeding up their execution as much as possible. The remaining 80 percent of the commands could be duplicated, when necessary, by combinations of the quick 20 percent. Analysis and practical experience have shown that the 20 percent could be made so much faster that the overhead required to emulate the remaining 80 percent was no handicap at all.

Enabling a microprocessor to carry out all the required functions with a handful of instructions requires a rethinking of the programming process. Instead of simply translating human instructions into machine-readable form, the compilers used with RISC processors attempt to find the optimum instructions to use. The compiler takes a more in-depth look at the requested operations and finds the best way to handle them. The result was the creation of the optimizing compilers discussed in Chapter 3, “Software.”

In effect, the RISC design shifts a lot of the processing from the microprocessor to the compiler, so a lot of the work in running a program gets taken care of before the program actually runs. Of course, the compiler does more work and takes longer to run, but that’s a fair tradeoff: a program needs to be compiled only once but runs many, many times, and every one of those runs is when the streamlined execution really pays off.

RISC microprocessors have several distinguishing characteristics. Most instructions execute in a single clock cycle—or even faster with advanced microprocessor designs with several execution pathways. All the instructions are the same length with similar syntax. The processor itself does not use microcode; instead, the small repertory of instructions is hard-wired into the chip. RISC instructions operate only on data in the registers of the chip, not in memory, making what is called a load-store design. The design of the chip itself is relatively simple, with comparatively few logic gates that are themselves constructed from simple, almost cookie-cutter designs. And most of the hard work is shifted from the microprocessor itself to the compiler.

Micro-Ops

Both CISC and RISC have a compelling design rationale and performance, desirable enough that engineers working on one kind of chip often looked over the shoulders of those working in the other camp. As a result, they developed hybrid chips embodying elements of both the CISC and RISC design. All the latest processors—from the Pentium Pro to the Pentium 4, Athlon, and Duron as well—have RISC cores mated with complex instruction sets.

The basic technique involves converting the classic Intel instructions into RISC-style instructions to be processed by the internal chip circuitry. Intel calls the internal RISC-like instructions micro-ops. The term is often abbreviated as uops (strictly speaking, the initial u should be the Greek letter mu, which is an abbreviation for micro) and pronounced you-ops. Other companies use slightly different terminology.

By design, the micro-ops sidestep the primary shortcomings of the Intel instruction set by making the encoding of all commands more uniform, converting all instructions to the same length for processing, and eliminating arithmetic operations that directly change memory by loading memory data into registers before processing.
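The flavor of this translation can be sketched in a few lines. The example below is purely illustrative: the tuple format, the bracket notation for memory operands, and the temporary register name `tmp0` are our own inventions, and the actual micro-ops inside any commercial chip are not modeled here.

```python
def is_memory(operand: str) -> bool:
    """In this sketch, an operand written in brackets lives in memory."""
    return operand.startswith("[") and operand.endswith("]")


def to_micro_ops(instruction):
    """Translate one (opcode, dest, src) instruction into load-store style steps."""
    opcode, dest, src = instruction
    if is_memory(dest) and is_memory(src):
        raise ValueError("this sketch allows at most one memory operand")
    if is_memory(dest):
        # Arithmetic that would change memory directly becomes load, operate, store.
        return [("LOAD", "tmp0", dest), (opcode, "tmp0", src), ("STORE", dest, "tmp0")]
    if is_memory(src):
        # A memory source is first loaded into a temporary register.
        return [("LOAD", "tmp0", src), (opcode, dest, "tmp0")]
    # Register-to-register instructions pass through as a single step.
    return [(opcode, dest, src)]


if __name__ == "__main__":
    for op in to_micro_ops(("ADD", "[counter]", "eax")):
        print(op)
```

Every step that comes out has the same three-field shape, and only the `LOAD` and `STORE` steps touch memory. Those are the two properties the paragraph above describes.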

The translation to RISC-like instructions allows the microprocessor to function internally as a RISC engine. The code conversion occurs in hardware, completely invisible to your applications and out of the control of programmers. In other words, it reverses the RISC approach of shifting work to the compiler and moves that work back into the chip. There’s a good reason for this backward shift: It lets the RISC core deal with existing programs, those compiled before the RISC designs were created.

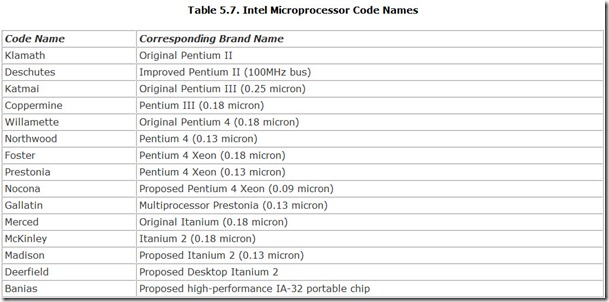

Single Instruction, Multiple Data

In a quest to improve the performance of Intel microprocessors on common multimedia tasks, Intel’s hardware and software engineers analyzed the operations multimedia programs most often required. They then sought the most efficient way to enable their chips to carry out these operations. They essentially worked to enhance the signal-processing capabilities of their general-purpose microprocessors so that they would be competitive with dedicated processors, such as digital signal processor (DSP) chips. They called the technology they developed Single Instruction, Multiple Data (SIMD). In effect a new class of microprocessor instructions, SIMD is the enabling element of Intel’s MultiMedia Extensions (MMX) to its microprocessor command set. Intel further developed this technology to add its Streaming SIMD Extensions (SSE, once known as the Katmai New Instructions) to its Pentium III microprocessors to enhance their 3D processing power. The Pentium 4 further enhances SSE with more multimedia instructions to create what Intel calls SSE2.

As the name implies, SIMD allows one microprocessor instruction to operate across several bytes or words (or even larger blocks of data). In the MMX scheme of things, the SIMD instructions are matched to the 64-bit data buses of Intel’s Pentium and newer microprocessors. All data, whether it originates as bytes, 16-bit words, or 32-bit double-words, gets packed into 64-bit form. Eight bytes, four words, or two double-words get packed into a single 64-bit package that, in turn, gets loaded into a 64-bit register in the microprocessor. One microprocessor instruction then manipulates the entire 64-bit block.
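You can imitate a packed operation in software to see what the hardware is doing. The sketch below packs eight bytes into one 64-bit integer and then adds two such packages with a single expression, letting each byte wrap around on overflow without disturbing its neighbors. The function names are ours, and the masking trick stands in for what a SIMD unit does directly in circuitry.

```python
LOW7 = 0x7F7F7F7F7F7F7F7F   # the low seven bits of every byte lane
HIGH = 0x8080808080808080   # the top bit of every byte lane


def pack_bytes(values):
    """Pack exactly eight byte values into one 64-bit integer, first value lowest."""
    if len(values) != 8:
        raise ValueError("need exactly eight byte values")
    packed = 0
    for lane, value in enumerate(values):
        packed |= (value & 0xFF) << (8 * lane)
    return packed


def unpack_bytes(packed):
    """Split a 64-bit integer back into its eight byte lanes."""
    return [(packed >> (8 * lane)) & 0xFF for lane in range(8)]


def packed_add_bytes(a, b):
    """Add eight byte lanes at once; each lane wraps modulo 256 on its own."""
    # Add the low seven bits of each lane (no carry can escape a lane),
    # then fold the top bits back in with XOR.
    return ((a & LOW7) + (b & LOW7)) ^ ((a ^ b) & HIGH)


if __name__ == "__main__":
    pixels = pack_bytes([1, 2, 3, 4, 5, 6, 7, 250])
    brighten = pack_bytes([10] * 8)
    print(unpack_bytes(packed_add_bytes(pixels, brighten)))
```

The last lane shows the wraparound: 250 plus 10 comes out as 4, and the other seven lanes are unaffected.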

Although the approach at first appears counterintuitive, it improves the handling of common graphic and audio data. In video processor applications, for example, it can trim the number of microprocessor clock cycles for some operations by 50 percent or more.

Very Long Instruction Words

Just as RISC started flowing into the product mainstream, a new idea started designers thinking in the opposite direction. Very long instruction word (VLIW) technology at first appears to run against the RISC stream by using long, complex instructions. In reality, VLIW is a refinement of RISC meant to better take advantage of superscalar microprocessors. Each very long instruction word is made from several RISC instructions. In a typical implementation, eight 32-bit RISC instructions combine to make one instruction word.

Ordinarily, combining RISC instructions would add little to overall speed. As with RISC, the secret of VLIW technology is in the software—the compiler that produces the final program code. The instructions in the long word are chosen so that they execute at the same time (or as close to it as possible) in parallel processing units in the superscalar microprocessor. The compiler chooses and arranges instructions to match the needs of the superscalar processor as best as possible, essentially taking the optimizing compiler one step further. In essence, the VLIW system takes advantage of preprocessing in the compiler to make the final code and microprocessor more efficient.
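The compiler’s job of filling long words can be sketched as a simple packing problem. The example below is a deliberately naive, in-order version: it starts a new word whenever an instruction needs a result produced earlier in the same word, or when the word is full. The instruction format (a destination register plus a tuple of source registers), the function name `bundle`, and the default width of eight are assumptions for illustration. Real VLIW compilers reorder instructions and weigh many more constraints.

```python
def bundle(instructions, width=8):
    """Greedily pack (dest, sources) instructions into long words of `width` slots.

    Instructions stay in program order. An instruction may share a word only
    if it neither reads nor rewrites a register written earlier in that word.
    """
    words = []
    current, written = [], set()
    for dest, sources in instructions:
        depends = dest in written or any(src in written for src in sources)
        if depends or len(current) == width:
            words.append(current)          # close the current long word
            current, written = [], set()
        current.append((dest, sources))
        written.add(dest)
    if current:
        words.append(current)
    return words


if __name__ == "__main__":
    program = [
        ("r1", ("a", "b")),
        ("r2", ("c", "d")),
        ("r3", ("r1", "r2")),   # needs both earlier results
        ("r4", ("e",)),
    ]
    for number, word in enumerate(bundle(program)):
        print(number, [dest for dest, _ in word])
```

The four instructions here fit into two long words. The third instruction forces the break because it depends on the first two.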

VLIW technology also takes advantage of the wider bus connections of the latest generation of microprocessors. Existing chips link to their support circuitry with 64-bit buses. Many have 128-bit internal buses. The 256-bit very long instruction words push a little further yet enable a microprocessor to load several cycles of work in a single memory cycle. Transmeta’s Crusoe processor uses VLIW technology.

Performance-Enhancing Architectures

Functionally, the first microprocessors operated a lot like meat grinders. You put something in such as meat scraps, turned a crank, and something new and wonderful came out—a sausage. Microprocessors started with data and instructions and yielded answers, but operationally they were as simple and direct as turning a crank. Every operation carried out by the microprocessor clicked with a turn of the crank—one clock cycle, one operation.

Such a design is straightforward and almost elegant. But its wonderful simplicity imposes a heavy constraint. The computer’s clock becomes an unforgiving jailor, locking up the performance of the microprocessor. A chip with this turn-the-crank design is locked to the clock speed and can never improve its performance beyond one operation per clock cycle. The situation is worse than that. The use of microcode almost ensures that at least some instructions will require multiple clock cycles.

One way to speed up the execution of instructions is to reduce the number of internal steps the microprocessor must take for execution. That idea was the guiding principle behind the first RISC microprocessors and what made them so interesting to chip designers. Actually, however, step reduction can take one of two forms: making the microprocessor more complex so that steps can be combined or making the instructions simpler so that fewer steps are required. Both approaches have been used successfully by microprocessor designers—the former as CISC microprocessors, the latter as RISC.

Ideally, it would seem, executing one instruction every clock cycle would be the best anyone could hope for, the ultimate design goal. With conventional microprocessor designs, that would be true. But engineers have found another way to trim the clock cycles required by each instruction—by processing more than one instruction at the same time.

Two basic approaches to processing more instructions at once are pipelining and superscalar architecture. All modern microprocessors take advantage of these technologies as well as several other architectural refinements that help them carry out more instructions for every cycle of the system clock.

Clock Speed

The operating speed of a microprocessor is usually called its clock speed, which describes the frequency at which the core logic of the chip operates. Clock speed is usually measured in megahertz (one million hertz or clock cycles per second) or gigahertz (a billion hertz). All else being equal, a higher number in megahertz means a faster microprocessor.

Faster does not necessarily mean the microprocessor will compute an answer more quickly, however. Different microprocessor designs can execute instructions more efficiently because there’s no one-to-one correspondence between instruction processing and clock speed. In fact, each new generation of microprocessor has been able to execute more instructions per clock cycle, so a new microprocessor can carry out more instructions at a given megahertz rating. At the same megahertz rating, a Pentium 4 is faster than a Pentium III. Why? Because of pipelining, superscalar architecture, and other design features.
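The relationship between clock speed and real throughput can be sketched with a little arithmetic. The following Python fragment is only an illustration: the function name and the instructions-per-cycle figures are assumptions chosen for the example, not measurements of any actual chip.

```python
def instructions_per_second(clock_hz: float, ipc: float) -> float:
    """Throughput = clock frequency x average instructions completed per cycle."""
    return clock_hz * ipc

# Two hypothetical chips at the same clock speed.
# The IPC values are illustrative assumptions, not measured figures.
older = instructions_per_second(1_000_000_000, 0.8)  # 1GHz, 0.8 instructions/cycle
newer = instructions_per_second(1_000_000_000, 1.2)  # 1GHz, 1.2 instructions/cycle

print(f"older design: {older / 1e6:.0f} million instructions per second")
print(f"newer design: {newer / 1e6:.0f} million instructions per second")
print(f"speedup at the same clock: {newer / older:.2f}x")
```

Under these assumed numbers, the newer design does half again as much work without any change in its megahertz rating.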

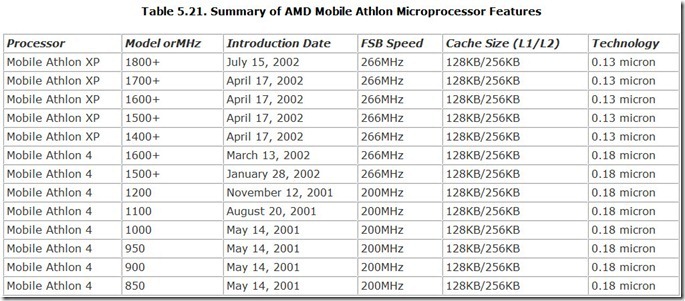

Sometimes microprocessor-makers take advantage of this fact and claim that megahertz doesn’t matter. For example, AMD’s Athlon processors carry out more instructions per clock cycle than Intel’s Pentium III, so AMD stopped using megahertz numbers to describe its chips. Instead, it substituted model designations that hinted at the speed of a comparable Pentium chip. An Athlon XP 2200+ processes data as quickly as a Pentium 4 chip running at 2200MHz, although the Athlon chip actually operates at less than 2000MHz. With the introduction of its Itanium series of processors, Intel also asserted that megahertz doesn’t matter because Itanium chips have clock speeds substantially lower than Pentium chips.

A further complication is software overhead. Microprocessor speed doesn’t affect the performance of Windows or its applications very much. That’s because the performance of Windows depends on the speed of your hard disk, video system, memory system, and other system resources as well as your microprocessor. Although a Windows system using a 2GHz processor will appear faster than a system with a 1GHz processor, it won’t be anywhere near twice as fast.

In other words, the megahertz rating of a microprocessor gives only rough guidance in comparing microprocessor performance in real-world applications. Faster is better, but a comparison of megahertz (or gigahertz) numbers does not necessarily express the relationship between the performance of two chips or computer systems.

Pipelining

In older microprocessor designs, a chip works single-mindedly. It reads an instruction from memory, carries it out, step by step, and then advances to the next instruction. Each step requires at least one tick of the microprocessor’s clock. Pipelining enables a microprocessor to read an instruction, start to process it, and then, before finishing with the first instruction, read another instruction. Because every instruction requires several steps, each in a different part of the chip, several instructions can be worked on at once and passed along through the chip like a bucket brigade (or its more efficient alternative, the pipeline). Intel’s Pentium chips, for example, have four levels of pipelining. Up to four different instructions may be undergoing different phases of execution at the same time inside the chip. When operating at its best, pipelining reduces the multiple-step/multiple-clock-cycle processing of an instruction to a single clock cycle.
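A rough cycle count shows where the gain comes from. The sketch below assumes an idealized pipeline in which every stage takes exactly one clock cycle and nothing ever stalls; the function names are invented for the illustration.

```python
def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages

def cycles_pipelined(n_instructions: int, n_stages: int) -> int:
    """The first instruction takes n_stages cycles to fill the pipeline;
    after that, one instruction completes on every cycle (ideal case, no stalls)."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)

# 100 instructions through a four-stage design, as in the Pentium example above.
print(cycles_unpipelined(100, 4))  # 400 cycles
print(cycles_pipelined(100, 4))    # 103 cycles
```

Over a long run of instructions, the pipelined count approaches one cycle per instruction, which is the best case the text describes.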

Pipelining is very powerful, but it is also demanding. The pipeline must be carefully organized, and the parallel paths kept carefully in step. It’s sort of like a chorus singing a canon such as Frère Jacques—one missed beat and the harmony falls apart. If one of the execution stages delays, all the rest delay as well. The demands of pipelining push microprocessor designers to make all instructions execute in the same number of clock cycles. That way, keeping the pipeline in step is easier.

In general, the more stages to a pipeline, the greater acceleration it can offer. Intel has added superlatives to the pipeline name to convey the enhancement. Super-pipelining is Intel’s term for breaking the basic pipeline stages into several steps, resulting in a 12-stage design used for its Pentium Pro through Pentium III chips. Later, Intel further sliced the stages to create the current Pentium 4 chip with 20 stages, a design Intel calls hyper-pipelining.

Real-world programs conspire against lengthy pipelines, however. Nearly all programs branch. That is, their execution can take alternate paths down different instruction streams, depending on the results of calculations and decision-making. A pipeline can load up with instructions of one program branch before it discovers that another branch is the one the program is supposed to follow. In that case, the entire contents of the pipeline must be dumped and the whole thing loaded up again. The result is a lot of logical wheel-spinning and wasted time. The bigger the pipeline, the more time that’s wasted. The waste resulting from branching begins to outweigh the benefits of bigger pipelines in the vicinity of five stages.
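A simple model makes the cost of dumping the pipeline concrete. This sketch assumes that a wrong path is discovered only in the last pipeline stage, so a flush throws away one cycle for every earlier stage. The branch fraction and wrong-path rate are made-up figures for illustration only.

```python
def average_cycles_per_instruction(stages: int,
                                   branch_fraction: float,
                                   wrong_path_rate: float) -> float:
    """Ideal pipelined cost (1 cycle) plus the average cost of flushes.

    Assumption: a flush wastes (stages - 1) cycles, because the wrong path
    is only detected when the branch reaches the final stage.
    """
    flush_penalty = stages - 1
    return 1.0 + branch_fraction * wrong_path_rate * flush_penalty

# Hypothetical workload: 20% of instructions are branches, and the pipeline
# has loaded the wrong path half of the time.
for stages in (5, 12, 20):
    cpi = average_cycles_per_instruction(stages, 0.20, 0.50)
    print(f"{stages:2d} stages: {cpi:.1f} cycles per instruction on average")
```

With these assumed figures, the average cost per instruction climbs from 1.4 cycles at 5 stages to 2.9 cycles at 20 stages, which is why long pipelines need some way of loading the right branch more often.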

Branch Prediction

Today’s most powerful microprocessors are adopting a technology called branch prediction logic to deal with this problem. The microprocessor makes its best guess at which branch a program will take as it is filling up the pipeline. It then executes these most likely instructions. Because the chip is guessing at what to do, this technology is sometimes called speculative execution.

When the microprocessor’s guesses turn out to be correct, the chip benefits from the multiple-pipeline stages and is able to run through more instructions than clock cycles. When the chip’s guess turns out wrong, however, it must discard the results obtained under speculation and execute the correct code. The chip marks the data in later pipeline stages as invalid and discards it. Although the chip doesn’t lose time—the program would have executed in the same order anyway—it does lose the extra boost bequeathed by the pipeline.
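The text does not say how a chip arrives at its best guess. One widely taught scheme is the two-bit saturating counter, sketched below as an assumption rather than as a description of any particular Intel or AMD design. The class and function names are invented for the example.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: 0-1 predict 'not taken', 2-3 predict 'taken'."""

    def __init__(self) -> None:
        self.counter = 2  # start at 'weakly taken' (an arbitrary choice)

    def predict(self) -> bool:
        return self.counter >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the actual outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)

def accuracy(outcomes: list) -> float:
    """Fraction of branches guessed correctly over a sequence of outcomes."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)

# A loop branch: taken nine times, then falls through once, repeated ten times.
loop_branch = ([True] * 9 + [False]) * 10
print(f"correct guesses: {accuracy(loop_branch):.0%}")
```

On this loop pattern the predictor misses only the single exit from each pass through the loop, so it guesses right nine times out of ten.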

Speculative Execution

To further increase performance, more modern microprocessors use speculative execution. That is, the chip may carry out an instruction in a predicted branch before it confirms whether it has properly predicted the branch. If the chip’s prediction is correct, the instruction has already been executed, so the chip wastes no time. If the prediction was incorrect, the chip will have to execute a different instruction, which it would have to have done anyhow, so it suffers no penalty.

Superscalar Architectures

The steps in a program normally are listed sequentially, but they don’t always need to be carried out exactly in order. Just as tough problems can be broken into easier pieces, program code can be divided as well. If, for example, you want to know the larger of two rooms, you have to compute the volume of each and then make your comparison. If you had two brains, you could compute the two volumes simultaneously. A superscalar microprocessor design does essentially that. By providing two or more execution paths for programs, it can process two or more program parts simultaneously. Of course, the chip needs enough innate intelligence to determine which problems can be split up and how to do it. The Pentium, for example, has two parallel, pipelined execution paths.

The first superscalar computer design was the Control Data Corporation 6600 mainframe, introduced in 1964. Designed specifically for intense scientific applications, the initial 6600 machines were built from eight functional units and were the fastest computers in the world at the time of their introduction.

Superscalar architecture gets its name because it goes beyond the incremental increase in speed made possible by scaling down microprocessor technology. An improvement to the scale of a microprocessor design would reduce the size of the microcircuitry on the silicon chip. The size reduction shortens the distance signals must travel and lowers the amount of heat generated by the circuit (because the elements are smaller and need less current to effect changes). Some microprocessor designs lend themselves to scaling down. Superscalar designs get a more substantial performance increase by incorporating a more dramatic change in circuit complexity.

Together, the cycle-saving techniques of pipelining and superscalar architecture have dramatically cut the number of clock cycles required for the execution of a typical microprocessor instruction. Early microprocessors needed, on average, several cycles for each instruction. Today’s chips can often carry out multiple instructions in a single clock cycle. Engineers describe pipelined, superscalar chips by the number of instructions they can retire per clock cycle. They look at the number of instructions that are completed, because this best describes how much work the chip has accomplished (or can accomplish).

Out-of-Order Execution

No matter how well the logic of a superscalar microprocessor divides up a program, each pipeline is unlikely to get an equal share of the work. One or another pipeline will grind away while another finishes in an instant. Certainly the chip logic can shove another instruction down the free pipeline (if another instruction is ready). But if the next instruction depends on the results of the one before it, and that instruction is the one stuck grinding away in the other pipeline, the free pipeline stalls. It is available for work but can do no work, thus potential processor power gets wasted.

Like a good Type-A employee, a microprocessor can always look for something to do. It can check the program for the next instruction that doesn’t depend on unfinished earlier work and start on that instruction instead. This sort of ambitious approach to programs is termed out-of-order execution, and it helps microprocessors take full advantage of superscalar designs.

This sort of ambitious microprocessor faces a problem, however. It is no longer running the program in the order it was written, and the results might be other than the programmer had intended. Consequently, microprocessors capable of out-of-order execution don’t immediately post the results from their processing into their registers. The work gets carried out invisibly and the results of the instructions that are processed out of order are held in a buffer until the chip has finished the processing of all the previous instructions. The chip puts the results back into the proper order, checking to be sure that the out-of-order execution has not caused any anomalies, before posting the results to its registers. To the program and the rest of the outside world, the results appear in the microprocessor’s registers as if they had been processed in normal order, only faster.
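The idea of finishing out of order but posting results in order can be sketched in a few lines. This toy model assumes that all the instructions are independent and start together at cycle 0, and the instruction names and latencies are hypothetical. A real chip tracks dependencies and has a limited number of execution units.

```python
from collections import namedtuple

# name: label for the instruction; latency: cycles it needs to finish (assumed).
Instr = namedtuple("Instr", "name latency")

def retire_times(program):
    """Return (name, finish_cycle, retire_cycle) for each instruction.

    Every instruction starts at cycle 0 and finishes after its own latency,
    but its result is held back until all earlier instructions have retired.
    """
    schedule = []
    last_retire = 0
    for instr in program:
        finish = instr.latency
        last_retire = max(finish, last_retire)  # never retire before a predecessor
        schedule.append((instr.name, finish, last_retire))
    return schedule

program = [Instr("load", 5), Instr("add", 1), Instr("mul", 3), Instr("store", 7)]
for name, finish, retire in retire_times(program):
    print(f"{name:5s} finishes at cycle {finish}, retires at cycle {retire}")
```

Here the add is done after one cycle, but its result waits in the buffer until the slower load ahead of it retires at cycle 5.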

Register Renaming

Out-of-order execution often runs into its own problems. Two independently executable instructions may refer to or change the same register. In the original program, one would carry out its operation, then the other would do its work later. During superscalar out-of-order execution, the two instructions may want to work on the register simultaneously. Because that conflict would inevitably lead to confusing results and errors, an ordinary superscalar microprocessor would have to ensure the two instructions referencing the same register executed sequentially instead of in parallel, thus eliminating the advantage of its superscalar design.

To avoid such problems, advanced microprocessors use register renaming. Instead of a small number of registers with fixed names, they use a larger bank of registers that can be named dynamically. The circuitry in each chip converts the references made by an instruction to a specific register name to point instead to its choice of physical register. In effect, the program asks for the EAX register, and the chip says, “Sure,” and gives the program a register it calls EAX. If another part of the program asks for EAX, the chip pulls out a different register and tells the program that this one is EAX, too. The program takes the microprocessor’s word for it, and the microprocessor doesn’t worry because it has several million transistors to sort things out in the end.

And it takes several million transistors because the chip must track all references to registers. It does this to ensure that when one program instruction depends on the result in a given register, it has the right register and results dished up to it.
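A bare-bones rename table shows the bookkeeping involved. This is a sketch under simplifying assumptions: the class name and the size of the physical register bank are invented, and physical registers are never returned to the free list as they would be in a real chip.

```python
class RenameTable:
    """Maps architectural register names (such as EAX) to physical registers."""

    def __init__(self, n_physical: int) -> None:
        self.free = list(range(n_physical))  # physical registers not yet handed out
        self.mapping = {}                    # architectural name -> physical register

    def write(self, arch_reg: str) -> int:
        """An instruction that writes arch_reg gets a fresh physical register.
        Raises IndexError if the (assumed, never-recycled) bank runs out."""
        phys = self.free.pop(0)
        self.mapping[arch_reg] = phys
        return phys

    def read(self, arch_reg: str) -> int:
        """An instruction that reads arch_reg sees the most recent mapping."""
        return self.mapping[arch_reg]

table = RenameTable(n_physical=8)
first = table.write("EAX")   # one instruction writes EAX
source = table.read("EAX")   # a dependent instruction reads that same result
second = table.write("EAX")  # an unrelated instruction also asks for EAX

print(first, source, second)
```

The two writers receive different physical registers, so they no longer collide, while the dependent reader is still pointed at the result it actually needs.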

Explicitly Parallel Instruction Computing

With Intel’s shift to 64-bit architecture for its most powerful line of microprocessors (aimed, for now, at the server market), the company introduced a new instruction set to complement the new architecture. Called Explicitly Parallel Instruction Computing (EPIC), the design follows the precepts of RISC architecture by putting the hard work into software (the compiler), while retaining the advantages of longer instructions used by SIMD and VLIW technologies.

The difference between EPIC and older Intel chips is that the compiler takes a swipe at each program and determines where parallel processes can occur. It then optimizes the program code to sort out separate streams of execution that can be routed to different microprocessor pipelines and carried out concurrently. This not only relieves the chip from working to figure out how to divide up the instruction stream, but it also allows the software to more thoroughly analyze the code rather than trying to do it on the fly.

By analyzing and dividing the instruction streams before they are submitted to the microprocessor, EPIC trims the need for, and use of, speculative execution and branch prediction. The compiler can look ahead in the program, so it doesn’t have to speculate or predict. It knows how best to carry out a complex program.

Front-Side Bus

The instruction stream is not the only bottleneck in a modern computer. The core logic of most microprocessors operates much faster than other parts of most computers, including the memory and support circuitry. The microprocessor links to the rest of the computer through a connection called the system bus or the front-side bus. The speed at which the system bus operates sets a maximum limit on how fast the microprocessor can send data to other circuits (including memory) in the computer.

When the microprocessor needs to retrieve data or an instruction from memory, it must wait for this to come across the system bus. The slower the bus, the longer the microprocessor has to wait. More importantly, the greater the mismatch between microprocessor speed and system bus speed, the more clock cycles the microprocessor needs to wait. Applications that involve repeated calculations on large blocks of data—graphics and video in particular—are apt to require the most access to the system bus to retrieve data from memory. These applications are most likely to suffer from a slow system bus.

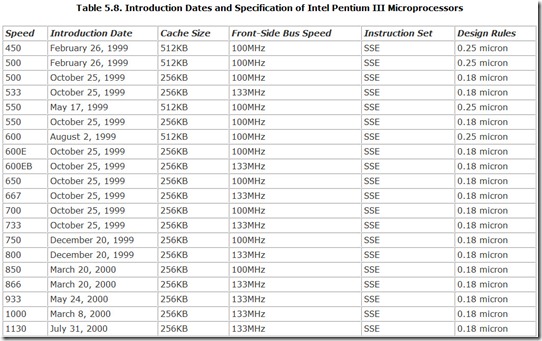

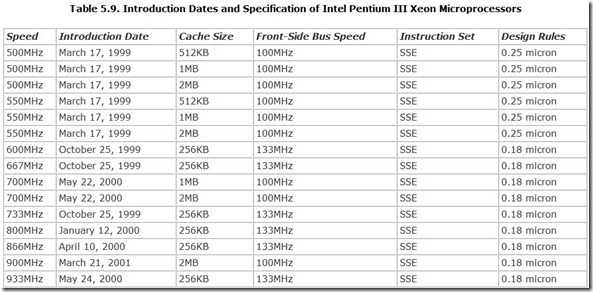

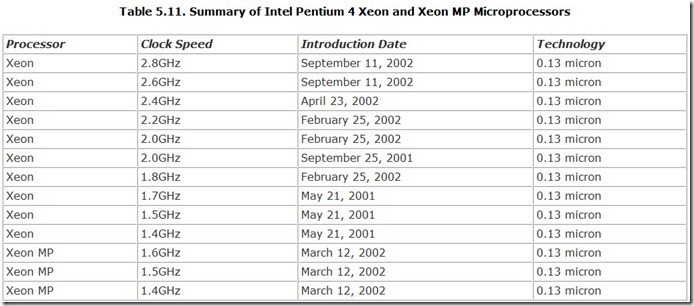

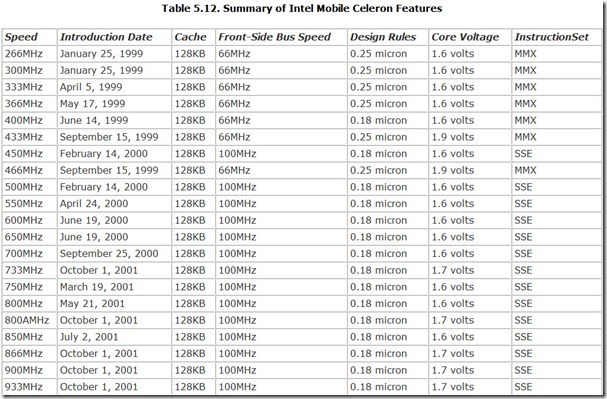

The first commercial microprocessors of the current generation operated their system buses at 66MHz. Through the years, manufacturers have boosted this speed and increased the speed at which the microprocessor can communicate with the rest of the computer. Chips now use clock speeds of 100MHz or 133MHz for their system buses.

With the Pentium 4, Intel added a further refinement to the system bus. Using a technology called quad-pumping, Intel forces four data bits onto each clock cycle of the system bus. A quad-pumped system bus operating at 100MHz can actually move 400Mb of data in a second on each of its data lines. A quad-pumped system bus running at 133MHz achieves a 533Mbps data rate per line.

The performance of the system bus is often described in terms of its bandwidth, the total number of bytes of data that can move through the bus in one second. The data buses of all current computers are 64 bits wide—that’s 8 bytes. Multiplying the clock speed or data rate of the bus by its width yields its bandwidth. A 100MHz system bus therefore has an 800MBps (megabytes per second) bandwidth. A 400MHz bus has a 3.2GBps bandwidth.
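The bandwidth arithmetic is easy to check for yourself. In this sketch the function and parameter names are invented for the example. A quad-pumped bus is modeled as four transfers per clock, and the 133MHz case uses exactly 133,000,000 Hz rather than the nominal 133.33MHz.

```python
def bus_bandwidth_bytes_per_second(clock_hz: int,
                                   transfers_per_clock: int,
                                   width_bits: int) -> int:
    """Bandwidth = clock x transfers per clock x bus width in bytes."""
    return clock_hz * transfers_per_clock * width_bits // 8

MHZ = 1_000_000

plain_100 = bus_bandwidth_bytes_per_second(100 * MHZ, 1, 64)  # 800 MBps
quad_100 = bus_bandwidth_bytes_per_second(100 * MHZ, 4, 64)   # 3.2 GBps
quad_133 = bus_bandwidth_bytes_per_second(133 * MHZ, 4, 64)

for label, value in (("100MHz", plain_100),
                     ("100MHz quad-pumped", quad_100),
                     ("133MHz quad-pumped", quad_133)):
    print(f"{label}: {value / 1e9:.3f} GBps")
```

The quad-pumped 100MHz bus delivers the same 3.2GBps as a conventional bus with a 400MHz data rate, which is why the two are often described interchangeably.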

Intel usually locks the system bus speed to the clock speed of the microprocessor. This synchronous operation optimizes the transfer rate between the bus and the microprocessor. It also explains some of the odd frequencies at which some microprocessors are designed to operate (for example, 1.33GHz or 2.53GHz). Sometimes the system bus operates at a speed that’s not an even divisor of the microprocessor speed—for example, the microprocessor clock speed may be 4.5, 5, or 5.5 times the system bus speed. Such a mismatch can slow down system performance, although such mismatches are minimized by effective microprocessor caching (see the upcoming section “Caching” for more information).

Translation Look-Aside Buffers

Modern pipelined, superscalar microprocessors need to access memory quickly, and they often repeatedly go to the same address in the execution of a program. To speed up such operations, most newer microprocessors include a quick lookup list of the pages in memory that the chip has addressed most recently. This list is termed a translation look-aside buffer. It is a small block of fast memory inside the microprocessor that stores a table cross-referencing the virtual addresses in programs with the corresponding real addresses in physical memory that the program has most recently used. The microprocessor can take a quick glance away from its normal address-translation pipeline, effectively “looking aside,” to fetch the addresses it needs.

The translation look-aside buffer (TLB) appears to be very small in relation to the memory of most computers. Typically, a TLB may hold 64 to 256 entries. Each entry, however, refers to an entire page of memory, which, with today’s Intel microprocessors, totals four kilobytes. The amount of memory that the microprocessor can quickly address by checking the TLB is the TLB address space, which is the product of the number of entries in the TLB and the page size. A 256-entry TLB can provide fast access to a megabyte of memory (256 entries times 4KB per page).
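Both the address-space calculation and the lookup itself can be sketched briefly. The TLB here is modeled as a plain Python dictionary, and the page and frame numbers are hypothetical. A real TLB is a hardware table with its own replacement rules.

```python
PAGE_SIZE = 4 * 1024  # 4KB pages, as described above

def tlb_address_space(entries: int, page_size: int = PAGE_SIZE) -> int:
    """Memory reachable through the TLB = number of entries x page size."""
    return entries * page_size

def translate(tlb: dict, virtual_address: int, page_size: int = PAGE_SIZE):
    """Return the physical address, or None on a TLB miss."""
    page, offset = divmod(virtual_address, page_size)
    frame = tlb.get(page)
    if frame is None:
        return None  # miss: the chip falls back on its normal, slower translation
    return frame * page_size + offset

print(tlb_address_space(256))  # 1048576 bytes, or one megabyte
print(tlb_address_space(64))   # 262144 bytes, or 256KB

# Hypothetical TLB holding one entry: virtual page 0x12 lives in physical frame 0x80.
tlb = {0x12: 0x80}
print(hex(translate(tlb, 0x12ABC)))  # 0x80abc
print(translate(tlb, 0x34000))       # None, because page 0x34 is not in the TLB
```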

Caching

The most important means of matching today’s fast microprocessors to the speeds of affordable memory, which is inevitably slower, is memory caching. A memory cache interposes a block of fast memory—typically high-speed static RAM—between the microprocessor and the bulk of primary storage. A special circuit called a cache controller (which current designs make into an essential part of the microprocessor) attempts to keep the cache filled with the data or instructions that the microprocessor is most likely to need next. If the information the microprocessor requests next is held within the cache, it can be retrieved without waiting.

This fastest possible operation is called a cache hit. If the needed data is not in the cache memory, it is retrieved from outside the cache, typically from ordinary RAM at ordinary RAM speed. The result is called a cache miss.
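The payoff from cache hits can be estimated with a weighted average. The timings and hit rates below are illustrative assumptions, not figures for any real memory system, and the function name is invented for the example.

```python
def average_access_time(hit_rate: float, cache_ns: float, memory_ns: float) -> float:
    """Hits are served at cache speed; misses are served at ordinary RAM speed."""
    return hit_rate * cache_ns + (1.0 - hit_rate) * memory_ns

# Assumed timings: 2ns for a cache hit, 60ns for a trip to main memory.
for hit_rate in (0.50, 0.90, 0.95):
    t = average_access_time(hit_rate, cache_ns=2.0, memory_ns=60.0)
    print(f"hit rate {hit_rate:.0%}: average access takes {t:.1f}ns")
```

Under these assumptions, raising the hit rate from 50 percent to 95 percent cuts the average access time from 31ns to just under 5ns.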

Not all memory caches are created equal. Memory caches differ in many ways: size, logical arrangement, location, and operation.

Cache Level

Caches are sometimes described by their logical and electrical proximity to the microprocessor’s core logic. The closest physically and electrically to the microprocessor’s core logic is the primary cache, also called a Level One cache. A secondary cache (or Level Two cache) fits between the primary cache and main memory. The secondary cache usually is larger than the primary cache but operates at a lower speed (to make its larger mass of memory more affordable). Rarely is a tertiary cache (or Level Three cache) interposed between the secondary cache and memory.

In modern microprocessor designs, both the primary and secondary caches are part of the microprocessor itself. Older designs put the secondary cache in a separate part of a microprocessor module or in external memory.

Primary and secondary caches differ in the way they connect with the core logic of the microprocessor. A primary cache invariably operates at the full speed of the microprocessor’s core logic with the widest possible bit-width connection between the core logic and the cache. Secondary caches have often operated at a rate slower than the chip’s core logic, although all current chips operate the secondary cache at full core speed.

Cache Size

A major factor that determines how successful the cache will be is how much information it contains. The larger the cache, the more data it holds and the more likely any needed byte will be there when your system calls for it. Obviously, the best cache is one that’s as large as, and duplicates, the entirety of system memory. Of course, a cache that big is also absurd. You could use the cache as primary memory and forget the rest. The smallest cache would be a byte, also an absurd situation because it guarantees the next read is not in the cache. Chipmakers try to make caches as large as possible within the constraints of fabricating microprocessors affordably.

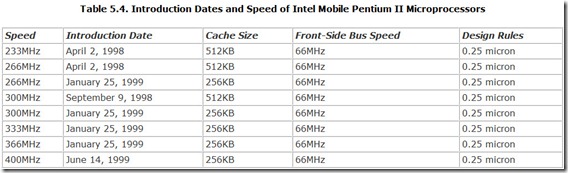

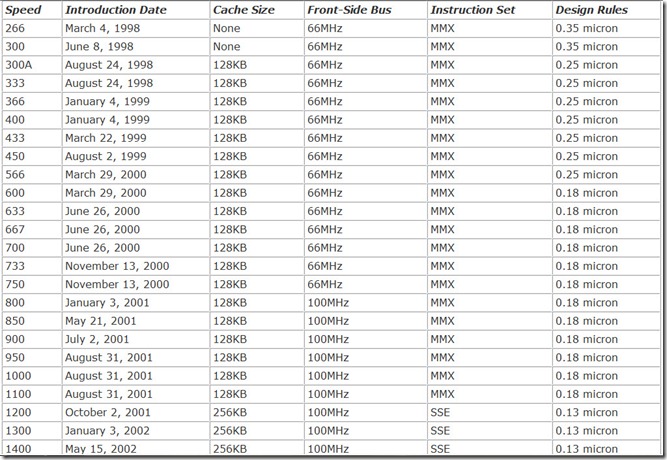

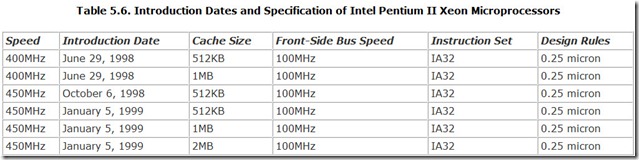

Today’s primary caches are typically 64 or 128KB. Secondary caches range from 128 to 512KB for chips for desktop and mobile applications and up to 2MB for server-oriented microprocessors.

Instruction and Data Caches

Modern microprocessors subdivide their primary caches into separate instruction and data caches, typically with each assigned one-half the total cache memory. This separation allows for a more efficient microprocessor design. Microprocessors handle instructions and data differently and may even send them down different pipelines. Moreover, instructions and data typically use memory differently—instructions are sequential whereas data can be completely random. Separating the two allows designers to optimize the cache design for each.

Write-Through and Write-Back Caches

Caches also differ in the way they treat writing to memory. Most caches make no attempt to speed up write operations. Instead, they push write commands through the cache immediately, writing to cache and main memory (with normal wait-state delays) at the same time. This write-through cache design is the safe approach because it guarantees that main memory and cache are constantly in agreement. Most Intel microprocessors through the current versions of the Pentium use write-through technology.

The faster alternative is the write-back cache, which allows the microprocessor to write changes to its cache memory and then immediately go back about its work. The cache controller eventually writes the changed data back to main memory as time allows.
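The difference between the two policies shows up in how often main memory is actually written. The two toy classes below are a sketch: their names are invented, caches and memory are modeled as dictionaries, and the write-back version copies data to memory only when flush() is called, standing in for "as time allows."

```python
class WriteThroughCache:
    """Every write goes to the cache and to main memory at the same time."""

    def __init__(self) -> None:
        self.lines = {}
        self.memory = {}
        self.memory_writes = 0

    def write(self, address: int, value: int) -> None:
        self.lines[address] = value
        self.memory[address] = value
        self.memory_writes += 1

class WriteBackCache:
    """Writes land in the cache only; changed (dirty) data is copied back later."""

    def __init__(self) -> None:
        self.lines = {}
        self.dirty = set()
        self.memory = {}
        self.memory_writes = 0

    def write(self, address: int, value: int) -> None:
        self.lines[address] = value
        self.dirty.add(address)

    def flush(self) -> None:
        for address in sorted(self.dirty):
            self.memory[address] = self.lines[address]
            self.memory_writes += 1
        self.dirty.clear()

through, back = WriteThroughCache(), WriteBackCache()
for value in range(100):          # update the same location 100 times
    through.write(0x1000, value)
    back.write(0x1000, value)
back.flush()

print(through.memory_writes, back.memory_writes)  # 100 versus 1
```

Both end up with the same final value in memory, but the write-back design also leaves a window before the flush in which cache and memory disagree, which is the risk the write-through design avoids.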

Cache Mapping

The logical configuration of a cache involves how the memory in the cache is arranged and how it is addressed (that is, how the microprocessor determines whether needed information is available inside the cache). The major choices are direct-mapped, full associative, and set-associative.

The direct-mapped cache divides the fast memory of the cache into small units, called lines (corresponding to the lines of storage used by Intel 32-bit microprocessors, which allow addressing in 16-byte multiples, blocks of 128 bits), each of which is identified by an index. Main memory is divided into blocks the size of the cache, and the lines in the cache correspond to the locations within such a memory block. Each line can be drawn from a different memory block, but only from the location corresponding to the location in the cache. Which block the line is drawn from is identified by a tag. For the cache controller—the electronics that ride herd on the cache—determining whether a given byte is stored in a direct-mapped cache is easy. It just checks the tag for a given index value.

The problem with the direct-mapped cache is that if a program regularly moves between addresses with the same indexes in different blocks of memory, the cache needs to be continually refreshed—which means cache misses. Although such operation is uncommon in single-tasking systems, it can occur often during multitasking and slow down the direct-mapped cache.
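A small simulation shows both the tag-and-index check and the thrashing problem. The cache size here (256 lines of 16 bytes, or 4KB) is a hypothetical choice for the example, and the names are invented. Only tags are stored, because hits and misses are all the sketch needs to count.

```python
LINE_SIZE = 16   # bytes per line, matching the 16-byte lines described above
NUM_LINES = 256  # assumed cache size: 256 lines x 16 bytes = 4KB

def split_address(address: int):
    """Break an address into (tag, index, offset) for this cache geometry."""
    offset = address % LINE_SIZE
    index = (address // LINE_SIZE) % NUM_LINES
    tag = address // (LINE_SIZE * NUM_LINES)  # which cache-sized block of memory
    return tag, index, offset

class DirectMappedCache:
    def __init__(self) -> None:
        self.tags = [None] * NUM_LINES  # one stored tag per line
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        tag, index, _ = split_address(address)
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag  # replace whatever line was stored at this index
        self.misses += 1
        return False

# Two addresses with the same index (0) but in different 4KB blocks.
thrash = DirectMappedCache()
for _ in range(5):
    thrash.access(0x0000)
    thrash.access(0x1000)
print(thrash.hits, thrash.misses)  # 0 hits, 10 misses

# Two addresses with different indexes coexist peacefully.
calm = DirectMappedCache()
for _ in range(5):
    calm.access(0x0000)
    calm.access(0x0010)
print(calm.hits, calm.misses)      # 8 hits, 2 misses
```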

The opposite design approach is the full-associative cache. In this design, each line of the cache can correspond to (or be associated with) any part of main memory. Lines of bytes from diverse locations throughout main memory can be piled cheek-by-jowl in the cache. The major shortcoming of the full-associative approach is that the cache controller must check the addresses of every line in the cache to determine whether a memory request from the microprocessor is a hit or miss. The more lines there are to check, the more time it takes. A lot of checking can make cache memory respond more slowly than main memory.

A compromise between direct-mapped and full-associative caches is the set-associative cache, which essentially divides up the total cache memory into several smaller direct-mapped areas. The cache is described by the number of ways into which it is divided. A four-way set-associative cache, therefore, resembles four smaller direct-mapped caches. This arrangement overcomes the problem of moving between blocks with the same indexes. Consequently, the set-associative cache has more performance potential than a direct-mapped cache. Unfortunately, it is also more complex, making the technology more expensive to implement. Moreover, the more “ways” there are to a cache, the longer the cache controller must search to determine whether needed information is in the cache. This ultimately slows down the cache, mitigating the advantage of splitting it into sets. Most computer-makers find a four-way set-associative cache to be the optimum compromise between performance and complexity.
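The benefit of extra ways can be seen by running the same troublesome access pattern through caches of equal size but different associativity. This sketch assumes 16-byte lines, a 4KB cache, and least-recently-used replacement within each set. None of those choices comes from the text, and the class name is invented.

```python
from collections import OrderedDict

LINE_SIZE = 16  # bytes per line (assumed)

class SetAssociativeCache:
    """ways=1 behaves as a direct-mapped cache; num_sets=1 is fully associative."""

    def __init__(self, num_sets: int, ways: int) -> None:
        self.num_sets = num_sets
        self.ways = ways
        # One ordered dict of tags per set, oldest (least recently used) first.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        line_number = address // LINE_SIZE
        index = line_number % self.num_sets
        tag = line_number // self.num_sets
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)     # mark as most recently used
            self.hits += 1
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)  # evict the least recently used line
        lines[tag] = True
        self.misses += 1
        return False

# Same 4KB capacity either way: 256 sets x 1 way, or 64 sets x 4 ways.
direct = SetAssociativeCache(num_sets=256, ways=1)
four_way = SetAssociativeCache(num_sets=64, ways=4)

# Alternate between two addresses that share an index in both caches.
for _ in range(5):
    for address in (0x0000, 0x1000):
        direct.access(address)
        four_way.access(address)

print(direct.hits, direct.misses)      # 0 hits, 10 misses
print(four_way.hits, four_way.misses)  # 8 hits, 2 misses
```

The four-way cache keeps both lines in the same set, so after the first two misses every access is a hit.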

Electrical Characteristics

At its heart, a microprocessor is an electronic device. This electronic foundation has important ramifications in the construction and operation of chips. The “free lunch” principle (that is, there is none) tells us that every operation has its cost. Even the quick electronic thinking of a microprocessor takes a toll. The thinking involves the switching of the state of tiny transistors, and each state change consumes a bit of electrical power, which gets converted to heat. The transistors are so small that the process generates a minuscule amount of heat, but with millions of them in a single chip, the heat adds up. Modern microprocessors generate so much heat that keeping them cool is a major concern in their design.

Thermal Constraints

Heat is the enemy of the semiconductor because it can destroy the delicate crystal structure of a chip. If a chip gets too hot, it will be irrevocably destroyed. Packing circuits tightly concentrates the heat they generate, and the small size of the individual circuit components makes them more vulnerable to damage.

Heat can cause problems more subtle than simple destruction. Because the conductivity of semiconductor circuits also varies with temperature, the effective switching speed of transistors and logic gates also changes when chips get too hot or too cold. Although this temperature-induced speed change does not alter how fast a microprocessor can compute (the chip must stay locked to the system clock at all times), it can affect the relative timing between signals inside the microprocessor. Should the timing get too far off, a microprocessor might make a mistake, with the inevitable result of crashing your system. All chips have rated temperature ranges within which they are guaranteed to operate without such timing errors.

Because chips generate more heat as speed increases, they can produce heat faster than it can radiate away. This heat buildup can alter the timing of the internal signals of the chip so drastically that the microprocessor will stop working and—as if you couldn’t guess—cause your system to crash. To avoid such problems, computer manufacturers often attach heatsinks to microprocessors and other semiconductor components to aid in their cooling.

A heatsink is simply a metal extrusion that increases the surface area from which heat can radiate from a microprocessor or other heat-generating circuit element. Most heatsinks have several fins (rows of pins) or some geometry that increases their surface area. Heatsinks are usually made from aluminum because that metal is one of the better thermal conductors, enabling the heat from the microprocessor to quickly spread across the heatsink.

Heatsinks provide passive cooling (passive because cooling requires no power-using mechanism). Heatsinks work by convection, transferring heat to the air that circulates past the heatsink. Air circulates around the heatsink because the warmed air rises away from the heatsink and cooler air flows in to replace it.

In contrast, active cooling involves some kind of mechanical or electrical assistance to remove heat. The most common form of active cooling is a fan, which blows a greater volume of air past the heatsink than would be possible with convection alone. Nearly all modern microprocessors require a fan for active cooling, typically built into the chip’s heatsink.

The makers of notebook computers face another challenge in efficiently managing the cooling of their computers. Using a fan to cool a notebook system is problematic. The fan consumes substantial energy, which trims battery life. Moreover, the heat generated by the fan motor itself can be a significant part of the thermal load of the system. Most designers of notebook machines have turned to more innovative passive thermal controls, such as heat pipes and using the entire chassis of the computer as a heatsink.

Operating Voltages

In desktop computers, overheating rather than excess electrical consumption is the major power concern. Even the most wasteful of microprocessors uses far less power than an ordinary light bulb. The most that any computer-compatible microprocessor consumes is about nine watts, hardly more than a night light and of little concern when the power grid supplying your computer has megawatts at its disposal.

If you switch to battery power, however, every last milliwatt is important. The more power used by a computer, the shorter the time its battery can power the system or the heavier the battery it will need to achieve a given life between charges. Every degree a microprocessor raises its case temperature clips minutes from its battery runtime.

Battery-powered notebooks and sub-notebook computers consequently caused microprocessor engineers to do a quick about-face. Whereas once they were content to use bigger and bigger heatsinks, fans, and refrigerators to keep their chips cool, today they focus on reducing temperatures and wasted power at the source.

One way to cut power requirements is to make the design elements of a chip smaller. Smaller digital circuits require less power. But shrinking chips is not an option; microprocessors are invariably designed to be as small as possible with the prevailing technology.

To further trim the power required by microprocessors to make them more amenable to battery operation, engineers have come up with two new design twists: low-voltage operation and system-management mode. Although founded on separate ideas, both are often used together to minimize microprocessor power consumption. All new microprocessor designs incorporate both technologies.

Since the very beginning of the transistor-transistor logic family of digital circuits (the design technology that later blossomed into the microprocessor), digital logic has operated with a supply voltage of 5 volts. That level is essentially arbitrary. Almost any voltage would work. But 5-volt technology offers some practical advantages. It’s low enough to be both safe and frugal with power needs but high enough to avoid noise and allow for several diode drops, the inevitable reduction of voltage that occurs when a current flows across a semiconductor junction.

Every semiconductor junction, which essentially forms a diode, drops part of the voltage applied across it when current flows through it. Silicon junctions impose a diode drop of about 0.7 volts, and there may be one or more such junctions in a logic gate. Other materials impose smaller drops (that of germanium, for example, is roughly 0.3 to 0.4 volts), but the drop is unavoidable.

There’s nothing magical about using 5 volts. Reducing the voltage used by logic circuits dramatically reduces power consumption because power consumption in electrical circuits increases with the square of the voltage. That is, doubling the voltage of a circuit increases the power it uses fourfold. Reducing the voltage by one-half reduces power consumption by three-quarters (provided, of course, that the circuit will continue to operate at the lower voltage).
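The square-law relationship is easy to check with a few lines of Python. This is only a minimal sketch: it assumes clock frequency and everything else about the circuit stay fixed, so that power scales purely with the square of the supply voltage. The function name `relative_power` is made up for the illustration.

```python
def relative_power(v_new: float, v_old: float) -> float:
    """Power at v_new as a fraction of power at v_old, assuming P is proportional to V squared."""
    return (v_new / v_old) ** 2


# Doubling the supply voltage quadruples the power.
print(relative_power(10.0, 5.0))

# Halving it leaves one quarter of the power, a three-quarters saving.
print(relative_power(2.5, 5.0))

# Moving from 5-volt logic to 3.3-volt logic saves a little over half.
print(round(1 - relative_power(3.3, 5.0), 3))
```

Real chips also have leakage and other losses that do not follow the square law exactly, so treat the result as a first approximation.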

All current microprocessor designs operate at about 2 volts or less. The Pentium 4 operates at just over 1.3 volts with minor variations, depending on the clock frequency of the chip. For example, the 2.53GHz version requires 1.325 volts. Microprocessors designed for mobile applications typically operate at about 1.1 volts; some as low as 0.95 volt.

To minimize power consumption, Intel sets the operating voltage of the core logic of its chips as low as possible—some, such as Intel’s ultra-low-voltage Mobile Pentium III-M, to just under 1 volt. The integral secondary caches of these chips (which are fabricated separately from the core logic) usually require their own, often higher, voltage supply. In fact, operating voltage has become so critical that Intel devotes several pins of its Pentium II and later microprocessors to encoding the voltage needs of the chip, and the host computer must adjust its supply to the chip to precisely meet those needs.

Most bus architectures and most of today’s memory modules operate at the 3.3 volt level. Future designs will push that level lower. Rambus memory systems, for example, operate at 2.5 volts (see Chapter 6, “Chipsets,” for more information).

Power Management

Trimming the power needs of a microprocessor both reduces the heat the chip generates and increases how long it can run off a battery supply, an important consideration for portable computers. Reducing the voltage and power use of the chip is one way of keeping the heat down and the battery running, but chipmakers have discovered they can save even more power through frugality. The chips use power only when they have to, thus managing their power consumption.

Chipmakers have two basic power-management strategies: shutting off circuits when they are not needed and slowing down the microprocessor when high performance is not required.

The earliest form of power saving built into microprocessors was part of system management mode (SMM), which allowed the circuitry of the chip to be shut off. In terms of clock speed, the chip went from full speed to zero. Initially, chips switched off after a period of system inactivity and woke up to full speed when triggered by an appropriate interrupt.

More advanced systems cycled the microprocessor between on and off states as they required processing power. The chief difficulty with this design is that nothing gets done when the chip isn’t processing. This kind of power management only works when you’re not looking (not exactly a salesman’s dream) and is a benefit you should never be able to see. Intel gives this technique the name QuickStart and claims that it can save enough energy between your keystrokes to significantly reduce overall power consumption by briefly cutting the microprocessor’s electrical needs by 95 percent. Intel introduced QuickStart in the Mobile Pentium II processor, although it has not widely publicized the technology.
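To see why cycling the processor between keystrokes pays off, consider the average power over time. The sketch below takes the 95 percent idle saving quoted above and combines it with a busy fraction, which is purely an illustrative assumption (no measured duty cycles appear in this chapter). The function name is hypothetical.

```python
def average_power_fraction(busy_fraction: float, idle_saving: float = 0.95) -> float:
    """Average power as a fraction of full power.

    busy_fraction: share of time the processor runs at full power (0 to 1).
    idle_saving:   fraction of power cut while idle (0.95 is the figure from the text).
    """
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be between 0 and 1")
    idle_power = 1.0 - idle_saving
    return busy_fraction * 1.0 + (1.0 - busy_fraction) * idle_power


# Illustrative duty cycles: always busy, half busy, and mostly waiting for keystrokes.
for busy in (1.0, 0.5, 0.1):
    print(f"busy {busy:.0%}: average power {average_power_fraction(busy):.1%} of full")
```

Under these assumptions, a processor that is busy only one-tenth of the time averages about 14.5 percent of its full power.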

In the last few years, chipmakers have approached the power problem with more advanced power-saving systems that take an intermediary approach. One way is to reduce microprocessor power when it doesn’t need it for particular operations. Intel slightly reduces the voltage applied to its core logic based on the activity of the processor. Called Intel Mobile Voltage Positioning (IMVP), this technology can reduce the thermal design power—which means the heat produced by the microprocessor—by about 8.5 percent. According to Intel, this reduction is equivalent to reducing the speed of a 750MHz Mobile Pentium III by 100MHz.

Another technique for saving power is to reduce the performance of a microprocessor when its top speed is not required by your applications. Instead of entirely switching off the microprocessor, the chipmakers reduce its performance to trim power consumption. Each of the three current major microprocessor manufacturers puts its own spin on this performance-as-needed technology, labeling it with a clever trademark. Intel offers SpeedStep, AMD offers PowerNow!, and Transmeta offers LongRun. Although at heart all three are conceptually much the same, in operation you’ll find distinct differences between them.

Intel SpeedStep

Internal mobile microprocessor power savings started with SpeedStep, introduced by Intel on January 18, 2000, with the Mobile Pentium III microprocessors, operating at 600MHz and 650MHz. To save power, these chips can be configured to reduce their operating speed when running on battery power to 500MHz. All Mobile Pentium III and Mobile Pentium 4 chips since that date have incorporated SpeedStep into their designs. Mobile Celeron processors do not use SpeedStep.

The triggering event is a reduction of power to the chip. For example, the initial Mobile Pentium III chips go from the 1.7 volts that is required for operating at their top speeds to 1.35 volts. As noted earlier, the M-series step down from 1.4 to 1.15 volts, the low-voltage M-series from 1.35 to 1.1 volts, and the ultra-low-voltage chips from 1.1 to 0.975 volts. Note that a 15-percent reduction in voltage in itself reduces power consumption by about 28 percent, with a further reduction that’s proportional to the speed decrease. The 600MHz Pentium III, for example, cuts its power consumption an additional 17 percent thanks to the lower clock speed when slipping down from 600MHz to 500MHz.
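The arithmetic behind these savings can be sketched as follows. The sketch assumes the common first-order model in which dynamic power is proportional to the square of the voltage times the clock frequency. The voltages and speeds come from the text, but the function name and the model itself are simplifications, not Intel's published figures.

```python
def power_ratio(v_low: float, v_high: float, f_low: float, f_high: float) -> float:
    """Low-mode power as a fraction of high-mode power, assuming P is proportional to V^2 * f."""
    return (v_low / v_high) ** 2 * (f_low / f_high)


# Saving from a 15 percent voltage cut alone (frequency unchanged).
voltage_only = 1 - power_ratio(0.85, 1.0, 1.0, 1.0)
print(f"15% voltage cut alone saves about {voltage_only:.0%}")

# Saving from dropping 600MHz to 500MHz alone (voltage unchanged).
speed_only = 1 - power_ratio(1.0, 1.0, 500, 600)
print(f"600MHz to 500MHz alone saves about {speed_only:.0%}")

# Both changes together for the initial Mobile Pentium III (1.7 V to 1.35 V).
combined = 1 - power_ratio(1.35, 1.7, 500, 600)
print(f"Combined saving is about {combined:.0%}")
```

Because the two factors multiply, the combined saving is smaller than the sum of the individual percentages.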

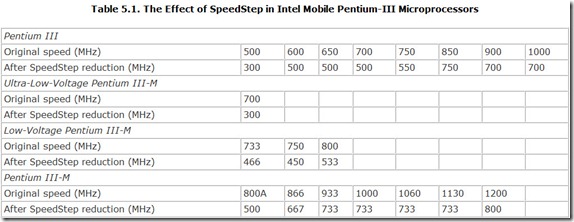

Intel calls the two modes Maximum Performance Mode (for high speed) and Battery Optimized Mode (for low speed). According to Intel, switching between speeds requires about one two-thousandth of a second (half a millisecond). The M-series of Mobile Pentium III adds an additional step to provide an intermediary level of performance when operating on battery. Intel calls this technology Enhanced SpeedStep. Table 5.1 lists the SpeedStep capabilities of many Intel chips.

AMD PowerNow!

Advanced Micro Devices devised its own power-saving technology, called PowerNow!, for its mobile processors. The AMD technology differs from Intel’s SpeedStep by providing up to 32 levels of speed reduction and power savings. Note that 32 levels is the design limit. Actual implementations of the technology from AMD have far fewer levels. All current AMD mobile processors—both the Mobile Athlon and Mobile Duron lines—use PowerNow! technology.

PowerNow! operates in one of three modes:

- Hi-Performance. This mode runs the microprocessor at full speed and full voltage so that the chip maximizes its processing power.

- Battery Saver. This mode runs the chip at a lower speed using a lower voltage to conserve battery power, exactly as a SpeedStep chip would, but with multiple levels. The speed and voltage are determined by the chip’s requirements and programming of the BIOS (which triggers the change).

- Automatic. This mode makes the changes in voltage and clock speed dynamic, responding to the needs of the system. When an application requires maximum processing power, the chip runs at full speed. As the need for processing declines, the chip adjusts its performance to match. Current implementations allow for four discrete speeds at various operating voltages. Automatic mode is the best compromise for normal operation of portable computers.

The actual means of varying the clock frequency involves dynamic control of the clock multiplier inside the AMD chip. The external oscillator or clock frequency the computer supplies the microprocessor does not change, regardless of the performance demand. In the case of the initial chip to use PowerNow! (the Mobile K6-2+ chip, operating at 550MHz), its actual operating speed could vary from 200MHz to 550MHz in a system that takes full advantage of the technology.

Control of PowerNow! starts with the operating system (which is almost always Windows). Windows monitors processor usage, and when it dips below a predetermined level, such as 50 percent, Windows signals the PowerNow! system to cut back the clock multiplier inside the microprocessor and then signals a programmable voltage regulator to trim the voltage going to the chip. Note that even with PowerNow!, the chip’s supply voltage must be adjusted externally to the chip to achieve the greatest power savings.

If the operating system detects that the available processing power is still underused, it signals to cut back another step. Similarly, should processing needs reach above a predetermined level (say, 90 percent of the available ability), the operating system signals PowerNow! to kick up performance (and voltage) by a notch.
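The step-up and step-down logic just described can be sketched as a small control loop. The 50 and 90 percent thresholds and the 200MHz to 550MHz range come from the text. The four-entry table of speeds and voltages, and all the names, are hypothetical values chosen for illustration; they are not AMD's actual PowerNow! settings or interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerState:
    mhz: int
    volts: float


# Hypothetical four-step table, slowest first. The endpoints (200MHz and 550MHz)
# are from the text; the intermediate speeds and all voltages are made up.
STATES = [
    PowerState(200, 1.4),
    PowerState(300, 1.6),
    PowerState(400, 1.8),
    PowerState(550, 2.0),
]

LOW_WATERMARK = 0.50   # step down when utilization falls below 50 percent
HIGH_WATERMARK = 0.90  # step up when utilization rises above 90 percent


def next_state_index(current: int, utilization: float) -> int:
    """Return the index of the next state, moving at most one notch per sample."""
    if utilization > HIGH_WATERMARK and current < len(STATES) - 1:
        return current + 1
    if utilization < LOW_WATERMARK and current > 0:
        return current - 1
    return current


def simulate(samples, start=len(STATES) - 1):
    """Feed utilization samples to the governor and record the chosen clock speeds."""
    index = start
    history = []
    for utilization in samples:
        index = next_state_index(index, utilization)
        history.append(STATES[index].mhz)
    return history


# Light load, then a burst of heavy work.
print(simulate([0.30, 0.20, 0.60, 0.95, 0.97]))  # [400, 300, 300, 400, 550]
```

Moving only one notch per sample keeps the sketch close to the description above, in which the operating system cuts back "another step" each time it finds the processor still underused.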

Transmeta LongRun

Transmeta calls its proprietary power-saving technology LongRun. It is a feature of both current Crusoe processors, the TM5500 and TM5800. In concept, LongRun is much like AMD’s PowerNow! The chief difference is control. Because of the design of Transmeta’s Crusoe processors, Windows instructions are irrelevant to their power usage—Crusoe chips translate the Windows instructions into their own format. To gauge its power needs, the Crusoe chip monitors the flow of its own native instructions and adjusts its speed to match the processing needs of that code stream. In other words, the Crusoe chip does its own monitoring and decision-making regarding power savings without regard to Windows power-conservation information.

According to Transmeta, LongRun allows its microprocessors to adjust their power consumption by changing their clock frequency on the fly, just as PowerNow! does, as well as to adjust their operating voltage. The processor core steps down processor speed in 33MHz increments, and each step holds the potential of reducing chip voltage. For example, trimming the speed of a chip from 667MHz to 633MHz also allows for reducing the operating voltage from 1.65 to 1.60 volts.

Packaging

The working part of a microprocessor is exactly what the nickname “chip” implies: a small flake of a silicon crystal no larger than a postage stamp. Although silicon is a fairly robust material with moderate physical strength, it is sensitive to chemical contamination. After all, semiconductors are grown in precisely controlled atmospheres, the chemical content of which affects the operating properties of the final chip. To prevent oxygen and contaminants in the atmosphere from adversely affecting the precision-engineered silicon, the chip itself must be sealed away. The first semiconductors, transistors, were hermetically sealed in tiny metal cans.

The art and science of semiconductor packaging has advanced since those early days. Modern integrated circuits (ICs) are often surrounded in epoxy plastic, an inexpensive material that can be easily molded to the proper shape. Unfortunately, microprocessors can get very hot, sometimes too hot for plastics to safely contain. Most powerful modern microprocessors are consequently cased in ceramic materials that are fused together at high temperatures. Older, cooler chips reside in plastic. The most recent trend in chip packaging is the development of inexpensive tape-based packages optimized for automated assembly of circuit boards.

The most primitive of microprocessors (that is, those of the early generation that had neither substantial signal nor power requirements) fit in the same style of housing popular for other integrated circuits, the infamous dual inline package (DIP). These packages grew more pins (or legs, as engineers sometimes call them) to accommodate the ever-increasing number of signals in data and address buses.

The DIP package is far from ideal for a number of reasons. Adding more connections, for example, makes for an ungainly chip. A centipede microprocessor would be a beast measuring a full five inches long. Not only would such a critter be hard to fit onto a reasonably sized circuit board, it would require that signals travel substantially farther to reach the end pins than those in the center. At modern operating frequencies, that difference in distance can amount to a substantial fraction of a clock cycle, potentially putting the pins out of sync.
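A back-of-the-envelope calculation shows how quickly the extra distance adds up. The sketch below assumes signals travel at roughly half the speed of light in package leads and circuit-board traces (about 15 centimeters per nanosecond), a common rule of thumb rather than a figure from this chapter. It also assumes the path to an end pin is about 2.5 inches longer than the path to a center pin, that is, half the length of the five-inch package. The signaling rates in the loop are illustrative.

```python
INCH_TO_CM = 2.54
SIGNAL_SPEED_CM_PER_NS = 15.0  # assumption: roughly half the speed of light


def skew_fraction_of_cycle(extra_inches: float, clock_ghz: float) -> float:
    """Extra travel time to the far pin, expressed as a fraction of one clock cycle."""
    delay_ns = extra_inches * INCH_TO_CM / SIGNAL_SPEED_CM_PER_NS
    period_ns = 1.0 / clock_ghz
    return delay_ns / period_ns


# Illustrative signaling rates: 100MHz, 533MHz, and 1GHz.
for ghz in (0.1, 0.533, 1.0):
    fraction = skew_fraction_of_cycle(2.5, ghz)
    print(f"{ghz * 1000:.0f}MHz: skew is about {fraction:.0%} of a clock cycle")
```

Under these assumptions the skew is a few percent of a cycle at 100MHz but grows to a large fraction of a cycle as the signaling rate approaches 1GHz.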

Modern chip packages are compact squares that avoid these problems. Engineers developed several separate styles to accommodate the needs of the latest microprocessors.

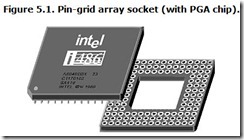

The most common is the pin grid array (PGA), a square package that varies in size with the number of pins that it must accommodate (typically about two inches square). The first PGA chips had 68 pins. Pentium 4 chips in PGA packages have up to 478.

No matter their number, the pins are spaced as if they were laid out on a checkerboard, making the “grid array” of the package name (see Figure 5.1).

To fit the larger number of pins used by wider-bus microprocessors into a reasonable space, Intel rearranged the pins of some processors (notably the Pentium Pro), staggering them so that they can fit closer together. The result is a staggered pin grid array (SPGA) package, as shown in Figure 5.2.

Pins take up space and add to the cost of fabrication, so chipmakers have developed a number of pinless packages. The first of these to find general use was the Leadless Chip Carrier (LCC) package. Instead of pins, this style of package has contact pads on one of its surfaces. The pads are plated with gold to avoid corrosion or oxidation that would impede the flow of the minute electrical signals used by the chip (see Figure 5.3). The pads are designed to press against springy mating contacts in a special socket. Once installed, the chip itself may be hidden in the socket, under a heat sink, or perhaps only the top of the chip may be visible, framed by the four sides of the socket.

A related design, the Plastic Leaded Chip Carrier (PLCC), substitutes epoxy plastic for the ceramic materials ordinarily used for encasing chips. Plastic is less expensive and easier to work with. Microprocessors with low thermal output sometimes use a housing designed to be soldered down: the Plastic Quad Flat Package (PQFP), sometimes called simply the quad flat pack because the chips are flat (they fit flat against the circuit board) and they have four sides (making them a quadrilateral, as shown in Figure 5.4).