Alternative Design Architectures

RISC: More Is Not Always Better

Most mainframe computers and personal computers use CISC (complex instruction set computer) architecture. In topic 2, "Computer Systems: Micros to Supercomputers," we learned that the term architecture, when applied to a computer, refers to a computer system’s design. A CISC computer’s machine language offers programmers a wide variety of instructions from which to choose (add, multiply, compare, move data, and so on). CISC computers reflect the evolution of increasingly sophisticated machine languages. Computer designers, however, are rediscovering the beauty of simplicity. Computers designed around much smaller instruction sets can realize significantly increased throughput for certain applications, especially those that involve graphics (for example, computer-aided design). These computers use RISC (reduced instruction set computer) architecture.

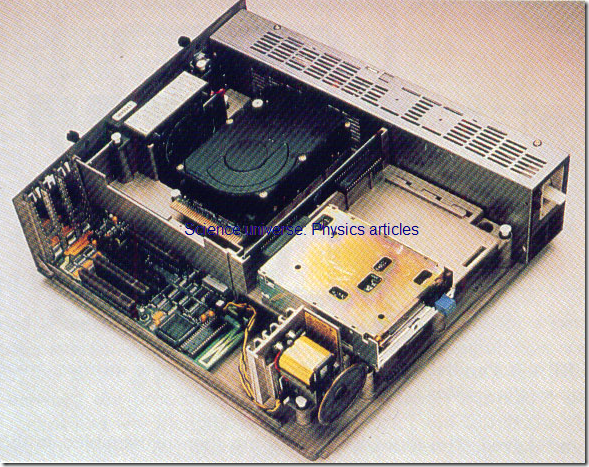

The design architecture of IBM-PC-compatible computers and the IBM PS/2 (shown here) is based on the Intel line of microprocessors. All components needed to build an IBM-PC-compatible computer are readily available on the open market.

Literally hundreds of companies purchase the individual components and assemble PCs for sale. The most successful companies enhance the fundamental design architecture to give the user added throughput and flexibility.

Although RISC architectures are found in all categories of computers, RISC has experienced its greatest success in workstations. (Workstations are discussed elsewhere.) The dominant application among workstations is graphics. Workstation manufacturers feel that the limitations of a reduced instruction set are easily offset by increased processing speed and the lower cost of RISC microprocessors.

Parallel Processing

Computer manufacturers have relied on the single-processor design architecture since the late 1940s. In this environment, the processor addresses the programming problem sequentially, from beginning to end. Today designers are researching and building computers that break a programming problem into pieces. Work on each of these pieces is then executed simultaneously in separate processors, all of which are part of the same computer. The concept of using multiple processors in the same computer is known as parallel processing.

Earlier, the point was made that a computer system may be made up of several special-function processors. For example, a single computer system may have a host processor, a front-end processor, and a back-end processor. By dividing the workload among several special-function processors, the system throughput is increased. Computer designers began asking themselves, "If three or four processors can enhance throughput, what could be accomplished with twenty, or even a thousand, processors?"

In parallel processing, one main processor (a minicomputer or a host mainframe) examines the programming problem and determines what portions, if any, of the problem can be solved in pieces (see Figure 6). Those pieces that can be addressed separately are routed to other processors and solved. The individual pieces are then reassembled in the main processor for further computation, output, or storage. The net result of parallel processing is better throughput.

FIGURE 6 Parallel Processing In parallel processing, auxiliary processors solve pieces of a problem to enhance system throughput.
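The split, solve, and reassemble flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`split_into_pieces`, `solve_piece`, `parallel_sum_of_squares`) and the sum-of-squares problem are hypothetical, and threads from the standard `concurrent.futures` module stand in for the separate auxiliary processors.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_pieces(data, n_pieces):
    """Main processor: divide the problem into roughly equal pieces."""
    size = max(1, -(-len(data) // n_pieces))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def solve_piece(piece):
    """Auxiliary processor: solve one piece (here, a sum of squares)."""
    return sum(x * x for x in piece)


def parallel_sum_of_squares(data, n_workers=4):
    """Route the pieces to workers, then reassemble the partial results."""
    pieces = split_into_pieces(data, n_workers)
    # Assumption: worker threads play the role of the auxiliary processors.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(solve_piece, pieces))
    # Main processor: combine the partial answers into the final result.
    return sum(partial_results)
```

Calling `parallel_sum_of_squares(list(range(10)))` splits the ten numbers into four pieces, sums the squares of each piece in a separate worker, and adds the four partial sums. In standard Python, threads do not speed up arithmetic of this kind; for real gains on several processors, `ProcessPoolExecutor` from the same module is the usual substitute.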

Research and design in this area, which some say characterizes a fifth generation of computers, is gaining momentum. Computer designers are creating mainframes and supercomputers with thousands of integrated microprocessors. Parallel processing on such a large scale is referred to as massively parallel processing (MPP). These super-fast supercomputers will have sufficient computing capacity to attack applications that have been beyond the reach of computers with traditional architectures. For example, researchers hope to simulate global warming with these computers. They also will enable executives to do data mining. Data mining involves the systematic examination of vast, unstructured databases to detect trends in everything from consumer buying habits to country-wide migration.

Neural Networks: Wave of the Future?

The best processor is the human brain. Scientists are studying the way the human brain and nervous system work in an attempt to build computers that mimic the incredible human mind. The base technology for these computers is neural networks. Neural networks are composed of millions of interconnected artificial neurons which, essentially, are integrated circuits. (The biological neuron is the functional unit of human nerve tissue.) The mature neural networked computer will be able to perform human-oriented tasks, such as pattern recognition, reading handwriting, and learning.

The primary difference between traditional digital computer architectures and neural networks is that digital computers process data sequentially whereas neural networks process data simultaneously. Digital computers will always be able to outperform neural networked computers and the human brain when it comes to fast, accurate numeric computation. However, if neural networks live up to their potential, they will be able to handle tasks that are currently very time-consuming or impossible for conventional computers, such as recognizing a face in the crowd.
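To make the idea of an artificial neuron more concrete, the sketch below uses one common software model that the text itself does not describe: each neuron computes a weighted sum of its inputs and fires when the sum reaches a threshold. Everything here is an assumption for illustration, including the names (`neuron`, `layer`, `recognize`, `DETECTORS`), the 2-by-2 pixel patterns, and the hand-picked weights. Practical networks learn their weights from examples and contain far more neurons.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(i * w for i, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def layer(inputs, neurons):
    """Evaluate every neuron on the same inputs; no neuron waits on another."""
    return [neuron(inputs, weights, threshold) for weights, threshold in neurons]


# Hypothetical detectors for a 2x2 image flattened to
# [top-left, top-right, bottom-left, bottom-right].
DETECTORS = {
    "top row": ([1, 1, -1, -1], 2),
    "left column": ([1, -1, 1, -1], 2),
}


def recognize(pixels):
    """Return the names of the patterns whose neurons fired."""
    outputs = layer(pixels, list(DETECTORS.values()))
    return [name for name, fired in zip(DETECTORS, outputs) if fired]
```

With these weights, `recognize([1, 1, 0, 0])` reports the top-row pattern and `recognize([1, 0, 1, 0])` reports the left-column pattern. Python evaluates the neurons in `layer` one after another, but because no neuron depends on another's output, hardware built for the purpose could evaluate them all at the same time, which is the simultaneous processing the paragraph above refers to.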